The machine as a partner: Human-machine teaming design using the PRODEC method

Abstract

BACKGROUND:

Human-machine teaming (HMT) typically combines perspectives from systems engineering, artificial intelligence (AI) and human-centered design (HCD), to achieve human systems integration (HSI) through the development of an integrative systems representation that encapsulates human and machine attributes and properties.

OBJECTIVE:

The study explores the main factors contributing to performance, trust and collaboration between expert human operators and increasingly autonomous machines, by developing and using the PRODEC method. PRODEC supports HSI by improving the agile HCD of advanced sociotechnical systems at work, which qualify as human-machine teamwork.

METHODS:

PRODEC incorporates scenario-based design and human-in-the-loop simulation at design and development time of a sociotechnical system. It is associated with the concept of digital twin. A systemic representation was developed and used, associated with metrics for the evaluation of human-machine teams.

RESULTS:

The study is essentially methodological. In practice, PRODEC has been used and validated in the MOHICAN project that dealt with the integration of pilots and virtual assistants onboard advanced fighter aircraft. It enabled the development of appropriate metrics and criteria of performance, trust, collaboration, and tangibility (i.e., issues of complexity, maturity, flexibility, stability, and sustainability), which were associated with the identification of emergent functions that help redesign and recalibrate the air combat virtual assistant as well as fighter pilot training.

CONCLUSION:

PRODEC addresses the crucial issue of how AI systems could and should influence requirements and design of sociotechnical systems that support human work, particularly in contexts of high uncertainty. However, PRODEC is still a work in progress, and advanced visualization techniques and tools are needed to increase physical and figurative tangibility.

1 Introduction

Human-machine teaming (HMT), where the machine is increasingly autonomous, has applications in a wide range of industries such as aeronautics and space, defense, transportation, energy, healthcare, and manufacturing. Although HMT, also called Human-Autonomy Teaming or Human-AI Teaming (HAT), is now an accepted concept [1], the concept of autonomy needs to be better defined when applied to humans, to machines, and to humans and machines combined. Today, we have software-based algorithms (some of which are AI-based) that confer some autonomy on machines capable of performing tasks normally assigned to people, involving perception, conversation, and decision making [2].

In this article, we use working definitions that address the complementary combination of human and machine autonomy: machine autonomy is the capacity of a machine to act safely and efficiently on its own in an environment, and human autonomy refers to people solving problems, often unexpected, using appropriate human skills and knowledge, as well as technological and organizational support. This dual concept of autonomy requires a redefinition of what a system is. We consider that systems can include people, and people can include machines. This consideration leads to the concept of “sociotechnical system,” defined as a system of human and machine systems interacting within complex societal infrastructures and generating the emergence of human behaviors in various domains such as healthcare, transportation, defense, and environmental systems issues [3–5]. Therefore, we talk about systems of systems, or agencies as societies of agents [6], which can themselves be considered as combinations of human or machine agents. This approach provides us with a framework to further define human systems integration (HSI).

In addition, autonomy requires adaptation. People adapt to machines, even “autonomous” machines, and machines adapt to people using both supervised and/or unsupervised machine learning. The stability of this co-learning mechanism requires metrics to support the maturity of the resulting sociotechnical system.

Section 1 of this article provides an integrative representation of physical and cognitive systems useful for HSI investigations of HMT systems. This is where our ontological approach differs from previous approaches to human-machine systems, as it is based on a representation that allows both humans and machines to be defined as systems (e.g., sociotechnical systems). This representation is integrative because it integrates the physical and cognitive aspects of the human and the machine.

Section 2 is devoted to presenting what we can understand by autonomy, whether for humans, machines, or human-machine systems. In addition, it is crucial to improve our understanding of the automation-autonomy distinction from an HSI perspective [7, 8]. It is important to note that current automated machines are not self-directed [9]. Indeed, we have massively automated machines during the 20th century, but this type of automation has rarely led to convincing autonomy of the machine (e.g., the ability to make choices when faced with an expected or unexpected situation). Although different levels of automation have long been defined [10, 11], automated systems are designed to perform specific sets of largely deterministic steps to achieve a limited set of predefined outcomes [12].

While automation has proven to be a useful and essential solution to safety in well-specified contexts, it makes work processes more rigid, often counterproductive, or even dangerous, specifically in unexpected situations in which flexibility is needed to perform problem-solving tasks. Specifically, how can technology, organizations and people’s competence improve this flexible problem-solving in unexpected situations? We have attempted to answer this question by defining and developing the PRODEC method, which identifies human and machine activity during design and development, using scenario-based design and human-in-the-loop simulations, to better explore and elicit the emergence of potential socio-cognitive augmentation that could improve the autonomy of the sociotechnical system under consideration, which should have the ability to self-manage in a safe, efficient, and comfortable manner. Socio-cognitive augmentation is made incrementally explicit through the agile development of prototypes and formative evaluations, as well as formulated as emergent functions and structures of appropriate agents or systems of the overall sociotechnical system under consideration.

Section 3 describes the PRODEC approach and method developed and refined primarily on the MOHICAN project, which focused on intelligent assistance to fighter pilots, and two other projects devoted to tele-robotics on oil-and-gas drilling platforms [13] and AI-assisted remote maintenance of helicopter engines [14]. In this article, we use the first project as a running example. We have also been influenced by a recent state-of-the-art in the field of human-machine teaming [3], where the machine is becoming increasingly autonomous. This state-of-the-art in HMT is very comprehensive and covers HSI processes and measures of HMT collaboration and performance. However, this very dense work does not cover the need to put into perspective a system representation that supports human-machine teamwork and that addresses automation and autonomy at all possible relevant levels of granularity, which is precisely the goal of the ongoing analytical and empirical work presented in this article and embodied in the PRODEC method. PRODEC is also based on new maturity and tangibility metrics.

PRODEC encapsulates techniques of knowledge elicitation, agile design and development, and formative evaluation. It is based on generic multi-agent models [13], and deeply rooted in previous work that includes types and levels of human interaction with automation [15], distributed cognition [16], multi-agent systems [17], human-robot interaction [18], systemic interaction models [7, 28], organizational automation [20], computer-supported cooperative work [21], trust in automation [22], and other relevant approaches and models. The development of PRODEC is still a work in progress on the three large industrial projects already mentioned, exploring various kinds of metrics that assess team performance, HMT issues of trust and collaboration, as well as organization models in relation to authority sharing, and tangibility considerations (i.e., complexity, maturity, flexibility, stability, and sustainability issues, as explained in [19]).

PRODEC focuses on both procedural and problem-solving skills for both humans and machines. This distinction addresses the differences between handling expected and unexpected events in complex sociotechnical systems. Using a new knowledge representation that supports transdisciplinary HMT research and development, PRODEC directly addresses the critical question of how AI systems “could and should influence requirements and the design of systems that support human work, particularly in settings that are high in uncertainty” [3].

Section 4 is dedicated to HMT metrics to better master these new machines considered as partners. Of course, since the AI-based systems that we are co-developing and operating are still immature to some extent, the results are still preliminary. However, the current success of the PRODEC approach in the military and civilian worlds is worth presenting.

The conclusion reviews the current theoretical and practical results of HMT, using a possible future air combat system as an example. It offers several perspectives and examines the role of humans and organizations in more generic life-critical systems of systems, including the issue of authority sharing.

2 Towards a physical and cognitive systemic representation

The Human Machine Teaming (HMT) approach, which considers “the machine as a partner,” requires a better understanding of the evolution from rigid automation to flexible autonomy, where autonomy is considered not only for people and machines as individuals, but also for combinations of people and machines as human-machine teams, and even teams of teams.

Therefore, emphasis should be placed on the cross-fertilization of multi-agent representations provided by AI and those of systems of systems developed in systems engineering (SE) [23, 24]. In AI, this understanding is based on the concept of an agent, which represents a human or a machine capable of identifying a situation, deciding, and planning appropriate actions. In this context, for example, the armed forces are composed of “human and machine agents,” with the machines being increasingly equipped with AI algorithms. AI refers to systems that demonstrate intelligent behavior by analyzing their environment and acting – with some degree of autonomy – to achieve specific goals.

Command and control (C2) systems are now integrated with cockpits and, more generally, inter-connectivity has become a real support to air force operations but also requires human-centered systemic integration. This leads to the definition of specific methods and tools such as scenario-based system design and digital twins [25]. The development of AI-based systems contributes to the generation of new emerging human roles and then functions that need to be identified more precisely. Specifically, safer, more effective, and more comfortable HMT integration requires a better understanding of emerging human factors such as situational awareness, decision making, and risk taking. In addition, the trust-collaboration-performance triad needs to be better articulated and measurable.

In 2019 in the United States, the Systems Engineering Research Center (SERC) developed a roadmap structuring and guiding AI and autonomy research to support SE [26]. They introduced the acronym AI4SE (Artificial Intelligence for Systems Engineering). Conversely, SE practices could be used to support the development of increasingly automated and increasingly autonomous systems (SE4AI). These AI4SE and SE4AI approaches primarily view AI as machine learning (ML) and data science. Whether supervised, unsupervised, or reinforcement-based, ML has a specific role that requires addressing three types of problems: learning assurance, explainability, and security risk mitigation [27]. In practice, the major problem remains, namely the certification of learning systems. Indeed, certification requires that all elements of a software code be delivered unambiguously, which is not possible for neural network systems. For this reason, our vision of the AI-SE combination does not address issues of complementarity, but rather disciplinary issues and perspectives of similarity and collaboration.

However, two major points must be considered: AI is much broader than ML and data science, and AI and SE have much in common. Indeed, AI is a very broad discipline that includes data-driven AI (i.e., data science), knowledge-based AI (i.e., rule-based systems, expert systems), hybrid AI (i.e., the right mix of neural networks and symbolic AI), and distributed and embedded AI (i.e., multi-agent systems). AI and SE are typically combined with software engineering, safety/security engineering, and human factors and ergonomics. Alternative initiatives are currently being considered to investigate an HSI representation where cognitive and physical systemic dimensions can be harmoniously handled together [28].

This representation of physical and cognitive systems is very useful for analyzing the contemporary shift from 20th century technology-centered engineering, which began with physical systems and ended with software augmented systems – in effect, a shift from hardware to software – to 21st century human-centered engineering design, which begins with a set of PowerPoint boards expressing ideas, animates them, and then realizes a virtual prototype and human-in-the-loop simulations. PRODEC was developed to support the early human systems integration into design and development processes (i.e., incrementally address human requirements in an efficient and agile manner). Specifically, increasingly autonomous (AI-based or data-driven) functions can be considered and, more importantly, tested much earlier than before. However, the correct realization of this new trend requires an appropriate representation of physical and cognitive systems for the design of the dual concept of human and machine autonomy.

The concepts of system and agent are representations, respectively used in SE and AI. In this article, we use the terms “system” and “agent” to refer to the same entity. Furthermore, a system can be defined as a “system of systems” (SoS) [24], and an agent as a “multi-agent system” or a society of agents [29]. Autonomy is a central element of this systemic definition, as systems can be dependent, independent, or interdependent on each other. For example, some future air combat systems, considered SoSs, are described as autonomous, in the sense that they can live on their own for a period of time if necessary, and are required to share situational awareness, maintain trust with each other, be reliable, and agree on a collaborative and distributed work organization. “By 2040, the Future Combat Air System (FCAS) will be based on a networked architecture, bringing together manned and unmanned platforms within a system of systems. This paradigm is fully in line with Man-Unmanned Aircraft teaming, i.e., the hybridization of human and machine in a holistic cognitive system. Precisely, Paul Scharre [30] refers to this hybridization through the mythological form of the ‘centaur warrior’, half man, half horse” [31].

What is a system? Several definitions have been proposed. A system is generally defined as “a combination of interacting systemic elements organized to achieve one or more stated objectives” [32, 33], and “something that does something (activity = function) and has a structure, which evolves over time, in something (environment) for something (purpose)” [34].

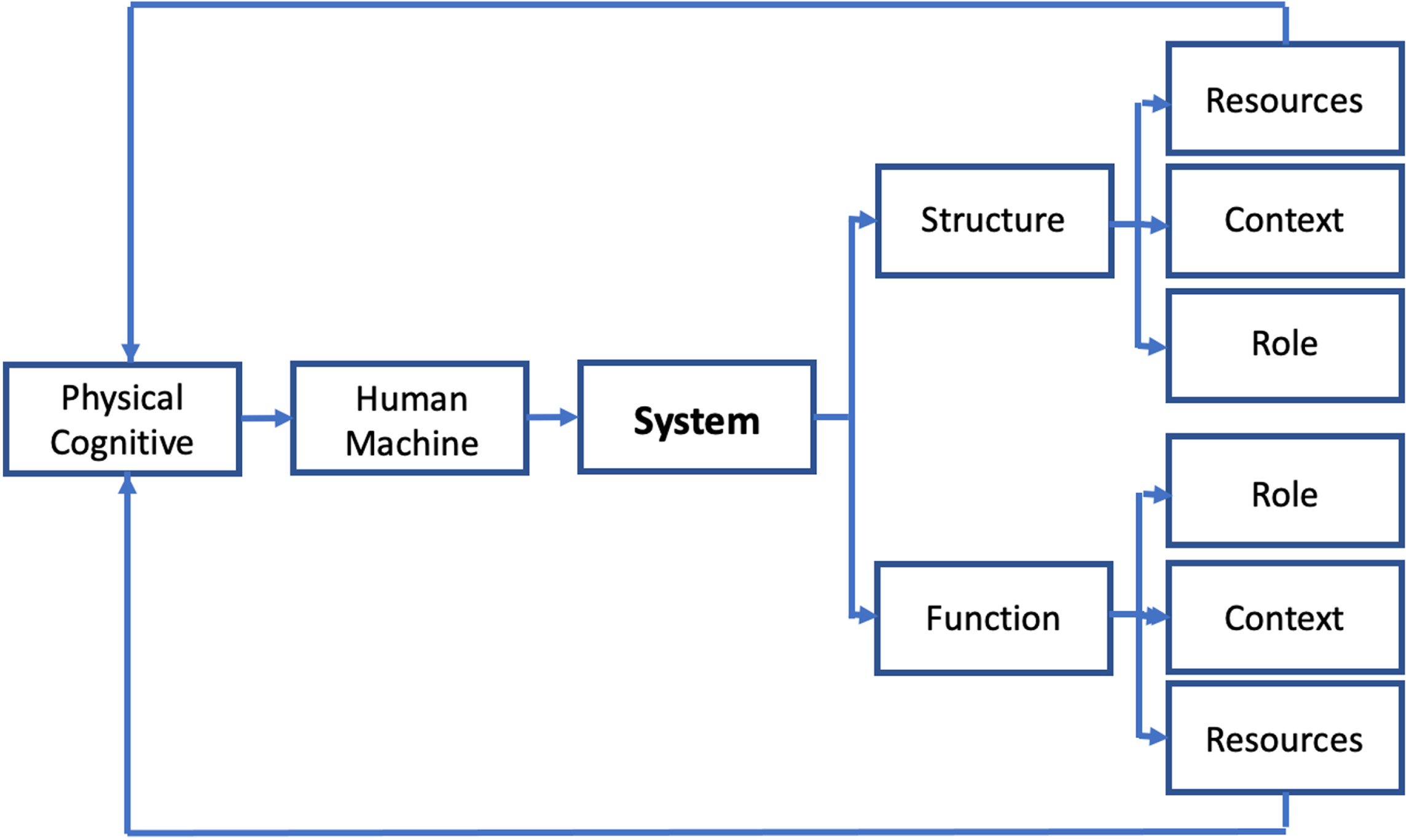

Most people think of a system as a machine. In fact, when doctors talk about the cardiovascular system, they are talking about a representation of a human organ that allows blood to flow throughout the body, not a machine in the mechanical sense. Social scientists talk about sociocultural systems. Here again, they speak of representations (Fig. 1). More generally, we will speak of “natural systems” (including human systems), and “artificial systems” (including machine systems), which may include other systems, recursively defining the broader concept of system of systems. For example, the human body (i.e., a natural system) can include a pacemaker (i.e., an artificial system), and conversely, a car (i.e., an artificial system) can include a human being (i.e., a natural system). A system is defined by its structure and function, which can be physical and/or cognitive. For example, the human heart has a specific structure that allows it to pump blood (i.e., its main function).

Fig. 1

A system as a representation.
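The recursive inclusion of natural and artificial systems described above can be illustrated with a minimal sketch. The class and attribute names (`System`, `Kind`, `is_mixed`) and the pacemaker's stated function are assumptions made for illustration; they are not part of PRODEC or of any published notation.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Iterator, List


class Kind(Enum):
    NATURAL = "natural"        # e.g., a human being or an organ
    ARTIFICIAL = "artificial"  # e.g., a machine or a device


@dataclass
class System:
    """A system as a representation: a kind, a main function, and subsystems."""
    name: str
    kind: Kind
    function: str = ""
    subsystems: List["System"] = field(default_factory=list)

    def walk(self) -> Iterator["System"]:
        """Yield this system and, recursively, every system it includes."""
        yield self
        for sub in self.subsystems:
            yield from sub.walk()

    def is_mixed(self) -> bool:
        """True if natural and artificial systems coexist in this system of systems."""
        return len({s.kind for s in self.walk()}) > 1


# The two examples from the text: a body including a pacemaker,
# and a car including a human being.
pacemaker = System("pacemaker", Kind.ARTIFICIAL, "regulate heartbeat")
heart = System("heart", Kind.NATURAL, "pump blood")
body = System("human body", Kind.NATURAL, subsystems=[heart, pacemaker])
car = System("car", Kind.ARTIFICIAL, "transport", subsystems=[body])

print([s.name for s in car.walk()])
print(body.is_mixed(), heart.is_mixed())
```

Because a system only holds a list of other systems, the same structure describes a single organ, a human-machine team, or a team of teams.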

The PRODEC method has been used in an air combat system project called MOHICAN. The goal of this project was to derive performance metrics to assess the collaboration between pilots and cognitive systems, as well as trust in those cognitive systems. Within MOHICAN, we explored the relevance and usability of a wide range of physical, informational, human, environmental and organizational parameters already considered by previous studies on automation and autonomy. Examples of cognitive functions tested in MOHICAN are adapted trust and interdependence, delegation of authority, and action initiative (taking and abandoning). MOHICAN will be used in this article to illustrate how the PRODEC method can be applied.

3 The automation-autonomy distinction: The flexibility challenge

In sociotechnical systems, a better understanding of automation and autonomy requires defining the concept of “cognitive function” [35]. Not only do humans have cognitive functions that are described as natural, but digitized machines can also have artificial cognitive functions. The representation of cognitive functions is generally defined in two ways: (1) a logical way where a cognitive function is seen as an application used by a human or a machine to transform a task (what is prescribed to be performed) into an activity (what is effectively performed); (2) a teleological way that provides a cognitive function with attributes such as a role, a validity context, and resources.

The role of the function is the identity of the corresponding agent or system within the organization under consideration (e.g., the role of the letter carrier within the postal organization is to deliver letters). The context of validity is usually defined in time (e.g., 8 a.m. to 5 p.m., with a two-hour break at noon, every working day), space (e.g., knowledge of the clearly specified neighborhood where letters are to be delivered), and normal, abnormal, or emergency situations. Resources could be physical (e.g., a bag and a bicycle) or cognitive (e.g., a cognitive pattern-matching function used to determine whether the address on the letter matches actual street and house information).
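As a minimal sketch, the three attributes of a cognitive function (role, context of validity, resources) can be written as a small data structure, using the letter carrier example. The class names, field names, and the neighborhood label are illustrative assumptions, and the two-hour noon break is left out for brevity.

```python
from dataclasses import dataclass, field
from datetime import time
from typing import List, Set


@dataclass
class ValidityContext:
    """Context of validity, defined in time, space, and situation type."""
    start: time
    end: time
    area: str
    situations: Set[str]

    def holds(self, at: time, area: str, situation: str) -> bool:
        return (self.start <= at <= self.end
                and area == self.area
                and situation in self.situations)


@dataclass
class CognitiveFunction:
    """A function described by its role, context of validity, and resources."""
    role: str
    context: ValidityContext
    physical_resources: List[str] = field(default_factory=list)
    cognitive_resources: List[str] = field(default_factory=list)

    def is_valid(self, at: time, area: str, situation: str) -> bool:
        """True when the function is used inside its context of validity."""
        return self.context.holds(at, area, situation)


deliver_letters = CognitiveFunction(
    role="deliver letters",
    context=ValidityContext(time(8, 0), time(17, 0),
                            "assigned neighborhood", {"normal"}),
    physical_resources=["bag", "bicycle"],
    cognitive_resources=["address pattern matching"],
)

print(deliver_letters.is_valid(time(9, 30), "assigned neighborhood", "normal"))
print(deliver_letters.is_valid(time(18, 0), "assigned neighborhood", "normal"))
```

Making the context of validity explicit is what allows a design team to ask what happens outside it, which is where rigid automation tends to fail.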

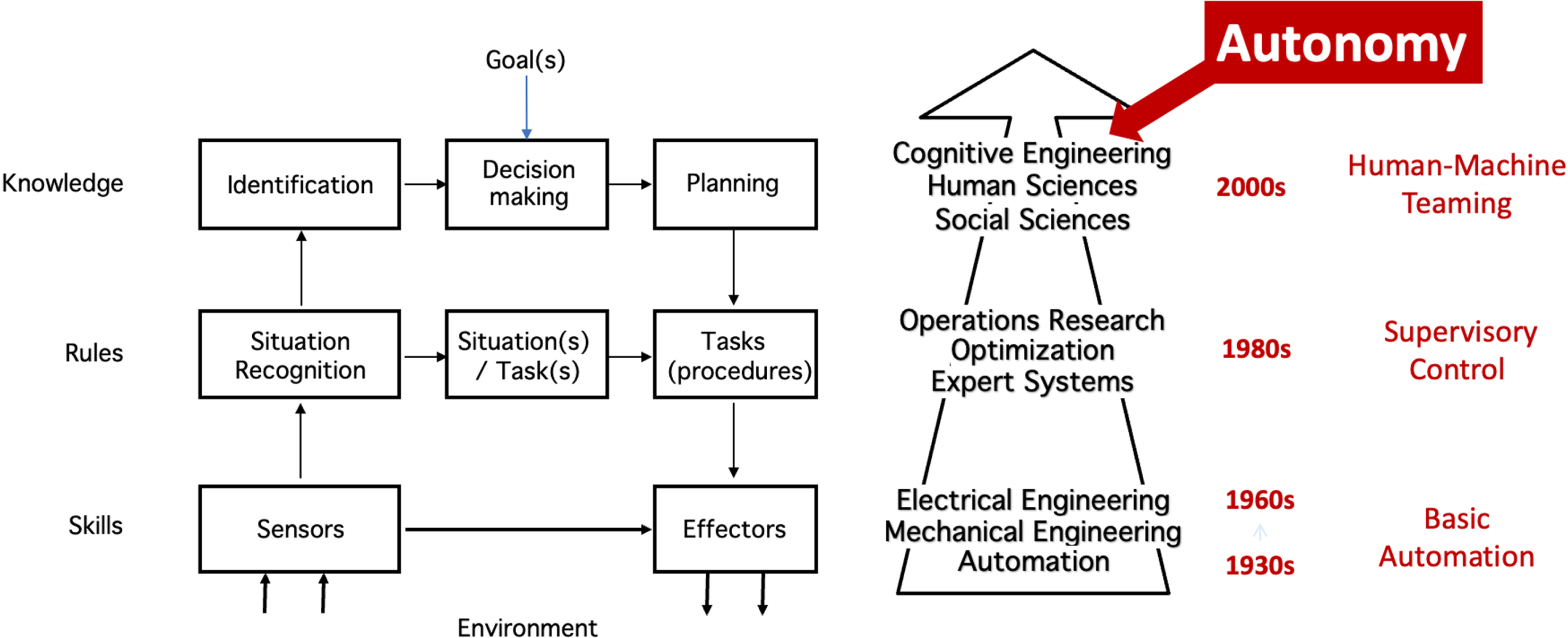

Cognitive function analysis has been successfully used in several aerospace projects [5, 36]. Considerations of function allocation in the HMT era have recently been published [37], based on an extension of Fitts’s MABA-MABA approach [38]. Cognitive functions (CFs) can be categorized according to Rasmussen’s model of human behavior to better interpret the emergence of contributing engineering disciplines with respect to machine automation [39] (Fig. 2).

Fig. 2

The evolution from automation to autonomy based on Rasmussen’s model.

Both people and machines can be “automated” (Fig. 3). First, human cognitive functions can be automated either by intensive training and long experience or by following a procedure (e.g., the pilot’s checklist). Second, from an engineering perspective, machine automation involves developing appropriate algorithms that replace human (i.e., natural) cognitive functions with machine (i.e., software and thus artificial) cognitive functions. However, both types of automation can only work and be used in very specific contexts (i.e., in expected situations). Outside of these contexts (i.e., in unexpected situations), their rigidity can cause incidents or accidents [40, 41]. Therefore, in unexpected situations, the people in charge must solve problems by themselves using the physical and/or cognitive resources available, whether they are humans or machines. These actors thus need flexibility, as well as appropriate knowledge and skills, to solve often unexpected problems. This requires autonomy, as opposed to following procedures or monitoring machine automation based on initial planning.

Fig. 3

From rigid automation to flexible autonomy.

PRODEC is based on four broad categories of cognitive functions that contribute to autonomy: situational awareness (perception, monitoring, construction of mental model and knowledge, trend identification and detection of anomalies); reasoning (generally involving three types of inferences: deduction, induction, and abduction); action (mission planning, activity and resource scheduling, task execution and control, fault diagnosis, crisis management, learning, and adaptation); and collaboration (shared knowledge and understanding, prediction of behaviors and intentions, negotiation of tasks and goals, operational trust building). When dealing with machine agents, the related cognitive functions must be built, verified, and validated through a series of tests on human-in-the-loop simulations, which raises systemic problems of designing specific architectures.

Resources that are useful and can be used in unexpected situations are called “FlexTech” [7]. Indeed, FlexTech resources are there to help human operators be more autonomous and allow them to easily move from rigid automation to flexible autonomy. Operational flexibility is achieved when each agent in a problem-solving situation, trying to react to an unexpected event for example, finds effective help from one or more of its partners. For example, the “undo” function is a FlexTech resource used in word processing to remove a misspelled word, thus providing flexibility to writers in their typing tasks. The “undo” function cannot qualify as an autonomous function; however, its availability and effectiveness allow a writer to be autonomous in the sense that he or she does not need external assistance or perform very complicated tasks to correct a typo. The “undo” FlexTech function is very different from an automated lexical and/or syntactic text checker that automatically changes sentences as you type and is very rarely context sensitive. Automation replaces humans in very specific contexts, while FlexTech supports human activity in much broader contexts. Automation can be very inefficient when used out of context, decreasing situational awareness, and generally resulting in performance decrements that place the human operator out of the loop [42]. In practice, human operators using automated machines tend to be complacent, especially when the automation works perfectly in the contexts in which it has been validated but becomes fragile outside of these contexts.

At this point, it is important to distinguish between a partner and a teammate. A teammate is a member of a team, and a partner is an associate with a team in a common activity or interest. Therefore, the term “partner” was chosen instead of “teammate” to describe AI-based or data-intensive machines.

In the case of the letter carrier, the cognitive function “delivering letters” can be automated at different levels of automation. The letter carrier’s bicycle can be replaced by a more or less automated car that offers more physical comfort in terms of fatigue, for example – note that we see here how physical and cognitive functions can be articulated. If e-mail can be seen as a different mode of communication than the paper letter, it can also be seen as a higher level of automation of mail delivery, in which postal workers are radically replaced by computers, software and information networks. The uses of email also assume that the various human agents involved have appropriate tools with adequate physical and cognitive functions defined in specific contexts of validity (e.g., a functioning power supply and servers, and an appropriate user interface).

Autonomy is a property that gives a human or a machine sufficient robustness to function without external intervention or supervision. In the case of a human, some more or less automated machines allow us to be more autonomous and, more generally, provide us with functions that we do not naturally have. Two examples can be cited: exoskeleton systems can offer more autonomy to people with disabilities; the airplane allows us to fly, whereas the human being is not naturally able to fly.

While airplanes allow us to travel a greater distance in less time, they also introduce new scheduling constraints: we need to learn how to use their services. In the case of a machine, machine autonomy is necessary when a system must make a time-critical and/or life-critical decision that cannot wait for external help. This is especially true when managing a remote system; the system must then be equipped with rich embedded data and decision-making systems [41]. For example, NASA’s Curiosity and Perseverance rovers can autonomously move from one point to another on Mars using stereo vision and on-board path planning. In both cases, autonomy requires a clear representation of the system, whether it is a human or a machine (Fig. 1).

4 PRODEC for human systems integration

AI-based systems cannot be successfully developed without appropriate HSI methods, metrics, and evaluation tools. HSI favors Scenario-Based Design (SBD) to ground proposed solutions [43]. This type of design requires subject matter experts, such as fighter pilots, air traffic controllers, and C2 personnel. The PRODEC method has been developed to promote knowledge acquisition from subject matter experts, creativity, scenario design, and human-in-the-loop simulations (HITLS). Analyzing the activity that arises from the interactions between the different human and machine systems during HITLS makes it possible to discover emergent properties of the sociotechnical system under study [13]. PRODEC is useful for the analysis, design, and evaluation of a complex sociotechnical system. The knowledge obtained from domain experts in the form of procedural scenarios (the PRO part of the PRODEC method, for “procedural”) can be transcribed as directed graphs, for example of the tasks involved in the description of a mission or a job to be performed. Such directed graphs can be developed using BPMN (Business Process Model and Notation) [44]. Making knowledge from experience explicit helps to develop the task analysis by decomposing a high-level task into subtasks, and so on. The analysis of these incrementally developed task graphs allows the discovery of functions that were not initially anticipated. These are called “emergent functions.” It may also turn out that “emergent structures,” which had been overlooked in the architecture of the sociotechnical system under development, appear as integral parts. The emergent functions and/or structures discovered during HITLS contribute incrementally to the improvement of the declarative scenarios (the DEC part, for “declarative”) expressing the configurations of the sociotechnical system under development.
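The decomposition of a high-level task into subtasks can be sketched as a small directed graph. The sketch below uses a plain dictionary rather than BPMN, and the task names are hypothetical, borrowed from the letter carrier example; it only illustrates the idea of walking a task graph down to its elementary tasks.

```python
from typing import Dict, List

# Directed graph: each task points to the subtasks it decomposes into.
# Task names are hypothetical and serve only as an illustration.
TaskGraph = Dict[str, List[str]]

task_graph: TaskGraph = {
    "deliver mail": ["sort letters", "ride route"],
    "ride route": ["locate address", "drop letter"],
}


def leaf_tasks(graph: TaskGraph, root: str) -> List[str]:
    """Return the elementary tasks reached by decomposing `root`, in order."""
    subtasks = graph.get(root, [])
    if not subtasks:
        return [root]  # no further decomposition: an elementary task
    leaves: List[str] = []
    for sub in subtasks:
        leaves.extend(leaf_tasks(graph, sub))
    return leaves


print(leaf_tasks(task_graph, "deliver mail"))
```

Refining the task analysis then amounts to adding nodes and edges to this graph as experts make more of their knowledge explicit.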

The PRODEC cycle can be described as follows: (Step-1) initial task analysis; (Step-2) elicitation of emergent functions and structures; (Step-3) implementation of the prototype using the declarative representation shown in Fig. 4; (Step-4) human-in-the-loop simulation; (Step-5) observation and analysis of the activity for elicitation of emergent properties; (Step-6) modification of the previous task analysis; and (Step-7) for further refinement, return to Step-2. Task analysis (i.e., the development of procedural scenarios in steps 1 and 6) allows for the implementation of scenarios that not only guide the development of prototypes to test new system configurations, but also allow for the gradual elicitation of emergent properties and the step-by-step modification of the task analysis.

Fig. 4

Declarative representation of a human-machine system of systems.
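The iterative structure of the PRODEC cycle can be sketched as a simple loop. This is an illustration under stated assumptions, not the authors' implementation: the function names (`prodec_cycle`, `scripted_hitls`), the representation of a task analysis as a set of function names, the scripted observations, and the stopping rule (stop when a simulation reveals nothing new) are all hypothetical.

```python
from typing import Callable, List, Set


def prodec_cycle(initial_tasks: Set[str],
                 run_hitls: Callable[[Set[str], int], Set[str]],
                 max_iterations: int = 5) -> List[Set[str]]:
    """Return the emergent functions elicited at each iteration."""
    tasks = set(initial_tasks)                      # Step-1: initial task analysis
    history: List[Set[str]] = []
    for iteration in range(max_iterations):
        prototype = set(tasks)                      # Steps 2-3: functions configured in the prototype
        observed = run_hitls(prototype, iteration)  # Step-4: human-in-the-loop simulation
        emergent = observed - tasks                 # Step-5: activity not covered by the task analysis
        history.append(emergent)
        if not emergent:                            # assumed stopping rule
            break
        tasks |= emergent                           # Step-6: update the task analysis; Step-7: loop
    return history


def scripted_hitls(prototype: Set[str], iteration: int) -> Set[str]:
    """Stand-in for a real simulation: returns scripted, made-up observations."""
    extra = [{"cross-check assistant output"}, {"reclaim authority"}, set()]
    return prototype | extra[min(iteration, len(extra) - 1)]


history = prodec_cycle({"navigate", "acquire information"}, scripted_hitls)
print(history)
```

In a real project the simulation step involves pilots and a prototype rather than a scripted function, and the decision to stop iterating is a design judgment rather than an automatic test.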

The PRODEC method was used in the MOHICAN project to elicit, from three fighter pilots, the various human and machine functions to be tested against operational performance metrics, enabling the assessment of trust and collaboration between a fighter pilot and the virtual assistant being developed. To explore trust and collaboration between the human and the machine on most of the operational tasks that could be entrusted to the system of systems (e.g., latest generation fighter aircraft, crew), the operational scenario selected mixed planned air-to-ground attack sequences, dynamic targeting phases, and finally air-to-air interception phases. The crews were immersed in three complex missions in a semi-permissive environment, where they could not avoid considering the enemy threat, nor coordination with friendly forces.

The following PRODEC cycle was used:

Step-1: Task analysis. We first developed several mission timelines divided into specific flight phases from expert pilots’ stories. These flight phases corresponded to generic permanent processes or temporary processes activated according to the context and the tasks to be performed. In total, three generic permanent processes have been identified (e.g., piloting) and eight temporary processes (e.g., ground-to-air threat avoidance). A task analysis was carried out for each multi-agent process. This knowledge acquisition from experts was done through interviews, direct form filling (using Excel spreadsheets and transcription using BPMN), and GEM (Group Elicitation Method) sessions [45]. Although the operational context was complex, the method made it possible to precisely determine the tasks and subtasks to be performed, the necessary resources, the agents involved, the useful cognitive functions requested and their intensity (attention interval) for each process of a mission. This led to the definition of the multi-agent context, and consequently the future domain of use of the models.

Step-2: Physical and cognitive structure-function analysis (derived from the original cognitive function analysis (CFA) supported by the AUTOS pyramid, see [35]). We identified human and machine cognitive (and physical) functions from the task analysis. CFA is declarative (i.e., it enables the declaration of methods, functions and so on, which can be allocated to human and/or machine agents). In total, 21 functions were identified and classified according to Endsley’s SA model and described in a SADMAT format (Situation Awareness, Decision Making or Action Taking) [13]. Each function was defined by its role, context of validity, and useful human and/or machine resources. For example, the “Acquiring Information” function enables the pilot to retrieve data via the weapons system, geographic information systems, and other systems. This function can be evaluated from a variety of perspectives, including accuracy, time, workload, utility, and so on. Among the cognitive resources associated with this function, we can name perception, and among the physical resources related to the context to which it appeals, we can name the information coming from the GIS (Geographic Information System), the weapon system, the targeting pod, and so on. Of course, this depends on the context and resources available. Using the CFA approach to cognitive function modeling, we considered their roles, contexts of validity, and resources [35]. From this representation of human knowledge, a distribution of tasks between humans and virtual assistants was proposed, and the resulting activity was then analyzed via human-in-the-loop simulations while considering the complexity of the operational context.

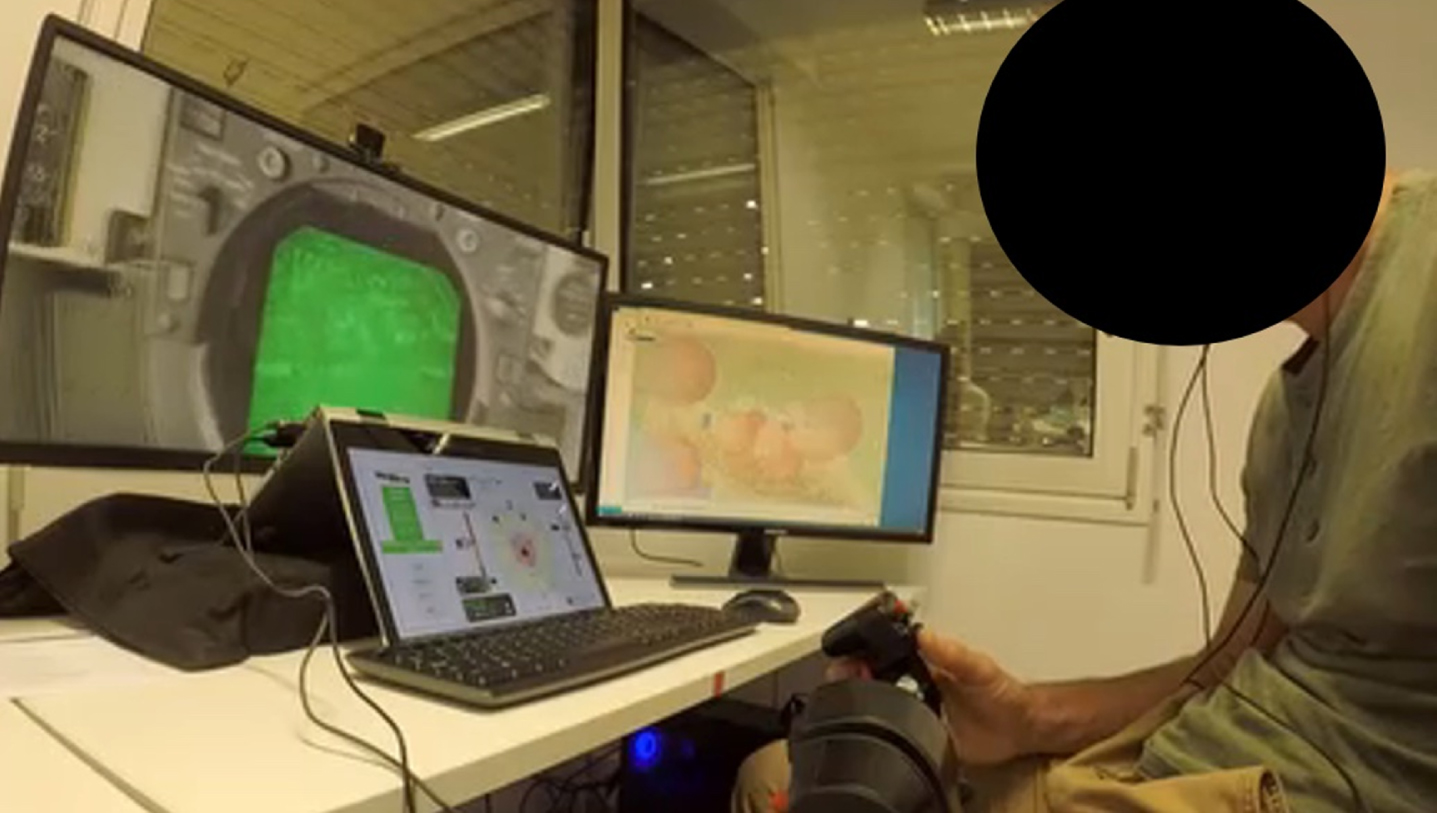

Step-3: Creation and use of a simulation environment. We used an existing digital combat simulator that allows single and multiplayer piloting in large-scale military operations. The single-pilot cockpit, equipped with a virtual assistant called JESTER, was used in a multitasking mode, enabling delegation of tactical functions to JESTER. A contextual menu and a voice recognition system allowed the pilot to make requests to JESTER. This first setup required the development of complementary functions to improve the maturity of the virtual assistant. In total, three versions of the virtual assistant were developed (JESTER+, JESTER BASIC, and JESTER ADVANCED). Figure 5 shows a picture of the experimental setup with the JESTER ADVANCED version of the virtual assistant. Some functions of the virtual assistant using a voice recognition system were simulated using a Wizard of Oz technique (e.g., Air Defense Package Leader, C2 personnel). Each pilot was trained beforehand to fly the aircraft simulator and to use the different versions of the virtual assistant. The duration of each simulation was approximately one hour.

Fig. 5

Experimental setup of the MOHICAN project.

Step-4: Human-in-the-loop simulations (HITLS). We conducted a series of HITLS based on dense and realistic scenarios contextualizing the previously described BPMN models. Five HITLS were carried out, involving three fighter pilots at every iteration, where the difficulty of the scenarios increased from one simulation to the next, and functions of the virtual assistant were upgraded three times in an agile manner. To increase the level of difficulty of the experiments, the operational context was hardened to make it iteratively more complex (e.g., targets more difficult to identify, multiplication of threats and their technicality, and reduction of margins such as time and fuel).

Fig. 6

Example of task (left side) and activity (right side) analyses using BPMN [44] graphs, and identification of emergent properties (in blue on activity side).

![Example of task (left side) and activity (right side) analyses using BPMN [44] graphs, and identification of emergent properties (in blue on activity side).](https://content.iospress.com:443/media/wor/2022/73-s1/wor-73-s1-wor220268/wor-73-wor220268-g006.jpg)

Step-5: Activity observation and analysis. Each pilot’s activity was recorded for further activity analysis, as well as HMT performance analysis based on a performance model, with new structure-function analysis leading to progressive tangibilization of the domain ontology. Tangibility is taken in the sense defined in [16]. Note that in many cases, the physical simulation facility had to be modified incrementally using rapid prototyping to improve the virtual assistant design. During the simulation phase, we collected human factors data (e.g., eye tracking, heart rate, video) and meaningful operational parameters regarding the fighter pilot’s activity.

Step-6: Incremental discovery of emergent properties and features. A BPMN was created after each simulation and for each pilot. The comparison of tasks and activities re-instantiated in a BPMN-CFA process form enabled us to explore and eventually discover emergent functions and structures that were reintroduced in the next design iteration (both the tasks and the virtual assistant were modified). Figure 6 presents the analysis of the first iteration of simulations with the JESTER+ version of the virtual assistant. Through BPMN-CFA analysis, we identified the emergent function related to the need to remind the pilot of the safe altitude and heading. This function was added to the next virtual assistant version, JESTER BASIC. The analysis showed that, in an operational context without major difficulties, the pilot’s level of trust in and collaboration with the virtual assistant for this function was high. On the other hand, when the difficulty of the scenario increased and the pilot had to mobilize significant cognitive resources, the level of trust decreased sharply. The pilot’s trust in the onboard virtual assistant is a complex, dynamic and multidimensional concept that is highly context and time dependent (i.e., the virtual assistant can quickly gain or lose the pilot’s trust during a mission). Although this notion of context is difficult to formalize, an index evaluating the pilot’s theoretical workload in real time has been developed and implemented in the latest version of the virtual assistant (JESTER ADVANCED). This index was formalized from the acquisition and interpretation of pilot activity and simulation context data.
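The task/activity comparison can be pictured, in a very reduced form, as looking for functions that appear in the observed activity but not in the prescribed task. The sketch below assumes each BPMN model has already been flattened into an ordered list of function labels; the labels and the `emergent_functions` helper are hypothetical, and a real BPMN-CFA comparison would also consider sequencing, agents, and resources.

```python
def emergent_functions(prescribed, observed):
    """List functions seen in the activity but absent from the prescribed task.

    Results keep the order of first appearance in the observed activity.
    """
    prescribed_set = set(prescribed)
    seen = set()
    emergent = []
    for name in observed:
        if name not in prescribed_set and name not in seen:
            emergent.append(name)
            seen.add(name)
    return emergent


# Hypothetical, flattened task and activity descriptions.
prescribed_task = ["acquire information", "select target", "engage"]
observed_activity = [
    "acquire information",
    "check safe altitude and heading",
    "select target",
    "check safe altitude and heading",
    "engage",
]

candidates = emergent_functions(prescribed_task, observed_activity)
```

Each candidate returned this way is only a starting point for expert analysis; deciding whether it is a genuine emergent function to be reintroduced in the next design iteration remains a human judgment.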

Step-7: Stop when satisfactory metrics, criteria, functions, and structures were found, otherwise back to step 1. At each iteration, the method consisting of the core measures, intermediate criteria, and high-level metrics was challenged and updated, considering their relevance, validity (meaning and content), and ease of implementation. After five iterations, we managed to improve the method by evaluating intermediate criteria and combining them to obtain an assessment of degrees of trust and collaboration. In the same way, the analysis of emerging functions at each iteration has allowed the level of trust between the pilot and the virtual assistant to evolve.
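The overall control flow of steps 1 to 7 is a bounded iteration with a stopping test. The following sketch shows that loop only; `run_prodec`, `toy_iteration`, and `no_new_functions` are hypothetical names, and the toy stopping rule (no new emergent function found) is an assumption standing in for the expert assessment of metrics, criteria, functions, and structures described above.

```python
def run_prodec(run_iteration, is_satisfactory, max_iterations=5):
    """Repeat the step 1-6 cycle until the step 7 stopping test passes.

    run_iteration(i) performs one design cycle and returns its outcome;
    is_satisfactory(outcome) decides whether to stop. Both are supplied
    by the caller.
    """
    history = []
    for iteration in range(1, max_iterations + 1):
        outcome = run_iteration(iteration)  # steps 1 to 6
        history.append(outcome)
        if is_satisfactory(outcome):        # step 7
            break
    return history


def toy_iteration(iteration):
    """Toy stand-in: fewer new emergent functions are found each cycle."""
    return {"iteration": iteration, "new_emergent_functions": max(0, 3 - iteration)}


def no_new_functions(outcome):
    """Toy stopping rule: stop once a cycle reveals nothing new."""
    return outcome["new_emergent_functions"] == 0


history = run_prodec(toy_iteration, no_new_functions)
```

With these toy stand-ins the loop stops at the third cycle; the `max_iterations` bound simply guarantees termination when the stopping test never passes.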

5 Model-based human autonomy teaming metrics

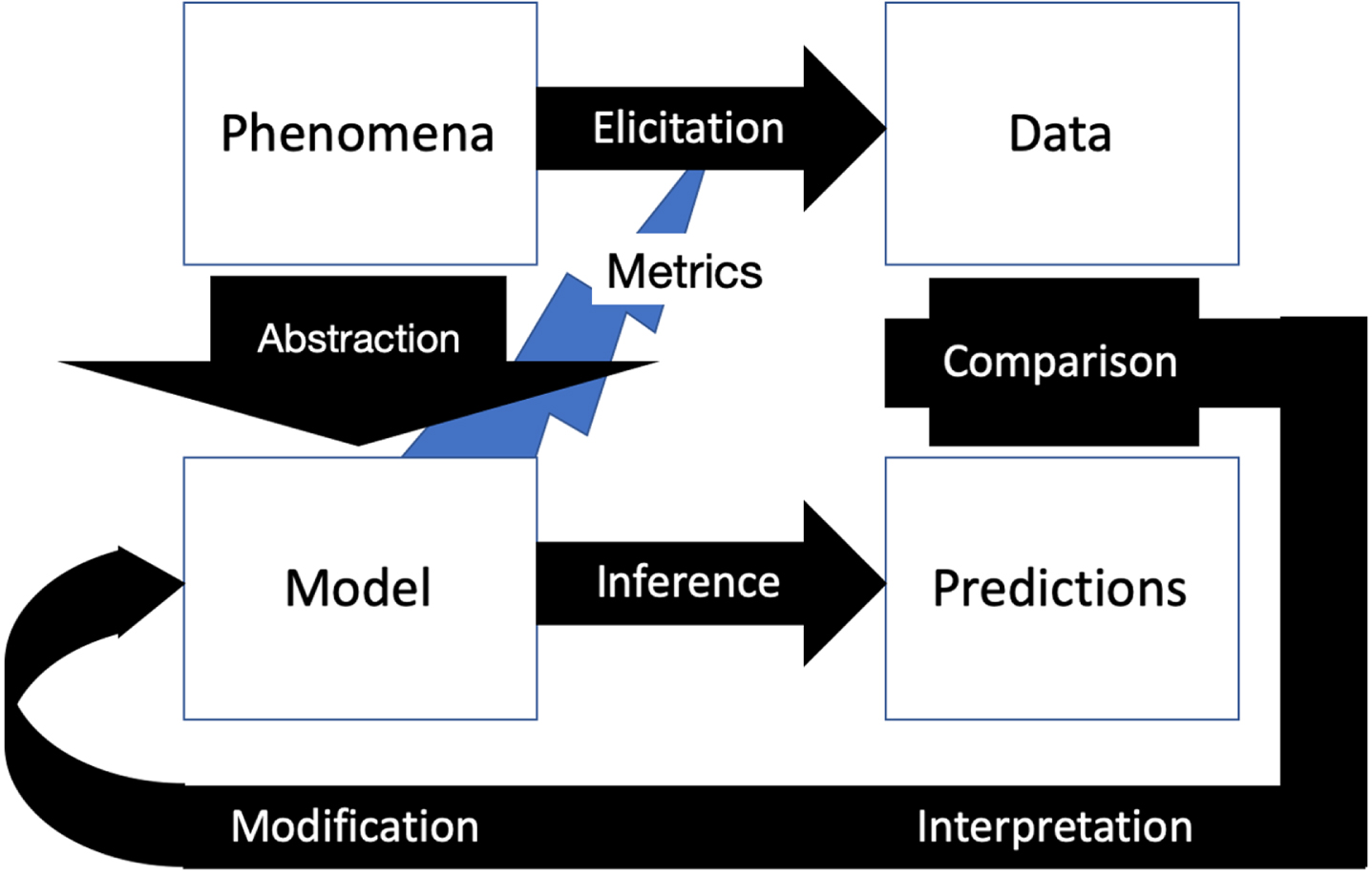

A model is an abstraction of a real-world phenomenon, which is used to increase our understanding of it by evaluating various appropriate metrics. Metrics are associated with performance evaluation platforms for the verification and validation of the relevance and effectiveness of the design of a system [46]. They are necessarily based on relevant models. We generally refer to this approach as the model-based approach (Fig. 7). In the context of HSI, these models are now translated into the form of digital twins, which support model-based HSI [25].

Fig. 7

Modeling method for exploring phenomena.

For example, we are developing this digital twin concept in several domains including operational maintenance of helicopter engines [14] and remote operations of an oil well [47]. In these two application cases, we are working on simulations, machine learning and reasoning systems to facilitate decision making. Individuals involved in such simulations are likely to develop trust or distrust in all or parts of the system being operated and, as a result, develop appropriate strategies to implement. Trust is therefore a major issue in the partnership between humans and machines with autonomous capabilities, especially as trust in systems that are constantly evolving is difficult to rationalize.

Several iterations are necessary to ensure that the model being used is appropriate for the work to be done, and improvable. Without a subject matter expert, the model-based method does not work. In fact, not only do the results need to be evaluated by subject matter experts, but the measurement model itself also needs to be evaluated. In our work, we are looking for appropriate models that could be useful and adaptable in air combat settings, focusing on multi-agent approaches. These models can be symbolic and/or mathematical, allowing the definition of metrics to support the elicitation of qualitative and/or quantitative data. In the MOHICAN project, our primary concerns were trust and collaboration models, which we tried to translate into useful qualitative metrics for HMT.

Trust is a very rich subject that has been explored for a long time in many fields, such as psychology, sociology, human factors, philosophy, economics, and political science. It is related to many factors and processes, such as uncertainty and vulnerability [22], risk taking [48], distributed dynamic teams [49], and team training [50], for example. Trust is intimately related to cooperation and collaboration. This article focuses on a systemic and organizational (i.e., multi-agent) view of trust and collaboration [51, 52].

David Atkinson pointed out that autonomous agents are not tools, but partners with some specific properties that we are using in PRODEC [53]. He postulated that “trust we place in automation today is based on trust gained over many years of developing highly reliable and complex systems.” All of this means that trust in complex systems is based on familiarity. How long does it take to become familiar with an autonomous system? The more familiar you are with a complex sociotechnical system, the more you know how to use it, the more you can trust or distrust its behavior and properties. Becoming familiar with a complex system allows you to know whether it can be trusted or not, whether it is an autonomous system or not. However, getting familiar with such a system can take a long time. So, what method would be useful and efficient to get familiar with a complex system more quickly? Human-in-the-loop simulations are very effective for such familiarization. Human-in-the-loop modeling and simulation tools lead to the concepts and tools of digital twins, which allow a virtual representation of an object or system covering its life cycle (from the first idea to the obsolescence of a system). We are developing this digital twin concept in several domains, including operational maintenance of helicopter engines [14] and remote operations of an oil well [47] using PRODEC. We have found that the discovery of the emergent behavior and properties of trust and distrust during HITLS has helped us redesign and recalibrate the MOHICAN air combat assistant as well as fighter pilot training (i.e., machine and human cognitive functions).

Rephrasing what Klein and his colleagues [54] have already produced in the field of human-robot interaction, here are some concepts that are highly relevant to HMT based on our experience in the MOHICAN project: the more adaptable one is, the less predictable one is; collaboration should be based on the SADMAT model (i.e., Situation Awareness [SA] - Decision Making [DM] - Action Taking [AT]) developed in the MOHICAN project, linking SA, DM and AT [20]; attention management should be done in context and with shared understanding. SADMAT is fully compatible with the OODA loop (which means Observe-Orient-Decide-Act), proposed by John Boyd, military strategist, and colonel in the US Air Force. OODA is a concept commonly applied in combat operational processes [55].

In the multi-agent context of a future air combat system, cooperation and collaboration are important processes to consider. In cooperation, the goal of each agent is the same, but their interests are individual. In contrast, in collaboration, people come together for a common interest and the same goal. In an orchestra, for example, the musicians collaborate to play a symphony [39]. They are coordinated through scores, which have been previously coordinated by a composer, and by a conductor at performance time [39]. Collaboration between humans and increasingly autonomous systems as teammates in uncertain dynamic environments consists of highly interdependent activities. On both the human and machine sides, training for collaboration is a key issue. Driskell and Salas showed experimentally that members of a collectively oriented team are more likely to be attentive to the input of other team members and to improve their performance during team interaction than egocentric members [56]. The question now applies to collaboration between pilots and virtual assistants. Studies by Salas et al. suggest that team training interventions are a viable approach that organizations can take to improve team outcomes [50].

Huang et al. have recently discussed trust as a distributed dynamic feature “that involves all relevant stakeholders and the interactions among these entities” [49]. However, the distributed dynamic team trust (D2T2) framework and the potential measures proposed are not integrated in an articulated method such as PRODEC. Indeed, PRODEC is a scenario-based design method that articulates combined systems of systems procedural and declarative analyses. Despite the typical development of scaled worlds [57–59] and synthetic task environments [50], there is still a strong need for the development of an approach that articulates procedural scenario-based design and declarative homogeneous systemic representation of humans and machines leading to flexible design and operations. This is precisely the objective of the PRODEC method. More concretely, a major question that was raised during the MOHICAN study was, “can FlexTech resources improve shared cognition and trust?”

Using PRODEC in the MOHICAN project enabled us to become familiar with what evaluation of HMT performance could be (Fig. 8), in terms of trust and collaboration, in a combat aircraft cockpit equipped with a virtual assistant (VA). HMT performance can be assessed using the derived multi-agent model by defining high-level meaningful metrics Tk, such as “operational performance,” “trust” and “collaboration.” Operational performance is a generic high-level metric that measures the distance between an expected performance (i.e., prescribed task) and effective performance (i.e., activity). For example, a flight trajectory can be the prescribed task that defines the expected performance, and the actual trajectory resulting from a pilot’s flying activity is the effective performance. When the distance between the two trajectories is lower than an appropriate threshold, the flight is deemed to match the prescribed task. When this distance is higher than the threshold, the discrepancy should be analyzed and understood, and the PRODEC knowledge base generated during the analytical phase helps to find out why. In the air force, for example, operational performance is examined through task achievement, risk management, and resource management.

Fig. 8

Methodology for performance assessment of a multi-agent system.
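The trajectory example can be made concrete with a small sketch. It assumes both trajectories are sampled at the same time steps as (x, y, z) points and uses the mean Euclidean distance between paired points. The article does not specify the distance measure or the threshold, so both are assumptions here, as are the function names and sample values.

```python
import math


def mean_trajectory_distance(expected, actual):
    """Mean Euclidean distance between time-aligned trajectory points."""
    if len(expected) != len(actual) or not expected:
        raise ValueError("trajectories must be non-empty and time-aligned")
    total = sum(math.dist(p, q) for p, q in zip(expected, actual))
    return total / len(expected)


def needs_analysis(expected, actual, threshold):
    """True when the discrepancy exceeds the threshold and should be examined."""
    return mean_trajectory_distance(expected, actual) > threshold


# Hypothetical prescribed and flown trajectories (arbitrary units).
expected_trajectory = [(0.0, 0.0, 1000.0), (1.0, 0.0, 1000.0), (2.0, 0.0, 1000.0)]
actual_trajectory = [(0.0, 0.0, 1000.0), (1.0, 3.0, 1004.0), (2.0, 0.0, 1000.0)]

distance = mean_trajectory_distance(expected_trajectory, actual_trajectory)
```

A mean distance hides short, large excursions; a maximum pointwise distance would be a stricter alternative where safety margins matter.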

{Tk} can be derived from HMT criteria {Cj}, such as usability and explainability on the virtual assistant side, as well as workload and stress on the human side. Therefore, relationships, such as Tk = fk ({Cj}), can be derived based on the domain ontology. One more step is necessary to complete the performance measurement model. We need to get physical and cognitive low-level measures {mi} through methods and tools, such as eye tracking and electrocardiograms (objective measures), as well as subjective scale assessment (subjective measures). Therefore, relationships, such as Cj = gj ({mi}), can be derived based on human-centered design knowledge and experience.
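The three-level structure (measures {mi}, criteria {Cj}, metrics {Tk}) can be sketched as two successive aggregation steps. The article does not give the form of fk and gj, so the weighted mean used below is purely an assumption. The measure names, their values (taken to be normalized between 0 and 1), and all weights are hypothetical.

```python
def aggregate(inputs, weights):
    """Weighted mean of named inputs; a stand-in for an unspecified f_k or g_j."""
    total_weight = sum(weights.values())
    return sum(inputs[name] * weight for name, weight in weights.items()) / total_weight


# Low-level measures m_i (hypothetical, normalized to the range 0..1).
measures = {
    "fixation_ratio": 0.8,       # e.g., derived from eye tracking
    "heart_rate_score": 0.6,     # e.g., derived from an electrocardiogram
    "questionnaire_score": 0.7,  # e.g., a subjective scale
}

# g_j: which measures feed each criterion C_j, and with what weight.
criteria_weights = {
    "workload": {"heart_rate_score": 1.0},
    "usability": {"fixation_ratio": 1.0, "questionnaire_score": 1.0},
}

# f_k: which criteria feed each high-level metric T_k, and with what weight.
metric_weights = {
    "trust": {"workload": 1.0, "usability": 3.0},
}

criteria = {name: aggregate(measures, w) for name, w in criteria_weights.items()}
metrics = {name: aggregate(criteria, w) for name, w in metric_weights.items()}
```

Separating the two mappings mirrors the text: the criteria-to-metric relationships would come from the domain ontology, while the measure-to-criterion relationships would come from human-centered design knowledge, so each can be revised independently between iterations.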

In the MOHICAN project, performance criteria {Cj} and measures {mi} of trust and collaboration were selected based on different characteristics: relevance; validity (meaning and content); measurability; and ease of implementation. All these criteria were then iteratively developed in human-in-the-loop simulations.

Most of the criteria, such as relevance (added value for the pilot), transparency (composed of two sub-criteria: perception of information and comprehension of information), flexibility/adaptability (tolerance to the pilot’s errors and to his actions/manipulations), usability, and lack of discomfort, were measured subjectively using methods such as self-confrontation interviews, post-test interviews and standardized questionnaires. The challenge was to find criteria that could be based on objective measures. The following is a non-exhaustive list of operational performance, trust and collaboration criteria that have been successfully used in MOHICAN:

• Effectiveness criterion, evaluated according to the following measures:

∘ Operational performance measurement by expert pilots. The performance model is based on the analysis of simulator data categorized according to three indicators: task completion (i.e., did the crew successfully complete the assigned mission?); resource consumption (i.e., did the crew meet resource management guidelines, e.g., fuel management, time management?); risk management (i.e., did the crew maintain an appropriate level of safety relative to the level of risk of the mission, e.g., proximity to the adversary?).

∘ Number and type of interactions between the virtual assistant and the pilot for each subtask. We determined whether the information transmitted by the virtual assistant was effectively processed by the pilot by using an eye-tracker (i.e., analysis of the pilot’s actions in the simulator, double checks, verbalization, and so on).

• Efficiency criterion. The measure used was the total time of the interaction between the pilot and the virtual assistant (reaction time, interaction processing in seconds). Raw data provided by the flight simulator was used for this purpose.

• Reliability/Robustness criterion. This criterion was analyzed by identifying potential bugs and/or functional defects of the virtual assistant.

• Situation Awareness/Mental Workload. Measuring teaming performance only makes sense in a specific context. This notion of context is difficult to formalize. We attempted to formalize context iteratively by clustering the various operational sequences (using BPMN), eliciting expert air-combat knowledge, and acquiring and interpreting pilot activity and simulation context data, which enabled the definition of an index evaluating the pilot’s theoretical workload in real time. It is interesting to mention that heart rate measurements (captured during the simulation activity) overlapped very significantly with the data resulting from this index.
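The article does not state how the overlap between heart rate and the workload index was quantified. One common option, shown here as an assumption rather than the method used in MOHICAN, is the Pearson correlation between the two time-aligned series. The sample values are invented for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long numeric series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("series must have the same length (at least 2)")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return covariance / (spread_x * spread_y)


# Hypothetical samples taken at the same instants of one simulation run.
workload_index = [0.2, 0.4, 0.6, 0.8]
heart_rate_bpm = [70, 80, 90, 100]

r = pearson(workload_index, heart_rate_bpm)
```

A coefficient close to 1 would indicate that the two series rise and fall together; it says nothing about causality, and physiological lag between workload and heart rate may call for shifting one series before comparing.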

Using PRODEC in the MOHICAN project enabled us to identify trust/distrust and collaboration emergent behavior and properties during HITLS. This helped us redesign and recalibrate the air combat virtual assistant as well as fighter pilot training (i.e., machine and human cognitive functions).

6 Other work, conclusion and perspectives

Bradshaw and colleagues proposed a teamwork-centered approach to autonomy for extended human-robot interaction in space applications [60]. They advocated an effective balance between machine self-sufficiency and self-determination, with the major concern of mutual understanding of the human and machine agents involved. The systemic interaction models (SIMs) presented in this article encapsulate these concerns [7, 28]. They make it possible to model the ways in which interaction between humans and machines may work.

The current strategy for building a resilient infrastructure is progressing too slowly to keep up with the pace of change, as evidenced by the continuous stream of “shock” events [61]. Walker et al. highlight the need to better anticipate evolving threats and recognize new emerging vulnerabilities in an increasingly interconnected world [62, 63]. Based on a robust systems framework (i.e., cognitive and physical systems representations, scenario-based design, human-in-the-loop simulations, formative assessments, agile development using PRODEC), the proposed approach allows for significant progress in the strategic construction of sociotechnical systems, such as future air combat systems.

A NASA Civil Aviation Committee recently reviewed existing HMT research, identified stakeholder community goals, examined relevant concepts of operation, and defined a framework for establishing a coordinated, comprehensive, and prioritized research plan that would enable future applications in the aviation marketplace [64]. This review effort is intended to provide policy makers, engineers, and researchers with useful guidance for directing and coordinating HMT research activities.

As an extension, HMT research challenges include:

• what kind of human-machine team conceptual models should be further developed and validated; how can collective trust be appropriately built, calibrated, and leveraged to establish the roles of each agent or system, and the proper authority sharing (i.e., who is in charge?). In aerial combat for example, we should talk about human-machine collaboration towards autonomy of sociotechnical systems at the right level of granularity and move away from the idea that a machine with artificial intelligence could be autonomous; ethical questions are at stake (e.g., can we let a machine decide alone on some lethal actions, especially when decisions are based on inputs from AI algorithms such as imagery analysis?);

• how can a system of systems maintain continuity of operations in unexpected, uncertain, unforeseen, and unpredictable situations (continuity is intimately related to resilience)? If, for example, the overall sociotechnical system is partially or totally destroyed, how can its constituents accomplish their own missions autonomously, given that each system in the overall system has its own raison d’être and can therefore be dependent on, independent of, or interdependent with the others? The US Air Force has studied trust in a human-robot team and highlights the need for both independent and interdependent decision making in high-risk dynamic environments [50, 65];

• how to measure and leverage the performance of a human-machine team to enable continuous improvement of system performance; and how to certify partnered human-machine systems. Trust and collaboration metrics presented in this article are valid for HMT in an air combat system; they need to be expanded to other applications, such as oil-and-gas telerobotic systems and online remote maintenance of helicopter engines (work in progress in our institute). Finally, the calibration of trust and collaboration metrics remains a challenge that is currently being addressed and requires more investigation.

These challenges should be viewed as incentives for current and future HMT research plans. For example, HSI of an air combat system can be viewed from two coordinated perspectives to ensure overall consistency, interoperability, and appropriate authority sharing: (1) pilot-centric cockpits to improve the fighter pilot’s working environment (e.g., cockpit design for unmanned aerial vehicle or remote carrier management); (2) C2-centric to improve the overall combat cloud. In both perspectives, the HSI process is implemented by developing scenarios and human-in-the-loop simulations that uncover step-by-step the emerging functions and structures of the systems involved. These emergent properties reflect the ability of systems to work together to achieve the required operational capabilities in an SoS architecture. McNeese et al. provided insights into HMT from the perspective of coordination and collaboration in human-machine teams [66]. However, in real life and considering our HSI approach, industrial human-centered processes are difficult to manage because systems are often developed by different companies. For this reason, it is essential to have a clear common understanding of what the different SoS actors should do and actually do, leading to common HSI principles that result in consistent and coordinated system specifications.

HSI has become possible because human factors and ergonomics can be studied at the earliest stages of engineering design using virtual prototypes to understand human and system activity. Scenario-based design and human-in-the-loop simulations have become mandatory, and the concept of digital twins a reality. Consequently, advanced visualization techniques and tools are needed to increase physical and figurative tangibility [7, 8, 19]. For example, several alternatives of the future combat cloud could be compared virtually and progressively “tangibilized,” to promote the right easily understandable information at the right time and place.

On the collaborative side, people learn from each other, and learning is about working together enough to ensure that teamwork considers the identity of the other partner. Intersubjectivity is a matter of human maturity (maturity of the practices involved), defined by Human Readiness Levels (HRLs) [67, 68], and not just technological maturity, measured by Technology Readiness Levels (TRLs). Indeed, autonomy is a matter of technology, organization, and people maturity (see the TOP [Technologies, Organizations, and People] model presented by Boy [39]). Organizational (or societal) maturity requires the definition of appropriate models leading to organizational readiness levels (ORL) [69].

Finally, we must continue to analyze and better understand the issues of trust and collaboration, never forgetting that any sociotechnical system evolves over time (i.e., functions and structures can be changed, removed and/or added based on experience, changing needs and operational risks). Therefore, the flexibility of the corresponding system of systems must be a constant concern.

Acknowledgment

The authors greatly thank Synapse Defense for their expertise on the MOHICAN project that was supported by the French Directorate General of Armaments (DGA) Man-Machine Teaming (MMT) program managed by Thales and Dassault Aviation.

Conflict of interest

None to report.

References

[1] | Lyons JB , Sycara K , Lewis M , Capiola A . Human–autonomy teaming: Definitions, debates, and directions. Frontiers in Psychology – Organizational Psychology. (2021) . https://doi.org/10.3389/fpsyg.2021.589585. |

[2] | Kanaan M . T-Minus AI – Humanity’s Countdown to Artificial Intelligence and the New Pursuit of Global Power. BenBella Books, Inc., Dallas, Texas, USA, 2020. ISBN 978-1-948836-94-4. |

[3] | NASEM. Human-AI Teaming: State of the Art and Research Needs. National Academies of Sciences, Engineering, and Medicine. Washington, DC, USA: The National Academies Press; 2021. https://doi.org/10.17226/26355 |

[4] | Pappalardo D . Connected aerial collaborative combat, autonomy, and human-machine hybridization: Towards a winged “Centaur Warrior”? In French, «Combat collaboratif aérien connecté, autonomie et hybridation Homme-Machine: Vers un “Guerrier Centaure”ailé?». DSI Journal. (2019) ;139: :70–5. |

[5] | Torkaman J , Batcha AS , Elbaz K , Kiss DM , Doule O , Boy GA . Cognitive Function Analysis for Human Spaceflight Cockpits with Particular Emphasis on Microgravity Operations. Proceedings of AIAA SPACE Long Beach, California; 2016. https://doi.org/10.2514/6.2016-5345 |

[6] | Minsky M . The Society of Mind. New York: Simon & Schuster. ISBN 0-671-60740-5; (1986) . |

[7] | Boy GA . Human Systems Integration: From Virtual to tangible. CRC Taylor & Francis Press, Miami, FL, USA; (2020) . |

[8] | Design for Flexibility - A Human Systems Integration Approach. Springer Nature, Switzerland. ISBN: 978-3-030-76391-6; (2021) . |

[9] | Fong T . Autonomous Systems -- NASA Capability Overview; 2018. Available from: URL: https://www.nasa.gov/sites/default/files/atoms/files/nac_tie_aug2018_tfong_tagged.pdf |

[10] | Parasuraman R , Sheridan TB , Wickens CD . A model for types and levels of human interaction with automation. In: IEEE Transactions on Systems, Man, and Cybernetics – Part A: Systems and Humans. (2000) ;30: (3):286–97. |

[11] | Sheridan TB , Verplank WL . Human and computer control of undersea teleoperators. Technical Report, Man-Machine Systems Laboratory, Department of Mechanical Engineering, Massachusetts Institute of Technology, Cambridge, MA, USA; 1978 |

[12] | Hancock PA . Imposing limits on autonomous systems. Ergonomics. (2017) ;60/2: :284–91. |

[13] | Boy GA , Masson D , Durnerin E , Morel C . Human Systems Integration of Increasingly Autonomous Systems using PRODEC Methodology. FlexTech work-in-progress technical report. Available upon request, [email protected]. |

[14] | Lorente Q , Villeneuve E , Merlo C , Boy GA , Thermy F . Development of a digital twin for collaborative decision-making, based on a multi-agent system: Application to prescriptive maintenance. Proceedings of INCOSE HSI2021 International Conference. Wiley Digital Library; 2021. |

[15] | Norman DA , Stappers PJ . DesignX: Design and complex sociotechnical systems. She Ji: Journal of Design, Economics, and Innovation. (2016) ;1: (2). http://dx.doi.org/10.1016/j.sheji.2016.01.002 |

[16] | Hutchins E . Cognition in the wild. Cambridge, Mass.: MIT Press (2), 1995. ISBN 978-0-262-58146-2. |

[17] | Ferber J . Multi-Agent Systems: An Introduction to Distributed Artificial Intelligence. Addison-Wesley Longman Publishing Co., Inc., Boston, MA, USA., (1999) . |

[18] | Fong T , Steinfeld A , Kaber G , Lewis M , Scholtz J , Schultz A , Goodrich M . Common Metrics for Human-Robot Interaction. HRI’06, Salt Lake City, Utah, USA, (2006) . |

[19] | Boy GA . Tangible Interactive Systems. Springer, UK, (2016) . |

[20] | Boy GA , Grote G . Authority in Increasingly Complex Human and Machine Collaborative Systems: Application to the Future Air Traffic Management Construction. In the Proceedings of the 2009 International Ergonomics Association World Congress, Beijing, China. (2009) . |

[21] | Grudin J . Why CSCW applications fail: Problems in the design and evaluation of organizational interfaces. CSCW ’ Proceedings of the 1988 ACM conference on Computer-supported cooperative work. (1988) , 85–93. https://doi.org/10.1145/62266.62273. O-89791-282-9/88/0085. |

[22] | French B , Duenser A , Heathcote A . Trust in Automation – A Literature Review. CSIRO Report EP184082. CSIRO, Australia; 2018. |

[23] | INCOSE. Systems engineering definition, 2022. Available from: URL https://www.incose.org/about-systems-engineering/system-and-se-definition/systems-engineering-definition |

[24] | INCOSE. Systems Engineering Vision 2035, 2022. Available from: URL: https://www.incose.org/docs/default-source/se-vision/incose-se-vision-2035.pdf?sfvrsn=e32063c7_2 |

[25] | Boy GA . Model-Based Human Systems Integration. In the Handbook of Model-Based Systems Engineering, MadniAM, AugustineN, (Eds.). Springer Nature, Switzerland, (2022) . |

[26] | McDermott T , DeLaurentis D , Beling P , Blackburn M , Bone M . AI4SE and SE4AI: A Research Roadmap. INCOSE Insight. March issue; (2020) . DOI 10.1002/inst.12278. |

[27] | Soudain G , Triboulet F , Leroy A . EASA Concept paper First usable guidance for level 1 Machine Learning Applications. European Union Aviation Safety Agency Technical Report; (2021) . Available from: URL: https://www.easa.europa.eu/downloads/134357/en |

[28] | Boy GA . Cross-Fertilization of Human-Systems Integration and Artificial Intelligence: Looking for Systemic Flexibility. Proceedings of AI4SE 2019, First Workshop on the application of Artificial Intelligence for Systems Engineering. Madrid, Spain; 2019. |

[29] | Minsky M . The Society of Mind. New York: Simon & Schuster. ISBN 0-671-60740-5; (1986) . |

[30] | Scharre P . Army of none: Autonomous weapons and the future of war. First edition. New York: W. W. Norton & Company; (2018) . |

[31] | Pappalardo D . Connected aerial collaborative combat, autonomy, and human-machine hybridization: Towards a winged “Centaur Warrior”? In French, «Combat collaboratif aérien connecté, autonomie et hybridation Homme-Machine: Vers un “Guerrier Centaure” ailé?». DSI Journal. (2019) ;139: :70–5. |

[32] | ISO/IEC 15288. Systems Engineering – system life cycle processes. IEEE Standard, International Organization for Standardization, JTC1/SC7; 2015. |

[33] | de Rosnay J . Le Macroscope, Vers une vision globale. Seuil - Points Essais, Paris; (1977) . |

[34] | Le Moigne JL . La modélisation des systèmes complexes. Dunod, Paris; (1990) . |

[35] | Boy GA . Cognitive Function Analysis. Contemporary Studies in Cognitive Science and Technology Series, Ablex-Praeger Press, USA; (1998) . Available from: URL: https://www.amazon.com/Cognitive-Function-Analysis-Contemporary-Technology/dp/1567503772 |

[36] | Boy GA , Ferro D . Using Cognitive Function Analysis to Prevent Controlled Flight into Terrain. Chapter of the Human Factors and Flight Deck Design, Book. Don Harris (Ed.), Ashgate, UK; (2003) . |

[37] | Roth EM , Sushereba C , Militello LG , Diiulio J , Ernst K . Function allocation considerations in the era of human autonomy teaming. Journal of Cognitive Engineering and Decision Making. (2019) ;13: (4):199–220. DOI: 10.1177/1555343419878038. |

[38] | Fitts PM . Human engineering for an effective air navigation and traffic control system. National Research Council, Washington, DC, USA, (1951) . |

[39] | Boy GA . Orchestrating Human-Centered Design. Springer, UK, (2013) . |

[40] | Boy GA . Dealing with the Unexpected in our Complex Sociotechnical World. Proceedings of the 12th IFAC/IFIP/IFORS/IEA Symposium on Analysis, Design, and Evaluation of Human-Machine Systems. Las Vegas, Nevada, USA; 2013. Also, Chapter in Risk Management in Life-Critical Systems, Millot P, Boy GA, Wiley. |

[41] | Endsley MR . Situation awareness in future autonomous vehicles: Beware the unexpected. Proceedings of the 20th Congress of the International Ergonomics Association. Florence, Italy, Springer Nature, Switzerland, 2018. |

[42] | Endsley MR . Autonomous Horizon: System Autonomy in the Air Force – A Path to the Future. Volume 1: Human-Autonomy Teaming. Technical Report AF/ST TR 15-01; 2015. DOI 10.13140/RG.2.1.1164.2003. |

[43] | Rosson MB , Carroll JM . Scenario-based design. Chapter in Human Computer Interaction, CRC Press, eBook ISBN 9780429139390; 2009. |

[44] | White SA , Bock C . BPMN 2.0 Handbook Second Edition: Methods, Concepts, Case Studies, and Standards in Business Process Management Notation. Future Strategies Inc. ISBN 978-0-9849764-0-9; 2011. |

[45] | Boy GA . The group elicitation method: An introduction. In International Conference on Knowledge Engineering and Knowledge Management, Springer, Berlin, Heidelberg; 1996, pp. 290-305. |

[46] | Damacharla P , Javaid AY , Gallimore JJ , Devabhaktuni VK . Common metrics to benchmark Human-Machine Teams (HMT): A review. IEEE Access. 2018;6:38637-55. DOI: 10.1109/ACCESS.2018.2853560. |

[47] | Camara Dit Pinto S , Masson D , Villeneuve E , Boy GA , Urfels L . From requirements to prototyping: Application of human systems integration methodology to digital twin. In the Proceedings of the International Conference on Engineering Design, ICED; 2021, 16-20 August Gothenburg. |

[48] | Hardin R . Trust. Cambridge, UK: Polity; 2006. |

[49] | Huang L , Cooke NJ , Gutzwiller R , Berman S , Chiou E , Demir M , Zhang W . Distributed Dynamic Team Trust in Human, Artificial Intelligence, and Robot Teaming. Ed. Lyons J, Trust in Human-Robot Interaction. Academic Press, Elsevier; 2020, pp. 301-19. https://doi.org/10.1016/B978-0-12-819472-0.00013-7 |

[50] | Salas E , Cooke NJ , Rosen MA . On teams, teamwork, and team performance: Discoveries and developments. Human Factors. (2008) ;50: (3):540-7. DOI: 10.1518/001872008X288457. |

[51] | Castelfranchi C , Falcone R . Trust is much more than subjective probability: Mental components and sources of trust. In Proceedings of the 33rd Hawaii International Conference on System Sciences, 2000, pp. 1-10. https://doi.org/10.1109/HICSS.2000.926815 |

[52] | Mayer RC , Davis JH , Schoorman FD . An integrative model of organizational trust. The Academy of Management Review. 1995;20:709. https://doi.org/10.2307/258792 |

[53] | Atkinson D . Human-Machine Trust. Invited speech at IHMC (Florida Institute for Human and Machine Cognition), (2012) . |

[54] | Klein G , Woods DD , Bradshaw JM , Hoffman RR , Feltovich PJ . Ten challenges for making automation a “team player” in joint human-agent activity. IEEE Intelligent Systems. (2004) ;91–95. |

[55] | Boyd JR . Destruction and Creation. U.S. Army Command and General Staff College; 1976. |

[56] | Driskell JE , Salas E . Collective Behavior and Team Performance. Human Factors. (1992) ;34: (3):277–88. |

[57] | Cooke N , Shope S . Designing a synthetic task environment. In Schiflett S, Elliott L, Salas E, Coovert D (Eds.), Scaled Worlds: Development, Validation, and Applications. Surrey: Ashgate; (2004) , pp. 263–78. |

[58] | Corral CC , Tatapudi KS , Buchanan V , Huang L , Cooke NJ . Building a synthetic task environment to support artificial social intelligence research. Proceedings of the HFES 65th International Annual Meeting. (2021) ;65: (1):660-4. DOI: 10.1177/1071181321651354a. |

[59] | Schiflett SG , Elliott LR , Coovert MD , Salas E . Scaled Worlds: Development, Validation, and Applications. Ashgate, Surrey, England; 2004. |

[60] | Bradshaw JM , et al. Teamwork-centered autonomy for extended human-agent interaction in space applications. AAAI Spring Symposium Proceedings, Stanford, CA, USA; (2004) , pp. 22–4. |

[61] | Woods DD , Alderson DL . Progress toward Resilient Infrastructures: Are we falling behind the pace of events and changing threats? Journal of Critical Infrastructure Policy. (2021) ;2: (2):Fall / Winter. Strategic Perspectives. |

[62] | Walker GH , Stanton NA , Salmon PM , Jenkins DP . A review of sociotechnical systems theory: A classic concept for command-and-control paradigms. Theoretical Issues in Ergonomics Science. (2008) ;9:479–99. |

[63] | Walker GH , Stanton NA , Salmon PM , Jenkins DP , Rafferty LA . Translating concepts of complexity to the field of ergonomics. Ergonomics. (2010) ;53:1175–86. |

[64] | Holbrook JB , Prinzel LJ , Chancey ET , Shively RJ , Feary MS , Dao QV , Ballin MG , Teubert C . Enabling Urban Air Mobility: Human-Autonomy Teaming Research Challenges and Recommendations. AIAA Aviation Forum (virtual event), (2020) . |

[65] | Schaefer KE , Hill SG , Jentsch FG . Trust in Human-Autonomy Teaming: A Review of Trust Research from the US Army Research Laboratory Robotics Collaborative Technology Alliance. Springer International Publishing AG, part of Springer Nature (outside the USA), Chen J, (Ed.): AHFE 2018, AISC 784; 2019, pp. 102-14. https://doi.org/10.1007/978-3-319-94346-6_10 |

[66] | McNeese NJ , Demir M , Cooke NJ , Myers C . Teaming with a synthetic teammate: Insights into human-autonomy teaming. Human Factors. (2018) ;60:262-73. https://doi.org/10.1177/0018720817743223 |

[67] | Endsley MR . Human readiness levels: Linking S&T to acquisition [Plenary address]. National Defense Industrial Association Human Systems Conference, Alexandria, VA, USA; 2015. Available from: URL: https://ndiastorage.blob.core.usgovcloudapi.net/ndia/2015/human/WedENDSLEY.pdf |

[68] | Salazar G , See JE , Handley HAH , Craft R . Understanding human readiness levels. Proceedings of the Human Factors and Ergonomics Society Annual Meeting. (2021) ;64: (1):1765-9. https://doi.org/10.1177/1071181320641427 |

[69] | Boy GA . Socioergonomics: A few clarifications on the Technology-Organizations-People Tryptic. Proceedings of INCOSE HSI2021 International Conference, INCOSE, San Diego, CA, USA, 2021. |