Neural entity linking: A survey of models based on deep learning

Abstract

This survey presents a comprehensive description of recent neural entity linking (EL) systems developed since 2015 as a result of the “deep learning revolution” in natural language processing. Its goal is to systemize design features of neural entity linking systems and compare their performance to the remarkable classic methods on common benchmarks. This work distills a generic architecture of a neural EL system and discusses its components, such as candidate generation, mention-context encoding, and entity ranking, summarizing prominent methods for each of them. The vast variety of modifications of this general architecture are grouped by several common themes: joint entity mention detection and disambiguation, models for global linking, domain-independent techniques including zero-shot and distant supervision methods, and cross-lingual approaches. Since many neural models take advantage of entity and mention/context embeddings to represent their meaning, this work also overviews prominent entity embedding techniques. Finally, the survey touches on applications of entity linking, focusing on the recently emerged use-case of enhancing deep pre-trained masked language models based on the Transformer architecture.

1.Introduction

Knowledge Graphs (KGs), such as Freebase [14], DBpedia [92], and Wikidata [184], contain rich and precise information about entities of all kinds, such as persons, locations, organizations, movies, and scientific theories, just to name a few. Each entity has a set of carefully defined relations and attributes, e.g. “was born in” or “plays for”. This wealth of structured information gives rise to and facilitates the development of semantic processing algorithms as they can directly operate on and benefit from such entity representations. For instance, imagine a search engine that is able to retrieve mentions in the news during the last month of all retired NBA players with a net income of more than 1 billion US dollars. The list of players together with their income and retirement information may be available in a knowledge graph. Equipped with this information, it appears to be straightforward to look up mentions of retired basketball players in the newswire. However, the main obstacle in this setup is the lexical ambiguity of entities. In the context of this application, one would want to retrieve only the mentions of “Michael Jordan (basketball player)” and exclude mentions of other persons with the same name, such as “Michael Jordan (mathematician)”.

This is why Entity Linking (EL) – the process of matching a mention, e.g. “Michael Jordan”, in a textual context to a KG record (e.g. “basketball player” or “mathematician”) fitting the context – is the key technology enabling various semantic applications. Thus, EL is the task of identifying an entity mention in the (unstructured) text and establishing a link to an entry in a (structured) knowledge graph.

Entity linking is an essential component of many information extraction (IE) and natural language understanding (NLU) pipelines since it resolves the lexical ambiguity of entity mentions and determines their meanings in context. A link between a textual mention and an entity in a knowledge graph also allows us to take advantage of the information encompassed in a semantic graph, which has been shown to be useful in such NLU tasks as information extraction, biomedical text processing, or semantic parsing and question answering (see Section 5). This wide range of direct applications is the reason why entity linking has enjoyed great interest from both academia and industry for more than two decades.

1.1.Goal and scope of this survey

Recently, a new generation of approaches for entity linking based on neural models and deep learning emerged, pushing the state-of-the-art performance in this task to a new level. The goal of our survey is to provide an overview of this latest wave of models, which have emerged since 2015.

Models based on neural networks have managed to excel in EL as in many other natural language processing tasks due to their ability to learn useful distributed semantic representations of linguistic data [11,30,203]. These current state-of-the-art neural entity linking models have shown significant improvements over “classical” machine learning approaches ([27,84,148], to name a few) that are based on shallow architectures, e.g. Support Vector Machines, and/or depend mostly on hand-crafted features. Such models often cannot capture all relevant statistical dependencies and interactions [54]. In contrast, deep neural networks are able to learn sophisticated representations within their deep layered architectures. This reduces the burden of manual feature engineering and enables significant improvements in EL and other tasks.

In this survey, we systemize recently proposed neural models, distilling one generic architecture used by the majority of neural EL models (illustrated in Figs 2 and 5). We describe the models used in each component of this architecture, e.g. candidate generation, mention-context encoding, entity ranking. Prominent variations of this generic architecture, e.g. end-to-end EL or global models, are also discussed. To better structure the sheer amount of available models, various types of methods are illustrated in taxonomies (Figs 3 and 6), while notable features of each model are carefully assembled in a tabular form (Table 2). We discuss the performance of the models on commonly used entity linking/disambiguation benchmarks and an entity relatedness dataset. Because of the sheer amount of work, it was not possible for us to try available software and to compare approaches on further parameters, such as computational complexity, run-time, and memory requirements. Nevertheless, we created a comprehensive collection of references to publicly available official implementations of EL models and systems discussed in this survey (see Table 7 in the Appendix).

An important component of neural entity linking systems is distributed entity representations and entity encoding methods. It has been shown that encoding the KG structure (entity relationships), entity definitions, or word/entity co-occurrence statistics from large textual corpora in low-dimensional vectors improves the generalization capabilities of EL models [54,70]. Therefore, we also summarize distributed entity representation models and novel methods for entity encoding.

Many natural language processing systems take advantage of deep pre-trained language models like ELMo [138], BERT [36], and their modifications. EL made its path into these models as a way of introducing information stored in KGs, which helps to adapt word representations to some text processing tasks. We discuss this novel application of EL and its further development.

1.2.Article collection methodology

We do not have a strict article collection algorithm for the review like, e.g., the one used by Oliveira et al. [130]. Our main goal is to provide and describe a conceptual framework that can be applied to the majority of recently presented neural approaches to EL. Nevertheless, as with all surveys, we had to draw the line somewhere. The main criteria for including papers in this survey were that they had been published during or after 2015 and that they primarily address the task of EL, i.e. resolving textual mentions to entries in KGs, or discuss EL applications. We explicitly exclude related work, e.g. on (fine-grained) entity typing (see [4,28]), which also encompasses a disambiguation task, and work that employs KGs for tasks other than EL. This survey also does not try to cover all EL methods designed for specific domains like biomedical texts or messages in social media. For the general-purpose EL models evaluated on well-established benchmarks, we try to be as comprehensive as possible with respect to recent-enough papers that fit into the conceptual framework, no matter where they have appeared (however, with a focus on top conferences and journals in the fields of natural language processing and Semantic Web).

1.3.Previous surveys

One of the first surveys on EL was prepared by Shen et al. [162] in 2015. They cover the main approaches to entity linking (within the modules, e.g. candidate generation, ranking), its applications, evaluation methods, and future directions. In the same year, Ling et al. [96] presented a work that aims to provide (1) a standard problem definition to reduce the confusion caused by the existence of various similar tasks related to EL (e.g., Wikification [112] and named entity linking [67]), and (2) a clear comparison of models and their various aspects.

There are also other surveys that address a wider scope. The work of Martínez-Rodríguez et al. [106], published in 2020, involves information extraction models and semantic web technologies. Namely, they consider many tasks, like named entity recognition, entity linking, terminology extraction, keyphrase extraction, topic modeling, topic labeling, relation extraction. In a similar vein, the work of Al-Moslmi et al. [3], released in 2020, overviews the research in named entity recognition, named entity disambiguation, and entity linking published between 2014 and 2019.

Another recent survey paper by Oliveira et al. [130], published in 2020, analyses and summarizes EL approaches that exhibit some holism. This viewpoint limits the survey to the works that exploit various peculiarities of the EL task: additional metadata stored in specific input like microblogs, specific features that can be extracted from this input like geographic coordinates in tweets, timestamps, and interests of the users who posted these tweets, and specific disambiguation methods that take advantage of these additional features. In a concurrent work, Möller et al. [113] overview models developed specifically for linking English entities to Wikidata [184] and discuss features of this KG that can be exploited for increasing the linking performance.

Previous surveys on similar topics (a) do not cover many recent publications [96,162], (b) broadly cover numerous topics [3,106], or (c) are focused on the specific types of methods [130] or a knowledge graph [113]. There is not yet, to our knowledge, a detailed survey specifically devoted to recent neural entity linking models. The previous surveys also do not address the topics of entity and context/mention encoding, applications of EL to deep pre-trained language models, and cross-lingual EL. We are also the first to summarize the domain-independent approaches to EL, several of which are based on zero-shot techniques.

1.4.Contributions

More specifically, this article makes the following contributions:

– a survey of state-of-the-art neural entity linking models;

– a systematization of various features of neural EL methods and their evaluation results on popular benchmarks;

– a summary of entity and context/mention embedding techniques;

– a discussion of recent domain-independent (zero-shot) and cross-lingual EL approaches;

– a survey of EL applications to modeling word representations.

The structure of this survey is the following. We start with defining the EL task in Section 2. In Section 3.1, the general architecture of neural entity linking systems is presented. Modifications and variations of this basic pipeline are discussed in Section 3.2. In Section 4, we summarize the performance of EL models on standard benchmarks and present results of the entity relatedness evaluation. Section 5 is dedicated to applications of EL with a focus on recently emerged applications for improving neural language models. Finally, Section 6 concludes the survey and suggests promising directions of future work.

2.Task description

2.1.Informal definition

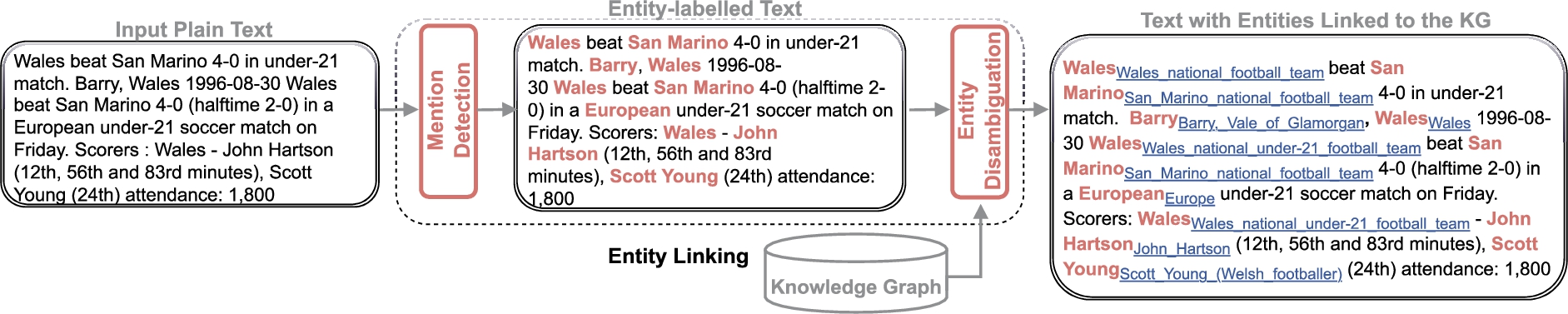

Fig. 1.

The entity linking task. An entity linking (EL) model takes a raw textual input and enriches it with entity mentions linked to nodes in a knowledge graph (KG). The task is commonly split into entity mention detection and entity disambiguation sub-tasks.

Consider the example presented in Fig. 1 with an entity mention Scott Young in a soccer-game-related context. This common name can refer to at least three different people: the American football player, the Welsh football player, or the writer. The EL task is to (1) correctly detect the entity mention in the text and (2) resolve its ambiguity and ultimately provide a link to a corresponding entity entry in a KG, e.g. provide for the Scott Young mention in this context a link to the Welsh footballer instead of the writer. To achieve this goal, the task is usually decomposed into two sub-tasks, as illustrated in Fig. 1: Mention Detection (MD) and Entity Disambiguation (ED).

2.2.Formal definition

2.2.1.Knowledge graph (KG)

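A KG contains entities, relations, and facts, where facts are denoted as triples (i.e. head entity, relation, tail entity) as defined in Ji et al. [77]. Formally, as defined by Färber et al. [45], a KG is a set of RDF triples where each triple $(s, p, o)$ consists of a subject $s$, a predicate $p$, and an object $o$.

This RDF representation can be considered as a multi-relational graph, in which entities are nodes and each triple is an edge labeled with its relation.

There is also an equivalent three-way tensor representation of a KG, in which a binary entry indicates whether a given relation holds between a given pair of entities.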
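The three views of a KG mentioned here (a set of triples, a multi-relational graph, and a three-way tensor) can be illustrated with a minimal Python sketch. The entity and relation names below are toy values chosen for illustration, and the tensor layout (relation, head, tail) is an assumption rather than a convention prescribed by the cited works.

```python
from collections import defaultdict

# Toy facts as (head, relation, tail) triples; all names are illustrative only.
triples = [
    ("Scott_Young_(footballer)", "plays_for", "Cardiff_City"),
    ("Scott_Young_(footballer)", "born_in", "Wales"),
    ("Cardiff_City", "located_in", "Wales"),
]

# Index entities and relations so that they can address tensor cells.
entities = sorted({h for h, _, _ in triples} | {t for _, _, t in triples})
relations = sorted({r for _, r, _ in triples})
ent_id = {e: i for i, e in enumerate(entities)}
rel_id = {r: i for i, r in enumerate(relations)}

# Multi-relational graph view: labeled outgoing edges per node.
graph = defaultdict(list)
for h, r, t in triples:
    graph[h].append((r, t))

# Three-way tensor view (assumed layout): tensor[r][h][t] == 1 iff (h, r, t) is a fact.
n, k = len(entities), len(relations)
tensor = [[[0] * n for _ in range(n)] for _ in range(k)]
for h, r, t in triples:
    tensor[rel_id[r]][ent_id[h]][ent_id[t]] = 1
```

Real KGs are far too sparse for a dense tensor like this one; the nested lists only make the correspondence between the three views explicit.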

2.2.2.Mention detection (MD)

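The goal of mention detection is to identify an entity mention span, while entity disambiguation performs linking of found mentions to entries of a KG. We can consider this task as determining a function that takes a textual context as input and outputs the sequence of entity mentions contained in it.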

In the majority of works on EL, it is assumed that the mentions are already given or detected, for example, using a named entity recognition (NER) system (sometimes called named entity recognition and classification (NERC) [4,119]). We should note that, in addition to MD, NER systems usually also tag/classify mentions with predefined types [95,107,130,181], which can also be leveraged for disambiguation [107].

2.2.3.Entity disambiguation (ED)

The entity disambiguation task can be considered as determining a function that maps each mention, together with its context, to an entity entry in a KG.

To learn a mapping from entity mentions in a context to entity entries in a KG, EL models use supervision signals like manually annotated mention-entity pairs. The size of KGs varies; they can contain hundreds of thousands or even millions of entities. Due to their large size, training data for EL would be extremely unbalanced; training sets can lack even a single example for a particular entity or mention, e.g. as in the popular AIDA corpus [67]. To deal with this problem, EL models should have wide generalization capabilities.

Despite KGs being usually large, they are incomplete. Therefore, some mentions in a text cannot be correctly mapped to any KG entry. Determining such unlinkable mentions is usually designated as linking to a NIL entry.

2.3.Terminological aspects

The same technologies and models are sometimes named differently in the literature. Namely, Wikification [26] and entity disambiguation are considered as subtypes of EL [116]. To be comprehensive in this survey, we assume that the entity linking task encompasses both entity mention detection and entity disambiguation. However, only a few studies suggest models that perform MD and ED jointly, while the majority of papers on EL focus exclusively on ED and assume that mention boundaries are given by an external entity recognizer [152] (which may lead to some terminological confusion). Numerous techniques that perform MD (e.g. in the NER task) without entity disambiguation are considered in many previous surveys [57,95,119,160,193] inter alia and are out of the scope of this work.

Entity linking in the general case is not restricted to linking mentions to graph nodes but rather to concepts in a knowledge base. However, most of the modern widely-used knowledge bases organize information in the form of a graph [14,92,184], even in particular domains, e.g. the scholarly domain [34]. A basic statement in a data/knowledge base usually can be represented as a subject-predicate-object tuple $(s, p, o)$, which corresponds to a labeled edge between two nodes of a graph.

3.Neural entity linking

We start the discussion of neural entity linking approaches with the most general architecture of EL pipelines and continue with various specific modifications like joint entity mention detection and linking, disambiguation techniques that leverage global context, domain-independent EL approaches including zero-shot methods, and cross-lingual models.

3.1.General architecture

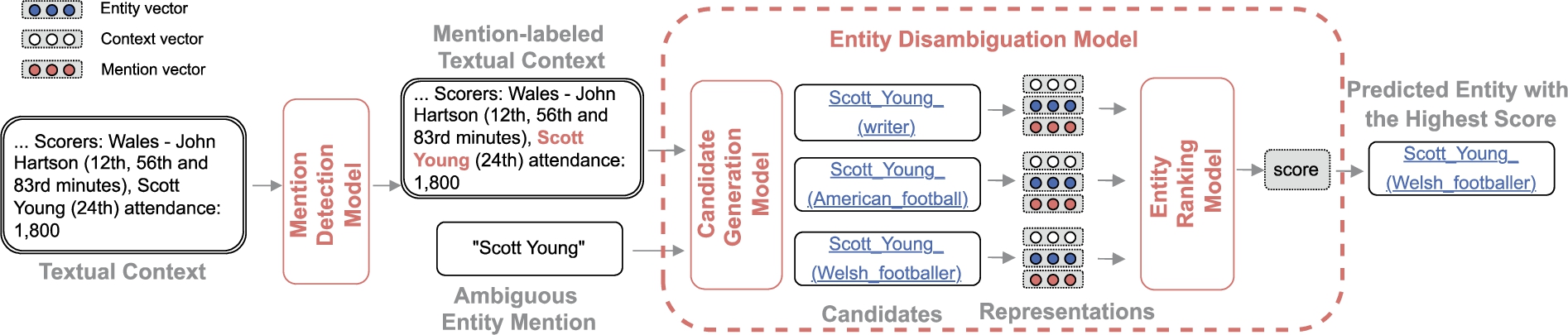

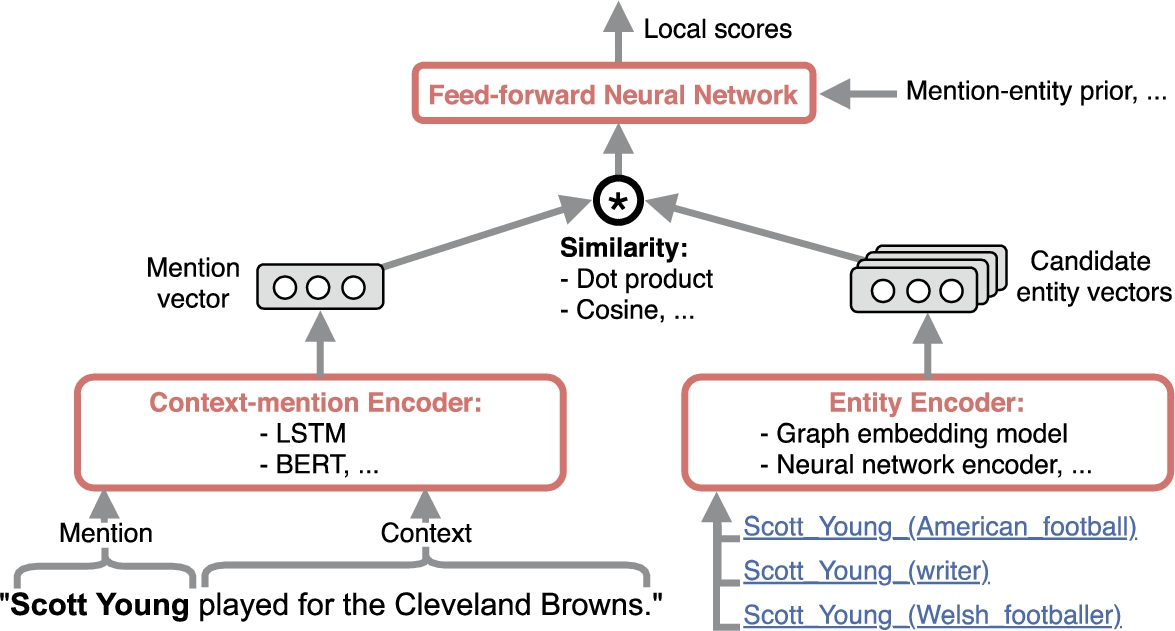

Fig. 2.

General architecture for neural entity linking. Entity linking (EL) consists of two main steps: mention detection (MD), when entity mention boundaries in a text are identified, and entity disambiguation (ED), when a corresponding entity is predicted for the given mention. Entity disambiguation is further carried out in two steps: candidate generation, when possible candidate entities are selected for the mention, and entity ranking, when a correspondence score between context/mention and each candidate is computed through the comparison of their vector representations.

Some neural approaches to EL treat it as a multi-class classification task in which entities correspond to classes. However, this straightforward approach results in a large number of classes, which leads to suboptimal performance without task-sharing [80]. The streamlined approach to EL is to treat it as a ranking problem. We present the generalized EL architecture in Fig. 2, which is applicable to the majority of neural approaches. Here, the mention detection model identifies the mention boundaries in text. The next step is to produce a shortlist of possible entities (candidates) for the mention, e.g. producing Scott_Young_(writer) as a candidate rather than a completely random entity. Then, the mention encoder produces a semantic vector representation of a mention in a context. The entity encoder produces a set of vector representations of candidates. Finally, the entity ranking model compares mention and entity representations and estimates mention-entity correspondence scores. An optional step is to determine unlinkable mentions, for which a KG does not contain a corresponding entity. The categorization of each step in the general neural EL architecture is summarized in Fig. 3.
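The ranking and unlinkable-mention steps of this pipeline can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the vectors are hand-picked toy values rather than outputs of a trained encoder, cosine similarity stands in for whatever scoring function a given model learns, and the `link` function, the `nil_threshold` parameter, and the identifier `Scott_Young_(Welsh_footballer)` are hypothetical names introduced here.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 for zero vectors)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def link(mention_vec, candidates, entity_vecs, nil_threshold=0.5):
    """Rank candidates by similarity to the mention vector.

    Returns (best_entity_or_None, ranking); None plays the role of an
    unlinkable (NIL) prediction when the best score is below the threshold.
    """
    scored = sorted(((cosine(mention_vec, entity_vecs[e]), e) for e in candidates),
                    reverse=True)
    if not scored:
        return None, []
    best_score, best_entity = scored[0]
    ranking = [e for _, e in scored]
    return (best_entity if best_score >= nil_threshold else None), ranking

# Toy entity vectors; a real system would obtain them from an entity encoder.
entity_vecs = {
    "Scott_Young_(Welsh_footballer)": [0.9, 0.1, 0.0],
    "Scott_Young_(American_football)": [0.6, 0.0, 0.4],
    "Scott_Young_(writer)": [0.0, 0.1, 0.9],
}
mention_vec = [1.0, 0.2, 0.0]  # stands in for the encoded mention in context
best, ranking = link(mention_vec, list(entity_vecs), entity_vecs)
```

Thresholding the top score is only one simple way to decide that a mention is unlinkable; it is used here because it needs no extra model components.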

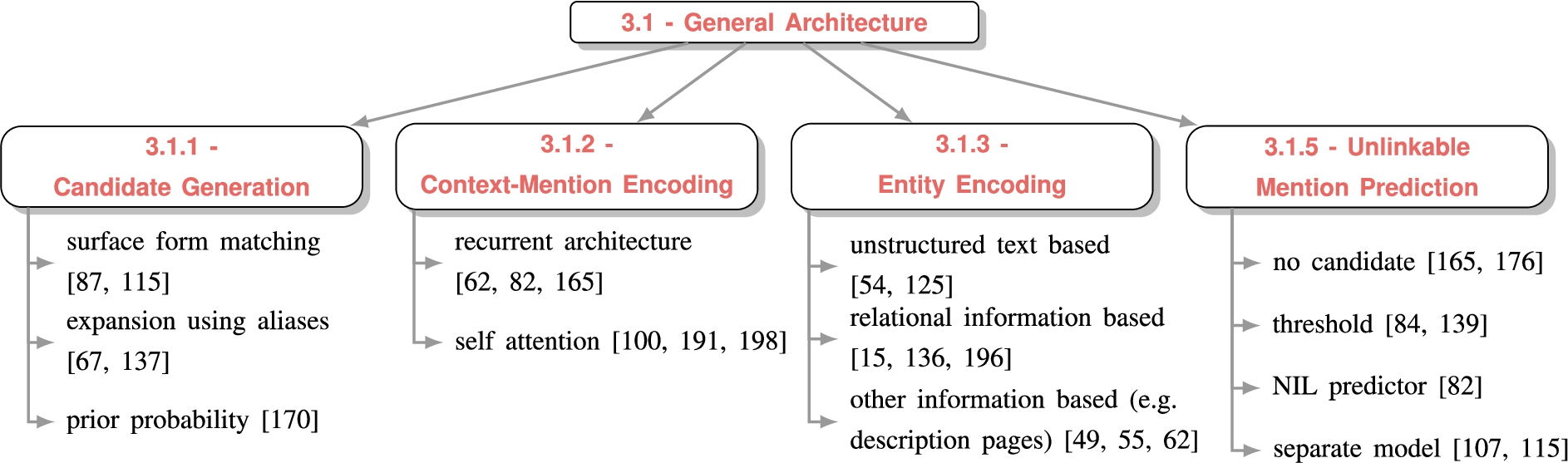

Fig. 3.

Reference map of the general architecture of neural EL systems. The categorization of each step in the general neural EL architecture with alternative design choices and example references illustrating each of the choices.

Table 1

Candidate generation examples. Candidate entities for the example mention “Big Blue” obtained using several candidate generation methods. The highlighted candidates are “correct” entities assuming that the given mention refers to the IBM corporation and not a river, e.g. Big_Blue_River_(Kansas)

| Method | 5 candidate entities for the example mention “Big Blue” |
| --- | --- |
| surface form matching based on DBpedia names | Big_Blue_Trail, Big_Bluegrass, Big_Blue_Spring_cave_crayfish, Dexter_Bexley_and_the_Big_Blue_Beastie, IBM_Big_Blue_(X-League) |
| expansion using aliases from YAGO-means | Big_Blue_River_(Indiana), Big_Blue_River_(Kansas), Big_Blue_(crane), Big_Red_(drink), **IBM** |
| probability + expansion using aliases from [54]: Anchor prob. + CrossWikis + YAGO | **IBM**, Big_Blue_River_(Kansas), The_Big_Blue, Big_Blue_River_(Indiana), Big_Blue_(crane) |

3.1.1.Candidate generation

An essential part of EL is candidate generation. The goal of this step is, given an ambiguous entity mention such as “Scott Young”, to provide a list of its possible “senses” as specified by entities in a KG. EL is analogous to the Word Sense Disambiguation (WSD) task [116,121] as it also resolves lexical ambiguity. Yet in WSD, each sense of a word can be clearly defined by WordNet [46], while in EL, KGs do not provide such an exact mapping between mentions and entities [22,116,121]. Therefore, a mention potentially can be linked to any entity in a KG, resulting in a large search space, e.g. “Big Blue” referring to IBM. In the candidate generation step, this issue is addressed by performing effective preliminary filtering of the entity list.

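Formally, given a mention $m$, a candidate generator provides a list of $k$ probable entities $e_1, e_2, \dots, e_k$ from the KG.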

Similar to [3,162], we distinguish three common candidate generation methods in neural EL: (1) based on surface form matching, (2) based on expansion with aliases, and (3) based on a prior matching probability computation. In the first approach, a candidate list is composed of entities that match various surface forms of mentions in the text [87,115,211]. There are many heuristics for the generation of mention forms and matching criteria like the Levenshtein distance, n-grams, and normalization. For the example mention of “Big Blue”, this approach would not work well, as the referent entity “IBM” or its long-form “International Business Machines” does not contain the mention string. Examples of candidate entity sets are presented in Table 1, where we searched for the mention “Big Blue” in the titles of all Wikipedia articles present in DBpedia and present 5 random matches.

In the second approach, a dictionary of additional aliases is constructed using KG metadata like disambiguation/redirect pages of Wikipedia [43,211] or using a dictionary of aliases and/or synonyms (e.g. “NYC” stands for “New York City”). This helps to improve the candidate generation recall as the surface form matching usually cannot catch such cases. Pershina et al. [137] expand the given mention to the longest mention in a context found using coreference resolution. Then, an entity is selected as a candidate if its title matches the longest version of the mention, or it is present in disambiguation/redirect pages of this mention. This resource is used in many EL models, e.g. [20,107,125,131,144,165,196]. Another well-known alternative is YAGO [171] – an ontology automatically constructed from Wikipedia and WordNet. Among many other relations, it provides “means” relations, and this mapping is utilized for candidate generation like in [54,67,158,165,196]. In this technique, the external information would help to disambiguate “Big Blue” as “IBM”. Table 1 shows examples of candidates generated with the help of the YAGO-means candidate mapping dataset used in Hoffart et al. [67].

The third approach to candidate generation is based on pre-calculated prior probabilities of correspondence between certain mentions and entities, $p(e \mid m)$.

It is common to apply multiple approaches to candidate generation at once. For example, the resource constructed by Ganea and Hofmann [54] and used in many other EL methods [82,86,139,159,198] relies on prior probabilities obtained from entity hyperlink count statistics of CrossWikis [170] and Wikipedia, as well as on entity aliases obtained from the “means” relationship of the YAGO ontology [67]. The illustrative mention “Big Blue” can be linked to its referent entity “IBM” with this method, as shown in Table 1. As another example, Fang et al. [44] utilize surface form matching and aliases. They share candidates between abbreviations and their expanded versions in the local context. The aliases are obtained from Wikipedia redirect and disambiguation pages, the Wikipedia search engine, and synonyms from WordNet [46]. Additionally, they submit mentions that are misspelled or contain multiple words to Wikipedia and Google search engines and search for the corresponding Wikipedia articles. It is worth noting that some works also employ a candidate pruning step to reduce the number of candidates.
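The three candidate generation strategies and their combination can be sketched as follows. This is a minimal illustration, not the procedure of any cited system: the function names, the substring matching criterion, the alias dictionary, and the anchor counts are all assumptions made for the example, and real resources such as CrossWikis or YAGO-means are replaced by tiny hand-written stand-ins.

```python
from collections import Counter, defaultdict

def normalize(text):
    """Lowercase and map underscores to spaces so titles and mentions compare."""
    return " ".join(text.replace("_", " ").lower().split())

def surface_match(mention, titles):
    """(1) Keep entity titles that contain the normalized mention string."""
    m = normalize(mention)
    return {t for t in titles if m in normalize(t)}

def alias_expand(mention, alias_dict):
    """(2) Look the mention up in a dictionary of known aliases."""
    return set(alias_dict.get(normalize(mention), ()))

def build_priors(anchor_pairs):
    """(3) Estimate p(entity | mention) from (anchor text, link target) counts."""
    counts = defaultdict(Counter)
    for anchor, entity in anchor_pairs:
        counts[normalize(anchor)][entity] += 1
    return {m: {e: c / sum(ctr.values()) for e, c in ctr.items()}
            for m, ctr in counts.items()}

def generate_candidates(mention, titles, alias_dict, priors, k=5):
    """Union the three sources, then keep the k candidates with the highest prior."""
    p = priors.get(normalize(mention), {})
    pool = surface_match(mention, titles) | alias_expand(mention, alias_dict) | set(p)
    return sorted(pool, key=lambda e: (-p.get(e, 0.0), e))[:k]

# Toy stand-ins for KG titles, an alias resource, and hyperlink statistics.
titles = ["Big_Blue_Trail", "Big_Blue_River_(Kansas)", "IBM", "The_Big_Blue"]
alias_dict = {"big blue": ["IBM", "Big_Blue_River_(Kansas)"]}
priors = build_priors([("Big Blue", "IBM")] * 3 + [("Big Blue", "The_Big_Blue")])
candidates = generate_candidates("Big Blue", titles, alias_dict, priors, k=3)
```

In this toy setting, surface form matching alone cannot reach “IBM”, whereas the alias dictionary and the prior both contribute it, which mirrors the behaviour shown in Table 1.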

Recent zero-shot models [55,100,191] perform candidate generation without external resources. Section 3.2.3 describes them in detail.

3.1.2.Context-mention encoding

To correctly disambiguate an entity mention, it is crucial to thoroughly capture the information from its context. The current mainstream approach is to construct a dense contextualized vector representation of a mention.

Several early techniques in neural EL utilize a convolutional encoder [49,127,169,172], as well as attention between candidate entity embeddings and embeddings of words surrounding a mention [54,86]. However, in recent models, two approaches prevail: recurrent networks and self-attention [182].

A recurrent architecture with LSTM cells [66], which has been a backbone model for many NLP applications, is adopted for EL in [43,62,82,87,107,129,165] inter alia. Gupta et al. [62] concatenate outputs of two LSTM networks that independently encode left and right contexts of a mention (including the mention itself). In the same vein, Sil et al. [165] encode left and right local contexts via LSTMs but also pool the results across all mentions in a coreference chain and postprocess left and right representations with a tensor network. GRU [29], a modification of LSTM, is used by Eshel et al. [40] in conjunction with an attention mechanism [7] to encode left and right context of a mention. Kolitsas et al. [82] represent an entity mention as a combination of LSTM hidden states included in the mention span. Le and Titov [87] simply run a bidirectional LSTM network on words complemented with embeddings of word positions relative to a target mention. Shahbazi et al. [159] adopt pre-trained ELMo [138] for mention encoding by averaging mention word vectors.
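The input preparation behind two of these encoders can be sketched without any neural network code. The snippet below only shows how left and right contexts that include the mention, and word positions relative to the mention, might be derived from a token list; the function names and the exact position numbering are assumptions made for illustration, and the recurrent encoders themselves are omitted.

```python
def left_right_contexts(tokens, start, end):
    """Split a sentence into left and right contexts that both include the
    mention tokens[start:end], as inputs for two separate recurrent encoders."""
    return tokens[:end], tokens[start:]

def relative_positions(n_tokens, start, end):
    """Signed distance of every token to the mention span (0 inside the span)."""
    return [i - start if i < start else (0 if i < end else i - end + 1)
            for i in range(n_tokens)]

tokens = "Yesterday Scott Young scored for Cardiff".split()
left, right = left_right_contexts(tokens, 1, 3)
positions = relative_positions(len(tokens), 1, 3)
```

The position indices would typically be mapped to learned position embeddings and combined with the word embeddings before encoding.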

Encoding methods based on self-attention have recently become ubiquitous. The EL models presented in [25,100,139,191,198] and others rely on the outputs from pre-trained BERT layers [36] for context and mention encoding. In Peters et al. [139], a mention representation is modeled by pooling over word pieces in a mention span. The authors also put an additional self-attention block over all mention representations that encode interactions between several entities in a sentence. Another approach to modeling mentions is to insert special tags around them and perform a reduction of the whole encoded sequence. Wu et al. [191] reduce a sequence by keeping the representation of the special pooling symbol ‘[CLS]’ inserted at the beginning of a sequence. Logeswaran et al. [100] mark positions of a mention span by summing embeddings of words within the span with a special vector and using the same reduction strategy as Wu et al. [191]. Yamada et al. [198] concatenate text with all mentions in it and jointly encode this sequence via a self-attention model based on pre-trained BERT. In addition to the simple attention-based encoder of Ganea and Hofmann [54], Chen et al. [25] leverage BERT for capturing type similarity between a mention and an entity candidate. They replace mention tokens with a special “[MASK]” token and extract the embedding generated for this token by BERT. A corresponding entity representation is generated by averaging multiple embeddings of mentions.
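Two of the input formats described above, marking a mention with special tags and replacing it with a mask token, can be illustrated at the token level. This is a sketch under assumptions: the tag strings `[M_START]` and `[M_END]` are hypothetical placeholders, replacing the whole span with a single “[MASK]” is one possible reading of the masking variant, and subword tokenization and the encoder itself are left out.

```python
def _check_span(tokens, start, end):
    if not 0 <= start < end <= len(tokens):
        raise ValueError("mention span must lie inside the token list")

def mark_mention(tokens, start, end, open_tag="[M_START]", close_tag="[M_END]"):
    """Wrap tokens[start:end] in boundary tags and prepend the pooling symbol,
    whose output representation would then serve as the mention encoding."""
    _check_span(tokens, start, end)
    return (["[CLS]"] + tokens[:start] + [open_tag] + tokens[start:end]
            + [close_tag] + tokens[end:] + ["[SEP]"])

def mask_mention(tokens, start, end):
    """Replace the mention span with one [MASK] token, whose output embedding
    would then be extracted (assumed reading of the masking variant)."""
    _check_span(tokens, start, end)
    return ["[CLS]"] + tokens[:start] + ["[MASK]"] + tokens[end:] + ["[SEP]"]

tokens = "Scott Young scored for Cardiff".split()
tagged = mark_mention(tokens, 0, 2)
masked = mask_mention(tokens, 0, 2)
```

With a real pre-trained model, any newly introduced tag strings would also have to be registered as special tokens in its vocabulary.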

Fig. 4.

Visualization of entity embeddings. Entity embedding space for entities related to the ambiguous entity mention “Scott Young”. Three candidate entities from Wikipedia are illustrated. For each entity, its 5 most similar entities are shown in the same color. Entity embeddings are visualized with PCA, which is utilized to reduce dimensionality (in this example, to 2D), using pre-trained embeddings provided by Yamada et al. [194].

![Fig. 4](https://content.iospress.com:443/media/sw/2022/13-3/sw-13-3-sw222986/sw-13-sw222986-g004.jpg)

3.1.3.Entity encoding

To make EL systems robust, it is essential to construct distributed vector representations of entity candidates in such a way that they capture semantic relatedness between entities.

For instance, in Fig. 4, the most similar entities for Scott Young in the Scott_Young_(American_football) sense are related to American football, whereas the Scott_Young_(writer) sense is in the proximity of writer-related entities.

There are three common approaches to entity encoding in EL: (1) entity representations learned using unstructured texts and algorithms like word2vec [111] based on co-occurrence statistics and developed originally for embedding words; (2) entity representations constructed using relations between entities in KGs and various graph embedding methods; (3) training a full-fledged neural encoder to convert textual descriptions of entities and/or other information into embeddings.

In the first category, Ganea and Hofmann [54] collect entity-word co-occurrence statistics from two sources: entity description pages from Wikipedia and text surrounding anchors of hyperlinks to Wikipedia pages of corresponding entities. They train entity embeddings using the max-margin objective that exploits the negative sampling approach like in the word2vec model, so vectors of co-occurring words and entities lie closer to each other compared to vectors of random words and entities. Some other methods directly replace or extend mention annotations (usually anchor text of a hyperlink) with an entity identifier and straightforwardly train a word representation model like word2vec on the modified corpus [115,176,197,210,211]. In [54,115,125,176], entity embeddings are trained in such a way that entities become embedded in the same semantic space as words (or texts, i.e. sentences and paragraphs [197]). For example, Newman-Griffis et al. [125] propose a distantly-supervised method that expands the word2vec objective to jointly learn word and entity representations in the shared space. The authors leverage distant supervision from terminologies that map entities to their surface forms (e.g. Wikipedia page titles and redirects or terminology from UMLS [12]).
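The corpus rewriting step used by the methods that replace or extend mention annotations with entity identifiers can be sketched as below. The function name, the `ENTITY/` prefix, and the span format are assumptions for illustration; the rewritten token lists would then be passed to an off-the-shelf word2vec implementation, which is not shown.

```python
def entity_corpus(tokens, annotations, mode="replace", prefix="ENTITY/"):
    """Rewrite a token list so annotated mention spans carry entity identifiers.

    annotations: non-overlapping (start, end, entity) spans over tokens.
    mode="replace" substitutes the span with the identifier;
    mode="extend" keeps the original words and appends the identifier.
    """
    out, pos = [], 0
    for start, end, entity in sorted(annotations):
        out.extend(tokens[pos:start])
        if mode == "extend":
            out.extend(tokens[start:end])
        out.append(prefix + entity)
        pos = end
    out.extend(tokens[pos:])
    return out

tokens = "Big Blue released a new mainframe".split()
replaced = entity_corpus(tokens, [(0, 2, "IBM")])
extended = entity_corpus(tokens, [(0, 2, "IBM")], mode="extend")
```

Because entity identifiers become ordinary vocabulary items, words and entities end up in the same embedding space without any change to the training objective.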

In the second category of entity encoding methods that use relations between entities in a KG, Huang et al. [70] train a model that generates dense entity representations from sparse entity features (e.g. entity relations, descriptions) based on the entity relatedness. Several works expand their entity relatedness objective with functions that align words (or mentions) and entities in a unified vector space [20,42,144,163,194,196], just like the methods from the first category. For example, Yamada et al. [196] jointly optimize three objectives to learn word and entity representations: prediction of neighbor words for the given target word, prediction of neighbor entities for the target entity based on the relationships in a KG, and prediction of neighbor words for the given entity.

Recently, knowledge graph embedding has become a prominent technique and facilitated solving various NLP and data mining tasks [187] from KG completion [15,123,189] to entity classification [128]. For entity linking, two major graph embedding algorithms are widely adopted: DeepWalk [136] and TransE [15].

The goal of the DeepWalk [136] algorithm is to produce embeddings of vertices that preserve their proximity in a graph [58]. It first generates several random walks for each vertex in a graph. The generated walks are used as training data for the skip-gram algorithm. Like in word2vec for language modeling, given a vertex, the algorithm maximizes the probabilities of its neighbors in the generated walks. Parravicini et al. [135] and Sevgili et al. [157] leverage DeepWalk-based graph embeddings built from DBpedia [92] for entity linking. Parravicini et al. [135] use entity embeddings to compute cosine similarity scores of candidate entities in global entity linking. Sevgili et al. [157] show that combining graph and text-based embeddings can slightly improve the performance of neural entity disambiguation when compared to using only text-based embeddings.
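The data generation part of DeepWalk, random walks followed by skip-gram style (vertex, neighbor-in-walk) pairs, can be sketched as follows. This is a simplified illustration: the uniform walks, the parameter names and defaults, and the toy graph are assumptions, and the actual embedding training on the resulting pairs is omitted.

```python
import random

def random_walks(graph, walks_per_node=2, walk_length=4, seed=0):
    """Generate uniform random walks starting from every vertex.

    graph: dict mapping each vertex to a list of its neighbors.
    """
    rng = random.Random(seed)
    walks = []
    for _ in range(walks_per_node):
        nodes = list(graph)
        rng.shuffle(nodes)  # visit start vertices in random order on each pass
        for node in nodes:
            walk = [node]
            while len(walk) < walk_length:
                neighbors = graph.get(walk[-1], [])
                if not neighbors:  # dead end: stop this walk early
                    break
                walk.append(rng.choice(neighbors))
            walks.append(walk)
    return walks

def skipgram_pairs(walks, window=2):
    """Turn walks into (center, context) pairs, as skip-gram does for sentences."""
    pairs = []
    for walk in walks:
        for i, center in enumerate(walk):
            lo, hi = max(0, i - window), min(len(walk), i + window + 1)
            pairs.extend((center, walk[j]) for j in range(lo, hi) if j != i)
    return pairs

# Toy undirected graph given as adjacency lists.
graph = {"a": ["b", "c"], "b": ["a", "c"], "c": ["a", "b", "d"], "d": ["c"]}
walks = random_walks(graph)
pairs = skipgram_pairs(walks)
```

Treating walks as sentences is what allows a standard skip-gram trainer to be reused on graph data unchanged.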

The goal of the TransE [15] algorithm is to construct embeddings of both vertices and relations in such a way that they are compatible with the facts in a KG [187]. Suppose that the facts in a KG are represented in the form of triples (i.e. head entity, relation, tail entity). If a fact is contained in a KG, the TransE margin-based ranking criterion encourages the following correspondence between embeddings: $\mathbf{head} + \mathbf{relation} \approx \mathbf{tail}$.
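TransE is commonly summarized as requiring that the head embedding translated by the relation embedding lands near the tail embedding. A minimal sketch of the corresponding distance and margin-based ranking loss is given below; the Euclidean distance, the margin value, and the toy two-dimensional vectors are assumptions for illustration, and negative sampling and gradient updates are omitted.

```python
import math

def transe_distance(h, r, t):
    """Euclidean distance between h + r and t; small for plausible facts."""
    return math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))

def margin_loss(pos, neg, margin=1.0):
    """Hinge loss: a true triple should be closer than a corrupted one by `margin`."""
    return max(0.0, margin + transe_distance(*pos) - transe_distance(*neg))

# Toy embeddings: the true tail equals head + relation, the corrupted tail is far away.
h, r = [1.0, 0.0], [0.0, 1.0]
t_true, t_corrupt = [1.0, 1.0], [4.0, 5.0]
loss_ok = margin_loss((h, r, t_true), (h, r, t_corrupt))   # margin already satisfied
loss_bad = margin_loss((h, r, t_corrupt), (h, r, t_true))  # ranking is violated
```

The loss is zero once the true triple beats the corrupted one by at least the margin, so training only moves embeddings that still violate the ranking.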

There are many other techniques for KG embedding: [35,59,128,175,189,199] inter alia and the very recent 5*E [122], which is designed to preserve complex graph structures in the embedding space. However, they are not yet widely used in entity linking. A detailed overview of all graph embedding algorithms is out of the scope of the current work. We refer the reader to the previous surveys on this topic [18,58,154,187] and consider the integration of novel KG embedding techniques in EL models a promising research direction.

In the last category, we place methods that produce entity representations using other types of information like entity descriptions and entity types. Often, an entity encoder is a full-fledged neural network, which is a part of an entity linking architecture. Sun et al. [172] use a neural tensor network to encode interactions between surface forms of entities and their category information from a KG. In the same vein, Francis-Landau et al. [49] and Nguyen et al. [127] construct entity representations by encoding titles and entity description pages with convolutional neural networks. In addition to a convolutional encoder for entity descriptions, Gupta et al. [62] also include an encoder for fine-grained entity types by using the type set of FIGER [97]. Gillick et al. [55] construct entity representations by encoding entity page titles, short entity descriptions, and entity category information with feed-forward networks. Le and Titov [87] use only entity type information from a KG and a simple feed-forward network for entity encoding. Hou et al. [69] also leverage entity types. However, instead of relying on existing type sets like in [62], they construct custom fine-grained semantic types using words from starting sentences of Wikipedia pages. To represent entities, they first average the word vectors of entity types and then linearly aggregate them with embeddings of Ganea and Hofmann [54].

Recent works leverage deep language models like BERT [36] or ELMo [138] for encoding entities. Nie et al. [129] use an architecture based on a recurrent network for obtaining entity representations from Wikipedia entity description pages. Subsequently, several models adopt BERT for the same purpose [100,191] inter alia. Yamada et al. [198] propose a masked entity prediction task, where a model based on the BERT architecture learns to predict randomly masked input entities. This task makes the model learn also how to generate entity representations along with standard word representations. Shahbazi et al. [159] introduce E-ELMo that extends the ELMo model [138] with an additional objective. The model is trained in a multi-task fashion: to predict next/previous words, as in a standard bidirectional language model, and to predict the target entity when encountering its mentions. As a result, besides the model for mention encoding, entity representations are obtained. Mulang’ et al. [118] use bidirectional Transformers to jointly encode context of a mention, a candidate entity name, and multiple relationships of a candidate entity from a KG verbalized into textual triples: “[subject] [predicate] [object]”. The input sequence of the encoder is composed simply by appending all these types of information delimited by a special separator token.

Fig. 5.

Entity ranking. A generalized entity candidate ranking neural architecture: entity candidates are ranked according to their appropriateness for a particular mention in the current context.

3.1.4.Entity ranking

The goal of this stage is given a list of entity candidates

The mention representation

Most of the state-of-the-art studies compute similarity

The final disambiguation decision is inferred via a probability distribution

There are several approaches to framing a training objective in the literature on EL. Consider that we have k candidates for the target mention m, one of which is a true entity

Instead of the negative log-likelihood, some works use variants of a ranking loss. The idea behind such an approach is to enforce a positive margin

3.1.5.Unlinkable mention prediction

The referent entities of some mentions can be absent in the KGs, e.g. there is no Wikipedia entry about Scott Young as a cricket player of the Stenhousemuir cricket club. Therefore, an EL system should be able to predict the absence of a reference if a mention appears in specific contexts, which is known as the NIL prediction task.

The NIL prediction task is essentially a classification with a reject option [51,64,65]. There are four common ways to perform NIL prediction. Sometimes a candidate generator does not yield any corresponding entities for a mention; such mentions are trivially considered unlinkable [165,176]. One can set a threshold for the best linking probability (or a score), below which a mention is considered unlinkable [84,139]. Some models introduce an additional special “NIL” entity in the ranking phase, so models can predict it as the best match for the mention [82]. It is also possible to train an additional binary classifier that accepts mention-entity pairs after the ranking phase, as well as several additional features (best linking score, whether mentions are also detected by a dedicated NER system, etc.), as input and makes the final decision about whether a mention is linkable or not [107,115].
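The first three strategies (empty candidate list, score threshold, and a special “NIL” candidate) can be sketched in a few lines. This is an illustration under stated assumptions: the softmax normalization, the threshold value, the function names, and the way the NIL score is supplied are all hypothetical and not taken from any specific system.

```python
import math

NIL = "NIL"


def softmax(scores):
    """Convert raw ranking scores into a probability distribution."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def link_with_threshold(candidates, scores, threshold=0.5):
    """Return the best candidate, or NIL if there are no candidates
    or the best linking probability is below `threshold`."""
    if not candidates:  # the candidate generator yielded nothing
        return NIL
    probs = softmax(scores)
    best = max(range(len(candidates)), key=probs.__getitem__)
    return candidates[best] if probs[best] >= threshold else NIL


def link_with_nil_candidate(candidates, scores, nil_score):
    """Rank a special NIL entity together with the real candidates."""
    all_candidates = list(candidates) + [NIL]
    all_scores = list(scores) + [nil_score]
    best = max(range(len(all_candidates)), key=all_scores.__getitem__)
    return all_candidates[best]


candidates = ["Scott_Young_(footballer)", "Scott_Young_(writer)"]
confident = link_with_threshold(candidates, [5.0, 0.0])
rejected = link_with_threshold(candidates, [0.1, 0.0], threshold=0.9)
nil_wins = link_with_nil_candidate(candidates, [0.2, 0.1], nil_score=1.0)
```

The fourth strategy, a separate binary classifier over mention-entity pairs and extra features, would replace the fixed threshold with a learned decision function.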

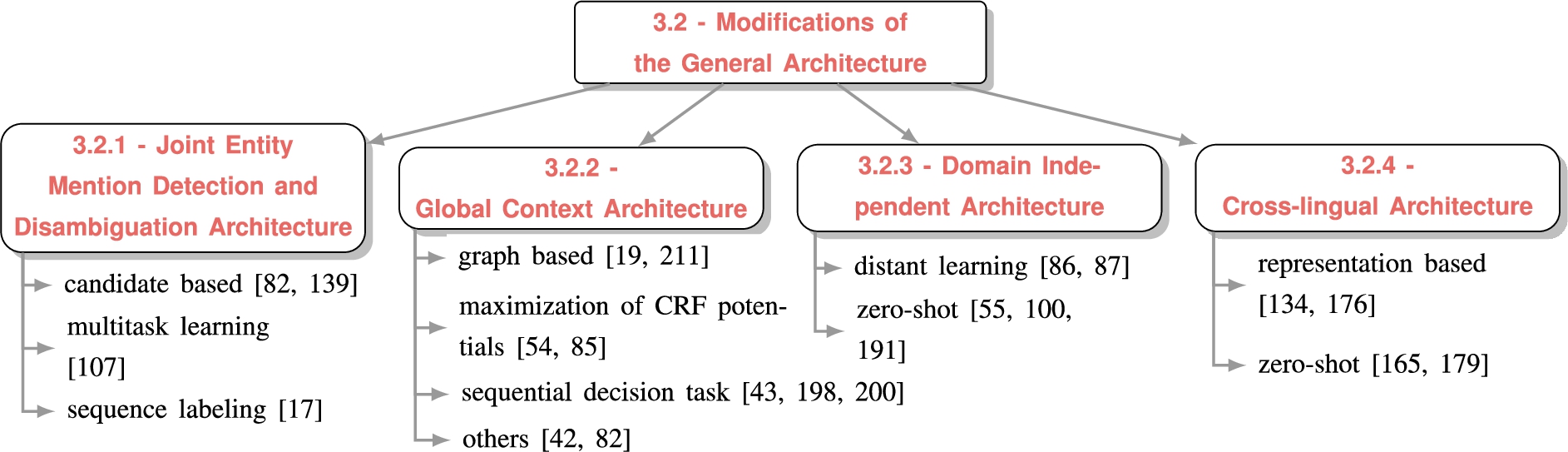

3.2.Modifications of the general architecture

This section presents the most notable modifications and improvements of the general architecture of neural entity linking models presented in Section 3.1 and Figs 2 and 5. The categorization of each modification is summarized in Fig. 6.

3.2.1.Joint entity mention detection and disambiguation

While it is common to separate the mention detection (cf. Equation (2)) and entity disambiguation stages (cf. Equation (3)), as illustrated in Fig. 1, a few systems provide joint solutions for entity linking where entity mention detection and disambiguation are done at the same time by the same model. Formally, the task becomes to detect a mention

Undoubtedly, solving these two problems simultaneously makes the task more challenging. However, the interaction between these steps can be beneficial for improving the quality of the overall pipeline due to their natural mutual dependency. While the first competitive models that provide joint solutions were probabilistic graphical models [103,126], we focus on purely neural approaches proposed recently [17,23,33,82,107,139,142,169].

The main difference of joint models is that they must also produce mention candidates. For this purpose, Kolitsas et al. [82] and Peters et al. [139] enumerate all spans in a sentence with a certain maximum width, filter them by several heuristics (remove mentions with stop words, punctuation, ellipses, quotes, and currencies), and try to match them to a pre-built index of entities used for the candidate generation. If a mention candidate has at least one corresponding entity candidate, it is further treated by a ranking neural network that can also discard it by considering it unlinkable to any entity in a KG (see Section 3.1.4). Therefore, the decision during the entity disambiguation phase affects mention detection. In a similar fashion, Sorokin and Gurevych [169] treat each token n-gram up to a certain length as a possible mention candidate. They use an additional binary classifier for filtering candidate spans, which is trained jointly with an entity linker. Banerjee et al. [9] also enumerate all possible n-grams and expand each of them with candidate entities, which results in a long sequence of points corresponding to a candidate entity for a particular mention n-gram. This sequence is further processed by a single-layer BiLSTM pointer network [183] that generates index numbers of potential entities in the input sequence. Li et al. [94] consider various possible spans as mention candidates and introduce a loss component for boundary detection, which is optimized along with the loss for disambiguation.
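The span enumeration step used by joint models can be sketched as follows. This is a simplified illustration rather than the procedure of any cited system: the stop-word list, the filtering rules (spans that start or end with a stop word, or contain a punctuation-only token, are dropped), the lowercased alias index, and all names are assumptions.

```python
STOP_WORDS = {"the", "of", "a", "in", "for", "and"}  # toy list (assumption)


def enumerate_mention_candidates(tokens, alias_index, max_width=3):
    """Return (start, end, surface, entities) for every span of at most
    `max_width` tokens that passes the filters and matches the alias index."""
    results = []
    for start in range(len(tokens)):
        for end in range(start + 1, min(start + max_width, len(tokens)) + 1):
            span = tokens[start:end]
            # Heuristic filter 1: no stop word at the span boundaries.
            if span[0].lower() in STOP_WORDS or span[-1].lower() in STOP_WORDS:
                continue
            # Heuristic filter 2: no punctuation-only tokens inside the span.
            if any(not any(ch.isalnum() for ch in tok) for tok in span):
                continue
            entities = alias_index.get(" ".join(span).lower())
            if entities:  # keep only spans with at least one entity candidate
                results.append((start, end, " ".join(span), entities))
    return results


# Hypothetical alias index: lowercased surface form -> candidate entities.
alias_index = {
    "scott young": ["Scott_Young_(footballer)", "Scott_Young_(writer)"],
    "young": ["Young_(surname)"],
    "wales": ["Wales", "Wales_national_football_team"],
}
tokens = "Scott Young played for Wales .".split()
found = enumerate_mention_candidates(tokens, alias_index)
```

Note that overlapping spans such as “Scott Young” and “Young” both survive this step; deciding which of them is a real mention is left to the ranking network, which is how the disambiguation decision feeds back into mention detection.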

Martins et al. [107] describe an approach with tighter integration between detection and linking phases via multi-task learning. The authors propose a stack-based bidirectional LSTM network with a shift-reduce mechanism and attention for entity recognition that propagates its internal states to the linker network for candidate entity ranking. The linker is supplemented with a NIL predictor network. The networks are trained jointly by optimizing the sum of losses from all three components.

Broscheit [17] goes further by suggesting a completely end-to-end method that deals with mention detection and linking jointly without explicitly executing a candidate generation step. In this work, the EL task is formulated as a sequence labeling problem, where each token in the text is assigned an entity link or a NIL class. They leverage a sequence tagger based on pre-trained BERT for this purpose. This simplistic approach does not outperform [82] but outperforms the baseline, in which candidate generation, mention detection, and linking are performed independently. In the same vein, Chen et al. [23] use a sequence tagging framework for joint entity mention detection and disambiguation. However, they experiment with both settings, with and without an available candidate list, and demonstrate that it is possible to achieve high linking performance without candidate sets. Similar to Li et al. [94], they optimize the joint loss for linking and mention boundary detection.

Poerner et al. [142] propose a model E-BERT-MLM, in which they repurpose the masked language model (MLM) objective for the selection of entity candidates in an end-to-end EL pipeline. The candidate mention spans and candidate entity sets are generated in the same way as in [82]. For candidate selection, E-BERT-MLM inserts a special “[E-MASK]” token into the text before the considered candidate mention span and tries to restore an entity representation for it. The model is trained by minimizing the cross-entropy between the generated entity distribution of the potential spans and gold entities. In addition to the standard BERT architecture, the model contains a linear transformation pre-trained to align entity embeddings with embeddings of word-piece tokens.

De Cao et al. [33] have recently proposed a generative approach to performing mention detection and disambiguation jointly. Their model, which is based on BART [93], performs a sequence-to-sequence autoregressive generation of text markup with information about mention spans and links to entities in a KG. The generation process is constrained by a markup format and a candidate set, which is retrieved from standard pre-built candidate resources. Most of the time, the network works in a copy-paste regime, in which it copies input tokens into the output. When it finds the beginning of a mention, the model marks it with a square bracket, copies all tokens of the mention, adds a closing square bracket, and generates a link to an entity. Although this approach to EL is, at first glance, counterintuitive and completely different from the solutions with a standard bi-encoder architecture, this model achieves near state-of-the-art results for joint MD and ED and competitive performance on ED-only benchmarks. However, as shown in the paper, to achieve such impressive results, the model had to be pre-trained on a large annotated Wikipedia-based dataset [191]. The authors also note that the memory footprint of the proposed model is much smaller than that of models based on the standard architecture because there is no need to store entity embeddings.

3.2.2.Global context architectures

Two kinds of contextual information are available in entity disambiguation: local and global. In local approaches to ED, each mention is disambiguated independently based on the surrounding words, as in the following function:

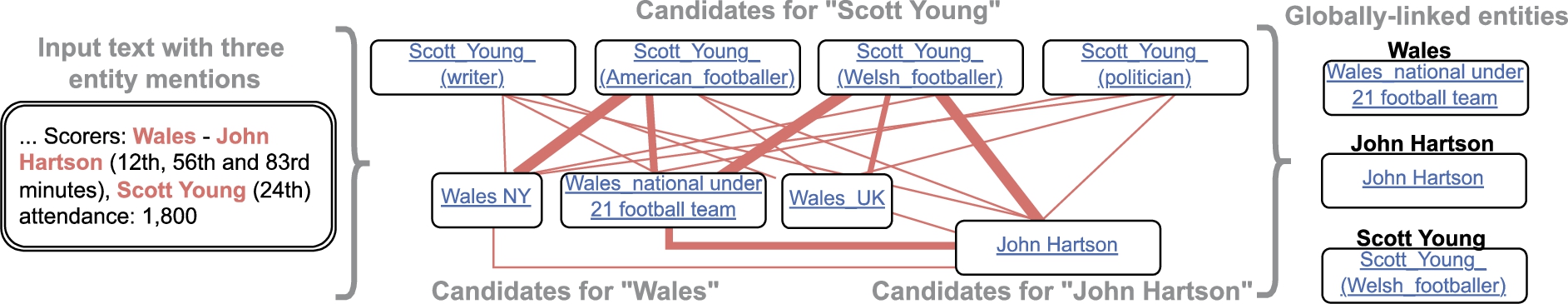

Fig. 7.

Global entity disambiguation. The global entity linking resolves all mentions simultaneously based on entity coherence. Bolder lines indicate expected higher degrees of entity-entity similarity.

Global approaches to ED take into account semantic consistency (coherence) across multiple entities in a context. In this case, all q entity mentions in a group are disambiguated interdependently: a disambiguation decision for one entity is affected by decisions made for other entities in a context as illustrated in Fig. 7 and Equation (20).

In the example presented in Fig. 7, the consistency score between the correct entity candidates (the national football team sense of Wales and the Welsh footballer senses of Scott Young and John Hartson) is expected to be higher than between incorrect ones.

Besides involving consistency, the considered context of a mention in global methods is usually larger than in local ones or even extends to the whole document. Although modeling consistency between entities and the extra information of the global context improves the disambiguation accuracy, the number of possible entity assignments is combinatorial [53], which results in high time complexity of disambiguation [54,200]. Another difficulty is assigning a consistency score to an entity, since this score cannot be computed in advance due to the simultaneous disambiguation [196].
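The combinatorial nature of global disambiguation can be made concrete with an exhaustive search over joint assignments. The sketch below is only an illustration: the scoring scheme (sum of local scores plus sum of pairwise coherence scores), the function name, and all numeric values are assumptions, with entity names borrowed from the example in Fig. 7.

```python
from itertools import product


def global_disambiguation(candidates, local_score, coherence):
    """Exhaustively score every joint assignment of entities to mentions.

    candidates:  list of candidate lists, one per mention
    local_score: dict mapping (mention index, entity) -> local score
    coherence:   dict mapping frozenset({entity_a, entity_b}) -> pairwise score
    """
    best_assignment, best_score, n_assignments = None, float("-inf"), 0
    for assignment in product(*candidates):
        n_assignments += 1
        score = sum(local_score[(i, e)] for i, e in enumerate(assignment))
        score += sum(
            coherence.get(frozenset((a, b)), 0.0)
            for idx, a in enumerate(assignment)
            for b in assignment[idx + 1:]
        )
        if score > best_score:
            best_assignment, best_score = assignment, score
    return best_assignment, best_score, n_assignments


# Made-up scores for the mentions "Wales", "Scott Young", "John Hartson".
candidates = [
    ["Wales_(country)", "Wales_national_football_team"],
    ["Scott_Young_(footballer)", "Scott_Young_(writer)"],
    ["John_Hartson"],
]
local_score = {
    (0, "Wales_(country)"): 0.6,
    (0, "Wales_national_football_team"): 0.4,
    (1, "Scott_Young_(footballer)"): 0.5,
    (1, "Scott_Young_(writer)"): 0.5,
    (2, "John_Hartson"): 1.0,
}
coherence = {
    frozenset(("Wales_national_football_team", "Scott_Young_(footballer)")): 1.0,
    frozenset(("Wales_national_football_team", "John_Hartson")): 1.0,
    frozenset(("Scott_Young_(footballer)", "John_Hartson")): 0.8,
}
best, score, n = global_disambiguation(candidates, local_score, coherence)
```

With these toy numbers, the local scores alone would prefer the country sense of Wales, while the coherence terms flip the decision to the football team. The number of evaluated assignments is the product of the candidate list sizes, so the search grows exponentially with the number of mentions, which motivates the approximations discussed next.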

The typical approach to global disambiguation is to generate a graph that includes the candidate entities of mentions in a context and to run graph algorithms over it, such as random walk algorithms (e.g. PageRank [133]) or graph neural networks, to select highly consistent entities [61,137,210,211]. Recently, Xue et al. [192] propose a neural recurrent random walk network learning algorithm based on the transition matrix of candidate entities containing relevance scores, which are created from hyperlink information and cosine similarity of entities. Cao et al. [19] construct a subgraph from the candidates of neighbor mentions, integrate local and global features of each candidate, and apply a graph convolutional network over this subgraph. In this approach, the graph is static, which is problematic when two mentions co-occur in different documents with different topics: the produced graphs are identical and therefore cannot capture the differing information [190]. To address this, Wu et al. [190] propose a dynamic graph convolution architecture, where entity relatedness scores are computed and updated in each layer based on the previous layer information (initialized with some features, including context scores) and entity similarity scores. Globerson et al. [56] introduce a model with an attention mechanism that takes into account only the subgraph of the target mention, rather than all interactions of all the mentions in a document, and restricts the number of mentions with attention.

Some works approach global ED by maximizing the Conditional Random Field (CRF) potentials, where the first component Ψ represents a local entity-mention score, and the other component Φ measures coherence among selected candidates [53,54,85,86], as defined in Ganea and Hofmann [54]:
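A common way to write such an objective, given here as a hedged sketch in assumed notation (q mentions $m_i$ with local contexts $c_i$ and selected entities $e_i$; the exact symbols and indices of the original equation may differ), is a sum of local and pairwise potentials:

```latex
g(\mathbf{e}, \mathbf{m}, \mathbf{c}) =
  \sum_{i=1}^{q} \Psi(e_i, m_i, c_i)
  + \sum_{i < j} \Phi(e_i, e_j)
```

The first sum rewards each entity for matching its own mention and context, while the second sum rewards pairs of selected entities that are coherent with each other; global disambiguation searches for the assignment $\mathbf{e}$ that maximizes $g$.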

However, model training and its exact inference are NP-hard. Ganea and Hofmann [54] utilize truncated fitting of loopy belief propagation [53,56] with differentiable and trainable message passing iterations using pairwise entity scores to reduce the complexity. Le and Titov [85] expand it in a way that pairwise scores take into account relations of mentions (e.g. located_in, or coreference: the mentions are coreferent if they refer to the same entity) by modeling relations between mentions as latent variables. Shahbazi et al. [158] develop a greedy beam search strategy, which starts from a locally optimal initial solution and is improved by searching for possible corrections with the focus on the least confident mentions.

Despite the optimizations proposed in some of the aforementioned works, taking into account coherence scores among candidates of all mentions at once can be prohibitively slow. It can also be harmful due to erroneous coherence among wrong entities [43]. For example, if two mentions have coherent erroneous candidates, this noisy information may mislead the final global scoring. To resolve this issue, some studies define the global ED problem as a sequential decision task, where the disambiguation of new entities is based on the already disambiguated ones with high confidence. Fang et al. [43] train a policy network for sequential selection of entities using reinforcement learning. The disambiguation of mentions is ordered according to the local score, so the mentions with high-confidence entities are resolved earlier. The policy network takes advantage of output from the LSTM global encoder that maintains the information about earlier disambiguation decisions. Yang et al. [200] also utilize reinforcement learning for mention disambiguation. They use an attention model to leverage knowledge from previously linked entities. The model dynamically selects the most relevant entities for the target mention and calculates the coherence scores. Yamada et al. [198] iteratively predict entities for yet unresolved mentions with a BERT model, while attending to the previous most confident entity choices. Similarly, Gu et al. [60] sort mentions based on their ambiguity degrees produced by their BERT-based local model and update query/context based on the linked entities so that the next prediction can leverage the previous knowledge. They also utilize a gate mechanism to control historical cues – representations of linked entities. Yamada et al. [196] and Radhakrishnan et al. [144] measure the similarity first based on unambiguous mentions and then predict entities for complex cases. Nguyen et al. [127] use an RNN to implicitly store information about previously seen mentions and corresponding entities. They leverage the hidden states of the RNN to access this information as a feature for the computation of the global score. Tsai and Roth [176] directly use embeddings of previously linked entities as features for the disambiguation model. Recently, Fang et al. [44] combine sequential approaches with graph-based methods, where the model dynamically changes the graph depending on the current state. The graph is constructed from previously resolved entities, current candidate entities, and the candidates of subsequent mentions. The authors use a graph attention network over this graph to perform global scoring. As explained before, Wu et al. [190] also change the entity graph dynamically depending on the outputs from previous layers of a GCN. Zwicklbauer et al. [211] add to the candidate graph a topic node created from the set of already disambiguated entities.
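The sequential view of global disambiguation can be sketched with a simple greedy procedure: mentions are resolved in order of decreasing local confidence, and each decision adds a coherence term computed against the entities linked so far. This is a generic illustration and not the method of any cited work; the averaging of dot products as a coherence score, the data layout, and the toy vectors are assumptions.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def score_candidate(entity, local, linked, entity_vectors):
    """Local score plus average similarity to already linked entities."""
    if not linked:
        return local
    coherence = sum(dot(entity_vectors[entity], entity_vectors[p]) for p in linked)
    return local + coherence / len(linked)


def sequential_disambiguation(mentions, entity_vectors):
    """mentions: list of (mention text, {candidate entity: local score})."""
    # Resolve the mentions with the most confident local candidate first.
    ordered = sorted(mentions, key=lambda m: max(m[1].values()), reverse=True)
    linked, result = [], {}
    for text, cands in ordered:
        best = max(
            cands,
            key=lambda e: score_candidate(e, cands[e], linked, entity_vectors),
        )
        result[text] = best
        linked.append(best)  # later decisions can condition on this one
    return result


# Toy 2-d entity embeddings: first axis "football", second axis "other".
entity_vectors = {
    "John_Hartson": [1.0, 0.0],
    "Wales_national_football_team": [1.0, 0.0],
    "Wales_(country)": [0.0, 1.0],
    "Scott_Young_(footballer)": [1.0, 0.0],
    "Scott_Young_(writer)": [0.0, 1.0],
}
mentions = [
    ("Wales", {"Wales_(country)": 0.55, "Wales_national_football_team": 0.45}),
    ("Scott Young", {"Scott_Young_(footballer)": 0.5, "Scott_Young_(writer)": 0.5}),
    ("John Hartson", {"John_Hartson": 0.9}),
]
links = sequential_disambiguation(mentions, entity_vectors)
```

Because each mention is scored only against previously linked entities, the cost is linear in the number of mentions instead of exponential, at the price of possible error propagation from early wrong decisions.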

Some studies, for example Kolitsas et al. [82], model the coherence component as an additional feed-forward neural network that uses the similarity score between the target entity and an average embedding of the candidates with a high local score. Fang et al. [42] use the similarity score between the target entity and its surrounding entity candidates in a specified window as a feature for the disambiguation model.

Another approach that can be considered as global is to make use of a document-wide context, which usually contains more than one mention and helps to capture the coherence implicitly instead of explicitly designing an entity coherence component [49,62,115,139].

3.2.3.Domain-independent architectures

Domain independence is one of the most desired properties of EL systems. Annotated resources are very limited and exist only for a few domains. Obtaining labeled data in a new domain requires much labor. Earlier, this problem was tackled by a few domain-independent approaches based on unsupervised [20,125,186] and semi-supervised models [84]. Recent studies provide solutions based on distant learning and zero-shot methods.

Le and Titov [86,87] propose distant learning techniques that use only unlabeled documents. They rely on the weak supervision coming from a surface matching heuristic, and the EL task is framed as binary multi-instance learning. The model learns to distinguish between a set of positive entities and a set of random negatives. The positive set is obtained by retrieving entities with a high word overlap with the mention and that have relations in a KG to candidates of other mentions in the sentence. While showing promising performance, which in some cases rivals results of fully supervised systems, these approaches require either a KG describing relations of entities [87] or mention-entity priors computed from entity hyperlink statistics extracted from Wikipedia [86].

Recently proposed zero-shot techniques [100,174,191,201] tackle problems related to adapting EL systems to new domains. In the zero-shot setting, the only entity information available is its description. As in other settings, texts with mention-entity pairs are also available. The key idea of zero-shot methods is to train an EL system on a domain with rich labeled data resources and apply it to a new domain with only minimal available data, such as descriptions of domain-specific entities. One of the first studies that proposes such a technique is Gupta et al. [62] (not purely zero-shot because they also use entity typings). Existing zero-shot systems do not require such information resources as surface form dictionaries, prior entity-mention probabilities, KG entity relations, and entity typing, which makes them particularly suited for building domain-independent solutions. However, the limitation of information sources raises several challenges.

Since only textual descriptions of entities are available for the target domain, one cannot rely on pre-built dictionaries for candidate generation. All zero-shot works rely on the same strategy to tackle candidate generation: pre-compute representations of entity descriptions (sometimes referred to as caching), compute a representation of a mention, and calculate its similarity with all the description representations. Pre-computed representations of descriptions save a lot of time at the inference stage. In particular, Logeswaran et al. [100] use the BM25 information retrieval formula [78], which is a similarity function for count-based representations.

A natural extension of count-based approaches is embeddings. The method proposed by Gillick et al. [55], which is a predecessor of zero-shot approaches, uses average unigram and bigram embeddings followed by dense layers to obtain representations of mentions and descriptions. The only aspect that separates this approach from pure zero-shot techniques is the usage of entity categories along with descriptions to build entity representations. Cosine similarity is used for the comparison of representations. Due to the computational simplicity of this approach, it can be used in a single-stage fashion where candidate generation and ranking are identical. For further speedup, it is possible to make this algorithm two-staged. In the first stage, an approximate search can be used for candidate set retrieval. In the second stage, the retrieved smaller set can be used for exact similarity computation. Instead of simple embeddings, Wu et al. [191] suggest using a BERT-based bi-encoder for candidate generation. Two separate encoders generate representations of mentions and entity descriptions. Similar to the previous work, the candidate selection is based on the score obtained via a dot-product of mention/entity representations.
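The caching and retrieval strategy for zero-shot candidate generation can be sketched as follows. The encoders themselves are out of scope here: the sketch assumes that description and mention vectors have already been produced by some model, and the function names, toy vectors, and the use of an exhaustive (rather than approximate) search are assumptions made for the illustration.

```python
import math


def normalize(vector):
    """Scale a vector to unit length so that dot product equals cosine."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def build_entity_cache(description_vectors):
    """Pre-compute (cache) normalized entity description representations."""
    return {entity: normalize(vec) for entity, vec in description_vectors.items()}


def retrieve_candidates(mention_vector, entity_cache, top_k=2):
    """Score the mention against every cached entity and keep the top-k."""
    query = normalize(mention_vector)
    scored = [
        (entity, sum(q * e for q, e in zip(query, vec)))
        for entity, vec in entity_cache.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy 2-d "encoder outputs" (illustrative values only).
cache = build_entity_cache({
    "Michael_Jordan_(basketball_player)": [0.9, 0.1],
    "Michael_Jordan_(mathematician)": [0.1, 0.9],
    "Chicago_Bulls": [0.8, 0.3],
})
top = retrieve_candidates([1.0, 0.2], cache, top_k=2)
```

The cache is built once per entity collection, so only the mention has to be encoded at inference time. In a two-stage setup, the exhaustive scan in `retrieve_candidates` would be replaced by an approximate nearest-neighbor search, followed by exact scoring of the retrieved subset.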

For entity ranking, the very simple embedding-based approach of Gillick et al. [55] described above shows very competitive scores on the TAC KBP-2010 benchmark, outperforming some complex neural architectures. The recent studies of Logeswaran et al. [100] and Wu et al. [191] utilize a BERT-based cross-encoder to perform joint encoding of mentions and entities. The cross-encoder takes a concatenation of a context with a mention and an entity description to produce a scalar score for each candidate. The cross-attention helps to leverage the semantic information from the context and the definition on each layer of the encoder network [71,150]. In both studies, cross-encoders achieve superior results compared to bi-encoders and count-based approaches. For entity linking, cross-attention between mention context representations and entity descriptions is also used by Nie et al. [129]. However, they leverage recurrent architectures for encoding. Yao et al. [201] introduce a small tweak of positional embeddings in the architecture of Logeswaran et al. [100] aimed at better handling long contexts. Tang et al. [174] address the problem of the limited size of the mention context and the entity description that could be processed by the standard BERT model. They argue that the input size of 512 tokens is not enough to capture context and entity description relatedness since the evidence for linking could be scattered across different paragraphs, and suggest a novel architecture that resolves this problem. Roughly speaking, their model splits the context of a mention and entity description into multiple paragraphs, performs cross-attention between representations of these paragraphs, and aggregates the results for disambiguation. The experimental results show that their model substantially improves the zero-shot performance while keeping the inference time in an acceptable range.

Evaluation of zero-shot systems requires data from different domains. Logeswaran et al. [100] propose the Zero-shot EL dataset, constructed from several Wikias. In the proposed setting, training is performed on one set of Wikias while evaluation is performed on others. Gillick et al. [55] construct the Wikinews dataset. This dataset can be used for evaluation after training on Wikipedia data.

Clearly, heavy neural architectures pre-trained on general-purpose open corpora substantially advance the performance of zero-shot techniques. As highlighted by Logeswaran et al. [100], further unsupervised pre-training on source data, as well as on the target data, is beneficial. The development of better approaches to the utilization of unlabeled data might be a fruitful research direction. Furthermore, closing the performance gap of entity ranking between a fast representation-based bi-encoder and a computationally intensive cross-encoder is an open question.

3.2.4.Cross-lingual architectures

An abundance of labeled data for EL in English contrasts with the amount of data available in other languages. The cross-lingual EL (sometimes called XEL) methods [76] aim at overcoming the lack of annotation for resource-poor languages by leveraging supervision coming from their resource-rich counterparts. Many of these methods are feasible due to the presence of a unique source of supervision for EL – Wikipedia, which is available for a variety of languages. The inter-language links in Wikipedia that map pages in one language to equivalent pages in another language also help to map corresponding entities in different languages.

Challenges in XEL start at candidate generation and mention detection steps since a resource-poor language can lack mappings between mention strings and entities. In addition to the standard mention-entity priors based on inter-language links [165,176,179], candidate generation can be approached by mining a translation dictionary [134], training a translation and alignment model [177,180], or applying a neural character-level string matching model [151,207]. In the latter approach, the model is trained to match strings from a high-resource pivot language to strings in English. If a high-resource pivot language is similar to the target low-resource one, such a model is able to produce reasonable candidates for the latter. The neural string matching approach can be further improved with simpler average n-gram encoding and extending entity-entity pairs with mention-entity examples [208]. Such an approach can also be applied to entity recognition [31]. Fu et al. [50] criticize methods that solely rely on Wikipedia due to the lack of inter-language links for resource-poor languages. They propose a candidate generation method that leverages results from querying online search engines (Google and Google Maps) and show that due to its much higher recall compared to other methods, it is possible to substantially increase the performance of XEL.

There are several approaches to candidate ranking that take advantage of cross-lingual data for dealing with the lack of annotated examples. Pan et al. [134] use the Abstract Meaning Representation (AMR) [8] statistics in English Wikipedia and mention context for ranking. To train an AMR tagger, pseudo-labeling [89] is used. Tsai and Roth [176] train monolingual embeddings for words and entities jointly by replacing every entity mention with corresponding entity tokens. Using the inter-language links, they learn the projection functions from multiple languages into the English embedding space. For ranking, context embeddings are averaged, projected into the English space, and compared with entity embeddings. The authors demonstrate that this approach helps to build better entity representations and boosts the EL accuracy in the cross-lingual setting by more than 1% for Spanish and Chinese. Sil et al. [165] propose a method for zero-shot transfer from a high-resource language. The authors extend the previous approach with the least squares objective for embedding projection learning, the CNN context encoder, and a trainable re-weighting of each dimension of context and entity representations. The proposed approach demonstrates improved performance as compared to previous non-zero-shot approaches. Upadhyay et al. [179] argue that the success of zero-shot cross-lingual approaches [165,176] might largely originate from a better estimation of mention-entity prior probabilities. Their approach extends [165] with global context information and incorporation of typing information into context and entity representations (the system learns to predict typing during the training). The authors report a significant drop in performance for zero-shot cross-lingual EL without mention-entity priors, while showing state-of-the-art results with priors. They also show that training on a resource-rich language might be very beneficial for low-resource settings.

The aforementioned techniques of cross-lingual entity linking heavily rely on pre-trained multilingual embeddings for entity ranking. While being effective in settings with at least prior probabilities available, the performance in realistic zero-shot scenarios drops drastically. Along with the recent success of the zero-shot multilingual transfer of large pre-trained language models, this is a motivation to utilize powerful multilingual self-supervised models. Botha et al. [16] use the zero-shot monolingual architecture of Logeswaran et al. [100] and Wu et al. [191] together with mBERT [141] to build a massively multilingual EL model for more than 100 languages. Their system effectively selects proper entities among almost 20 million candidates using a bi-encoder, hard negative mining, and an additional cross-lingual entity description retrieval task. The biggest improvements over the baselines are achieved in the zero-shot and few-shot settings, which demonstrates the benefits of training on a large amount of multilingual data.

3.3.Methods that do not fit the general architecture

There are a few works that propose methods not fitting the general architecture presented in Figs 2 and 5. Raiman and Raiman [146] rely on the intermediate supplementary task of entity typing instead of directly performing entity disambiguation. They learn a type system in a KG and train an intermediate type classifier of mentions that significantly reduces the number of candidates for the final linking model. Onoe and Durrett [131] leverage distant supervision from Wikipedia pages and the Wikipedia category system to train a fine-grained entity typing model. At test time, they use the soft type predictions and the information about candidate types derived from Wikipedia to perform the final disambiguation. The authors claim that such an approach helps to improve the domain independence of their EL system. Kar et al. [80] consider a classification approach, where each entity is considered as a separate class or a task. They show that the straightforward classification is difficult due to excessive memory requirements. Therefore, they experiment with multitask learning, where parameter learning is decomposed into solving groups of tasks. Globerson et al. [56] do not have any encoder components; instead, they rely on contextual and pairwise feature-based scores. They have an attention mechanism for global ED with a non-linear optimization as described in Section 3.2.2.

3.4.Summary

Table 2

Features of neural EL models. Neural entity linking models compared according to their architectural features. The columns are described at the beginning of Section 3.4. The footnotes in the table are enumerated at the end of Section 3.4

| Model | Encoder type | Global | MD + ED | NIL pred. | Ent. encoder source based on | Candidate generation | Learning type for disam. | Cross-lingual |

| Sun et al. (2015) [172] | CNN + Tensor net. | ent. specific info. | surface match + aliases | supervised | ||||

| Francis-Landau et al. (2016) [49] | CNN | ✘3 | ✘ | ent. specific info. | surface match + prior | supervised | ||

| Fang et al. (2016) [42] | word2vec-based | ✘ | relational info. | n/a | supervised | |||

| Yamada et al. (2016) [196] | word2vec-based | ✘ | relational info. | aliases | supervised | |||

| Zwicklbauer et al. (2016b) [211] | word2vec-based | ✘ | ✘ | unstructured text + ent. specific info. | surface match | unsupervised5 | ||

| Tsai and Roth (2016) [176] | word2vec-based | ✘ | ✘ | unstructured text | prior | supervised | ✘ | |

| Nguyen et al. (2016b) [127] | CNN | ✘ | ✘ | ent. specific info. | surface match + prior | supervised | ||

| Globerson et al. (2016) [56] | n/a | ✘ | n/a | prior + aliases | supervised | |||

| Cao et al. (2017) [20] | word2vec-based | ✘ | relational info. | aliases | supervised or unsupervised | |||

| Eshel et al. (2017) [40] | GRU + Atten. | unstructured text1 | aliases or surface match | supervised | ||||

| Ganea and Hofmann (2017) [54] | Atten. | ✘ | unstructured text | prior + aliases | supervised | |||

| Moreno et al. (2017) [115] | word2vec-based | ✘3 | ✘ | unstructured text | surface match + aliases | supervised | ||

| Gupta et al. (2017) [62] | LSTM | ✘3 | ent. specific info. | prior | supervised4 | |||

| Nie et al. (2018) [129] | LSTM + CNN | ✘ | ent. specific info. | surface match + prior | supervised | |||

| Sorokin and Gurevych (2018) [169] | CNN | ✘ | ✘ | relational info. | surface match | supervised | ||

| Shahbazi et al. (2018) [158] | Atten. | ✘ | unstructured text | prior + aliases | supervised | |||

| Le and Titov (2018) [85] | Atten. | ✘ | unstructured text | prior + aliases | supervised | |||

| Newman-Griffis et al. (2018) [125] | word2vec-based | unstructured text | aliases | unsupervised | ||||

| Radhakrishnan et al. (2018) [144] | n/a | ✘ | relational info. | aliases | supervised | |||

| Kolitsas et al. (2018) [82] | LSTM | ✘ | ✘ | unstructured text | prior + aliases | supervised | ||

| Sil et al. (2018) [165] | LSTM + Tensor net. | ✘ | ent. specific info. | prior or prior + aliases | zero-shot | ✘ | ||

| Upadhyay et al. (2018a) [179] | CNN | ✘3 | ent. specific info. | prior | zero-shot | ✘ | ||

| Cao et al. (2018) [19] | Atten. | ✘ | relational info. | prior + aliases | supervised | |||

| Raiman and Raiman (2018) [146] | n/a | ✘ | n/a | prior + type classifier | supervised | ✘ | ||

| Mueller and Durrett (2018) [117] | GRU + Atten. + CNN | unstructured text1 | surface match | supervised | ||||

| Shahbazi et al. (2019) [159] | ELMo | unstructured text | prior + aliases or aliases | supervised | ||||

| Logeswaran et al. (2019) [100] | BERT | ent. specific info. | BM25 | zero-shot | ||||

| Gillick et al. (2019) [55] | FFNN | ent. specific info. | nearest neighbors | supervised4 | ||||

| Peters et al. (2019) [139]2 | BERT | ✘3 | ✘ | ✘ | unstructured text | prior + aliases | supervised |


| Le and Titov (2019b) [87] | LSTM | ent. specific info. | surface match | weakly-supervised | ||||

| Le and Titov (2019a) [86] | Atten. | ✘ | unstructured text | prior + aliases | weakly-supervised | |||

| Fang et al. (2019) [43] | LSTM | ✘ | unstructured text + ent. specific info. | aliases | supervised | |||

| Martins et al. (2019) [107] | LSTM | ✘ | ✘ | unstructured text | aliases | supervised | ||

| Yang et al. (2019) [200] | Atten. or CNN | ✘ | unstructured text or ent. specific info. | prior + aliases | supervised | |||

| Xue et al. (2019) [192] | CNN | ✘ | ent. specific info. | prior + aliases | supervised | |||

| Zhou et al. (2019) [207] | n/a | ✘ | unstructured text | prior + char.-level model | zero-shot | ✘ | ||

| Broscheit (2019) [17] | BERT | ✘ | ✘ | n/a | n/a | supervised | ||

| Hou et al. (2020) [69] | Atten. | ✘ | ent. specific info. + unstructured text | prior + aliases | supervised | |||

| Onoe and Durrett (2020) [131] | ELMo + Atten. + CNN + LSTM | n/a | prior or aliases | supervised4 | ||||

| Chen et al. (2020) [23] | BERT | ✘ | relational info. | n/a or aliases | supervised | |||

| Wu et al. (2020b) [191] | BERT | ent. specific info. | nearest neighbors | zero-shot | ||||

| Banerjee et al. (2020) [9] | fastText | ✘ | relational info. | surface match | supervised | |||

| Wu et al. (2020a) [190] | ELMo | ✘ | unstructured text + relational info. | prior + aliases | supervised | |||

| Fang et al. (2020) [44] | BERT | ✘ | ent. specific info. | surface match + aliases + Google Search | supervised | |||

| Chen et al. (2020) [25] | Atten. + BERT | ✘ | unstructured text | prior + aliases | supervised | |||

| Botha et al. (2020) [16] | BERT | ent. specific info. | nearest neighbors | zero-shot | ✘ | |||

| Yao et al. (2020) [201] | BERT | ent. specific info. | BM25 | zero-shot | ||||

| Li et al. (2020) [94] | BERT | ✘ | ent. specific info. | nearest neighbors | zero-shot | |||

| Poerner et al. (2020) [142]2 | BERT | ✘ | ✘ | ✘ | relational info. | prior + aliases | supervised | |

| Fu et al. (2020) [50] | M-BERT | ent. specific info. | Google Search Google Maps | zero-shot | ✘ | |||

| Mulang’ et al. (2020) [118] | Atten. or CNN or BERT | ✘ | relational info. | prior + aliases | supervised | |||

| Yamada et al. (2021) [198] | BERT | ✘ | unstructured text | prior + aliases or aliases | supervised | |||

| Gu et al. (2021) [60] | BERT | ✘ | ✘ | ent. specific info. | surface match + prior or aliases | supervised | ||

| Tang et al. (2021) [174] | BERT | ent. specific info. | BM25 | zero-shot | ||||

| De Cao et al. (2021) [33] | BART | ✘ | ✘ | n/a | prior + aliases | supervised |

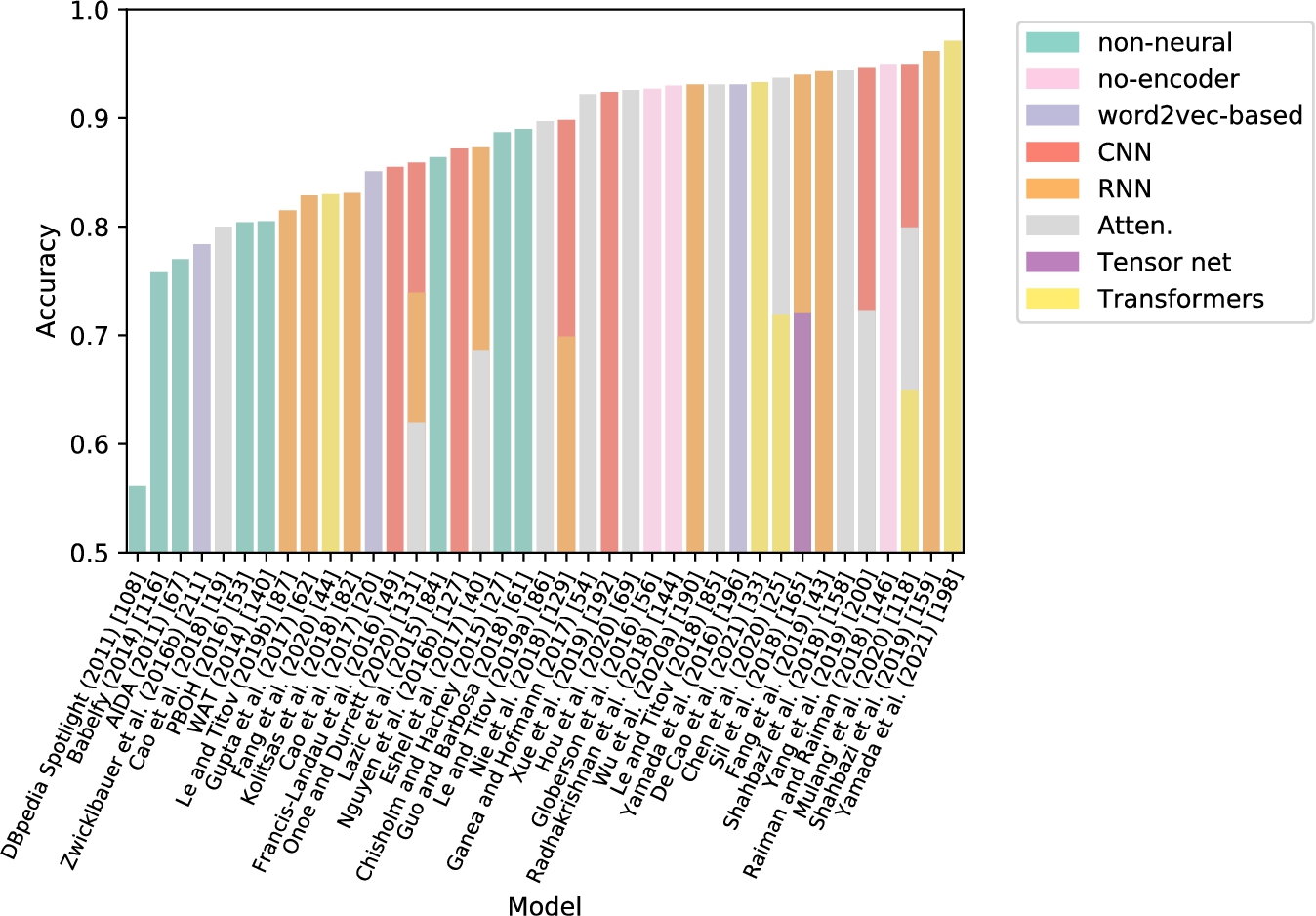

We summarize the design features of neural EL models in Table 2 and provide links to their publicly available implementations in Table 7 in the Appendix. Mention encoders have shifted to self-attention architectures and started using deep pre-trained models such as BERT. The majority of studies still rely on external knowledge for the candidate generation step. There is a surge of models that tackle the domain adaptation problem in a zero-shot fashion. However, the task of zero-shot joint entity mention detection and linking has not been addressed yet. Several works show that the cross-encoder architecture is superior to models with separate mention and entity encoders. The global context is widely used, but there are few recent studies that focus only on local EL.

Each column in Table 2 corresponds to a model feature. The encoder type column presents the architecture of the mention encoder of the neural entity linking model. It contains the following options:

– n/a – a model does not have a neural encoder for mentions / contexts.

– CNN – an encoder based on convolutional layers (usually with pooling).

– Tensor net. – an encoder that uses a tensor network.

– Atten. – a context-mention encoder that leverages an attention mechanism to highlight the parts of the context relevant to an entity candidate.

– GRU – an encoder based on a recurrent neural network and gated recurrent units [29].

– LSTM – an encoder based on a recurrent neural network and long short-term memory cells [66] (might be also bidirectional).

– FFNN – an encoder based on a simple feedforward neural network.

– ELMo – an encoder based on a pre-trained ELMo model [138].

– BERT – an encoder based on a pre-trained BERT model [36].

– fastText – an encoder based on a pre-trained fastText model [13].

– word2vec-based – an encoder that leverages principles of CBOW or skip-gram algorithms [88,110,111].

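Note that the theoretical complexity of the various encoder types differs. As discussed by Vaswani et al. [182], the complexity per layer of self-attention is $O(n^2 \cdot d)$, compared with $O(n \cdot d^2)$ for a recurrent layer and $O(k \cdot n \cdot d^2)$ for a convolutional layer, where $n$ is the sequence length, $d$ is the representation dimensionality, and $k$ is the kernel size of the convolution.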
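The asymptotic per-layer costs reported by Vaswani et al. [182] can be compared numerically for concrete sizes. The sketch below drops all constant factors, so the numbers only indicate relative growth; the function name `per_layer_cost` and the default kernel size are illustrative assumptions.

```python
def per_layer_cost(n, d, k=3):
    """Operation counts up to constant factors, following Vaswani et al.:
    self-attention O(n^2 * d), recurrent O(n * d^2), convolutional O(k * n * d^2),
    with sequence length n, representation dimensionality d, and kernel size k."""
    return {
        "self_attention": n * n * d,
        "recurrent": n * d * d,
        "convolutional": k * n * d * d,
    }


short_context = per_layer_cost(n=100, d=512)   # sequence shorter than dimensionality
long_context = per_layer_cost(n=1000, d=512)   # sequence longer than dimensionality
```

Under these counts, self-attention is the cheaper option per layer when the sequence length is smaller than the dimensionality, and the recurrent layer becomes cheaper once the sequence length exceeds it.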

The global column shows whether a system uses a global solution (see Section 3.2.2). The MD + ED column refers to joint entity mention detection and disambiguation models, where detection and disambiguation of entities are performed collectively (Section 3.2.1). The NIL prediction column marks models that also label unlinkable mentions. The entity encoder source column shows which resource is used to train entity representations, based on the categorization in Section 3.1.3, where

– n/a – a model does not have a neural encoder for entities.

– unstructured text – entity representations are constructed from unstructured text using approaches based on co-occurrence statistics developed originally for word embeddings like word2vec [111].

– relational info. – a model uses relations between entities in KGs.

– ent. specific info. – an entity encoder uses other types of information, like entity descriptions, types, or categories.

In the candidate generation column, the candidate generation methods are specified (Section 3.1.1). It contains the following options:

– n/a – a solution that does not have an explicit candidate generation step (e.g. the method presented by Broscheit [17]).

– surface match – surface form matching heuristics.

– aliases – supplementary aliases for entities in a KG.

– prior – filtering candidates with pre-calculated mention-entity prior probabilities or frequency counts.

– type classifier – Raiman and Raiman [146] filter candidates using a classifier for an automatically learned type system.

– BM25 – a variant of TF-IDF to measure similarity between a mention and a candidate entity based on description pages.

– nearest neighbors – the similarity between mention and entity representations is calculated, and entities that are nearest neighbors of mentions are retrieved as candidates. Wu et al. [191] train a supplementary model for this purpose.

– Google Search – leveraging the Google Search engine to retrieve entity candidates.

– char.-level model – a neural character-level string matching model.
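To make the "prior" and "aliases" options more concrete, the following sketch estimates mention-entity prior probabilities from (mention, entity) pairs, such as hyperlink anchors, and merges them with an alias dictionary. It is a simplified illustration rather than the procedure of any particular surveyed system; the data, the lowercasing heuristic, and the names `build_prior` and `generate_candidates` are assumptions.

```python
from collections import Counter, defaultdict


def build_prior(anchor_pairs):
    """Estimate p(entity | mention) from observed (mention, entity) pairs."""
    counts = defaultdict(Counter)
    for mention, entity in anchor_pairs:
        counts[mention.lower()][entity] += 1
    return {
        mention: {e: c / sum(ents.values()) for e, c in ents.items()}
        for mention, ents in counts.items()
    }


def generate_candidates(mention, prior, aliases, top_k=30):
    """Rank candidates by prior; alias-only entities get a prior of zero."""
    key = mention.lower()
    scored = dict(prior.get(key, {}))
    for entity in aliases.get(key, ()):
        scored.setdefault(entity, 0.0)
    # Sort by descending prior, breaking ties alphabetically for determinism.
    ranked = sorted(scored.items(), key=lambda kv: (-kv[1], kv[0]))
    return [entity for entity, _ in ranked[:top_k]]


# Toy statistics (assumption): three anchors point to the player, one to the country.
pairs = [("Jordan", "Michael Jordan (basketball player)")] * 3 + [("Jordan", "Jordan (country)")]
aliases = {"jordan": ["Michael Jordan (mathematician)"]}

prior = build_prior(pairs)
top_two = generate_candidates("Jordan", prior, aliases, top_k=2)
all_candidates = generate_candidates("Jordan", prior, aliases)
```

Truncating the ranked list with `top_k` corresponds to filtering candidates by prior, while the alias dictionary extends recall to entities never observed with that surface form.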

The learning type for disambiguation column shows whether a model is ‘supervised’, ‘unsupervised’, ‘weakly-supervised’, or ‘zero-shot’. The cross-lingual column refers to models that provide cross-lingual EL solutions (Section 3.2.4).

In addition, the following superscript notes in Table 2 denote specific features of methods:

1. These works use only entity description pages; however, they are labeled as the first category (unstructured text) since their training method is based on principles from word2vec.

2. The authors provide EL as a subsystem of language modeling.

3. These solutions do not rely on global coherence but are marked as “global” because they use document-wide context or multiple mentions at once for resolving entity ambiguity.

4. These studies are domain-independent as discussed in Section 3.2.3.

5. Zwicklbauer et al. [211] may not be considered purely unsupervised since some threshold parameters of their disambiguation algorithm are tuned on a labeled set.

Table 3

Evaluation datasets. Descriptive statistics of the evaluation datasets used in this survey to compare the EL models. The values for the MSNBC, AQUAINT, and ACE2004 datasets are based on the update by Guo and Barbosa [61]. The statistics for AIDA-B, MSNBC, AQUAINT, ACE2004, CWEB, and WW are reported according to [54] (# of mentions takes into account only non-NIL entity references). The TAC KBP dataset statistics are reported according to [39,75,76,191] (# of mentions also takes into account NIL entity references)

| Corpus | Text genre | # of documents | # of mentions |

| AIDA-B [67] | News | 231 | 4,485 |

| MSNBC [32] | News | 20 | 656 |

| AQUAINT [112] | News | 50 | 727 |

| ACE2004 [148] | News | 36 | 257 |

| CWEB [52,61] | Web & Wikipedia | 320 | 11,154 |

| WW [61] | Web & Wikipedia | 320 | 6,821 |

| TAC KBP 2010 [75] | News & Web | 2,231 | 2,250 |

| TAC KBP 2015 Chinese [76] | News & Forums | 166 | 11,066 |

| TAC KBP 2015 Spanish [76] | News & Forums | 167 | 5,822 |

4.Evaluation

In this section, we present evaluation results for the entity linking and entity relatedness tasks on the commonly used datasets.

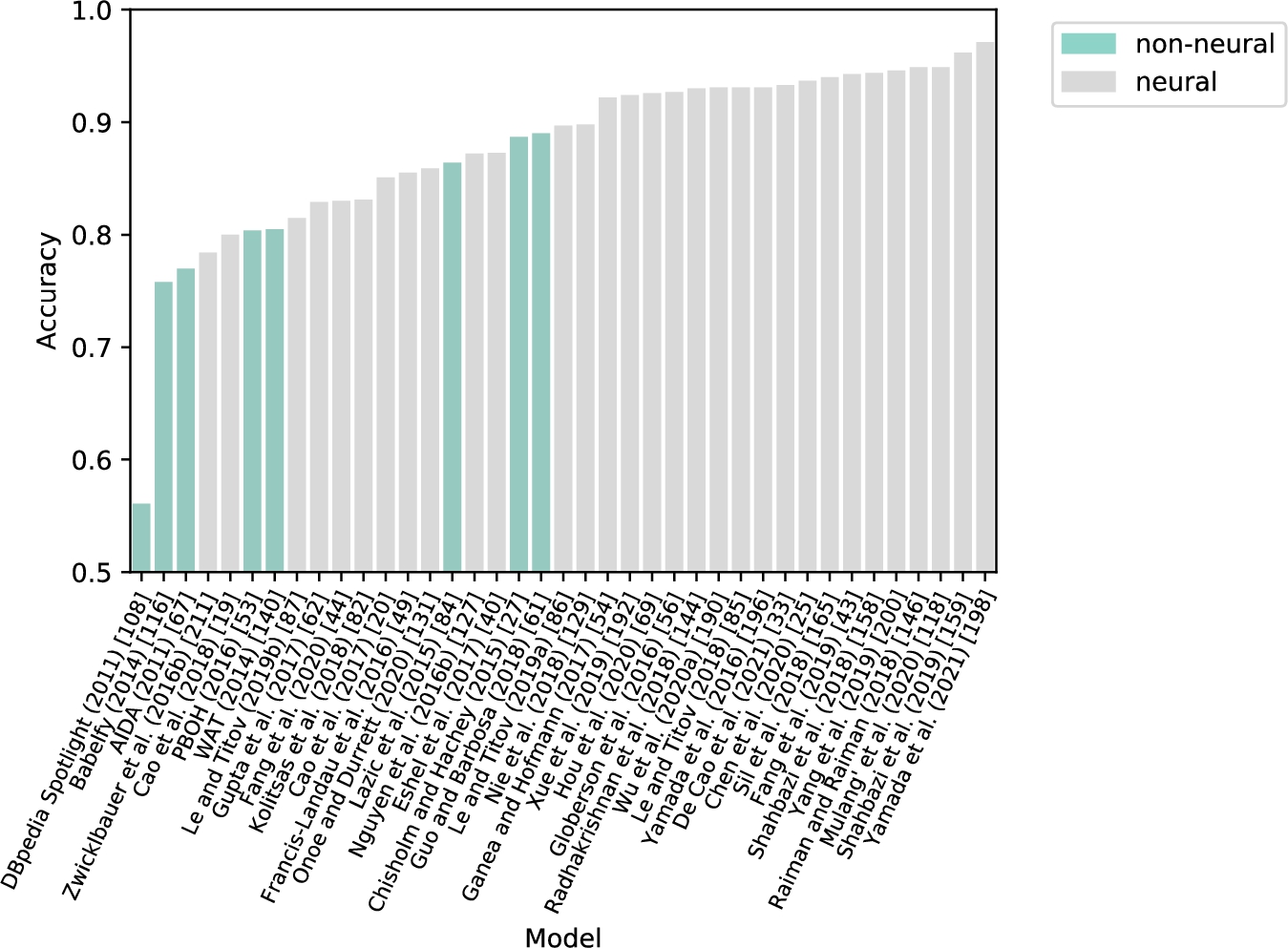

4.1.Entity linking

4.1.1.Experimental setup

The evaluation results are reported for two different evaluation settings. The first setup is entity disambiguation (ED), where the systems have access to the mention boundaries. The second setup is entity mention detection and disambiguation (MD + ED), where the systems perform MD and ED jointly and receive only plain text as input. We present their results in separate tables since the scores of the joint models accumulate the errors made during the mention detection phase.
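The difference between the two settings can be illustrated with a small scoring sketch. It assumes strict matching, where a prediction counts as correct only if both the span and the entity agree with the gold annotation; this matching criterion, the toy annotations, and the function name `micro_prf` are assumptions for illustration and not necessarily the exact protocol behind the reported results.

```python
def micro_prf(gold, predicted):
    """Micro precision, recall, and F1 over sets of (doc_id, start, end, entity)."""
    gold, predicted = set(gold), set(predicted)
    tp = len(gold & predicted)
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / len(gold) if gold else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


gold = {("d1", 0, 14, "Michael_Jordan"), ("d1", 30, 43, "Chicago_Bulls")}

# ED setting: gold spans are given, so only the entity can be wrong.
ed_pred = {("d1", 0, 14, "Michael_Jordan"), ("d1", 30, 43, "Chicago")}

# MD + ED setting: spans are predicted as well, so a boundary error or a
# spurious mention also costs precision or recall.
joint_pred = {
    ("d1", 0, 14, "Michael_Jordan"),
    ("d1", 30, 37, "Chicago_Bulls"),  # wrong right boundary
    ("d1", 50, 53, "NBA"),            # mention absent from the gold annotation
}

ed_scores = micro_prf(gold, ed_pred)
joint_scores = micro_prf(gold, joint_pred)
```

In this toy case the joint system links one entity correctly, just like the ED system, but its F1 is lower because mention detection errors are counted as well.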

Datasets. We report the evaluation results of monolingual EL models on the English datasets widely used in recent research publications: AIDA [67], TAC KBP 2010 [75], MSNBC [32], AQUAINT [112], ACE2004 [148], CWEB [52,61], and WW [61]. AIDA is the most popular dataset for benchmarking EL systems. For AIDA, we report the results calculated for the test set (AIDA-B).