Survey on English Entity Linking on Wikidata: Datasets and approaches

Abstract

Wikidata is a frequently updated, community-driven, and multilingual knowledge graph. Hence, Wikidata is an attractive basis for Entity Linking, as evidenced by the recent increase in published papers. This survey focuses on four subjects: (1) Which Wikidata Entity Linking datasets exist, how widely used are they and how are they constructed? (2) Do the characteristics of Wikidata matter for the design of Entity Linking datasets and if so, how? (3) How do current Entity Linking approaches exploit the specific characteristics of Wikidata? (4) Which Wikidata characteristics are unexploited by existing Entity Linking approaches? This survey reveals that current Wikidata-specific Entity Linking datasets do not differ in their annotation scheme from schemes for other knowledge graphs like DBpedia. Thus, the potential for multilingual and time-dependent datasets, naturally suited for Wikidata, remains untapped. Furthermore, we show that most Entity Linking approaches use Wikidata in the same way as any other knowledge graph, missing the chance to leverage Wikidata-specific characteristics to increase quality. Almost all approaches employ specific properties like labels and sometimes descriptions but ignore characteristics such as the hyper-relational structure. Hence, there is still room for improvement, for example, by including hyper-relational graph embeddings or type information. Many approaches also include information from Wikipedia, which is easily combinable with Wikidata and provides valuable textual information, which Wikidata lacks.

1.Introduction

1.1.Motivation

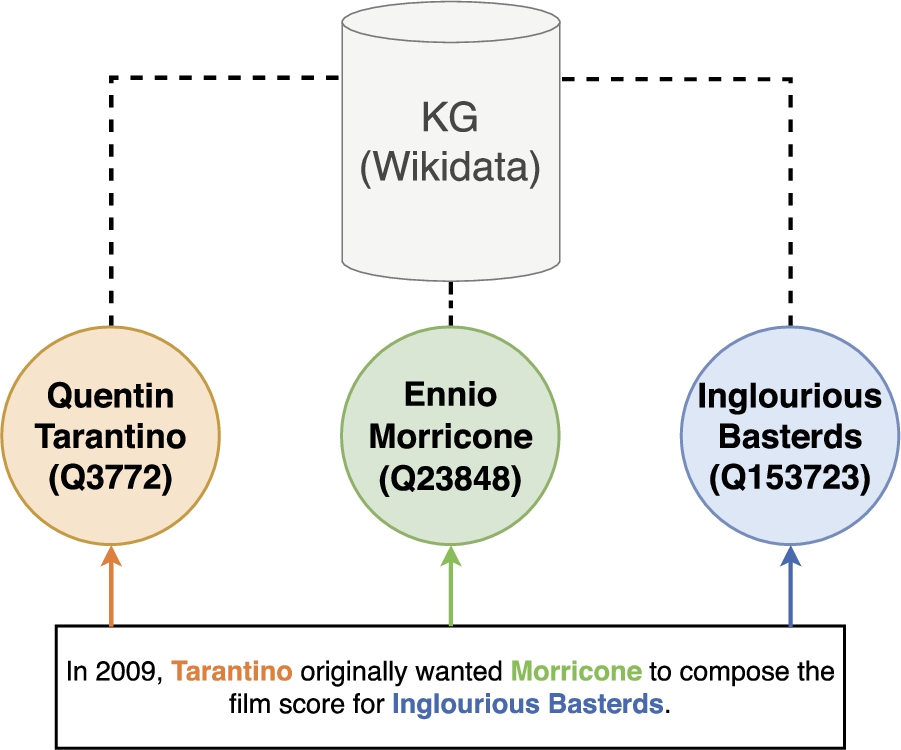

Fig. 1.

Entity linking – mentions in the text are linked to the corresponding entities (color-coded) in a knowledge graph (here: Wikidata).

Entity Linking (EL) is the task of connecting already marked mentions in an utterance to their corresponding entities in a knowledge graph (KG), see Fig. 1. In the past, this task was tackled by using popular knowledge bases such as DBpedia [67], Freebase [12] or Wikipedia. While those remain popular, an alternative named Wikidata [120] has appeared.

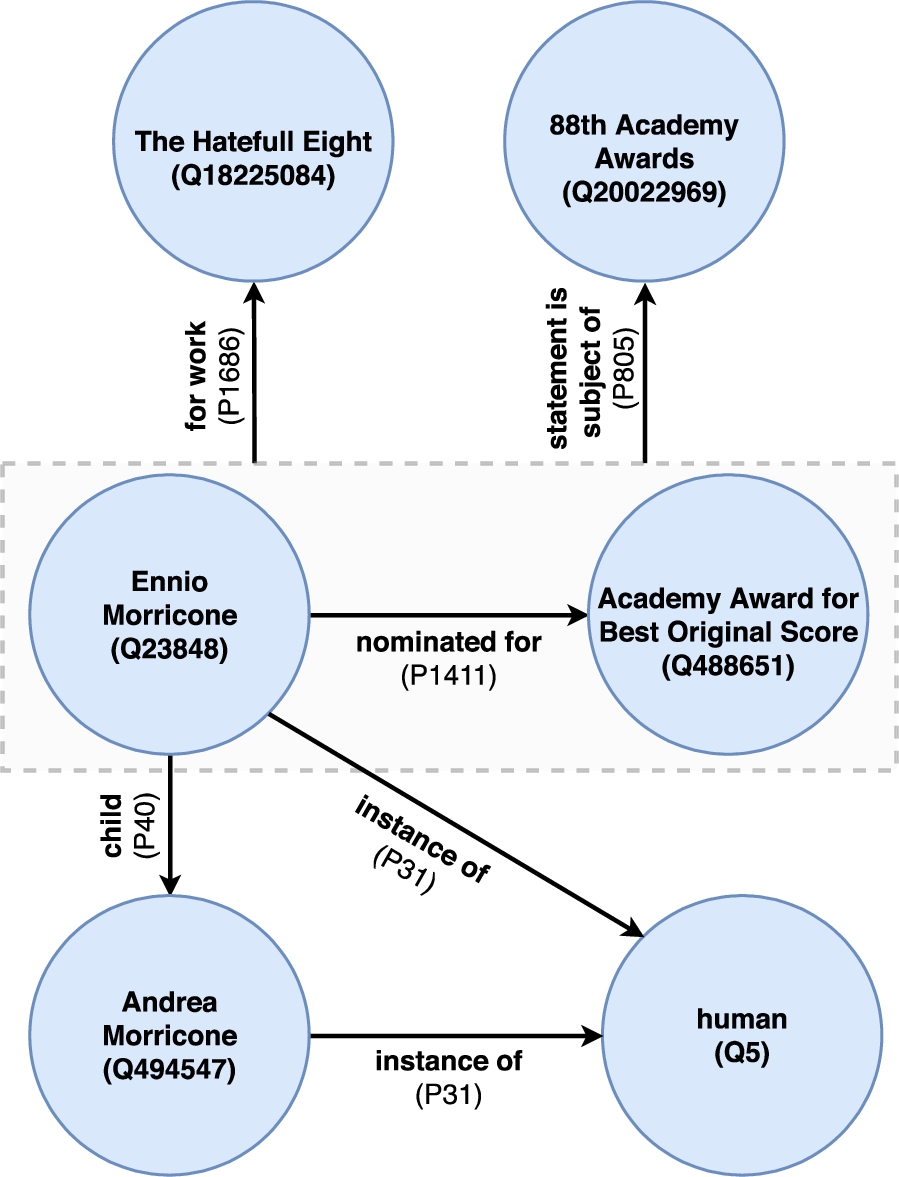

Fig. 4.

Wikidata subgraph – dashed rectangle represents a claim with attached qualifiers.

Wikidata follows a philosophy similar to that of Wikipedia, as it is curated by a continuously growing community, see Fig. 2. However, Wikidata differs in the way knowledge is stored – information is stored in a structured format via a knowledge graph (KG). An important characteristic of Wikidata is its inherent multilingualism. While Wikipedia articles exist in multiple languages, Wikidata information is stored using language-agnostic identifiers. This is an advantage for multilingual entity linking. DBpedia, Freebase and Yago4 [109] are KGs too, but they can become outdated over time [93]. They rely on information extracted from other sources, in contrast to Wikidata, whose knowledge is inserted by a community. Given an active community, this leads to Wikidata being updated frequently and promptly – another characteristic. Note that DBpedia also stays up-to-date but has a delay of a month, while Wikidata dumps are updated multiple times a month. There are up-to-date services to access knowledge for both KGs, Wikidata and DBpedia (cf. DBpedia Live), but full dumps are preferred because otherwise the FAIR replication [126] of research results based on the KG is hindered. Another Wikidata characteristic of interest to entity linkers is hyper-relations (see Fig. 4 for an example graph), which might affect their abilities and performance.

Therefore, it is of interest how existing approaches incorporate these characteristics. However, existing literature lacks an exhaustive analysis which examines Entity Linking approaches in the context of Wikidata.

Ultimately, this survey strives to expose the benefits and associated challenges which arise from the use of Wikidata as the target KG for EL. Additionally, the survey provides a concise overview of existing EL approaches, which is essential to (1) avoid duplicated research in the future and (2) enable a smoother entry into the field of Wikidata EL. Similarly, we structure the dataset landscape which helps researchers find the correct dataset for their EL problem.

The focus of this survey lies on EL approaches, which operate on already marked mentions of entities, as the task of Entity Recognition (ER) is much less dependent on the characteristics of a KG. However, due to the recent uptake of research on EL on Wikidata, there is only a low number of EL-only publications. To broaden the survey’s scope, we also consider methods that include the task of ER. We do not restrict ourselves regarding the type of models used by the entity linkers.

This survey limits itself to EL approaches that support English, the most frequently supported language, so that the approaches and datasets can be compared more easily. We also include approaches that support multiple languages. The existence of such approaches for Wikidata is not surprising, as an important characteristic of Wikidata is its support for a multitude of languages.

1.2.Research questions and contributions

First, we want to develop an overview of datasets for EL on Wikidata. Our survey analyses the datasets and examines whether they were designed with Wikidata in mind and, if so, in what way. Thus, we pose the following two research questions:

RQ 1: Which Wikidata EL datasets exist, how widely used are they and how are they constructed?

RQ 2: Do the characteristics of Wikidata matter for the design of EL datasets and if so, how?

EL approaches use many kinds of information like labels, popularity measures, graph structures, and more. This multitude of possible signals raises the question of how the characteristics of Wikidata are used by the current state of the art of EL on Wikidata. Thus, the third research question is:

RQ 3: How do current Entity Linking approaches exploit the specific characteristics of Wikidata?

Lastly, we identify what kind of characteristics of Wikidata are of importance for EL but are insufficiently considered. This raises the last research question:

RQ 4: Which Wikidata characteristics are unexploited by existing Entity Linking approaches?

This survey makes the following contributions:

– An overview of all currently available EL datasets focusing on Wikidata

– An overview of all currently available EL approaches linking on Wikidata

– An analysis of the approaches and datasets with a focus on Wikidata characteristics

– A concise list of future research avenues

2.Survey methodology

There exist several different ways in which a survey can contribute to the research field [57]:

1. Providing an overview of current prominent areas of research in a field

2. Identification of open problems

3. Providing a novel approach tackling the extracted open problems (in combination with the identification of open problems)

Table 1

Qualifying and disqualifying criteria for approaches. “Semi-structured” in this table means that the entity mentions do not occur in natural language utterances but in more structured documents such as tables

| Criteria | |

| Must satisfy all | Must not satisfy any |

| – Approaches that consider the problem of unstructured EL over Knowledge Graphs | – Approaches conducting Semi-structured EL |

| – Approaches where the target Knowledge Graph is Wikidata | – Approaches not doing EL in the English language |

Until December 18, 2020, we continuously searched for existing and newly released scientific work suitable for the survey. Note, this survey includes only scientific articles that were accessible to the authors.

2.1.Approaches

Our selection of approaches stems from a search over the following search engines:

– Google Scholar

– Springer Link

– Science Direct

– IEEE Xplore Digital Library

– ACM Digital Library

To gather a wide choice of approaches, the following steps were applied. Entity Linking, Entity Disambiguation or variations of the phrases had to occur in the title of the paper. The publishing year was not a criterion due to the small number of valid papers and the relatively recent existence of Wikidata. Any paper in which Wikidata did not occur at least once in the full text was not considered. The systematic search process resulted in exactly 150 papers and theses (including duplicates).

Following this search, the resulting papers were filtered again using the qualifying and disqualifying criteria which can be found in Table 1. This resulted in 15 papers and one master thesis in the end.

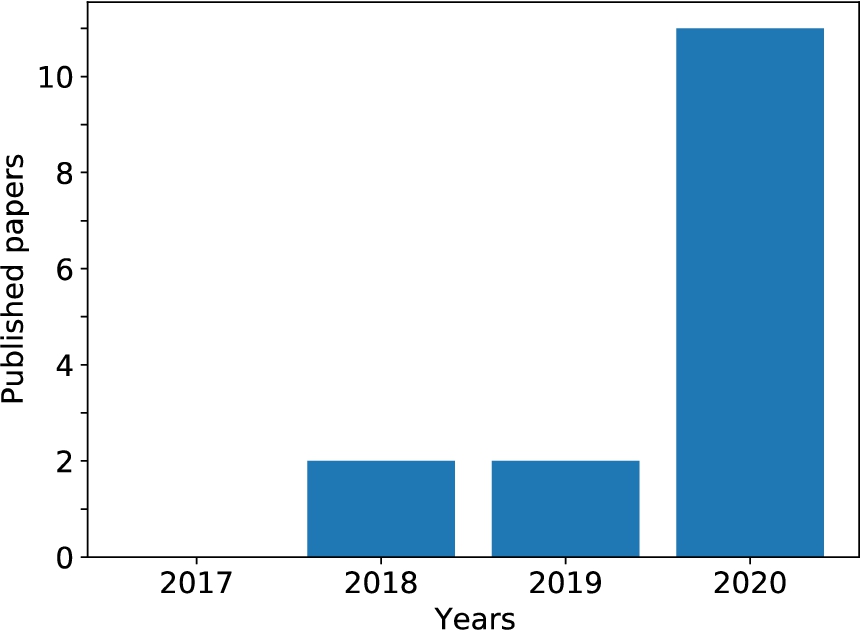

The search resulted in papers in the period from 2018 to 2020. While there exist EL approaches from 2016 [4,107] working on Wikidata, they did not qualify according to the criteria above.

2.2.Datasets

The dataset search was conducted in two ways. First, a search for potential datasets was performed via the same search engines as used for the approaches. Second, all datasets occurring in the system papers were considered if they fulfilled the criteria. The criteria for the inclusion of a dataset can be found in Table 2.

Table 2

Qualifying and disqualifying criteria for the dataset search

| Criteria | |

| Must satisfy all | Must not satisfy any |

| – Datasets that are designed for EL or are used for evaluation of Wikidata EL | – Datasets without English utterances |

| – Datasets must include Wikidata identifiers from the start; an existing dataset later mapped to Wikidata is not permitted | |

We filtered the dataset papers in the following way. First, Entity Linking or Entity Disambiguation or variations thereof had to occur in the title, similar to the search for the Entity Linking approaches. Additionally, dataset, data, corpus or benchmark had to occur in the title, and Wikidata had to appear at least once in the full text. Due to those keywords, other datasets suitable for EL, but constructed for a different purpose like KG population, were not included. This resulted in 26 papers (including duplicates). Of those, only two included Wikidata identifiers and focused on English.

Eighteen datasets were accompanying the different approaches. Many of those did not include Wikidata identifiers from the start. This made them less optimal for the examination of the influence of Wikidata on the design of datasets. They were included in the section about the approaches but not in the section about the Wikidata datasets.

After the removal of duplicates, 11 Wikidata datasets were included in the end.

3.Problem definition

EL is the task of linking an entity mention in unstructured or semi-structured data to the correct entity in a KG. The focus of this survey lies in unstructured data, namely, natural language utterances.

3.1.General terms

Utterance An utterance u is defined as a sequence of n words w.

Entity There exists no universally agreed-on definition of an entity in the context of EL [97]. According to the Oxford Dictionary, an entity is:

“something that exists separately from other things and has its own identity” [82]

Any Wikidata item is an entity.

Knowledge graph While the term knowledge graph was already used before, its popularity increased drastically after Google introduced the Knowledge Graph in 2012 [28,103]. However, similar to an entity, there exists no unanimous definition of a KG [28,52]. For example, Färber et al. define a KG as an RDF graph [35]. However, a KG being an RDF graph is a strict assumption. While the Wikidata graph is available in the RDF format, the main output format is JSON. Freebase, often called a KG, did not provide the RDF format until a year after its launch [11]. Paulheim defines it less formally as:

“A knowledge graph (i) mainly describes real world entities and their interrelations, organized in a graph, (ii) defines possible classes and relations of entities in a schema, (iii) allows for potentially interrelating arbitrary entities with each other and (iv) covers various topical domains.” [84]

In this survey, a knowledge graph is defined as a directed graph

Hyper-relational knowledge graphs In a hyper-relational knowledge graph, statements can be specified by more information than a single relation. Multiple relations are, therefore, part of a statement. In case of a hyper-relational graph

3.2.Tasks

Since not only approaches that solely do EL were included in the survey, Entity Recognition will also be defined.

Entity recognition ER is the task of identifying the mention span

It is also up for debate what an entity mention is. In general, a literal reference to an entity is considered a mention. But whether to include pronouns or how to handle overlapping mentions depends on the use case.

Entity linking The goal of EL is to find a mapping function that maps all found mentions to the correct KG entities and also to identify if an entity mention does not exist in the KG.

In general, EL takes the utterance u and all k identified entity mentions

EL is often split into two subtasks. First, potential candidates for an entity are retrieved from a KG. This is necessary as doing EL over the whole set of entities is often intractable. This Candidate generation is usually performed via efficient metrics measuring the similarities between mentions in the utterance and entities in the KG. The result is a set of candidates

There are two categories of reranking methods, called local and global [91].

The rank assignment and score calculation of the candidates of one entity is often not independent of the other entities’ candidates. In this case, the ranking will be done by including the whole assignment via a global scoring function:

Note, there also exists some ambiguity in the objective of linking itself. For example, there exists a Wikidata entity 2014 FIFA World Cup and an entity FIFA World Cup. There is no unanimous solution on how to link the entity mention in the utterance In 2014, Germany won the FIFA World Cup.

Sometimes EL is also called Entity Disambiguation, which we see more as part of EL, namely where entities are disambiguated via the candidate ranking.
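The two-stage view described above (candidate generation followed by candidate ranking) can be illustrated with a minimal sketch. It is not the method of any surveyed approach: the toy KG, the descriptions, the function names and the word-overlap score are assumptions made for illustration, and only the identifiers and labels are taken from this survey. The ranking shown is a local one, since each mention is scored independently of all other mentions.

```python
from collections import defaultdict

# Hand-written toy KG (assumption): identifiers and labels follow the survey text,
# the descriptions are simplified for illustration.
TOY_KG = {
    "Q76": {"labels": ["Barack Obama"],
            "description": "president of the United States"},
    "Q61909968": {"labels": ["Barack Obama"],
                  "description": "Wikimedia disambiguation page"},
    "Q23848": {"labels": ["Ennio Morricone", "Dan Savio", "Leo Nichols"],
               "description": "Italian composer, orchestrator and conductor"},
}


def build_label_index(kg):
    """Map every lower-cased label or alias to the set of items carrying it."""
    index = defaultdict(set)
    for qid, item in kg.items():
        for label in item["labels"]:
            index[label.lower()].add(qid)
    return index


def generate_candidates(mention, index):
    """Candidate generation via exact (case-insensitive) label lookup."""
    return sorted(index.get(mention.lower(), set()))


def local_score(context, description):
    """Jaccard word overlap between the utterance and an item description."""
    ctx = set(context.lower().split())
    desc = set(description.lower().split())
    if not ctx or not desc:
        return 0.0
    return len(ctx & desc) / len(ctx | desc)


def link(mention, context, kg, index):
    """Return the best-scoring candidate, or None if no candidate exists (NIL)."""
    candidates = generate_candidates(mention, index)
    if not candidates:
        return None
    return max(candidates, key=lambda q: local_score(context, kg[q]["description"]))


INDEX = build_label_index(TOY_KG)
```

With this toy data, the mention Barack Obama yields two candidates, and the description overlap decides between them. A mention such as Ennio yields no candidate at all, because exact label lookup is too strict; real systems typically use fuzzier candidate generation.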

There exist multiple special cases of EL. Multilingual EL tries to link entity mentions occurring in utterances of different languages to one shared KG, for example, English, Spanish or Chinese utterances to one language-agnostic KG. Formally, an entity mention m in some utterance u of some context language

Cross-lingual EL tries to link entity mentions in utterances in different languages to a KG in one dedicated language, for example, Spanish and German utterances to an English KG [92]. In that case, the multilingual EL problem gets constrained to

In zero-shot EL, the entities during test time

KB/KG-agnostic EL approaches are able to support different KBs or KGs, respectively, often multiple in parallel. Such approaches may still impose requirements on the format; for example, a KG must be available in RDF format. We refer the interested reader to central works [76,114,137] or our Appendix.

4.Wikidata

Wikidata is a community-driven knowledge graph edited by humans and machines. The Wikidata community can enrich the content of Wikidata by, for example, adding/changing/removing entities, statements about them, and even the underlying ontology information. As of July 2020, it contained around 87 million items of structured data about various domains. Seventy-three million items can be interpreted as entities due to the existence of an "instance of" property. As a comparison, DBpedia contains around 5 million entities [109]. Note that the "instance of" property covers a much broader scope of entities than the ones interpreted as entities for DBpedia. In comparison to other similar KGs, the Wikidata dumps are updated most frequently (Table 3). But note that this only applies to the dumps; if one considers direct access via the website or a SPARQL endpoint, both Wikidata and DBpedia provide continuously updated knowledge.

Table 3

KG statistics by [109]

| KG | #Entities in million | #Labels/Aliases in million | Last updated |

| Wikidata | 78 | 442 | Up to 4 times a month* |

| DBpedia | 5 | 22 | Monthly |

| Yago4 | 67 | 371 | November 2019 |

4.1.Definition

Wikidata is a collection of entities where each such entity has a page on Wikidata. An entity can be either an item or a property. Note, an entity in the sense of Wikidata is generally not the same as an entity one links to via EL. For example, Wikidata entities are also properties that describe relations between different items. Linking to such relations is closer to Relation Extraction [9,70,104]. Furthermore, many items are more abstract classes, which are usually not considered as entities linked to in EL. Note that if not mentioned otherwise, if we speak about entities, entities in the context of EL are meant.

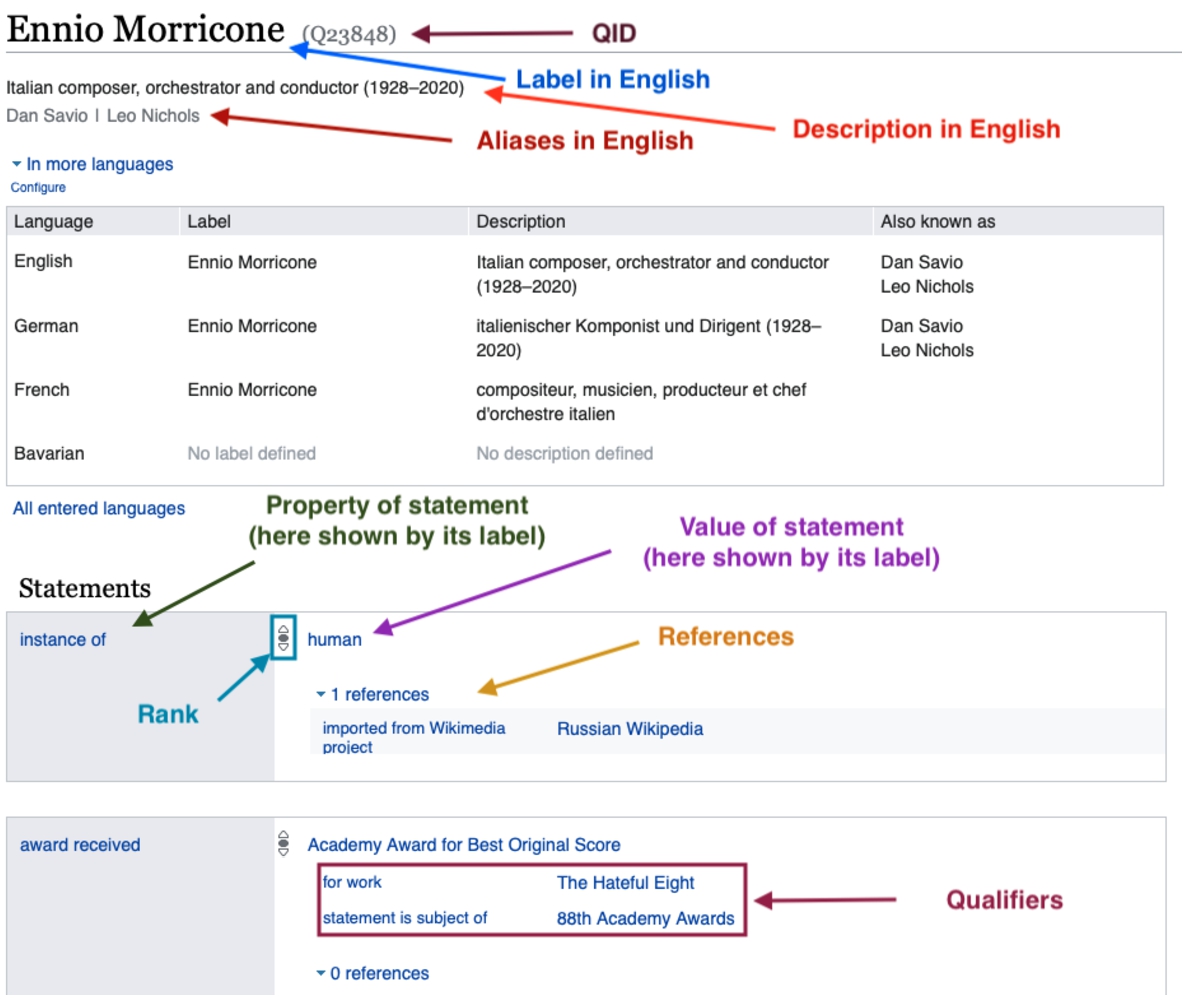

Item Topics, classes, or objects are defined as items. An item is enriched with more information using statements about the item itself. In general, items consist of one label, one description, and aliases in different languages. A unique and language-agnostic identifier of the form Q[0-9]+ identifies each item. An example of an item can be found in Fig. 5.

For example, the item with the identifier Q23848 has the label Ennio Morricone, two aliases, Dan Savio and Leo Nichols, and Italian composer, orchestrator and conductor (1928–2020) as description at the point of writing. The corresponding Wikidata page can also be seen in Fig. 5.

Fig. 5.

Example of an item in Wikidata.

Property A property specifies a relation between items or literals. Each property also has an identifier similar to an item, specified by P[0-9]+. For instance, a property P19 specifies the place of birth Rome for Ennio Morricone. In NLP, the term relation is commonly used to refer to a connection between entities. A property in the sense of Wikidata is a type of relation. To not break with the terminology used in the examined papers, when we talk about relations, we always mean Wikidata properties if not mentioned otherwise.
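As a small illustration of the two identifier patterns given above (Q[0-9]+ for items and P[0-9]+ for properties), the following sketch classifies an identifier string. The function name is hypothetical, and the check is purely syntactic: it does not verify that the identifier actually exists in Wikidata.

```python
import re

# Identifier patterns as stated in the text.
ITEM_ID = re.compile(r"Q[0-9]+")
PROPERTY_ID = re.compile(r"P[0-9]+")


def classify_identifier(identifier):
    """Return 'item', 'property', or None for strings matching neither pattern."""
    if ITEM_ID.fullmatch(identifier):
        return "item"
    if PROPERTY_ID.fullmatch(identifier):
        return "property"
    return None
```

For example, `classify_identifier("Q23848")` gives `"item"` and `classify_identifier("P19")` gives `"property"`.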

Statement A statement introduces information by giving structure to the data in the graph. It is specified by a claim, and references, qualifiers and ranks related to the claim. Statements are assigned to items in Wikidata. A claim is defined as a pair of property and some value. A value can be another item or some literal. Multiple values are possible for a property. Even an unknown value and a no value exist.

References point to sources making the claims inside the statements verifiable. In general, they consist of the source and date of retrieval of the claim.

Qualifiers define the value of a claim further by contextual information. For example, a qualifier could specify for how long one person was the spouse of another person. Qualifiers enable Wikidata to be hyper-relational (see Section 3.1). Structures similar to qualifiers also exist in some other knowledge graphs, such as the inactive Freebase in the form of Compound Value Types [12].

Ranks are used if multiple values are valid in a statement. If the population of a country is specified in a statement, it might also be useful to have the populations of past years available. The most up-to-date population information usually has then the highest rank and is thus usually the most desirable claim to use.
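The structure of a statement (claim, qualifiers, references and rank) can be sketched as a small data structure. This is an illustrative simplification, not the Wikidata data model itself: the class and function names are hypothetical, the population numbers are invented, and the property identifiers used in the usage note (P1082 for population, P585 for point in time) are stated to the best of our knowledge and should be verified before use. Wikidata distinguishes the ranks preferred, normal and deprecated; the selection below returns the statements of the highest available non-deprecated rank.

```python
from dataclasses import dataclass, field

# Rank names as used in Wikidata; the numeric order is only a helper for sorting.
RANK_ORDER = {"preferred": 2, "normal": 1, "deprecated": 0}


@dataclass
class Statement:
    prop: str                                        # property of the claim
    value: object                                    # item identifier or literal
    qualifiers: dict = field(default_factory=dict)   # contextual information
    references: list = field(default_factory=list)   # sources of the claim
    rank: str = "normal"


def best_statements(statements, prop):
    """Return the non-deprecated statements of the highest rank for a property."""
    usable = [s for s in statements if s.prop == prop and s.rank != "deprecated"]
    if not usable:
        return []
    top = max(RANK_ORDER[s.rank] for s in usable)
    return [s for s in usable if RANK_ORDER[s.rank] == top]
```

A usage sketch with invented values: given `Statement("P1082", 100, {"P585": "2010"})` and `Statement("P1082", 120, {"P585": "2020"}, rank="preferred")`, `best_statements` returns only the second statement, while the first one remains available for utterances that refer to the earlier point in time.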

Statements can be also seen in Fig. 5 at the bottom. For example, it is defined that Ennio Morricone is an instance of the class human. This is also an example for the different types of items. While Ennio Morricone is an entity in our sense, human is a class.

Other structural elements The aforementioned elements are essential for Wikidata, but more do exist. For example, there are entities (in the sense of Wikidata) corresponding to Lexemes, Forms, Senses or Schemas. Lexemes, Forms and Senses are concerned with lexicographical information, hence words, phrases and sentences themselves. This is in contrast to Wikidata items and properties, which are directly concerned with things, concepts and ideas. Schemas formally describe subsets of Wikidata entities. For example, any Wikidata item which has actor as its occupation is an instance of the class human. Both lexicographical and schema information are usually not directly relevant for EL. Therefore, we refrain from introducing them in more detail.

For more information on Wikidata, see the paper by Denny Vrandečić and Markus Krötzsch [120].

Differences in structure to other knowledge graphs DBpedia extracts its information from Wikipedia and Wikidata. It maps the information to its own ontology. DBpedia’s statements consist of only single triples (<subject, predicate, object>) since it follows the RDF specification [67]. Additional information like qualifiers, references or ranks does not exist, but it can be modeled via additional triples. As this is not an inherent feature of DBpedia, it is harder to use because there are no strict conventions. Entities in DBpedia have human-readable identifiers, and there exist entities per language [67] with partly differing information. Hence, for a single concept or thing, multiple DBpedia entities might exist. For example, the English entity of the city Munich has 25 entities assigned as dbo:administrativeDistrict, whereas the German entity has only a single one. It seems that this originates from a different interpretation of the predicate dbo:administrativeDistrict.

Yago4 extracts all its knowledge from Wikidata but filters out information it deems inadequate. For example, if a property is used too seldom, it is removed. If a Wikidata entity does not have a class that exists in Schema.org, it is removed. The RDF specification format is used. Qualifier information is included indirectly via separate triples. Rank information and references of statements do not exist. The identifiers follow either a human-readable form if available via Wikipedia or Wikidata or use the Wikidata QID. However, in contrast to DBpedia, only one entity exists per thing or concept [109].

For a thorough comparison of Wikidata and other KGs (in respect to Linked Data Quality [134]), please refer to the paper by Färber et al. [35].

4.2.Discussion

Novelties A useful characteristic of Wikidata is that the community can openly edit it. Another novelty is that there can be a plurality of facts, as contradictory facts based on different sources are allowed. Similarly, time-sensitive data can also be included by qualifiers and ranks. The population of a country, for example, changes from year to year, which can be represented easily in Wikidata. Lastly, due to its language-agnostic identifiers, Wikidata is inherently multilingual. Language only starts playing a role in the labels and descriptions of an item.

Strengths Due to the inclusion of information by the community, recent events will likely be included. The knowledge graph is thus much more up-to-date than most other KGs. Freebase has been unsupported for years now, and DBpedia updates its dumps only every month. Note, the novel DBpedia live 2.0 is updated when changes to a Wikipedia page occur, but, as discussed, makes research harder to replicate. Thus, Wikidata is much more suitable and useful for industry applications such as smart assistants since it is the most complete openly accessible data source to date. In Fig. 6a, one can see that the number of items in Wikidata is increasing steadily. The existence of labels and additional aliases (see Fig. 6b) helps EL, as too small a number of possible surface forms often leads to a failure in the candidate generation. DBpedia, for example, does not include aliases, only a single exact label; to compensate, additional resources like Wikipedia are often used to extract a label dictionary of adequate size [76]. Even each property in Wikidata has a label [120]. Fully language model-based approaches are therefore more naturally usable [78]. Also, nearly all items have a description, see Fig. 6d. This short natural language phrase can be used for context similarity measures with the utterance. The inherent multilingual structure is intuitively useful for multilingual Entity Linking. Table 4 shows information about the use of different languages in Wikidata. As can be seen, item labels/aliases are available in up to 457 languages. But not all items have labels in all languages. On average, labels, aliases and descriptions are available in 29.04 different languages. However, the median is only 6 languages. Many entities will, therefore, certainly not have information in many languages. The most dominant language is English, but not all elements have label/alias/description information in English. For less dominant languages, this is even more severe. 
German labels exist, for example, only for 14%, and Samoan labels for 0.3%. Context information in the form of descriptions is also given in multiple languages. Still, many languages are again not covered for each entity (as can be seen by a median of only 4 descriptions per element). While the multilingual label and description information of items might be useful for language model-based variants, the same information for properties enables multilingual language models. Because, on average, 21.18 different languages are available per property for labels, one could train multilingual models on the concatenations of the labels of triples to include context information. But of course, there are again many properties with a lower number of languages, as the median is also only 6 languages. Cross-lingual EL is therefore certainly necessary to use language model-based EL in multiple languages.

By using the qualifiers of hyper-relational statements, more detailed information is available, useful not only for Entity Linking but also for other problems like Question Answering. The inclusion of hyper-relational statements is also more challenging. Novel graph embeddings have to be developed and utilized, which can represent the structure of a claim enriched with qualifiers [37,98].

Table 4

Statistics – languages Wikidata (extracted from dump [125])

| Items | Properties | |

| Number of languages | 457 | 427 |

| (average, median) of # languages per element (labels + descriptions) | 29.04, 6 | 21.24, 13 |

| (average, median) of # languages per element (labels) | 5.59, 4 | 21.18, 6 |

| (average, median) of # languages per element (descriptions) | 26.10, 4 | 9.77, 6 |

| % elements without English labels | 15.41% | 0% |

| % elements without English descriptions | 26.23% | 1.08% |

Ranks are of use for EL in the following way. Imagine a person had multiple spouses throughout his/her life. In Wikidata, all those relationships are assigned to the person via statements of different ranks. If now an utterance is encountered containing information on the person and her/his spouse, one can utilize the Wikidata statements for comparison. Depending on the time point of the utterance, different statements apply. One could, for example, weigh the relevance of statements according to their rank. If now a KG (for example Yago4 [109]) includes only the most valid statement, the current spouse, utterances containing past spouses are harder to link.

As for references, none of the approaches found so far utilizes them for EL. One use case might be to filter statements by reference if one knows the source’s credibility, but this is more a measure to cope with the uncertainty of statements in Wikidata and not directly related to EL.

Table 5

Number of English labels/aliases pointing to a certain number of items in Wikidata (extracted from dump [125])

| # Labels/aliases | 70,124,438 | 2,041,651 | 828,471 | 89,210 | 3329 |

| # Items per label/alias | 1 | 2 | 3–10 | 11–100 | >100 |

Weaknesses However, this community-driven approach also introduces challenges. For example, the list of labels of an item will not be exhaustive, as shown in Figs 6b and 6c. The graphs consider labels and aliases of all languages. While the median of labels and aliases is around 4 per element, not all are useful for Entity Linking. Ennio Morricone does not have an alias solely consisting of Ennio, although he will certainly sometimes be referenced by that. Thus, one cannot rely on the exact labels alone. But interestingly, Wikidata has properties for the fore- and surname alone, just not as a label or alias. A close examination of what information to use is essential.

This is also a problem in other KGs. Also, Wikidata often has items with very long, noisy, error-prone labels, which can be a challenge to link to [78]. Nearly 20 percent of labels have a length larger than 100 letters, see Fig. 7. Due to the community-driven approach, false statements also occur due to errors or vandalism [47].

Another problem is that entities lack facts (here defined as statements not being labels, descriptions, or aliases). According to Tanon et al. [109], in March 2020, DBpedia had, on average, 26 facts per entity while Wikidata had only 12.5. This is still more than YAGO4 with 5.1. To tackle such long-tail entities, different approaches are necessary. The lack of descriptions can also be a problem. Currently, around 10% of all items do not have a description, as shown in Fig. 6d. Luckily, the situation is steadily improving.

A general problem of Entity Linking is that a label or alias can reference multiple entities, see Table 5. While around 70 million mentions point each to a unique item, 2.9 million do not. Not all of those are entities by our definition but, e.g., also classes or topics. In addition, longer labels or aliases often correspond to non-entity items. Thus, the percentage of entities with overlapping labels or aliases is certainly larger than for all items. To use Wikidata as a Knowledge Graph, one needs to be cautious of the items one will include as entities. For example, there exist Wikimedia disambiguation page items that often have the same label as an entity in the classic sense. Both Q76 and Q61909968 have Barack Obama as the label. Including those will make disambiguation more difficult. Also, the possibility of contradictory facts will make EL over Wikidata harder.
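The label ambiguity discussed above can be measured by counting, for each label or alias, the number of distinct items it points to and grouping these counts into buckets in the style of Table 5. The sketch below assumes the input is an iterable of (label, item identifier) pairs; the function name and the input format are assumptions, and the extraction of such pairs from a Wikidata dump is not shown.

```python
from collections import Counter, defaultdict


def ambiguity_buckets(label_item_pairs):
    """Count labels by the number of distinct items they refer to."""
    items_per_label = defaultdict(set)
    for label, qid in label_item_pairs:
        items_per_label[label].add(qid)

    buckets = Counter()
    for qids in items_per_label.values():
        n = len(qids)
        if n == 1:
            key = "1"
        elif n == 2:
            key = "2"
        elif n <= 10:
            key = "3-10"
        elif n <= 100:
            key = "11-100"
        else:
            key = ">100"
        buckets[key] += 1
    return dict(buckets)
```

Applied to the examples from the text, the label Barack Obama (shared by Q76 and Q61909968) falls into the bucket of labels pointing to two items, while Ennio Morricone points to a single item.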

In Wikification, also known as EL on Wikipedia, large text documents for each entity exist in the knowledge graph, enabling text-heavy methods [127]. Such large textual contexts (besides the descriptions and the labels of triples themselves) do not exist in Wikidata, requiring other methods or the inclusion of Wikipedia. However, as Wikidata is closely related to Wikipedia, an inclusion is easily doable. Every Wikipedia article is connected to a Wikidata item. The Wikipedia article belonging to a Wikidata item can be, for example, extracted via a SPARQL query to the Wikidata Query Service using the http://schema.org/about predicate. The Wikidata item of a Wikipedia article can be simply found on the article page itself or by using the Wikipedia API.
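As a hedged sketch of the lookup described above, the following code builds a SPARQL query that retrieves the Wikipedia article of a Wikidata item via the http://schema.org/about predicate, together with a request URL for the public Wikidata Query Service endpoint. Restricting the result to one language edition via http://schema.org/isPartOf is a commonly used pattern and an assumption on our side, not something prescribed by this survey; the function names are hypothetical. The code only constructs the query and the URL and does not send a request.

```python
from urllib.parse import urlencode

# Public SPARQL endpoint of the Wikidata Query Service.
WDQS_ENDPOINT = "https://query.wikidata.org/sparql"


def article_query(qid, language="en"):
    """Build a SPARQL query for the Wikipedia article about a Wikidata item."""
    return (
        "SELECT ?article WHERE { "
        f"?article <http://schema.org/about> <http://www.wikidata.org/entity/{qid}> ; "
        f"<http://schema.org/isPartOf> <https://{language}.wikipedia.org/> . "
        "}"
    )


def request_url(qid, language="en"):
    """Build a GET URL that asks the endpoint for JSON results."""
    params = {"query": article_query(qid, language), "format": "json"}
    return WDQS_ENDPOINT + "?" + urlencode(params)
```

For the item Q23848 (Ennio Morricone), `request_url("Q23848")` can be passed to any HTTP client. When querying the live service, a descriptive User-Agent header and moderate request rates are advisable.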

One can conclude that the characteristics of Wikidata, like being up to date, multilingual and hyper-relational, introduce new possibilities. At the same time, the existence of long-tail entities, noise or contradictory facts poses a challenge.

5.Datasets

5.1.Overview

This section is concerned with analyzing the different datasets which are used for Wikidata EL. A comparison can be found in Table 6. The majority of datasets on which existing entity linkers were evaluated were originally constructed for KGs other than Wikidata and only later mapped to it. Such a mapping can be problematic, as some entities labeled for other KGs could be missing in Wikidata, and some NIL entities that do not exist in other KGs could exist in Wikidata. Eleven datasets [16,23,24,27,29,33,46,56,69,80] were found for which Wikidata identifiers were available from the start. In the following, the datasets are separated by their domain. A list of all examined datasets – including links where available – can be found in the Appendix in Table 17.

Table 6

Comparison of used datasets

| Dataset | Domain | Year | Annotation process | Purpose | Spans given | Identifiers |

| T-REx [33] | Wikipedia abstracts | 2015 | automatic | Knowledge Base Population (KBP), Relation Extraction (RE), Natural Language Generation (NLG) | ✓ | Wikidata |

| NYT2018 [68,69] | News | 2018 | manually | EL | ✓ | Wikidata, DBpedia |

| ISTEX-1000 [24] | Research articles | 2019 | manually | EL | ✓ | Wikidata |

| LC-QuAD 2.0 [27] | General complex questions (Wikidata) | 2019 | semi-automatic | Question Answering (QA) | × | DBpedia, Wikidata |

| Knowledge Net [23] | Wikipedia abstracts, biographical texts | 2019 | manually | KBP | ✓ | Wikidata |

| KORE50DYWC [80] | News | 2019 | manually | EL | ✓ | Wikidata, DBpedia, YAGO, Crunchbase |

| Kensho Derived Wikimedia Dataset [56] | Wikipedia | 2020 | automatic | Natural Language Processing (NLP) | ✓ | Wikidata, Wikipedia |

| CLEF HIPE 2020 [29] | Historical newspapers | 2020 | manually | ER, EL | ✓ | Wikidata |

| Mewsli-9 [16] | News in multiple languages | 2020 | automatic | Multilingual EL | ✓ | Wikidata |

| TweekiData [46] | Tweets | 2020 | automatic | EL | ✓ | Wikidata |

| TweekiGold [46] | Tweets | 2020 | manually | EL | ✓ | Wikidata |

1 Data from 2010

2 Original dataset on Wikipedia

5.1.1.Encyclopedic datasets

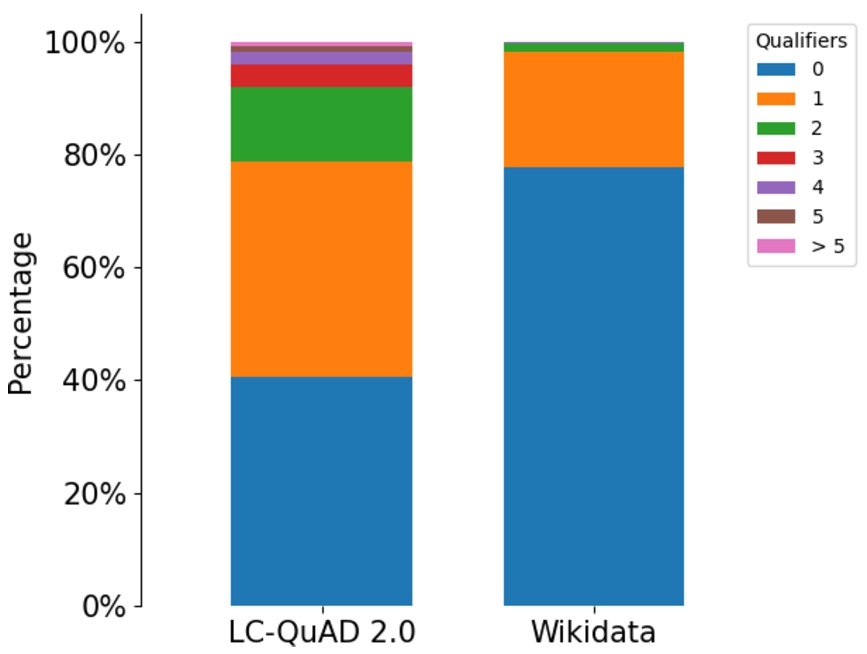

LC-QuAD 2.0 [27] is a semi-automatically created dataset for Question Answering providing complex natural language questions. For each question, Wikidata and DBpedia identifiers are provided. The questions are generated from subgraphs of the Wikidata KG and then manually checked. The dataset does not provide annotated mentions.

T-REx [33] was constructed automatically over Wikipedia abstracts. Its main purpose is Knowledge Base Population (KBP). According to Mulang et al. [78], this dataset best captures the challenges of Wikidata, at least in the form of long, noisy labels.

The Kensho Derived Wikimedia Dataset [56] is an automatically created condensed subset of Wikimedia data. It consists of three levels: Wikipedia text, annotations with Wikipedia pages and links to Wikidata items. Thus, mentions in Wikipedia articles are annotated with Wikidata items. However, as some Wikidata items do not have a corresponding Wikipedia page, the annotation is not exhaustive. It was constructed for NLP in general.

5.1.2.Research-focused datasets

ISTEX-1000 [24] is a research-focused dataset containing 1000 author affiliation strings. It was manually annotated to evaluate the OpenTapioca [24] entity linker.

5.1.3.Biographical datasets

KnowledgeNet [23] is a Knowledge Base Population dataset with 9073 manually annotated sentences. The text was extracted from biographical documents from the web or Wikipedia articles.

5.1.4.News datasets

NYT2018 [68,69] consists of 30 news documents that were manually annotated on Wikidata and DBpedia. It was constructed for KBPearl [69], so its main focus is also KBP, which is a downstream task of EL.

One dataset, KORE50DYWC [80], was found that was not used by any of the approach papers. It is an annotated EL dataset based on the KORE50 dataset, a manually annotated subset of the AIDA-CoNLL corpus. The original KORE50 dataset focused on highly ambiguous sentences. All sentences were reannotated with DBpedia, YAGO, Wikidata and Crunchbase entities.

CLEF HIPE 2020 [29] is a dataset based on historical newspapers in English, French and German. Only the English dataset will be analyzed in the following. This dataset is of great difficulty due to many errors in the text, which originate from the OCR method used to parse the scanned newspapers. For the English language, only a development and test set exist. In the other two languages, a training set is also available. It was manually annotated.

Mewsli-9 [16] is a multilingual dataset automatically constructed from WikiNews. It includes nine different languages. A high percentage of entity mentions in the dataset do not have corresponding English Wikipedia pages, and thus, cross-lingual linking is necessary. Again, only the English part is included during analysis.

5.1.5.Twitter datasets

TweekiData and TweekiGold [46] are an automatically annotated corpus and a manually annotated dataset for EL over tweets. TweekiData was created by using other existing tweet-based datasets and linking them to Wikidata via the Tweeki entity linker. TweekiGold was created by an expert who manually annotated tweets from another dataset with Wikidata identifiers and Wikipedia page titles.

5.2.Analysis

Table 7

Comparison of the datasets with focus on the number of documents and Wikidata entities

| Dataset | # documents | # mentions | NIL entities | Wikidata entities | Unique Wikidata entities | Mentions per document |

| T-REx [33] | 4,650,000 | 51,297,484 | 0% | 100% | 9.1% | 11.03 |

| NYT2018 [68,69]1 | 30 | – | – | – | – | – |

| ISTEX-1000 [24] (train) | 750 | 2073 | 0% | 100% | 53.7% | 2.76 |

| ISTEX-1000 [24] (test) | 250 | 670 | 0% | 100% | 65.8% | 2.68 |

| LC-QuAD 2.0 [27] | 6046 | 44,529 | 0% | 100% | 51.2% | 1.47 |

| Knowledge Net [23] (train) | 3977 | 13,039 | 0% | 100% | 30% | 3.28 |

| Knowledge Net [23] (test)2 | 1014 | – | – | – | – | – |

| KORE50DYWC [80] | 50 | 307 | 0% | 100% | 72.0% | 6.14 |

| Kensho Derived Wikimedia Dataset [56] | 14,255,258 | 121,835,453 | 0% | 100% | 3.7% | 8.55 |

| CLEF HIPE 2020 (en, dev) [29] | 80 | 470 | 46.4% | 53.6% | 31.9% | 5.88 |

| CLEF HIPE 2020 (en, test) [29] | 46 | 134 | 33.6% | 66.4% | 42.5% | 2.91 |

| Mewsli-9 (en) [16] | 12,679 | 80,242 | 0% | 100% | 48.2% | 6.33 |

| TweekiData [46] | 5,000,000 | 5,038,870 | 61.2% | 38.8% | 5.4% | 1.01 |

| TweekiGold [46] | 500 | 958 | 11.1% | 88.9% | 66.6% | 1.92 |

1 Information gathered from accompanying paper as dataset was not available

2 Available dataset did not contain mention/entity information

Table 7 shows the number of documents, the number of mentions, the share of NIL entities and unique entities, and the number of mentions per document. What classifies as a document in a dataset depends on the dataset itself. For example, for T-REx, a document is a whole paragraph of a Wikipedia article, while for LC-QuAD 2.0, a document is just a single question. Due to this, the average number of entities in a document also varies, e.g., LC-QuAD 2.0 with 1.47 entities per document and T-REx with 11.03. If a dataset was not available, information from the original paper was included. If dataset splits were available, the statistics are also shown separately. The majority of datasets do not contain NIL entities. For the Tweeki datasets, it is not mentioned which Wikidata dump was used for annotation. For a dataset that contains NIL entities, this is problematic. In contrast, the dump is specified for the CLEF HIPE 2020 dataset, making it possible to work on the Wikidata version in which the NIL entities are indeed missing.
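The statistics reported in Table 7 can be derived from a list of annotated mentions roughly as sketched below. The `Mention` record and the function name are hypothetical, and the definition of the unique-entity share (distinct Wikidata identifiers relative to all mentions) is an assumption, as the text does not spell it out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Mention:
    doc_id: str
    surface: str
    qid: Optional[str]  # None marks a NIL mention


def dataset_statistics(mentions: list[Mention], num_documents: int) -> dict[str, float]:
    """Compute Table-7-style statistics for one dataset (or one split)."""
    total = len(mentions)
    if total == 0 or num_documents == 0:
        raise ValueError("dataset must contain documents and mentions")
    linked = [m.qid for m in mentions if m.qid is not None]
    return {
        "mentions": total,
        "nil_ratio": (total - len(linked)) / total,
        "wikidata_ratio": len(linked) / total,
        # Assumption: distinct entities are counted relative to all mentions.
        "unique_ratio": len(set(linked)) / total,
        "mentions_per_document": total / num_documents,
    }
```

For T-REx, for instance, 51,297,484 mentions over 4,650,000 documents give the 11.03 mentions per document listed in the table.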

Answer – RQ 1.

Which Wikidata EL datasets exist, how widely used are they and how are they constructed?

The preceding paragraphs answer the first two aspects of the first research question. First, we provided descriptions and an overview of all datasets created for Wikidata, including statistics on their structure. This answers which datasets exist. Furthermore, for each dataset it is stated how it was constructed, whether automatically, semi-automatically or manually. Thus, information on the quality and construction process of the datasets is given. To answer the last part of the question, how widely the datasets are used, Table 8 shows how many times each Wikidata dataset was used in Wikidata EL approaches during training or evaluation. As one can see, there exists no single dataset used in all research on EL. This is understandable, as different datasets focus on different document types and domains as shown in Table 6, which in turn results in different approaches.

Table 8

Usage of datasets for training or evaluation

| Dataset | Number of usages in Wikidata EL approach papers |

| T-REx [33] | 2 [69,78] |

| NYT2018 [68,69] | 1 [69] |

| ISTEX-1000 [24] | 2 [24,77] |

| LC-QuAD 2.0 [27] | 2 [8,100] |

| Knowledge Net [23] | 1 [69] |

| KORE50DYWC [80] | 0 |

| Kensho Derived Wikimedia Dataset [56] | 1 [86] |

| CLEF HIPE 2020 [29] | 3 [15,65,89] |

| Mewsli-9 [16] | 1 [16] |

| TweekiData [46] | 1 [46] |

| TweekiGold [46] | 1 [46] |

Table 9

Ambiguity of mentions (existence of a match does not correspond to a correct match), NYT2018 dataset was not available and LC-QuAD 2.0 is not annotated

| Dataset | Average number of matches | No match | Exact match | More than one match |

| T-REx | 4.79 | 31.36% | 32.98% | 35.65% |

| ISTEX-1000 (train) | 23.23 | 8.06% | 26.34% | 65.61% |

| ISTEX-1000 (test) | 25.85 | 10.30% | 23.88% | 65.82% |

| Knowledge Net (train) | 21.90 | 10.41% | 22.29% | 67.3% |

| KORE50DYWC | 28.31 | 3.93% | 7.49% | 88.60% |

| Kensho Derived Wikimedia Dataset | 8.16 | 35.18% | 30.94% | 33.88% |

| CLEF HIPE 2020 (en, dev) | 24.02 | 35.71% | 11.51% | 52.78% |

| CLEF HIPE 2020 (en, test) | 17.78 | 43.82% | 6.74% | 49.44% |

| Mewsli-9 (en) | 11.09 | 16.80% | 34.90% | 47.30% |

| TweekiData | 19.61 | 19.98% | 12.01% | 68.01% |

| TweekiGold | 16.02 | 7.41% | 20.25% | 72.34% |
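The columns of Table 9 can be approximated with a simple label lookup, as in the sketch below. It rests on assumptions that are not stated in the text: matching is done by case-insensitive string equality against a hypothetical `label_index` (label or alias mapped to the set of items carrying it), and "Exact match" is read as exactly one matching item.

```python
from collections import Counter


def ambiguity_profile(
    surfaces: list[str], label_index: dict[str, set[str]]
) -> dict[str, float]:
    """Bucket mention surface forms by the number of items sharing that label."""
    if not surfaces:
        raise ValueError("no mentions given")
    buckets: Counter = Counter()
    total_matches = 0
    for surface in surfaces:
        matches = label_index.get(surface.lower(), set())
        total_matches += len(matches)
        if not matches:
            buckets["no_match"] += 1
        elif len(matches) == 1:
            buckets["exact_match"] += 1
        else:
            buckets["more_than_one_match"] += 1
    n = len(surfaces)
    return {
        "average_matches": total_matches / n,
        "no_match": buckets["no_match"] / n,
        "exact_match": buckets["exact_match"] / n,
        "more_than_one_match": buckets["more_than_one_match"] / n,
    }
```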

The difficulty of the different datasets was measured by the accuracy of a simple EL method (Table 10) and the ambiguity of mentions (Table 9). The simple EL method searches for entity candidates via an ElasticSearch index that includes all English labels and aliases. It then disambiguates by taking the candidate with the largest tf-idf-based BM25 similarity score and the lowest Q-identifier number, the latter serving as a proxy for popularity. Nothing was done to handle inflections. Only accessible datasets were included. As one can see, the accuracy is positively correlated with the number of exact matches. The more ambiguous the underlying entity mentions are, the more inaccurate a simple similarity measure between label and mention becomes. In this case, more context information is necessary. The simple Entity Linker was only applied to datasets that were feasible to disambiguate in that way. T-REx and the Kensho Derived Wikimedia Dataset were too large in terms of the number of documents to run the linker on commodity hardware. According to the EL performance, ISTEX-1000 is the easiest dataset. Many of the ambiguous mentions reference the most popular entity, while many unique exact matches also exist. T-REx, the Kensho Derived Wikimedia Dataset and the Mewsli-9 training dataset have the largest percentage of exact matches for labels. While TweekiGold is quite ambiguous, deciding on the most prominent entity appears to produce good EL results. The most ambiguous dataset is KORE50DYWC. Additionally, just choosing the most popular entity among the exact matches results in worse performance than, for example, on TweekiGold, which is also very ambiguous. This is due to the fact that the original KORE50 dataset focuses on difficult ambiguous entities which are not necessarily popular. The CLEF HIPE 2020 dataset also has a low EL accuracy, yet not because of ambiguity but because many mentions have no exact match. The reason for that is the noise created by OCR.
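The disambiguation step of such a simple linker can be sketched as follows. Candidate retrieval is left out: the scores are assumed to come from a search index (e.g., BM25 scores), the `Candidate` type is hypothetical, and returning no entity for an empty candidate list is an assumption.

```python
from typing import NamedTuple, Optional


class Candidate(NamedTuple):
    qid: str      # Wikidata identifier such as "Q76"
    score: float  # retrieval score, e.g., BM25 from a search index


def pick_entity(candidates: list[Candidate]) -> Optional[str]:
    """Pick the highest-scoring candidate; break ties by the lowest Q-number."""
    if not candidates:
        return None  # assumption: nothing retrieved means no link is made
    best = min(candidates, key=lambda c: (-c.score, int(c.qid[1:])))
    return best.qid
```

With this tie-breaking rule, two items sharing the label Barack Obama and the same retrieval score, Q76 and Q61909968, are resolved in favor of Q76.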

Table 10

EL accuracy – Kensho derived Wikimedia dataset, T-REx and TweekiData are not included due to size, Acc. filtered has all exact matches removed, NYT2018 dataset was not available and LC-QuAD 2.0 is not annotated

| Dataset | Acc. | Acc. filtered |

| ISTEX-1000 (train) | 0.744 | 0.716 |

| ISTEX-1000 (test) | 0.716 | 0.678 |

| Knowledge Net (train) | 0.371 | 0.285 |

| KORE50DYWC | 0.225 | 0.187 |

| CLEF HIPE 2020 (en, dev) | 0.333 | 0.287 |

| CLEF HIPE 2020 (en, test) | 0.258 | 0.241 |

| TweekiGold | 0.565 | 0.520 |

| Mewsli-9 (en) | 0.602 | 0.490 |

The Acc. filtered column of Table 10 specifies the accuracy with all unique exact matches removed. This is based on the intuition that exact matches without any competitors are usually correct.
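A minimal sketch of the two accuracy values, assuming that for every mention the number of label matches is known (the hypothetical `match_counts` argument) and that a unique exact match corresponds to a count of one:

```python
def accuracy(gold: list[str], predicted: list[str]) -> float:
    """Share of mentions whose predicted entity equals the gold entity."""
    if not gold:
        raise ValueError("no mentions to evaluate")
    correct = sum(1 for g, p in zip(gold, predicted) if g == p)
    return correct / len(gold)


def filtered_accuracy(
    gold: list[str], predicted: list[str], match_counts: list[int]
) -> float:
    """Accuracy after dropping mentions with exactly one label match."""
    kept = [(g, p) for g, p, n in zip(gold, predicted, match_counts) if n != 1]
    return accuracy([g for g, _ in kept], [p for _, p in kept])
```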

As seen in Tables 6, 7, 9 and 10, there exists a very diverse set of datasets for EL on Wikidata, differing in domain, document type, ambiguity and difficulty.

Answer – RQ 2.

Do the characteristics of Wikidata matter for the design of EL datasets and if so, how?

Except for the Mewsli-9 [16] and CLEF HIPE 2020 [29] datasets, none of the datasets take any specific characteristics of Wikidata into account. The two exceptions focus on multilinguality and therefore rely directly on the language-agnostic nature of Wikidata. The CLEF HIPE 2020 dataset is designed for Wikidata and has documents for English, French and German, but each language has a different corpus of documents. The same is the case for the Mewsli-9 dataset, although here documents in nine languages are available. In the future, a dataset similar to VoxEL [96], which is defined for Wikipedia, would be helpful. Here, each utterance is translated into multiple languages, which eases the comparison of the multilingual EL performance. Having the same corpus of documents in different languages would allow a better comparison of a method’s performance in various languages. Of course, such translations will never be perfectly comparable.

Besides that, we identified one additional characteristic which might be of relevance to Wikidata EL datasets: the high rate of change of Wikidata. Due to that, it would be advisable that the datasets specify the Wikidata dumps they were created on, similar to Petroni et al. [88]. Many of the existing datasets do that, yet not all. In current dumps, entities which were available while the dataset was created could have been removed. It is even more probable that NIL entities could now have a corresponding entity in an updated Wikidata dump version. If an EL approach working on the newer dump still predicts NIL for such a mention, the prediction is evaluated as correct although it is in fact wrong; conversely, linking the mention to the newly added entity is evaluated as an error although it is right. Of course, this is not a problem unique to Wikidata. Whenever the dump is not given for an EL dataset, similar uncertainties will occur. But due to the fast growth of Wikidata (see Fig. 6a), this problem is more pronounced.

Concerning emerging entities, another variant of an EL dataset could be useful too. Two Wikidata dumps from different time points could be used to label the utterances. Such a dataset would be valuable in the context of an EL approach supporting emerging entities (e.g., the approach by Hoffart et al. [50]). With the true entities available, one could measure the quality of the created emerging entities. That is, multiple mentions assigned to the same emerging entity should also point to a single entity in the more recent KG. Also, constraining that the method needs to perform well on both KG dumps would force EL approaches to be less reliant on a fixed graph structure.
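The consistency check described above can be sketched as follows. The function is a hypothetical illustration of one possible measure, not a metric proposed in the cited work: it reports the share of predicted emerging-entity clusters whose mentions all point to the same entity in the more recent dump.

```python
from collections import defaultdict


def cluster_consistency(assignments: list[tuple[str, str]]) -> float:
    """Fraction of emerging-entity clusters that map to a single gold entity.

    Each assignment pairs the cluster id predicted on the older dump with
    the gold Wikidata identifier taken from the more recent dump.
    """
    clusters: dict[str, set[str]] = defaultdict(set)
    for cluster_id, gold_qid in assignments:
        clusters[cluster_id].add(gold_qid)
    if not clusters:
        return 0.0
    consistent = sum(1 for qids in clusters.values() if len(qids) == 1)
    return consistent / len(clusters)
```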

6.Approaches

Currently, the number of methods intended to work explicitly on Wikidata is still relatively small, while the number of those utilizing the characteristics of Wikidata is even smaller.

There exist several KG-agnostic EL approaches [76,114,137]. However, they were omitted as their focus is being independent of the KG. While they are able to use Wikidata characteristics like labels or descriptions, there is no explicit usage of those. They are available in most other KGs. None of the found KG-agnostic EL papers even mentioned Wikidata. Though we recognize that KG-agnostic approaches are very useful in the case that a KG becomes obsolete and has to be replaced or a non-public KG needs to be used, such approaches are not included in this section. However, Table 15 in the Appendix provides an overview of the used Wikidata characteristics of the three approaches.

DeepType [90] is an entity linking approach relying on the fine-grained type system of Wikidata and the categories of Wikipedia. As type information is not evolving as fast as novel entities appear, it is relatively robust against a changing knowledge base. While it uses Wikidata, it is not specified in the paper whether it links to Wikipedia or Wikidata. Even the examination of the available code did not result in an answer, as it seems that the entity linking component is missing. While DeepType showed that the inclusion of Wikidata type information is very beneficial in entity linking, we did not include it in this survey due to the aforementioned reasons. As Wikidata contains many more types (≈2,400,000) than other KGs, e.g., DBpedia (≈484,000) [109], it seems to be more suitable for this fine-grained type classification. Yet, not only the number of types plays a role but also how many types are assigned per entity. In this regard, Wikipedia provides much more type information per entity than Wikidata [124]. That is probably the reason why both Wikipedia categories and Wikidata types are used together. As Wikidata is growing every minute, it may also be challenging to keep the type system up to date.

Tools without accompanying publications are not considered due to the lack of information about the approach and its performance. Hence, for instance, the Entity Linker in the DeepPavlov [17] framework is not included, although it targets Wikidata and appears to use label and description information successfully to link entities.

While the approach by Zhou et al. [136] does utilize Wikidata aliases in the candidate generation process, its target KB is Wikipedia, and it was therefore excluded.

The vast majority of methods use machine learning to solve the EL task [8,15,16,18,24,53,60,65,77,78,86,89,105]. Some of those approaches solve ER and EL jointly as an end-to-end task. Besides that, there exist two rule-based approaches [46,100] and two based on graph optimization [60,69].

The approaches mentioned above solve the EL problem as specified in Section 3. Other EL methods with a different problem definition also exist. For example, Almeida et al. [4] try to link street names to entities in Wikidata by using additional location information and limiting the entities only to locations. As it uses additional information about the true entity via the location, it is less comparable to the other approaches and, thus, was excluded from this survey. Thawani et al. [111] link entities only over columns of tables. The approach is not comparable since it does not use natural language utterances. The approach by Klie et al. [62] is concerned with Human-In-The-Loop EL. While its target KB is Wikidata, the focus on the inclusion of a human in the EL process makes it incomparable to the other approaches. EL methods exclusively working on languages other than English [30–32,59,116] were not considered but also did not use any novel characteristics of Wikidata. In connection to the CLEF HIPE 2020 challenge [30], multiple Entity Linkers working on Wikidata were built. While short descriptions of the approaches are available in the challenge-accompanying paper, only approaches described in their own published paper were included in this survey. The approach by Kristanti and Romary [64] was not included as it used pre-existing tools for EL over Wikidata, for which no sufficient documentation was available.

Due to the limited number of methods, we also evaluated methods that are not solely using Wikidata but also additional information from a separate KG or Wikipedia. This is mentioned accordingly. Approaches linking to knowledge graphs different from Wikidata, but for which a mapping between the knowledge graphs and Wikidata exists, are also not included. Such methods would not use the Wikidata characteristics at all, and their performance depends on the quality of the other KG and the mapping.

In the following, the different approaches are described and examined according to the used characteristics of Wikidata. An overview can be found in Table 11. We split the approaches into two categories, the ones doing only EL and the ones doing ER and EL. Furthermore, to provide a better overview of the existing approaches, they are categorized by notable differences in their architecture or used features. This categorization focuses on the EL aspect of the approaches.

For each approach, it is mentioned what datasets were used in the corresponding paper. Only a subset of the datasets was directly annotated with Wikidata identifiers. Hence, some of the mentioned datasets do not occur in Section 5.

Table 11

Comparison between the utilized Wikidata characteristics of each approach

| Approach | Labels/Aliases | Descriptions | Knowledge graph structure | Hyper-relational structure | Types | Additional information |

| OpenTapioca [24] | ✓ | × | ✓ | ✓ | ✓ | × |

| Falcon 2.0 [100] | ✓ | × | ✓1 | × | × | × |

| Arjun [78] | ✓ | × | × | × | × | × |

| VCG [105] | ✓ | × | ✓ | × | × | × |

| KBPearl [69] | ✓ | × | ✓ | × | × | × |

| PNEL [8] | ✓ | ✓ | ✓ | × | × | × |

| Mulang et al. [77] | ✓ | ✓2 | ✓ | × | × | × |

| Perkins [86] | ✓ | × | ✓ | × | × | × |

| NED using DL on Graphs [18] | ✓ | × | ✓ | × | × | × |

| Huang et al. [53] | ✓ | ✓ | ✓ | × | × | Wikipedia |

| Boros et al. [15] | × | × | × | × | ✓ | Wikipedia, DBpedia |

| Provatorov et al. [89] | ✓ | ✓ | × | × | × | Wikipedia |

| Labusch and Neudecker [65] | × | × | × | × | × | Wikipedia |

| Botha et al. [16] | × | × | × | × | × | Wikipedia |

| Hedwig [60] | ✓ | ✓ | ✓ | × | × | Wikipedia |

| Tweeki [46] | ✓ | × | × | × | ✓ | Wikipedia |

1 Only querying the existence of triples

2 Appears in the set of triples used for disambiguation

6.1.Entity linking

6.1.1.Language model-based approaches

The approach by Mulang et al. [77] tackles the EL problem with transformer models [117]. It is assumed that the candidate entities are given. For each entity, the labels of 1-hop and 2-hop triples are extracted. Those are then concatenated together with the utterance and the entity mention. The concatenation is the input of a pre-trained transformer model. With a fully connected layer on top, it is then optimized according to a binary cross-entropy loss. This architecture results in a similarity measure between the entity and the entity mention. The examined models are the transformer models RoBERTa [72], XLNet [131] and the DCA-SL model [130]. The approach was evaluated on three datasets with no focus on certain documents or domains: ISTEX-1000 [24], Wikidata-Disamb [18] and AIDA-CoNLL [51]. AIDA-CoNLL is a popular dataset for evaluating EL but has Wikipedia as the target. ISTEX-1000 focuses on research documents, and Wikidata-Disamb is an open-domain dataset. There is no global coherence technique applied. Overall, up to 2-hop triples of any kind are used. For example, labels, aliases, descriptions, or general relations to other entities are all incorporated. It is not mentioned if the hyper-relational structure in the form of qualifiers was used. The purely language-based EL results in less need for retraining if the KG changes, as shown by other approaches [16,127]. This is the case due to the reliance on sub-word embeddings and pre-training via the chosen transformer models. If full word-embeddings were used, the inclusion of new words would make retraining necessary. Still, an evaluation of the model on the zero-shot EL task is missing and has to be done in the future. The reliance on the triple information might be problematic for long-tail entities, which are rarely referred to and are part of fewer triples. Nevertheless, a lack of available context information is challenging for any EL approach relying on it.
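The input construction and the training objective described above can be illustrated with the following sketch. The separator string, the order of the concatenated parts and both function names are assumptions made for illustration; the original paper may format its input differently.

```python
import math


def build_model_input(
    utterance: str,
    mention: str,
    triples: list[tuple[str, str, str]],
    sep: str = " [SEP] ",  # assumed separator, not taken from the paper
) -> str:
    """Concatenate utterance, mention and the verbalized candidate triples."""
    context = " ".join(" ".join(triple) for triple in triples)
    return sep.join([utterance, mention, context])


def binary_cross_entropy(probability: float, label: int) -> float:
    """Loss for one (mention, candidate) pair; probability must lie in (0, 1)."""
    return -(label * math.log(probability)
             + (1 - label) * math.log(1 - probability))
```

The string returned by `build_model_input` would be tokenized and passed to the transformer, whose output probability is compared to the binary label (correct candidate or not).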

The approach designed by Botha et al. [16] tackles multilingual EL. It is also cross-lingual, meaning that it can link entity mentions to entities in a knowledge graph whose language differs from that of the utterance. The idea is to train one model to link entities in utterances of 100+ different languages to a KG that does not necessarily contain textual information in the language of the utterance. While the target KG is Wikidata, they mainly use Wikipedia descriptions as input. This is the case as extensive textual information is not available in Wikidata. The approach resembles the Wikification method by Wu et al. [127] but extends the training process to be multilingual and targets Wikidata. Candidate generation is done via a dual-encoder architecture. Here, two BERT-based transformer models [26] encode both the context-sensitive mentions and the entities to the same vector space. The mentions are encoded using local context, the mention and surrounding words, and global context, the document title. Entities are encoded by using the Wikipedia article description available in different languages. In both cases, the encoded CLS tokens are projected to the desired encoding dimension. The goal is to embed mentions and entities in such a way that the embeddings are similar. The model is trained over Wikipedia by using the anchors in the text as entity mentions. There exists no limitation that the used Wikipedia articles have to be available in all supported languages. If an article is missing in the English Wikipedia but available in the German one, it is still included. After the model is trained, all entities are embedded. The candidates are generated by embedding the mention and searching for the nearest neighbors. A cross-encoder is employed to rank the entity candidates, which cross-encodes entity description and mention text together by concatenating and feeding them into a BERT model. Final scores are obtained, and the entity mention is linked.
The model was evaluated on the cross-lingual EL dataset TR2016hard [112] and the multilingual EL dataset Mewsli-9 [16]. Furthermore, it was tested how well it performs on an English-only dataset called WikiNews-2018 [42]. Wikidata information is only used to gather all the Wikipedia descriptions in the different languages for all entities. The approach was tested in zero- and few-shot settings, showing that the model can handle an evolving knowledge graph with newly added entities that were never seen before. This is also more easily achievable because it does not rely on the graph structure of Wikidata or the structure of Wikipedia. Some Wikidata entities do not appear in Wikipedia and are therefore invisible to the approach. But as the model is trained on descriptions of entities in multiple languages, it has access to many more entities than only the ones available in the English Wikipedia.
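The nearest-neighbor candidate generation of a dual-encoder can be sketched with toy vectors as follows. The embeddings would in practice come from the two trained encoders; using cosine similarity and an exhaustive search is an assumption made here for brevity, and the function names are hypothetical.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nearest_entities(
    mention_vec: list[float], entity_vecs: dict[str, list[float]], k: int = 2
) -> list[str]:
    """Return the k entity ids whose embeddings are closest to the mention."""
    ranked = sorted(
        entity_vecs,
        key=lambda qid: cosine(mention_vec, entity_vecs[qid]),
        reverse=True,
    )
    return ranked[:k]
```

The returned candidates would then be passed to the cross-encoder for the final ranking. As entity embeddings are computed independently of the mentions, a newly added entity only requires one additional embedding rather than retraining.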

6.1.2.Language model and graph embeddings-based approaches

The master thesis by Perkins [86] performs candidate generation by using anchor link probability over Wikipedia and locality-sensitive hashing (LSH) [43] over labels and mention bi-grams. Contextual word embeddings of the utterance (ELMo [87]) are used together with KG embeddings (TransE [14]), calculated over Wikipedia and Wikidata, respectively. The context embeddings are sent through a recurrent neural network. The output is concatenated with the KG embedding and then fed into a feed-forward neural network, resulting in a similarity measure between the KG embedding of the entity candidate and the utterance. It was evaluated on the AIDA-CoNLL [51] dataset. Wikidata is used in the form of the calculated TransE embeddings. Hyper-relational structures like qualifiers are not mentioned in the thesis, are not considered by the TransE embedding algorithm and are thus probably not included. The used KG embeddings make it necessary to retrain when the Wikidata KG changes, as they are not dynamic.
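To illustrate why TransE embeddings cannot take qualifiers into account, the following sketch shows the standard TransE plausibility measure, which is defined over plain (head, relation, tail) triples only. The choice of the Euclidean norm and the toy vectors are assumptions for illustration.

```python
import math


def transe_distance(
    head: list[float], relation: list[float], tail: list[float]
) -> float:
    """Distance ||h + r - t||; smaller values indicate a more plausible triple."""
    return math.sqrt(
        sum((h + r - t) ** 2 for h, r, t in zip(head, relation, tail))
    )
```

As each entity is assigned a fixed vector that is learned from the triples present at training time, entities added later have no embedding until the model is retrained.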

6.1.3.Word and graph embeddings-based approaches

In 2018, Cetoli et al. [18] evaluated how different types of basic neural networks perform solely over Wikidata. Notably, they compared different ways to encode the graph context via neural methods, especially the usefulness of including topological information via GNNs [106,129] and RNNs [49]. There is no candidate generation, as it was assumed that the candidates are available. The process consists of combining text and graph embeddings. The text embedding is calculated by applying a Bi-LSTM over the GloVe embeddings of all words in an utterance. The resulting hidden states are then masked by the position of the entity mention in the text and averaged. A graph embedding is calculated in parallel via different methods utilizing GNNs or RNNs. The final score is the output of one feed-forward layer having the concatenation of the graph and text embedding as its input. It indicates whether the graph embedding is consistent with the text embedding. Wikidata-Disamb30 [18] was used for evaluating the approach. Each example in the dataset also contains an ambiguous negative entity, which is used during training to make the model robust against ambiguity. One crucial problem is that those methods only work for a single entity in the text. Thus, they have to be applied multiple times, and there will be no information exchange between the entities. While the examined algorithms do utilize the underlying graph of Wikidata, the hyper-relational structure is not taken into account. The paper is more concerned with comparing how basic neural networks work on the triples of Wikidata. Due to the purely analytical nature of the paper, the usefulness of the designed approaches in a real-world setting is limited. The reliance on graph embeddings makes it susceptible to changes in the Wikidata KG.
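The masking, averaging and scoring steps can be sketched in plain Python as follows. The hidden states and the graph embedding are assumed to be given, the single feed-forward layer is reduced to one weight vector, and the sigmoid output is an assumption; all names are hypothetical.

```python
import math


def masked_mean(hidden_states: list[list[float]], mention_mask: list[int]) -> list[float]:
    """Average the hidden states of the tokens that belong to the mention."""
    selected = [h for h, keep in zip(hidden_states, mention_mask) if keep]
    if not selected:
        raise ValueError("mask selects no token")
    dim = len(selected[0])
    return [sum(h[i] for h in selected) / len(selected) for i in range(dim)]


def consistency_score(
    text_vec: list[float], graph_vec: list[float], weights: list[float], bias: float
) -> float:
    """One feed-forward layer over the concatenated text and graph embedding."""
    features = text_vec + graph_vec  # list concatenation
    logit = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-logit))  # assumed sigmoid output
```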

6.2.Entity recognition and entity linking

The following methods all include ER in their EL process.

6.2.1.Language model-based approaches

In connection to the CLEF 2020 HIPE challenge [30], multiple approaches [15,65,89] for ER and EL of historical newspapers on Wikidata were developed. Documents were available in English, French and German. Three approaches with a focus on the English language are described in the following. Differences in the usage of Wikidata between the languages did not exist. Yet, the approaches were not multilingual as different models were used and/or retraining was necessary for different languages.

Boros et al. [15] tackled ER by using a BERT model with a CRF layer on top, which recognizes the entity mentions and classifies the type. During the training, the regular sentences are enriched with misspelled words to make the model robust against noise. For EL, a knowledge graph is built from Wikipedia, containing Wikipedia titles, page ids, disambiguation pages and redirects. In addition, link probabilities between mentions and Wikipedia pages are calculated. The link probability between anchors and Wikipedia pages is used to gather entity candidates for a mention. The disambiguation approach follows an already existing method [63]. Here, the utterance tokens are embedded via a Bi-LSTM. The token embeddings of a single mention are combined. Then similarity scores between the resulting mention embedding and the entity embeddings of the candidates are calculated. The entity embeddings are computed according to Ganea and Hofmann [39]. These similarity scores are combined with the link probability and long-range context attention, calculated by taking the inner product between an additional context-sensitive mention embedding and an entity candidate embedding. The resulting score is a local ranking measure and is again combined with a global ranking measure considering all other entity mentions in the text. In the end, additional filtering is applied by comparing the DBpedia types of the entities to the ones classified during the ER. If the type does not match or other inconsistencies apply, the entity candidate gets a lower rank. Here, they also experimented with Wikidata types, but this resulted in a performance decrease. As can be seen, technically, no Wikidata information besides the unsuccessful type inclusion is used. Thus, the approach more closely resembles a Wikification algorithm. Yet, they do link to Wikidata as the HIPE task dictates it, and therefore, the approach was included in the survey.
New Wikipedia entity embeddings can be easily added [39], which is an advantage when Wikipedia changes. Also, its robustness against erroneous texts makes it well suited for real-world use. This approach reached SOTA performance on the CLEF 2020 HIPE challenge.
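The link probability used for candidate generation can be estimated from anchor statistics, as in the following sketch. It assumes a plain relative-frequency estimate over (anchor text, linked page) pairs; the exact estimation used in the paper may differ, and the function name is hypothetical.

```python
from collections import Counter, defaultdict


def link_probabilities(
    anchor_links: list[tuple[str, str]]
) -> dict[str, dict[str, float]]:
    """Estimate P(page | anchor) from (anchor text, linked page) pairs."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for anchor, page in anchor_links:
        counts[anchor][page] += 1
    return {
        anchor: {page: n / sum(pages.values()) for page, n in pages.items()}
        for anchor, pages in counts.items()
    }
```

For a mention, the pages with non-zero probability for the matching anchor text would serve as its entity candidates.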

Labusch and Neudecker [65] also applied a BERT model for ER. For EL, they used mostly Wikipedia, similar to Boros et al. [15]. They built a knowledge graph containing all person, location and organization entities from the German Wikipedia. Then it was converted to an English knowledge graph by mapping from the German Wikipedia pages via Wikidata to the English ones. This mapping process resulted in the loss of numerous entities. The candidate generation is done by embedding all Wikipedia page titles in an Approximate Nearest Neighbour index via BERT. Using this index, the neighboring entities to the mention embedding are found and used as candidates. For ranking, anchor-contexts of Wikipedia pages are embedded and fed into a classifier together with the embedded mention-context, which outputs whether both belong to the same entity. This is done for each candidate for around 50 different anchor contexts. Then, multiple statistics on those similarity scores and candidates are calculated, which are used in a Random Forest model to compute the final ranks. Similar to the previous approach, Wikidata was only used as the target knowledge graph, while information from Wikipedia was used for all the EL work. Thus, no special characteristics of Wikidata were used. The approach is less affected by a change of Wikidata due to similar reasons as the previous approach. This approach lacks performance compared to the state of the art in the HIPE task. The knowledge graph creation process produces a disadvantageous loss of entities, but this might be easily changed.

Provatorov et al. [89] used an ensemble of fine-tuned BERT models for ER. The ensemble is used to compensate for the noise of the OCR procedure. The candidates were generated by using an ElasticSearch index filled with Wikidata labels. The candidate’s final rank is calculated by taking the search score, increasing it if a perfect match applies and finally taking the candidate with the lowest Wikidata identifier number (indicating a high popularity score). They also created three further variants of the EL approach: (1) The ranking was done by calculating cosine similarity between the embedding of the utterance and the embedding of the same utterance with the mention replaced by the Wikidata description. Furthermore, the score is increased by the Levenshtein distance between the entity label and the mention. (2) A variant was used where the candidate generation is enriched with historical spellings of Wikidata entities. (3) The last variant used an existing tool [115], which included contextual similarity and co-occurrence probabilities of mentions and Wikipedia articles. In the tool, the final disambiguation is based on the ment-norm method by Le and Titov [66]. The approach uses Wikidata labels and descriptions in one variant of candidate ranking. Beyond that, no other characteristics specific to Wikidata were considered. Overall, the approach is very basic and uses mostly pre-existing tools to solve the task. The approach is not susceptible to a change of Wikidata as it is mainly based on language and does not need retraining.
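The base ranking rule described above can be illustrated with a minimal sketch. It rests on several assumptions: the text does not state the size of the exact-match increase, so `EXACT_MATCH_BONUS` is a hypothetical constant, the lowest identifier is interpreted here as a tie-breaker among equally scored candidates, and the QIDs and scores are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    qid: str             # Wikidata identifier such as "Q100" (made-up values below)
    label: str           # entity label stored in the search index
    search_score: float  # relevance score returned by the label index


# Hypothetical bonus; the size of the increase is not given in the text.
EXACT_MATCH_BONUS = 1.0


def rank_candidates(mention, candidates):
    """Sort by boosted search score, breaking ties by the lowest QID number."""
    def sort_key(candidate):
        score = candidate.search_score
        if candidate.label.casefold() == mention.casefold():
            score += EXACT_MATCH_BONUS
        # Negate the score for descending order; smaller QID numbers come first.
        return (-score, int(candidate.qid[1:]))

    return sorted(candidates, key=sort_key)


candidates = [
    Candidate("Q2000", "Berlin", 2.0),
    Candidate("Q100", "Berlin", 2.0),
    Candidate("Q300", "Berlin Wall", 2.5),
]
ranked = rank_candidates("Berlin", candidates)
print([c.qid for c in ranked])
```

Using the numeric part of the identifier as a popularity proxy needs no additional statistics, which fits the lightweight nature of this variant.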

The approach designed by Huang et al. [53] is specialized in short texts, mainly questions. The ER is performed via a pre-trained BERT model [26] with a single classification layer on top, determining if a token belongs to an entity mention. The candidate search is done via an ElasticSearch index, comparing the entity mention to labels and aliases by exact match and Levenshtein distance. The candidate ranking uses three similarity measures to calculate the final rank. A CNN is used to compute a character-based similarity between entity mention and candidate label. This results in a similarity matrix whose entries are calculated by the cosine similarity between each character embedding of both strings. The context is included in two ways. First, the utterance is compared to the entity description by embedding the tokens of each sequence through a BERT model. Again, a similarity matrix is built by calculating the cosine similarity between each token embedding of both utterance and description. Second, the KG is considered by including the triples containing the candidate as a subject. For each such triple, a similarity matrix is calculated between the label concatenation of the triple and the utterance. The most representative features are then extracted out of the matrices via max-pooling, concatenated and fed into a two-layer perceptron. The approach was evaluated on the WebQSP [105] dataset, which is composed of short questions from web search logs. Wikidata labels, aliases and descriptions are utilized. Additionally, the KG structure is incorporated through the labels of candidate-related triples. This is similar to the approach by Mulang et al. [77], but only 1-hop triples are used. There is also no hyper-relational information considered. Due to its reliance on text alone and using a pre-trained language model with sub-word embeddings, it is less susceptible to changes of Wikidata.
While the approach was not empirically evaluated on the zero-shot EL task, other approaches using language models (LM) [16,73,127] were, and their results indicate good performance.
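The similarity matrices used for ranking can be sketched as follows. This is a simplified illustration, not the model by Huang et al.: the embeddings are toy two-dimensional vectors instead of BERT or character embeddings, the CNN is omitted, and row-wise max-pooling is an assumed pooling granularity. The function names are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equally long vectors (0.0 for zero vectors)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def similarity_matrix(left, right):
    """Entry (i, j) is the cosine similarity of left[i] and right[j]."""
    return [[cosine(u, v) for v in right] for u in left]


def max_pool_rows(matrix):
    """Keep the strongest match for every row of the matrix."""
    return [max(row) for row in matrix]


# Toy embeddings: two utterance tokens and two description tokens.
utterance = [[1.0, 0.0], [0.0, 1.0]]
description = [[1.0, 0.0], [1.0, 1.0]]

matrix = similarity_matrix(utterance, description)
features = max_pool_rows(matrix)
print(features)
```

In the described approach, the pooled features of all three matrices would be concatenated before being passed to the two-layer perceptron.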

6.2.2.Word embedding-based approaches

Arjun [78] tries to tackle specific challenges of Wikidata like long entity labels and implicit entities. Published in 2020, Arjun is an end-to-end approach utilizing the same model for ER and EL. It is based on an Encoder-Decoder-Attention model. First, the entities are detected by feeding GloVe [85] embedded tokens of the utterance into the model and classifying each token as being an entity or not. Afterward, candidates are generated in the same way as in Falcon 2.0 [100] (see Section 6.2.6). The candidates are then ranked by feeding the mention, the entity label, and its aliases into the model and calculating the score. The model resembles a similarity measure between the mention and the entity labels. Arjun was trained and evaluated on the T-REx [33] dataset consisting of extracts out of various Wikipedia articles. It does not use any global ranking. Wikidata information is used in the form of labels and aliases in the candidate generation and candidate ranking. The model was trained and evaluated using GloVe embeddings, to which new words cannot easily be added. New entities are therefore not easily supported. However, the authors claim that one can replace them with other embeddings like BERT-based ones. While those proved to perform quite well in zero-shot EL [16,127], this was usually done with more context information besides labels. Therefore, it remains questionable whether using those would adapt the approach for zero-shot EL.

6.2.3.Word and graph embeddings-based approaches

In 2018, Sorokin and Gurevych [105] performed joint end-to-end ER and EL on short texts. The algorithm tries to incorporate multiple context embeddings into a mention score, signaling if a word is a mention, and a ranking score, signaling the candidate’s correctness. First, it generates several different tokenizations of the same utterance. For each token, a search is conducted over all labels in the KG to gather candidate entities. If the token is a substring of a label, the entity is added. Each token sequence is then assigned a score. The scoring is tackled from two sides. On the utterance side, a token-level context embedding and a character-level context embedding (based on the mention) are computed. The calculation is handled via dilated convolutional networks (DCNN) [133]. On the KG side, one includes the labels of the candidate entity, the labels of relations connected to a candidate entity, the embedding of the candidate entity itself, and embeddings of the entities and relations related to the candidate entity. This is again done by DCNNs and, additionally, by fully connected layers. The best solution is then found by calculating a ranking and mention score for each token for each possible tokenization of the utterance. All those scores are then summed up into a global score. The global assignment with the highest score is then used to select the entity mentions and entity candidates. The question-based EL datasets WebQSP [105] and GraphQuestions [108] were used for evaluation. GraphQuestions contains multiple paraphrases of the same questions and is used to test the performance on different wordings. The approach uses the underlying graph, label and alias information of Wikidata. Graph information is used via connected entities and relations. They also use TransE embeddings, and therefore no hyper-relational structure. Due to the usage of static graph embeddings, retraining will be necessary if Wikidata changes.
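The substring-based candidate lookup described above can be sketched as follows. This is only an illustration under stated assumptions: the label dictionary, the QIDs and the function name `substring_candidates` are hypothetical, matching is done case-insensitively, and a linear scan replaces whatever index the original system uses.

```python
def substring_candidates(token, labels_by_entity):
    """Return all entities that have at least one label containing the token."""
    needle = token.casefold()
    return sorted(
        qid
        for qid, labels in labels_by_entity.items()
        if any(needle in label.casefold() for label in labels)
    )


# Made-up identifiers with labels and aliases, for illustration only.
labels_by_entity = {
    "Q100": ["Barack Obama", "Obama"],
    "Q200": ["Michelle Obama"],
    "Q300": ["Hawaii"],
}

print(substring_candidates("obama", labels_by_entity))
```

Such a permissive lookup favors recall over precision, which is why the subsequent mention and ranking scores carry the burden of selecting the correct entity.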

PNEL [8] is an end-to-end (E2E) model jointly solving ER and EL focused on short texts. PNEL employs a Pointer network [118] working on a set of different features. An utterance is tokenized into multiple different combinations. Each token is extended into the (1) token itself, (2) the token and the predecessor, (3) the token and the successor, and (4) the token with both predecessor and successor. For each token combination, candidates are searched for by using the BM25 similarity measure. Fifty candidates are used per tokenization combination. Therefore, 200 candidates (not necessarily 200 distinct candidates) are found per token. For each candidate, features are extracted. Those range from the simple length of a token to the graph embeddings of the candidate entity. All features are concatenated to a large feature vector. Therefore, per token, a sequence of 200 such feature vectors exists. Finally, the concatenation of those sequences of each token in the sentence is then fed into a Pointer network. At each iteration of the Pointer network, it points to one distinct candidate in the network or an END token marking no choice. Pointing is done by computing a softmax distribution and choosing the candidate with the highest probability. Note that the model points to a distinct candidate, but this distinct candidate can occur multiple times. Thus, the model does not necessarily point to only a single candidate of the 200 ones. PNEL was evaluated on several QA datasets, namely WebQSP [105], SimpleQuestions [13] and LC-QuAD 2.0 [27]. SimpleQuestions focuses, as the name implies, on simple questions containing only very few entities. LC-QuAD 2.0, on the other hand, contains both simple questions and more complex, longer questions including multiple entities. The entity descriptions, labels and aliases are all used. Additionally, the graph structure is included by TransE graph embeddings, but no hyper-relational information was incorporated.
E2E models can often improve the performance of the ER. Most EL algorithms employed in industry use older ER methods decoupled from the EL process. Thus, such an E2E EL approach can be of use. Nevertheless, due to its reliance on static graph embeddings, complete retraining will be necessary if Wikidata changes.
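The token expansion that leads to 200 candidates per token can be sketched as follows. The sketch assumes a generic `search(span, k)` callable in place of the BM25 index; `dummy_search`, `expand_token` and `candidates_for_token` are hypothetical names, and the feature extraction and the Pointer network itself are omitted.

```python
def expand_token(tokens, i):
    """Build the four spans around tokens[i]: the token itself, with its
    predecessor, with its successor, and with both neighbors."""
    prev = tokens[i - 1:i] if i > 0 else []  # empty at the start of the utterance
    nxt = tokens[i + 1:i + 2]                # empty at the end of the utterance
    cur = [tokens[i]]
    return [" ".join(span) for span in (cur, prev + cur, cur + nxt, prev + cur + nxt)]


def candidates_for_token(tokens, i, search, per_span=50):
    """Collect per_span candidates for each of the four spans (4 * 50 = 200)."""
    found = []
    for span in expand_token(tokens, i):
        found.extend(search(span, per_span))
    return found


def dummy_search(span, k):
    """Stand-in for the BM25 lookup; returns k placeholder candidate ids."""
    return [f"{span}#{rank}" for rank in range(k)]


tokens = ["who", "founded", "tesla", "motors"]
spans = expand_token(tokens, 2)
pool = candidates_for_token(tokens, 2, dummy_search)
print(spans, len(pool))
```

At the boundaries of the utterance some spans coincide, which is one way in which the 200 candidates of a token end up containing duplicates.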

6.2.4.Non-NN ML-based approaches

OpenTapioca [24] is a mainly statistical EL approach published in 2019. While the paper never mentions ER, the approach was evaluated with it. In the code, one can see that the ER is done by a SolrTextTagger analyzer of the Solr search platform. The candidates are generated by looking up if the mention corresponds to an entity label or alias in Wikidata stored in a Solr collection. Entities that do not correspond to the type person, location or organization are filtered out. OpenTapioca is based on two main features, which are local compatibility and semantic similarity. First, local compatibility is calculated via a popularity measure and a unigram similarity measure between entity label and mention. The popularity measure is based on the number of sitelinks, PageRank scores, and the number of statements. Second, the semantic similarity strives to include context information in the decision process. All entity candidates are included in a graph and are connected via weighted edges. Those weights are calculated via a statistical similarity measure. This measure includes how likely it is to jump from one entity candidate to another while discounting it by the distance between the corresponding mentions in the utterance. The resulting adjacency matrix is then normalized to a stochastic matrix that defines a Markov Chain. One now propagates the local compatibility using this Markov Chain. Several iterations are then taken, and a final score is inferred via a Support Vector Machine. It supports multiple entities per utterance. OpenTapioca is evaluated on AIDA-CoNLL [51], Microposts 2016 [121], ISTEX-1000 [24] and RSS-500 [94]. RSS-500 consists of news-based examples and Microposts 2016 focuses on shorter documents like tweets. OpenTapioca was therefore evaluated on many different types of documents. The approach is only trained on and evaluated for three types of entities: locations, persons, and organizations.
It utilizes Wikidata-specific labels, aliases, and sitelinks information. More importantly, it also uses qualifiers of statements in the calculation of the PageRank scores. However, the qualifiers are only treated as additional edges to the entity. The usage in special domains is limited due to its restriction to only three types of entities, but this is just an artificial restriction. It is easily updatable if the Wikidata graph changes as no immediate retraining is necessary.
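The propagation of local compatibility scores over the candidate graph can be sketched as follows. This is a minimal illustration under assumptions: row normalization is one possible way to obtain a stochastic matrix, the edge weights and scores are made up, and the final Support Vector Machine is omitted. The function names are hypothetical and not taken from the OpenTapioca code base.

```python
def row_normalize(adjacency):
    """Scale every row to sum to 1 so that the matrix defines a Markov chain.
    Rows without outgoing weight are left as zeros in this sketch."""
    stochastic = []
    for row in adjacency:
        total = sum(row)
        stochastic.append([w / total for w in row] if total else [0.0] * len(row))
    return stochastic


def propagate(local_scores, stochastic, steps):
    """Return the local scores followed by the scores after each propagation step."""
    history = [list(local_scores)]
    current = list(local_scores)
    for _ in range(steps):
        # Each candidate receives the weighted average of its neighbors' scores.
        current = [
            sum(weight * score for weight, score in zip(row, current))
            for row in stochastic
        ]
        history.append(current)
    return history


# Two candidates that are connected only to each other (made-up weights).
adjacency = [[0.0, 1.0], [1.0, 0.0]]
history = propagate([1.0, 0.0], row_normalize(adjacency), steps=2)
print(history)
```

The score vectors of the individual steps could then serve as the per-candidate features from which a classifier infers the final score.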

6.2.5.Graph optimization-based approaches