Teaching-learning-based optimization with a fuzzy grouping learning strategy for global numerical optimization

Abstract

The Teaching-Learning-Based Optimization (TLBO) algorithm is a novel heuristic method that is inspired by the philosophy of teaching and learning in a class. In the “Teacher Phase” of the original TLBO algorithm, all learners are combined in one group and learn only from the teacher, which quickly leads to declining population diversity. Utilizing fuzzy K-means clustering to objectively divide all learners into smaller-sized groups more closely conforms to the modern idea of intra-class grouping for teaching and learning. Furthermore, fuzzy K-means clustering can objectively divide learners as nearly as possible according to their interests and abilities, which helps each learner to grow to his or her fullest extent. This paper presents a novel version of TLBO, TLBO with a Fuzzy Grouping Learning Strategy (FGTLBO), in which fuzzy K-means clustering is used to create K centers, each of which acts as the mean of its corresponding group. Performance and accuracy of the FGTLBO algorithm are examined on CEC2005 standard benchmark functions, and these results are compared with various other versions of TLBO. The experimental results verify that the FGTLBO algorithm is very competitive in terms of solution quality and convergence rate.

1 Introduction

Rao, et al. [21] initially proposed the Teaching-Learning-Based Optimization (TLBO) algorithm, which is based on swarm intelligence and applied to continuous, non-linear, multivariable, multimodal, large-scale optimization problems [22]. TLBO was inspired by the traditional teaching and learning phenomenon of a classroom. Compared with other evolutionary algorithms, such as Particle Swarm Optimization (PSO) [9], Differential Evolution (DE) [18], Artificial Bee Colony (ABC) [3], Group Search Optimizer (GSO) [24], Cuckoo Search (CS) [26], Water Cycle algorithm (WCA) [6], Differential Search algorithm (DSA) [15], Backtracking Search algorithm (BSA) [14], and Interior Search algorithm (ISA) [2], the TLBO algorithm has simple computational characteristics and few specific controlling parameters. These features have attracted great interest among researchers in recent years.

Since its introduction, the TLBO algorithm has been applied to various optimization problems, such as parameter optimization of selected casting processes [20], multi-objective design optimization of a plate-fin heat sink [19], multi-objective optimization of heat exchangers [23], dynamic economic emission dispatch [25], control of a Dynamic Voltage Restorer (DVR) compensator [13], and combinatorial problems on flow shop and job shop scheduling cases [1]. Niu, et al. put forward an improved TLBO algorithm to identify the parameters of PEM fuel cell and solar cell models [17]. Similarly, Ghasemi et al. proposed an improved TLBO algorithm using the Lévy mutation strategy for non-smooth optimal power flow [12].

Although TLBO has been successfully applied in many fields, Zou, et al. [5] report that the original TLBO algorithm has some undesirable dynamical properties that degrade its searching ability. One of the most important problems is that the TLBO algorithm tends to get trapped in local optima because of diversity loss; in other words, over a certain period of time, each member of the population tends toward a fixed value.

Several variants have been introduced to improve the performance of the original TLBO. For example, a ring neighborhood topology was introduced into the original algorithm to maintain the exploration ability of the population and the diversity of individuals; the results show that this method increased the solution quality [11]. A Gaussian sampling learning strategy was introduced into the original TLBO to improve its performance by balancing exploration and exploitation in the “Teacher Phase” [4]. Subsequently, a quantum-behaved learning strategy was introduced into the original TLBO algorithm to enhance the exploitative nature of the population; the results show that the proposed algorithm is a competitive method [5].

Although these variants of the TLBO algorithm show some improvements, none of them divides the learners into multiple groups objectively. To this end, we present a novel version of the algorithm, TLBO with a fuzzy grouping learning strategy (FGTLBO). In FGTLBO, fuzzy K-means clustering is used to create K centers, which act as the means of their corresponding groups in the “Teacher Phase” so that the groups are formed objectively. This modification both improves the diversity of the population, which needs to be preserved in order to discourage premature convergence, and achieves a balance between the explorative and exploitative tendencies inherent in pursuing better solutions.

The remainder of this paper is organized as follows: Section 2 presents the original TLBO, which provides the necessary background for the rest of the paper. Section 3 proposes FGTLBO. Section 4 analyzes the results of our comparative study and discusses the influence of the number of groups on the performance of the FGTLBO algorithm. Finally, Section 5 presents the conclusion and proposals for future work.

2 Overview of original TLBO algorithm

Inspired by the philosophy of teaching and learning in a traditional classroom, Rao, et al. developed the TLBO algorithm for the purpose of optimizing numerical problems. This algorithm considers the population as a group of learners, while different design variables are analogous to different subjects offered to learners. In the end, the learner’s grade is equivalent to the “fitness” value of the optimization problem. The best solution out of the entire population is considered to represent the teacher, who is generally the most knowledgeable person in a class and shares his knowledge with the learners.

This method’s operating procedure consists of two learning phases, namely the “Teacher Phase” and the “Learner Phase.” The “Teacher Phase” is analogous to learning from the teacher, whereas the “Learner Phase” means learning through interaction among learners. The phases are defined as follows:

(1)
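For orientation, a teacher-phase sweep can be sketched in Python as follows. This is a minimal sketch, assuming the standard TLBO form in which each learner moves by a random fraction of the difference between the teacher and TF times the class mean, with TF = round(1 + rand) as in Table 2. The `sphere` objective, the function names, and the greedy acceptance step (keep a move only if it improves the learner) are assumptions made for illustration.

```python
import random


def sphere(x):
    """Toy objective (an assumption for illustration): sum of squares."""
    return sum(v * v for v in x)


def teacher_phase(population, objective, rng):
    """One teacher-phase sweep over the whole class (minimization)."""
    dim = len(population[0])
    teacher = min(population, key=objective)  # best learner acts as teacher
    mean = [sum(p[j] for p in population) / len(population) for j in range(dim)]
    updated = []
    for learner in population:
        tf = round(1 + rng.random())  # teaching factor, either 1 or 2
        candidate = [x + rng.random() * (t - tf * m)
                     for x, t, m in zip(learner, teacher, mean)]
        # Greedy acceptance (assumed): keep the move only if it improves.
        if objective(candidate) < objective(learner):
            updated.append(candidate)
        else:
            updated.append(learner)
    return updated


rng = random.Random(0)
population = [[rng.uniform(-5, 5) for _ in range(3)] for _ in range(10)]
improved = teacher_phase(population, sphere, rng)
```

Because the whole class shares a single mean here, every learner is pulled along closely related directions, which is the diversity concern raised for the original algorithm.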

2.1 Learner phase

In this phase, improvement in the knowledge of a learner X_i depends mainly on peer learning from another learner X_j. Based on the knowledge levels of these two learners, two states may occur: if X_j is better than X_i, X_i will move towards X_j; otherwise, X_i will move away from X_j. The learner phase can be expressed as follows:

(2)

(3)
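The two cases of the learner phase can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the peer is drawn uniformly at random from the other learners, the step is a random fraction of the difference between the two learners, and a move is kept only if it improves the learner. The `sphere` objective and all function names are hypothetical.

```python
import random


def sphere(x):
    """Toy objective (an assumption for illustration): sum of squares."""
    return sum(v * v for v in x)


def learner_phase(population, objective, rng):
    """One learner-phase sweep: each learner interacts with one random peer."""
    n = len(population)
    updated = []
    for i, xi in enumerate(population):
        j = rng.choice([k for k in range(n) if k != i])  # random peer, j != i
        xj = population[j]
        # Move towards the peer if it is better, away from it otherwise.
        sign = 1.0 if objective(xj) < objective(xi) else -1.0
        candidate = [a + rng.random() * sign * (b - a) for a, b in zip(xi, xj)]
        # Greedy acceptance (assumed): keep the move only if it improves.
        if objective(candidate) < objective(xi):
            updated.append(candidate)
        else:
            updated.append(xi)
    return updated


rng = random.Random(1)
population = [[rng.uniform(-5, 5) for _ in range(3)] for _ in range(10)]
after = learner_phase(population, sphere, rng)
```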

3 TLBO with a fuzzy grouping learning strategy (FGTLBO)

In the original TLBO, each learner is learning from a teacher in only one class, which easily leads to declining population diversity. On the other hand, utilizing fuzzy K-means clustering to group learners objectively conforms to the modern model of intra-class grouping for teaching and learning more closely. Motivated by these facts, we propose FGTLBO, a novel version of TLBO that incorporates a fuzzy grouping learning strategy.

3.1 Fuzzy K-means clustering

Fuzzy K-means clustering is a data clustering technique in which a dataset is grouped into K clusters such that each data point has a degree of membership in every cluster: a point close to the center of a cluster has a high degree of membership in that cluster, while a point that lies far from the center has a low degree of membership. Traditional hard clustering, by contrast, demands that every point in a dataset be assigned to exactly one cluster. Fuzzy K-means clustering extends traditional hard clustering, and its clustering centers are closer to the real centers of the groups than those obtained by hard clustering.

If the clustering centers are close to the actual centers of the groups, as is the case under fuzzy K-means clustering, the centers determined for these groups may be far away from each other. Otherwise, the centers may be close to each other. According to formula (4) in the “Teacher Phase” of the original TLBO algorithm, each clustering center is considered to be the center of a certain group. If the centers of these groups are close to one another, some individuals of the next generation will end up very similar; in other words, the search easily falls into local optima, greatly reducing the effectiveness of the original TLBO algorithm. Therefore, fuzzy K-means clustering makes a suitable addition to the original TLBO algorithm.

Fuzzy K-means clustering was initially developed by Dunn and later generalized by Bezdek [8] by means of a family of objective functions. Fuzzy K-means clustering is an iterative method that minimizes the objective function defined as follows:

(5)

(6)

(7)

(8)

The membership functions and cluster centers are updated by the following expressions:

(9)

(10)

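This iteration stops when the membership matrix changes by less than the tolerance e between two successive iterations, that is, when ‖U(t) − U(t−1)‖∞ < e, which is the test used in the pseudo code of Table 1.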
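The alternating updates of memberships and centers can be sketched in Python as follows. This is a minimal sketch of the textbook fuzzy c-means iteration, not the authors' implementation: centers are means weighted by memberships raised to the fuzzifier, and memberships are proportional to the distance raised to −2/(fuzzifier − 1). The function name, the default fuzzifier of 2, and the element-wise maximum used as the change measure are assumptions.

```python
import math
import random


def fuzzy_c_means(points, k, fuzzifier=2.0, tol=1e-5, max_iter=200, rng=random):
    """Return (centers, memberships); memberships[i][j] is point j in cluster i."""
    n, dim = len(points), len(points[0])
    # Random initial memberships, normalized so each point's column sums to 1.
    u = [[rng.random() + 1e-12 for _ in range(n)] for _ in range(k)]
    for j in range(n):
        col = sum(u[i][j] for i in range(k))
        for i in range(k):
            u[i][j] /= col
    centers = []
    for _ in range(max_iter):
        # Center update: mean of the points weighted by membership^fuzzifier.
        centers = []
        for i in range(k):
            w = [u[i][j] ** fuzzifier for j in range(n)]
            total = sum(w)
            centers.append([sum(w[j] * points[j][d] for j in range(n)) / total
                            for d in range(dim)])
        # Membership update: inverse-distance weights, normalized per point.
        new_u = [[0.0] * n for _ in range(k)]
        for j in range(n):
            inv = [max(math.dist(points[j], c), 1e-12) ** (-2.0 / (fuzzifier - 1.0))
                   for c in centers]
            s = sum(inv)
            for i in range(k):
                new_u[i][j] = inv[i] / s
        change = max(abs(new_u[i][j] - u[i][j]) for i in range(k) for j in range(n))
        u = new_u
        if change < tol:  # memberships have stabilized
            break
    return centers, u


points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0),
          (10.0, 10.0), (10.0, 11.0), (11.0, 10.0), (11.0, 11.0)]
centers, memberships = fuzzy_c_means(points, 2, rng=random.Random(1))
```

On two well-separated blobs such as the ones above, the two centers settle near the middle of each blob, and every point keeps a small nonzero membership in the far cluster.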

3.2 Fuzzy grouping

Intra-class grouping for teaching and learning is a method of classroom management that divides learners into two or more groups within the classroom to provide for individual differences. To more closely simulate the processes of teaching and learning in a modern class, we divide all learners into small-sized groups using fuzzy K-means clustering. Fuzzy K-means clustering can objectively divide learners as accurately as possible according to their interests and abilities, which helps develop their abilities and creativity to the fullest extent. The number K of cluster centers in fuzzy K-means clustering equals the number m of groups. For simplicity, we assign each learner to a group based on Euclidean distance, and each group uses its own members to search for a better area in the search space. Once these groups are constructed, we can use them to update the corresponding group mean. Table 1 summarizes the pseudo code for fuzzy grouping as explained above.
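The distance-based assignment step can be sketched in Python as follows. This is a minimal sketch, assuming that the cluster centers are already available and that each learner joins the group of its nearest center; the function name and the sample data are hypothetical.

```python
import math


def group_by_nearest_center(learners, centers):
    """Assign each learner to the group whose center is nearest (Euclidean)."""
    groups = [[] for _ in centers]
    for learner in learners:
        nearest = min(range(len(centers)),
                      key=lambda i: math.dist(learner, centers[i]))
        groups[nearest].append(learner)
    return groups


learners = [(0.0, 0.0), (1.0, 1.0), (9.0, 9.0)]
centers = [(0.0, 0.0), (10.0, 10.0)]
groups = group_by_nearest_center(learners, centers)
# groups[0] holds the two learners near the origin, groups[1] the remaining one
```

A group can come out empty when no learner is closest to its center, so code that consumes the groups should be prepared for that case.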

3.3 The pseudo code for the FGTLBO algorithm

We developed FGTLBO by incorporating the fuzzy grouping learning strategy into the “Teacher Phase” of the original TLBO framework. Table 2 presents the pseudo code for the FGTLBO algorithm.
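The group-wise move and its boundary handling can be sketched in Python as follows. This is a minimal sketch, assuming that each learner is pulled towards the global best learner and away from TF times the center of its own group, and that a component leaving the search box is reflected back inside. The lower-bound branch is written with `min` so that the reflected value stays within the box, which is an assumption about the intended behaviour; all names are hypothetical.

```python
import random


def reflect_into_bounds(value, lower, upper):
    """Reflect a component that left [lower, upper] back into the box."""
    if value > upper:
        return max(lower, 2 * upper - value)
    if value < lower:
        return min(upper, 2 * lower - value)
    return value


def grouped_teacher_move(learner, gbest, group_center, lower, upper, rng):
    """Candidate position for one learner, using its own group's center."""
    tf = round(1 + rng.random())  # teaching factor, either 1 or 2
    return [reflect_into_bounds(x + rng.random() * (g - tf * c), lo, hi)
            for x, g, c, lo, hi in zip(learner, gbest, group_center, lower, upper)]


rng = random.Random(3)
candidate = grouped_teacher_move([4.0, -4.0], [0.0, 0.0], [1.0, -1.0],
                                 [-5.0, -5.0], [5.0, 5.0], rng)
```

Since each group uses its own center, learners in different groups are displaced along different directions even though they share the same global best learner.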

3.4 The global convergence analysis of the FGTLBO algorithm based on Markov chain

We consider only the minimization problem, without loss of generality. Let x belong to the solution space Ω of the FGTLBO algorithm, namely x ∈ Ω. Let

(11)

Definition 1. For the global solution x*, if there exists a discrete-time stochastic process

Theorem 1. The FGTLBO algorithm converges to the global solution x* with probability 1.

Proof: Suppose that all learners are sorted in ascending order according to their results. Then there exists a t-step transition probability matrix P(t) of the finite-state Markov chain satisfying the following:

(12)

Based on the Markov chain property P(k) = P^k and the convergence of P(k) to a positive stable stochastic matrix,

(13)

(14)

Furthermore,

(15)

Therefore,

(16)

Thus, the FGTLBO algorithm can converge to a global solution with probability 1.

4 Experiments and comparisons

4.1 Benchmark functions used in experiments

A set of CEC2005 benchmark functions [16] was used in the experiments to assess the performance of the FGTLBO algorithm. Based on their shape characteristics, the benchmark functions are grouped into unimodal functions (F 1 to F 5), basic multimodal functions (F 6 to F 12), and expanded multimodal functions (F 13 to F 14).

4.2 Experimental environment, termination criteria, and control parameters

All experiments were conducted using MATLAB 7.9 on the same machine with a Celeron 2.26 GHz CPU, 2 GB of memory, and the Windows XP operating system. To reduce statistical error, each algorithm was run independently 25 times on each of the 14 test functions with 30 variables, using 300,000 Function Evaluations (FES) as the stopping criterion. The FGTLBO algorithm uses the parameters N = 50 and K = m = 3. The parameters of the other algorithms were set as in their original papers.

4.3 Performance metric

The mean value F mean and standard deviation SD of the error value F(x) − F(x*) were recorded to evaluate the performance of each algorithm, where F(x) and F(x*) denote the best fitness value found for the test problem and its true global optimum, respectively. Statistical analysis was used to compare the results obtained by the algorithms and to verify whether overall optimization performance differs significantly among them. To statistically compare the FGTLBO algorithm with the four other TLBO algorithms, the Wilcoxon rank-sum test [27] was applied at a 0.05 significance level to the fitness values obtained by any two algorithms. This statistical tool is frequently employed in the literature to compare the problem-solving success of computational intelligence algorithms.
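The two ingredients of this metric can be sketched in Python as follows. This is a minimal sketch, not the authors' evaluation script: `error_summary` assumes the sample standard deviation (n − 1 denominator), and `rank_sum_test` is a two-sided Wilcoxon rank-sum test based on the normal approximation, with average ranks for ties and no tie correction of the variance. Both function names and the sample data are hypothetical.

```python
import math
import statistics


def error_summary(best_values, optimum):
    """Mean and sample standard deviation of the errors F(x) - F(x*)."""
    errors = [v - optimum for v in best_values]
    return statistics.mean(errors), statistics.stdev(errors)


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test (normal approximation); returns (z, p)."""
    pooled = sorted([(v, 0) for v in a] + [(v, 1) for v in b])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        average_rank = (i + j) / 2 + 1  # tied values share the average rank
        for k in range(i, j + 1):
            ranks[k] = average_rank
        i = j + 1
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    n1, n2 = len(a), len(b)
    mu = n1 * (n1 + n2 + 1) / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return z, p


runs_a = [float(v) for v in range(1, 26)]  # 25 hypothetical error values
runs_b = [v + 100.0 for v in runs_a]       # clearly worse errors
z, p = rank_sum_test(runs_a, runs_b)
significant = p < 0.05
```

With 25 runs per algorithm, the normal approximation is usually considered adequate; for very small samples an exact test would be the safer choice.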

4.4 Numerical experiments and results

This section compares the FGTLBO algorithm with four other TLBO variants. Corresponding tables present the experimental results, and the last three rows of each table summarize the comparison results. The best results are shown in bold.

4.4.1 Comparison of FGTLBO algorithm and four relevant TLBO algorithms

This section compares the FGTLBO algorithm with four relevant TLBO algorithms. Based on the statistical results in Table 3, none of the algorithms can perfectly solve all fourteen CEC2005 standard benchmark functions. Judging by the Wilcoxon rank-sum test results summarized in the last three rows of Table 3, the FGTLBO algorithm outperforms the original TLBO algorithm on twelve of the fourteen test functions, the exceptions being F 4 and F 7. For test function F 3, the mean value F mean of the FGTLBO algorithm is 4.07E-22, which is a significantly better result than those produced by the other four relevant TLBO algorithms.

The incorporation of a fuzzy grouping learning strategy allows the FGTLBO algorithm to achieve promising results on unimodal and multimodal functions. The FGTLBO algorithm outperforms TLBO, ETLBO, NSTLBO, and DGSTLBO on twelve, eight, thirteen, and thirteen of the fourteen test functions, respectively. The TLBO algorithm performs best on test function F 4, while the DGSTLBO algorithm performs worse than the other four relevant algorithms on test functions F 1, F 3, F 5, F 6, and F 12. The ETLBO algorithm performs better than the FGTLBO algorithm only on test functions F 4, F 6, F 12, and F 13. The NSTLBO algorithm performs worse than the other four relevant algorithms on test functions F 2, F 4, F 9, F 10, F 11, F 13, and F 14. Notably, the DGSTLBO, NSTLBO, ETLBO, TLBO, and FGTLBO algorithms perform similarly on test function F 4.

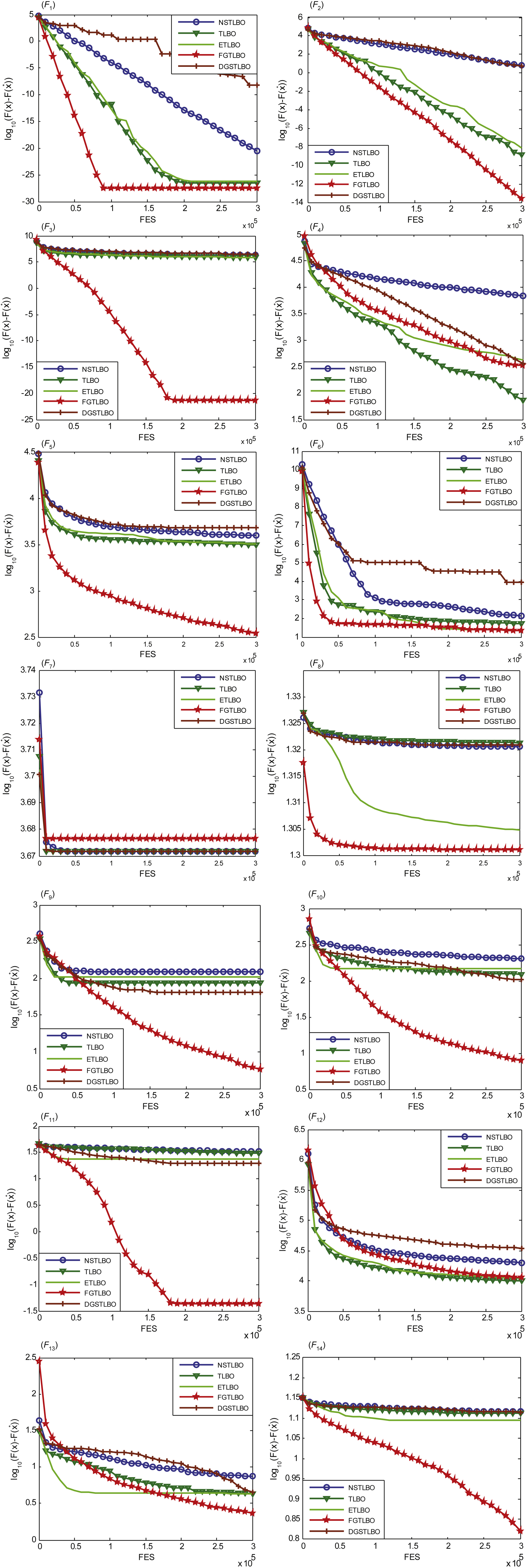

Figure 1 presents the convergence graphs of the five relevant TLBO algorithms on fourteen test functions for D = 30. Based on the convergence graphs, the FGTLBO algorithm shows better convergence, stability, and robustness in most cases than the other four algorithms for test functions F 1, F 2, F 3, F 5, F 6, F 8, F 9, F 10, F 11, F 12, F 13, and F 14. In the case of test function F 4, the convergence rate of the FGTLBO algorithm is similar to those of the other four relevant TLBO algorithms. For test function F 7, on the other hand, the convergence rate of the FGTLBO algorithm is worse than those of the other four algorithms.

The TLBO algorithm shows better convergence, stability, and robustness than the other four relevant TLBO algorithms only on test functions F 4 and F 12. The ETLBO algorithm shows better convergence than the TLBO algorithm only on test function F 12. The DGSTLBO algorithm shows worse convergence on test functions F 1, F 2, F 5, F 6, F 12, and F 13, and the NSTLBO algorithm shows worse convergence on test functions F 4, F 9, and F 10. Overall, the FGTLBO algorithm performs significantly better than the ETLBO, NSTLBO, DGSTLBO, and original TLBO algorithms. This analysis suggests that fuzzy grouping draws its strength from increasing the diversity of the population to discourage premature convergence. Therefore, the FGTLBO algorithm has a higher probability of efficiently exploring the search space and finding a global solution.

4.4.2 Influence of the number of groups on the performance of the FGTLBO algorithm

To study the influence of the number of groups on the performance of the FGTLBO algorithm, the group quantity m was varied from 2 to 11 while the other parameters remained the same as described in Section 4.2. All of the experiments were conducted on test functions F 1 through F 14. Tables 4 and 5 show the statistical results for the different numbers of groups. These results illustrate that FGTLBO (m = 3) demonstrated significantly better overall performance than the other cases. Moreover, as m increased from 4 to 11, the performance of the FGTLBO algorithm did not improve, which shows that using more groups is not an optimal choice for the FGTLBO algorithm. This is because smaller m values may result in premature convergence, while larger m values greatly decrease the probability of finding the correct search direction. Therefore, we recommend that the number of groups m for the FGTLBO algorithm be set to 3.

5 Conclusions and future work

This paper presented FGTLBO, a new version of the TLBO algorithm in which a fuzzy K-means clustering strategy is used to create K centers, each of which acts as the mean of its corresponding group in the algorithm’s “Teacher Phase.” To more closely simulate the processes of teaching and learning in a modern classroom, we objectively divided all learners into small-sized groups. This conforms to the idea of modern intra-class groupings for teaching and learning. As fuzzy K-means clustering can objectively divide learners as nearly as possible on the basis of their interests and abilities, it can help each learner grow to his or her fullest extent. We examined the performance and accuracy of the FGTLBO algorithm on CEC2005 standard benchmark functions and compared the results with various classical versions of the TLBO algorithm. The experimental results verify that the FGTLBO algorithm is very competitive in terms of solution quality and convergence rate under most experimental conditions. Further research can assess how well the FGTLBO algorithm performs in parameter optimization of a multi-pass milling process.

Acknowledgments

The authors wish to acknowledge the National Natural Science Foundation of China (51575442 and 61402361) and the Shaanxi Province Education Department (11JS074) for their financial support for this work.

References

1 | Baykasoğlu A, Hamzadayi A, Köse SY (2014) Testing the performance of teaching–learning based optimization (TLBO) algorithm on combinatorial problems: Flow shop and job shop scheduling cases Information Sciences 276: 1 204 218 |

2 | Gandomi AH (2014) Interior search algorithm (ISA): A novel approach for global optimization ISA Trans 53: 4 1168 1183 |

3 | Karaboga D, Basturk B (2007) A powerful and efficient algorithm for numerical function optimization: Artificial bee colony (ABC) algorithm Journal of Global Optimization 39: 3 459 471 |

4 | Zou F (2014) Bare-bones teaching-learning-based optimization The Scientific World Journal 115: 10 1447 1462 |

5 | Zou F (2014) Teaching–learning-based optimization with dynamic group strategy for global optimization Information Sciences 273: 8 112 131 |

6 | Eskandar H (2012) Water cycle algorithm –A novel metaheuristic optimization method for solving constrained engineering optimization problems Computers & Structures 110: 10 151 166 |

7 | Hosseinpour H, Bastaee B (2015) Optimal placement of on-load tap changers in distribution networks using SA-TLBO method International Journal of Electrical Power & Energy Systems 64: 5 1119 1128 |

8 | Bezdek JC, Ehrlich R, Full W (1984) FCM: The fuzzy c-means clustering algorithm Comput Geosci 10: 84 191 203 |

9 | Kennedy J, Eberhart R (1995) Particle swarm optimization IEEE International Conference on Neural Networks 4: 1 129 132 |

10 | Li JQ, Pan QK, Mao K (2015) A discrete teaching-learning-based optimization algorithm for realistic flowshop rescheduling problems Engineering Applications of Artificial Intelligence 37: 7 279 292 |

11 | Wang L (2014) An improved teaching–learning-based optimization with neighborhood search for applications of ANN Neurocomputing 143: 16 231 247 |

12 | Ghasemi M (2015) An improved teaching–learning-based optimization algorithm using Lévy mutation strategy for non-smooth optimal power flow International Journal of Electrical Power & Energy Systems 65: 3 375 384 |

13 | Khalghani MR, Khooban MH (2014) A novel self-tuning control method based on regulated bi-objective emotional learning controller’s structure with TLBO algorithm to control DVR compensator Applied Soft Computing 24: 13 912 922 |

14 | Civicioglu P (2013) Backtracking search optimization algorithm for numerical optimization problems Applied Mathematics and Computation 219: 15 8121 8144 |

15 | Civicioglu P (2012) Transforming geocentric cartesian coordinates to geodetic coordinates by using differential search algorithm Computers & Geosciences 46: 3 229 247 |

16 | Suganthan PN, Hansen N, Liang JJ, Deb K, Chen YP, Auger A, Tiwari S (2005) Problem definitions and evaluation criteria for the CEC special session on real-parameter optimization 82: 7 321 329 |

17 | Niu Q, Zhang H, Li K (2014) An improved TLBO with elite strategy for parameters identification of PEM fuel cell and solar cell models International Journal of Hydrogen Energy 39: 8 3837 3854 |

18 | Storn R, Price K (1997) Differential evolution –A simple and efficient heuristic for global optimization over continuous spaces Journal of Global Optimization 11: 4 341 359 |

19 | Rao RV, Waghmare GG (2015) Multi-objective design optimization of a plate-fin heat sink using a teaching-learning-based optimization algorithm Applied Thermal Engineering 76: 2 521 529 |

20 | Rao RV, Kalyankar VD, Waghmare G (2014) Parameters optimization of selected casting processes using teaching–learning-based optimization algorithm Applied Mathematical Modelling 38: 2 5592 5608 |

21 | Rao RV, Savsani VJ, Vakharia DP (2011) Teaching–learning-based optimization: A novel method for constrained mechanical design optimization problems Computer-Aided Design 43: 3 303 315 |

22 | Rao RV, Savsani VJ, Vakharia DP (2012) Teaching–learning-based optimization: An optimization method for continuous non-linear large scale problems Information Sciences 183: 1 1 15 |

23 | Rao RV, Patel V (2013) Multi-objective optimization of heat exchangers using a modified teaching-learning-based optimization algorithm Applied Mathematical Modelling 37: 3 1147 1162 |

24 | He S, Wu QH, Saunders JR (2009) Group search optimizer: An optimization algorithm inspired by animal searching behavior IEEE Transactions on Evolutionary Computation 13: 5 973 990 |

25 | Niknam T, Sadeghi MS, Golestaneh F (2012) Theta-multiobjective teaching-learning-based optimization for dynamic economic emission dispatch IEEE Systems Journal 6: 2 341 352 |

26 | Yang XS, Deb S (2009) Cuckoo search via Lévy flights, Nature & Biologically Inspired Computing, NaBIC World Congress 13: 5 210 214 |

27 | Wang Z, Cai Y, Zhang Q (2011) Differential evolution with composite trial vector generation strategies and control parameters IEEE Trans Evol Comput 15: 1 55 56 |

Figures and Tables

Fig. 1

Convergence graphs of the mean function error values versus the number of FES on fourteen test functions, with comparisons of five algorithms’ results.

Table 1

The pseudo code for fuzzy grouping

| Begin |
| Input: N, D, K, e, m, t |
| Set: X = {x1, x2, …, xN}; U(0) = rand(K,N); U(0) = U(0)./(ones(K,1)*sum(U(0))); |
| Dist(K,N) = 0; U(K,N) = 0; P(K,D) = 0; t = 0; |
| While 1 |
|   t = t+1; U(t) = U(0).^m; P = U(t)*X./(ones(D,1)*sum(U(t)'))'; |
|   For i = 1:K |
|     For j = 1:N |
|       Dist(i,j) = norm(P(i,:)-X(j,:)); |
|     End For |
|   End For |
|   U(t) = 1./(Dist.^m.*(ones(K,1)*sum(Dist.^(-m)))); |
|   fitness(t) = sum(sum(U(t).*Dist.^2)); |
|   If norm(U(t)-U(0),Inf) < e |
|     break; |
|   End If |
|   U(0) = U(t); re = []; |
|   For i = 1:N |
|     tmp = []; |
|     For j = 1:K |
|       tmp = [tmp norm(X(i,:)-P(j,:))]; |
|     End For |
|     [junk index] = min(tmp); re = [re; X(i,:) index]; |
|   End For |
| End While |
| x1 = []; x2 = []; …; xK = []; |
| For i = 1:N |
|   If re(i,D+1)==1 |
|     x1 = [x1; re(i,1:D)]; |
|   Else If re(i,D+1)==2 |
|     x2 = [x2; re(i,1:D)]; |
|   … |
|   Else If re(i,D+1)==K |
|     xK = [xK; re(i,1:D)]; |
|   End If |
| End For |
| Output: X = {Group(1), Group(2), …, Group(K)} |
| End |

Table 2

The pseudo code for the FGTLBO algorithm

| Input: N, D, K, e, m, t, FESMAX, K = 3; |
| t = 0; |
| Generate an initial population: X = {x1, x2, …, xN}; |
| Function Evaluations: FES = N; |
| While FES <= FESMAX |
|   Fuzzy grouping: X = {Group(1), Group(2), …, Group(K)}; |
|   P = {P1, P2, …, PK}; |
|   Evaluate the objective function values: f(X); |
|   Find the best learner: gbest(t); |
|   Set: mbest1 = P1; mbest2 = P2; mbest3 = P3; |
|   For i = 1:size(Group(1)) |
|     TF = round(1 + rand); |
|     For j = 1:D |
|       Xnew(i,j) = Group(1)(i,j) + rand*(gbest(j) - TF*mbest1(j)); |
|       If Xnew(i,j) > lu2(j) |
|         Xnew(i,j) = max(lu1(j), 2*lu2(j) - Xnew(i,j)); |
|       End If |
|       If Xnew(i,j) < lu1(j) |
|         Xnew(i,j) = min(lu2(j), 2*lu1(j) - Xnew(i,j)); |
|       End If |
|     End For |
|   End For |
|   For i = 1:size(Group(2)) |
|     TF = round(1 + rand); |
|     For j = 1:D |
|       Xnew(i,j) = Group(2)(i,j) + rand*(gbest(j) - TF*mbest2(j)); |
|       If Xnew(i,j) > lu2(j) |
|         Xnew(i,j) = max(lu1(j), 2*lu2(j) - Xnew(i,j)); |
|       End If |
|       If Xnew(i,j) < lu1(j) |
|         Xnew(i,j) = min(lu2(j), 2*lu1(j) - Xnew(i,j)); |
|       End If |
|     End For |
|   End For |
|   For i = 1:size(Group(3)) |
|     TF = round(1 + rand); |
|     For j = 1:D |
|       Xnew(i,j) = Group(3)(i,j) + rand*(gbest(j) - TF*mbest3(j)); |
|       If Xnew(i,j) > lu2(j) |
|         Xnew(i,j) = max(lu1(j), 2*lu2(j) - Xnew(i,j)); |
|       End If |
|       If Xnew(i,j) < lu1(j) |
|         Xnew(i,j) = min(lu2(j), 2*lu1(j) - Xnew(i,j)); |
|       End If |
|     End For |
|   End For |
|   Evaluate the objective function values: f(X); |
|   FES = FES+1; |
|   Execute the “Learner Phase”: update population X; |
|   t = t+1; |
| End While |
| Output: the individual with the smallest objective function value in the population. |

Table 3

Results of five algorithms over 25 independent runs on 14 test functions

| Function | Result | TLBO | DGSTLBO | ETLBO | NSTLBO | FGTLBO |
| --- | --- | --- | --- | --- | --- | --- |
| F 1 | F mean | 3.39E-27- | 6.86E-09- | 1.90E-27- | 2.77E-21- | 3.53E-28 |
| | SD | 1.49E-27 | 8.83E-09 | 2.69E-27 | 2.66E-21 | 1.43E-28 |
| F 2 | F mean | 1.56E-09- | 5.46E-00- | 5.08E-11- | 6.81E-00- | 2.42E-14 |
| | SD | 4.20E-09 | 5.41E-00 | 1.61E-10 | 2.05E-00 | 3.49E-14 |
| F 3 | F mean | 6.81E+05- | 3.54E+06- | 1.87E+05- | 1.81E+06- | 4.07E-22 |
| | SD | 4.08E+04 | 2.15E+06 | 3.24E+05 | 3.71E+05 | 6.05E-22 |
| F 4 | F mean | 7.35E+01+ | 3.63E+02- | 5.88E+01+ | 7.01E+03- | 3.38E+02 |
| | SD | 9.78E+01 | 2.79E+02 | 1.86E+02 | 2.64E+03 | 3.72E+02 |
| F 5 | F mean | 3.16E+03- | 4.79E+03- | 3.53E+02≈ | 3.96E+03- | 3.45E+02 |
| | SD | 6.77E+02 | 1.19E+03 | 1.11E+03 | 6.07E+02 | 3.06E+02 |
| F 1 to F 5 | – | 4 | 5 | 3 | 5 | |
| | + | 0 | 0 | 1 | 0 | |
| | ≈ | 1 | 0 | 1 | 0 | |
| F 6 | F mean | 5.36E+01- | 8.26E+03- | 6.84E-01+ | 1.44E+02- | 2.25E+01 |
| | SD | 4.12E+01 | 2.33E+04 | 2.16E+00 | 8.34E+01 | 2.06E+01 |
| F 7 | F mean | 4.70E+03≈ | 4.70E+03≈ | 4.70E+03≈ | 4.70E+03≈ | 4.75E+03 |
| | SD | 1.45E-12 | 6.78E-13 | 1.49E+03 | 2.23E-12 | 9.59E-13 |
| F 8 | F mean | 2.09E+01- | 2.09E+01- | 2.08E-01- | 2.09E+01- | 2.00E+01 |
| | SD | 3.52E-02 | 4.71E-02 | 6.35E-02 | 4.17E-02 | 5.60E-02 |
| F 9 | F mean | 8.59E+01- | 6.37E+01- | 1.44E+01- | 1.22E+02- | 5.76E-00 |
| | SD | 1.92E+01 | 2.59E+01 | 4.56E+01 | 2.55E+01 | 4.20E-00 |
| F 10 | F mean | 1.23E+02- | 1.04E+02- | 1.14E+01- | 2.03E+02- | 7.97E-00 |
| | SD | 3.30E+01 | 5.02E+01 | 3.62E+01 | 2.97E+01 | 5.75E-00 |
| F 11 | F mean | 3.09E+01- | 1.94E+01- | 2.49E-00- | 3.30E+01- | 4.40E-02 |
| | SD | 3.39E-00 | 3.19E+00 | 7.88E-00 | 4.88E-00 | 8.40E-02 |
| F 12 | F mean | 9.93E+03- | 3.46E+04- | 1.45E+03+ | 2.00E+04- | 1.13E+04 |
| | SD | 1.17E+04 | 1.60E+04 | 4.58E+03 | 1.86E+04 | 9.87E+03 |
| F 6 to F 12 | – | 6 | 6 | 4 | 6 | |
| | + | 0 | 0 | 2 | 0 | |
| | ≈ | 1 | 1 | 1 | 1 | |
| F 13 | F mean | 4.33E-00- | 4.49E-00- | 3.66E-01+ | 7.46E-00- | 2.31E-00 |
| | SD | 9.27E-01 | 4.06E+00 | 1.16E-00 | 3.05E+00 | 7.17E-01 |
| F 14 | F mean | 1.29E+01- | 1.30E+01- | 1.23E+01- | 1.31E+01- | 6.62E-00 |
| | SD | 1.87E-01 | 1.89E-01 | 3.89E-00 | 2.68E-01 | 6.64E-01 |
| F 13 to F 14 | – | 2 | 2 | 1 | 2 | |
| | + | 0 | 0 | 1 | 0 | |
| | ≈ | 0 | 0 | 0 | 0 | |
| Total | – | 12 | 13 | 8 | 13 | |
| | + | 0 | 0 | 4 | 0 | |
| | ≈ | 2 | 1 | 2 | 1 | |

“–”, “+”, and “≈” denote that the performance of an algorithm is significantly worse than, significantly better than, or similar to that of FGTLBO, respectively.

Table 4

Results of the FGTLBO algorithm based on different numbers of groups

| Function | Result | m = 2 | m = 3 | m = 4 | m = 5 | m = 6 |
| --- | --- | --- | --- | --- | --- | --- |
| F 1 | F mean | 5.79E-28- | 3.53E-28 | 1.26E-27≈ | 2.37E-27- | 6.43E-27- |
| | SD | 9.09E-09 | 8.83E-09 | 5.61E-09 | 7.69E-09 | 3.45E-09 |
| F 2 | F mean | 8.09E-13+ | 2.42E-14 | 3.04E-13- | 2.13E-13≈ | 6.18E-12- |
| | SD | 2.22E-14 | 3.49E-14 | 1.53E-10 | 3.65E-12 | 2.74E-13 |
| F 3 | F mean | 3.42E-23+ | 4.07E-22 | 8.81E-21≈ | 6.25E-20- | 5.18E-20- |
| | SD | 2.04E-21 | 6.05E-22 | 2.62E-23 | 3.01E-20 | 4.96E-19 |
| F 4 | F mean | 5.25E+02- | 3.38E+02 | 6.08E+02- | 1.07E+03- | 3.62E+02≈ |
| | SD | 6.08E+02 | 3.72E+02 | 4.66E+02 | 3.52E+03 | 3.55E+02 |
| F 5 | F mean | 5.47E+02- | 3.45E+02 | 3.93E+02- | 3.06E+03- | 1.15E+03- |
| | SD | 4.42E+02 | 3.06E+02 | 1.01E+03 | 5.17E+02 | 2.08E+02 |
| F 1 to F 5 | – | 3 | | 3 | 4 | 4 |
| | + | 2 | | 0 | 0 | 0 |
| | ≈ | 0 | | 2 | 1 | 1 |
| F 6 | F mean | 5.36E+01+ | 8.26E+03 | 6.84E-01+ | 1.44E+02+ | 2.25E+01+ |
| | SD | 4.12E+01 | 2.33E+04 | 2.16E+00 | 8.34E+01 | 2.06E+01 |
| F 7 | F mean | 4.75E+03≈ | 4.75E+03 | 4.75E+03≈ | 4.75E+03≈ | 4.75E+03≈ |
| | SD | 1.88E-12 | 9.59E-13 | 1.09E-10 | 2.42E-12 | 9.59E-13 |
| F 8 | F mean | 2.03E+01- | 2.00E+01 | 2.05E+01≈ | 2.05E+01- | 2.06E+01- |
| | SD | 2.32E-02 | 5.60E-02 | 6.31E-02 | 3.97E-02 | 5.02E-02 |
| F 9 | F mean | 4.57E-01+ | 5.76E-00 | 9.04E-00- | 6.25E-00≈ | 9.66E-00- |
| | SD | 1.92E-00 | 4.20E-00 | 4.84E-00 | 2.08E-00 | 8.90E-00 |
| F 10 | F mean | 6.98E-00- | 7.97E-00 | 8.84E-00- | 8.92E-00- | 9.07E-00- |
| | SD | 7.32E-00 | 5.75E-00 | 1.69E-00 | 5.77E-00 | 6.76E-00 |
| F 11 | F mean | 9.62E-02- | 4.40E-02 | 8.19E-02- | 7.70E-02≈ | 6.49E-02≈ |
| | SD | 5.09E-01 | 8.40E-02 | 9.85E-02 | 4.88E-02 | 9.49E-02 |
| F 12 | F mean | 1.93E+04- | 1.13E+04 | 2.05E+04- | 2.16E+04- | 2.21E+04- |
| | SD | 2.10E+04 | 9.87E+03 | 4.50E+03 | 3.06E+04 | 3.87E+04 |
| F 6 to F 12 | – | 4 | | 4 | 3 | 4 |
| | + | 2 | | 1 | 1 | 1 |
| | ≈ | 1 | | 2 | 3 | 2 |
| F 13 | F mean | 2.30E-00≈ | 2.31E-00 | 2.29E-00+ | 2.35E-00- | 2.36E-00- |
| | SD | 8.21E-01 | 7.17E-01 | 1.06E-00 | 5.02E-01 | 8.26E-01 |
| F 14 | F mean | 1.23E+01- | 1.22E+01 | 1.51E+01- | 1.46E+01- | 1.47E+01 |
| | SD | 4.32E-01 | 6.16E-01 | 6.09E-01 | 6.38E-01 | 6.82E-01 |
| F 13 to F 14 | – | 1 | | 1 | 2 | 2 |
| | + | 0 | | 1 | 0 | 0 |
| | ≈ | 1 | | 0 | 0 | 0 |
| Total | – | 8 | | 8 | 9 | 10 |
| | + | 4 | | 2 | 1 | 1 |
| | ≈ | 2 | | 4 | 4 | 3 |

“–”, “+”, and “≈” denote that the performance of the FGTLBO (m = 3) algorithm is significantly worse than, significantly better than, or similar to those of other cases.

Table 5

Results of the FGTLBO algorithm based on different numbers of groups

| Function | Result | m = 7 | m = 8 | m = 9 | m = 10 | m = 11 |
| --- | --- | --- | --- | --- | --- | --- |
| F 1 | F mean | 1.97E-27- | 5.80E-27- | 1.89E-27- | 9.07E-27- | 8.13E-27- |
| | SD | 8.27E-09 | 8.07E-09 | 7.25E-09 | 7.64E-09 | 3.03E-09 |
| F 2 | F mean | 6.31E-13- | 8.26E-13- | 4.02E-12- | 3.51E-12- | 2.75E-13- |
| | SD | 3.63E-09 | 8.51E-09 | 1.29E-10 | 4.22E-10 | 7.38E-11 |
| F 3 | F mean | 1.41E-20≈ | 5.50E-19- | 4.62E-19- | 1.04E-18- | 3.31E-19- |
| | SD | 7.02E-20 | 5.55E-17 | 4.27E-18 | 5.01E-20 | 3.54E-22 |
| F 4 | F mean | 5.05E+02- | 5.53E+02- | 6.08E+02- | 7.53E+02 | 8.08E+02- |
| | SD | 3.58E+02 | 3.09E+02 | 4.16E+02 | 3.04E+03 | 3.61E+02 |
| F 5 | F mean | 3.00E+03- | 3.09E+03- | 3.13E+03- | 3.36E+03- | 4.40E+03 |
| | SD | 1.17E+02 | 1.31E+03 | 1.90E+03 | 1.99E+02 | 2.01E+02 |
| F 1 to F 5 | – | 4 | 5 | 5 | 5 | 5 |
| | + | 0 | 0 | 0 | 0 | 0 |
| | ≈ | 1 | 0 | 0 | 0 | 0 |
| F 6 | F mean | 5.36E+01+ | 8.26E+03≈ | 6.84E+01+ | 1.44E+02- | 2.25E+01- |
| | SD | 4.12E+01 | 2.33E+04 | 2.16E+00 | 8.34E+01 | 2.06E+01 |
| F 7 | F mean | 4.75E+03≈ | 4.75E+03≈ | 4.75E+03≈ | 4.75E+03≈ | 4.75E+03≈ |
| | SD | 5.47E-12 | 9.98E-13 | 1.00E-12 | 2.73E-12 | 8.51E-13 |
| F 8 | F mean | 2.06E+01- | 2.08E+01- | 2.08E+01- | 2.08E+01- | 2.09E+01- |
| | SD | 3.77E-02 | 4.91E-02 | 2.36E-02 | 6.12E-02 | 5.20E-02 |
| F 9 | F mean | 9.19E-00- | 9.27E-00- | 9.91E-00- | 9.94E-00≈ | 9.86E-00- |
| | SD | 5.91E-00 | 3.59E-00 | 4.06E-00 | 3.55E-00 | 8.90E-00 |
| F 10 | F mean | 9.13E-00+ | 9.44E-00≈ | 9.72E-00- | 9.84E-00- | 8.98E-00- |
| | SD | 7.32E-00 | 5.02E-00 | 7.82E-00 | 8.17E-00 | 4.35E-00 |
| F 11 | F mean | 9.01E-02- | 8.99E-02- | 9.04E-02- | 9.78E-02- | 8.49E-02- |
| | SD | 7.35E-02 | 8.11E-02 | 9.83E-02 | 9.82E-02 | 9.45E-02 |
| F 12 | F mean | 2.33E+04 | 2.30E+04- | 2.30E+04- | 2.31E+04- | 2.05E+04- |
| | SD | 3.07E+04 | 3.13E+04 | 3.20E+04 | 3.21E+04 | 3.20E+04 |
| F 6 to F 12 | – | 4 | 4 | 5 | 6 | 6 |
| | + | 2 | 0 | 1 | 0 | 0 |
| | ≈ | 1 | 3 | 1 | 1 | 1 |
| F 13 | F mean | 2.33E-00≈ | 2.41E-00≈ | 2.45E-00- | 2.39E-00- | 2.48E-00- |
| | SD | 9.11E-01 | 9.86E-01 | 9.90E-01 | 9.19E-00 | 9.91E-00 |
| F 14 | F mean | 1.45E+01- | 1.43E+01- | 1.44E+01- | 1.49E+01- | 1.59E+01- |
| | SD | 2.11E-00 | 2.65E-00 | 2.27E-00 | 2.43E-01 | 2.83E-01 |
| F 13 to F 14 | – | 1 | 1 | 2 | 2 | 2 |
| | + | 0 | 0 | 0 | 0 | 0 |
| | ≈ | 1 | 1 | 0 | 0 | 0 |
| Total | – | 9 | 10 | 12 | 13 | 13 |
| | + | 2 | 0 | 1 | 0 | 0 |
| | ≈ | 3 | 4 | 1 | 1 | 1 |

“–”, “+”, and “≈” denote that the performance of the FGTLBO (m = 3) algorithm is significantly worse than, significantly better than, or similar to those of other cases.