Artificial Intelligence and User Experience in reciprocity: Contributions and state of the art

Abstract

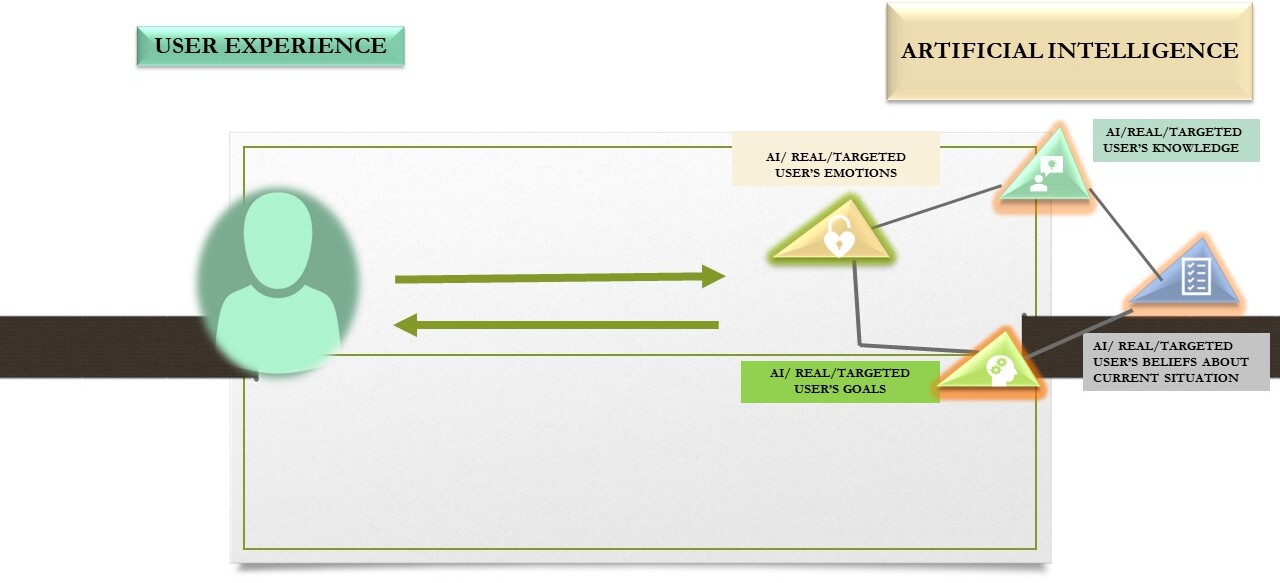

Among the primary aims of Artificial Intelligence (AI) is the enhancement of User Experience (UX) by providing deep understanding, profound empathy, tailored assistance, useful recommendations, and natural communication with human interactants while they are achieving their goals through computer use. To this end, AI is used in varying techniques to automate sophisticated functions in UX, thereby changing how UX is perceived by users. This is achieved through the development of intelligent interactive systems such as virtual assistants, recommender systems, and intelligent tutoring systems. The changes are well received as technological achievements, but they create new challenges of trust, explainability and usability for humans, which in turn need to be addressed by further advancements of AI in reciprocity. AI can be utilised to enhance the UX of a system while the quality of the UX can influence the effectiveness of AI. The state of the art in AI for UX is constantly evolving, with a growing focus on designing transparent, explainable, and fair AI systems that prioritise user control and autonomy, protect user data privacy and security, and promote diversity and inclusivity in the design process. Staying up to date with the latest advancements and best practices in this field is crucial. This paper conducts a critical analysis of published academic works and research studies related to AI and UX, exploring their interrelationship and the cause-effect cycle between the two. Ultimately, best practices for achieving a successful interrelationship of AI in UX are identified and listed based on established methods or techniques that have been proven to be effective in the previous research reviewed.

1. Introduction

Artificial Intelligence (AI) has been a driving force behind significant technological advancements that leverage big data, real-time computer communication via the Internet and the Internet of Things, and the vast accumulation of user input through sources like social media, search engines, and handheld devices [1, 2, 3, 4, 5, 6, 7]. AI has numerous applications across different domains, including healthcare, banking and finance, retail and product recommendations, education, energy, media and entertainment, and many others [8, 9, 10, 11, 12].

These developments have caused a shift in the way people interact with computers, resulting in a transformation of the user experience as it was perceived in standard Human-Computer Interaction [13, 14]. One important change is that computers that use AI are able to make decisions and produce feedback based on incomplete information. This is a form of reasoning that deals with uncertain or ambiguous information, where the conclusions or decisions reached may not be completely accurate or certain. AI-based reasoning encompasses various techniques like machine learning [15, 16, 17, 18, 19], deep learning [20, 21], fuzzy logic [22], cognitive reasoning [23] and others.

One of the advantages of AI-based reasoning is that it can be used to handle complex situations where precise information is not available or is difficult to obtain. However, one of the disadvantages is that the conclusions or decisions reached using AI rely on incomplete or uncertain information, which can lead to unreliable conclusions or decisions. Additionally, AI-based applications may not always provide predictable output, which differs from traditional computing methods. AI-based reasoning has been in development for some time and is not entirely novel. The employment of AI for the enhancement of the User Experience (UX) of interactive software has been a long-standing goal of computer scientists for many decades prior to the latest advancements.

User Experience (UX) refers to the overall experience that a user has when interacting with a product, system, or service [24, 25, 26]. This includes everything from how easy it is to navigate a website to the emotions a user feels when using a particular application. UX designers work to create products that are easy to use and enjoyable for users, considering user behaviour, preferences, and needs. In view of the above, UX design is an important aspect of product design that involves understanding user needs and preferences and creating products that are easy to use, enjoyable, and engaging.

Artificial Intelligence has made significant contributions to User Experience design, resulting in progress in the development of intelligent interactive systems. These systems, such as virtual assistants, recommender systems, intelligent help systems, and intelligent tutoring systems, have the potential to enhance user experience by providing natural language processing, personalised recommendations, real-time assistance, and adaptive interfaces. Additionally, AI allows designers to automate repetitive tasks, enabling them to concentrate on more intricate design challenges. However, while AI enhances the UX of software applications, its intelligent features also pose new challenges, as they may not always be well received or understood by humans.

The relationship between Artificial Intelligence and User Experience can be viewed as a cause-effect reciprocity, where AI can impact the UX of a system, and the quality of the UX can also affect the effectiveness and acceptability of AI. In other words, AI can be used to enhance the UX of a system, but the quality and nature of the resulting AI-based UX can also affect the accuracy, trustworthiness, usability and efficiency of the AI. In many cases, usability as it was perceived in standard Human-Computer Interaction [27, 28, 29] is violated by AI-based systems. The interdependence of AI and UX highlights the importance of designing AI systems with a focus on UX, and vice versa, to ensure that these systems are effective, efficient, and user-friendly. In many cases, the limitations and challenges of AI-based UX design are addressed by new implementations of more sophisticated AI reasoning, resulting in a development cycle.

The state of the art in AI in User Experience is constantly evolving, with new research and advancement efforts aimed at improving the effectiveness and usability of AI systems. There is a growing emphasis on designing AI systems that are transparent, explainable, and fair, to address concerns about bias, discrimination, and lack of trust in these systems. Natural Language Processing (NLP) and Machine Learning (ML) techniques are being used to create more human-like interactions with AI systems. A new target is to improve the accuracy and relevance of recommendations and predictions. In addition, there is a growing focus on designing AI systems that prioritise user control and autonomy, protecting user data privacy and security, and promoting diversity and inclusivity in the design process. As AI continues to evolve and impact User Experience design, it is important to stay up to date with the latest advancements and best practices in this field while knowing important research of the past. Indeed, Norman and Nielsen cautioned computer scientists, developers, and industries that the developer community’s ignorance of the extensive history and findings of HCI research leads them to feel empowered to release untested and unproven creative efforts on the unsuspecting public [30].

In this context, the paper critically analyses published academic works and research studies related to AI and UX to examine their reciprocity and cause-effect cycle. It presents a review of the author’s past research along with other researchers’ work in the field to identify significant findings, methodologies, and theories. Moreover, the study highlights gaps in knowledge and areas for future research, which are discussed in detail. By reviewing AI in UX, this study offers insights into the current state of knowledge, identifies best practices, and leads to the development of new research questions and hypotheses. The author emphasises the importance of past successful research and highlights the need for AI stakeholders to be aware of the extensive history and findings of HCI research to prevent the release of untested and unproven creative efforts on the public.

The remainder of this paper is organised as follows. Section 2 describes the cycle of contributions and limitations of Artificial Intelligence in User Experience and vice versa. In the subsequent Sections 3 and 4, descriptions of AI in UX are presented and discussed in the light of contributions in the sub-fields of interactive systems of web search, recommender systems, intelligent help systems and virtual assistants and intelligent tutoring systems. In particular, Section 3 outlines the utilisation of Artificial Intelligence reasoning to enhance User Experience and Section 4 presents and examines the impact of AI-based reasoning on User Experience. Section 5 of the article identifies the challenges and difficulties associated with integrating AI into UX and suggests possible solutions for expanding the use of AI in this field, drawing upon relevant research literature. Section 6 draws on the author’s extensive previous research in the field of UX in AI to identify best practices that have resulted in the development of effective and personalised AI-based interactive systems. Lastly, Section 7 describes and discusses the conclusions and potential areas for further research.

2. The cycle of Artificial Intelligence and User Experience

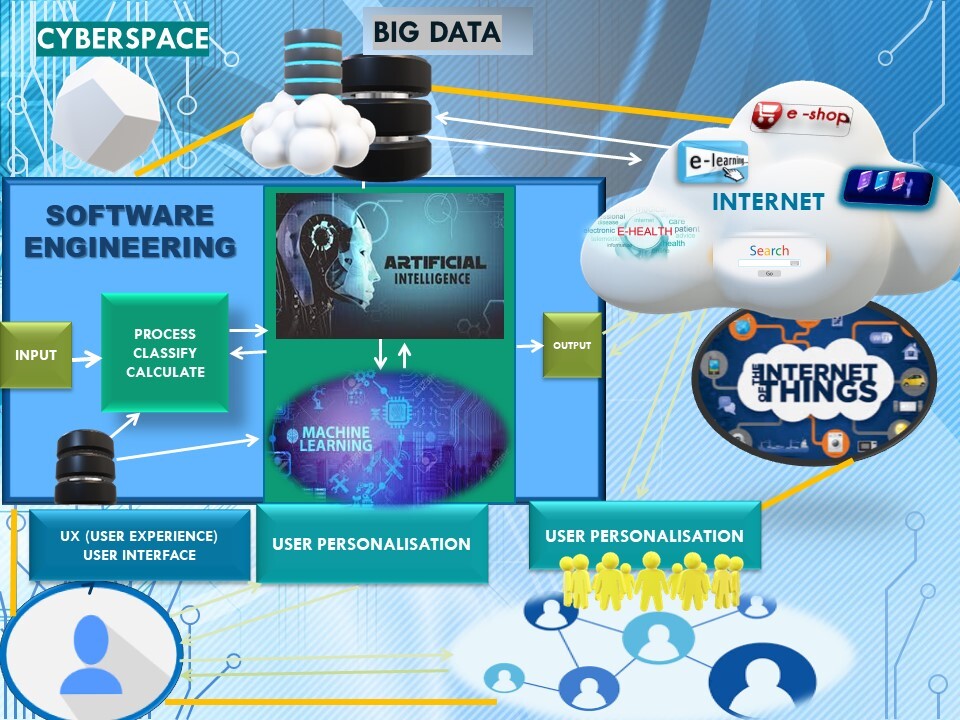

The objective of UX is to generate favourable user experiences, which may involve designing software products that are user-friendly, visually attractive, and easy to navigate. A positive user experience can enhance user engagement and facilitate the achievement of users’ objectives. UX designers often use various tools such as wireframing, prototyping, and user testing. Wireframes are basic visual representations that aid designers in creating product layouts, whereas prototyping involves developing a preliminary version of the product to experiment with different functionalities and user interactions. User testing involves monitoring users as they interact with the product, gathering feedback, and making improvements based on that feedback. With the increasing complexity and reliance on Internet connectivity of software applications, new technologies and methods have been introduced to improve the user experience. This is evident in cases where software operations necessitate Internet connections, such as e-commerce platforms, e-learning tools, e-health applications, email services, social media platforms, and web search engines, as well as in instances where they serve as supplementary features of standalone systems like customised information systems. In modern systems, Artificial Intelligence, Machine Learning, and Deep Learning are utilised at both the local and Internet levels to provide users with personalised and integrated interactions. These advanced technologies are employed in the development of sophisticated interactive software applications in the context of AI-enhanced software engineering [31, 32, 33, 34, 35, 36] as demonstrated in Fig. 1.

Figure 1. Artificial Intelligence in interactive software applications.

As a result, modern User Experience design must consider all levels of reasoning within an information system itself as well as the Internet, which holds vast masses of data, to provide a seamless and personalised experience for the end-user. While the resulting AI-based UX aims to reduce the cognitive burden on users, the UX design process becomes considerably more complex for UX engineers. In addition, the functioning of Artificial Intelligence in the system can cause new User Experience challenges, as users may not be accustomed to its operation. Therefore, to further improve the User Experience, more Artificial Intelligence reasoning may be employed in response to these challenges.

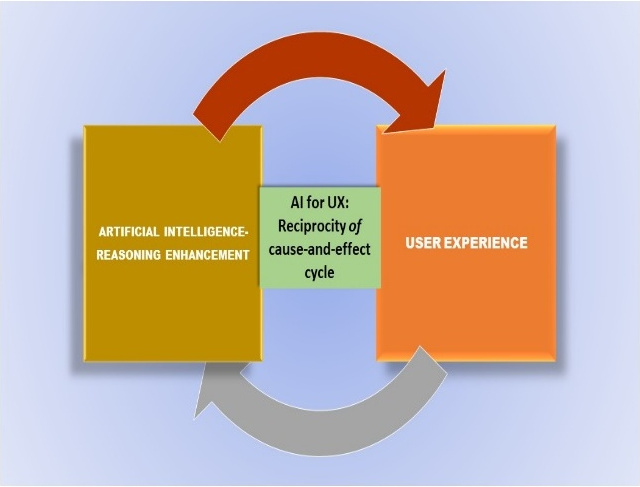

The interaction between Artificial Intelligence and User Experience can be seen as a two-way relationship, where each can affect the other. AI can be used to enhance the UX of a system and the quality of the UX can impact the effectiveness of AI. This mutual influence emphasises the need for AI systems to prioritise UX design and for UX designers to consider the impact of AI on the user experience. By doing so, the resulting systems will be more efficient and user friendly and will be created in a cause-effect cycle in reciprocity, as illustrated in Fig. 2.

In view of the above, a new field has emerged, that of Human-AI interaction [37, 38], based on the evolution of knowledge-based Human-Computer Interaction [39, 40]. This new field refers to the ways in which humans and AI systems interact and communicate with each other. As AI becomes more prevalent in our daily lives, it is increasingly important to design interfaces and interactions that enable seamless and effective communication between humans and AI. The effects of AI on UX constitute impressive advancements, but they also involve problems that need to be addressed.

Figure 2. AI in UX: Reciprocity of cause-and-effect.

For example, AI technology has been increasingly used in the implementation of advanced Clinical Decision Support Systems (CDSS). AI-empowered CDSS (AI-CDSS) have major potential usefulness in clinical decision-making scenarios, but post-adoption user perception and experience remain understudied [41]. As another example, when it comes to fully autonomous driving, passengers relinquish control of the steering to a highly automated system. However, the behaviour of the autonomous vehicle may cause confusion and result in a negative user experience. As a consequence, the acceptance and comprehension of the users are critical factors that determine the success or failure of this new technology [42]. Furthermore, there has been a notable improvement in the quality of AI applications for natural language generation systems. According to a recent study, participants found the process enjoyable and useful, and were able to imagine potential uses for future systems. However, although machine suggestions were given, they did not necessarily result in better written outputs. As a result, the researchers propose innovative natural language models and design options that could enhance support for creative writing [43]. Such problems lead to the initiation of new cycles of AI employment, which address the existing problems of user experience with AI.

3. Artificial Intelligence and user modelling in service of User Experience

AI technology can assist User Experience design in several ways. AI for UX refers to the use of AI technologies to improve the user experience of digital products and services. By incorporating AI into UX design, designers can create products that are more engaging, effective, and accessible for users.

AI can be used to personalise the user experience by analysing user data and predicting user behaviour and preferences, allowing the product to adapt to the needs of each individual user. Moreover, AI can assist in gathering user research data, such as sentiment analysis or feedback analysis, to comprehend user needs and improve UX design. For instance, websites and apps can use AI to recommend content, products, or services based on the user’s past behaviour or search history.

User modelling is used for personalisation while programs are being executed. It is the process of creating representations or profiles of users based on their behaviour, preferences, goals, and other relevant characteristics. These user models are used in various applications, including personalised recommendations, adaptive interfaces, and intelligent tutoring systems, to tailor the user experience to the needs and interests of individual users. For example, in [44] user modelling is performed by detecting music similarity perception based on objective feature subset selection for music recommendation; in [45] two kinds of user model, student and instructor models, and their interaction are created in an authoring tool for intelligent tutoring systems; and techniques for the prediction of data interaction in user modelling are presented and discussed in [46]. User modelling involves collecting and analysing data about users through various sources, including user feedback, user actions, and user profiles. Machine learning algorithms, cognitive reasoning, fuzzy sets or other statistical techniques are then used to identify patterns and gain insights from the data, which are used to create user models.

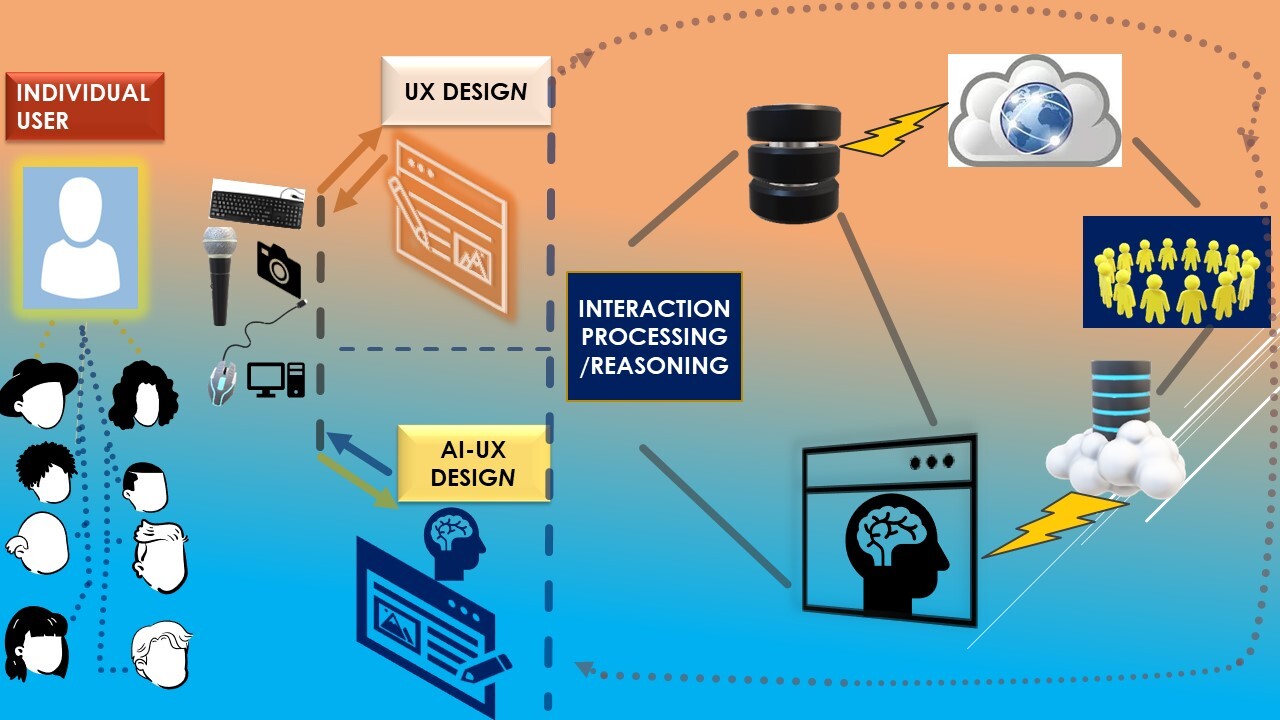

Individual users possess unique characteristics such as interests, preferences, educational background, expertise, personality traits, and emotions, which can be communicated to interactive applications via various input and output channels like keyboard, camera, microphone, mouse, and mobile or handheld devices. The processing of user inputs requires the use of artificial intelligence techniques. In this regard, user modelling can be utilised to create representations of individual user beliefs, which take into account their inputs from specific standalone applications, as well as inputs from other web-based applications through the internet. These inputs contribute to the formulation and accumulation of hypotheses about individual users and communities of users across one or more applications. These hypotheses can then be leveraged to personalise and enhance the user experience as illustrated in Fig. 3.

Figure 3. AI-based reasoning and personalisation in UX.

The user models convey processed information and inferences about users, depending on the specific needs of the application. For example, a user model for a recommender system may include information on the user’s past behaviour, product ratings, and preferences. In contrast, a user model for an intelligent tutoring system may include information on the user’s knowledge level, learning style, and performance.

User models can be categorised along four dimensions, namely degree of specialisation, modifiability, acquisition and temporal extent [47]. The degree of specialisation refers to whether the user model concerns individual users or a community of users. Modifiability refers to whether the user model is static, in which case it does not change once determined, or dynamic, in which case it may change after its initial determination. Acquisition refers to whether the user model is inferred implicitly from the users’ actions or constructed from explicit information that the users give about themselves. Temporal extent refers to whether the user model is based on short-term information concerning one interaction session, in which case it is a short-term user model, or on long-term information that has been collected about users over many sessions, in which case it is a long-term user model.
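The four dimensions can be read as a small data structure. The following Python fragment is a minimal sketch under that reading; the class names, the `attributes` dictionary and the `update` method are illustrative assumptions, not part of the taxonomy in [47].

```python
from dataclasses import dataclass, field
from enum import Enum


class Specialisation(Enum):
    INDIVIDUAL = "individual"  # model of a single user
    COMMUNITY = "community"    # model of a group of users


class Modifiability(Enum):
    STATIC = "static"    # fixed once determined
    DYNAMIC = "dynamic"  # may change after its initial determination


class Acquisition(Enum):
    IMPLICIT = "implicit"  # inferred from the users' actions
    EXPLICIT = "explicit"  # stated by the users about themselves


class TemporalExtent(Enum):
    SHORT_TERM = "short-term"  # one interaction session
    LONG_TERM = "long-term"    # collected over many sessions


@dataclass
class UserModel:
    """Hypothetical container: one value per dimension plus inferred attributes."""
    specialisation: Specialisation
    modifiability: Modifiability
    acquisition: Acquisition
    temporal_extent: TemporalExtent
    attributes: dict = field(default_factory=dict)

    def update(self, key, value):
        """Record a new inference; only dynamic models may change."""
        if self.modifiability is Modifiability.STATIC:
            raise ValueError("static user models cannot be modified")
        self.attributes[key] = value


# Example: a model such as an intelligent tutoring system might keep.
tutoring_model = UserModel(
    Specialisation.INDIVIDUAL,
    Modifiability.DYNAMIC,
    Acquisition.IMPLICIT,
    TemporalExtent.LONG_TERM,
)
tutoring_model.update("knowledge_level", "intermediate")
```

Treating each dimension as an independent enumeration reflects the fact that the dimensions are orthogonal: any combination of the four values describes a possible user model.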

Individual user models are created through the collection and analysis of data specific to a particular user, such as their search history, purchase behaviour, or interactions with a particular system or service. Then the collected individual data is processed so that the system may draw inferences concerning the individual user’s needs. For example, individual user modelling has been employed for learn-and-play personalised reasoning from point-and-click to virtual reality mobile educational games to provide individualised tutoring and game recommendation according to the student-players’ preferences in the context of edutainment [48].

User models based on communities of users refer to the creation of user models based on shared characteristics or behaviours among groups of users. Instead of focusing on individual users, user models based on communities of users aim to identify patterns and trends among groups of users who share common interests, preferences, or behaviours. These user models are created through the analysis of data from social media platforms, online forums, and other online communities. User models based on communities of users have a wide range of applications. For example, they have been used for dynamically extracting and exploiting information about customers in interactive TV-commerce [49] or for customer data clustering in an e-shopping application for assisting on-line customers in buying products [50].

Short-term and long-term user modelling are two complementary approaches to user modelling that focus on the temporal extent of capturing and analysing data related to user behaviour and preferences.

Short-term user models focus on users’ current sessions, aiming to capture and analyse data related to a user’s immediate interactions with a digital system or service. This includes data such as the user’s current context, goals, plans, behaviour, and preferences within the limits of an interaction session. Short-term user models are often used in real-time personalisation, such as in e-commerce websites that provide personalised recommendations based on a user’s current session or in adaptive interfaces that adjust to the user’s current task. The main advantage of short-term user models is that they can provide immediate and relevant recommendations or personalised experiences to the user.

On the other hand, long-term user models are based on historical data and user behaviour over an extended period of time. Long-term user models capture data such as the user’s preferences, interests, demographics, and past interactions with a digital system or service. These models can be used to provide more personalised and effective user experiences over time, such as personalised content recommendations or customised interface layouts. The main advantage of long-term user models is that they can capture the user’s preferences and behaviour over time, allowing for more accurate and personalised recommendations and experiences. However, they may require more data and computational resources to generate insights, and they may not be as effective in real-time scenarios as short-term user models.
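One simple way to see how the two approaches can complement each other is to blend their interest scores. The sketch below is an illustrative assumption rather than a method from the reviewed literature: the function name `blend_preferences`, the topic scores and the `session_weight` parameter are all hypothetical.

```python
def blend_preferences(long_term, short_term, session_weight=0.6):
    """Weighted mix of historical and current-session interest scores.

    long_term and short_term map a topic to a score in [0, 1];
    session_weight (an assumed tuning parameter) controls how strongly
    the current session overrides the accumulated history.
    """
    topics = set(long_term) | set(short_term)
    return {
        topic: session_weight * short_term.get(topic, 0.0)
        + (1.0 - session_weight) * long_term.get(topic, 0.0)
        for topic in topics
    }


# Hypothetical scores: history favours jazz, the current session favours rock.
long_term = {"jazz": 0.8, "rock": 0.4}
short_term = {"rock": 1.0, "classical": 0.5}

blended = blend_preferences(long_term, short_term)
ranked = sorted(blended, key=blended.get, reverse=True)
```

With these values the current session moves rock ahead of jazz, while the long-term interest in jazz still ranks above the weaker session signal for classical music.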

Cognitive-based user modelling is a type of user modelling that focuses on understanding how users think, reason, and process information to create more effective and personalised user experiences. This approach is based on the idea that a user’s cognitive processes, such as attention, memory, and decision-making, are critical factors that influence their interactions with digital systems and services. Modelling these processes provides insights into how users perceive, process, and interact with digital content and interfaces, which can then be used to create more personalised and effective user experiences. For example, cognitive theories have been used to model cognitive aspects, personality and performance issues of users in a Collaborative Learning Environment [51] or for creating cognitive-based adaptive scenarios in educational games [52].

Machine learning algorithms are used to identify patterns in user behaviour, such as the types of content they engage with or the topics they discuss. For example, they have been used to provide online recommendations or to create targeted advertising campaigns that appeal to specific groups of users or for crowdsourcing recognised image objects in mobile devices [53].

Data mining techniques are used to extract valuable insights and patterns from large sets of user data collected through various methods such as surveys, user testing, analytics, and other techniques. The goal of data mining in UX is to help designers and developers make informed decisions about product design, features, and usability. These techniques can be applied to many domains. For example, data mining is used to extract useful information from large data sets in e-government to help civil servants perform their work tasks [54].

Fuzzy logic is a mathematical framework for dealing with uncertainty and imprecision. It is based on the idea of fuzzy sets, which are sets with boundaries that are not clearly defined. In UX it may be used for several applications. For example, the set of “forgetful users” is a fuzzy set because there is no clear boundary between forgetful and non-forgetful users in an intelligent assistive system for users with dementia [55]. In another example, fuzzy logic decisions have been used in conjunction with web services for a personalised Geographical Information System (GIS) that provides individualised recommendations on route selections depending on the users’ geographical place, interests and preferences [56].
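The idea of a set without a sharp boundary can be illustrated with a membership function that returns a degree between 0 and 1 instead of a yes/no answer. The following is a minimal sketch; the input variable (missed reminders per week) and the thresholds `low` and `high` are hypothetical and are not taken from the system described in [55].

```python
def forgetful_membership(missed_reminders_per_week, low=1.0, high=5.0):
    """Degree (0.0 to 1.0) of membership in the fuzzy set 'forgetful users'.

    Below `low` the user is not considered forgetful at all, above `high`
    the user is fully forgetful, and in between the degree rises linearly.
    Both thresholds are assumed values chosen for illustration only.
    """
    if missed_reminders_per_week <= low:
        return 0.0
    if missed_reminders_per_week >= high:
        return 1.0
    return (missed_reminders_per_week - low) / (high - low)


degrees = [forgetful_membership(x) for x in (0, 3, 7)]
```

A user who misses three reminders a week belongs to the set to degree 0.5, so an assistive system could scale the frequency of its prompts with this degree rather than switching abruptly between two behaviours.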

The UX design process itself is also an important area of application for AI, which can make the resulting UX more appealing to the user. UX designers can anticipate situations that will occur frequently, impact a large number of users, and require a user to execute the same sequential set of actions to complete a task [57]. The availability of user data on the Internet can be exploited by AI, which can provide predictive analytics to predict user behaviour or outcomes, helping designers make informed decisions about UX design.

Natural Language Processing (NLP) can also be used to improve the conversational experience between users and AI-empowered products, such as chatbots and virtual assistants. NLP is a branch of AI that enables machines to understand and interpret human language to create a more natural and intuitive interaction. For example, [58] describes a mechanism for mapping natural language sentences onto an SPN state machine for understanding purposes. In another example, [59] describes a procedure for modelling natural language sentences as SPN graphs, while [60] describes a Natural Language Understanding-based method for a first-level automatic categorisation of AI-based security documents.

Sentiment analysis is a subfield of natural language processing (NLP) that involves analysing text to determine the sentiment or emotional tone of the language used. This can involve identifying positive or negative language, as well as more specific emotions such as anger, fear, or joy. Sentiment analysis is a method that has been used for revealing insight into unstructured content by automatically analysing people’s opinions, emotions, and attitudes towards a specific event, individual, or topic based on user-generated data, as described in a review of sentiment analysis based on sequential transfer learning [61]. For example, sentiment analysis has been employed in a computational model for mining consumer perceptions in social media [62].

Image, sound, voice and video analysis is another kind of AI technology, used to recognise and classify images, sounds and videos to improve UX. It can make interfaces more visually appealing and engaging and can be useful for applications such as product recommendations and visual search. For example, image analysis has been used in a concept-based image acquisition system which embodies the ability to extract a certain subset of images that are representative of a user-defined query concept [63]. As another example, markerless human motion capture is the activity of analysing and expressing human motion using image processing and computer vision techniques, and it aims at revealing information about the affective state, cognitive activity, personality, intention and psychological state of a person [64]. In another example, digital image libraries are organised according to a user-defined concept which is extracted from a set of images that the user submits to the system as its representative instances [65]. AI can also help improve accessibility for users with disabilities by providing alternative ways of interacting with digital products or services through natural language processing, image recognition and voice synthesis. For example, a voice assistant could offer audio feedback to visually impaired users [66], while an intelligent mobile multimedia application supports elderly users [67].

Affective computing is another important area where AI has been used to improve UX. Affective computing consists of two sub-areas, emotion recognition and emotion generation.

Emotion recognition is the process of using artificial intelligence (AI) algorithms to identify and interpret human emotions based on their facial expressions, speech patterns, physiological signals and context. Emotion recognition by computers can be achieved through different methods. For example, facial expression recognition involves using computer vision algorithms to analyse the facial features of a person and identify emotional expressions, such as happiness, sadness, anger, and surprise [68]. Such analysis can be extended for emotion recognition of groups, such as a whole class of students [69]. Similarly, speech analysis can be used to identify emotions based on the tone, pitch, and other features of a person’s voice [70]. Multi-modal affect recognition can combine many sources of evidence from multiple modalities, such as the keyboard, the microphone, the camera and other sources [71, 72, 73]. Emotion generation is the process of using artificial intelligence (AI) algorithms to create computer feedback that conveys empathy to the users. Affective computing has a wide range of potential applications, such as enhancing human-computer interaction, and providing more personalised experiences in fields like marketing, entertainment and e-learning [74].

All of the above constitute different aspects and approaches for the improvement of UX through the use of AI and Machine Learning. The following subsections describe how AI technology is used for the benefit of User Experience in some important interactive application domains, such as web searching, recommender systems, intelligent help systems and virtual assistants, and intelligent tutoring systems.

3.1 AI techniques for the UX of web searching

AI can be used to enhance the user experience (UX) of web searching by improving the relevance and personalisation of search results. By analysing user behaviour and preferences, search engines can better understand what types of content and information the user is looking for and provide more accurate and useful results.

There are various kinds of AI techniques used in web searching. Many of them are used for personalisation involving user modelling. Personalisation techniques customise search results based on the user’s location, search history, and preferences. This approach involves analysing user data, such as the user’s search queries, click-through rates, time spent on search results pages, and search histories, to create a personalised search experience. For example, a search for “restaurants” in Athens, Greece may yield different results than a search for the same term in the Greektown of Chicago, U.S.A., as the AI can take into account the user’s location and provide results based on local restaurants. User modelling in web searching refers to the process of recording and analysing user behaviour and preferences when searching for information on the web. There are several techniques used in user modelling for web searching.

Some common types of AI used in web search engines are the following:

• Content-based retrieval is a technique used in web search to retrieve information based on the similarity of content. It involves analysing the content of a web page, such as the text, images, videos, and audio, to determine its relevance to a user’s query and provide relevant search results. For example, content-based retrieval has been used in an intelligent mobile application to retrieve music pieces from digital music libraries [75]. Another example involves using agents for feature extraction in the context of content-based image retrieval for medical applications [76].

• Collaborative filtering uses data from other users with similar search behaviour to recommend search results. For example, a model that represents a user’s needs and search context is based on content and collaborative personalisation, implicit and explicit feedback, and contextual search [77].

• Hybrid filtering in web search is a method of combining two or more different types of filtering techniques to improve the accuracy and relevance of search results. This approach can be used to address some of the limitations of individual filtering techniques and provide a more comprehensive search experience. For example, a hybrid filtering mechanism is proposed to eliminate irrelevant or less relevant results for personalised mobile search, which combines content-based filtering and collaborative filtering; the former filters the results according to the mobile user’s feature model generated from the user’s query history, and the latter filters the results using the user’s social network, which is constructed from the user’s communication history [78].

• Machine Learning: Machine learning allows search engines to learn from user behaviour, such as clicks and dwell time, and to adapt search results based on individual preferences. For example, if a user consistently clicks on results from certain websites, the search engine may prioritise those results in future searches. Machine learning is also used to improve the accuracy of search results and to identify patterns and relationships in large datasets. An example of this kind of approach is the use of machine learning algorithms based on experts’ knowledge to classify web pages into three predefined classes according to the degree of content adjustment to the search engine optimisation (SEO) recommendations [79].

• Deep Learning: Deep learning is a subfield of machine learning that involves training artificial neural networks to learn from large datasets. The goal of deep learning is to enable computers to learn and recognise patterns, classify information, and make decisions in a way that is similar to how the human brain operates. Deep learning is used in web searching to improve the accuracy of image and voice-based search. Neural networks are used in web search engines, ranking algorithms, and citation analysis [80]. For example, in [81] a Convolutional Neural Network (CNN) deep learning model is used in mining big web search log data to learn the semantic representation of a document, improving the efficiency and effectiveness of information retrieval.

• Natural Language Processing (NLP): NLP is used in web searching to understand the user’s queries in natural language and match them with relevant web pages. NLP techniques include text segmentation, entity recognition, and sentiment analysis. For example, [82] presents a method for translating web search queries into natural language questions. The authors propose a system that uses natural language processing (NLP) techniques to transform a web search query into a question that can be answered using a knowledge base.

• Semantic Search: Semantic search uses machine learning and NLP techniques to understand the meaning behind user queries and deliver more accurate search results. This approach involves analysing the relationship between words and phrases and identifying entities in the text. Semantic search and semantic web are two related concepts in the field of information retrieval and web technologies. Instead of relying solely on keywords, semantic search algorithms attempt to understand the meaning of the query and the content being searched, and to provide more relevant results based on that understanding. Semantic search can be seen as an application of the principles of the semantic web to the problem of search. By using semantic technologies such as ontologies, taxonomies, and natural language processing, semantic search can provide more intelligent and accurate search results that better match the user’s intent. Semantic web technology and semantic search are emerging areas of research that have the potential to transform the way we interact with information on the internet. According to [83], semantic web technology enables the creation of machine-readable data that can be easily interpreted by computers, leading to more intelligent and effective search results. In [84] the authors further explain how query technologies can be developed to enable semantic search in the context of the semantic sensor web, where sensors generate large amounts of data that require sophisticated search and analysis tools. Finally, in [85], the authors provide a diachronic study of publications comparing the terms “semantic web” and “web of data” over time and identifying the evolution of these concepts. Together, these works highlight the potential of semantic web technology and semantic search to improve information retrieval and analysis on the web.

• Knowledge Graphs: Knowledge graphs are used to organise and connect information in a way that makes it easier to find and understand. These graphs are used to identify entities, relationships, and concepts related to the user’s query. For example, in [86] methods are proposed for extracting triples from Wikipedia’s HTML tables using a reference knowledge graph.
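To make the idea of content-based retrieval more concrete, the following is a minimal sketch that ranks pages by the textual similarity between a query and each page, using TF-IDF weights and cosine similarity. It is an illustration under stated assumptions, not the method of any of the cited works: the function names, the naive whitespace tokeniser, the smoothed IDF formula, and the three sample pages are all hypothetical.

```python
import math
from collections import Counter


def tokenize(text):
    """Lower-case and split on whitespace (a deliberately naive tokeniser)."""
    return text.lower().split()


def tf_idf_vectors(docs):
    """Return one sparse TF-IDF vector (term -> weight) per document, plus the IDF table."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    # Document frequency: the number of documents in which each term appears.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    # Smoothed inverse document frequency (assumed variant), always positive.
    idf = {t: math.log((1 + n_docs) / (1 + c)) + 1.0 for t, c in df.items()}
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        vectors.append({t: (c / len(tokens)) * idf[t] for t, c in tf.items()})
    return vectors, idf


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query, docs):
    """Return indices of matching documents, most similar first."""
    vectors, idf = tf_idf_vectors(docs)
    q_tokens = tokenize(query)
    # Query terms that never occur in the collection are ignored.
    q_tf = Counter(t for t in q_tokens if t in idf)
    q_vec = {t: (c / len(q_tokens)) * idf[t] for t, c in q_tf.items()}
    scores = [(cosine(q_vec, v), i) for i, v in enumerate(vectors)]
    ranked = sorted(scores, key=lambda s: (-s[0], s[1]))
    return [i for score, i in ranked if score > 0.0]


# Hypothetical page contents, used only for illustration.
PAGES = [
    "greek restaurants in athens with live music",
    "digital music libraries and music retrieval",
    "medical image retrieval with feature extraction",
]
```

With these sample pages, `search("athens restaurants", PAGES)` returns `[0]`, since only the first page shares terms with the query. A personalised engine could extend such a score with user-model features such as location or click history, which this sketch leaves out.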

3.2AI techniques for the UX of recommender systems

Recommender systems provide information in the way that is most appropriate and valuable to their users and prevent them from being overwhelmed by the huge amounts of information that, in the absence of recommender systems, they would have to browse or examine [87]. Recommender systems are a type of information filtering system that aims to predict the preferences or interests of a user and to recommend items or products that the user may be interested in. They are commonly used in e-commerce, social media, online advertising, and other applications where personalised recommendations can enhance the user experience and increase engagement [88].

Artificial intelligence (AI) has been widely used to enhance the user experience (UX) of recommender systems to provide personalised recommendations to users based on their interests, preferences, and behaviour.

There are different types of AI techniques used in recommender systems, depending on the specific approach used to build the system. Some of the common types of AI techniques used in recommender systems are the following:

• Collaborative filtering: Collaborative filtering (CF) is a well-known recommendation method that estimates missing ratings by employing a set of similar users to the active user [89]. Collaborative filtering can be further divided into two sub-types: user-based collaborative filtering and item-based collaborative filtering.

– User-based collaborative filtering is a widespread technique; many researchers consider it important, in memory-based collaborative filtering recommender systems (RS), to calculate the similarities between users accurately in order to find interesting recommendations for active users [90].

– Item-based collaborative filtering utilises the similarity values of items to make predictions for the target item [91]. The basic assumption of item-based CF is that a user prefers items similar to those he or she liked in the past [92].

• Content-based filtering: This approach recommends items to users based on the user’s preferences and characteristics of the items. Content-based filtering uses attributes of the items to recommend similar items to the user. For example, in [93] the proposed content-based recommendation algorithm quantifies the suitability of a job seeker for a job position in a more flexible way, using a structured form of the job and the candidate’s profile, produced from a content analysis of the unstructured form of the job description and the candidate’s CV.

• Hybrid recommender systems: This approach combines collaborative filtering, which uses data on user behaviour to recommend content, with content-based filtering, which uses characteristics of the content itself to make recommendations. For example, in [94], a two-level cascade scheme is presented where a hybrid of one-class classification and collaborative filtering is used to decompose the recommendation problem, with the first level selecting desirable items for each user using a one-class classification scheme trained only with positive examples, and the second level applying collaborative filtering to assign rating degrees to the selected items.

• Machine-learning based recommender systems: This approach employs machine learning algorithms as the main underlying reasoning mechanism. For example, in [95] an artificial immune algorithm has been used in a system for music recommendation. In [96] the recommender system uses “musical genre classification” and “personality diagnosis” as inputs to make recommendations, by employing machine learning to classify musical genres and diagnose personality traits.

• Deep learning-based recommender systems: This approach uses neural networks and deep learning techniques to model complex relationships between users and items to make personalised recommendations. For example, an approach of biomarker-based deep learning is used for personalised nutrition recommendations [97].

• Fuzzy-based recommender systems: Several works focus on managing items’ attributes with fuzzy techniques, strengthening the development of uncertainty-aware content-based recommender systems [98].

• Context-aware recommendation: This approach takes into account additional contextual information, such as the time of day, user location, or weather conditions, to make more relevant recommendations. For example, in [99] environmental and social considerations are incorporated into the portfolio optimisation process and in [100] a tourism recommendation system is context-aware and is also based on a fuzzy ontology.

• Constraint-based recommender systems: These systems constitute a type of recommendation system that uses a set of constraints or rules to generate personalised recommendations for users. These systems are often used in situations where there are specific requirements or constraints that must be considered in the recommendation process, such as limited availability of products or services, specific user preferences or characteristics, or other business or operational considerations. For example, in [101] a constraint-based approach has been used in a job recommender system.

• Knowledge-based recommender systems. These systems rely on explicit knowledge about a user’s preferences, needs, and characteristics, as well as knowledge about the product or service being recommended. This knowledge is usually represented as rules or logic statements that are developed by human experts in the domain. Knowledge-based recommendation systems typically do not rely on machine learning algorithms to generate recommendations. For example, in [102] a knowledge-based TV-shopping application is described that provides recommendations based on users’ current input and historical profile from past interactions.

• NLP, voice synthesisers, and animations in recommender systems. These approaches can enhance the user experience and improve the effectiveness of the recommendations by providing more personalised, natural, and engaging interactions. For example, in [103] the incorporation of animated lifelike agents into adaptive recommender systems has been used to help users buy products in an e-shop in a more effective way.

• Matrix factorisation: This is a type of collaborative filtering that uses matrix factorisation to extract latent factors that explain the user-item interaction. The technique involves decomposing a matrix of user-item interactions into lower-dimensional matrices, and then using those matrices to predict missing entries and recommend new items. For example, in [104], the authors propose a lightweight matrix factorisation algorithm that reduces the number of parameters required for training, while maintaining the accuracy of the recommendations.

• Association rule mining: This technique involves finding patterns in large datasets to make recommendations. Association rule mining helps to uncover associations between different attributes of the system. For example, if users of an e-bookshop who buy book A also tend to buy book B, then the system might recommend book B to users who have purchased book A with a message such as “Users who bought book A were also interested in buying book B”. One such approach uses association rule mining to uncover hidden associations among metadata values and to represent them in the form of association rules, which are then used to present users with real-time recommendations when authoring metadata [105].

• Reinforcement learning: Reinforcement learning (RL) is a machine learning approach that learns by interacting with an environment to achieve a specific goal. The authors in [106] point out that, unlike traditional recommendation methods such as collaborative filtering and content-based filtering, RL is able to handle sequential, dynamic user-system interaction and to take long-term user engagement into account. They also note that, although the idea of using RL for recommendation is not new, it used to have scalability problems.

• Graph-based recommendation: This approach uses graph algorithms to model relationships between users, items, and other entities in the system. The graph structure can be used to generate personalised recommendations and to identify communities of users with similar preferences. For example, [107] presents a comprehensive framework for smoothing embeddings in sequential recommendation models. The approach involves constructing a hybrid item graph that combines sequential item relations based on user-item interactions with semantic item relations based on item attributes.
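As an illustration of the user-based collaborative filtering described in the list above, the following minimal sketch estimates a missing rating as a similarity-weighted average of the ratings given by the most similar users. It is a simplification under stated assumptions rather than the algorithm of any cited work: the rating data, the function names, the choice of cosine similarity over co-rated items, and the neighbourhood size `k` are all hypothetical.

```python
import math

# Hypothetical ratings on a 1-5 scale; a missing entry means "not rated".
RATINGS = {
    "ana":   {"book_a": 5, "book_b": 4, "book_c": 1},
    "boris": {"book_a": 4, "book_b": 5, "book_c": 2, "book_d": 5},
    "chloe": {"book_a": 1, "book_b": 2, "book_c": 5, "book_d": 1},
}


def cosine_similarity(u, v):
    """Cosine similarity computed over the items both users have rated."""
    common = set(u) & set(v)
    if not common:
        return 0.0
    dot = sum(u[i] * v[i] for i in common)
    norm_u = math.sqrt(sum(u[i] ** 2 for i in common))
    norm_v = math.sqrt(sum(v[i] ** 2 for i in common))
    return dot / (norm_u * norm_v)


def predict(ratings, user, item, k=2):
    """Estimate the user's rating for an item from the k most similar raters."""
    neighbours = [
        (cosine_similarity(ratings[user], other_ratings), other_ratings[item])
        for other, other_ratings in ratings.items()
        if other != user and item in other_ratings
    ]
    # Keep the k neighbours with the highest similarity to the active user.
    neighbours.sort(key=lambda pair: pair[0], reverse=True)
    top = [(s, r) for s, r in neighbours[:k] if s > 0.0]
    if not top:
        return None  # No usable neighbour has rated this item.
    # Similarity-weighted average of the neighbours' ratings.
    return sum(s * r for s, r in top) / sum(s for s, _ in top)
```

Calling `predict(RATINGS, "ana", "book_d")` yields an estimate between the two neighbours' ratings, weighted towards that of `boris`, whose past ratings resemble those of `ana` more closely. Practical systems often use mean-centred measures such as Pearson correlation instead of raw cosine similarity; that refinement is omitted here for brevity.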

3.3AI techniques for the UX of intelligent help systems and virtual assistants

AI has played a significant role in enhancing the user experience of intelligent help systems and virtual assistants. Recently, many virtual assistants or intelligent personal assistants have been developed by leading software companies to enhance the user experience in many ways. The term “virtual assistant” is often used interchangeably with “intelligent personal assistant” to refer to AI programs designed to assist users. However, “virtual assistant” is a more general term that can refer to any type of AI assistant, while “intelligent personal assistant” specifically refers to AI assistants that are designed to interact with users in a personalised way, often through voice commands or natural language processing.

Natural Language capabilities in virtual assistants have progressed enormously during the past decade. However, the notion of having assistants with capabilities to communicate in natural language started many decades ago with the program named “ELIZA” which performed natural language processing (NLP) and was developed in the mid-1960s by Joseph Weizenbaum at MIT [108]. ELIZA was one of the first attempts to create a chatbot or conversational agent using pattern recognition and simple language processing techniques. ELIZA works by identifying keywords and phrases in the user’s input and then generating a response based on a set of pre-programmed rules or scripts. The program is designed to mimic the behaviour of a psychotherapist, and as such, it often responds to questions with open-ended questions of its own, encouraging the user to continue talking and exploring their thoughts and feelings. While ELIZA’s approach to natural language processing is now considered limited, it remains an important landmark in the history of AI and NLP and has influenced subsequent research in these fields.
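The keyword-and-script mechanism described above can be sketched in a few lines. The following is an ELIZA-style illustration, not a reconstruction of Weizenbaum’s original program: the rules, the response templates, and the default reply are hypothetical, and the pronoun transformations used by the original are omitted.

```python
import re

# Hypothetical script: (keyword pattern, response template), tried in order.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\b(mother|father|family)\b", re.IGNORECASE),
     "Tell me more about your {0}."),
]
# Open-ended fallback used when no keyword is found.
DEFAULT_REPLY = "Please go on."


def respond(user_input):
    """Return the reply of the first rule whose keyword pattern matches."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Reuse the captured fragment of the user's own words in the reply.
            return template.format(match.group(1).lower())
    return DEFAULT_REPLY
```

For instance, `respond("I am feeling tired.")` returns `"How long have you been feeling tired?"`, and an input containing none of the keywords falls back to the open-ended `"Please go on."`, which mirrors the way such a program keeps the user talking.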

The latest Intelligent Personal Assistants (IPAs), such as Amazon Alexa [109], Microsoft Cortana [110], Google Assistant [111], or Apple Siri [112], allow people to search for various subjects, schedule a meeting, or make a call from their car or house hands-free, no longer needing to hold any mobile devices [113]; they also communicate with users in natural language. There is also ChatGPT, a large language model trained by OpenAI and designed to answer a wide range of questions and engage in natural language conversations with users [114]. In [115] recent research and specific, complex IPAs are presented. The authors highlight that an IPA must fit the users’ profiles and learn their context (family and social), taking into account their emotional states, to resolve any potential request, and that an IPA should be able to recognise users’ goals, act proactively, and interact with other applications to accomplish them.

Virtual assistants are similar to intelligent help systems in that they are both AI-empowered programs designed to provide assistance to users. Both types of systems can answer questions, provide information, and offer guidance in a variety of areas. However, virtual assistants often have more advanced features, such as voice recognition technology and natural language processing, which allow them to interact with users in a more conversational and personalised way. Additionally, virtual assistants can often control smart home devices, make phone calls, engage in conversations, and perform other tasks beyond just providing information or guidance. AI-empowered intelligent help systems and virtual assistants can help users quickly and easily find answers to their questions or get assistance with complex tasks. These systems can also proactively offer suggestions or assistance based on user behaviour, such as identifying common issues or errors and providing solutions.

Since the inception of intelligent help systems, they have been broadly categorised as either passive or active help systems [116].

• Passive help systems require that the user requests help explicitly from the system. For example, UC is a passive help system for Unix users that can answer users’ questions about Unix commands in natural language [117].

• Active help systems should guide and advise a user like a knowledgeable colleague or assistant. For example, AI has been used to generate hypotheses about users while they are interacting with the Unix operating system so that it may provide spontaneous assistance [118, 119].

There are several types of AI that can be used to improve the user experience of intelligent help systems and virtual assistants. The following are some AI techniques that have been successful in intelligent help systems and virtual assistants:

• Goal recognition: Goal recognition refers to the ability to recognise the intent of an acting agent through a sequence of observed actions in an environment. While research on this problem gathered momentum as an offshoot of plan recognition, recent research has established it as a major subject of research in its own right, leading to numerous new approaches that both expand the expressivity of the domains in which goal recognition can be performed and substantially advance the state of the art on established domain types [120].

• Plan recognition: Plan recognition in intelligent help systems and virtual assistants refers to the ability of these AI systems to infer the user’s plans or goals based on their actions and behaviour. Plan recognition is closely related to goal recognition; here the aim of the observer is to recognise how the acting agent intends to reach its goal [121]. This involves analysing and interpreting the user’s interactions with the system, such as their voice commands, search queries, and other input, to identify their intentions and anticipate their future actions. Plan recognition is a critical component of intelligent help systems and virtual assistants as it enables the system to provide more personalised and context-aware responses and recommendations, improving the overall user experience. Various techniques and algorithms are employed to implement plan recognition in intelligent help systems and virtual assistants. For example, a theoretical treatment of plan recognition is presented in [122], which describes the use of an interval-based logic of time to represent actions, atomic plans, non-atomic plans, action execution, and simple plan recognition. In another example, plan-based techniques for automated concept recovery are presented in [123]. The authors of [124] note that a recogniser is deemed superior to prior works if it can recognise plans faster or in more intricate settings, although this often results in a trade-off between speed and richness or computation time.

• Plan generation: Plan generation in intelligent help systems and virtual assistants refers to the ability of these AI systems to generate plans or sequences of actions that help the user achieve their goals or complete their tasks. This requires analysing the user’s input and context to determine the appropriate course of action and generating a plan that is most likely to achieve the desired outcome. Plan generation is a necessary component of intelligent help systems and virtual assistants as it enables the system to provide more proactive and effective assistance to the user. Various techniques and algorithms, such as rule-based systems, decision trees, and reinforcement learning, are employed to implement plan generation in these systems. For example, in [125], to achieve the design target of responding accurately to complex users’ queries that involve the interconnection of multiple commands with various options, the system requires a detailed representation of dynamic knowledge about command behaviour and a planning mechanism that can integrate the knowledge into a cohesive solution.

• Error diagnosis in intelligent help systems and virtual assistants concerns the ability of these AI systems to identify, diagnose, and help rectify errors that the user may have made in the context of the problem domain. Rasmussen points out that human errors are not events for which objective data can be collected; instead, they should be considered occurrences of man-task mismatches which can only be characterised by a multifaceted description [126]. An AI-empowered system performs error diagnosis by monitoring and analysing the user’s input, together with contextual information about the domain of use, to determine whether there are any errors or inconsistencies. If there are, it identifies the root cause of the problem so as to provide advice on rectifying errors of which the user may or may not have been aware. For example, in a compiler architecture for domain-specific type error diagnosis of programming languages, the authors define error contexts as a way to control the order in which solving and blaming proceeds [127]. Error diagnosis is an important AI-based operation in intelligent help systems and virtual assistants as it enables the system to identify a problem and offer helpful guidance to the user in resolving it. As an AI method, error diagnosis involves using AI algorithms and techniques to analyse data and identify patterns that can help detect errors or anomalies in a system. This can be applied in various domains, such as healthcare, finance, and education, to improve the efficiency and accuracy of processes. Various techniques and algorithms, such as rule-based systems, cognitive theories, decision trees, and machine learning, are employed to implement error diagnosis in these systems. For example, in [128] pronunciation error detection and diagnosis is achieved through cross-lingual transfer learning of non-native acoustic modeling.

• Knowledge Representation and Reasoning: This AI technique involves representing knowledge in a way that can be easily processed and reasoned about by the system. By using knowledge graphs, ontologies, and other techniques, intelligent help systems and virtual assistants can store and retrieve information in a way that allows for more efficient and effective assistance. For example, in [129] an intelligent help system sets the design target to advise users on alternative plans that would achieve the same goal more efficiently and along the way, a representation of plans, subplans, goals, actions, properties and time intervals is developed to recognise plans and then to advise the user.

• Natural Language Processing (NLP): This AI technology allows intelligent help systems and virtual assistants to understand and respond to natural language input from users. NLP can be used to create chatbots that help users with their queries and problems, as has been the case in intelligent help systems [130] and in more recent virtual assistants [131]. Chin [132] argues that intelligent help systems should not merely react to user input but actively offer information, correct misconceptions, and reject unethical requests, and to do so, they must function as intelligent agents utilising Natural Language Processing and Plan suggestion situations.

• Machine Learning (ML): This type of AI can help intelligent help systems and virtual assistants learn from user interactions and improve their responses over time. ML algorithms can be used to personalise the user experience and provide more relevant help and recommendations. Supervised learning algorithms can be trained on labelled data to classify instances as normal or faulty, while unsupervised learning algorithms can be used to identify anomalies or outliers in the data. For example, Microsoft Cortana [110] uses machine learning to personalise user experiences, understand user queries, and provide relevant information and recommendations.

• Neural Networks: Neural networks can be used to learn the relationship between the observed actions and the underlying goals or intentions from a set of training examples. Once trained, neural networks can be used to infer the most likely goals or intentions based on the observed actions. Neural networks can also be used to diagnose errors or faults in complex systems, such as manufacturing processes or healthcare systems. By analysing sensor data and other inputs, neural networks can learn to predict when errors or faults are likely to occur.

– Convolutional Neural Networks (CNNs) are a type of neural network with a feature extraction part in which the specified kernels are convolved over the data [133]. CNNs consist of several layers of interconnected nodes, each of which performs a specific type of operation on the input data. One important advantage of CNNs is their ability to learn features automatically from raw input data, without requiring explicit feature engineering by humans. CNNs have been used effectively in a wide range of applications, including image and video recognition and natural language processing, all of which may be used in virtual assistants and intelligent help systems, among other interactive application domains.

– Recurrent Neural Networks (RNNs) are a type of neural network that extend a Feedforward Neural Network (FNN) model, which learned an appropriate set of features while it was learning how to predict the next word in a sentence. The extension allows RNNs to handle arbitrary context lengths [134]. One important advantage of RNNs is their ability to handle input sequences of varying length. This renders them a powerful method in applications such as speech recognition, where the length of the audio input may vary from one sample to the next. For example, Google Assistant [111] uses neural networks to improve its speech recognition and Natural Language Understanding capabilities. It uses a combination of CNNs, RNNs, and sequence-to-sequence models to interpret user queries and provide natural language responses.

– Deep Neural Networks (DNNs) are a type of artificial neural network designed to model complex relationships between inputs and outputs. A DNN is a collection of neurons organised in a sequence of multiple layers, where neurons receive as input the neuron activations from the previous layer, and perform a simple computation (e.g. a weighted sum of the input followed by a nonlinear activation) [135]. The depth of the network refers to the number of layers it has, which can range from a few to hundreds or even thousands of layers. DNNs are used in a wide range of applications, including image recognition, speech recognition, natural language processing, and autonomous vehicles, to name a few. DNNs have the ability to learn complex features from large datasets and thus constitute a powerful method for solving many real-world problems. For example, Apple Siri [112] uses DNNs to recognise speech and understand user queries. It also uses recurrent neural networks (RNNs) to generate natural language responses.

• Cognitive theories are important for understanding how people learn, solve problems, and make decisions, and can be applied to the design of intelligent help systems and virtual assistants. These cognitive theories can inform the design of intelligent help systems and virtual assistants by guiding the selection of appropriate instructional strategies, the organisation and presentation of information, and the design of user interactions. For example, in [136] a cognitive theory has been employed to achieve automatic error diagnosis for users interacting with an operating system and offer spontaneous assistance.

• Rule-based Systems: Rule-based systems can be used to represent a set of rules that define the relationship between the observed actions and the underlying goals or intentions. By applying these rules to the observed actions, rule-based systems can infer the most likely goals or intentions. Rule-based systems that use a knowledge base and a set of inference rules can also be used to diagnose errors or faults. Moreover, they can be used to represent a set of rules that define the relationship between the initial state, the desired goal state, and the sequence of actions that should be performed to achieve the goal. By applying these rules to the initial state, rule-based systems can generate a plan for achieving the desired goal. For example, [137] describes a rule-based assistant system for managing the clothing cycle and in [138] an ontology and rule-based intelligent patient management assistant is described.

• Fuzzy logic: Fuzzy logic is a mathematical approach to deal with uncertain or imprecise data that are common in intelligent help systems and virtual assistants. It allows for degrees of membership in a set, rather than a strict binary membership. For example, [139] describes an intelligent fuzzy-based emergency alert generation to assist persons with episodic memory decline problems.

• Hierarchical Task Networks (HTNs): HTNs can be used to represent a plan as a hierarchy of subgoals and decompose the plan into a set of smaller, more manageable tasks. By recursively decomposing the plan into subgoals, HTNs can generate a plan that achieves the desired goal. For example, in [140] it is pointed out that integrating task and motion planning through the use of multiple mobile robots has become a popular approach to solve complex problems requiring cooperative efforts, as advancements in mobile robotics, autonomous systems, and artificial intelligence continue to rise.

• Reinforcement Learning: Reinforcement learning is a branch of machine learning concerned with using experience gained through interacting with the world and evaluative feedback to improve a system’s ability to make behavioural decisions [141]. For example, [142] shows how Reinforcement Learning is helping to solve Internet-of-Underwater-Things problems.

• Speech Recognition: This AI technology can be used to enable voice-based interactions with intelligent help systems and virtual assistants. This can be especially useful for users who have difficulty typing or navigating a graphical user interface. For example, the voice recognition and natural language understanding (NLU) capabilities of Amazon Alexa [109] are powered by machine learning algorithms, which enable it to interpret user commands and respond with appropriate actions.

• Computer Vision: This type of AI can be used to enable visual interactions with intelligent help systems and virtual assistants. For example, a camera could analyse the user’s face during the interaction to identify emotions and detect displeasure that may need attention. As another example, [143] describes research on the detection of road potholes using computer vision and machine learning approaches to assist the visually challenged.
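
As an illustration of the recursive decomposition performed by HTNs, a minimal Python sketch is given below. The task names and the METHODS table are hypothetical and are not taken from [140]. The sketch also assumes a single decomposition method per compound task, whereas full HTN planners typically choose among several methods subject to preconditions on the current state.

```python
# Hypothetical decomposition methods: each compound task maps to an
# ordered list of subtasks. Tasks without an entry are primitive actions.
METHODS = {
    "help_user_print": ["open_document", "configure_printer", "send_to_printer"],
    "configure_printer": ["select_printer", "set_paper_size"],
}


def decompose(task, methods):
    """Recursively expand a task into an ordered list of primitive actions."""
    if task not in methods:
        # Primitive task: it cannot be decomposed further.
        return [task]
    plan = []
    for subtask in methods[task]:
        plan.extend(decompose(subtask, methods))
    return plan


plan = decompose("help_user_print", METHODS)
print(plan)
```

Under these assumptions, the top-level goal is expanded depth-first, so the resulting plan lists the primitive actions in the order in which they would be executed.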

3.4AI techniques for the UX of intelligent tutoring systems

The User Experience (UX) in Intelligent Tutoring Systems (ITS) refers to how users perceive and interact with the system, including its interface, content, and features in the context of learning a particular domain being taught by the ITS. A well-designed ITS should provide an engaging and effective learning experience that meets the user’s individual needs and preferences.

AI has been used to improve the user experience of intelligent tutoring systems (ITS) by providing personalised and adaptive learning experiences for individual learners. These systems use AI algorithms to analyse user data and behaviour to identify learning strengths and weaknesses and to provide personalised instruction and feedback. This can be done by recording and assessing the user’s progress, identifying areas of weakness, and providing targeted feedback and additional resources to help the user improve.

AI can also be used to analyse user data to identify patterns and insights that can help improve the effectiveness of the tutoring system in many aspects. For example, AI can be used to analyse student engagement, the effectiveness of different teaching strategies, and the impact of different resources on learning outcomes.

To improve UX in ITS, AI can be used to personalise learning content and adapt the system’s feedback to the user’s performance. AI can also analyse the user’s behaviour and adjust the system’s interface and content to enhance user engagement and motivation. For example, an ITS can use affective computing to understand the user’s input and provide feedback with empathy, which can help the user feel more engaged and connected to the system.

There are different types of AI that can be used to improve the UX of intelligent tutoring systems. Some important approaches are the following:

• Adaptive hypermedia (AH) is a field of research that combines artificial intelligence (AI) and human-computer interaction (HCI) to develop systems that can dynamically adapt to the needs and preferences of individual users. AH systems use a combination of user modelling, content modelling, and adaptive navigation to provide personalised information and services to users. More specifically, adaptive hypermedia is an AI method that uses cognitive modelling, machine learning algorithms and other AI techniques to analyse data on users’ behaviour and draw inferences about their respective level of knowledge, preferences, and interests, and then adapts the content and navigation of a website or application to meet their needs. For example, in [144] fuzzy logic has been used for student modelling so that adaptive navigation techniques can automatically adapt the pace of tutoring to the individual strengths and weaknesses of students. In another instance, adaptive hypermedia is used to adapt the tutoring of algebra-related domains to the individual needs of students [145].

• Student modelling is the process of creating and maintaining a model of a student’s knowledge, skills, preferences, and other relevant attributes. The goal of student modelling is to provide personalised support and feedback to students, based on their individual needs and abilities in the domain being taught. It involves the use of artificial intelligence techniques to create and maintain the model. Some of the AI methods used in student modelling include machine learning algorithms, rule-based systems, cognitive theories and fuzzy logic. For example, in [146] an Artificial Immune System-based student modelling approach is described that adapts tutoring to the learning styles that fit the students’ needs. In another instance, student modelling is used to identify areas where learners have weaknesses so that the intelligent tutoring system (ITS) may provide appropriate instruction to help them improve their performance in the domain of English as a second language [147]. A more comprehensive review of student modelling approaches and functions is presented in [148].

• Learning Analytics. A new research discipline, termed Learning Analytics, is emerging. It examines the collection and intelligent analysis of learner and instructor data with the goal of extracting information that can render electronic and/or mobile educational systems more personalised, engaging, dynamically responsive and pedagogically efficient [149].

• Error diagnosis is an important function of Intelligent Tutoring Systems that uses AI techniques to diagnose the problematic parts of students’ behaviour or beliefs that lead to errors in assessment tests and reveal misconceptions. Deep error diagnosis frequently requires conflict resolution, as an erroneous behaviour may be explained by many conflicting hypotheses about the student’s possible misconceptions. As an example, in an English tutor, conflicting hypotheses about students’ mistakes are evaluated in real time to perform a deep error diagnosis. This approach aims to identify the underlying weaknesses of students that may be causing the errors [150].

• Knowledge Representation is an important component of Intelligent Tutoring Systems that allows the representation of a domain being taught to be used by several AI inference mechanisms to find out the progress or weaknesses of students. For example, in [151], Fuzzy Cognitive Maps are used for the Domain Knowledge Representation of an Adaptive e-Learning System.

• Fuzzy logic. This is a type of AI that has been used in many aspects of Intelligent Tutoring Systems. For example, fuzzy logic has been used to determine the knowledge level of students of programming courses in an e-learning environment [152], and in another instance it has been used for student-player modelling in an educational adventure game [153].

• Machine learning: This is a type of AI that involves the use of algorithms and statistical models to analyse data and make predictions or decisions without being explicitly programmed. Machine learning can be used in intelligent tutoring systems to personalise the learning experience for each student by analysing their learning data, preferences, and behaviour. For example, [154] describes a machine learning-based framework for the initialisation of student models in Web-based Intelligent Tutoring Systems of various domains, such as mathematics and language learning.

• Deep Learning: Deep learning is a subset of machine learning that uses multi-layer neural networks, which are loosely inspired by the workings of the human brain. Deep learning can be used in Intelligent Tutoring Systems at many levels and for varying functionalities. For example, in [155] neural networks have been employed to perform visual-facial emotion recognition so that the tutoring system may analyse the face of a student through a camera, infer how the student feels about the lesson, and respond in an appropriate manner.

• Cognitive theories: This is a type of AI that is designed to simulate human thought processes, including perception, reasoning, and learning. Cognitive computing can be used in intelligent tutoring systems to provide more sophisticated feedback and guidance to students, based on their individual learning styles and cognitive abilities. For example, in [156] student modelling is performed using cognitive theories to identify cognitive, personality and performance issues in a collaborative learning environment for software engineering. In another instance, a cognitive model of students’ memory is individualised in an Intelligent Tutoring System, so that the system may personalise the presentation and sequencing of revisions of teaching material depending on when the student is likely to have forgotten the material taught in preceding sections of the ITS [157].

• Decision Theories: Decision theories are a set of frameworks and models used to make rational and informed decisions in the face of uncertainty. These theories aim to identify the best course of action among a set of alternatives, taking into account the potential outcomes and their probabilities.

An example of an intelligent medical tutor that uses decision theories is described in [158]. This tutor offers adaptive tutoring on atheromatosis to users with varying levels of medical knowledge and computer skills, based on their interests and backgrounds. This adaptivity is achieved through user modelling, which draws on stereotypical knowledge of potential users, including patients, their relatives, doctors, and medical students. The system uses a hybrid inference mechanism that combines decision-making techniques with rule-based reasoning of double stereotypes.

• Affective computing: This type of AI focuses on recognising human emotions and generating responses that contain emotion-like feedback on the part of the computer. One way that affective computing can be used in ITS is through emotion recognition technologies. These technologies can detect and interpret facial expressions, vocal tone, and other aspects of the users’ behaviour to determine a student’s emotional state. An illustration of this process is the use of a voice recognition system and analysis of keyboard actions to perform emotion recognition of students in an Intelligent Tutoring System (ITS) [159]. The system takes the recognised emotions into consideration and utilises a cognitive theory of affect to generate emotion-based responses that are appropriate for the learner’s current affective state. These emotions are conveyed to the learner through an animated agent, which mimics human empathy by adjusting the tone and pitch of its voice while providing recommendations for specific learning materials or modifying the level of instruction difficulty. In a different scenario, described in [160], the cognitive theory of affect is utilised to identify the emotions of students instead of generating emotional responses. This approach is implemented within an Intelligent Tutoring System designed as an educational game. Three animated agents are employed to simulate the roles of a classmate, tutoring coach, and examiner, and they provide appropriate responses based on the recognised emotions of the student.

• Mobile and smartphone senses: Mobile and smartphone devices provide a wealth of sensors that can accumulate users’ responses which, in turn, can be exploited for analysing users’ behaviour in the context of an intelligent tutoring system. Mobile devices can provide profound reasoning [161] concerning users through smartphone senses [162]. One such example is the sentiment mapping through smartphone multi-sensory crowdsourcing described in [163].

• Engagement and immersive technologies: The engagement and immersion of learners in computer-based tutoring are important features that learning applications seek in order to maximise educational effectiveness [164]. To achieve these, there has been a growing emphasis on recognising human emotions in interactive computer-based learning applications, while using multi-modal user interfaces, natural language and virtual reality environments in conjunction with AI techniques.

• Educational games and edutainment: The technology of game playing has been blended with AI techniques, and together they constitute important parts of educational software in the context of Intelligent Tutoring Systems, providing engaging challenges to students so that they stay active and alert while learning. These activities are used both to educate and to entertain and are often termed edutainment technology. For example, in [165] a software version of the well-known game “Guess who” is developed to teach students parts of English as a second language. In [166], a 3D game is developed for the purposes of education, and a fuzzy-based reasoning mechanism is used to dynamically adjust the difficulty level of the game itself, so that students may enjoy the game and attend to the teaching material [167] without encountering difficulties or becoming bored with the game. In another instance, an educational adventure game encompasses adaptive scenarios to accommodate the individual learning needs of each student [168].

• Collaborative Learning: The social context of a class, in which students interact and collaborate with classmates, is important in the area of Intelligent Tutoring Systems for enhancing learning experiences. For example, in [169] an intelligent recommender system for trainers and trainees has been incorporated in a collaborative learning environment in order to recommend, to both students and teachers, teams of students that appear to have complementary qualities for educational collaboration in project assignments.

• Social Networks: Social networks may extend collaborative learning to social media platforms. For example, [170] describes an Intelligent Tutoring System over a social network for mathematics learning.

• Natural language processing (NLP): This is a type of AI that enables computers to understand, interpret, and generate human language. NLP can be used in intelligent tutoring systems to provide students with feedback on their written or spoken responses, and to help them practise their language skills in a more natural way. In this approach, AI-empowered tutoring systems can use NLP and machine learning algorithms to understand user input and provide more human-like responses and feedback, thereby achieving a more natural and interactive learning experience. For example, [171] focuses on combining various deep learning approaches to help students accelerate the learning process through automatic feedback, and also to support teachers by pre-evaluating free text and suggesting corresponding scores or grades.
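
To make the decision-theoretic selection mentioned in the list above more concrete, the following minimal Python sketch chooses among alternatives by expected utility, that is, the sum of each outcome's utility weighted by its probability. The tutoring actions, probabilities and utility values are hypothetical illustrations. The sketch does not reproduce the hybrid inference mechanism of [158], which combines decision-making techniques with rule-based reasoning.

```python
def expected_utility(outcomes):
    """Return the sum of probability * utility over one action's outcomes."""
    return sum(probability * utility for probability, utility in outcomes)


def best_action(alternatives):
    """Return the name of the alternative with the highest expected utility."""
    return max(alternatives, key=lambda name: expected_utility(alternatives[name]))


# Hypothetical tutoring actions, each with (probability, utility) pairs
# describing its possible outcomes. The probabilities of each action sum to 1.
ALTERNATIVES = {
    "show_hint": [(0.7, 6.0), (0.3, 2.0)],
    "repeat_lesson": [(0.9, 4.0), (0.1, 1.0)],
    "advance_to_next": [(0.4, 10.0), (0.6, 0.0)],
}

choice = best_action(ALTERNATIVES)
print(choice)
```

With these illustrative numbers, the action with the largest possible payoff is not selected, because its favourable outcome is assumed to be less likely than that of the alternatives.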