Bladder Cancer Health Literacy: Assessing Readability of Online Patient Education Materials

Abstract

BACKGROUND:

Understanding of health-related materials, termed health literacy, affects decision making and outcomes in the treatment of bladder cancer. The National Institutes of Health recommend writing education materials at a sixth-seventh grade reading level. The goal of this study is to assess readability of bladder cancer materials available online.

OBJECTIVE:

The goal of this study is to characterize available information about bladder cancer online and evaluate readability.

METHODS:

Materials on bladder cancer were collected from the American Urological Association’s Urology Care Foundation (AUA-UCF) and compared to the top 50 websites by search engine results. Resources were analyzed using four different validated readability assessment scales. The mean and standard deviation of the readability scores were calculated, and a two-tailed t test was used to assess for significance between the two sets of patient education materials.

RESULTS:

The average readability of AUA materials was 8.5 (8th–9th grade reading level). For the top 50 websites, average readability was 11.7 (11–12th grade reading level). A two-tailed t test between the AUA and top 50 websites demonstrated statistical significance between the readability of the two sets of resources (P = 0.0001), with the top search engine results being several grade levels higher than the recommended 6–7th grade reading level.

CONCLUSIONS:

Most health information provided by the AUA on bladder cancer is written at a reading level that aligns with the reading ability of most US adults, whereas the top websites from search engine results exceed the average reading level by several grade levels. By focusing on health literacy, urologists may contribute to lowering barriers to health literacy and to reducing health care expenditure and perioperative complications.

INTRODUCTION

A bladder cancer diagnosis has heavy implications for the physical, psychological, and emotional aspects of patients' lives. In this digital age, it is common for patients to explore treatment options online before making their decisions [1]. Nine in ten US adults use the internet, and an estimated fifty-nine percent of US adults use the internet to search for health-related information [1, 2]. As the number of internet users continues to rise, it is clear that urologists must become aware of health information online pertaining to the various treatment options for bladder cancer and their associated morbidities and financial costs.

Health literacy is defined as the “ability to obtain, comprehend, and act on medical information” [3]. Health literacy requires an individual to have functional, communicative, and critical skills. Functional skills include reading and writing. Communicative skills refer to extracting and applying new information in varying and evolving situations. Critical skills allow for the analysis of information and reflecting on advice [3]. The National Assessment of Health Literacy estimates that one-third of patients in the United States have limited health literacy, with the average U.S. adult reading at a seventh to eighth grade reading level [3]. The National Institutes of Health (NIH) and American Medical Association (AMA) recommend patient education materials be written at a sixth-seventh grade reading level to reach a greater patient base and account for the national average reading level [4]. The Joint Commission recommends that patient education materials be written at a fifth-grade level [5]. When applied to the urological surgery patient population, health literacy is crucial for comprehending the nature, risks, and benefits of surgical procedures, adhering to strict perioperative rules, and making complex care decisions about interventions or lack thereof. Poor health literacy may lead to patient nonadherence, higher healthcare costs, increased mortality, and poor surgical outcomes [6, 7]. Low health literacy is associated with $106–236 billion annually in increased hospitalizations [6].

With an abundance of patient education materials available online, a nonprofit organization termed Health on the Net Foundation (HON) was created in 1995 to combat false health information online [8]. The Health on the Net Foundation Code of Conduct (HONcode) was created by the HON Foundation to grant certification to human health online content containing reliable and credible health information [9]. The certification is designed to set basic ethical standards in the presentation of health information and clearly identify the source and purpose of the data on patient-centered websites [9]. HONcode certification is reviewed by a committee of medical professionals [8]. The HONcode does not rate the quality of information provided by websites and requires an application in order to be considered for certification [10].

Prior health literacy studies have looked at broad urological topics, collecting patient education materials from websites of major urologic organizations [11] and analyzing readability of the articles [4, 12]. Additionally, a 2018 study by Azer et al. reviewed accuracy and readability of kidney and bladder cancer websites [13]. This study determined readability of kidney and bladder cancer websites to fall between 10–11th grade reading levels [13]. A second study by Pruthi et al. analyzed resources on bladder, prostate, kidney, and testicular cancer from major urologic organizations, determining readability to average between an 11–12th grade reading level [12]. However, these studies have not isolated bladder cancer resources or compared materials from the American Urological Association’s Urology Care Foundation with internet search engine results. This study aims to analyze patient resources specific to bladder cancer by characterizing online materials, assessing for HONcode certification, and evaluating for readability.

MATERIALS AND METHODS

Free patient education resources on bladder cancer were collected from the Urology Care Foundation, the official foundation of the American Urological Association (AUA), and from the top 50 websites from search engine results queried on July 25, 2020. A total of 8 articles related to bladder cancer patient education were obtained from the AUA website. The search term “bladder cancer” was queried using Google. The browser was cleared of prior search history, cookies, location tools, and user account information to limit potential result bias. The first 50 websites were used, with materials within one click of opening the website collected for data analysis. Only the main text of the article was included, with advertisements, external links, references, contact forms, and addresses excluded from the analysis. Sponsored or duplicate websites were not included in the analysis. Only articles written in English were included in the analysis.

The text collected from each website was analyzed for readability using four different validated scales to maintain reliability in the readability assessment process [14]. These scales included the Coleman-Liau Index, which assesses average number of letters and average number of sentences [4]; the SMOG (Simplified Measure of Gobbledygook) Readability Formula, which analyzes average number of words with three or more syllables and average number of sentences [15]; the Gunning Fog Index, which looks at number of sentences, number of words, and number of words with three or more syllables [16]; and the Flesch-Kincaid Grade Level, which looks at average number of syllables per word and average number of words per sentence [16].
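As a rough illustration of how these four scales turn text counts into a grade level, the sketch below uses the commonly published forms of each formula. The study does not state which software or formula variants were used, so the coefficients, function names, and example counts here are assumptions for illustration rather than the authors' implementation. Counting letters, syllables, and sentences from raw text is left out because it depends on tokenization choices.

```python
import math


def coleman_liau(letters, words, sentences):
    """Coleman-Liau Index from letters and sentences per 100 words."""
    letters_per_100 = letters / words * 100
    sentences_per_100 = sentences / words * 100
    return 0.0588 * letters_per_100 - 0.296 * sentences_per_100 - 15.8


def smog(polysyllables, sentences):
    """SMOG grade from the count of words with three or more syllables."""
    return 1.0430 * math.sqrt(polysyllables * 30 / sentences) + 3.1291


def gunning_fog(words, sentences, complex_words):
    """Gunning Fog Index from sentence length and share of complex words."""
    return 0.4 * (words / sentences + 100 * complex_words / words)


def flesch_kincaid(words, sentences, syllables):
    """Flesch-Kincaid Grade Level from words per sentence and syllables per word."""
    return 0.39 * words / sentences + 11.8 * syllables / words - 15.59


# Hypothetical counts for a 100-word passage (not taken from the study).
counts = dict(letters=450, words=100, sentences=5, syllables=150, polysyllables=10)

scores = {
    "Coleman-Liau": coleman_liau(counts["letters"], counts["words"], counts["sentences"]),
    "SMOG": smog(counts["polysyllables"], counts["sentences"]),
    "Gunning Fog": gunning_fog(counts["words"], counts["sentences"], counts["polysyllables"]),
    "Flesch-Kincaid": flesch_kincaid(counts["words"], counts["sentences"], counts["syllables"]),
}
mean_grade = sum(scores.values()) / len(scores)
```

Averaging the four scores, as in the last line, mirrors the way a single mean readability value can be reported per document.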

The top 50 websites were further classified by category including institutional or reference (academic and hospital centers, government sponsored, and evidence-based), commercial (private practice or funded), charitable (non-profit and recognized medical society), or personal sites. Each website was also assessed for HONcode certification.

Statistics were calculated using JMP 15.1 Software (SAS Institute Inc, SAS Campus Drive, Cary, NC) and Microsoft Excel Version 16.35 (Microsoft Corporation, One Microsoft Way, Redmond, WA). Mean readability and standard deviation were calculated for each of the four assessment tools. Statistical analysis was performed to assess for significance between the AUA and top 50 websites using a two-tailed t test. To further analyze the top 50 websites by category, a one-way analysis of variance (ANOVA) was used to assess for significance. The threshold for statistical significance was set at P = 0.05. Unless specified, data are reported as mean ± standard deviation (SD).
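To make the group comparison concrete, the sketch below computes a t statistic and degrees of freedom from summary statistics alone. It assumes Welch's unequal-variance form, which is one common choice; the text does not say whether the authors used a pooled or unequal-variance test in JMP, so this is not presented as their calculation. The inputs are the overall means, standard deviations, and sample sizes reported in the Results. A P value would additionally require the t distribution, which the Python standard library does not provide, so it is not computed here.

```python
import math


def welch_t_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Return (t, df) for Welch's t test given each group's mean, SD, and size."""
    v1 = sd1 ** 2 / n1  # squared standard error of group 1
    v2 = sd2 ** 2 / n2  # squared standard error of group 2
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    # Welch-Satterthwaite approximation for the degrees of freedom
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# AUA materials (n = 8) versus top 50 websites (n = 50), values from the Results.
t_stat, df = welch_t_from_summary(8.5, 0.8, 8, 11.7, 2.9, 50)
```

A negative t statistic here simply reflects that the first group (AUA) has the lower mean grade level.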

RESULTS

The mean readability scores of resources on the AUA website include a Coleman-Liau Index of 9.4±0.7 (9th grade), SMOG Readability of 7.5±0.5 (7th–8th grade), Gunning Fog Index of 8.9±1.2 (8th–9th grade), and Flesch-Kincaid of 8.1±0.8 (8th grade). The average readability of AUA materials by the four assessment tools was 8.5±0.8 (8th–9th grade).

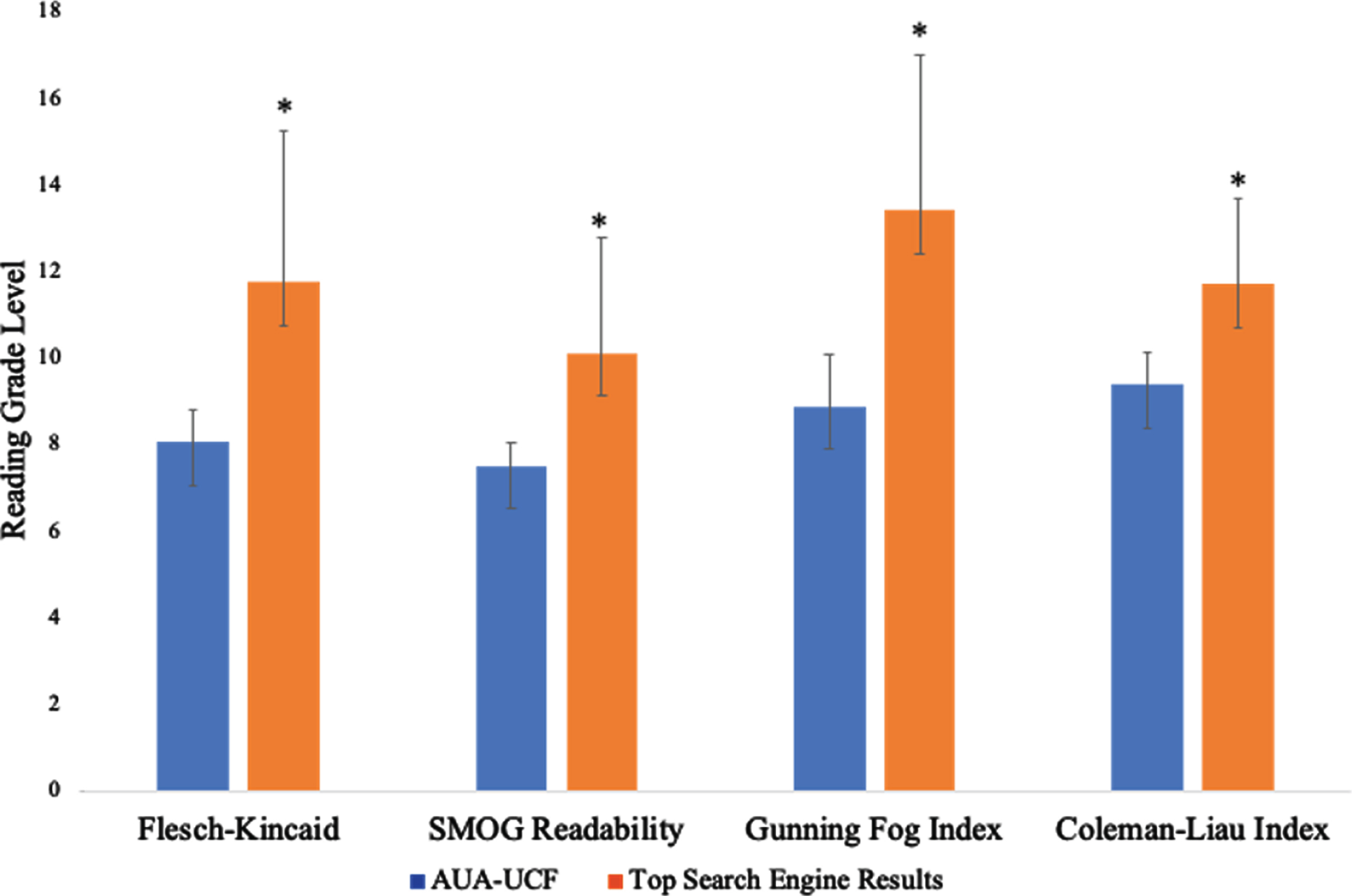

The top 50 websites by search engine results are listed in Table 2. Scores included a Coleman-Liau Index of 11.7±2.0 (11–12th grade), SMOG Readability of 10.1±2.7 (10th grade), Gunning Fog Index of 13.4±3.6 (13–14th grade), and Flesch-Kincaid of 11.8±3.5 (11–12th grade). The average readability of these websites by the four assessment tools was 11.7±2.9 (11–12th grade). Results are displayed in Table 1. A two-tailed t test comparing the average readability between the AUA (8.5±0.8) and top search engine results (11.7±2.9) demonstrated statistical significance (P = 0.0001). Readability by assessment tool for the AUA-UCF and top search engine results is displayed in Fig. 1.

Table 2

Readability and Health on the Net Foundation code status by website category

| Category | N (%) | HON, N (%) | CLI (SD) | SMOG (SD) | GFI (SD) | FKGL (SD) |
|---|---|---|---|---|---|---|
| Instit/Ref | 33 (66) | 4 (44) | 11.7 (1.9) | 10.0 (2.3) | 13.2 (3.7) | 11.6 (3.1) |
| Commercial | 10 (20) | 5 (56) | 12.5 (2.2) | 11.5 (3.5) | 15.4 (4.3) | 13.5 (4.6) |
| Charitable | 7 (14) | 0 (0) | 10.3 (1.8) | 8.6 (2.3) | 11.7 (3.0) | 10.0 (2.7) |

HON – Health on the Net Foundation code status, Instit/Ref – Institutional/Reference, SMOG – Simplified Measure of Gobbledygook, CLI – Coleman-Liau Index, GFI – Gunning Fog Index, FKGL – Flesch-Kincaid Grade Level.
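As a simple consistency check on the tabulated values, the category means in Table 2 can be combined into sample-size-weighted overall means and compared with the top 50 website means reported in Table 1 (11.7, 10.1, 13.4, and 11.8 for CLI, SMOG, GFI, and FKGL). The sketch below is illustrative arithmetic only; the variable and function names are hypothetical, and because the inputs are already rounded to one decimal place, agreement is expected only after rounding.

```python
# (n, CLI, SMOG, GFI, FKGL) per category, copied from Table 2
categories = {
    "Instit/Ref": (33, 11.7, 10.0, 13.2, 11.6),
    "Commercial": (10, 12.5, 11.5, 15.4, 13.5),
    "Charitable": (7, 10.3, 8.6, 11.7, 10.0),
}
scales = ("CLI", "SMOG", "GFI", "FKGL")


def weighted_means(groups):
    """Combine per-category means into overall means, weighting by category size."""
    total_n = sum(row[0] for row in groups.values())
    return {
        scale: sum(row[0] * row[i + 1] for row in groups.values()) / total_n
        for i, scale in enumerate(scales)
    }


overall = weighted_means(categories)
rounded = {scale: round(value, 1) for scale, value in overall.items()}
```

Under these inputs, each weighted mean rounds to the corresponding overall value in Table 1, which suggests the two tables describe the same 50 websites consistently.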

Table 1

Average readability of AUA-UCF and top search engine bladder cancer resources

| | AUA-UCF | Top 50 Websites | P value |
|---|---|---|---|
| N | 8 | 50 | |
| Coleman-Liau Index, mean (SD) | 9.4 (0.7) | 11.7 (2.0) | P = 0.008 |
| SMOG Readability Formula, mean (SD) | 7.5 (0.5) | 10.1 (2.7) | P = 0.008 |
| Flesch-Kincaid Grade Level, mean (SD) | 8.1 (0.8) | 11.8 (3.5) | P = 0.004 |
| Gunning Fog Index, mean (SD) | 8.9 (1.2) | 13.4 (3.6) | P = 0.002 |

AUA – American Urological Association, UCF – Urology Care Foundation, SMOG – Simplified Measure of Gobbledygook.

Fig. 1

Readability of AUA-UCF and Top 50 Website Results by Readability Tools. Bar graph showing the mean reading grade level for online bladder cancer patient information from the American Urological Association-Urology Care Foundation (AUA-UCF) website (orange bars) and the top 50 search engine websites (blue bars). Reading grade level was assessed with four validated metrics. SMOG, Simplified Measure of Gobbledygook. *p < 0.05.

The majority of the top 50 websites were categorized as institutional/reference (33/50, 66%), followed by commercial (10/50, 20%) and charitable (7/50, 14%). None of the websites were categorized as personal, and this category was excluded from further analysis. Only 9/50 (18%) websites were HONcode certified, all in the commercial (5/9, 56%) and institutional/reference (4/9, 44%) categories (Table 2). HONcode certification was not associated with a difference in readability (P = 0.41). A subgroup analysis (Table 2) was performed between the institutional/reference, commercial, and charitable websites. ANOVA demonstrated significant differences in mean readability scores across website categories (P = 0.0003).

An example of an AUA-UCF material written at a 9th grade reading level includes the description of cystoscopy: “this very common test lets your doctor see inside your bladder using a thin tube that has a light and camera” [17]. In contrast, a 12th grade reading level example from one of the top 50 websites from Cancer.Net reads: “a surgeon inserts a cystoscope through the urethra into the bladder” [18]. While both accurately describe the procedure, the AUA-UCF material includes simple, commonly used words.

DISCUSSION

The American Medical Association, U.S. Department of Health and Human Services, and Healthy People 2020 have made specific aims to improve identification and accommodation of patients with low health literacy [4]. Patient understanding can be improved by providing patient education materials written at a grade level that is accessible to patients across a wide spectrum of education and reading levels. In this study, bladder cancer patient online education materials were examined using a single search term, allowing for specificity of search results. With more than half of US adults utilizing the internet for patient education materials, this investigation aimed to analyze and characterize the readability of health information online related to bladder cancer and its treatment to best serve this unique patient population [1, 2].

The readability tests utilized in this analysis were chosen as they are among the most commonly used readability formulas and have been validated by several prior studies [19]. These tests analyze word complexity (based on number of syllables), character count, and sentence length. The Coleman-Liau Index analyzes number of characters and sentences per 100 words. This index is often chosen for its simplicity and ease in assessing readability of health-related materials [19]. The Flesch-Kincaid Grade Level calculates results based on total number of words, sentences, and syllables with results corresponding to an overall grade level [20, 21]. This tool is often selected for analyzing readability given its accuracy and simplicity [19]. The Gunning Fog Index analyzes average sentence length and proportion of complex words with 3 or more syllables in comparison to regular words to determine readability [20, 21]. The Gunning Fog Index is a preferred tool for assessing health and medicine information materials [19]. The SMOG Readability Formula is calculated by number of sentences and words with 3 or more syllables [20, 21]. Higher scores correspond with lower readability. SMOG aims at predicting 100% comprehension, whereas other formulas aim for 50–75% comprehension [22]. SMOG Readability Formula has been referred to as the preferred readability tool for assessing consumer-oriented health material given reliability and consistency in testing [19, 23]. As supported by previous readability studies, multiple readability tools were utilized to maintain reliability of the results [19, 21, 22, 24].

After readability analysis with these four validated instruments, this study demonstrated that bladder cancer materials on the AUA website are written one to two grade levels above the recommended sixth-seventh grade reading level, falling at an 8–9th grade reading level. The AUA is the largest urologic association worldwide and strives to provide educational materials for both providers and patients [25]. With free access to websites such as the AUA’s Urology Care Foundation, providers are able to confidently provide patient education materials on bladder cancer that reach a large range of reading levels. Of note, the online materials provided by the AUA’s Urology Care Foundation mirrored the print materials and can be printed by medical professionals to distribute to patients during office visits. In contrast to the reading level of the Urology Care Foundation materials, results from the top 50 search engine websites demonstrated greater deviation from the average American reading level, on the order of at least three grade levels (average 11–12th grade reading level). The difference between these two sets of resources was statistically significant (P < 0.01). The findings of this study align with prior studies investigating readability levels of urological materials [4, 12, 13]; however, this is the first study that has specifically investigated bladder cancer materials and compared top 50 website results with one of the most widely utilized urology foundations [25].

Delving further into general organization of websites on bladder cancer, broad topic categories could be described as: defining bladder cancer, signs and symptoms, causes and risk factors, diagnostic testing, and management. Based on knowledge of readability tools and how reading grade levels are scaled (number of words per sentence, polysyllables, and character count) these categories were further explored to determine areas that were more or less complex in respect to health literacy. While many websites tended to define and explain in detail a bladder cancer overview with definitions and symptoms, complexity of the text increased when naming diagnostic testing and management. For example, hematuria was often defined as “bleeding in the urine,” assisting patients in understanding the terminology [26]. In contrast, diagnostic testing was often listed without further descriptors, such as “urinalysis with microscopy,” “voided urinary cytology,” and “cystoscopy” [27]. While names of these particular tests may be appropriate, adding a sentence to describe these tests may aid patients with lower health literacy.

Additionally, this study demonstrated variation between website categories, with the highest reading level identified in commercial websites and lower reading levels within charitable websites. This study also found that the majority of websites (82%) were not HONcode certified, a result similar to that seen in prior studies [28]. Websites holding this certification were commercial and institutional/reference websites. However, it is noteworthy that certification requires an application process and fee, possibly limiting non-profit organizations and websites with limited budgets [29]. HONcode certification was not associated with a difference in readability, also similar to prior studies [8]. This study demonstrates the need for continued efforts in improving patient education materials to meet a broader patient base; however, guiding patients to resources accessible online written at or near the recommended grade level, such as the AUA and similar health professional-led patient-focused non-profit organizations, may contribute to greater understanding of their diagnosis and potential treatment options.

Readability refers to understanding of a text [30]. The average US resident reads at a 7–8th grade reading level [3], and the average Medicare beneficiary reads at a 5th grade reading level [31]. A lower reading level has been advocated because “far more people have been put off reading a text because they could not understand it” than because the text was viewed as beneath their reading level [32]. Health literacy barriers are found among all ethnicities, races, and social classes; however, there has been a link between health literacy and education and income levels [31]. Prior studies have demonstrated that a large majority of patients understand patient education materials written at a lower reading grade level. In a diabetes patient education study by Overland et al., patients were randomized to read food care information at 6th, 9th, or 11th grade reading levels. 60% of patients understood the 6th grade materials, 21% understood the 9th grade materials, and 19% understood the 11th grade materials independently [33]. Other studies have demonstrated similar results [31, 34]. Thus, while online patient education materials can be a valuable resource, it is important to note that these materials are often written at a level much higher than the reading ability of many, if not most, patients. The National Institutes of Health (NIH) note that many meritorious patient education campaigns are designed at a 9–10th grade reading level and are written for the general public. However, defining the target audience of the patient education materials may assist in communication and target messages towards patients with low health literacy. The NIH notes that readers appreciate materials “conveyed simply and clearly.” It is advised that patients who desire greater detail and in-depth information can be directed to these sources [35].

Online patient materials, both from the Urology Care Foundation and top websites, should be modified to the recommended 6–7th grade reading level. Additionally, many of the websites, including the AUA-UCF and top search results, supplement their materials with photographs or videos on bladder cancer along with treatment options, which may also aid patient understanding and should continue to be utilized in the development of patient education materials. References or links to resources containing greater detail should be available for patients who desire in-depth explanations at higher reading grade levels. These improvements can enhance patient awareness and understanding of their diagnosis and options, increase patient engagement, and improve physician-patient communication [36].

Beyond patient education materials in their written forms, several other validated methods may be employed to enhance patient education and understanding in the clinic. Examples include using nonmedical language during patient counseling, explaining difficult topics slowly or repeating information multiple times, visual aids, audiovisual resources, written supplements, and the teach-back method. Among these resources, the method most proven to enhance and ensure patient understanding is the teach-back method. In this style of patient education, the provider explains information to the patient using plain language and asks the patient to restate the information provided in their own words. If the explanation is incorrect, the provider may then choose to rephrase or repeat the information or may employ different strategies of communication with the patient. The process of repeating is continued as appropriate until the patient is able to offer the correct explanation. Once correct, the provider should acknowledge and reinforce appropriate understanding [37].

This study had certain limitations, as only online, written materials were assessed. In clinical practice, many methods of communication are often utilized, including direct discussion with the patient, audio, images and figures, or video, which may add to the greater picture of patient understanding. Additionally, as only English-language materials were included, results are biased toward English-speaking patients. More studies are needed to assess how implementing materials written at the recommended reading level may lead to improved surgical outcomes in the bladder cancer patient population.

CONCLUSION

Patient education materials online related to bladder cancer on the American Urological Association (AUA) website are written slightly above the reading ability of most U.S. adults, by one to two grade levels. However, websites on bladder cancer obtained from top 50 website results are written above the recommended readability by at least three grade levels, often far higher. Urologists should assist patients by improving and providing patient education materials to fit a wider range of reading abilities, at a recommended sixth-seventh grade reading level. Additionally, evidence-based teaching methods including the teach-back method may be employed to further enhance physician-patient communication. With a greater focus on health literacy and readability of patient education materials, urologists may enhance provider-patient relationships and contribute to improved surgical outcomes and lowered health care expenditures.

ACKNOWLEDGMENTS

The authors have no acknowledgements.

FUNDING

The authors report no funding.

AUTHOR CONTRIBUTIONS

Lauren E. Powell: conception, performance of work, interpretation and analysis of data, writing the article; Theodore I. Cisu: conception, performance of work, interpretation and analysis of data, writing the article; Adam P. Klausner: conception, performance of work, interpretation and analysis of data, writing the article.

ETHICAL CONSIDERATIONS

Given the nature of this study where no human subject research was performed, the study did not require consent or IRB review.

CONFLICTS OF INTEREST/COMPETING INTERESTS

Lauren E. Powell has no conflicts of interest to report; Theodore I. Cisu has no conflicts of interest to report; Adam P. Klausner has no conflicts of interest to report.

REFERENCES

[1] Fox S. The Social Life of Health Information. Pew Internet and American Life Project. May 2011. Available at: https://assets0.flashfunders.com/offering/document/e670ae83-4b9a-40f0-aac7-41b64dbc7068/PIP_Social_Life_of_Health_Info.pdf. Accessed January 8, 2020.

[2] Internet/Broadband Fact Sheet. Pew Research Center. Published June 12, 2019. Available at: https://www.pewresearch.org/internet/fact-sheet/internet-broadband/. Accessed January 8, 2020.

[3] Kutner M, Greenberg E, Jin Y, Paulsen C. The health literacy of America’s adults. National Center for Education Statistics. Published September 6, 2006. Available at: https://nces.ed.gov/pubs2006/2006483.pdf. Accessed January 8, 2020.

[4] Colaco M, et al. Readability Assessment of Online Urology Patient Education Materials. The Journal of Urology. (2013);189(3):1048–52.

[5] Stossel LM, et al. Readability of Patient Education Materials Available at the Point of Care. J Gen Intern Med. (2012);27(9):1165–70.

[6] Ismail IK, et al. An Evaluation of Health Literacy in Plastic Surgery Patients. Plastic and Reconstructive Surgery. (2015);136(4S-1):55–9.

[7] De Oliveira GS, et al. The impact of health literacy in the care of surgical patients: a qualitative systematic review. BMC Surg. (2015);15:86.

[8] Bompastore NJ, Cisu T, Holoch P. Separating the Wheat From the Chaff: An Evaluation of Readability, Quality, and Accuracy of Online Health Information for Treatment of Peyronie Disease. Urology. (2018);118:59–64.

[9] Boyer C. When the quality of health information matters: Health on the Net is the Quality Standard for Information You can Trust. Health on the Net Foundation. 2013 Sep. Available at: https://www.hon.ch/Global/pdf/TrustworthyOct2006pdf. Accessed August 12, 2020.

[10] Health on the Net. HONcode Certification Introduction. November 5, 2019. Available at: https://www.hon.ch/HONcode/. Accessed August 12, 2020.

[11] Urology Care Foundation. Educational Materials. Available at: https://www.urologyhealth.org/educational-materials?topic_area=1156|&product_format=466%7c&language=1122%7c. Accessed July 25, 2020.

[12] Pruthi A, et al. Readability of American Online Patient Education Materials in Urologic Oncology: a Need for Simple Communication. Urology. (2015);85(2):351–6.

[13] Azer SA, et al. Accuracy and Readability of Websites on Kidney and Bladder Cancers. J Cancer Educ. (2018);33(4):926–44.

[14] Jayaratne YS, Anderson NK, Zwahlen RA. Readability of websites containing information on dental implants. Clin Oral Implants Res. (2014);25:1319–24.

[15] McLaughlin GH. SMOG grading—a new readability formula. J Reading. (1969);12:639–64.

[16] Swanson CE, Fox HG. Validity of Readability Formulas. Journal of Applied Psychology. (1953);37(2):114–8.

[17] Diagnosing & Treating Muscle-Invasive Bladder Cancer. Urology Care Foundation. Available at: file:///Users/student/Downloads/Muscle-Invasive-Bladder-Cancer-Fact-Sheet-1.pdf. Accessed August 23, 2020.

[18] Bladder Cancer: Types of Treatment. Cancer.Net. March 2019. Available at: https://www.cancer.net/cancer-types/bladder-cancer/types-treatment. Accessed August 23, 2020.

[19] Robinson E, McMenemy D. To be understood as to understand: A readability analysis of public library acceptable use policies. Journal of Librarianship and Information Science. (2020);52(3):713–25.

[20] Zhou EP, Kiwanuka E, Morrissey PE. Online patient resources for deceased donor and live donor kidney recipients: a comparative analysis of readability. Clinical Kidney Journal. (2018);11(4):559–63.

[21] Pruthi A, et al. Readability of American Online Patient Education Materials in Urologic Oncology: A Need for Simple Communication. Urology. (2015);85(2):351–6.

[22] Kiwanuka E, Mehrzad R, Prsic A, Kwan D. Online Patient Resources for Gender Affirmation Surgery. Annals of Plastic Surgery. (2017);79(4):329–33.

[23] Fitzsimmons PR, et al. A readability assessment of online Parkinson’s disease information. J R Coll Physicians Edinb. (2010);40:292–6.

[24] Jayaratne YS, Anderson NK, Zwahlen RA. Readability of websites containing information on dental implants. Clin Oral Implants Res. (2014);25:1319–24.

[25] AUA History. American Urological Association. 2020. Available at: https://www.auanet.org/about-the-aua/history-of-the-aua. Accessed August 12, 2020.

[26] Zorn KC, Gautam G, Davis CP, Stöppler MC. Bladder Cancer (Cancer of the Urinary Bladder). MedicineNet. Web. Available at: https://www.medicinenet.com/bladder_cancer/article.htm. Accessed November 4, 2020.

[27] Steinberg GD, Schwartz BF. Bladder Cancer. Medscape. May 6, 2020. Available at: https://emedicine.medscape.com/article/438262-overview. Accessed November 4, 2020.

[28] Koo K, Yap RL. How readable is BPH treatment information on the internet? Assessing barriers to literacy in prostate health. Am J Mens Health. (2017);11:300–7.

[29] Health on the Net. Steps in the Process. March 2020. Available at: https://www.hon.ch/en/certification/websites.html. Accessed August 12, 2020.

[30] Badarudeen S, Sabharwal S. Assessing readability of patient education materials: Current role in orthopaedics. Clinical Orthopaedics and Related Research. (2010);468:2572–80.

[31] Stossel LM, et al. Readability of Patient Education Materials Available at the Point of Care. J Gen Intern Med. (2012);27(9):1165–70.

[32] Seely J. The Oxford Guide to Effective Writing and Speaking. Oxford: Oxford University Press. (2013).

[33] Overland JE, Hoskins PL, McGill MJ, Yue DK. Low literacy: a problem in diabetes education. Diabet Med. (1993);10(9):847–50.

[34] Baker GC, Newton DE, Bergstresser PR. Increased readability improves the comprehension of written information for patients with skin disease. J Am Acad Dermatol. (1988);19(6):1135–41.

[35] Clear & Simple. National Institutes of Health. December 18, 2018. Web. Available at: https://www.nih.gov/institutes-nih/nih-office-director/office-communications-public-liaison/clear-communication/clear-simple. Accessed November 4, 2020.

[36] Hutchinson N, Baird GL, Garg M. Examining the Reading Level of Internet Medical Information for Common Internal Medicine Diagnoses. The American Journal of Medicine. (2016);129(6):637–9.

[37] DeWalt DA, et al. Developing and testing the health literacy universal precautions toolkit. Nurs Outlook. (2011);59:85–94.