Towards a richer model of deliberation dialogue: Closure problem and change of circumstances

Abstract

Models of deliberative dialogue are fundamental for developing autonomous systems that support human practical reasoning. The question discussed in this paper is whether existing models are able to capture the complexity and richness of natural deliberation. In real-world contexts, circumstances relevant to the decision can change rapidly. We reflect on today’s leading model of deliberation dialogue and propose an extension to capture how newly exchanged information about changing circumstances may shape the dialogue. Moreover, in natural deliberation, a dialogue may be successful even if no decision on what to do has been made. We propose a set of criteria to address the problem of when to close off the practical reasoning phase of a dialogue. We discuss some measures for evaluating the success of a dialogue after closure, and we present some initial efforts to introduce the new deliberation features within an existing model of agent dialogue. We believe that our extended model may help to represent the richness of natural deliberative dialogue that has yet to be addressed in existing models of agent deliberation.

1. Introduction

Practical reasoning is the inferential process of arriving at a conclusion to take action. Deliberative dialogue serves as a rational method of deciding what to do; thus rational deliberation cannot be understood without the fabric of practical reasoning that holds it together. Deliberation is often taken to be a solitary process in which an individual arrives at an intelligent conclusion on how to act by forming a goal and collecting data. Even individual deliberation can be seen as a process in which one “thinks aloud” by asking questions and then answering them oneself. By answering such questions in a dialogue format, one can clarify one’s goals in light of one’s present situation and its potential future consequences. Practical reasoning in this context is seen as a form of argumentation. Group deliberations are also common: typically an individual is not acting in isolation, and the goal of the participants is to decide together what to do.

Practical reasoning is foundational to current research initiatives in computing, especially in multi-agent systems; e.g., support for electronic democracy [6] and reasoning about patients’ medical treatments [3,5]. The capability of the user, or of the agents within the system, to pose critical questions and reply to counter-arguments for a proposed plan of action is vital for these applications, as such deliberations are only useful if weak points in a proposal can be questioned. Often, however, there is a gap between agent-based and human reasoning. Formal models of argumentation-based dialogue exploit natural ways for humans to represent justifications and conflicts in making decisions [3,7], but these models often depart from natural argumentation to focus on logical formalisms. In so doing, they make simplifying assumptions such as common knowledge among the participants in a dialogue. In general, it is important to consider situations in which participants’ goals differ, even though they have identified the need to coordinate activities. Even if we assume that there is a common goal, common knowledge is a very strong assumption. Models such as those proposed by Atkinson and Bench-Capon [2], Black and Atkinson [3] and Kok et al. [7] rely on there being a common understanding of the problem at hand, with the debate focussed on which solution is best given the preferences of all parties engaged. Even if participants wish to share knowledge prior to the dialogue, in any non-trivial situation they do not necessarily know what is relevant to the problem at hand. Sharing all knowledge is infeasible, and in some domains local policies may constrain information sharing; e.g., between a non-governmental aid organization and a military coalition [10]. Modeling information sharing during deliberation is, therefore, essential to capturing the richness of real-world dialogues.
There is also a lack of rigorous evaluation of the effectiveness of these models of dialogue. Research typically proves some formal properties and then illustrates applications through examples (cf. [12]). For argumentation models to be deployed in real-world applications where humans and autonomous agents collaborate in making decisions, these systems must resemble natural argumentation and their benefits must be evaluated. While formal systems are developed from models of human deliberation, there is little effort in the literature to understand whether these systems are useful, and to verify to what extent the richness of human practical reasoning can be represented by them. This paper addresses part of a broader question: are existing models of autonomous deliberative dialogue able to capture the complexity and richness of realistic natural deliberation?

We discuss the leading model of deliberation dialogue in Artificial Intelligence [8]. This is the right type of framework for representing how practical reasoning is used in realistic cases, but at least two important phases need further investigation. First, in real-world scenarios, circumstances relevant to the decision can change rapidly, and participants may share individual knowledge during the dialogue that changes the circumstances. It is vitally important for an agent that is to deliberate intelligently to be aware of these changes; thus we propose an extension to the argumentation phase of the dialogue that takes changes in circumstances into account during the course of the deliberation itself. Second, in many instances, a deliberative dialogue can be very successful educationally in revealing the arguments and positions on both sides, even though it did not succeed in determining what to do. This raises the problem of when practical reasoning should be closed off and how to establish the success of a dialogue. We propose a solution to this closure problem by presenting ten criteria that determine when a deliberation has been successful in realistic cases.

In this paper, we show that these two phases of natural dialogue are, to a certain extent, addressed in the argumentation-based deliberation system presented in [12]. To do so, we analyze the empirical evaluation presented in our previous work in light of the new considerations presented in this paper. We show that this model is able to capture information sharing that changes circumstances during the dialogue, and that this is fundamental for obtaining more successful outcomes in situations where new information is continually streaming in. We also show that some of our measures may prove useful for evaluating at least two of the criteria proposed for successful dialogue. We argue that this dialogue system is a first attempt to fill some of the gaps in autonomous deliberation models identified in this research.

2. The McBurney, Hitchcock and Parsons model

In this section we briefly present the leading model of deliberation dialogue by McBurney, Hitchcock and Parsons [8]. For convenience, hereafter we refer to this model as the MHP model.

Deliberation in an MHP dialogue is seen as a resource-bounded procedure that starts from an initial situation where a choice has to be made and moves towards a closing stage where the decision is arrived at on the basis of pro and con arguments put forward in a middle stage [15]. According to the MHP model, deliberation is a formal procedure that goes through eight stages. The dialogue has an opening stage, where the question of what is to be done is raised, and a closing stage, where the sequence of deliberation ends. The question raised at the opening stage is called the governing question, because this single question governs the whole dialogue, including the opening and closing stages.

1. The opening stage. The governing question is raised.

2. The inform stage. Next there is a discussion of goals, any constraints on the actions being considered, and any external facts relevant to the dialogue.

3. The propose stage. At this stage proposals for possible action are brought forward.

4. The consider stage. Comments are made on the proposals that have been brought forward. At this stage, arguments for and against proposals are considered.

5. The revise stage. At this stage, the goals, the actions that have been proposed, and the facts that have been collected and that are relevant to the deliberation may be revised. Another type of dialogue, for example a persuasion dialogue, may be embedded in the deliberation dialogue.

6. The recommend stage. Participants can recommend a particular action and the other participants can either accept or reject this option.

7. The confirm stage. All participants must confirm their acceptance of one particular option for action in order for the dialogue to terminate.

8. The close stage. At this point the dialogue terminates.
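The eight stages lend themselves to a simple encoding. The following Python sketch is our own illustration under stated assumptions, not part of the MHP specification: `Stage` and `is_well_formed` are hypothetical names, and the three ordering rules checked are a deliberate simplification (the MHP protocol itself has richer rules). Consistent with the model, only the opening and closing stages are mandatory, and the middle stages may repeat.

```python
from enum import Enum, auto


class Stage(Enum):
    """The eight stages of an MHP deliberation dialogue."""
    OPEN = auto()
    INFORM = auto()
    PROPOSE = auto()
    CONSIDER = auto()
    REVISE = auto()
    RECOMMEND = auto()
    CONFIRM = auto()
    CLOSE = auto()


def is_well_formed(trace: list[Stage]) -> bool:
    """Check a sequence of stages against a few simplified ordering rules.

    Assumed rules (a simplification, not the full MHP protocol):
    - the dialogue starts with OPEN, and OPEN occurs exactly once;
    - CLOSE, if present, occurs once and is the final stage;
    - every CONFIRM is preceded by at least one RECOMMEND.
    """
    if not trace or trace[0] is not Stage.OPEN or trace.count(Stage.OPEN) != 1:
        return False
    if Stage.CLOSE in trace and (trace.count(Stage.CLOSE) != 1 or trace[-1] is not Stage.CLOSE):
        return False
    seen_recommend = False
    for stage in trace:
        if stage is Stage.RECOMMEND:
            seen_recommend = True
        elif stage is Stage.CONFIRM and not seen_recommend:
            return False
    return True


# Only the opening and closing stages are mandatory; middle stages may repeat.
minimal = [Stage.OPEN, Stage.CLOSE]
cyclic = [Stage.OPEN, Stage.INFORM, Stage.PROPOSE, Stage.CONSIDER, Stage.REVISE,
          Stage.PROPOSE, Stage.CONSIDER, Stage.RECOMMEND, Stage.CONFIRM, Stage.CLOSE]
print(is_well_formed(minimal), is_well_formed(cyclic))  # True True
```

A trace that has not yet reached CLOSE is accepted, so the same check can be applied to a dialogue still in progress.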

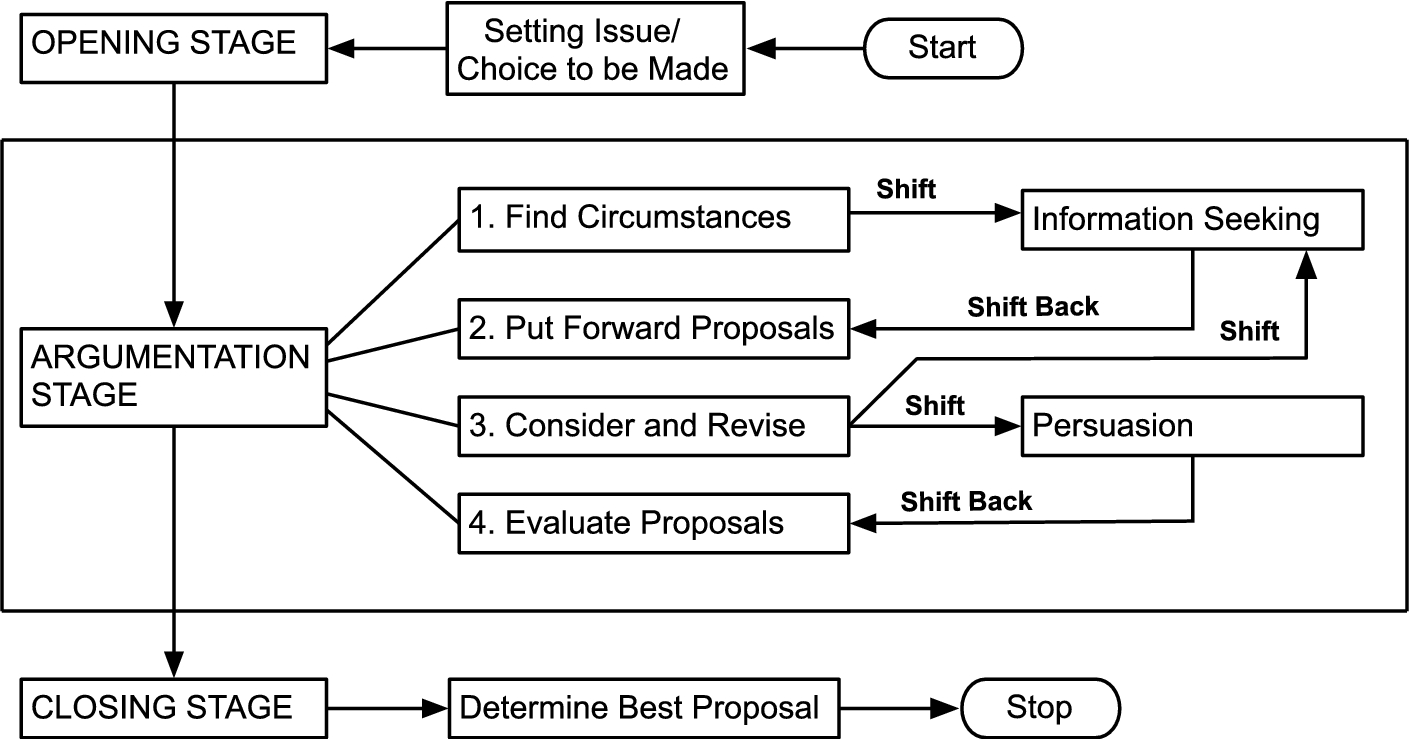

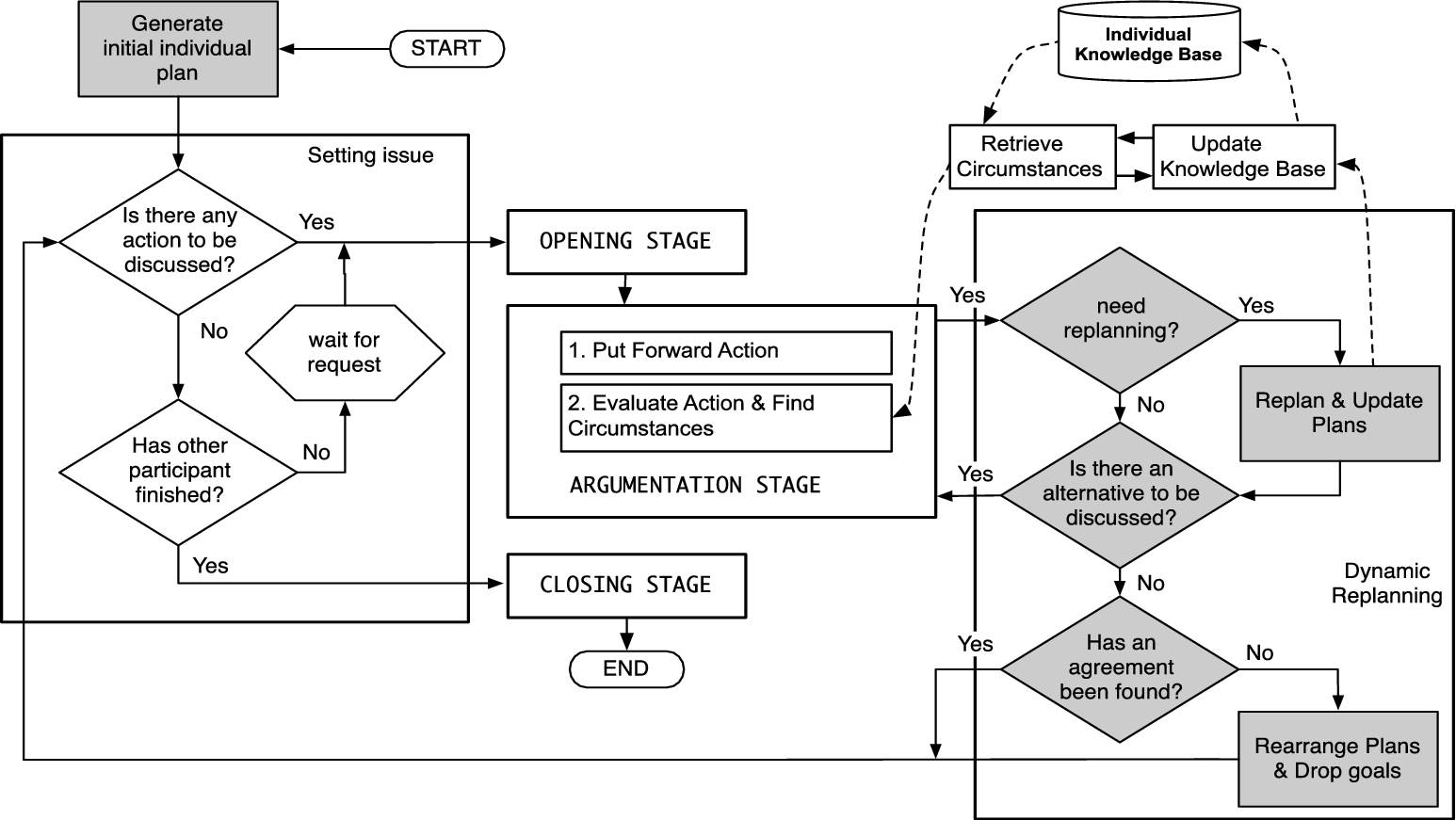

Fig. 1.

Outline of the McBurney, Hitchcock and Parsons (MHP) model.

The structure of the MHP dialogue is shown in Fig. 1. In the opening stage the goal of the dialogue is defined: the participants are to decide on the best course of action. Once the opening stage is in place, the dialogue moves forward to a more complex middle stage that has several components. For convenience, we call this middle stage the argumentation stage. Whether the case is one of single-agent or group deliberation, the first step in this middle stage is to inform the agent(s) of what the circumstances are. Thus, an information-seeking dialogue needs to be embedded in the deliberation dialogue. For the purpose of an abstract normative model of rational deliberation, the information-seeking part needs to be seen as a distinct element, and because of its importance it is placed at the beginning of the middle stage.

Once the information is in, the second part of the middle stage is the putting forward of proposals by all parties to the deliberation dialogue. The dialogue then moves to the consider and revise stages. During these stages each party probes the proposals put forward by the other parties, by raising questions about possible problems with a proposal and even by attacking it with counterarguments. In response to an attack on its proposal, an agent may concede and retract its commitment, or refine the proposal.

3. Deliberation with open knowledge base

The MHP protocol can represent many examples of realistic argumentation in deliberation dialogue. One problem with this model, however, is that it does not take into account changes in the circumstances of the deliberation. In this section, we discuss how to extend the MHP model to make the role of the knowledge base of the agents involved more explicit.

3.1. The need for an open knowledge base

Consider the following dialogue between Alice and Bob extracted from an extended example in Walton [13].

Example 1. In this dialogue, the governing question and the inform stage are motivated by the fact that Alice and Bob would like to find a suitable house in Windsor. At the propose stage they narrowed their choices down to two: a condo and a two-story house. Both Alice and Bob place a high value on health, cost, and the environment. A segment of the consider stage of the dialogue follows:

| Speaker | Utterance |
|---|---|
| Bob | With the condo I can ride to work on my bike in 35 minutes. The bike path goes right along the river straight to my office. |
| Alice | The problem with the two-story house is that it is twice as far away as the two other homes. It would take over an hour for you to ride the bike in. |
| Bob | If we lived there, I would have to drive the car to work most days. |
| Alice | Riding the bike to work is more environmentally friendly. Also, you really like riding your bike to work, and it is good exercise. |
| Bob | Yes, and there is also the factor of the cost of gas. The cost of driving to work adds up to a significant amount over a year. Also, neither of us likes spending a lot of time in the car. It is wasted time, and getting exercise is a good way to spend that time. When you spend your whole day working on a computer, it is really important to get some exercise to break up your day. |
| Alice | That is offset by the lower taxes of the two-story house, because it is outside the city, even though it is a larger house. |
| Bob | I say let’s make an offer on the condo. |

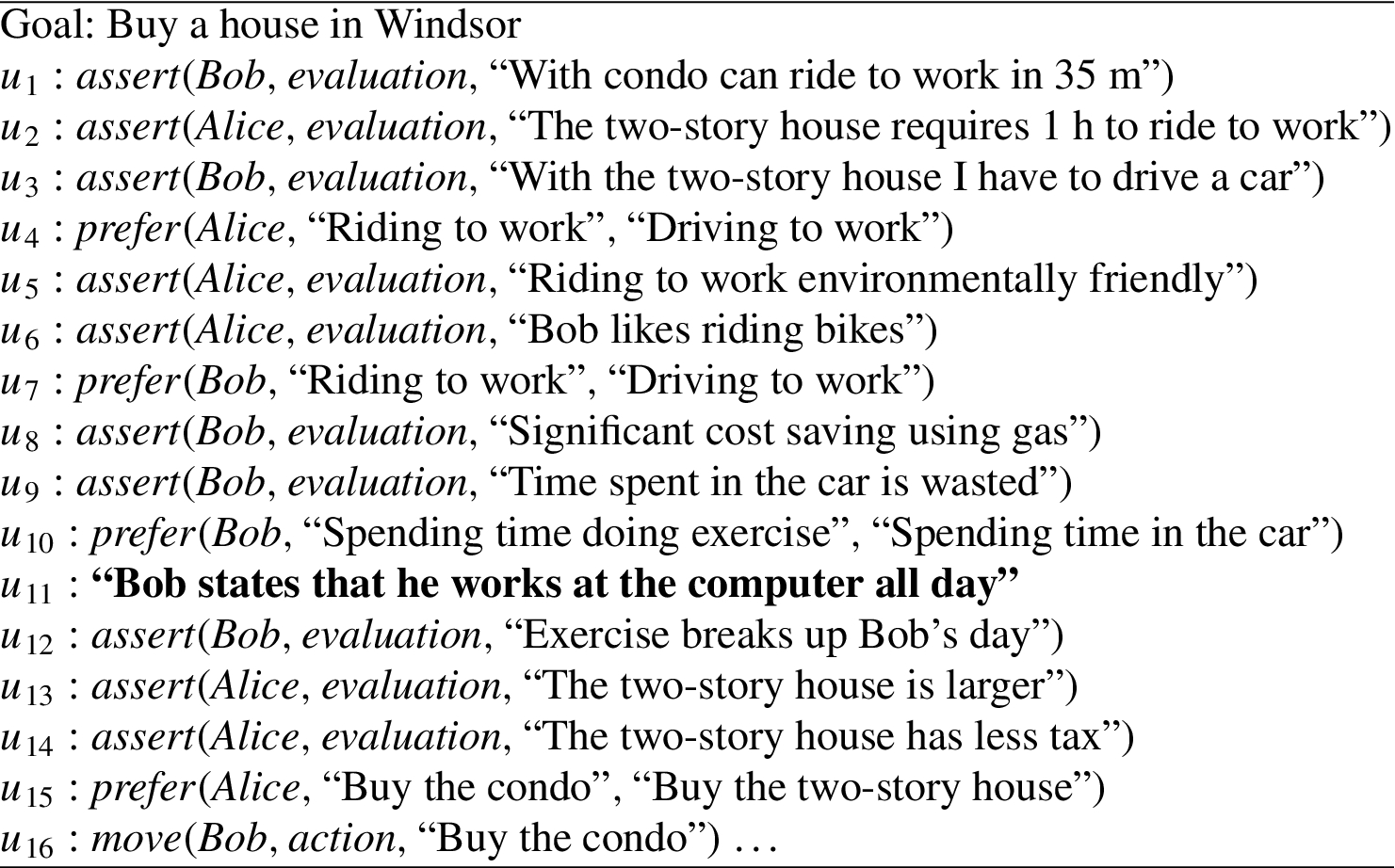

Fig. 2.

Formalization of the speech acts in Example 1.

The MHP protocol formally represents the stages of the deliberation between Alice and Bob. However, if the circumstances change, and more information is needed before making a decision, the protocol may not be able to capture such characteristics. This is the case for example of move

A deliberation dialogue always arises out of the question of what to do in a given set of circumstances, and information relevant to those circumstances is vital to arriving at a rational decision. In any real case, however, new information will need to be introduced throughout the argumentation prior to the closing stage, and the dialogue model must support the resulting changes to the knowledge base. The following example clarifies this problem further.

Example 2. Brian had a problem with his printer. Whenever he scanned a document using the automatic document feeder, a black line appeared down the middle of the page. To solve the problem, he looked for a troubleshooting guide for his printer. The guide gave a series of instructions: if the problem occurs when you print as well as when you copy/scan, it is in the print engine itself, and you should refer to a certain website for help with troubleshooting. In Brian’s case, however, the defect occurred only when scanning from the automatic document feeder. Following the instructions in the guide, Brian first opened the scanner cover and checked for debris. There was none. Next he located the small strip of glass to the left of the main glass area. He carefully cleaned the glass with a soft cloth, and then scanned a document to see if this fixed the problem. It did not. Looking at the glass, he could see that it was covered by a thin plastic piece. He managed to pull the plastic piece out, and found a small black mark in the middle of the bottom of the piece. He tried cleaning the plastic, but, as he found by scanning a test document, this did not work. He then showed the plastic piece to his wife Anna and asked her if there was some way to clean it to remove the small black mark. She proposed using a soft cleaning pad, and they managed to remove the black mark. Brian went through the scanning procedure as a test. Success! There was no longer a black line down the middle of the scanned pages. Brian’s problem was solved. An interpretation of the formal dialogue is shown in Fig. 3.

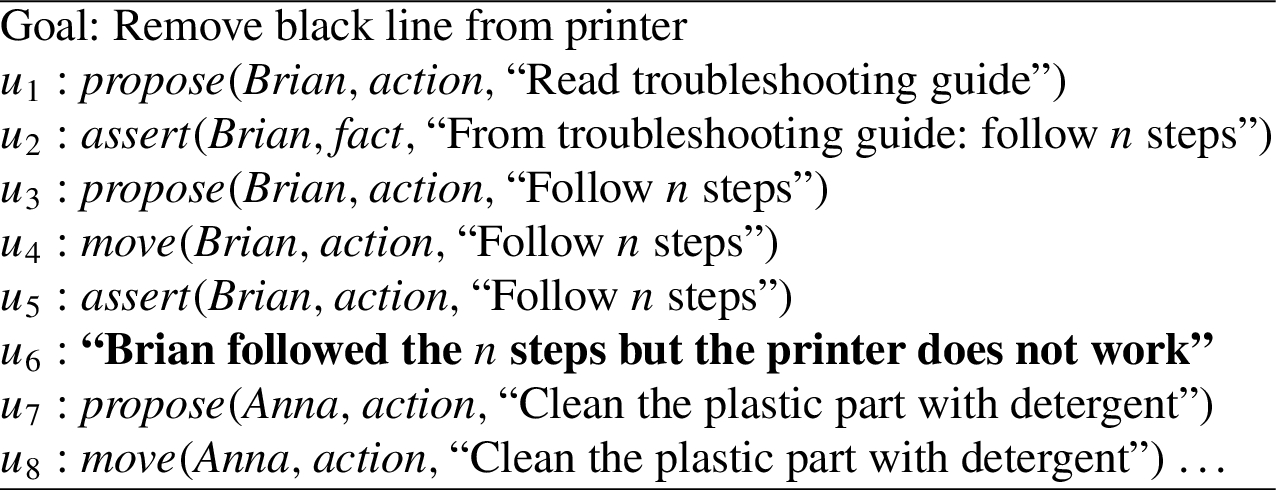

Fig. 3.

Interpretation of the printer example dialogue.

One of the most important aspects of this case is that the deliberation was based on a prior process of information-seeking dialogue. Moreover, the information-seeking dialogue was interwoven with the steps taken during the sequence of practical reasoning in the deliberation dialogue. The MHP model can represent Brian’s monologue as he decides what to do to fix the printer, with his reading of the troubleshooting instructions treated as the search for information. However, if an agent were to act on Brian’s behalf, it would have to add a new piece of information to its knowledge base; i.e.,

The above discussion suggests that changes in the knowledge base during the dialogue, and their effect on the choice of new proposals, must be investigated further. The MHP model of deliberation dialogue needs some revisions to bring it in line with an open knowledge base that takes into account changes of circumstances during the argumentation stage.

3.2. An extended dialogue model for open knowledge

One of the most important factors in intelligent deliberation is that, in real scenarios, the circumstances of the world can change, and it is vitally important for an agent that is to deliberate intelligently to be aware of these changes and to take them into account during the course of the deliberation itself. Intelligent deliberation needs to be both informed and flexible. Some agents may know things that others do not, or information may only become relevant during the course of the dialogue. It is important that the knowledge base is left open during the argumentation stage so that new, relevant information that might affect proposals and commitments can come in. The MHP model has led to the development of a number of deliberation protocols employed in multi-agent systems where agents have complete shared knowledge of the circumstances [3,7,9]. In these frameworks, the options for action are assigned to the agents for discussion at the initial stage of the dialogue, and information shared during the dialogue is not taken into account when selecting new proposals to be discussed. One error in rational deliberation, however, is for an agent to become inflexible by failing to take new relevant developments into account when deciding what to do.

For this reason, deliberation as a framework for rational argumentation needs to be extended from the structure described above. Instead of the knowledge base being fixed at the opening stage, propositions need to be added to it and deleted from it as the deliberation proceeds through the argumentation stage.

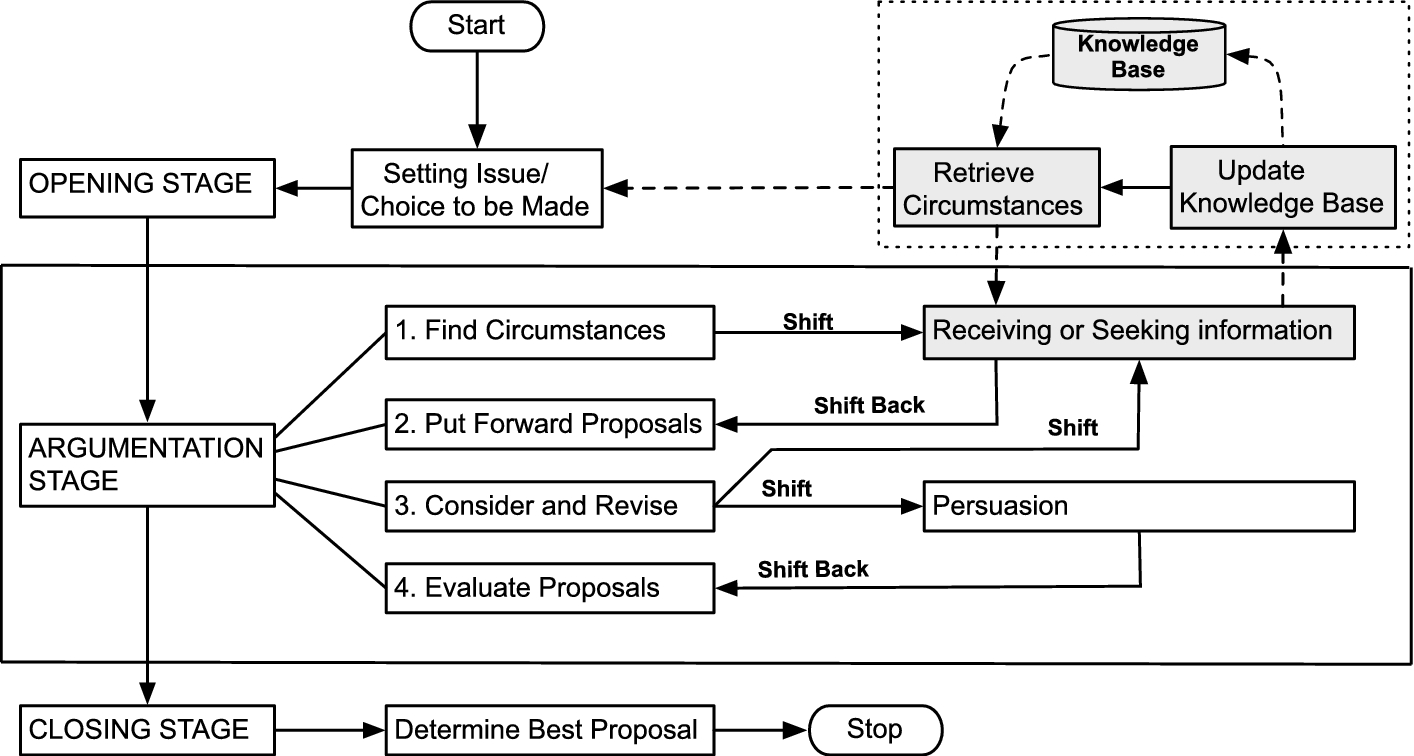

Fig. 4.

Outline of a revised model of deliberation dialogue.

In Fig. 4, we propose a revised model that highlights the role of an open knowledge base. A knowledge base is set in place at the opening stage and agreed to by all parties in the deliberation as representing the circumstances of the situation in which the governing question is framed. This initial knowledge base is fixed at the opening stage and is part of what defines the choice to be made, as stated by the governing question of the deliberation. It can be updated during an information-seeking dialogue. More importantly, once we reach the argumentation stage, this knowledge base needs to be open, so that if the situation changes and information about these changes becomes available to the agents, this knowledge is taken into account during the stage where proposals are put forward, considered and evaluated.

The proposals may need to be modified or evaluated differently once this new information becomes known. During this stage, some agents will have knowledge that others lack, and thus one important type of speech act is the assertion of a proposition representing factual knowledge, to inform the other agents involved in the deliberation process. A new speech act is needed here that allows new knowledge to be asserted and the commitment store to be revised. Here we refer to this speech act that is meant to intentionally exchange new information with
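To make the idea concrete, the following Python sketch contrasts a knowledge base that stays open during the argumentation stage with one fixed at the opening stage. It is an illustration under our own assumptions, not an implementation of the revised model: proposals are reduced to sets of required facts, `assert_fact` is a hypothetical stand-in for the new information-sharing speech act, and the fact names are invented, loosely based on Example 2.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Proposal:
    action: str
    requires: frozenset[str]  # facts that must be known for the action to apply


@dataclass
class Deliberation:
    knowledge_base: set[str]
    open_kb: bool = True            # False mimics a knowledge base fixed at the opening stage
    in_argumentation: bool = False
    commitments: dict[str, set[str]] = field(default_factory=dict)

    def _can_update(self) -> bool:
        # A closed knowledge base only accepts updates before argumentation starts.
        return self.open_kb or not self.in_argumentation

    def assert_fact(self, speaker: str, fact: str) -> bool:
        """Hypothetical speech act: inform the others of a fact and commit the speaker to it."""
        if not self._can_update():
            return False
        self.knowledge_base.add(fact)
        self.commitments.setdefault(speaker, set()).add(fact)
        return True

    def retract_fact(self, speaker: str, fact: str) -> bool:
        """Drop a fact that no longer holds and withdraw the speaker's commitment to it."""
        if not self._can_update():
            return False
        self.knowledge_base.discard(fact)
        self.commitments.get(speaker, set()).discard(fact)
        return True

    def viable(self, proposals: list[Proposal]) -> list[str]:
        """Actions whose required facts are all currently in the knowledge base."""
        return [p.action for p in proposals if p.requires <= self.knowledge_base]


proposals = [
    Proposal("clean the glass strip", frozenset({"defect_only_with_feeder"})),
    Proposal("clean the plastic piece with a soft pad",
             frozenset({"defect_only_with_feeder", "mark_on_plastic_piece"})),
]
delib = Deliberation({"defect_only_with_feeder"}, in_argumentation=True)
delib.assert_fact("Brian", "mark_on_plastic_piece")  # discovered mid-deliberation
print(delib.viable(proposals))
# ['clean the glass strip', 'clean the plastic piece with a soft pad']
```

With `open_kb=False`, the same assertion is refused during argumentation and only the first proposal remains available, which mirrors the limitation of a fixed set of options discussed above.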

In this section, we discussed the need for the MHP model to be revised, especially for cases where the agents taking part in the deliberation have partial views of the circumstances of the world. The revised version offered here provides for the sharing of information between agents while deliberating, so that better proposals can be identified. In the next section, we discuss a further problem of the MHP dialogue that may arise when agents have open knowledge: determining when a dialogue is to be considered successful.

4. Determining a successful deliberation

In the new model, there is a cycle of proposing, considering and revising as new information comes in, and of evaluating the proposals in light of this new information. There is a danger that this cyclical process could continue indefinitely, stalling the deliberation. Hence, a closing stage is necessary so that the argumentation stage can be terminated once it has been judged that enough information on the circumstances of the decision has been taken into account. But when can the search for knowledge about a case be closed off, so that the premises of the practical reasoning provide an evidential base sufficient to prove the conclusion? In this section, we discuss the closure problem: the problem of determining when practical reasoning ends.

4.1. The closure problem

Here we attempt to define conditions for the closure of practical reasoning. For practical reasons, typically time and money, a deliberation may have to be closed off and a decision taken on the basis of the pro and con arguments put forward so far. A decision by majority vote may have to be taken to meet the practical demand for closing the discussion. However, the depth, comprehensiveness and thoroughness of the arguments brought for and against the proposals are the most valuable features of a deliberation, leading to a supported conclusion that offers a well-reasoned decision for action. Some issues also remain about how to represent the closing stage. What if it cannot be determined, on the evidence, which is the best proposal?

In a case of deliberation like that between Alice and Bob, the dialogue can be closed when they have collected enough information about what is available on the real estate market, and they have discussed the matter thoroughly enough to critically examine all the pro and con arguments for every available option. At that point, the dialogue has reached the closing point, even though the deliberation could be reopened before they make an offer and are committed to it. If one party has put forward a particular proposal and nobody else has any objections, then the deliberation is closed, at least temporarily. They have found a proposed course of action that they all agree on, or at least that nobody disagrees with or is willing to contest further. Hence the deliberation is over, and a reasoned decision can now be arrived at on what to do. There are different options. The original proposal can be accepted or rejected, in which case the deliberation stops. Alternatively, the proposal can be modified to take into account the objections made during the dialogue, or a counterproposal can be brought forward. In these latter two cases, the procedure of formulating a proposal goes around the cycle again, except that this time a new proposal has been formulated in place of the one that was not accepted.

No current model of deliberation can decide when the deliberation has reached its closing stage. In an emergency, the closing stage may have to be reached quickly. In theory, the closing stage should only be reached when the arguments and proposals on all sides have been discussed sufficiently for all the relevant factors to have been considered. In practice, a decision may have to be made within time constraints, while the question of which proposal is best is still subject to pro and con argumentation. In such cases, the argumentation stage should be closed off and some means used to arrive at a decision, such as taking a vote. It is also possible, however, that in real instances of deliberation there is no agreement on the best proposal for action to solve the problem posed by the governing question. In this case, in the MHP model, the deliberation dialogue must presumably be considered a failure, because the governing question has not been answered. Nevertheless, the deliberation could have educational value, in that the consideration of the pro and con arguments might have shown deficiencies in some of the proposals, deepening the participants’ understanding.
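The cycle described above, together with a fallback for time constraints, can be sketched as a bounded loop. In this Python sketch, which is our own illustration rather than part of the MHP model, the callables `objections_to`, `revise` and `votes` are hypothetical hooks standing in for the agents' contributions, a fixed round budget `max_rounds` stands in for time and money constraints, and majority vote is the assumed fallback when the budget runs out.

```python
from collections import Counter
from typing import Callable, Optional


def deliberate(
    proposal: str,
    objections_to: Callable[[str], list[str]],
    revise: Callable[[str, list[str]], Optional[str]],
    votes: Callable[[], list[str]],
    max_rounds: int,
) -> tuple[str, str]:
    """Run a bounded propose-consider-revise cycle; return (decision, how it was closed)."""
    for _ in range(max_rounds):
        objections = objections_to(proposal)
        if not objections:
            # Nobody objects: the deliberation is closed, at least temporarily.
            return proposal, "consensus"
        revised = revise(proposal, objections)
        if revised is None:
            # The proposal is rejected and nothing replaces it: the deliberation stops.
            return "no decision", "rejected"
        # A modified proposal or a counterproposal goes around the cycle again.
        proposal = revised
    # Budget exhausted: close off argumentation and decide by majority vote
    # (assumes at least one vote is cast).
    winner, _ = Counter(votes()).most_common(1)[0]
    return winner, "vote"


# Toy run based on Example 1: the house draws an objection, the condo does not.
objections = {"two-story house": ["bike commute over an hour"], "condo": []}
print(deliberate("two-story house",
                 objections_to=lambda p: objections[p],
                 revise=lambda p, objs: "condo",
                 votes=lambda: ["condo", "condo", "two-story house"],
                 max_rounds=3))
# ('condo', 'consensus')
```

The round budget is what guarantees termination; without it, a pair of agents that keep objecting to each other's revisions would cycle indefinitely.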

Note that the only stages that must occur in every dialogue that terminates normally are the opening and closing stages. It appears that we must have the closing stage in every deliberation dialogue, or at least in every successful one. Hence, in the MHP model, a dialogue cannot really be a deliberation dialogue unless it terminates in a closing stage where a decision is arrived at on the best course of action. This remark reveals a general problem of formulating goals in formal systems of dialogue, one that also affects persuasion dialogue. The goal of a persuasion dialogue is to resolve the conflict of opinions agreed upon as the issue at the opening stage. However, in many instances, a persuasion dialogue can be very successful educationally in revealing the arguments and positions on both sides. Determining the criteria of success of a dialogue in meeting its goal is a general problem for formal dialogue systems.

4.2. Criteria for closure of deliberative dialogue

In order to address the closure problem of a dialogue, here we define ten criteria that determine the extent to which a deliberation has been successful:

(1) Whether the proposals that were discussed represent all the proposals that should be considered, or whether some proposals that should have been discussed were not.

(2) The accuracy and completeness of the information regarding the circumstances of the case made available to the agents during the opening stage.

(3) How well arguments were critically questioned or attacked by counterarguments.

(4) How well the agents followed the procedural rules by allowing the other agents to present their proposals and arguments openly, and how they responded to proposals.

(5) How thoroughly each of the proposals put forward during the deliberation was engaged by supporting or attacking arguments.

(6) Whether any arguments that should have been considered were not given due consideration.

(7) How good the arguments were that support or attack each of the proposals, depending on the validity of the arguments and the factual accuracy of their premises.

(8) Whether the argumentation avoided personal attacks, or was unduly influenced by opinion leaders or personalities who dominated discussion during the argumentation stage.

(9) The relevance of the arguments put forward during the argumentation stage.

(10) How well the values of the group of agents engaged in the deliberation were taken into account.

The relevance of an argument is determined by how it fits into a sequence of argumentation that connects to the problem or choice of action set as the issue of the deliberation at the opening stage. To declare that the closing stage has been reached, the participants in the deliberation must reach a consensus, based on the ten criteria above, on whether the deliberation has been successful, so that one proposal has been shown to be superior to the others. This is called an internal evaluation of the success of the dialogue. The alternative is an external evaluation carried out at a metalevel. Once a group of agents has carried out a deliberation in a given case, another group of agents can take a record of the argumentation, for example a transcript of the discussions, analyze and evaluate the argumentation in the deliberation dialogue, and arrive at an evaluation of how successful the dialogue was according to the ten criteria.
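As a rough sketch of how the ten criteria might feed an internal evaluation, the following Python code assumes, purely for illustration, that each participant scores every criterion on a 0 to 1 scale (higher is better, so criterion (6) is scored by how well arguments were given due consideration) and judges the deliberation successful only if every score reaches a threshold; consensus then means that every participant reaches that verdict. The scoring scale, the threshold and the function names are hypothetical; the criteria themselves are qualitative, and the sketch does not capture the further requirement that one proposal be shown superior to the others.

```python
CRITERIA = (
    "(1) all relevant proposals considered",
    "(2) accurate and complete information at the opening stage",
    "(3) arguments critically questioned or attacked",
    "(4) procedural rules followed",
    "(5) proposals thoroughly engaged",
    "(6) no argument denied due consideration",
    "(7) quality of arguments",
    "(8) no personal attacks or undue domination",
    "(9) relevance of arguments",
    "(10) group values taken into account",
)


def judges_successful(scores: dict[str, float], threshold: float = 0.5) -> bool:
    """One evaluator's verdict: every criterion must score at or above the threshold.

    Scores in [0, 1] (higher is better) and the threshold are assumptions.
    """
    missing = [c for c in CRITERIA if c not in scores]
    if missing:
        raise ValueError(f"unscored criteria: {missing}")
    return all(scores[c] >= threshold for c in CRITERIA)


def internal_evaluation(scores_by_participant: dict[str, dict[str, float]]) -> bool:
    """Closing may be declared only if every participant judges the deliberation successful."""
    return bool(scores_by_participant) and all(
        judges_successful(s) for s in scores_by_participant.values()
    )


good = {c: 0.8 for c in CRITERIA}
weak = {**good, "(8) no personal attacks or undue domination": 0.2}
print(internal_evaluation({"Alice": good, "Bob": good}))  # True
print(internal_evaluation({"Alice": good, "Bob": weak}))  # False
```

An external evaluation could reuse `judges_successful` on scores assigned by a separate group of agents working from a transcript.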

This solution to the closure problem, while not yet implemented in a computational system, is useful for the project of improving current models of deliberation. Closure in other types of dialogue is determined differently from the closure conditions for deliberation dialogue proposed here. To explain this difference, consider the inquiry dialogue. An inquiry is closed only when it can be shown that the proposition at issue can be proved, or when it can be definitively shown that it cannot be proved even though a large body of relevant evidence has been collected. The goal of the inquiry dialogue is to prove that a proposition is true by a sequence of argumentation strong enough to meet the standard of proof appropriate for the type of inquiry. Argumentation in the inquiry is characterized by the goal of establishing a cumulative process of argument that builds only on established facts in order to prove a conclusion to a high standard of proof. The burden of proof in an inquiry is characteristically set at a high standard ([15], p. 73). In contrast, there is no burden of proof in a deliberation dialogue, only a burden of reasonable explanation [14], since the purpose of the dialogue is to make a decision on what to do or to solve a practical problem. In this case, it may be necessary to take action, or to decide not to take action, on the basis of a lower standard for showing whether an action can be justified, such as the preponderance of the evidence. One reason for this difference is the propensity of circumstances to change, hence the need for a different definition of the closure problem, such as the one we proposed in this section.
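The contrast between the two standards can be made concrete with a toy sketch. Here we assume, for illustration only, that the combined strength of the arguments for and against a conclusion can be summarized as numbers in [0, 1]; the function names and the thresholds `alpha` and `beta` are hypothetical, loosely in the spirit of the proof standards used in some computational models of argument, and are not part of the model proposed here.

```python
def preponderance(pro: float, con: float) -> bool:
    """Lower, deliberation-style standard: the case for acting outweighs the case against."""
    return pro > con


def high_standard(pro: float, con: float, alpha: float = 0.9, beta: float = 0.1) -> bool:
    """Higher, inquiry-style standard: strong support and hardly any remaining doubt.

    The thresholds alpha and beta are illustrative assumptions.
    """
    return pro >= alpha and con <= beta


# Moderate support: enough to justify acting, not enough to close an inquiry.
pro, con = 0.6, 0.4
print(preponderance(pro, con), high_standard(pro, con))  # True False
```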

5. Effects of open knowledge-base in agent applications

In the previous sections, we motivated the need for an extended model of deliberation that captures changes of circumstances during the dialogue, and we defined criteria for determining the success of a dialogue. In this section, we analyze a model of deliberation presented in our previous research [11,12], in order to show an initial approach to this extended model and its importance. This model is designed for agents with individual plans to achieve different individual objectives, where agents discuss interdependencies and may highlight emerging conflicts caused by mutually exclusive goals, scheduling constraints and norms. Hence, agents gather information about circumstances not only at the initial stage of the dialogue but also during its course. We intend to show, by means of an empirical evaluation, that this is necessary for agents to reach an agreement in situations where they do not have shared knowledge about each other’s plans.

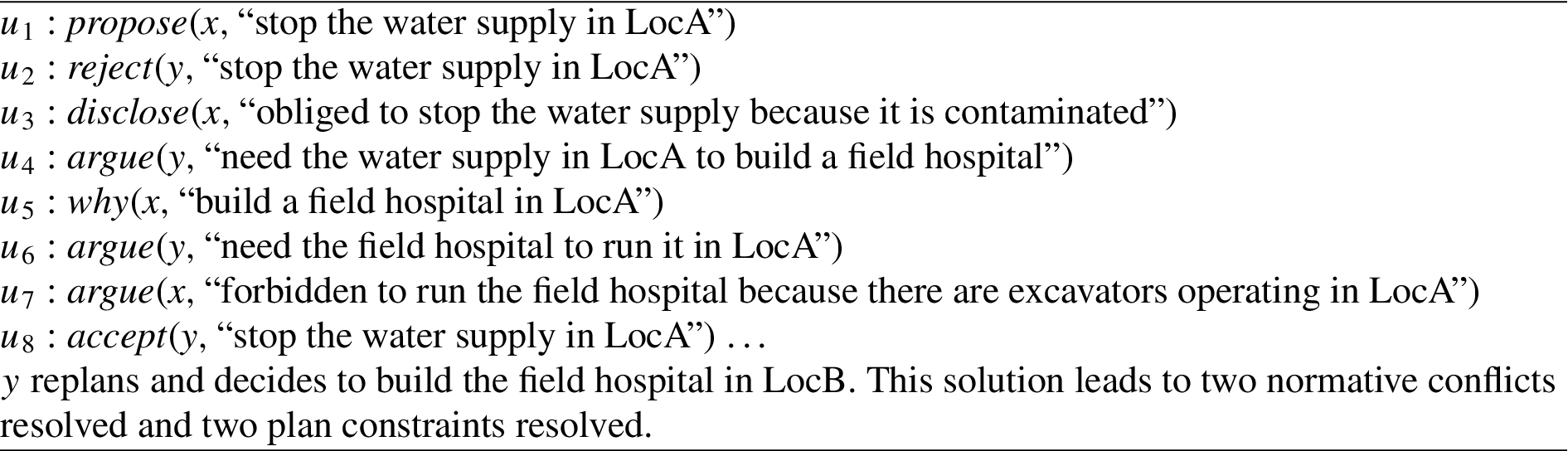

In the example of deliberation used in Toniolo et al. [12], agents are discussing the repair of the water supply in a location where a disaster has occurred. One agent proposes to stop the water supply in the location, while the other argues that water is needed for activities that include building a field hospital to deal with casualties. The first agent, however, has the goal of stopping the contaminated water supply. Contaminated water is not safe, and that constitutes an argument against allowing the use of the water supply in that location. The two agents then discuss other options, for example building the field hospital first but not using the water supply until the water can be guaranteed to be safe, or building the field hospital in a different location. By communicating the circumstances to each other, and by constructing arguments in response to those put forward by the other side (for example, the argument that using the water supply at this location would not be safe), the dialogue can proceed in an orderly way.
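The following toy Python sketch illustrates why sharing circumstances matters in this example. It is not the planner or protocol of Toniolo et al. [12]: the `Option` and `Agent` classes, the fact names and the pool of options are all hypothetical, and an option is simply taken to be acceptable to an agent when all the facts it requires are known and none of the facts that rule it out are known.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Option:
    name: str
    requires: frozenset[str] = frozenset()    # facts that must be known to hold
    blocked_by: frozenset[str] = frozenset()  # facts that rule the option out


@dataclass
class Agent:
    name: str
    knowledge: set[str] = field(default_factory=set)

    def options(self, pool: list[Option]) -> list[str]:
        """Options consistent with what this agent currently knows."""
        return [o.name for o in pool
                if o.requires <= self.knowledge and not (o.blocked_by & self.knowledge)]

    def inform(self, other: "Agent", facts: set[str]) -> None:
        """Share circumstances the other agent may not know about."""
        other.knowledge |= facts


POOL = [
    Option("field hospital at site, using local water",
           requires=frozenset({"site_available"}),
           blocked_by=frozenset({"water_contaminated"})),
    Option("field hospital at site, water used only once declared safe",
           requires=frozenset({"site_available", "water_contaminated"})),
    Option("field hospital at another location",
           requires=frozenset({"other_site_available"})),
]

builder = Agent("builder", {"site_available"})
water_agent = Agent("water_agent",
                    {"site_available", "water_contaminated", "other_site_available"})
print(builder.options(POOL))
# ['field hospital at site, using local water']
water_agent.inform(builder, {"water_contaminated", "other_site_available"})
print(builder.options(POOL))
# ['field hospital at site, water used only once declared safe', 'field hospital at another location']
```

Before the exchange, the builder can only see the option that the other agent must attack; after it, the alternatives discussed in the example become available without either agent having listed them at the opening stage.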

The protocol used in Toniolo et al. [12] considers two agents, each with individual goals and norms and a different view of the world, that form interdependent plans to achieve their goals. This represents the case of separate individual knowledge bases. An agent proposes an action, that we refer to as

Fig. 5.

Overview of agent deliberation process.

The important characteristic of this dialogue structure is that it allows the agents to construct alternatives dynamically as they acquire information during the dialogue. This exchange of information enables them to reduce the number of conflicts in their interdependent plans related to scheduling or norm violations. When such conflicts arise, they can be dealt with in two ways: (1) by exchanging arguments that describe negative consequences, and (2) by exchanging information about the circumstances of the case as new circumstances come to be known by one party. The former type of argument describes which conflicts may prevent an action from being performed, or a state of the world from being achieved. We refer to this type of argument as

In our dialogue protocol, we consider two ways to exchange supporting statements. An agent may ask “why?” (e.g., “Why do you want to perform this action?”), and the other agent responds by explaining some circumstances,

The integration of new information within a fully autonomous deliberation system may, however, be problematic; one way to address this is to handle the new information within the underpinning system used to identify actions or plans. For example, in an open world the frame problem in a planning domain (that of representing the effects of an action without explicitly representing all the invariants of the domain) may have no solution. In our framework, we address this via the underpinning planning system, using external knowledge-producing actions that can be added to the currently chosen plan, allowing an agent to access and incorporate new knowledge (more details are given in [11]). However, we believe that the disclosure of information about changes of circumstances or explanations of actions should be represented at the protocol level in order to capture the effect of the new information on the process of identifying new proposals. This is especially important in situations where agents do not have common objectives, as in the disaster response example. To develop richer and more flexible deliberation support systems, we must model participants proposing new alternatives within the same dialogue, guided by the information shared during the dialogue, instead of selecting them from a fixed set established at the opening stage.
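The contrast between a fixed option set and options generated from a growing knowledge base can be illustrated with a minimal sketch. It is not the protocol of [11,12]: the `Action` and `Agent` classes, the `deliberate` function, the move labels, and the encoding of the water supply example are all hypothetical simplifications, assuming that each option is either unlocked by facts the proposer knows (`requires`) or ruled out by circumstances known to the other agent (`blocked_by`).

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Action:
    """A hypothetical candidate action in a plan."""
    name: str
    # Facts the proposer must know before it can generate this option.
    requires: frozenset = frozenset()
    # Circumstances under which the action conflicts with the other agent's goals or norms.
    blocked_by: frozenset = frozenset()


@dataclass
class Agent:
    name: str
    knowledge: set = field(default_factory=set)  # circumstances this agent knows

    def objection(self, action: Action) -> Optional[str]:
        """Return a known circumstance that rules out the action, or None."""
        conflicts = sorted(action.blocked_by & self.knowledge)
        return conflicts[0] if conflicts else None


def deliberate(proposer, responder, library, open_kb=True, max_rounds=10):
    """Run a proposer/responder exchange; return (agreed action or None, transcript)."""
    transcript = []
    rejected = set()
    # Closed protocol: the option set is fixed at the opening stage.
    fixed = [a for a in library if a.requires <= proposer.knowledge]
    for _ in range(max_rounds):
        # Open protocol: options are regenerated from the current knowledge base.
        pool = [a for a in library if a.requires <= proposer.knowledge] if open_kb else fixed
        candidates = [a for a in pool
                      if a.name not in rejected and not (a.blocked_by & proposer.knowledge)]
        if not candidates:
            transcript.append(("close", "no agreement"))
            return None, transcript
        action = candidates[0]
        transcript.append(("propose", action.name))
        fact = responder.objection(action)
        if fact is None:
            transcript.append(("accept", action.name))
            return action, transcript
        rejected.add(action.name)
        if open_kb:
            # The responder discloses the circumstance; the proposer adds it to its knowledge.
            transcript.append(("disclose", fact))
            proposer.knowledge.add(fact)
        else:
            transcript.append(("reject", action.name))
    transcript.append(("close", "round limit"))
    return None, transcript


# Hypothetical encoding of the water supply example.
LIBRARY = [
    Action("use_water_supply", blocked_by=frozenset({"water_contaminated"})),
    Action("build_hospital_test_water_first", requires=frozenset({"water_contaminated"})),
]

if __name__ == "__main__":
    for open_kb in (True, False):
        hospital = Agent("hospital")
        water = Agent("water", {"water_contaminated"})
        agreed, log = deliberate(hospital, water, LIBRARY, open_kb=open_kb)
        print(open_kb, agreed.name if agreed else None, log)
```

With `open_kb=True`, the disclosure of `water_contaminated` lets the proposer generate an option it could not have considered at the opening stage, and the run ends in agreement; with `open_kb=False`, the only opening option is rejected and the dialogue closes without agreement.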

Table 1

Speech acts of each protocol

| Speech act | Attacks | Surrenders |
|---|---|---|
| – | | |
| – | | |
| – | | |
| – | | |
| – | | |
| – | | |
| – | | |
| – | | |
| – | | |
| – | | |

6.Evaluating the effects of an open knowledge base

In our previous work [11,12], we performed an empirical evaluation of a system employing our model of deliberation. Here we report some results and further considerations to support our claim that agents must take into account new information shared during the dialogue when identifying alternatives if they are to find better solutions for interdependent plans.

In order to show the difference introduced by

Fig. 6.

The disaster response example dialogue.

6.1.Experiment settings

The empirical evaluation that we performed aimed at showing that using

In an experimental run with two agents, each agent was assigned an individual plan and a number of dependent actions

The plans for each experimental run were analyzed to measure the conflicts before the dialogue, referred to as

6.2.Better plans with information disclosure: Experiment results

A summary of the experiment results is presented in Table 2. Here we report only those relevant to our discussion; more details can be found in [12]. The results showed that the percentage of conflicts resolved (

Table 2

Information disclosure results

| Measure | | |
|---|---|---|
| Median | 30 | 48 |
| Median | 43 | 24 |
| Median | 11 | 39 |
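Table 2 reports medians over experimental runs. As an illustration only, the sketch below computes a per-run percentage of conflicts resolved from the number of conflicts before and after the dialogue, and takes the median across runs; the function names, the `(before, after)` run format, and the choice to skip runs with no initial conflicts are assumptions, and the exact measures are defined in [12].

```python
from statistics import median


def percent_resolved(before: int, after: int) -> float:
    """Net percentage reduction in conflicts between the start and end of a dialogue."""
    if before <= 0:
        raise ValueError("percentage is undefined without initial conflicts")
    return 100.0 * (before - after) / before


def median_percent_resolved(runs):
    """Median percentage over runs given as (conflicts_before, conflicts_after) pairs.

    Runs with no initial conflicts are skipped (an assumption of this sketch).
    """
    values = [percent_resolved(b, a) for b, a in runs if b > 0]
    return median(values)
```

For the toy inputs `[(10, 5), (4, 1), (8, 8)]` (not data from the experiments), the per-run percentages are 50.0, 75.0 and 0.0, so `median_percent_resolved` returns 50.0. The median is used here only because Table 2 reports medians.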

In conclusion, a dialogue is more likely to be successful with a protocol in which agents are able to intentionally exchange information about circumstances. This is because, at any point in the dialogue, an agent may disclose some information about its reason for adopting a particular action, owing to causal constraints or norm requirements. These explanations, in the form of new information, permit agents to find more favorable alternatives to fulfill the dependency when conflicts arise. The results show that permitting agents to disclose information about individual circumstances yields a significant improvement in the number of agreements established.

These results show that introducing a flexible knowledge base, to which agents can add information about circumstances while deliberating, is fundamental for establishing more successful agreements. In the next section, we report in more detail some results obtained in investigating our second objective: determining whether a dialogue has been successful.

7.Measures for criteria of closure

In Section 4.2, we formulated ten criteria for assessing whether a deliberative dialogue has been successful. In this section, we discuss some measures for the external evaluation of success, carried out by examining the record of the deliberation. To this end, we report two measures used in [12] that may be useful for evaluating the success of a deliberation dialogue.

7.1.Criteria and hypotheses

The proposed criteria are necessary in human deliberation to determine whether enough proposals have been discussed, and they are particularly useful for determining the educational value of deliberation when an open knowledge base is introduced. However, existing evaluation systems (e.g., [4]) use dialogue protocols based on the MHP model, so their evaluation methods are limited to assessing whether or not an agreement was found. We argue that if agents are to follow a more natural deliberation dialogue, our criteria are necessary to assess the educational benefits of protocols for agent deliberation. While the criteria function as guidelines for evaluation, specific measures must be defined to compute these benefits. Within the system presented in Section 4, we propose a measure for each of the following criteria:

(2) The accuracy and completeness of the information regarding the circumstances of the case; and

(5) How thoroughly each of the proposals put forward during the deliberation was engaged by supporting or attacking arguments.
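Criterion (5) concerns how thoroughly each proposal was engaged. One way to read it off a dialogue record is to count the supporting and attacking arguments addressed to each proposal, as in the sketch below; the `(move_type, proposal)` record format and the move labels `propose`, `support` and `attack` are hypothetical and are not the speech acts of Table 1.

```python
from collections import Counter


def engagement(record):
    """Map each proposal to (number of supporting, number of attacking) arguments.

    `record` is a hypothetical dialogue log of (move_type, proposal) pairs,
    where move_type is "propose", "support" or "attack".
    """
    proposals = [p for move, p in record if move == "propose"]
    support = Counter(p for move, p in record if move == "support")
    attack = Counter(p for move, p in record if move == "attack")
    return {p: (support[p], attack[p]) for p in proposals}


def least_engaged(record):
    """Return the proposal with the fewest arguments addressed to it, or None."""
    counts = engagement(record)
    if not counts:
        return None
    return min(counts, key=lambda p: sum(counts[p]))
```

A proposal that receives no supporting or attacking arguments would signal, under this reading, that the discussion did not engage it thoroughly; ties are broken by the order in which proposals were made.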

Definition 1.

A feasible plan is one that an agent is able to enact without impeding another’s goals. A dialogue about two interdependent plans is beneficial when, at the end of the dialogue, the feasibility of the plans has increased. This is determined by an increase in the number of conflicts solved between the plans.
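Definition 1 can be made concrete by representing the conflicts between two plans as a set and comparing the sets before and after the dialogue. In this minimal sketch, the conflict tuples and function names are hypothetical, and counting a dialogue as beneficial only when more conflicts are solved than newly introduced is an assumption of the sketch about how to treat conflicts created by revised plans.

```python
def conflicts_solved(before: set, after: set) -> int:
    """Number of conflicts present before the dialogue that are absent after it."""
    return len(before - after)


def is_feasible(conflicts: set) -> bool:
    """A plan is feasible when it has no remaining conflicts with the other agent's goals."""
    return not conflicts


def is_beneficial(before: set, after: set) -> bool:
    """True when conflicts solved outnumber conflicts newly introduced by revised plans."""
    introduced = len(after - before)
    return conflicts_solved(before, after) > introduced


# Hypothetical conflicts between the two agents' plans: (action, action or norm, kind).
BEFORE = {
    ("use_water_supply", "stop_water_supply", "goal"),
    ("build_hospital", "safety_norm", "norm"),
}
AFTER = {("build_hospital", "safety_norm", "norm")}
```

Here one conflict is solved and none is introduced, so the dialogue counts as beneficial even though the plans are not yet fully feasible.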

We intend to show the benefits of a more flexible protocol,

The following hypotheses may be used to evaluate the criteria:

Hypothesis 1.

The use of a protocol where agents can share additional information about circumstances

Hypothesis 2.

The use of a protocol where agents can share additional information about circumstances

7.2.Results

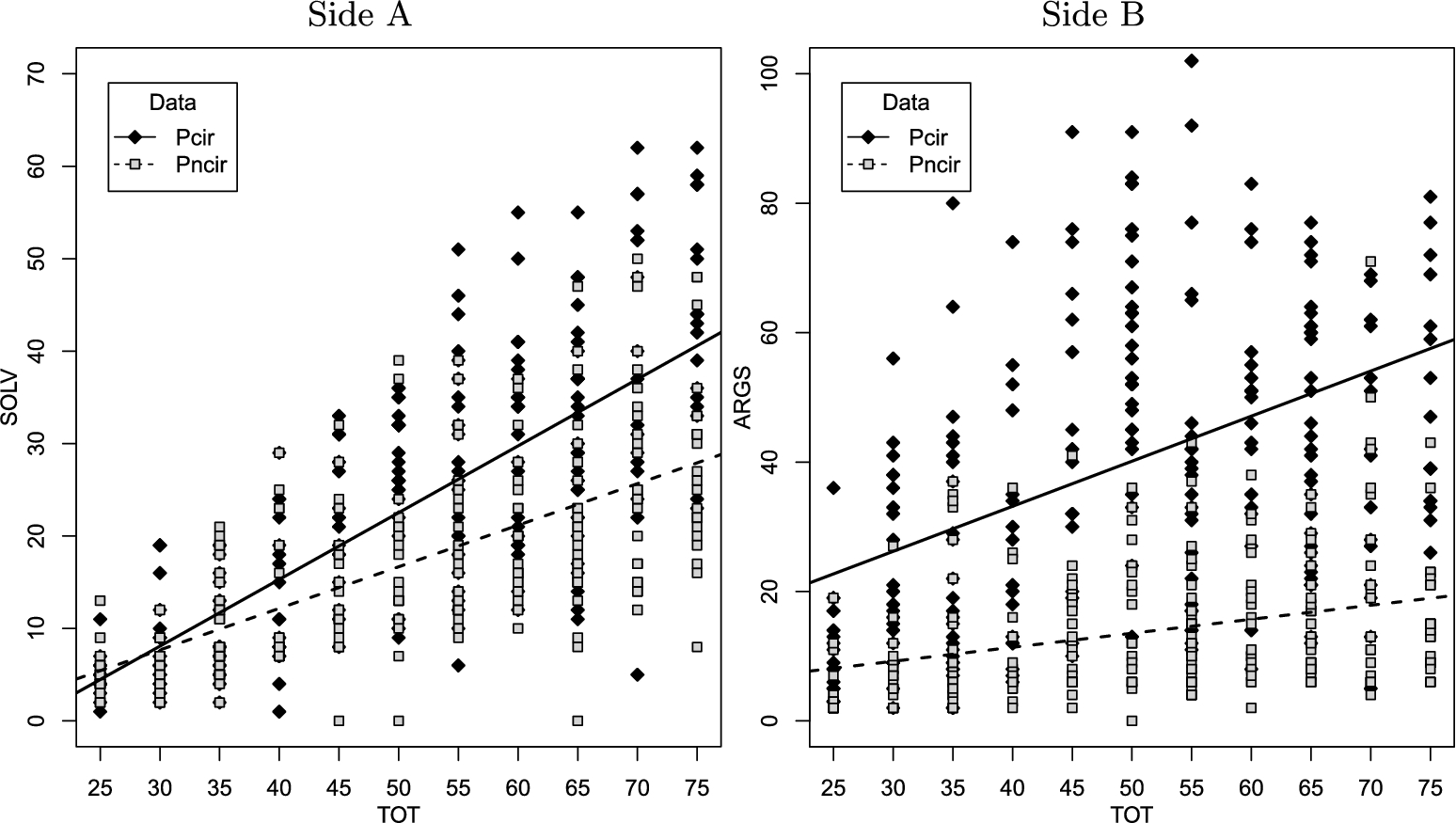

In Fig. 7 we report a subset of results obtained from experiments similar to those of [12]. Side A represents the data and linear regressions of the number of conflicts solved (

Fig. 7.

Conflicts solved and arguments as total number of conflicts increases.

Figure 7(A) shows the number of conflicts solved as the complexity of the problems increases, providing evidence for Hypothesis 1. The graph shows that the number of conflicts solved is significantly higher when agents employ

Our second hypothesis is supported by Fig. 7(B). The top line corresponds to the number of arguments moved in

We have presented here an initial, simple method for measuring some of the criteria proposed for resolving the closure problem: we considered the variation in the number of conflicts solved as an indicator of the accuracy and completeness of the information exchanged, and the variation in the number of arguments as an indicator of the thoroughness of the discussion about a proposal. There is, however, a need to develop a more standard method for measuring these criteria in order to determine the educational value of deliberation in agent-based systems. More investigation within computational systems is required to establish general conditions under which these criteria can be measured, or to determine whether certain criteria are more useful than others in specific domains. To inform this framework, the work of Amgoud and Dupin De Saint Cyr [1] on measures for argumentation-based persuasion dialogue may be useful, especially the measures related to agents borrowing each other’s information, with adequate revisions to account for the differences that deliberation dialogue presents.
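Fig. 7 summarizes each measure with a linear regression against the total number of conflicts. Assuming a plain least-squares fit (the exact fitting procedure used in [12] is not restated here), the slope for each protocol offers one way to quantify the variation in conflicts solved or arguments moved as problems grow; the function names and inputs below are hypothetical.

```python
from statistics import fmean


def ols(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept; returns (slope, intercept)."""
    mx, my = fmean(xs), fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need at least two distinct x values")
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def compare_protocols(total_conflicts, measure_open, measure_closed):
    """Slopes of a measure (e.g. conflicts solved or arguments moved) against total
    conflicts, for a protocol with and one without information disclosure."""
    return ols(total_conflicts, measure_open)[0], ols(total_conflicts, measure_closed)[0]
```

A steeper slope for one protocol would indicate that the measure grows faster with problem complexity under that protocol; a significance test, such as those reported in the notes, would have to be added separately.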

8.Conclusion

We have explored the problem of whether current models for agent deliberation capture the richness of human deliberative dialogue. Agent deliberation protocols are based on models of natural deliberation. Black and Atkinson [3], for example, consider agents with different expertise that deliberate over the best action to perform in collaboration. Agents establish what to believe about the surrounding circumstances, and then propose and discuss actions that promote or demote societal values. Similarly, in Kok et al. [7] a team of agents deliberates about the best option to achieve a goal. In the dialogue, agents can propose, withdraw, or challenge options. However, these systems lack a representation of the phases that may occur in natural deliberation.

In order to address this problem, we discussed the work of McBurney et al. [8], which presents a model of deliberation underpinning many dialogue systems including [3,7]. We showed that this represents a good model of how deliberation proceeds in real settings, permitting agents to interweave phases of information-seeking and argumentation for practical reasoning. An important characteristic for intelligent rational deliberation is, however, missing: considering changes in circumstances during the course of the dialogue. We proposed an extension to the MHP dialogue in which agents are open to the exchange of new knowledge. We showed that our framework [11,12] considers, to a certain extent, the situation where agents can willingly share information about new circumstances. We demonstrated that this is necessary to identify more successful outcomes.

New circumstances may initiate an infinite cycle of rethinking and reevaluating proposals. We argued that some criteria must be established to determine when the practical reasoning can be closed off and to declare whether the deliberation has been successful. People engaging in natural deliberation may find a dialogue educationally successful even if the debate ends without an agreement. In the MHP model, however, agents are required to find an agreement to declare the dialogue successful. How this can be extended so that agent deliberation better reflects human deliberation is a question to which we provided some answers. We proposed ten criteria to determine whether the dialogue has been successful; these criteria, we argue, should be implemented in agent-based models of deliberation dialogue. Measures for these criteria must also be defined to permit the evaluation of the educational benefits of the dialogue. Here, we demonstrated that, thanks to the sharing of information about new circumstances, agents are able to identify more beneficial agreements.

We proposed two new phases that extend the MHP dialogue to consider dynamic changes of circumstances during dialogue and to address the problem of how to determine success. We believe that our extended model will facilitate the development of applications that are able to represent rich deliberation processes to support human decision-making in a more effective way.

Notes

1 Results were statistically significant with

2 Results are statistically significant at

Acknowledgements

This research was partially supported by Social Sciences and Humanities Research Council of Canada Insight Grant 435-2012-0104. This research was also partially supported by the award made by the RCUK Digital Economy program to the dot.rural Digital Economy Hub at the University of Aberdeen; award ref.: EP/G066051/1. Further refinements of this work were supported by the SICSA PECE scheme.

References

[1] | L. Amgoud and F.D. De Saint Cyr, Measures for persuasion dialogs: A preliminary investigation, in: Computational Models of Argument, P. Besnard, S. Doutre and A. Hunter, eds, Frontiers in Artificial Intelligence and Applications, Vol. 172, IOS Press, 2008, pp. 13–24. |

[2] | K. Atkinson and T. Bench-Capon, Practical reasoning as presumptive argumentation using action based alternating transition systems, Artificial Intelligence 171(10–15) (2007), 855–874. doi:10.1016/j.artint.2007.04.009. |

[3] | E. Black and K. Atkinson, Dialogues that account for different perspectives in collaborative argumentation, in: Proceedings of the Eighth International Conference on Autonomous Agents and Multiagent Systems, 2009, pp. 867–874. |

[4] | E. Black and K. Bentley, An empirical study of a deliberation dialogue system, in: Theory and Applications of Formal Argumentation, S. Modgil, N. Oren and F. Toni, eds, Lecture Notes in Computer Science, Vol. 7132, Springer, Berlin/Heidelberg, 2012, pp. 132–146. doi:10.1007/978-3-642-29184-5_9. |

[5] | J. Fox and S. Parsons, Arguing about beliefs and actions, in: Applications of Uncertainty Formalisms, A. Hunter and S. Parsons, eds, Lecture Notes in Computer Science, Vol. 1455, Springer, Berlin/Heidelberg, 1998, pp. 266–302. doi:10.1007/3-540-49426-X_13. |

[6] | T.F. Gordon, H. Prakken and D. Walton, The Carneades model of argument and burden of proof, Artificial Intelligence 171(10–15) (2007), 875–896. doi:10.1016/j.artint.2007.04.010. |

[7] | E.M. Kok, J.J.C. Meyer, H. Prakken and G.A.W. Vreeswijk, A formal argumentation framework for deliberation dialogues, in: Argumentation in Multi-Agent Systems, P. McBurney, I. Rahwan and S. Parsons, eds, Lecture Notes in Computer Science, Vol. 6614, Springer, Berlin/Heidelberg, 2011, pp. 31–48. doi:10.1007/978-3-642-21940-5_3. |

[8] | P. McBurney, D. Hitchcock and S. Parsons, The eightfold way of deliberation dialogue, International Journal of Intelligent Systems 22(1) (2007), 95–132. doi:10.1002/int.20191. |

[9] | R. Medellin-Gasque, K. Atkinson, P. McBurney and T. Bench-Capon, Arguments over co-operative plans, in: Theory and Applications of Formal Argumentation, S. Modgil, N. Oren and F. Toni, eds, Lecture Notes in Computer Science, Vol. 7132, Springer, Berlin/Heidelberg, 2011, pp. 50–66. doi:10.1007/978-3-642-29184-5_4. |

[10] | K. Sycara, T.J. Norman, J.A. Giampapa, M.J. Kollingbaum, C. Burnett, D. Masato, M. McCallum and M.H. Strub, Agent support for policy-driven collaborative mission planning, The Computer Journal 53(5) (2010), 528–540. doi:10.1093/comjnl/bxp061. |

[11] | A. Toniolo, Models of argument for deliberative dialogue in complex domains, Ph.D. thesis, University of Aberdeen, 2013. |

[12] | A. Toniolo, T.J. Norman and K. Sycara, An empirical study of argumentation schemes for deliberative dialogue, in: Proceedings of the Twentieth European Conference on Artificial Intelligence, Vol. 242, 2012, pp. 756–761. |

[13] | D. Walton, Goal-Based Reasoning for Argumentation, Cambridge University Press, 2015. |

[14] | D. Walton, A. Toniolo and T.J. Norman, Speech acts and burden of proof in computational models of deliberation dialogue, in: Proceedings of the 1st European Conference on Argumentation: Argumentation and Reasoned Action, 2015. |

[15] | D.N. Walton and E.C.W. Krabbe, Commitment in Dialogue: Basic Concepts of Interpersonal Reasoning, State University of New York Press, 1995. |