Instance level analysis on linked open data connectivity for cultural heritage entity linking and data integration

Abstract

In cultural heritage, many projects execute Named Entity Linking (NEL) through global Linked Open Data (LOD) references in order to identify and disambiguate entities in their local datasets. It allows users to obtain extra information and contextualise the data with it. Thus, the aggregation and integration of heterogeneous LOD are expected. However, such development is still limited partly due to data quality issues. In addition, analysis on the LOD quality has not sufficiently been conducted for cultural heritage. Moreover, most research on data quality concentrates on ontology and corpus level observations. This paper examines the quality of the eleven major LOD sources used for NEL in cultural heritage with an emphasis on instance-level connectivity and graph traversals. Standardised linking properties are inspected for 100 instances/entities in order to create traversal route maps. Other properties are also assessed for quantity and quality. The outcomes suggest that the LOD is not fully interconnected and centrally condensed; the quantity and quality are unbalanced. Therefore, they cast doubt on the possibility of automatically identifying, accessing, and integrating known and unknown datasets. This implies the need for LOD improvement, as well as the NEL strategies to maximise the data integration.

1.Introduction

In recent years, Linked Open Data (LOD) has been widely acknowledged and data rich institutions have generated a large volume of LOD. As of May 2020, the LOD Cloud website reports 1,301 datasets with 16,283 links.11 The real power of LOD originates from a very simple philosophy of the Web inventor. Berners-Lee [5] states “include links to other URIs. so that they can discover more things”, hence the name “Linked” (Open) Data. LOD transforms distributed data in Resource Description Framework (RDF) into a connected global knowledge graph and allows us to find and formulate new information and knowledge [6]. This vision seems to be particularly suited for research activities. However, it seems that this scenario is not happening as quickly as we expected. It is still unclear whether we have discovered something significant in this manner. One of the reasons for this problem is the gap between the LOD producers and consumers, which is heavily attributed to data quality. Zaveri et al. [45] state that there is less focus on how to use good quality data than to how to publish it.

In this paper, we explore the problems of LOD quality from the user’s point of view. In particular, we analyse the linking quality of LOD from a research perspective in the field of cultural heritage and Digital Humanities (DH). Our study on this fundamental aspect of LOD should be able to provide a better understanding of a bottleneck of LOD practices. Although we concentrate on these domains, we believe that our analysis is equally valuable in other domains, because the analysed data is highly generic.

In cultural heritage and DH, many projects create and use a wide range of LOD for research purposes. In the course of populating and improving LOD, they often execute curatorial tasks such as Named Entity Recognition (NER), entity extraction, entity/coreference resolution, and Named Entity Linking (NEL) [7,10,16,41,46]. These are the tasks to identify, disambiguate, and extract entities/concepts from data, and to reconcile and make references to entities in another data. Thus, we can find more information on the web. In this article, we use NEL as a catch-all term for all these tasks.

For example, Europeana executes NEL in a large number of cultural heritage datasets and creates links to widely known LOD sources including GeoNames, DBpedia, and Wikidata that this paper discusses [35,37]. Jaffri et al. [28] echo this view, stating that many datasets are linked with DBpedia entities through the owl:sameAs property. In practice, this means that information about the same entity (e.g., place, person, event etc.) is stored in different LOD datasets on different servers. As Tomasuzuk and Hayland-Wood [39] indicate, RDF enables us to join data stored at disparate sources and provide the user with an integrated perspective of this data. This is called data integration. For instance, if one dataset only supplies partial information about an entity, NEL allows us to retrieve more information from all linked datasets, by “merging” data through links. In this regard, NEL serves as a building block of LOD, fostering connection, compilation, aggregation, and contextualisation of (distributed) information.

What is not investigated in cultural heritage and DH is, what impact NEL and subsequent data integration have for future research? Currently, there is a tendency for entity linking to become a purpose by itself, without examining the consequences of the linking. Due to the relative infancy of LOD in the field, perhaps most effort has been put into the aspect of data discoverability on the web, which NEL also facilitates. This function of LOD may not require extensive use cases after NEL is performed. In any case, data producers are often not fully aware of the next steps for research using LOD, as well as the needs of the data users. Although not limited to these domains, Data on the Web Best Practices22 observes: “the openness and flexibility of the web create new challenges for data publishers and data consumers, such as how to represent, describe and make data available in a way that it will be easy to find and to understand”.

Currently, the benefit of data integration using NEL is often restricted to the data sources within a single institution or domain. For instance, an advanced semantic search is developed for the historical newspapers in the Netherlands [42]. In fact, the investigation of the aggregation and integration of heterogeneous LOD from different data providers is rather rare [16], or done with relatively small multiple sources. A few exceptional cases are found in museums and institutions in France [1] and Spain [29]. Still, the formation of new knowledge based on complex queries across distributed LOD resources is not easily implemented. As such, the full potential of LOD has been neither fully explored nor verified. The practice of LOD-based research using distributed data still faces many challenges.

In terms of data linking quality, computer science communities have intensively worked on this issue in the past years. Critical quality issues of linking have been frequently raised and discussed in the studies of LOD [3,4,9,11–15,23,31–34,43,45]. We discuss this in Section 2 in more detail. However, one specific aspect helps here to explain our motivation. Most previous research regards owl:sameAs as a central property for LOD linkages, because it is a W3C recommended standard and serves as a bridging link between identical entities. We also think that it plays an important role to automate data processing using federated SPARQL queries in dispersed datasets, because we know the property beforehand without knowing heterogeneous and complicated ontologies of individual datasets. At least there is no doubt that LOD information can be automatically traversed and aggregated by simply following the links through this property. Therefore, we are interested to understand the future prospect of LOD automation by examining commonly used properties.

Taking this background into account, this article aims to evaluate the quality of widely known (referential) LOD as the target resources of NEL. In particular, the linking quality and connectivity is analysed in detail in order to provide an overview of the current “state of NEL ecosystem”. To this end, we examine LOD entities/instances through lookups. With a special emphasis on multi-level traversability in the LOD cloud, we can estimate the impact of NEL for end-users. In other words, our research questions are as follows.

RQ1: When a local dataset links to a global LOD, what level of information can we find?

RQ2: How can we follow links “to discover more things”?

RQ3: How are the entities in (the core part of) the LOD cloud connected to each other and can be navigated?

RQ4: What kind of information can be obtained by automatic graph traversals through standardised properties like owl:sameAs?

RQ5: What are the linking and content patterns for different types of entities?

As LOD potentially enables us to undertake machine-assisted research with the help of more automated data integration and processing, this project serves as a reality check for the current practices of LOD in the field.

The structure of this paper is as follows. Section 2 explores the related research. Section 3 describes objectives, scopes, and methodology. Section 4 presents the analysis of 100 entities in five categories relevant to cultural heritage data integration and contextualisation. The final section summarises the discussions and outlines ideas for future work.

2.Related work

Over the last years quantitative research has been carried out intensively for the LOD quality. The landscape of previous studies is examined in an in-depth survey by Zaberi et al. [45]. They analyse 30 academic articles on data quality frameworks and report 18 quality dimensions and 69 metrics, as well as 20 tools. Many studies investigate the linking quality, but some aim to assess broader aspects of LOD quality. For instance, Färber et al. compare DBpedia, Freebase, OpenCyc, Wikidata, and YAGO with 34 quality criteria [20]. They span from accuracy, trustworthiness, and consistency to interoperability, accessibility, and licences. Schmachtenberg et al. [34] update the 2011 report on LOD, using the Linked Data crawler, analysing the change of LOD (8 million resources) over the years. Debattista et al. [13] provide insights into the quality of 130 datasets (3.7 billion quads), using 27 metrics. However, the linking on which this paper would like to focus is a small part of the metrics. Mountantonakis and Tzitzikas [31] have developed a method for LOD connectivity analysis, reporting the results of connectivity measurements for over 2 billion triples and 400 LOD Cloud datasets. A rather unusual project has been conducted by Guéret et al. [22]. They concentrate on the creation of a framework for the assessment of LOD mappings using network metrics. They specifically look into the quality of automatically created links in the LOD enrichment scenario.

In parallel, a number of valuable contributions have been made to scrutinise owl:sameAs and “problem of co-reference” [28]. Firstly, there are critical discussions about the proliferation of owl:sameAs semantics [23]. Secondly, several large scale statistical analyses uncover the status of owl:sameAs networks to detect errors for 558 million links [32], verify the proliferation [14,15] (4352 and 8.7 million links respectively), and propose solutions. Most projects concentrate on macro studies and statistical observations of the comprehensive cross-domain LOD cloud, applying metrics to measure the data quality through dumps and SPARQL endpoints. Their methodologies help us to gain a holistic view of the development of the LOD cloud in terms of linking quality.

There are also a few examples of “semi-micro” research, using domain specific datasets. Ahlers [3] analyses the linkages of GeoNames (11.5 million names). He reveals some cross-dataset and cross-lingual issues and distribution biases. Debattista et al. [11] inspect the Ordnance Survey Ireland (50 million spatial objects) in order to identify errors in the data mapping for the LOD publishing and check the conformance to best practices. Although the datasets pass the majority of 19 quality metrics in the Luzzu framework [12], the low number of external links (only DBpedia) is clearly our concern.

The studies for the cultural heritage domain are relatively new. Candela et al. state that there has been so far no quantitative evaluation of the LOD published by digital libraries [8]. They systematically analyse the quality of bibliographic records from four libraries with 35 criteria covering 11 dimensions to provide a benchmark for the library community. The research on the LOD quality for a broader cultural heritage including museums and archives is scarce.

Apart from Mountantonakis and Tzitzikas, macro research projects oftentimes treat data sources (or corpora) as a whole, when investigating owl:sameAs link connectivity. In other words, the data connectivity is examined regardless of the user mobility at an instance level. For example, their research does not reveal if the connection for a specific instance such as Mozart is available between data source A and B, even if they detect many links between the instances in the two sources. This is because the domain coverage may be different: A originates from a Polish library and B from a Greek museum. Mozart could be found in both, but could be in neither. To this end, it is necessary to observe trees (Mozart as an instance) not forests (the data source A and B as a collection of instances).

In addition, most macro analyses are not designed for multiple graph traversals. One of the exceptions is Idrissou et al. [26] who indeed claim that gold standards for entity resolution do not go beyond two datasets. Interestingly, they develop hybrid-metrics that combine structure and link confidence score to estimate the quality of links between entities for six datasets from the social science domain. Although we agree that accurate automated evaluation of links is much needed, our study aims to gain deeper understanding of smaller sampling entities.

Going back to our analogy, we currently cannot know how much and what kind of data we can find by following a link from Mozart in data source A to an entity in source B, which provides links to an entity in source C. Therefore, a close observation of instances is needed. The instance level maneuverability indicates whether and how users can navigate themselves in the knowledge graphs and can obtain related information from various data sources, and potentially integrate them.

3.Objectives and methodology

We explain the process of defining objectives and methodology in four sub-sections. The first section describes the scope of the linking quality evaluation. The second section discusses the nature of research in cultural heritage and DH in relation to conceptual models and ontologies, in order to specify the object of analysis. The third section details the data sampling. The fourth section deals with the technical methods of a wide range of analyses.

3.1.Scope of analysis and graph traversals

This paper will not repeat the comprehensive statistical analyses on the LOD quality according to the existing or newly created comprehensive metrics. In contrast to previous research, we deploy a micro analysis. Our research deals with a small ecosystem of LOD in the cultural heritage NEL, based on an empirical qualitative and quantitative method. In particular, it focuses on user maneuverability for arbitrary LOD entities. We analyse multi-level graph traversability using standardised properties, especially bearing the automatic data traversals and integration in mind.

The primary goal is to create “traversal maps” of major LOD data sources at an instance level. “Traversal maps” are maps illustrating all possible routes of graph traversals in the LOD cloud (RQ3). We specialise in the route of standardised properties including owl:sameAs (RQ4). Naturally, the collections of instances covering the same topic (i.e. categories in Section 3.2) are of vital importance for the analysis (RQ5). Subsequently, it is expected to provide a better understanding of which referential resources are accessible in what way between multiple sources (RQ1 and RQ2). This scope enables us to deliver an observation more from the data user’s perspective than the producer’s. The traversal maps should be helpful for the end-users to orient themselves in the LOD cloud and formulate strategies for data navigation and integration to capitalise NEL.

The use case for the LOD traversals in this article is the following: we/user manually look up a LOD entity/resource identified. Then, they follow available links in the entity to reach identical and/or the most related LOD resources. For example, one may traverse an RDF graph from a resource in DBpedia to a resource in Wikidata via owl:sameAs:

dbr:1969 owl:sameAs wd:Q2485 .

Hyperlinks are documented and counted to generate traversal maps. To support the link quality analysis, information about other content is also documented and counted (RQ5). It includes the amount of rdfs:label, rdf:type, skos:prefLabel and skos:altLabel as well as rdf:resource, and rdf:about (see Section 3.4). The traversal continues as long as it is within the specified datasets boundaries (see Section 3.3). The reason to evaluate lookups instead of data dumps and SPARQL queries is that they play a vital role to publicly and openly raise awareness of the data existence that NEL essentially needs. To our knowledge, none of the previous studies works on lookups.

Regarding the link types, the W3C recommended properties, owl:sameAs, rdfs:seeAlso, and skos:exactMatch are used.33 It is a common practice that information providers set owl:sameAs links to URI aliases [4,6]. In addition, schema:sameAs is included, due to its popularity. One of the advantages of those standards is that the properties are widely known (see Section 2), implying no prior knowledge is required to access and process data. As Hartig [24,25] observes, it is highly important that the end users can obtain data from initially unknown data sources. In other words, they should be able to discover new LOD sources at run-time by following RDF links [6].

Since rdfs:seeAlso may be asymmetric, our analysis is not limited to LOD and symmetric graphs. This means that the sources and destinations of incoming and outgoing links are not 100% synchronised as identical LOD entities. For example, “Italy” in Getty TGN contains rdfs:seeAlso for an HTML representation (http://www.getty.edu/vow/TGNFullDisplay?find=&place=&nation=&subjectid=1000080). This is allowed in the specification.44 Another reason to avoid strict co-references is that it is hard to find and evaluate the same identity only by URIs. For instance, a VIAF record provides a link to Getty ULAN in the following syntax: http://vocab.getty.edu/ulan/500240971-agent. This resolves to http://vocab.getty.edu/ulan/500240971. In general, redirects introduce technical complexity for the analysis. As a consequence, the links to the same domain name in the URIs (e.g. getty.edu is same as vocab.getty.edu) are regarded as the same destination, regardless the identity and format of the entity. In this way, our analysis attempts to bypass complicated discussions over the accurate semantics of properties such as owl:sameAs [23].

When assessing the quality of LOD, proprietary properties cannot be ignored. They often contain interesting and specialised information. However, we put less emphasis on them. Compared to standardised properties, these properties may not be frequently used as a means to connect the data sources within the core part of the LOD cloud. Another reason is extensively explained in Section 3.4 in the context of difficulties in the data quality comparison, and our compromised approach is described.

Documentation on an instance is recorded in separate tabs in a spreadsheet for each source. VBA scripts are created to aggregate and/or facet datasets. Subsequently, various types of tables and charts are generated. In order to increase the research transparency and reproducibility, our datasets and documentation are fully archived in the Zenodo Open Access repository (https://doi.org/10.5281/zenodo.5913136).

3.2.Core questions and contextualisation in cultural heritage ontology

In order to narrow the scope of the LOD evaluation, this article focuses on addressing typical and generic core questions for cultural heritage and DH alike. For instance, one of the largest cultural heritage data platforms is Europeana. It has created the Europeana Data Model (EDM)55 in order to capture heterogeneous cultural heritage information. Its Primer66 notes that “EDM will let users browse Europeana in revealing new ways. It answers the ‘Who?’, ‘What?’, ‘When?’, ‘Where?’ questions, and makes connections between the networks of stories that will animate Europeana’s content”. EDM features five classes (agent, event, place, time-span, concept) for this purpose, which are called contextual entities, because they enrich and “contextualise” cultural heritage objects. Although these 4‘W’ questions are common sense for scientific research in general, they manifest the essence of cultural heritage research: without them, researchers are hardly able to solve any other research questions in their disciplines. Thus, they provide the contextualisation or foundation of research.

The importance of the four core questions is also reflected in other cultural heritage ontologies. CIDOC-CRM “provides the “semantic glue” needed to mediate between different sources of cultural heritage information, such as that published by museums, libraries and archives”.77 It centres “Event” as a core entity, connecting “Agent”, “Time-Span”, “Objects”, and “Place”. In the library sector, DCMI Metadata Terms88 also defines almost identical entities: “Agent”, “PeriodOfTime”, “PhysicalResource”, and “Location” among others. In addition, FRBR99 is a conceptual reference model for libraries which introduces hierarchical concepts of cultural works (i.e. work, manifestation, expression, and item). The Group 1 entities (the products of intellectual and artistic endeavor) are relevant to the What question, whereas the Group 2 entities (person and corporate body) are related to Who. Group 3 (the subjects of intellectual or artistic endeavor) is associated with other W-questions.

Therefore, the evaluation of LOD in this article concentrates on these four questions and use them as categories of our investigation. We employ the following terminology to be more specific: agents (for Who), events (for What), objects and concepts (for What), dates (for When), and places (for Where). Due to the genericness of the categories, investigating the five categories not only helps us to answer our research questions, but also makes our analysis valuable for research outside the cultural heritage field.

3.3.Data sources

Our study introduces two basic strategies for the selection of datasets/data sources. It examines LOD in (1) RDF/XML with (2) unrestricted look-up access (i.e. no API keys). Although there are other RDF serialisation formats, RDF/XML is the only commonly available one for all the data sources described below.1010 On top of the technical setup, we consider popularity (through literature [8,16,36,46]), data volume, coverage, and actual linkages for the selection. The aforementioned LOD cloud is also taken into account, as one of the comprehensive visualisations of LOD networks. Consequently, the following nine data sources which include significant content for the cultural heritage and DH are chosen for examination: (1) Getty vocabularies (ULAN (Union List of Artist Names), AAT (Art & Architecture Thesaurus), and TGN (Thesaurus of Geographic Names)), (2) GeoNames, (3) VIAF, (4) WorldCat FAST, (5) DBpedia, (6) Wikidata, (7) the Library of Congress, (8) BabelNet, and (9) YAGO.

There are two exceptions for the selection criteria. Wikipedia delivers its articles in HTML, but it may be studied as an indicator, because it has a unique position as a global reference on the web inside and outside the LOD context [2,3,30,41]. Indeed, the data in DBpedia and YAGO are derived from Wikipedia.1111 Wikidata has a close tie with Wikipedia project. The other case is Europeana. It provides an alpha version API with a public API key.1212 However, it is one of the most valuable LOD resources in the cultural heritage sector, and therefore, it is included.

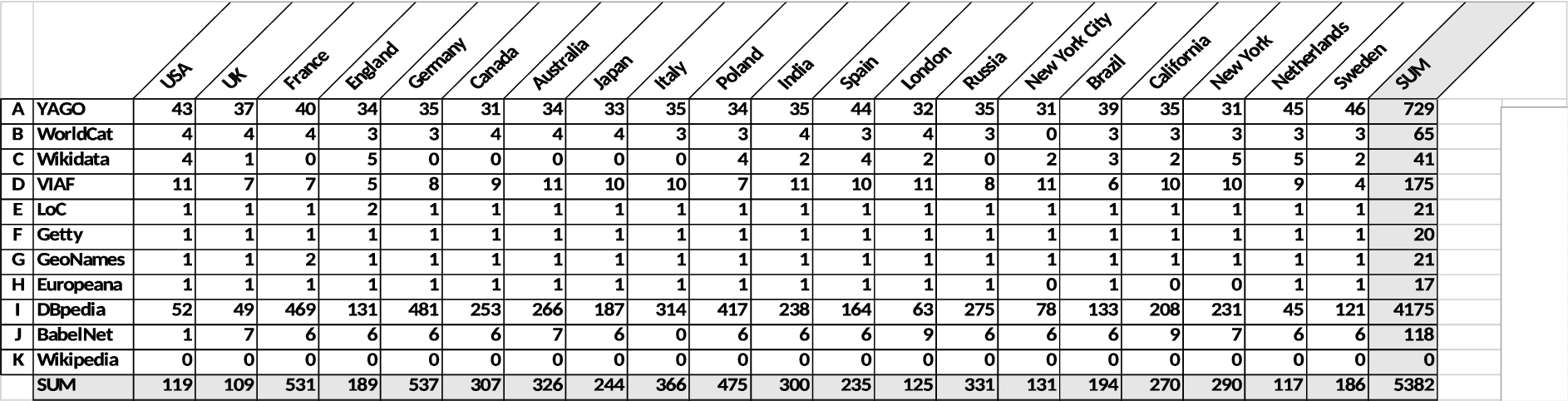

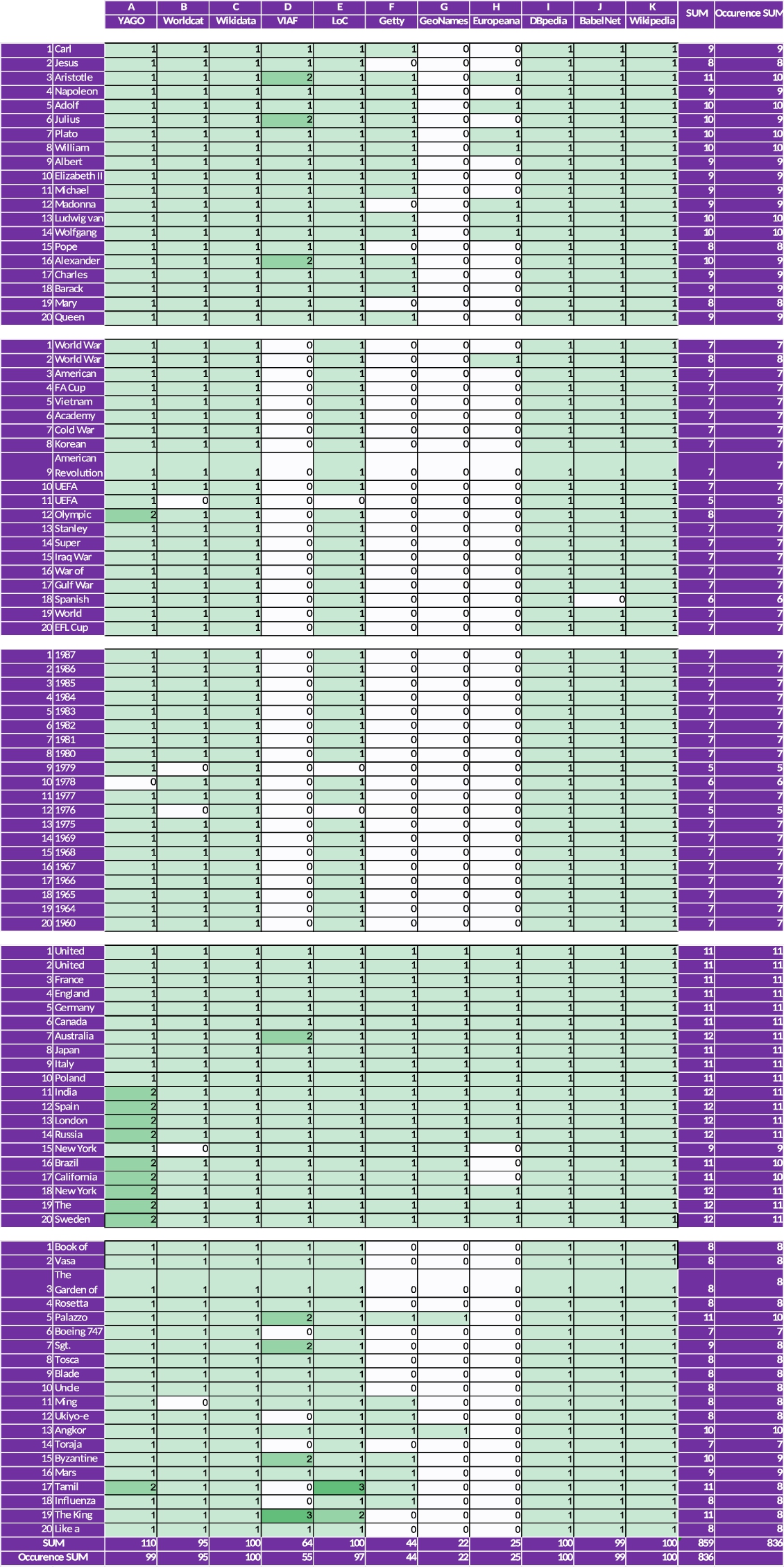

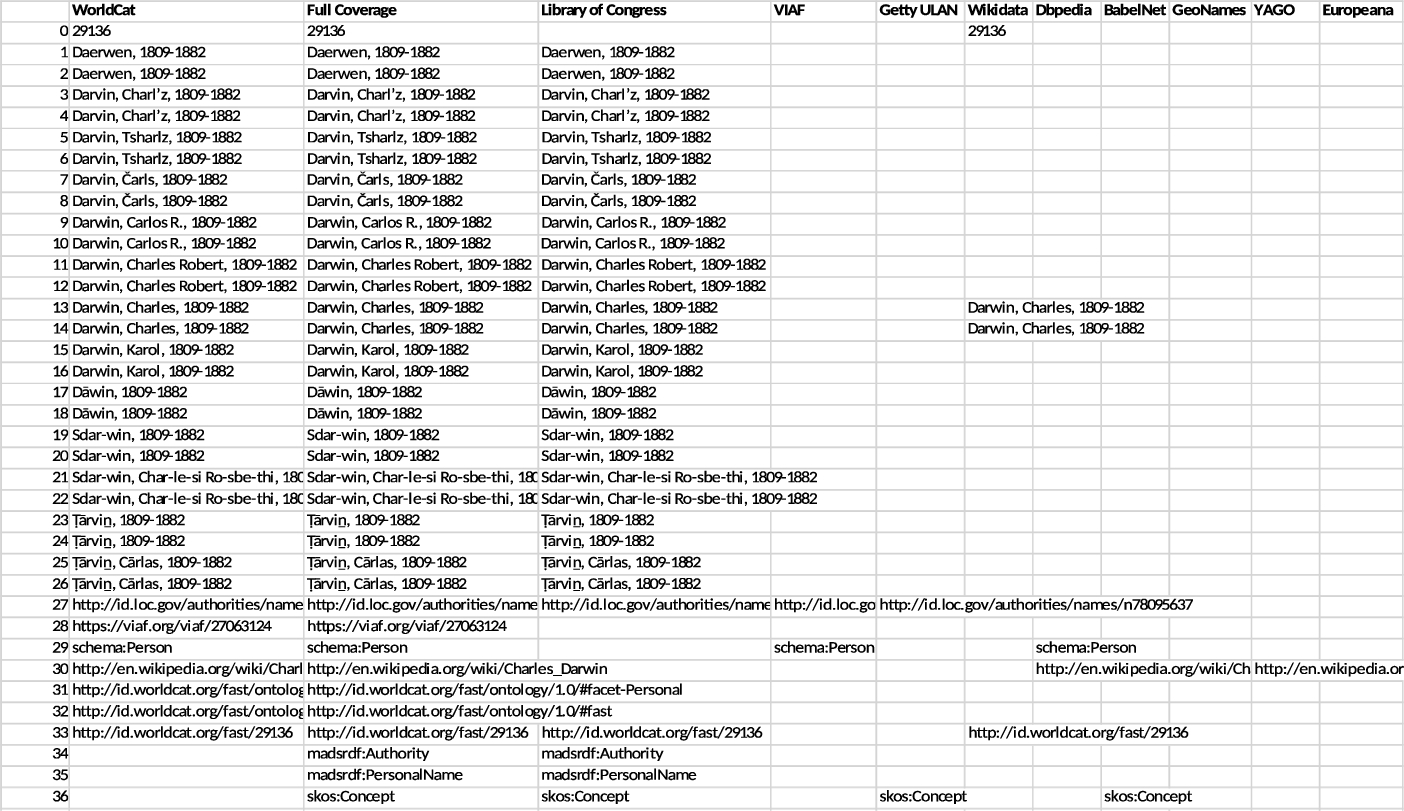

Table 1

100 entities in five categories selected for analysis

| ID | Agents1 | Events2 | Dates | Places3 | Objects and Concepts |

| 1 | Carl Linnaeus | World War II | 1987 | United States | Book of Kells |

| 2 | Jesus | World War I | 1986 | United Kingdom | Vasa (ship) |

| 3 | Aristotle | American Civil War | 1985 | France | The Garden of Earthly Delights (paining) |

| 4 | Napoleon | FA Cup | 1983 | England | Rosetta Stone |

| 5 | Adolf Hitler | Vietnam War | 1980 | Germany | Palazzo Pitti (building) |

| 6 | Julius Caesar | Academy Awards | 1984 | Canada | Boeing 747 |

| 7 | Plato | Cold War | 1982 | Australia | Sgt. Pepper’s Lonely Hearts Club Band (album) |

| 8 | William Shakespeare | Korean War | 1968 | Japan | Tosca (opera) |

| 9 | Albert Einstein | American Revolutionary War | 1979 | Italy | Blade Runner (film) |

| 10 | Elizabeth II | UEFA Champions League | 1969 | Poland | Uncle Tom’s Cabin (novel) |

| 11 | Michael Jackson | UEFA Europa League | 1978 | India | Ming Dynasty |

| 12 | Madonna (entertainer) | Olympic Games | 1967 | Spain | Ukiyo-e (art) |

| 13 | Ludwig van Beethoven | Stanley Cup | 1981 | London | Angkor Wat (building) |

| 14 | Wolfgang Amadeus Mozart | Super Bowl | 1977 | Russia | Toraja (ethnic group) |

| 15 | Pope Benedict XVI | Iraq War | 1976 | New York City | Byzantine Empire |

| 16 | Alexander the Great | War of 1812 | 1975 | Brazil | Mars (planet) |

| 17 | Charles Darwin | Gulf War | 1964 | California | Tamil language |

| 18 | Barack Obama | Spanish Civil War | 1966 | New York | Influenza (disease) |

| 19 | Mary (mother of Jesus) | World Series | 1965 | The Netherlands | The King and I (musical) |

| 20 | Queen Victoria | EFL Cup | 1960 | Sweden | Like a Rolling Stone (song) |

1 The priority is given in the following order: page rank, 2Drank (24 languages), and page rank (female).

2 International events are prioritised, thus a couple of specific events such as US censuses are removed.

3 Top 20 places are extracted from the general list.

As this study deploys a qualitative analysis, a manageable level of data sampling is considered. It selects twenty representative instances/entities from five categories defined in the Section 3.2 (Table 1), resulting in 100 entities in total.1313 In order to objectively and systematically select the most relevant entities, we consulted the “Wikipedia most referenced articles”1414 (2011) for the top 20 places and dates, whereas a scientific article about the interaction of top people in Wikipedia is used for the 20 agents [17]. In addition, the top 20 events are retrieved by a SPARQL query from the EventKG endpoint1515 as follows:

PREFIX eventKG-s: <http://eventKG.l3s.uni-hannover.de/schema/>

PREFIX eventKG-g: <http://eventKG.l3s.uni-hannover.de/graph/>

PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>

PREFIX sem: <http://semanticweb.cs.vu.nl/2009/11/sem/>

PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>

PREFIX owl: <http://www.w3.org/2002/07/owl#>

PREFIX dbr: <http://dbpedia.org/resource/>

SELECT ?dbp ?links {

?event rdf:type sem:Event .

GRAPH eventKG-g:dbpedia_en { ?event owl:sameAs ?dbp . } .

{

SELECT ?event (SUM(?link_count) AS ?links) WHERE {

?relation rdf:type eventKG-s:Relation .

?relation rdf:object ?event .

GRAPH eventKG-g:wikipedia_en { ?relation eventKG-s:links ?link_count . }.

} GROUP BY ?event

}

} ORDER BY DESC(?links)

LIMIT 30

It is not trivial to nominate 20 objects and concepts, because cultural heritage and DH cover an extremely broad field. In fact, there are countless numbers of material entities such as museum objects and buildings. Moreover, millions of archaeological objects are even unnamed. Indeed, many object entities are not globally and uniquely identifiable, because they have not (yet) been created in the global references. As such, it is much more challenging to implement entity linking for those entities. Nevertheless, we manually selected 20 entities from the featured articles of Wikipedia.1616 They aim to represent a wide range of chronological, geographical, and thematic diversity.1717

The actual number of entities analysed is 836 (859 occurrences), since some sources do not have the entities the others have. Full details of the entity coverage per data source are provided in Appendix A. Statistically speaking, in case of missing entities, they are included in the calculation and the data values are counted as null.1818 In addition, there are double identity/occurrence (or a kind of “duplicate”1919) in some sources. The double identities are consolidated as one identity.2020 When an entity lookup is not accessible for technical reasons, the data is included in the statistics as a zero value.2121

In practice, it is not feasible to fully automate the analysis process. In order to properly document the data quality, it is required to search, identify, and verify the same entity across 11 data sources. The quality of each entity needs to be manually double-checked. The main problem of our analysis is semantic disambiguation. It is even not always possible to accurately find an entity. For instance, the challenges of disambiguation and entity matching across multiple LOD sources are presented by Farag [19]. In our case, three reasons are worth mentioning: (a) the lack of cross linking between data sources makes it hard to find all available entities, (b) the entities are confusingly organised and hidden from the mainstream contents, especially in aggregated LOD, and (c) the search functionalities on the website of the data sources may have limited capacity and have not been optimised. In these cases, lookups are executed on a best-effort basis.2222 Another justification of our manual evaluation is the lack of gold standard. In fact, the research on the LOD quality in digital libraries requires manual reviews for several metrics [8].

3.4.Analysis methodologies

In this study, we conduct both qualitative and quantitative analysis. As for the qualitative approach, this paper presents some examples that are found during the manual inspection of LOD instances. As for the quantitative approach, we generate chord diagrams in R2323 to examine the basic flow of incoming and outgoing links within the 11 data sources. We deploy Data to Viz, based on the circlize package.2424 For the creation of traversal maps, we import matrix data from spreadsheets to R and generate network diagrams with igraph2525 packages. In addition, we calculate the amount and percentage of links and provide different views on the quality. Moreover, a basic network analysis is also conducted with R to objectively evaluate the characteristics of the small LOD network. It turns out that this approach is useful, because Guéret et al. [22] subsequently proposed a linking quality method with some of the network metrics we use in the R analysis.

Furthermore, this paper also analyses other data content (such as literals) in addition to the links. This is important, as we cannot obtain a full picture of link quality without studying the content of the link destination. In an RDF graph, there can be three types of nodes: IRIs,2626 literals, and blank nodes.2727 As the blank nodes are not heavily used in our target datasets and add extra complexity, we limit ourselves to literals. For this purpose, first we simply extend our calculation to check the use of four W3C standardised properties, mainly for literals. The amount and percentage of rdfs:label, rdf:type, skos:prefLabel, and skos:altLabel are calculated. In addition, the total amount of content associated with rdf:resource and rdf:about is assessed. These two properties are at the centre of RDF/XML and are used to describe and connect resources. Although there are other important properties than the six properties described above, they are the most fundamental and frequently used properties to describe entities. These statistics allow us to obtain basic holistic views on the data content. However, they are not sufficient to draw conclusions.

The challenge is how to objectively compare and evaluate the content quality of different LOD sources. The major problems are: (a) there is no standard theory about what is regarded as high quality, and (b) it is hard to evaluate the quality of semantics. In terms of (a), for example, the number of links (edges) or labels/literals (strings) alone would not be able to indicate the data quality. In terms of (b), the same hyperlinks and labels can be found in different context. For example, the link “http://www.example.com” can be found in skos:exactMatch or dcterms:isPartOf, while the string “Book of Kells” can be in skos:prefLabel or rdfs:label. Both of these cases carry the same information, but there is no easy way to assess the quality of semantics of the properties. This is especially the case when proprietary properties are used. It is practically impossible to judge the quality, due to the nature of freedom in LOD. Moreover, we cannot give any preference to a hyperlink or literal as the object of a property.

To minimise the impact of a biased evaluation, Python scripts2828 are developed to supplement our analyses. They compare the overlap of data content in each LOD source without any interpretations/assumptions. Technically this means that the scripts analyse the objects of the main entity with string matching, and calculate the amount of unique content. The objects include both edges and literals, where URIs are considered as string values to be compared. In other words, the semantics of the properties are not evaluated. Although this method may not be the most accurate way to measure the content quality, it allows us to perform systematic and automatic measurements. It provides us with a sense of the amount of information and the coverage or diversity of data contents.

It is anticipated that a broad mix of above-mentioned methods can provide new insights into the linking quality at different levels.

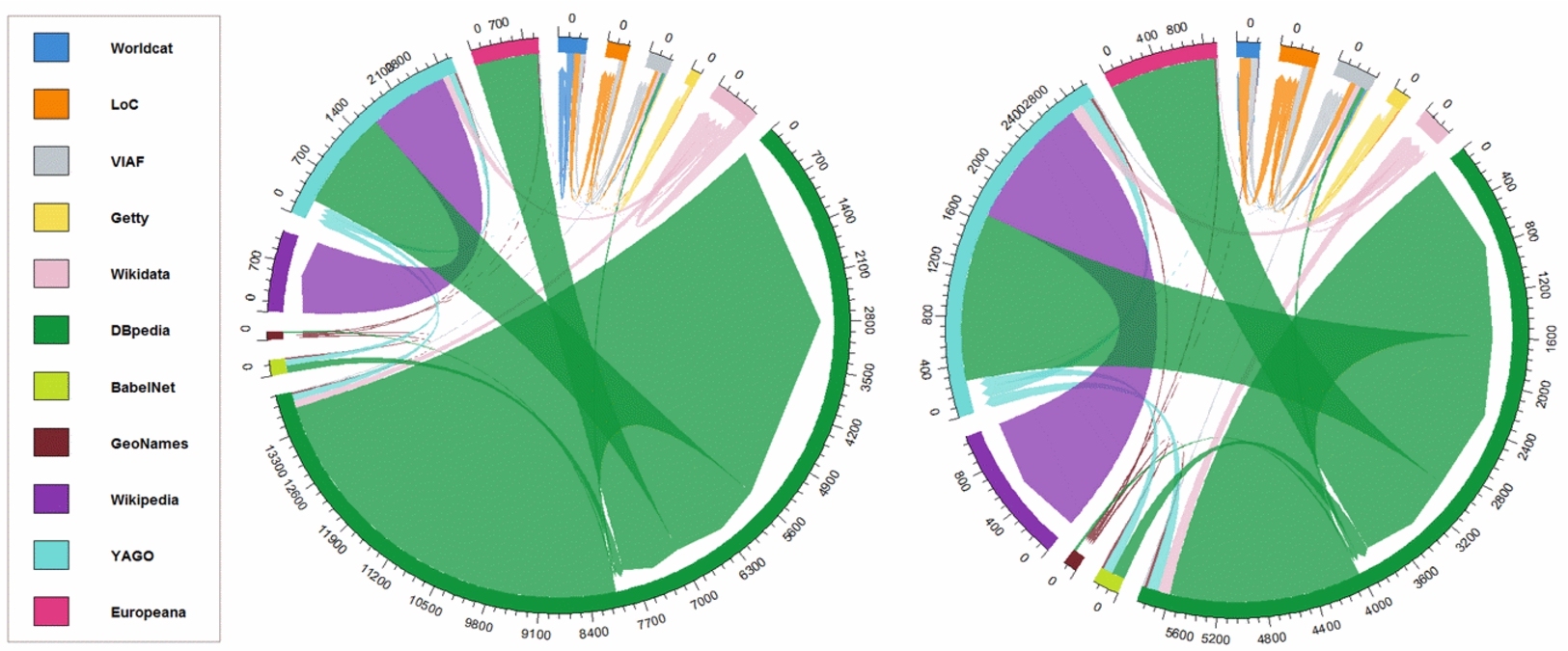

Fig. 1.

Chord diagram illustrating the amount of link flows between 11 data sources (left) and after removing inverse links (right).

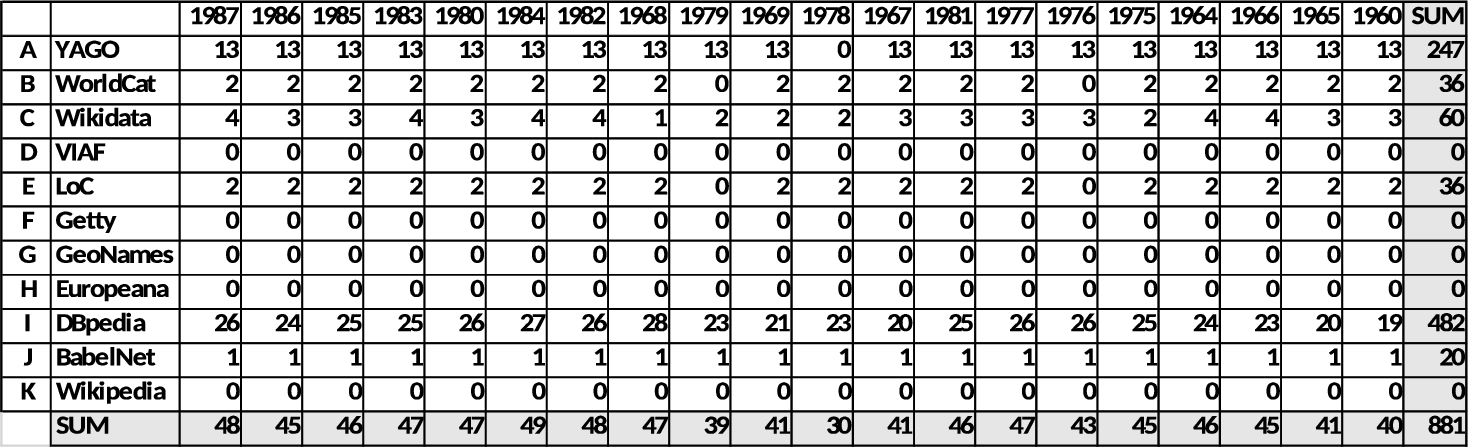

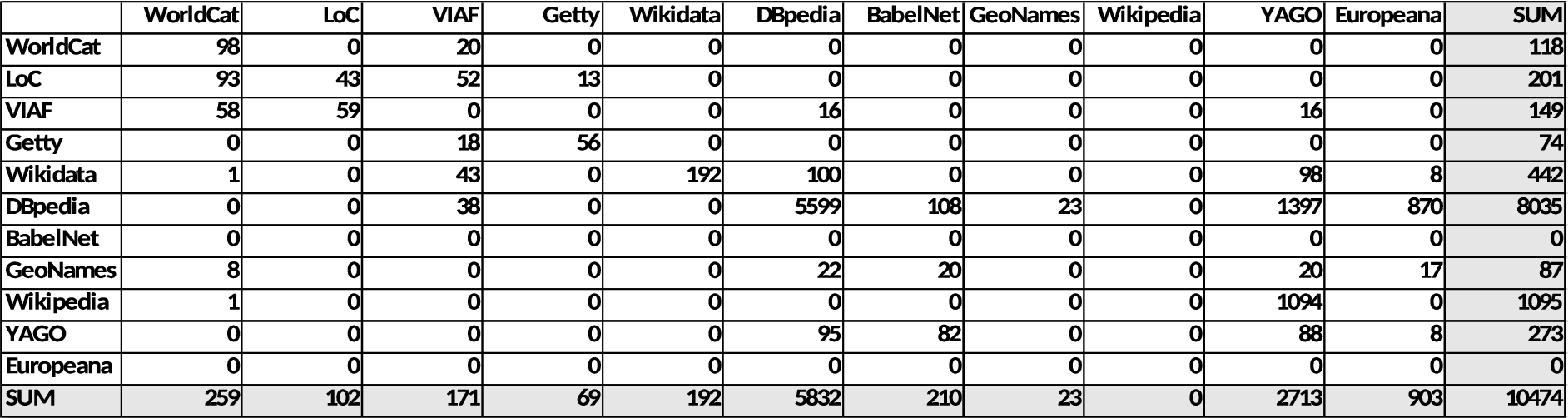

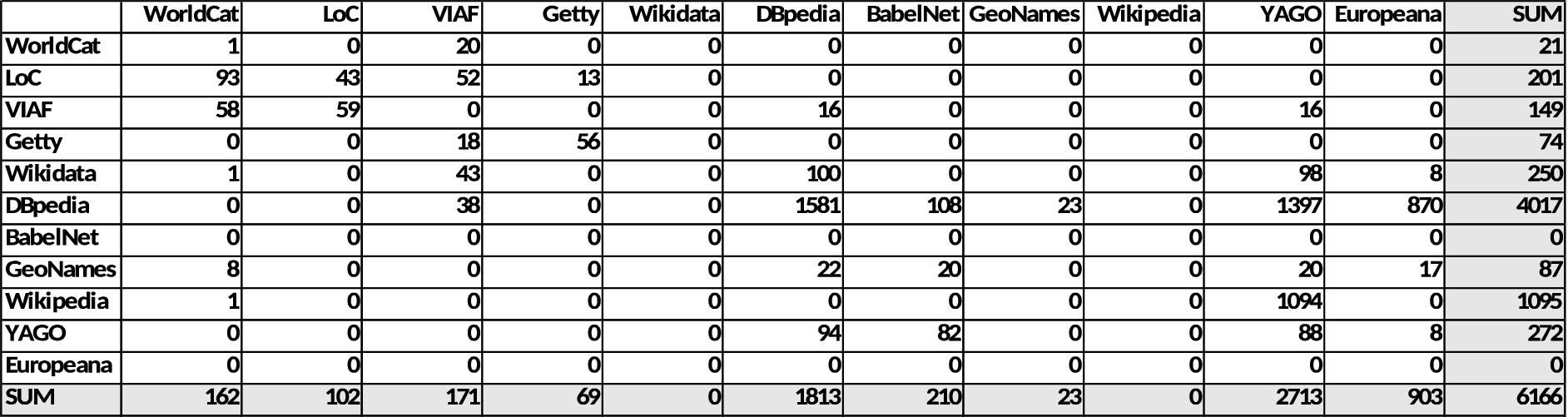

Table 2

The total and average number of outgoing links (to the 11 data sources) held by the data sources

| ID | A | B | C | D | E | F | G | H | I | J | K | Total |

| Source | YAGO | WorldCat | Wikidata | VIAF | Library of Congress | Getty | GeoNames | Europeana | DBpedia | BabelNet | Wikipedia | |

| Total | 2713 | 259 | 192 | 171 | 102 | 69 | 23 | 903 | 5832 | 210 | 0 | 10474 |

| Average | 27.4 | 2.7 | 1.9 | 3.1 | 1.1 | 1.6 | 1.0 | 36.1 | 58.3 | 2.1 | 0.0 | 12.5 |

4.Linked open data analysis

4.1.Overall traversal map

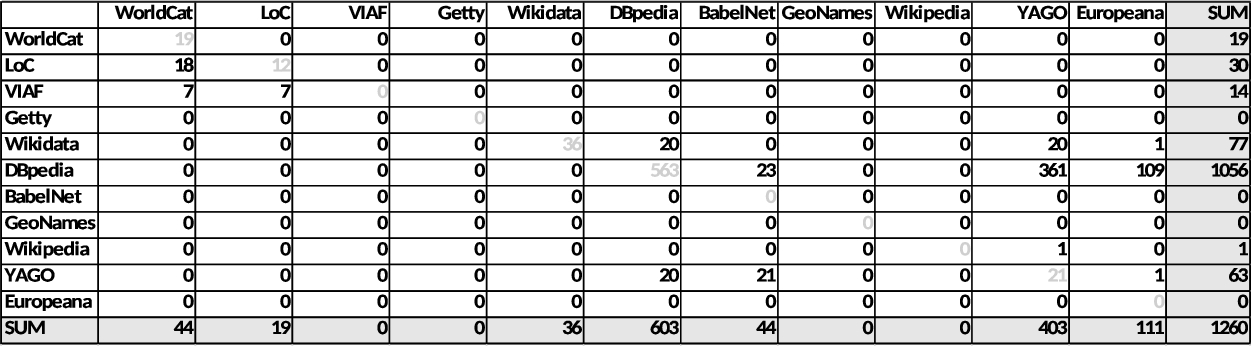

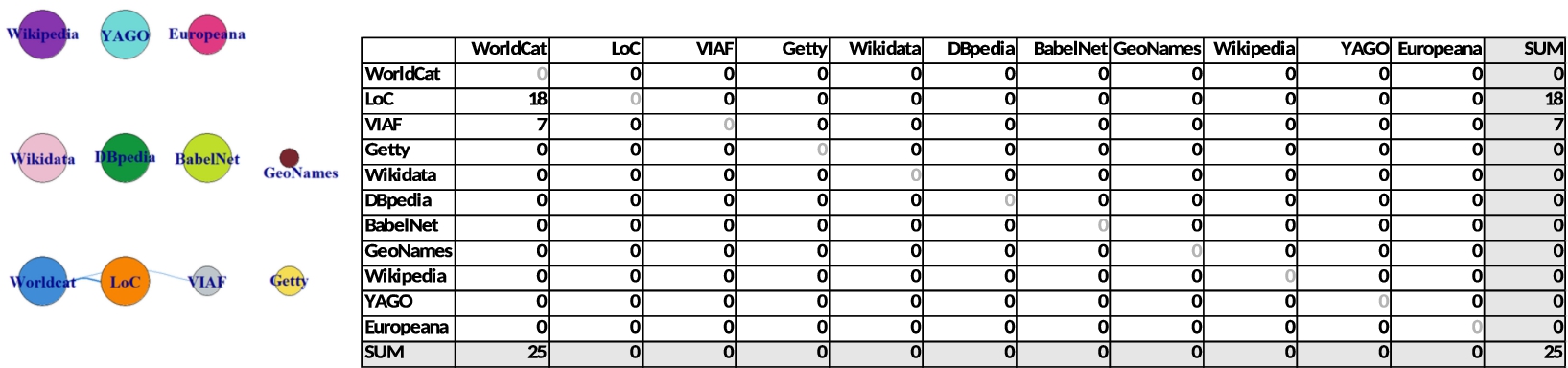

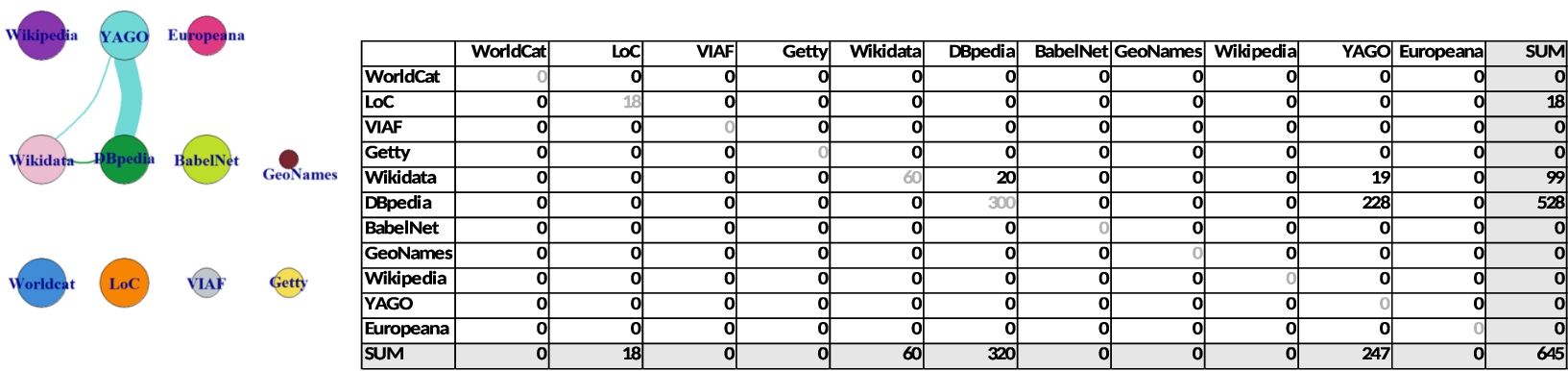

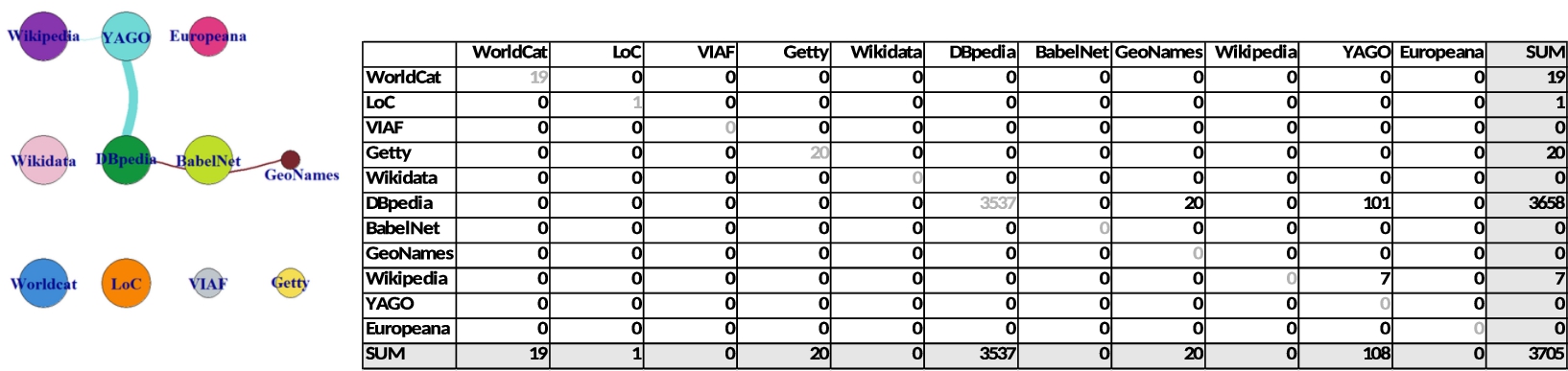

The first analysis starts with chord diagrams. Figure 1 primarily focuses on the number of links and their origins and destinations within the 11 data sources. The source data which produce Fig. 1 is found in Appendix B.

The total number of links amounts to 10474. The dominance of DBpedia is obvious, occupying over 66.2% of the entire linkages (Fig. 1 left). It is also noticeable that self-links significantly contribute to the volume of the links. YAGO supplies a substantial amount of links to DBpedia and Wikipedia. This results in the influential position of Wikipedia (5.2%), although it is not LOD. Surprisingly, Europeana comes fourth, despite the significantly limited amount of available entities (Appendix A). WorldCat, the Library of Congress, and VIAF somewhat share similar numbers of links. The outgoing and incoming links are unbalanced for Europeana.

From these numbers we can derive the following: the average number of links in all sources is 952.2, whereas the medians are 2.1 and 149 for both outgoing and incoming links. In fact, the amount of outgoing hyperlinks found in each source is moderate, given the entire size of those datasets (i.e. millions of triples); on average it is mostly under four links per entity (Table 2). These small figures are alarming, because this survey focuses on well-known sources often used for NEL for the cultural heritage datasets. It is clear that there is a great deal of room for improvement. Nevertheless, DBpedia, Europeana, and YAGO stand out, showing more promising quality for LOD with high number of links per entity.

When inverse traversals are removed from the statistics, the situation looks largely different (Fig. 1 right). The sum of the links decreases to 6166. DBpedia loses an ample number of links (47.3%), whereas YAGO gains most (24.2%). Such a dramatic shift is an evidence of abundance of inverse properties described in DBpedia. If we scrutinise the data closely, we notice that this is mostly due to the inverse use of rdfs:seeAlso in DBpedia. For instance, the entity of Sweden contains:

dbr:Lund rdfs:seeAlso dbr:Sweden .

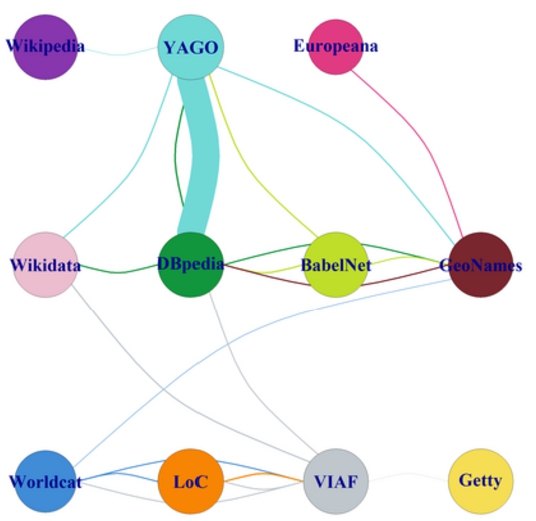

Figure 2 is the simplified overall “traversal map” for all data sources. It is a network diagram, illustrating all possible paths between the 11 data sources. However, since we observe a very high volume of links in DBpedia, YAGO, and Europeana, volumes and self-links/loops (i.e. links pointing to the same data source/domain) are not included in this figure. Thus, the diagram concentrates on the routes of traversals (i.e., the users’ mobility and traversability).

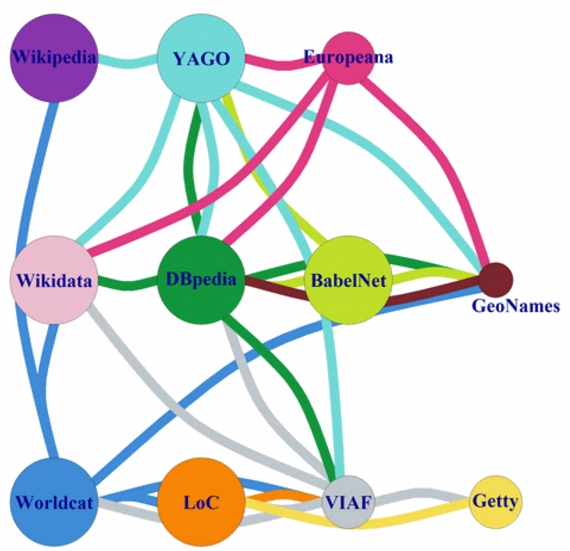

Fig. 2.

The overall “traversal map” shows available links/paths through four standardised properties between the 100 entities in 11 data sources (after self-links to the same domain is removed).29

It29 is clear that the traversing routes are not equally available across the data sources, and thus, it may be hard to navigate the LOD network. It is found that YAGO delivers four connections as well as one to Wikipedia. The next contenders are Europeana and DBpedia with four outgoing connections. In contrast, Wikidata has no outgoing connections.3030 Whilst GeoNames only links to DBpedia, the Library of Congress and Getty have one channel. With regard to incoming connections, GeoNames is an attractive destination to which five sources refer. Wikidata and DBpedia are also a centre of gravity, inviting five connections. On the other hand, Europeana and BabelNet receive no links. Whereas the lack of incoming links to BabelNet may be surprising, in Europeana’s case it is not, because it is not equipped with a truly public lookup. This would mean that the generation of LOD dump and/or SPARQL endpoint may not be sufficient. It is best to publicly declare entities that are resolvable via lookups without access restrictions. WorldCat and Getty are both only reached by VIAF.

It is particularly remarkable that reciprocal links are quite rare. There are several nodes/vertices which can be reached via only particular edge(s)/path(s). This implies that network is not desirably populated by the standard properties, and that the users would not be able to efficiently obtain information through these properties. They need to follow the best paths to retrieve the identical or closely matching information. It is possible for data publishers to use other RDF properties, but it would be an irregular practice.

Idrissou et al. [26] stress that a full mesh (fully connected network) has the highest quality in their link quality metrics. When they compare different structures (e.g. ring, line, star, mesh, tree), the more a network resembles a fully connected graph, the higher the quality of the links in the network for all metrics (bridge, diameter, closure). One might argue that a full mesh is not necessarily a prerequisite of high data quality. This may be true for much LOD, however, let us remember that we focus on the most well-known data sources that many other LOD tend to link to. Therefore, it helps the connectivity of LOD on the web as a whole. Guéret et al. [22] use clustering coefficient and owl:sameAs chains as their criteria for high quality.

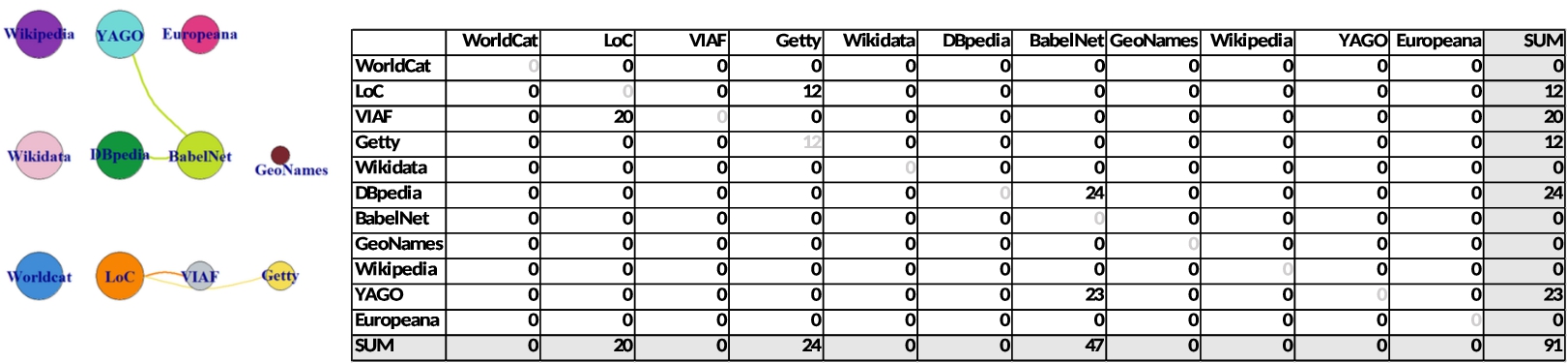

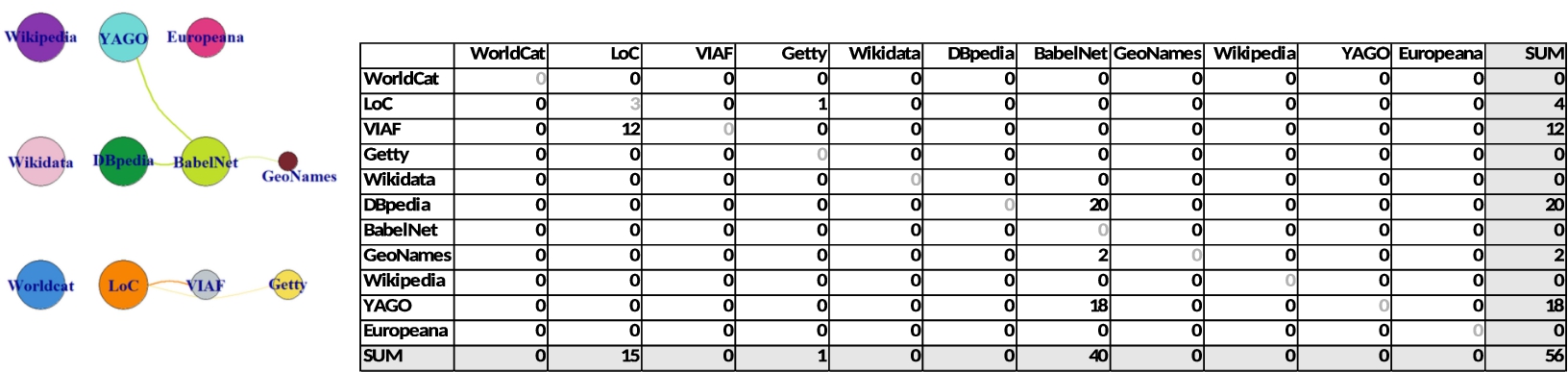

Figure 3 depicts traversal maps faceted by four link types. From now on, inverse properties are included but loops are excluded for the traversal map visualisation. Thus, the distortion of the “route diagram” that we avoided in Fig. 2 is minimal. However, the rest of the statistics (matrix data and in the texts) include both inverse properties and loops, so that they reflect the actual situation.

Although we decided to avoid discussions on interpretations of link semantics, there is at least a clear difference between owl:sameAs (as well as skos:exactMatch and schema:sameAs) and rdfs:seeAlso. It can be clearly seen that Europeana, the Library of Congress, and BabelNet are the only data publishers using skos:exactMatch. rdf:seeAlso is used mostly by YAGO, while GeoNames and WorldCat are also visible. However, the proportions of owl:sameAs and schema:sameAs are higher. In particular, Europeana and YAGO provide a large amount of connections to either DBpedia or Wikipedia. We also realise that WorldCat and VIAF opt more for schema:sameAs. In general, Fig. 3 suggests that the data creators made different ontological decisions on the choice of standardised properties. We will explore this further in the following sections.

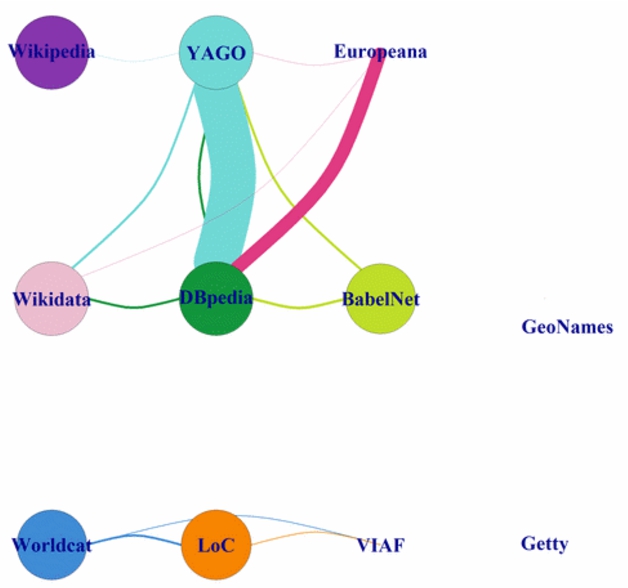

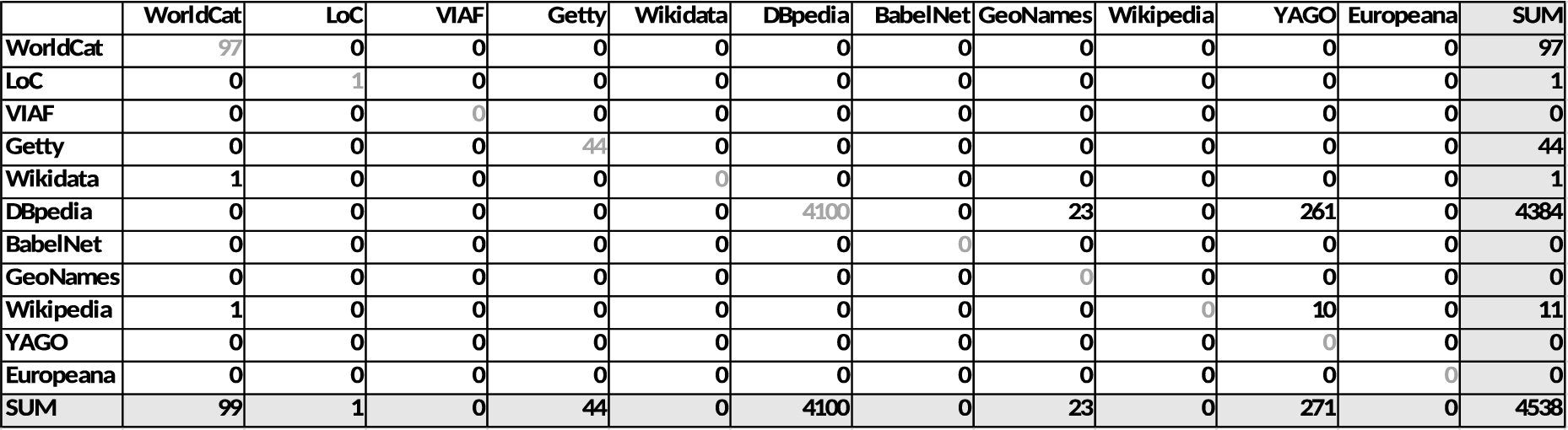

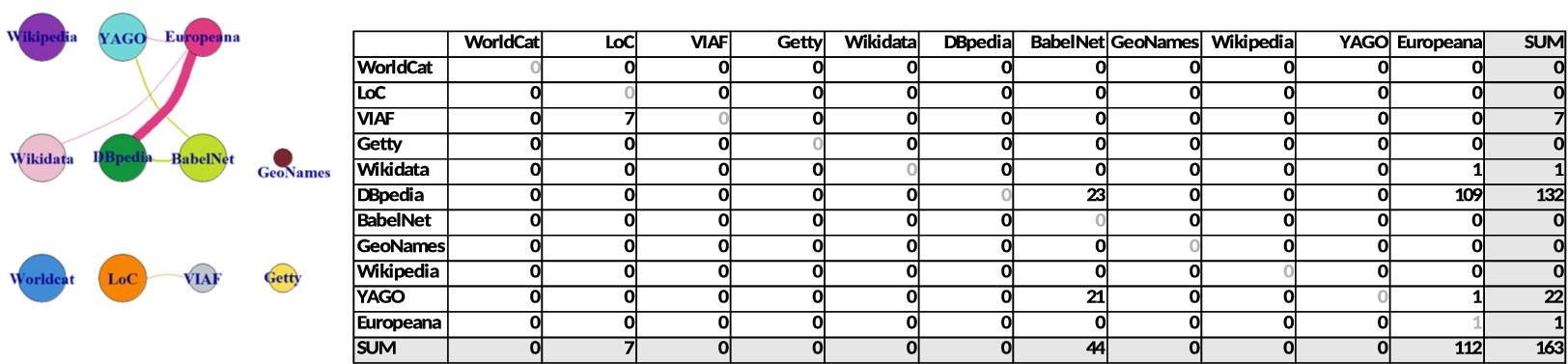

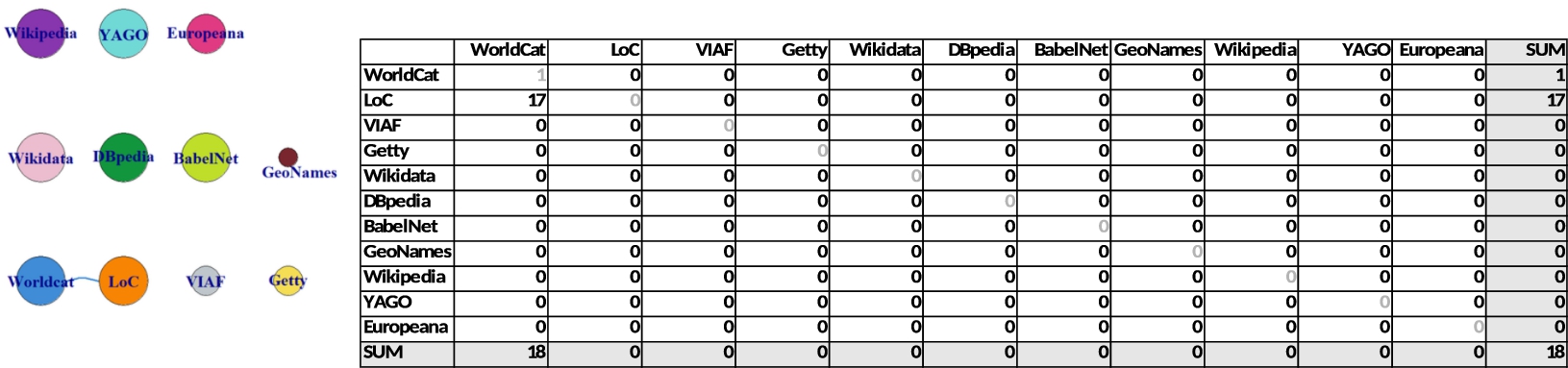

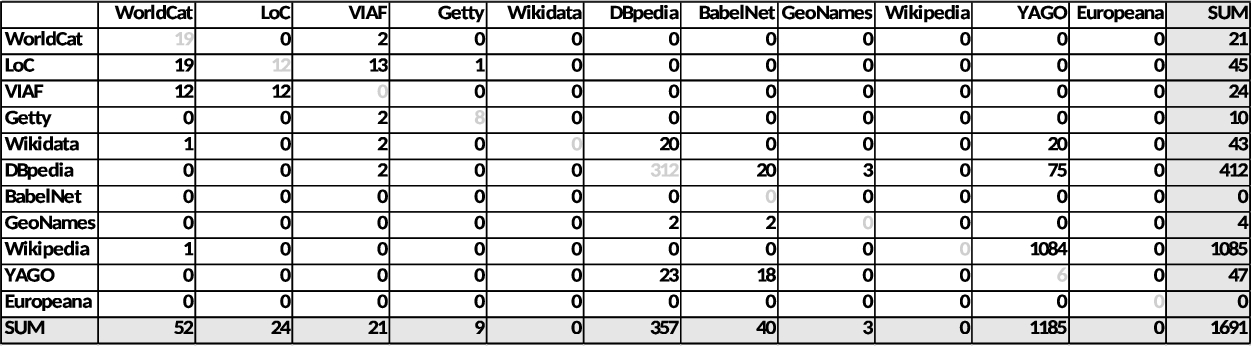

4.2.Agent traversal map

Figure 4 depicts the traversal map for agents. Appendix B includes the source matrix data and the traversal maps for all four properties. In general, agents have much less influence from loops than from other categories, because 72.4% of links are still present after removing recursive links, compared to the overall 42.0%. The most eye-catching result is Europeana. Especially, it uses owl:sameAs to link to DBpedia. In cultural heritage, VIAF plays a valuable role for agents as an aggregation of authority files of national libraries. For instance, it is the only source which offers four outgoing paths. This category has only three sets of nodes that have bilateral links. Therefore, segmentation is visible in the network and truly standardised LOD connectivity is limited.

In Table B3 in Appendix B, the role of DBpedia is expectedly prominent for incoming links, attracting 1555 links (80%). Unlike the outgoing links, Wikidata captures 121 referrals, making it the second highest source. Manual examination found that VIAF had only 72 incoming links, however, it contains more links which connect its entity to data sources outside the 11 sources, than any of the other sources. For instance, only four links with schema:sameAs are recorded for Beethoven. However, the destinations of a further eight links include the national libraries of France, Germany, Japan, Spain, and Sweden.

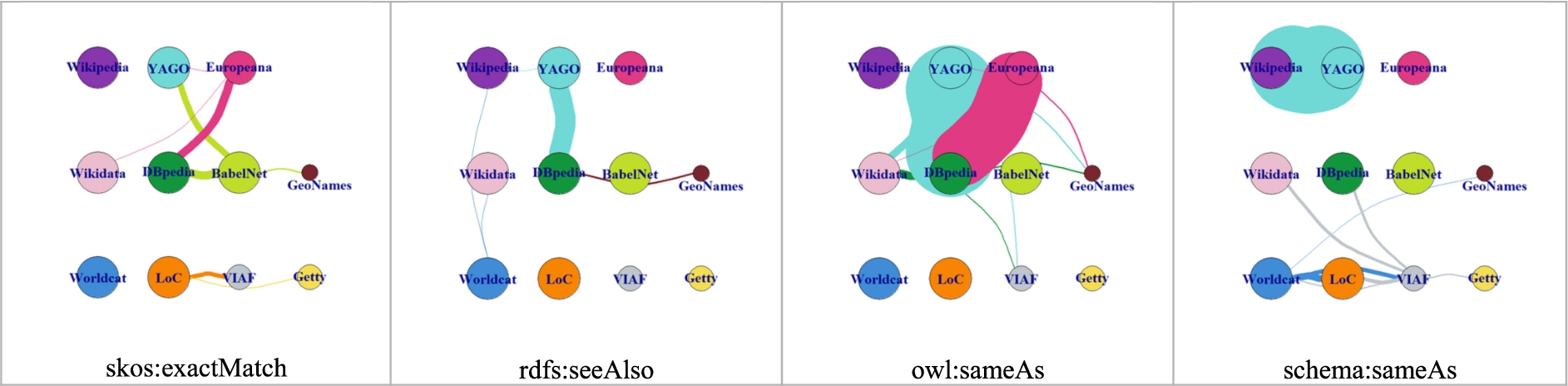

Fig. 3.

The overall traversal map by each standardised property (after removing self-links to the same domain).

Fig. 4.

The overall traversal map for agent entities.

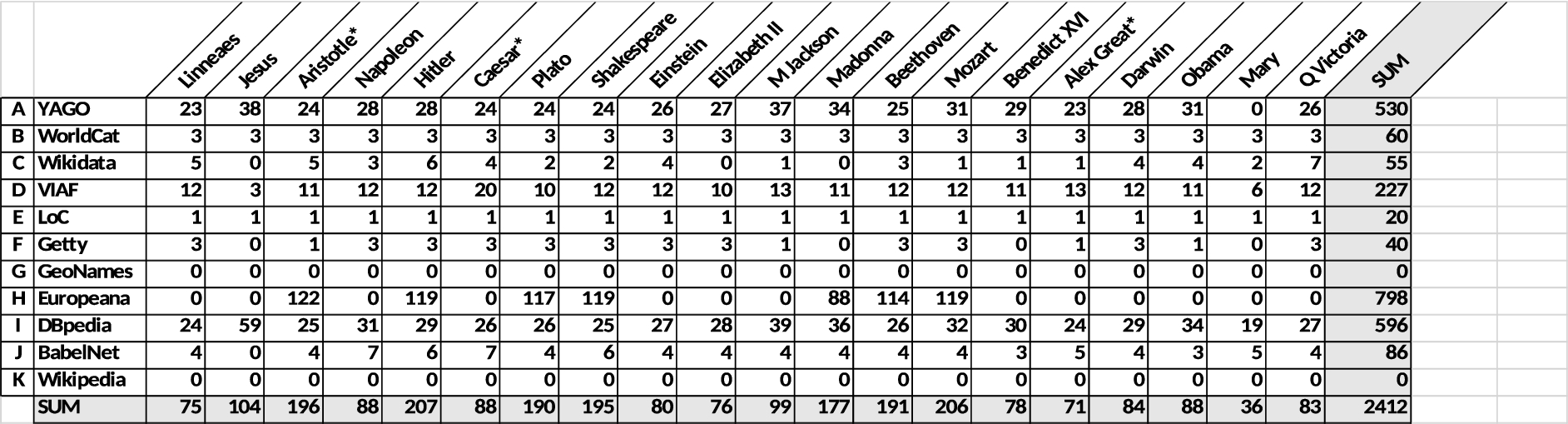

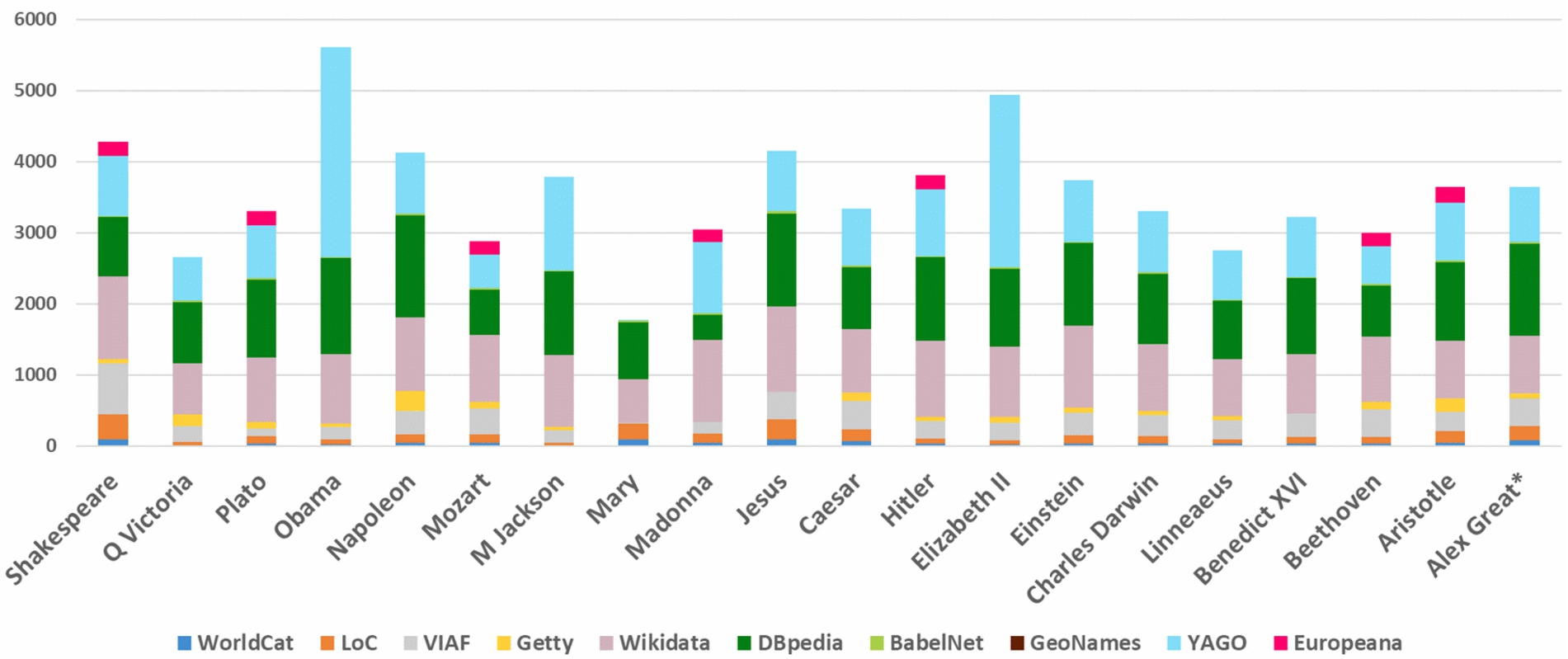

The amount of outgoing links held by 11 data sources in each entity is visualised in Table 3. When comparing the total amount in this table and in Table B3 in Appendix B, we notice that 1945 incoming links are received within the 11 data sources, out of 2412 outgoing links (80% ).3131 Whereas Europeana has 798 outbound links (33%), DBpedia and YAGO follow at 596 and 530 respectively. There is a considerable gap between the highest number of outgoing links across 11 sources (Hitler, 207) and the lowest (Mary, 36). The highest cluster are from Europeana, however, the outgoing links in Europeana are unevenly distributed. Only Aristotle, Hitler, Plato, Shakespeare, Madonna, Beethoven and Mozart are present. This would offer evidence that art and cultural figures are more important for the cultural heritage objects that Europeana deals with than politicians and scientists. DBpedia and YAGO show a similar pattern, mainly due to the tight connections between them. In there, we observe relative popularity for Jesus, Michael Jackson, and Madonna.

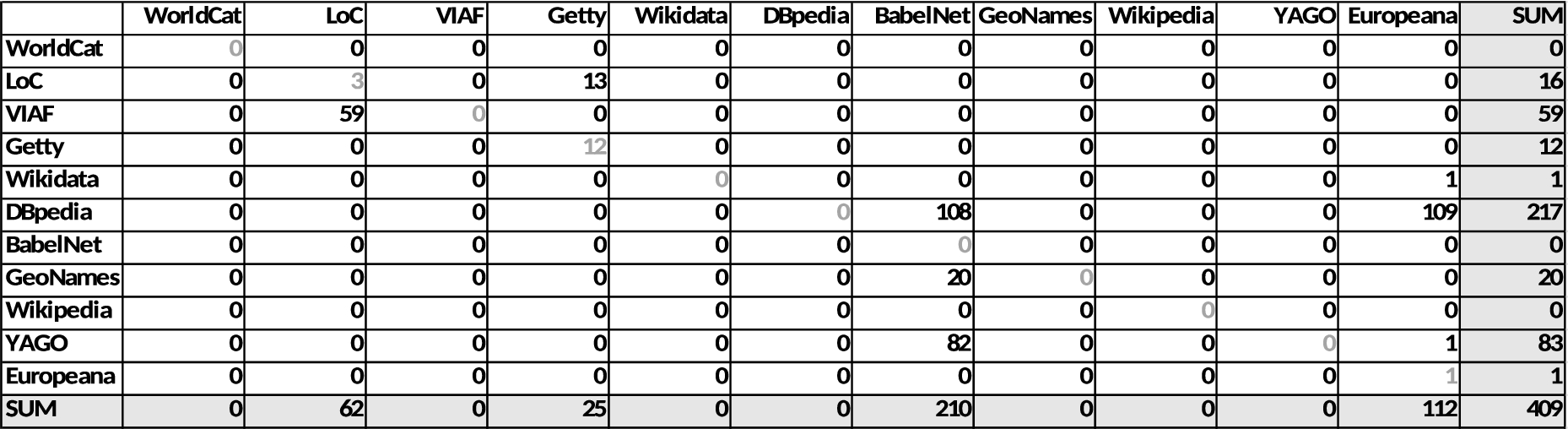

Table 3

The amount of outgoing links that the 11 data sources hold in each agent entity (* means duplicate consolidation)

WorldCat holds exactly three links per entity.3232 One is caused by the description of a new WorldCat identifier via the inverse property of rdf:seeAlso. The other two are schema:sameAs which links to the Library of Congress and VIAF. Similarly, the Library of Congress has exactly one link per entity (skos:exactMatch to VIAF).3333 These two cases suggest evenly distributed and highly normalised RDF content, probably due to systematically generated links between the library sources.

Whilst most data sources cover all 20 agents, Jesus Madonna, Benedict XVI, and Mary are totally missing in Getty vocabularies. Similarly, the number of VIAF links is sharply reduced for Jesus and Mary. This is understandable since Getty ULAN and VIAF are typically orientated toward artists and authors in the context of libraries and museums, and religious figures are harder to be recognised as agent entities. Indeed, Jesus has the lowest number of links for five data sources (Mary for four data sources). As such, it is remarkable that Jesus is relatively high in DBpedia (59 links). It is also interesting that non-artists figures such as Einstein, Elizabeth II, and Obama are found in ULAN.

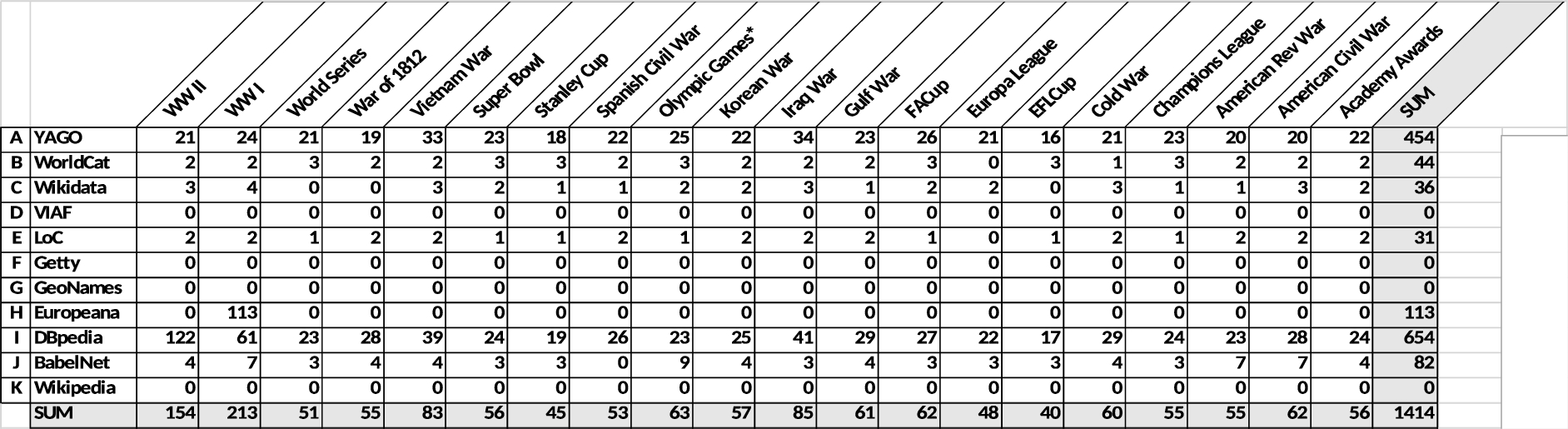

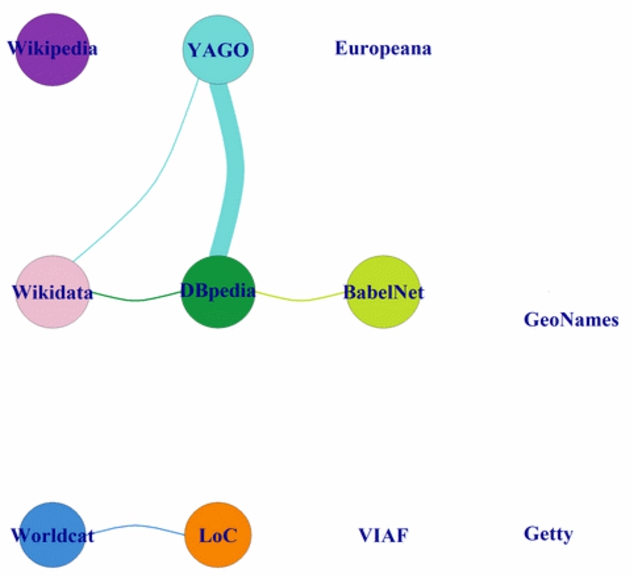

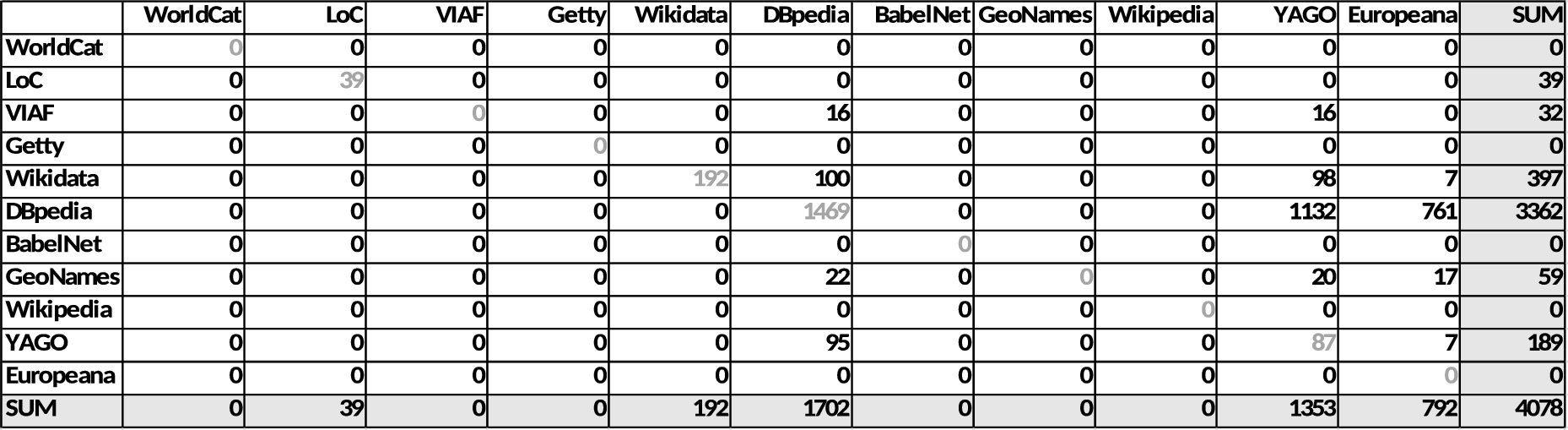

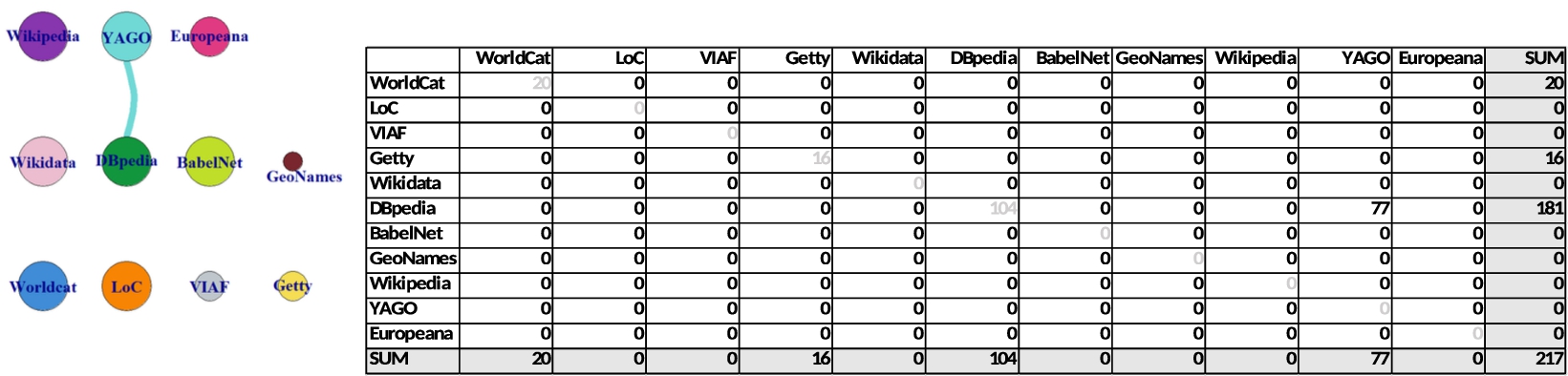

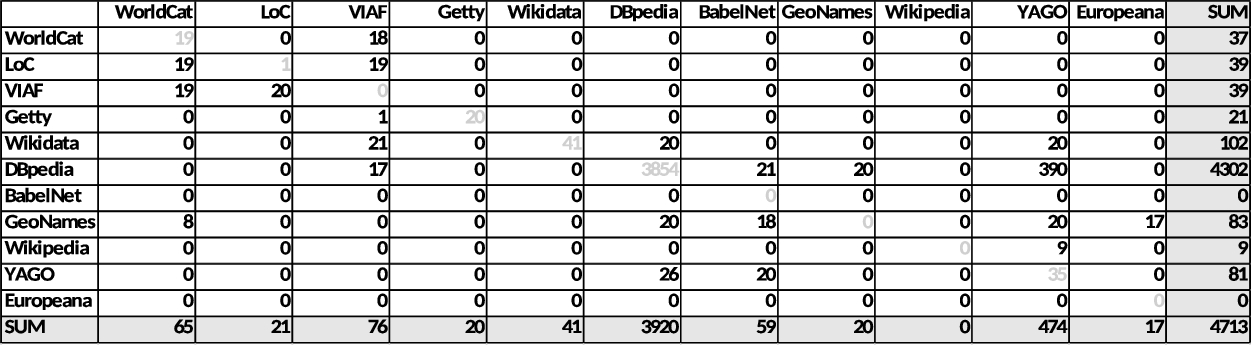

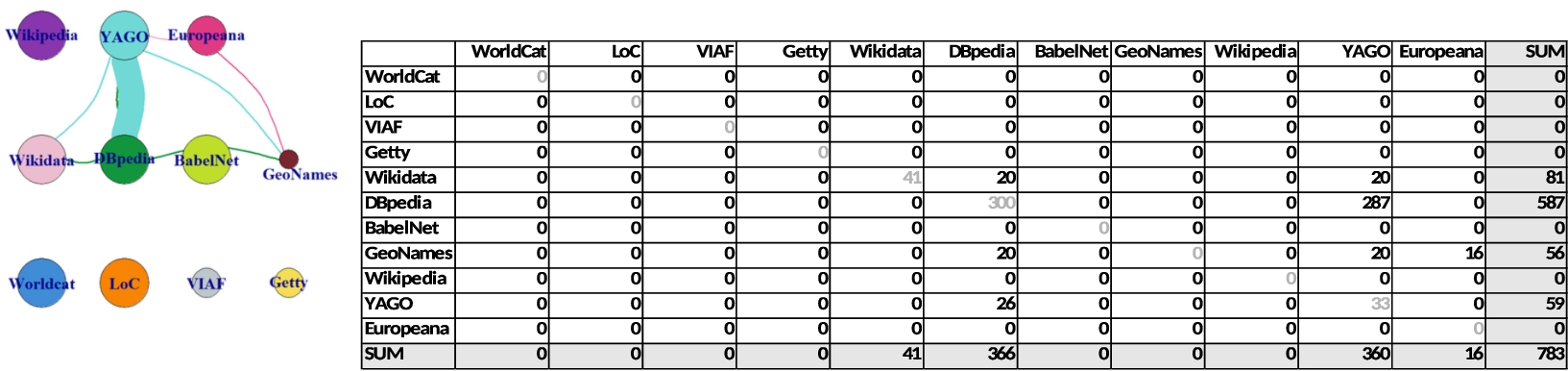

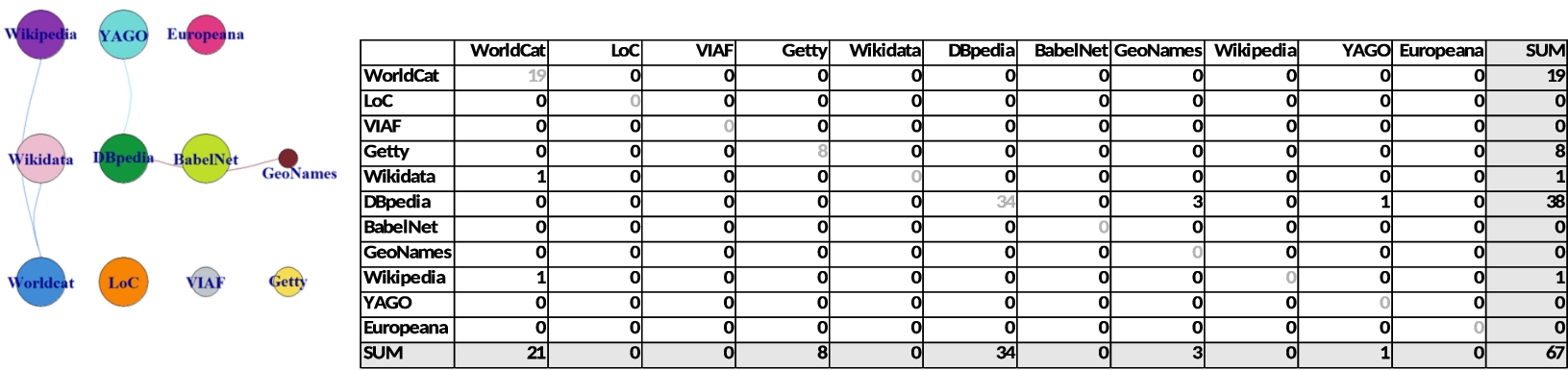

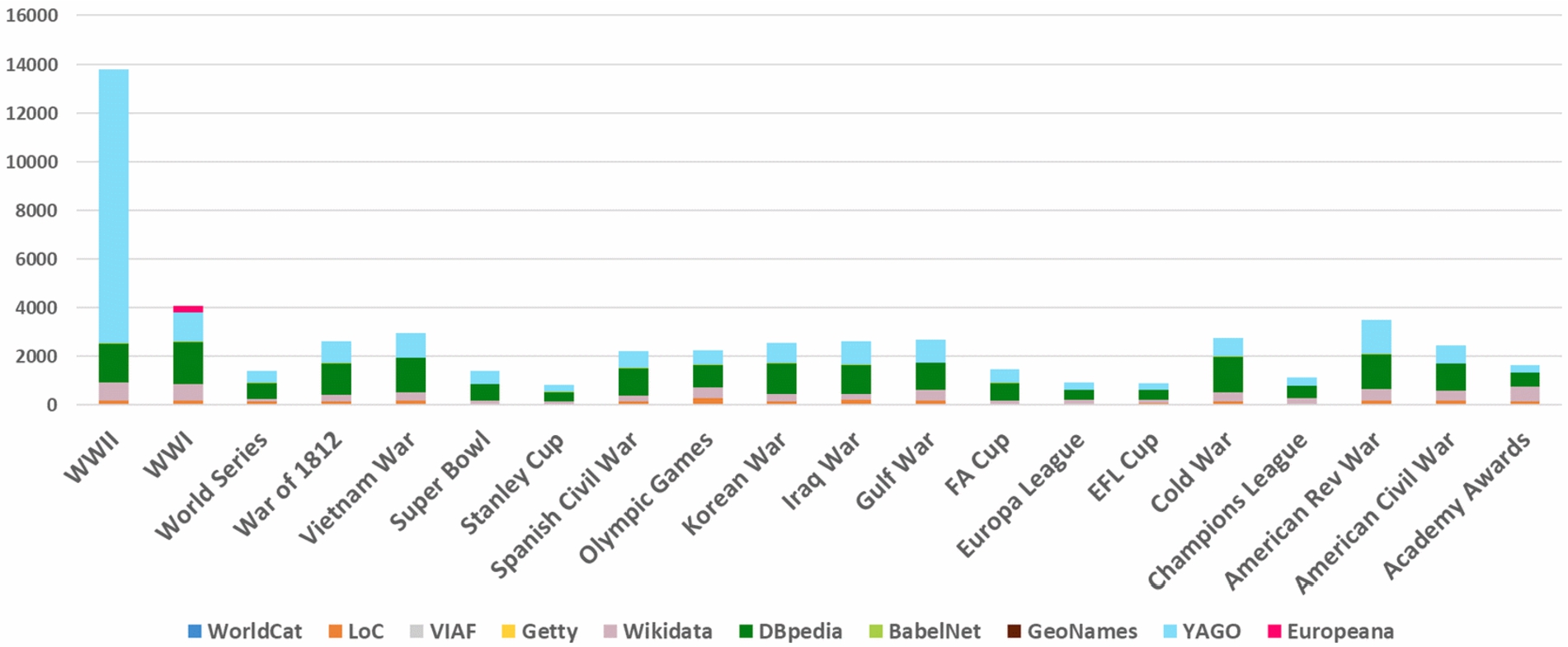

4.3.Events traversal map

Figure 5 clearly illustrates the lack of links. Bilateral links are extremely rare: only between YAGO and DBpedia. As a result, it is not possible, for example, to move from the Library of Congress to Wikidata. This implies that the entry point to a network determines the movement within it. DBpedia contains far more links than other sources. Although Europeana has only one entity in this category (i.e. World War I), it manages to draw a thick line (skos:exactMatch) in the figure (111 links).

Fig. 5.

The overall traversal map for event entities.

In general, events were not found in VIAF during the manual data exploitation, however, it turns out that WorldCat and the Library of Congress refer to it seven times each. For example, the former links to the World Series in French (skos:prefLabel is Séries mondiales (Base-ball) and skos:altLabel is World Series (Base-ball)). Another 13 cases are all sporting events and awkwardly labelled as corporate entity in VIAF. Although those entities may be exceptional cases, they also reveal interesting cataloguing practices (or perhaps errors) by libraries in data modelling or mapping. Whatever the reasons are, we may face challenges in the future to tackle errors and inconsistency for semantic reasoning.

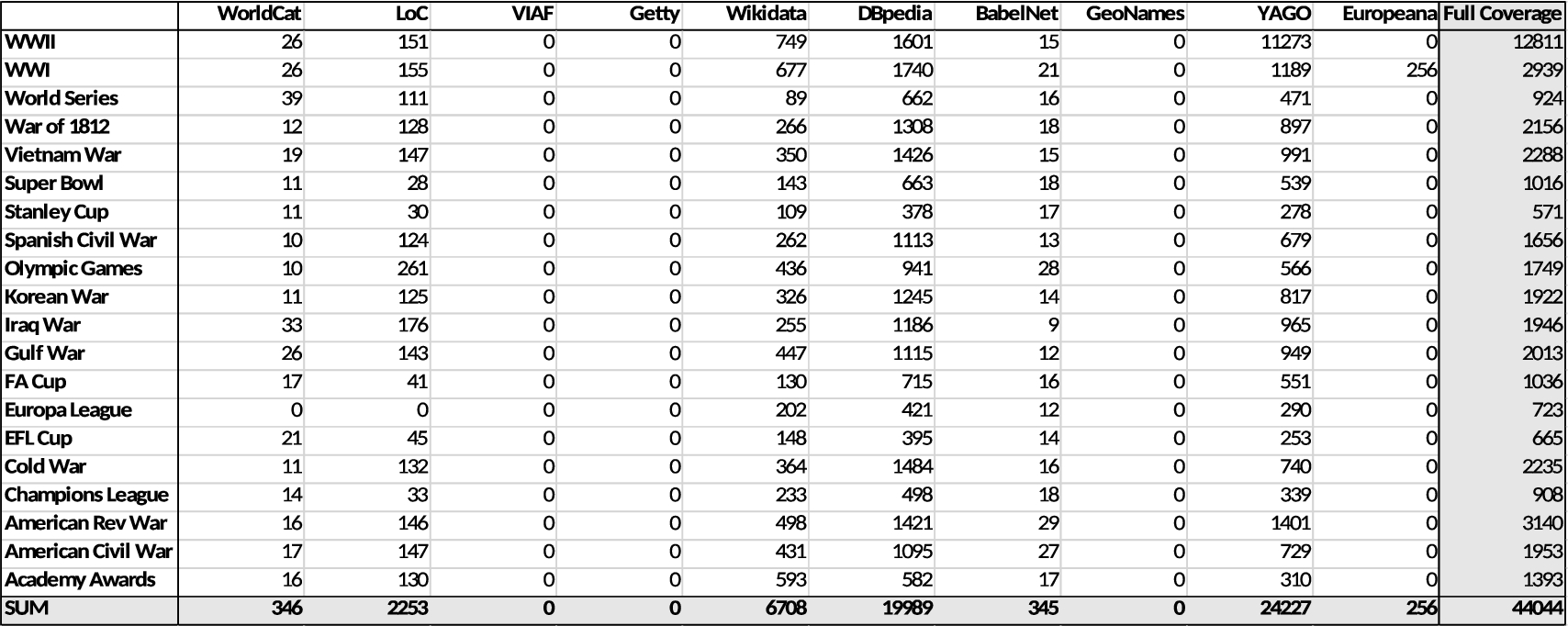

In terms of each entity (Table 4), the most appealing entity is World War II, followed by World War I and the Iraq War. Europeana’s contribution to World Wart I is considerable. Although the EFL Cup is the lowest, the gaps between entities are relatively subtle except the top three (i.e., median 49.5, average 57.5).

Table 4

The amount of outgoing links that the 11 data sources hold in each event entity (* means duplicate consolidation)

The principal reason for the prominence of DBpedia for the World War II is rdfs:seeAlso inverse links which include the DBpedia entities of agents (e.g. Winston Churchill), places (e.g. Leipzig), ships (e.g. USS Hornet), and the lists and articles derived from Wikipedia (e.g. tanks in the German Army, history of propaganda). In this case it is advantageous for the users to discover and access detailed information about the war. However, as RDF representation is not guaranteed for rdfs:seeAlso, this situation would hamper predicting the source of link destination and decreasing the possibility of efficient and/or automatic data processing.

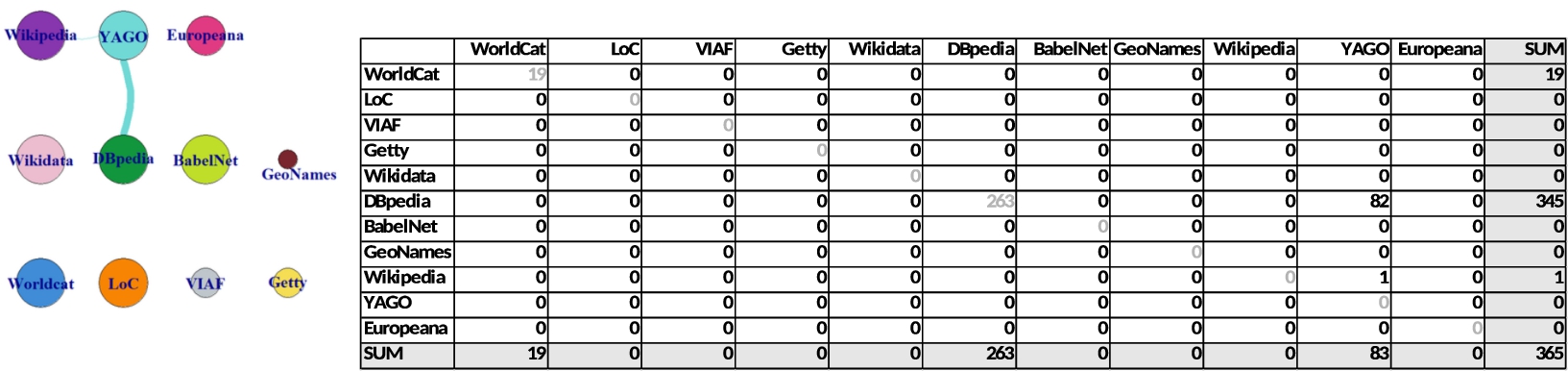

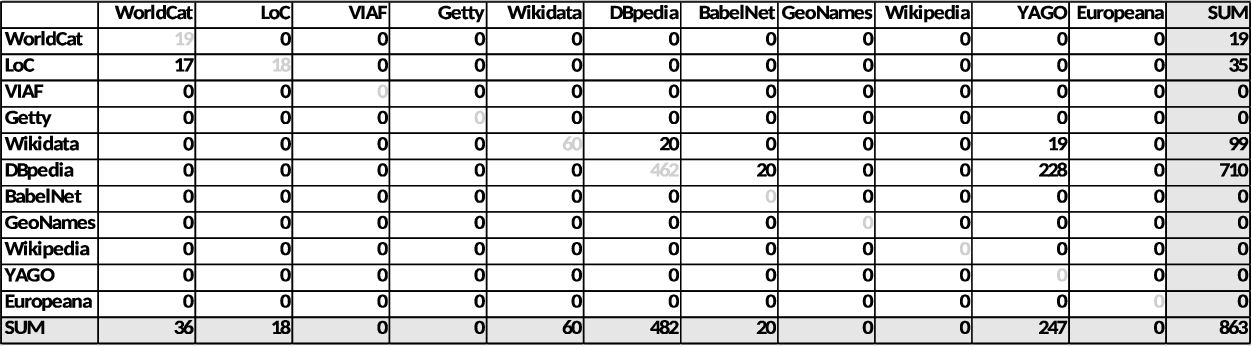

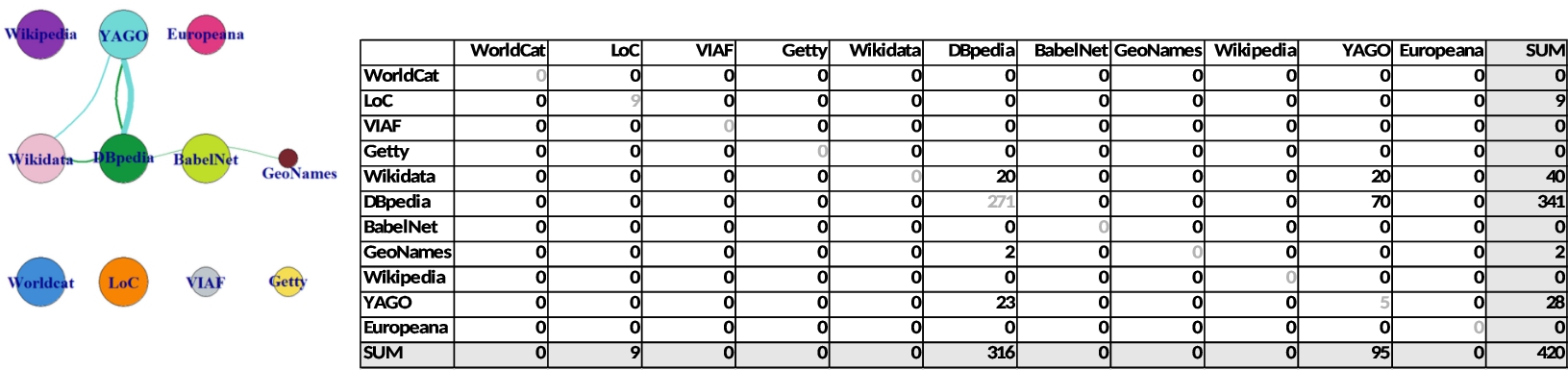

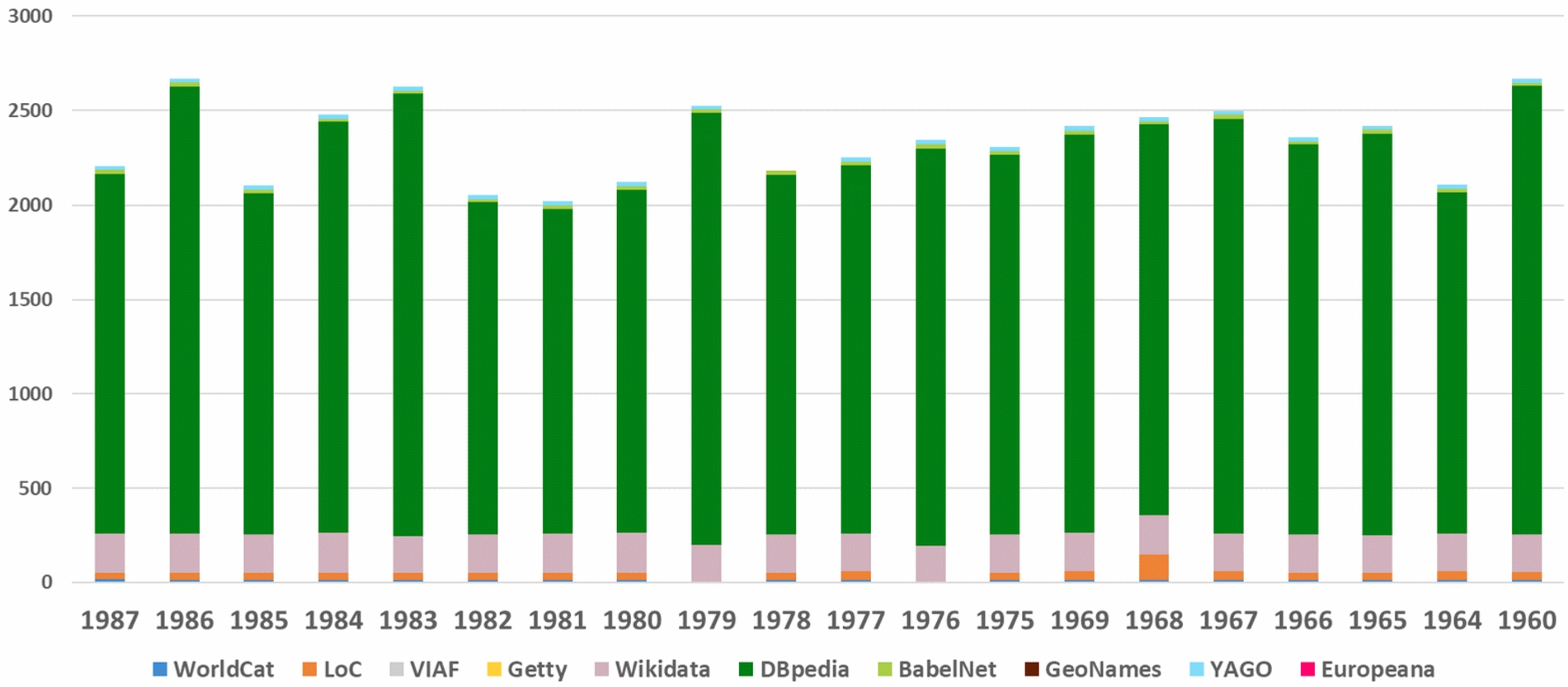

4.4.Dates traversal map

It is striking that the volume of links is very low (Fig. 6). Out of 881 outgoing links, 863 links are consumed within the 11 data sources, implying a high level of closure in the network. In addition, only three sources are referenced: DBpedia, Wikidata, and the Library of Congress. Although YAGO provides many links to DBpedia and Wikidata via owl:sameAs, it does not receive any incoming links. Since bilateral links do not exist, the movement in the network is highly restricted. There are only three possible paths. Consequently, the fluctuation of linking patterns is also minor (Table 5).

Fig. 6.

The overall traversal map for date entities.

The economy of the creation of date entities may show serious issues. 1978, 1979, and 1976 do not seem to exist in YAGO, the Library of Congress, and WorldCat, while other consecutive years in the 1970’s are available (see Appendix A). Such inconsistency would become problematic, when queries are constructed to look for answers to research questions on years and periods. In semantic queries, erroneous links and data omissions require careful presentation to LOD users in the future, in order to avoid misinterpretation and misjudgment.

One reason for this phenomenon is the lack of recognition and/or needs for numeric date instant entities, in comparison with other date representations, including textual dates (e.g. “End of the 17th century”), numeric durations (e.g. “1880–1898”), and periods and eras (e.g. “Bronze Age” and “Roman Republic”). For example, a quick search indicates the entity for “Neolithic” exists in all our data sources except GeoNames, VIAF, and Europeana.

In cultural heritage, numeric dates are often stored in a database as string/literal data type, when encoded in XML or RDF. They can be typed as date in the XML Schema (e.g. xsd:date). Thus, they are not designed for NEL, although it would have many advantages, especially for data linking and integration. What is clear is that users have currently a very limited possibility to execute NEL for numeric dates. To fill this gap, we have recently started a project to create LOD for the numeric date entities [38].

Table 5

The amount of outgoing links that the 11 data sources hold in each date entity

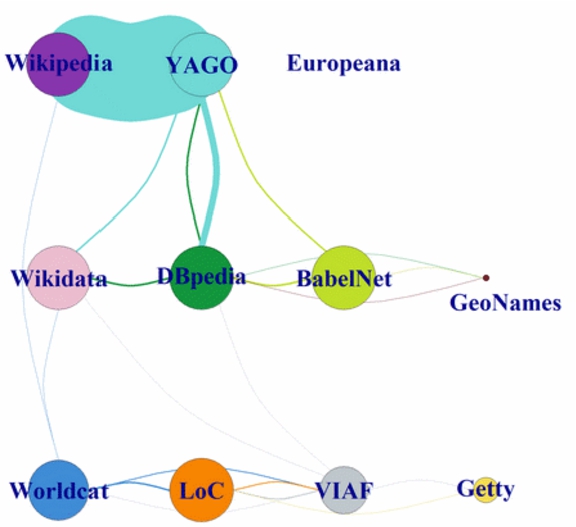

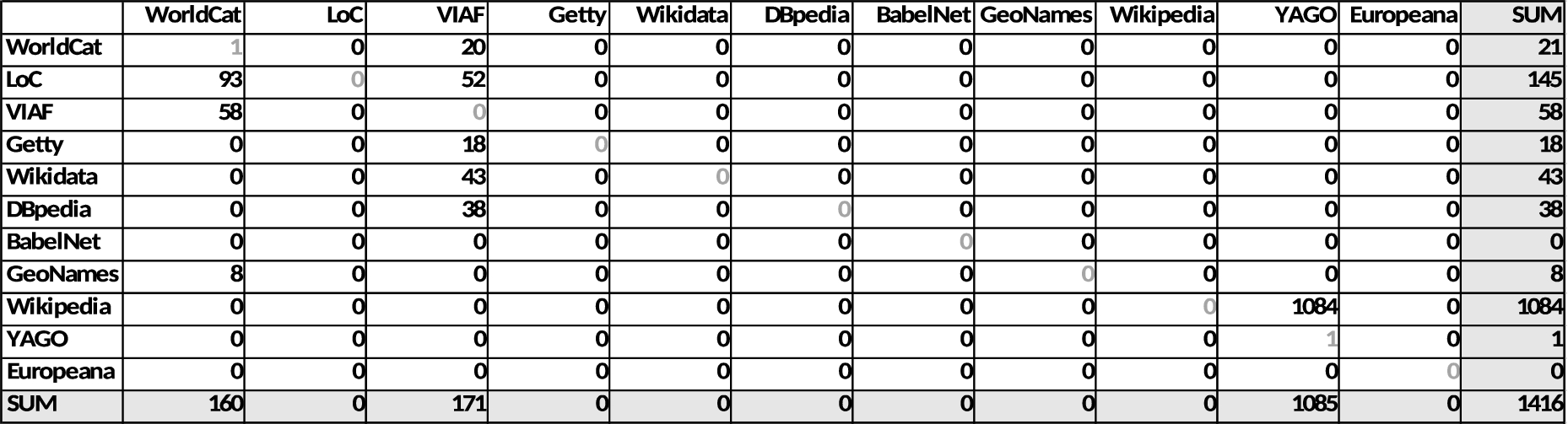

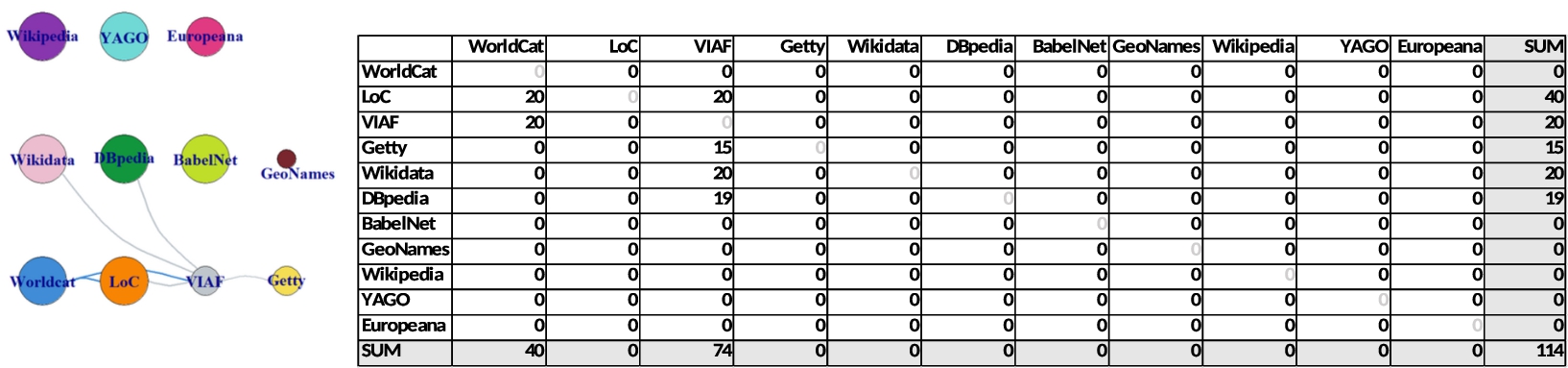

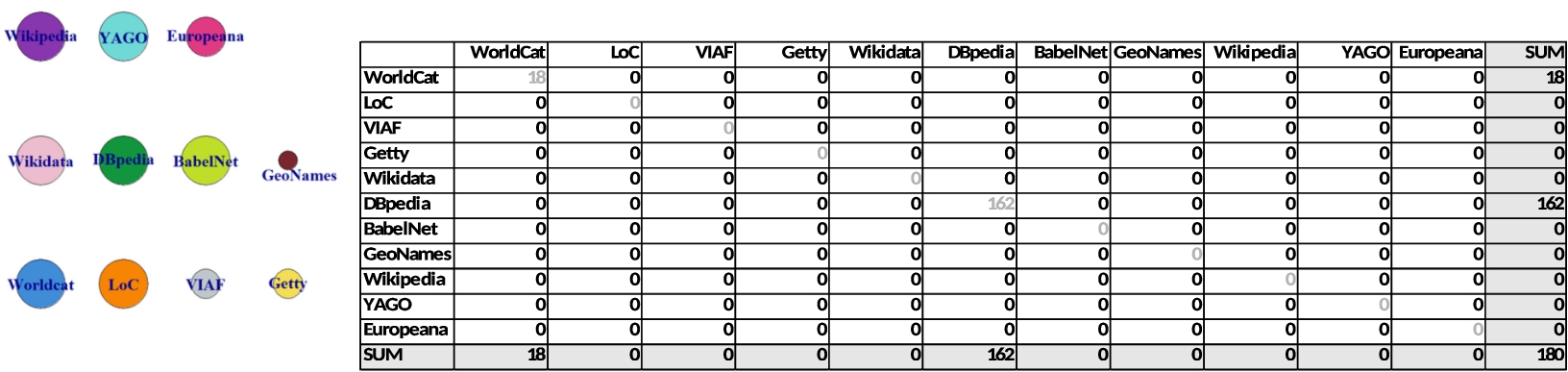

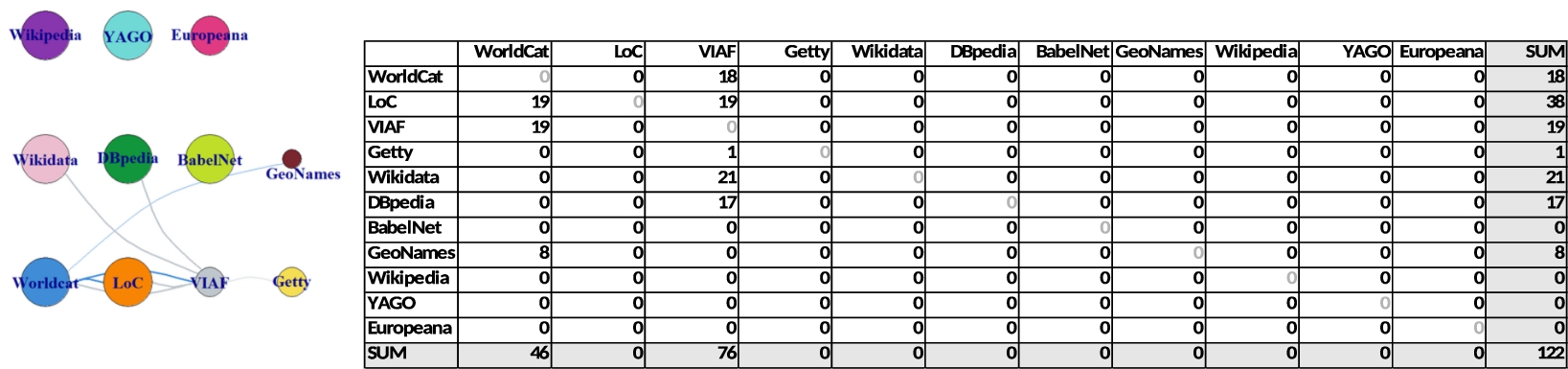

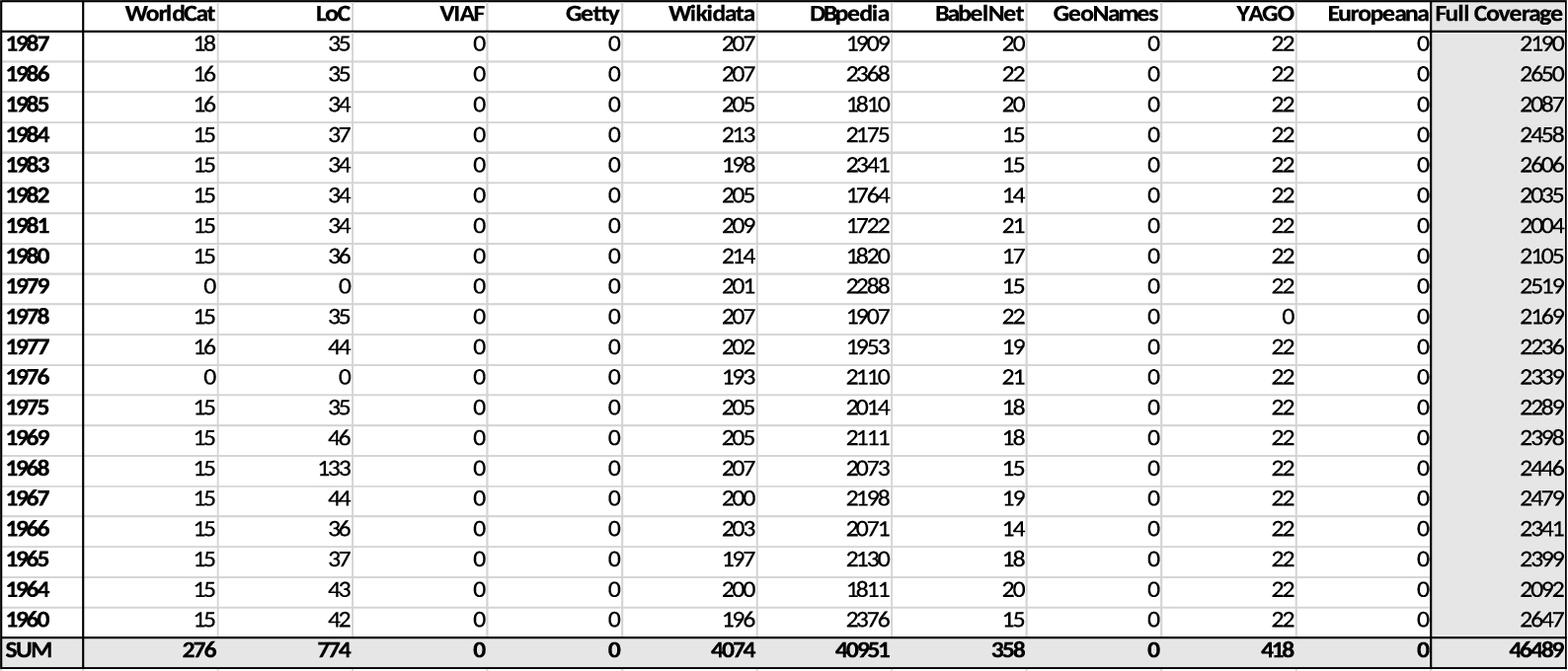

4.5.Places traversal map

Traversability for places is better than in other categories. YAGO dominates the scene for outgoing links (Fig. 7). Interestingly VIAF comes third despite its focus on agent entities. The Library of Congress, Getty TGN, and GeoNames contain an almost consistent number of links, each typically pointing to DBpedia. Users need to be careful regarding Europeana, because it does not provide the entities for the USA at all (USA, California, New York, and New York City). This type of inconsistency may be problematic for NEL implementers. They should scrutinise the occurrences of their place entities in their local datasets before selecting the right NEL targets. Strangely, no outgoing links are found for Australia, Canada, France, Germany, Italy, Japan, and Russia in Wikidata.

The presence of GeoNames, in particular, facilitates more fluid movements in the network. Although Ahlers [3] claims that it is the largest contributor to geospatial LOD and is intensely cross-linked with DBpedia, it is a disadvantage that it only connects to DBpedia. This makes the overall mobility less ideal. Apart from a link to VIAF, Getty TGN only contains 20 self-links mostly in the form of rdfs:seeAlso for a HTML representation. RDF/XML for New York City (tgn:7007567) holds:

tgn:7007567 rdfs:seeAlso <http://www.getty.edu/ vow/TGNFullDisplay? find=&place=&nation=&subjectid=7007567> .

Therefore, it is a dead end in terms of network traversals, of which the users need to be aware during their traversing. Europeana is disappointing including only 17 outgoing links only to GeoNames.

If loops are included, DBpedia holds 86% of all outgoing links. This is caused by a vast number of inverse links. For example, in case of Australia, 255 out of 266 outgoing links in DBpedia are those inverse rdfs:seeAlso links to DBpedia itself. It is possible to find both important and less important links:

dbr: Health_care_in_Australia rdfs:seeAlso dbr:Australia .

On one hand, the DBpedia loops may be confusing, especially due to the use of ambiguous rdfs:seeAlso links and the flexibility of information provided. On the other hand, they allow users to find unexpected related information that other LOD sources do not provide, leading to the serendipity that LOD is good at.

Fig. 7.

The overall traversal map for place entities.

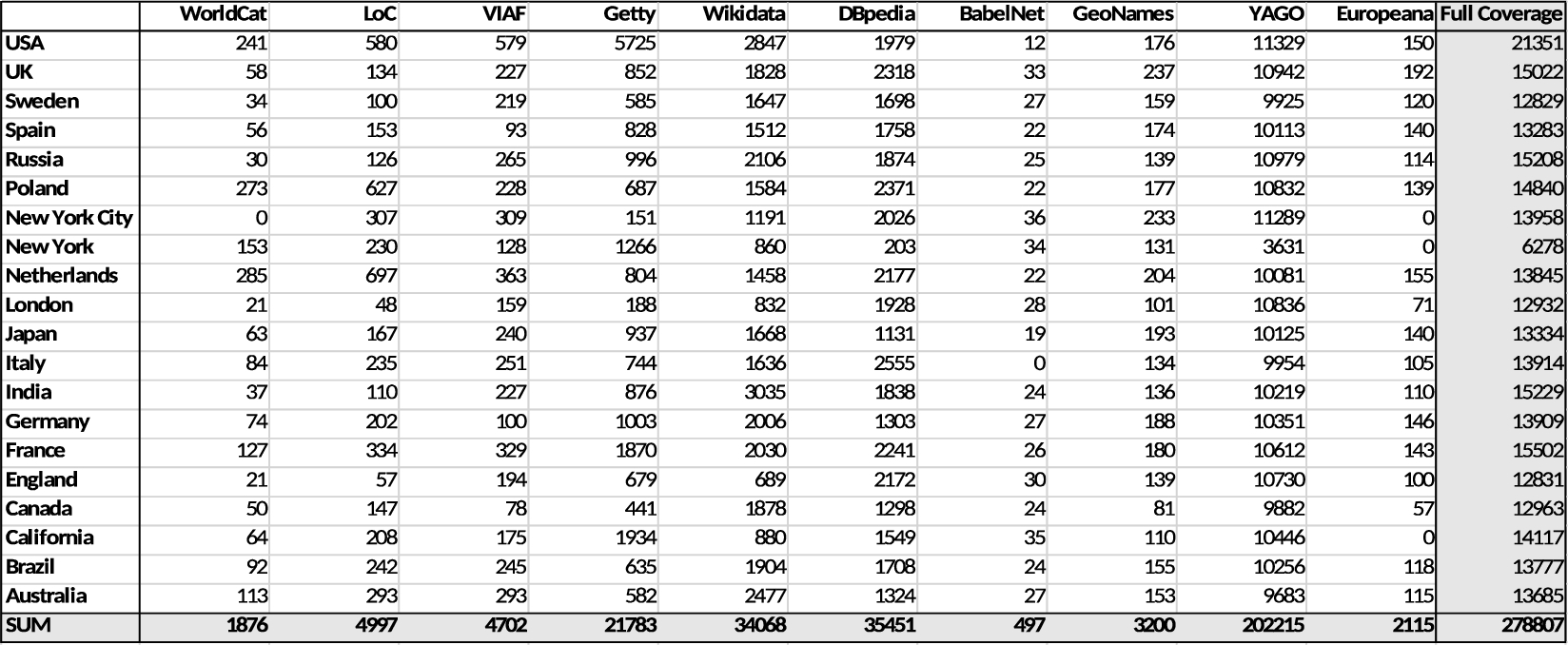

In Table 6, the lowest entities are surprisingly: the Netherlands, United Kingdom, and United States. This is chiefly attributed to fewer numbers of DBpedia links. However, the reason for this is unclear. On the contrary, the top entities receive a large quantity of links, which include Germany, France, and Poland.

Table 6

The amount of outgoing links that the 11 data sources hold in each place entity

The outgoing links are the lowest for United Kingdom, followed by the Netherlands, and United States. In contrast, Poland, Germany and France are the top three. The cause is obvious: the numbers are affected by the uneven pattern of links in DBpedia. The amount of links in other sources are instead more or less evenly spread across different entities. It would be intriguing to investigate the reasons by inspecting the corresponding entities in Wikipedia articles and the linking mechanism behind the DBpedia transformation. It would reveal pros and cons of a crowdsourcing approach to LOD, as opposed to authority approach such as the Library of Congress, VIAF, and Getty from libraries and museums.

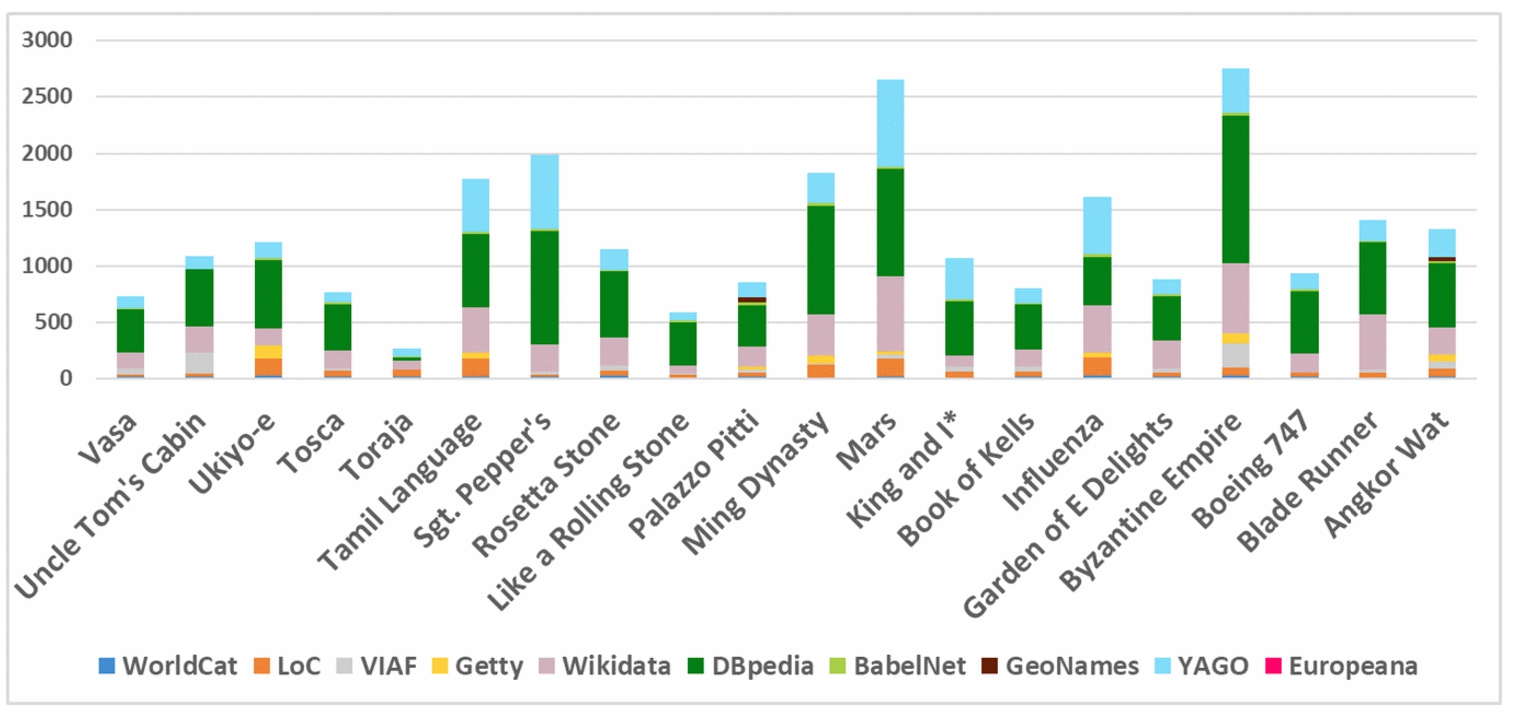

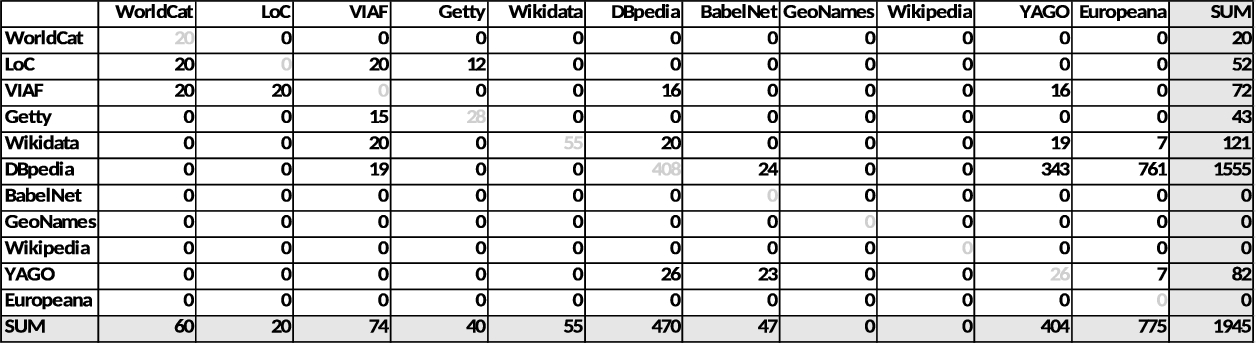

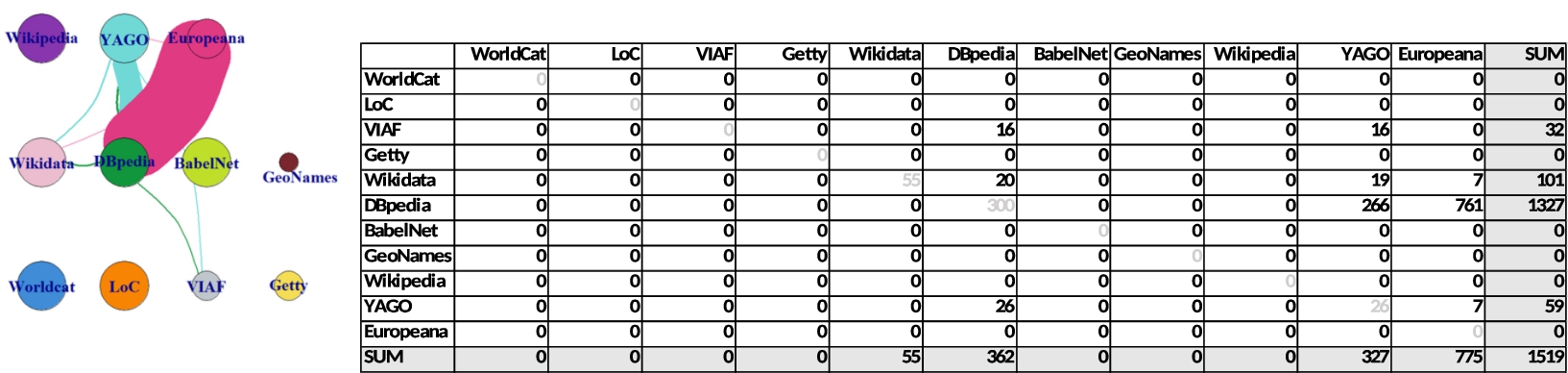

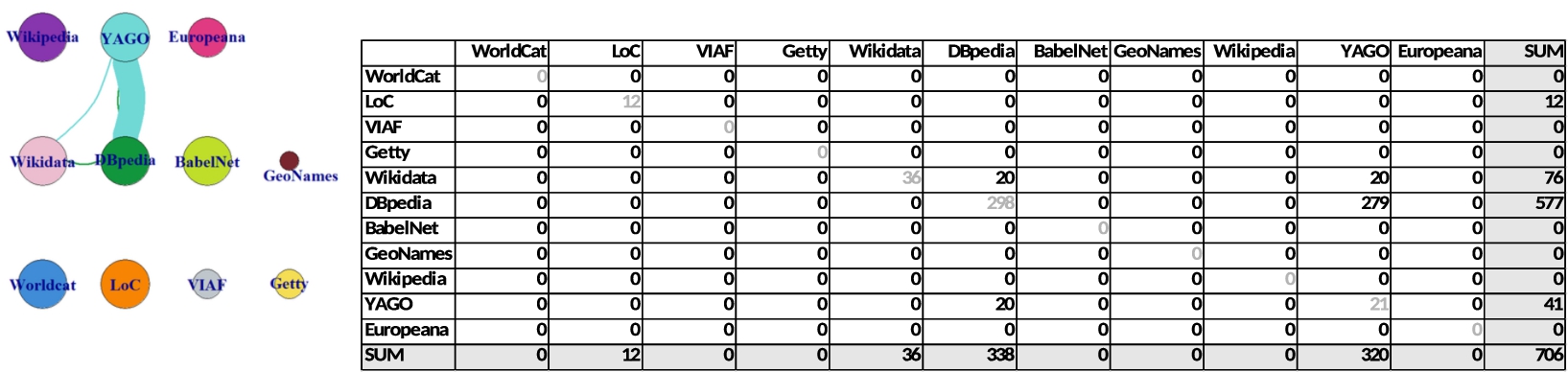

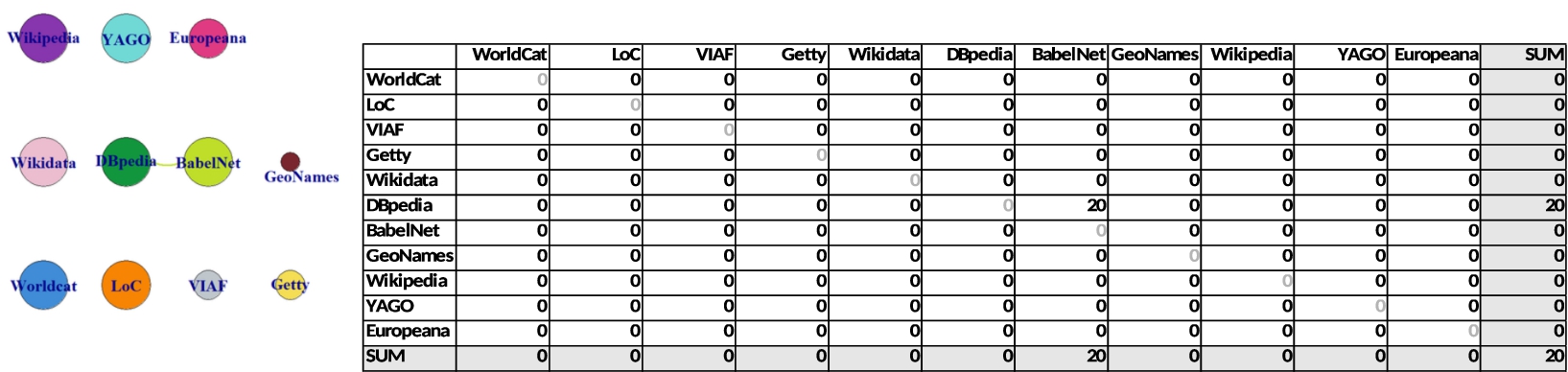

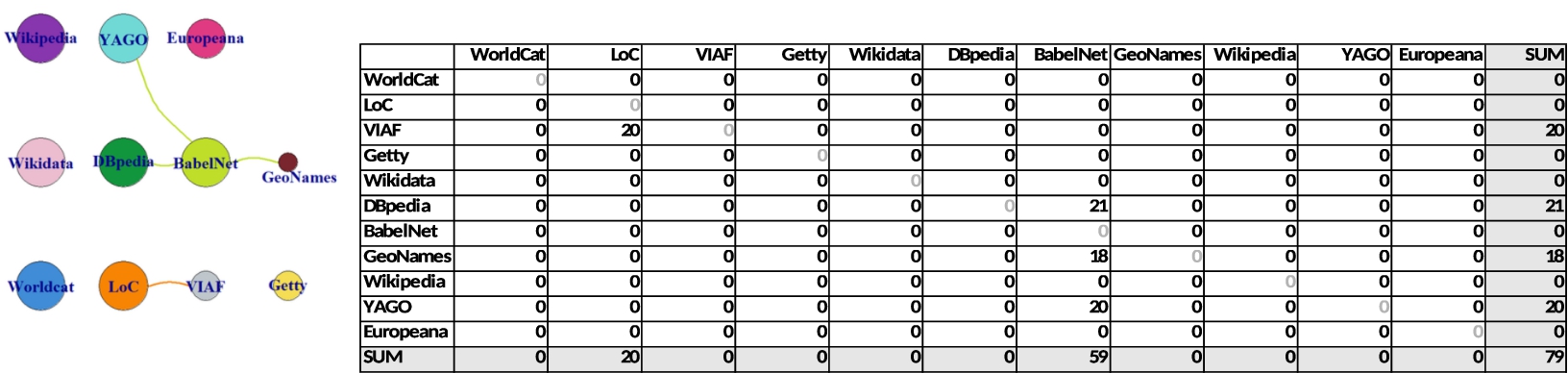

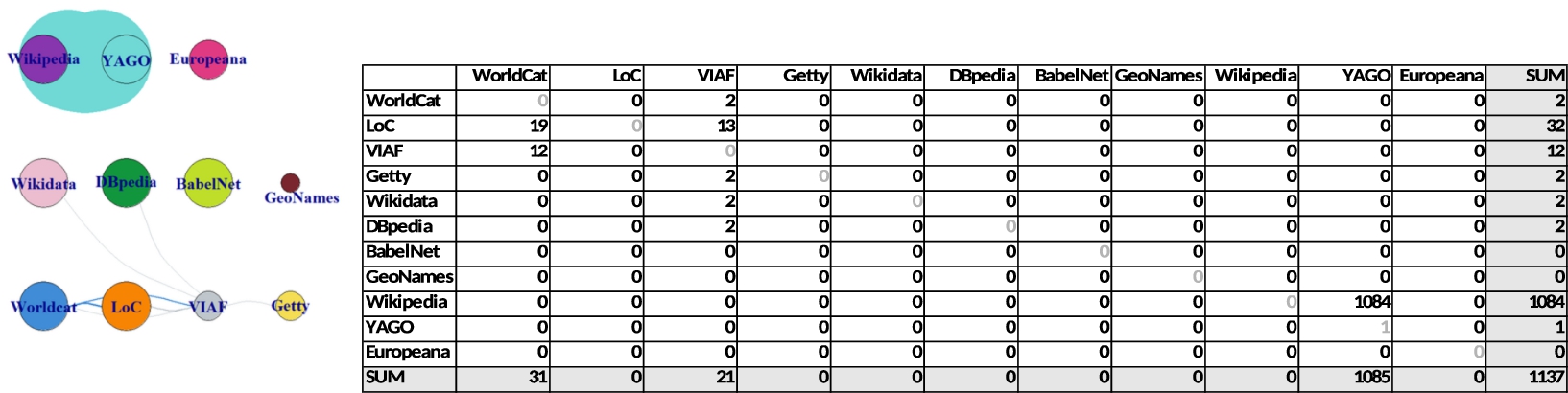

4.6.Objects and concepts traversal map

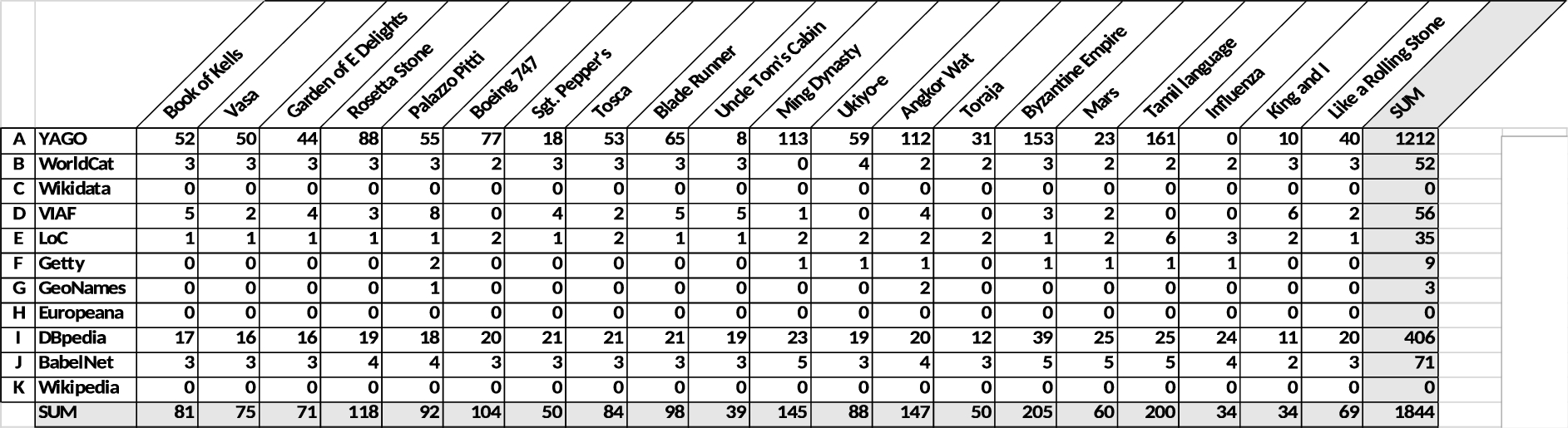

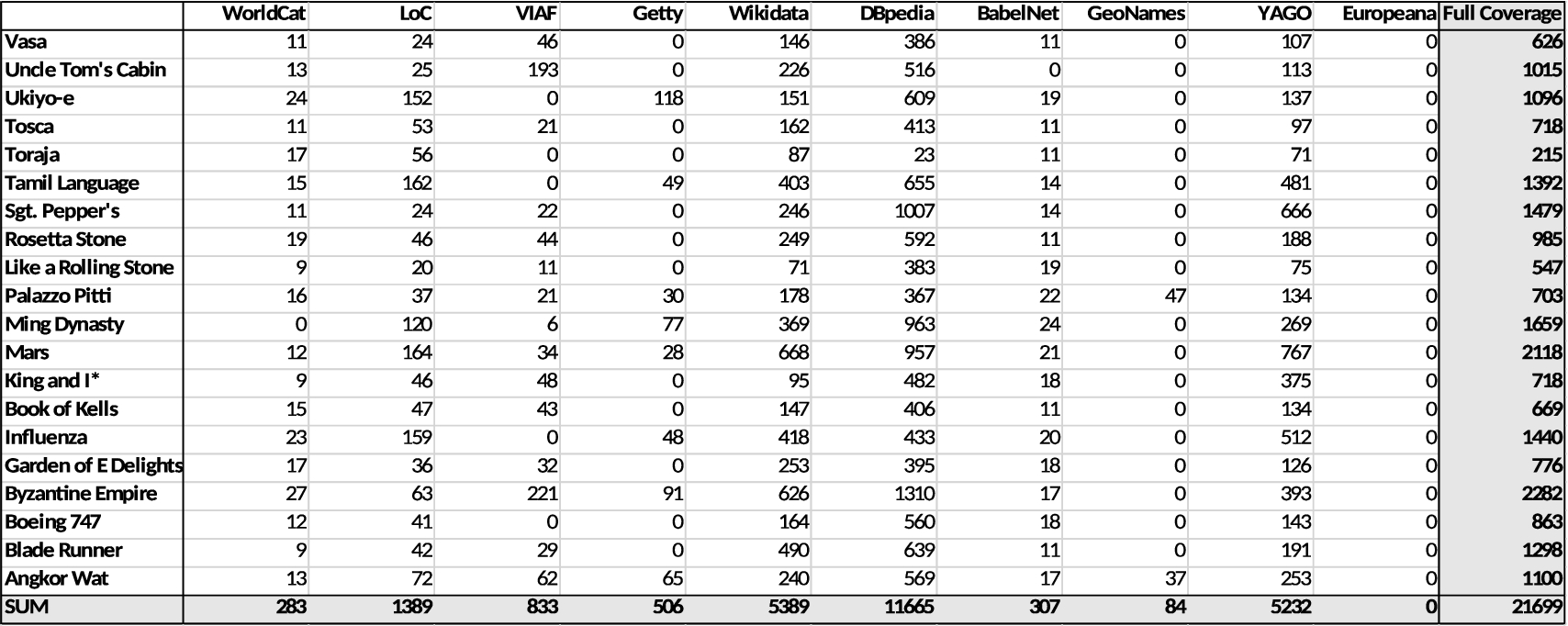

Objects and concepts are the subject matter in which cultural heritage researchers would be most interested. To a large degree, they are the target entities of contextualisation which is substantiated through data integration and inferences, thus, the contextualised entities are out of our scope. Rather we analyse them as the entities supporting contextualisation (Fig. 8). 1844 outgoing links are recorded of which 91% are bounded for the 11 data sources. Network closure also persists in this category. 81.3% of all incoming links concentrate on Wikipedia (1085), with DBpedia (100) and Wikidata (43) lagging far behind. The same can be said for outgoing links: YAGO (1212) and the rest. This happens, because YAGO provides a considerable number of links to Wikipedia.

Fig. 8.

The overall traversal map for objects and concepts entities.

Although Europeana produces LOD out of digital cultural heritage objects, its entity API is merely an experimental reference point, thus, no contribution is observed in our traversal scenarios. Interestingly, VIAF plays an authoritative role for this category. It serves a small number of links to five sources. Although the number of outgoing links from BabelNet is not high, it performs better in this category.

During the process of identifying and collecting the entities, some data quality issues are recognised. The significant concepts of cultural objects in FRBR, namely Work, Manifestation, Expression, and Item, are not easily conceptualised and encoded in the LOD observed. For example, taking a book as an example, we consider a single physical copy of a book as Item. Then, all published copies of the book which share the same ISBN are defined as Expression. Manifestation is considered as a book in a specific language by a specific author, whereas Work is a higher level of abstraction to cover the idea or the fundamental creation of the book by an author. Therefore, for instance, VIAF holds records on The King and I as Expression (motion picture) and Work (the original artwork). However, partly due to the technical mechanism of VIAF, Work may not be easily created. Similarly, Wikipedia has a disambiguation page for the King and I to distinguish the original musical from films and music products associated to the musical. This implies some difficulties in terms of co-reference resolution during NEL, as well as graph traversing.

As this category is deliberately broad and vague in principle, it is not possible to see clear-cut results. For example, GeoNames has entities for Palazzo Pitti and Angkor Wat, which could be classified as places and object simultaneously. Nevertheless, it reminds us that the data modelling for cultural heritage entities is intentionally complex. There could be entities that have multi-types. Depending on the perspective, the data modelers and users would need to find a common view on both practical use and theoretical truth and/or fuzziness of datasets. For instance, Palazzo Pitti could be a geographical place, as well as a building structure, concept, or organisation. However, complicated roles may introduce unnecessary complexity for real usage, confusing end users.

Another interesting finding is that Mars appears in TGN of the Getty vocabulary. It is normally considered that the vocabulary contains place names on earth, as one expects from GeoNames. There could be some surprise for LOD users in terms of how data is conceptualised and modelled, and from where data is obtained, especially when automatic data collection and integration are implemented in the future.

Regarding the individual entities (Table 7), Byzantine Empire and Tamil language in YAGO display a distinct pattern. The cause of this pattern seems to be clear; it includes links to language orientated resources such as language codes, maybe suggesting an important role of language resources in the LOD scenario. For other entities in YAGO it is hard to find exact causes and correlations between the entities with more links (Rosetta Stone, Ming Dynasty, Angkor Wat) and the ones with fewer links (Uncle Tom’s Cabin, Influenza, King and I). The results from Getty imply the exclusion of specific objects.

Table 7

The amount of outgoing links that the 11 data sources hold in each object and concept entity

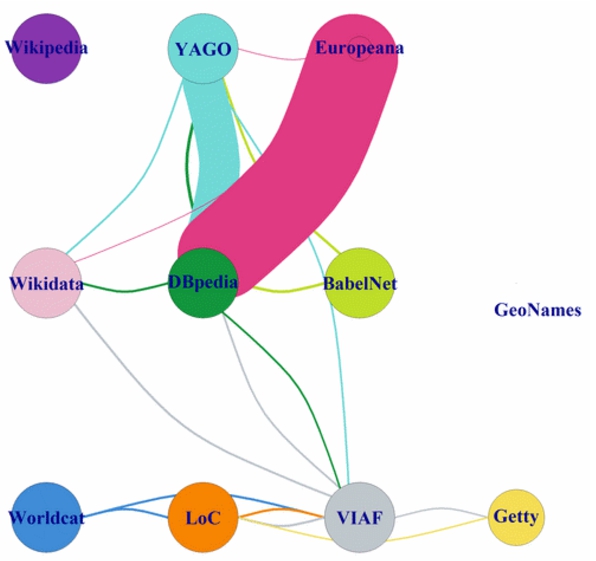

4.7.Network analysis

We deploy a network analysis using R to supplement the so far relatively subjective impressions and interpretations of the traversal maps (Table 8). Although the work of Idrissou et al. [26] is highly relevant here, unfortunately we are unable to use their metrics, because they are based on undirected weighted graph with link strength (confidence scores). As seen in the traversal maps, reciprocity is generally low. The unavailability of bilateral links are obvious for dates and events. Mean distance is short, mostly under 2.0. Diameter is the length of the longest geodesic. We have rather short diameters, implying connections are limited within a small circle. Edge density is the ratio of the number of edges and the number of possible edges. Here we observe low density.

Table 8

Network analysis measurements by category

| Measurement | Overall | Agents | Events | Dates | Places | Objects & Concepts |

| Reciprocity | 0.345 | 0.316 | 0.154 | 0.000 | 0.381 | 0.381 |

| Transitivity | 0.505 | 0.600 | 0.692 | 0.600 | 0.420 | 0.447 |

| Mean Distance | 1.919 | 1.791 | 1.235 | 1.167 | 1.826 | 1.878 |

| Diameter | 4 | 4 | 2 | 2 | 4 | 4 |

| Edge Density | 0.264 | 0.173 | 0.118 | 0.045 | 0.191 | 0.191 |

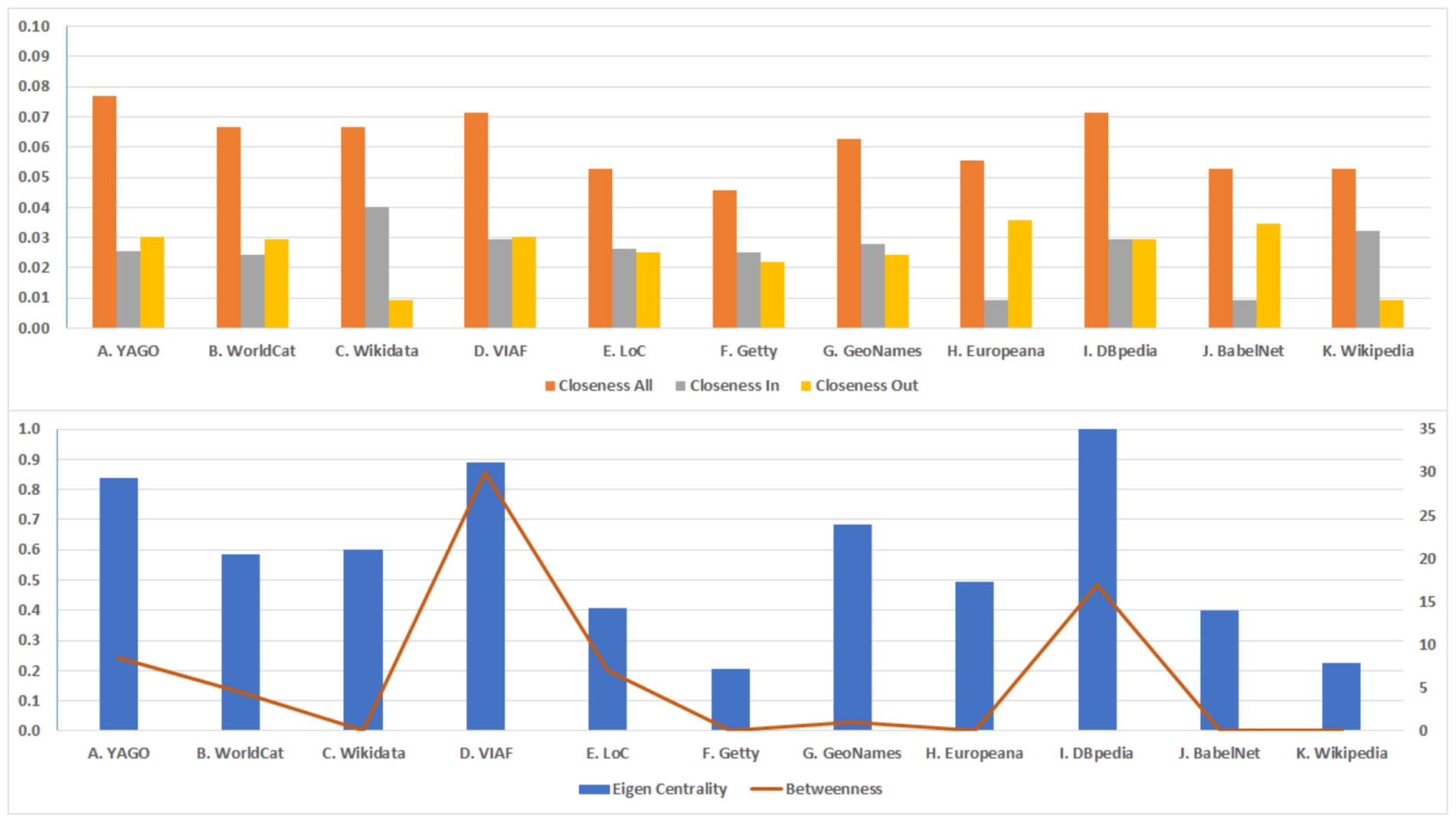

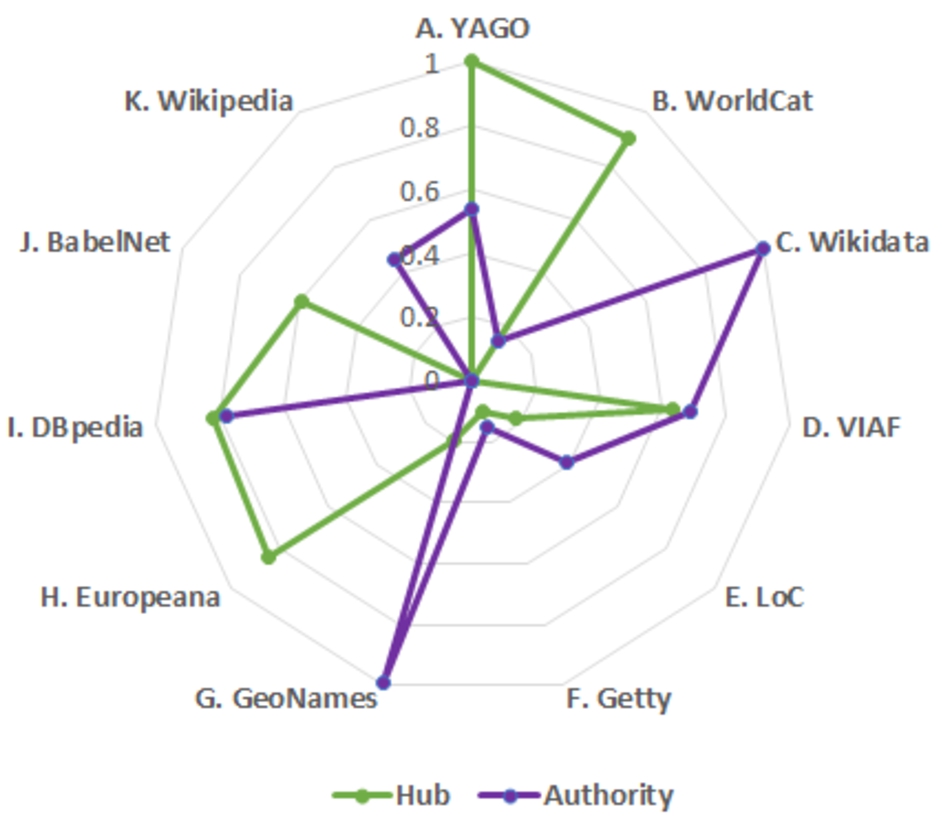

In addition, centrality is calculated, using three methods: Closeness (in and out), Eigen Vector, and Betweenness (Fig. 9). The Closeness statistically suggests the LOD hubs of outgoing and incoming links. The overall Closeness is similar across 11 sources. However, the contrast between Wikidata and Wikipedia as an incoming source and BabelNet and Europeana as an outgoing source can be observed. It is rather unexpected that there are no big differences between the sources for the centrality by Eigen Vector. Thus, the dominance of DBpedia (and to a less extent YAGO) is not clearly visible in the chart. VIAF and DBpedia seem to sit in-between position, mediating the linking flows. Moreover, a radar chart (Fig. 10) shows the indicator by R for the roles of vertex. The vertex is called a “hub” if it functions as a node to hold many outgoing edges, while it is called “authority” if it serves as a node to attract many incoming edges. Whereas YAGO, WorldCat, and Europeana are hubs, Wikidata and GeoNames are authorities. DBpedia has both characteristics, and is, therefore, a strong influencer for the analysed LOD sources.

Fig. 9.

Closeness (above) and centrality (below, left Y axe) and betweenness (below, right Y axe) for 11 data sources.

Fig. 10.

Indicator by R if a data source is authority or hub.

Generally speaking, the overall situation shows a mosaic of segmentation even in a small LOD cloud. It is far from a full mesh network, if not data silos, which LOD is supposed to resolve. Our result simultaneously indicates a couple of tightly connected LOD clusters at best. Thus, it is currently hard to implement automatic traversals among the datasets without studying non-standardised properties (i.e. ontologies) and traversal maps.

4.8.Connectivity and link types in detail

In order to better understand the overall connectivity of LOD datasets, we additionally generated more segmentation and detailed statistics.

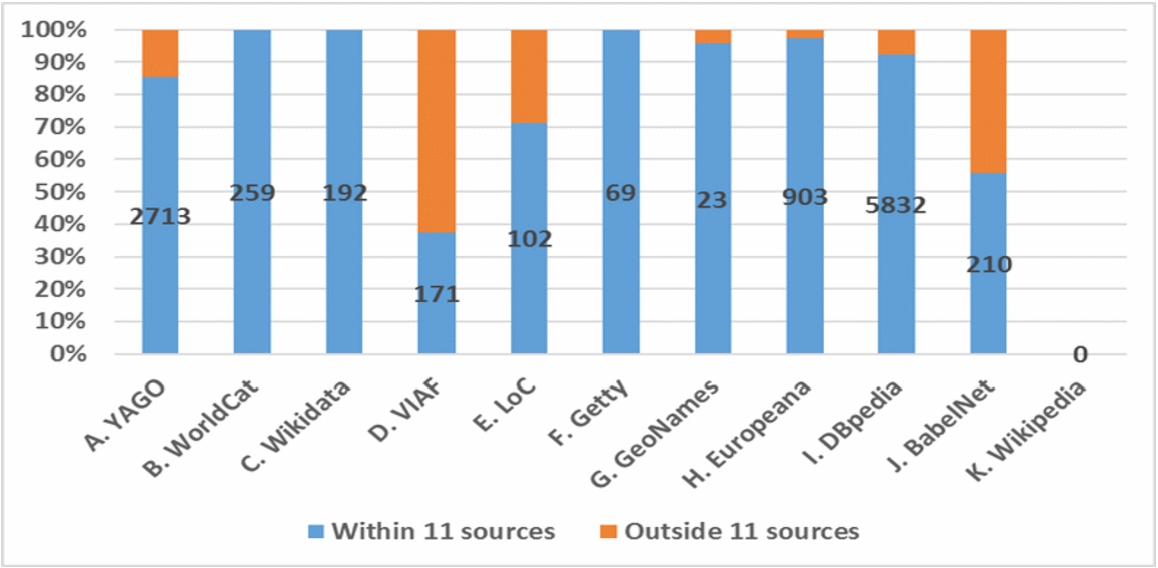

Figure 11 illustrates how close the 11 data sources are connected to each other through four standardised properly links. It displays the ratio of the hyperlinks bounding for the domains of the 11 datasets. Thus, it should represent the openness or closure of this small network. A high level of exclusivity for our data sources is observed. On average, 87.8% of links are within the 11 dataset boundary. Except Wikipedia, VIAF remains the lowest source in terms of links to the other datasets, but still holds over 37.3%. The statistics clearly indicate the closed and close connections of the 11 data sources in terms of standardised traversability.

When combing with analysis in the previous sections, this closure and the homogeneity and centrality of the 11 datasets are a worrying sign in the sense that the users of 11 datasets are not able to identify and explore new and unknown datasets beyond those giants of LOD, hampering serendipity for users’ research. This phenomenon would also decrease the diversity of the LOD cloud. Our analysis indicates that the identical entities in local cultural heritage datasets cannot be effectively connected to each other through NEL via the 11 global LOD sources. Data integration and/or contextualisation would only be possible if the users know the connectivity of datasets in advance and conduct a federated SPARQL query at known endpoints.

Fig. 11.

The ratio of the four standardised property links going within and outside 11 data sources.

In fact, Ding et al. [15] note that the typical size of sameAs networks either remains a small constant or increases slowly, and that single central resources are connected to a number of peripheral resources. This condensed view of LOD is adequately depicted in their cluster analysis and visualisation, where a few LOD data sources investigated in this paper are clearly seen as in-degree or out-degree hub nodes such as DBpedia, GeoNames, Wikipedia, and WorldCat. Correndo et al. [9] also report a power-based LOD network. Moreover, recent research discovers two high-centrality nodes (DBpedia and Freebase) and domain specific naming authorities/hubs such as GeoNames among others [4]. The added value of our study is to reveal the extent of this phenomenon for four different properties at an instance level.

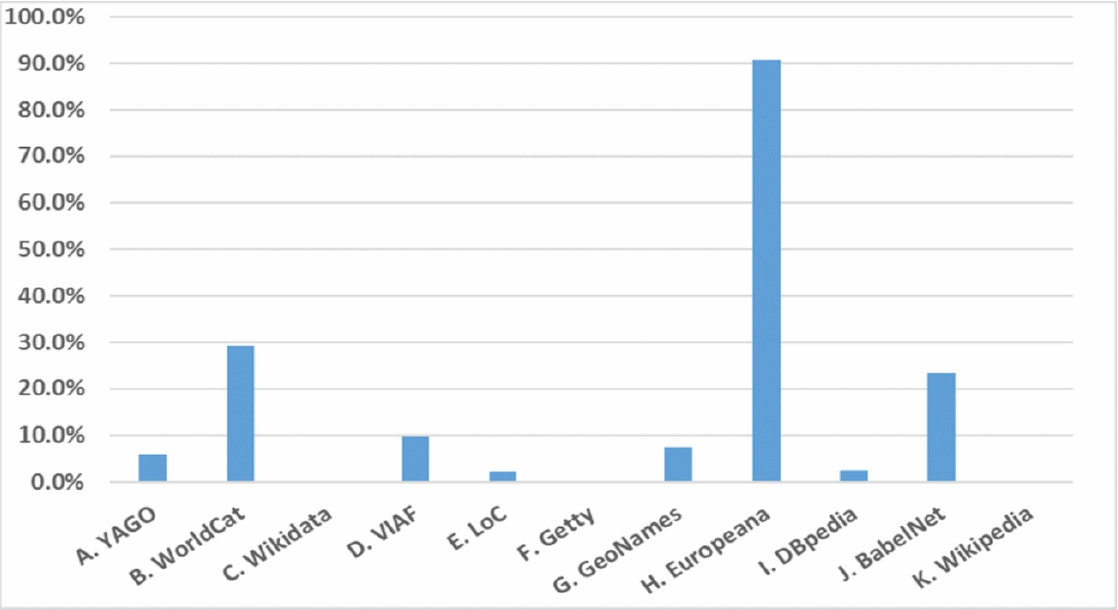

Now, let us take a close look at link types. Figure 12 presents the percentage of the four standard properties used within rdf:resource. In RDF/XML, rdf:resource is the property to indicate the URI of the object node in a graph.3434 In this sense, it should normally contain all the outgoing links. By dividing the ratio of the four properties, we can highlight the balance between them and other properties including proprietary ones.

The overall percentage is, unsurprisingly, low because the four properties are normally a small part of RDF content. Nevertheless, the range varies from 30% to close to 0%. An exception is Europeana. 90.6% of links use them, demonstrating a high conformity to the standardised RDF properties and highly limited use of proprietary properties. The result suggests relatively high importance of the four properties in the WorldCat and BabelNet datasets. In contrast, Getty vocabularies and Wikidata use other properties almost exclusively. Indeed, a query on WDProp3535 lists 8732 unique properties in Wikidata as of 26 January 2021. A manual examination of Wikidata entities further justifies the outcome: the properties are organised by its proprietary wdt: with P prefix, while wdtn: is the entities with Q prefix.3636

For example, the entity of France contains 9500 rdf:resource, while wdt: is used 294 times with rdf:resource. 7292 rdf:type are included in combination with rdf:resource. The W3C properties of our concern are not available at all. owl:sameAs only appears occasionally to provide inverse relations for obsolete (mostly duplicate) properties that offer redirects. Erxleben et al. [18] explain that Wikidata is keen to faithfully represent the original data using the language of RDF and linked data properly. In particular, they claim that owl:sameAs would often not be justified to relate external URIs to Wikidata. This leads to their hesitation to use this property as well as to include links to many external data.

Fig. 12.

The percentage of four srandardised properties used for the purpose of rdf:resource linking in 11 data sources.

On the one hand, proprietary properties in Wikidata enable the users to refine the semantics of outbound links. It is useful in some cases where one needs to identify a particular link among tens of owl:sameAs links. On the other hand, they make it more difficult to automate graph traversals, when used with other LOD. In addition, there is a question of manageability and usability. As the outgoing link properties can be suggested by the users, the number of the properties could grow sharply. Then, the complication of selecting them will be amplified.

Another issue is that the Wikidata entities do not use human “guessable” URIs, even if they are not absolutely opaque URIs such as hash. For instance, the syntax of the entity URI for Cold War is https://www.wikidata.org/entity/Q8683. They are agnostic about their semantics and are language independent, which prevents human users from guessing the meaning of properties and/or hacking the URIs3737 without examining the ontology behind. We should recognise that self-describing URIs are rated high for the quality metrics of Candela et al. [8].

When we manually examined France in Getty, we found that there were 1783 rdf:resource. 1349 SKOS properties are used among which 10 skos:prefLabel, 18 skos:altLabel, and 1246 skos:narrower are present. Whereas 251 Dublin Core Metadata Terms (dct:)3838 and 202 Getty Ontology (gvp:)3939 are in use, 60 PROV (prov:)4040 and 56 SKOS-XL (skosxl:)4141 are also found. Although not all properties use rdf:resource, the figures provide us a clue about the relation between linking and property usage.

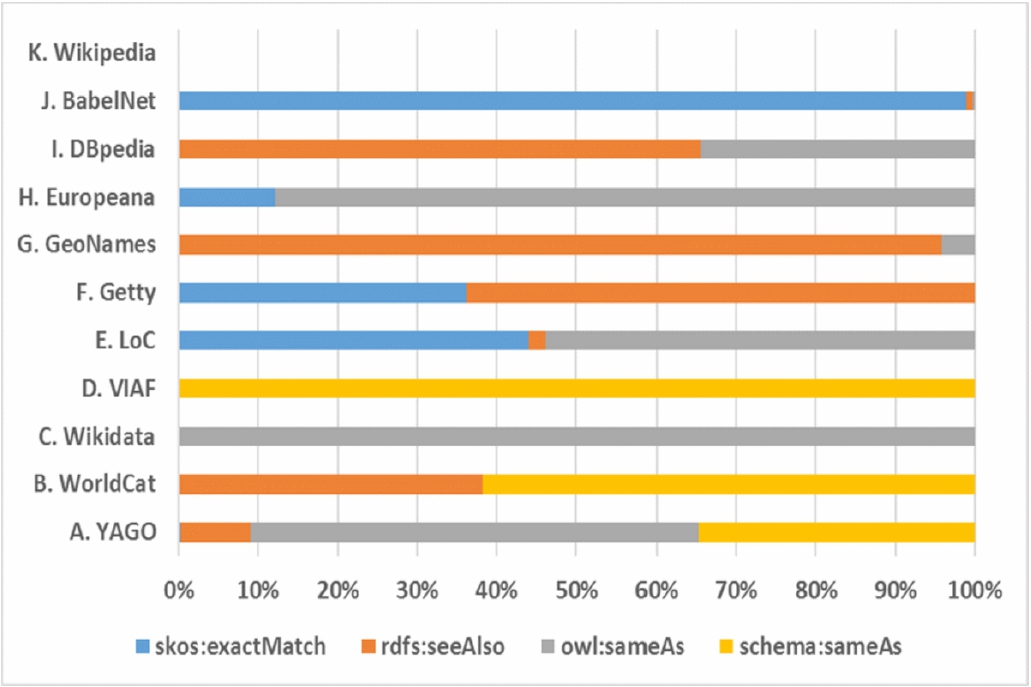

Figure 13 illustrates the ratio of each property among the four properties. Despite the wide spread of research concerning owl:sameAs, its use for outgoing links is less than the majority for all outgoing links (42.2%). While 38.4% use rdfs:seeAlso, schema:sameAs and skos:exactMatch are in the minority. As GeoNames provides the link to DBpedia with rdfs:seeAlso, the equivalent identity cannot be inferred. skos:exactMatch is present in BabelNet, Europeana, Getty vocabularies, and the Library of Congress. VIAF exclusively uses schema:sameAs, whilst more than half of WorldCat entities are described with it. YAGO also uses it for more than one third of its entities. However, its use is debatable, since the schema.org ontology is not a W3C recommendation.4242 Moreover, Beek et al. [4] point out that it is semantically different from owl:sameAs.

From Fig. 12 and 13, it becomes clear that some data providers set different strategies to design their ontologies in spite of the W3C recommendations. The results indicate that it is not feasible to traverse LOD and collect information, if the users specify only one type of property. As seen throughout Section 4, the need of traversing strategies is also verified from this perspective.

Fig. 13.

The ratio of each property among the four standardised properties used in 11 data sources.

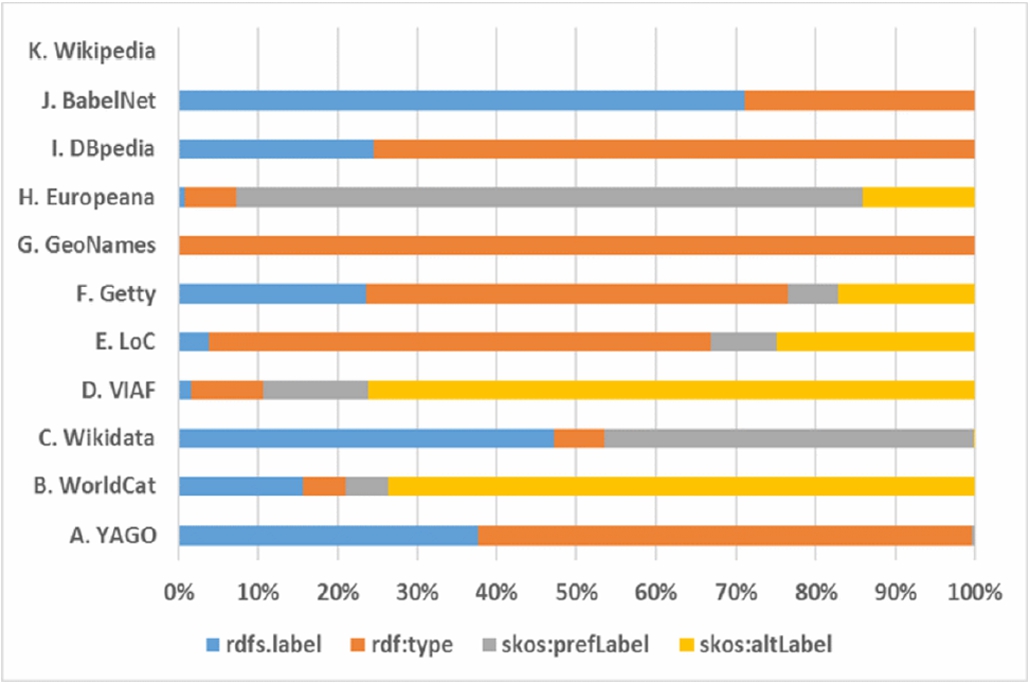

4.9.Literals

This section examines the quality of other data content to supplement the analysis of link quality. The content-related four W3C standard properties are analysed, namely, rdfs:label, rdf:type, skos:prefLabel, and skos:altLabel. Figure 14 shows the ratio of each property among the four properties used in the 11 data sources.

Fig. 14.

The ratio of each content-related property among the four content-related properties used in 11 data sources.

Here one can also observe the characteristics of data sources. The contrast between rdfs:label and SKOS vocabularies is one focal point. Interestingly BabelNet prefers to use the former this time, in place of the latter. It is noted that GeoNames only uses rdf:type, primarily because it employs proprietary properties for the name of places (gn:):

<https://sws.geonames.org/6251999/> gn:name "Canada";

gn:alternateName "Canada"@nn, "Kanuadu"@olo, "Ca-na-đa"@vi , "Kanada"@nds, "Kanada"@mt,  @lo;

@lo;

The library sector (VIAF, the Library of Congress, and WorldCat) uses skos:altLabel extensively. Generally speaking, it is evident that the use of properties is diverse and not standardised. Therefore, automatic retrieval of basic information such as entity labels would require good understanding of each data source before data processing begins.

We further investigate the core constructs of RDF/XML. The use of rdf:resource and rdf:about is analysed. The average amount of rdf:resource, rdf:about, and literals is shown in Table 9. In general, contrast is clearly visible between the data providers with a high volume of content (Wikidata, YAGO, DBpedia) and the rest. Somehow Getty has competitive numbers. We are also curious about the low average of 1.1 for rdf:about in YAGO. When we had a close look at the dataset, we discovered that it used a single instance of rdf:about for the entity itself, for example, as follows:

<rdf:Description rdf:about="http://dbpedia.org/resource/World_War_II"> </rdf:Description>

Similarly, each entity in GeoNames contains it exactly twice (2.0 for rdf:about):

<gn:Feature rdf:about="http://sws.geonames.org/2077456/"></gn:Feature> <foaf:Document rdf:about="http://sws.geonames.org/2077456/about.rdf"></foaf:Document>

The second rdf:about preserves the technical metadata about the entity such as a Creative Commons license and creation date.

Moreover, we investigate the amount of literals. However, they have to be treated carefully, as they may include less relevant information about the entity. Despite the caveats, the figures do provide a rough idea of how much content is described in each LOD instance. Manual inspection indicates that the number of literals in some LOD is extremely high. This is not only due to an enormous amount of technical metadata, but also to repetitions (e.g. literals expressed in several schemas) and language variations in them. For example, there are in total over 4.5 million literals and, on average, more than 50 thousand for the 100 entities in Wikidata.

Table 9

The average number (per entity) of rdf:resource, rdf:about, and literals for each data source

| ID | A | B | C | D | E | F | G | H | I | J | K | Total |

| Source | YAGO | WorldCat | Wikidata | VIAF | Library of Congress | Getty | GeoNames | Europeana | DBpedia | BabelNet | Wikipedia | |

| rdf:resource | 530.6 | 9.3 | 4696.1 | 84.1 | 62.2 | 595.4 | 14.3 | 41.0 | 2546.5 | 16.3 | 0.0 | 8595.9 |

| rdf:about | 1.1 | 8.1 | 2164.8 | 27.5 | 93.5 | 73.6 | 2.0 | 1.3 | 2285.8 | 5.9 | 0.0 | 4663.7 |

| Literals | 105.2 | 43.7 | 50723.9 | 448.1 | 230.5 | 207.2 | 176.1 | 138.1 | 82.6 | 2.9 | 0.0 | 52158.3 |

Table 10

The number of unique data content per data source in each category (values in parentheses indicate coverage in percentage)

| ID | Data Source | Overall | Agents | Events | Dates | Places | Objects & Concepts |

| A | YAGO | 251293 (56.2) | 19201 (34.2) | 24227 (55.0) | 418 (0.9) | 202215 (72.5) | 5232 (24.2) |

| B | WorldCat | 3667 (0.8) | 886 (1.6) | 346 (0.8) | 276 (0.6) | 1876 (0.7) | 287 (1.3) |

| C | Wikidata | 69183 (15.5) | 18944 (33.8) | 6708 (15.2) | 4074 (8.8) | 34068 (12.2) | 5389 (24.9) |

| D | VIAF | 11207 (2.5) | 5695 (10.2) | 0 (0.0) | 0 (0.0) | 4702 (1.7) | 810 (3.7) |

| E | LoC | 11980 (2.7) | 2587 (4.6) | 2253 (5.1) | 774 (1.7) | 4997 (1.8) | 1369 (6.3) |

| F | Getty | 23894 (5.3) | 1605 (2.9) | 0 (0.0) | 0 (0.0) | 21783 (7.8) | 506 (2.3) |

| G | GeoNames | 3284 (0.7) | 0 (0.0) | 0 (0.0) | 0 (0.0) | 3200 (1.1) | 84 (0.4) |

| H | Europeana | 3746 (0.8) | 1375 (2.5) | 256 (0.6) | 0 (0.0) | 2115 (0.8) | 0 (0.0) |

| I | DBpedia | 128307 (28.7) | 20212 (36.0) | 20003 (45.4) | 40951 (88.1) | 35469 (12.7) | 11672 (53.9) |

| J | BabelNet | 1866 (0.4) | 359 (0.6) | 345 (0.8) | 358 (0.8) | 497 (0.2) | 307 (1.4) |

| K | Wikipedia | 0 (0.0) | 0 (0.0) | 0 (0.0) | 0 (0.0) | 0 (0.0) | 0 (0.0) |

| – | Full Coverage | 447065 (100.0) | 56068 (100.0) | 44044 (100.0) | 46489 (100.0) | 278807 (100.0) | 21657 (100.0) |

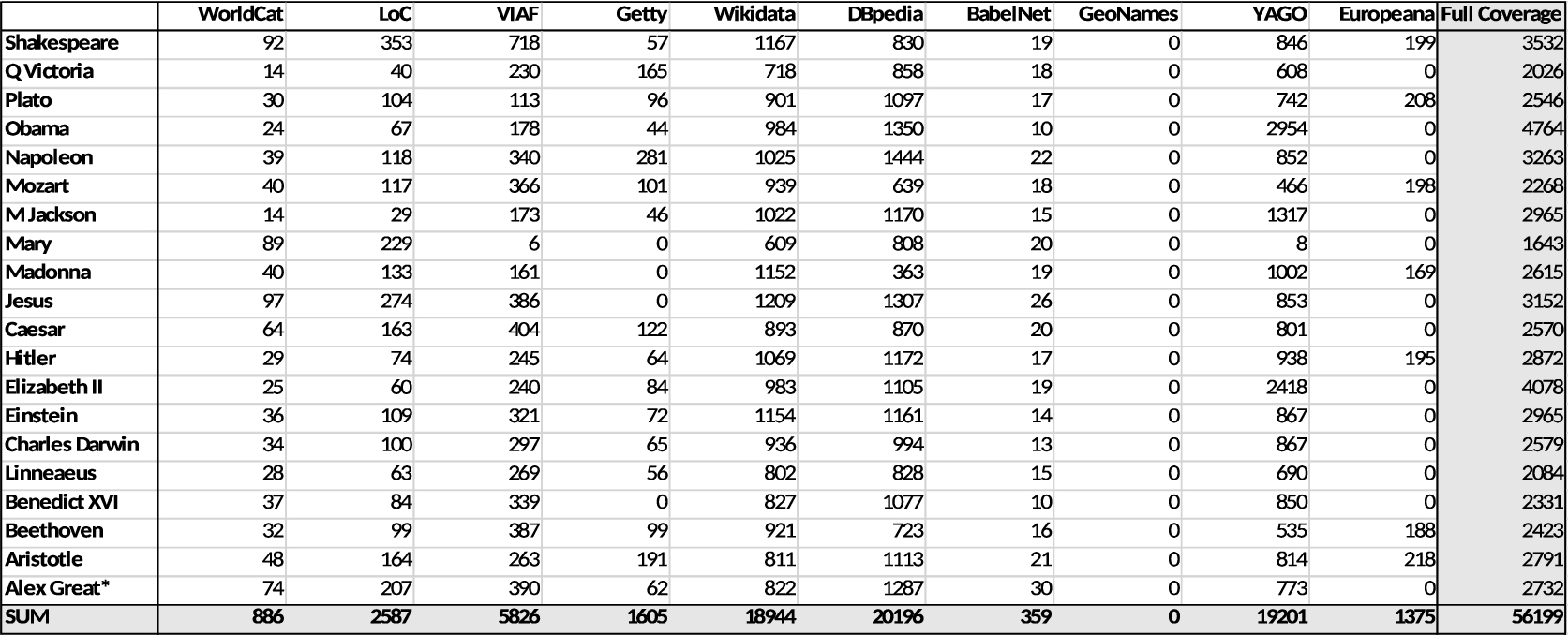

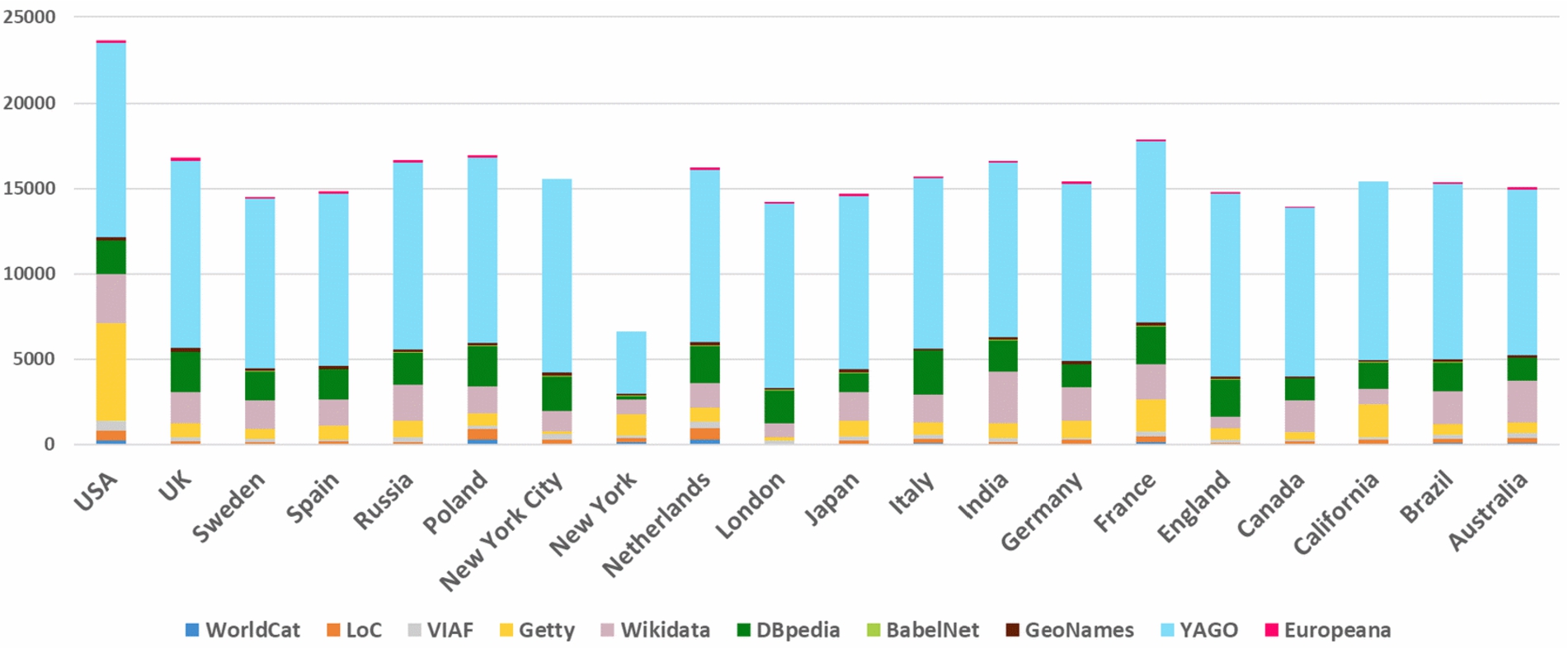

4.10.Content coverage

This section presents our attempt to further enhance the results of Section 4.9. Our Python scripts compare the content differences of the 100 instances across the 11 data sources (see Fig. C.1 in Appendix C). The amount of unique content of a single entity and the ratio are automatically calculated, and the aggregated view for the 11 data sources is shown in Table 10. In theory, they should represent the coverage and diversity of content (for a data source). The table is grouped by categories (i.e. all entities within are aggregated), because the instances tend to show similar patterns within the same category. “Full coverage” indicates the total amount of the unique content that 11 data sources hold as a whole (thus 100% coverage). It means that overlapping content is calculated once. The percentage of a data source indicates the ratio of the unique content against the full coverage.

In the overall column of Table 10, YAGO holds the largest amount of unique content (56.2%), which also implies that it is the data source with the most diverse content. It is nearly double the size of DBpedia. It may be also surprising that Wikidata contains just over a half of the DBpedia data. When we look at this from a cross-domain LOD perspective, the Library of Congress and WorldCat are considered as small-scale datasets, while the number of BabelNet content is even smaller. Obviously, data sources containing fewer entities provide less content.

Regarding the agents category, DBpedia exceeds YAGO and Wikidata. As expected VIAF is also prominent. However, the number is rather disappointing, compared to these three sources.

With regard to events, the reasons why the Library of Congress has relatively high number of contents is mostly due to bflc:subjectOf link. DBpedia provides a large number of seemingly Wikipedia derived content, ranging from links (related persons, places, events, and digital resources) to literal descriptions in different languages.

In the dates category, DBpedia has substantial advantage (88.1%). Other sources are unlikely to offer highly informative content. We also conducted manual inspection on our data sources. We discovered that the high volume of DBpedia in general was most likely due to a large number of links (derived from Wikipedia article dbo:wikiPageExternalLink (i.e., external links, further reading in Wikipedia) and dbo:wikiPageWikiLink (i.e., many useful links in Wikipedia). Wikidata is the second highest source (as it contains labels in many languages), but it is hard to understand the target resource with opaque entity names (wd:Qxxxx). The Library of Congress has useful links to their library resources related to the date (bflc:subjectOf). The Library of Congress and WorldCat use SKOS to connect to broader concepts of decade. It is noticeable that the library-based LOD sources (WorldCat, the Library of Congress, VIAF) have many overlapping content. BabelNet also uses skos:broader, but it seems the links are generated programmatically and it uses proprietary IDs (like Wikidata). Thus, it is hard for machine (and humans) to understand the meaning of the links. In addition, for some reason, the RDF representation of an entity has a significantly lower number of links compared to the HTML representation, therefore, some useful information may be lost.

YAGO shows strength in the places category, given that the ratios are more evenly distributed across all sources due to the availability of the entities in this popular category. Interestingly, Getty Vocabularies (TGN) performs relatively well, whereas GeoNames is not as good as we expected. New and diverse information may not be found in the latter.

As for objects and concepts category, the strength of DBpedia persists. It seems that it extracted a great deal of data from Wikipedia. Understandably, Wikipedia articles would be more exciting for human users than a collection of factual data in LOD.

In general, this analysis suggests: (a) the concentration of (diverse) content in DBpedia, YAGO, and Wikidata, and (b) data richness in specific proprietary properties. A critical question is how the 11 LOD producers facilitate users to find them among hundreds of properties, in order to access rich information, especially if they are unfamiliar with their ontologies. The hurdle could be higher for the data integration by federated queries in multiple LOD sources.

Table 11 illustrates the amount of data overlaps per category. While the one-source column indicates the number of non-overlapping content for the source (i.e., unique content), other columns indicate the number of overlapping content (i.e, two to ten sources hold identical string). Interestingly, the content covering all data sources does not exist at all. This implies that even the most standard English label cannot be found in every source. Over 75% of content is unique. However, overlaps in two sources are relatively high for agents, events, and objects and concepts. The numbers drop sharply for the overlap in more than two sources. However, very high coverage is also seen for agents, places, and objects and concepts. One reason for these phenomena would be the contrasting volume of data sources. As we have seen earlier, the disproportionately high volume of DBpedia, YAGO, and Wikidata makes the rest of the sources look insignificant. Therefore, although there are some highly overlapping content, the percentages remain very low.

Table 11