When linguistics meets web technologies. Recent advances in modelling linguistic linked data

Abstract

This article provides a comprehensive and up-to-date survey of models and vocabularies for creating linguistic linked data (LLD) focusing on the latest developments in the area and both building upon and complementing previous works covering similar territory. The article begins with an overview of some recent trends which have had a significant impact on linked data models and vocabularies. Next, we give a general overview of existing vocabularies and models for different categories of LLD resource. After which we look at some of the latest developments in community standards and initiatives including descriptions of recent work on the OntoLex-Lemon model, a survey of recent initiatives in linguistic annotation and LLD, and a discussion of the LLD metadata vocabularies META-SHARE and lime. In the next part of the paper, we focus on the influence of projects on LLD models and vocabularies, starting with a general survey of relevant projects, before dedicating individual sections to a number of recent projects and their impact on LLD vocabularies and models. Finally, in the conclusion, we look ahead at some future challenges for LLD models and vocabularies. The appendix to the paper consists of a brief introduction to the OntoLex-Lemon model.

1.Introduction

The growing popularity of linked data, and especially of linked open data (that is, linked data that has an open license), as a means of publishing language resources (lexica, corpora, data category registers, etc.) calls for a greater emphasis on shared models and vocabularies for linguistic linked data (LLD), since these are key to making linked data resources more reusable and more interoperable (at a semantic level). The purpose of this article is to provide a comprehensive and up-to-date survey of such models, while also touching upon a number of other closely related topics. The article will focus on the latest developments in this area and will both build upon and attempt to complement previous works covering similar territory by avoiding too much repetition and overlap with the latter.

In the following section, Section 2, we give an overview of a number of trends from the last few years which have had/are having/are likely to have, a significant impact on the definition and use of LLD models. This overview is intended to help to locate the present work within a wider research context, something that is particularly useful in an area as active as linguistic linked data, as well as helping readers in navigating the rest of the article. Section 3 gives an overview of related work, and Section 4 an overview of the most widely used models in LLD. Next, in Section 5, we take a look at recent developments in community standards and initiatives: this includes a description of the latest extensions of the OntoLex-Lemon model, as well as a discussion of relevant work in the modelling of corpora and annotations and LLD metadata. Finally, the article contains a section dedicated to the use of models in LLD-centered projects, Section 6, and a concluding section, Section 7 in which we look at some potential future trends.

2.Setting the scene: An overview of relevant trends in LLD

We have decided to focus on three overarching trends in this overview. These are: the FAIRification of data in Section 2.1; the role of projects and community initiatives in Section 2.2; and, finally, the increasing influence of Digital Humanities use cases in Section 2.3. All three of these trends have arguably had a major impact on the development of and need for shared LLD models and vocabularies. The second of the themes listed above – the role of projects and community initiatives in the creation and maintenance of LLD models – has always been important for our topic and continues to be so; the other two, however, have really begun to taken on a marked relevance for LLD over the last few years.

FAIR data (defined below, in Section 2.1) plays a central role in a number of prominent initiatives which have recently been proposed for the promotion of open science and data on the part of numerous organisations and especially of research funding bodies. It would be useful to understand therefore how LLD models can contribute to the creation of FAIR language resources, and this is the topic of Section 2.1. Similarly, the Digital Humanities, an area of research which has rapidly gained ground over the last few years, have also become more and more significant as a both a producer and consumer of LLD, something which has inevitably had an impact on LLD vocabularies and models, see Section 2.3.

2.1.FAIR new world

It should come as no surprise, given the growing importance of Open Science initiatives and in particular those promoting the FAIR guidelines (where FAIR stands for Findable, Accessible, Interoperable and Reusable) for the modelling, creation and publication of data [179], that shared models and vocabularies have begun to take on an increasingly prominent role within numerous disciplines, and not least in the fields of linguistics and language resources. And although the linguistic linked data community has been active in advocating for the use of shared RDF-based vocabularies and models for quite some time now, this new emphasis on FAIR language resources is likely to have a considerable impact in several ways, not least in terms of the necessity for these models and vocabularies to demonstrate greater coverage with respect to the kinds of linguistic phenomena they can describe, and for them to be more interoperable with each other. We will look at one recent and influential series of FAIR related recommendations for models in Section 4 in order to see how they might be applied to the case of LLD. In the rest of this subsection, we will take a closer look at the FAIR principles themselves and show why their widespread adoption is likely to lead to a greater role for LLD models and vocabularies in the future.

In The FAIR Guiding Principles for scientific data management and stewardship [179], the article which first articulated the by-now ubiquitous FAIR principles, the authors state that the criteria proposed by those principles are intended both “for machines and people” and that they provide “‘steps along a path’ to machine actionability”, where the latter is understood to describe structured data that would allow a “computational data explorer” to determine:

– The type of “digital research object”

– Its usefulness with respect to tasks to be carried out

– Its usability especially with respect to licensing issues, with this information represented in a way that would allow the agent to take “appropriate action”.

Publishing data using a standardised, general purpose, data model such as the Resource Description Framework22 (RDF) goes a long way towards facilitating the publication of datasets as FAIR data. Indeed RDF, taken together with the other standards proposed in the Semantic Web stack and the technical infrastructure which has been developed to support it, was specifically intended to facilitate interoperability and interlinking between datasets. In order to ensure the interoperability and re-usability of datasets within a domain, however, it is vital that in addition to more generic data models such as RDF there also exist domain specific vocabularies/terminologies/models and data category registries (compatible with the former). Such resources serve to describe, ideally in a machine actionable way, the shared theoretical assumptions held by a community of domain experts as reflected in the terminology or terminologies in use within that community.

The following specific FAIR principles are especially salient here (emphasis ours):

– F2. data are described with rich metadata.

– I1. (meta)data use a formal, accessible, shared, and broadly applicable language for knowledge representation.

– I2. (meta)data use vocabularies that follow FAIR principles.

It is important to note here that the emphasis placed on machine actionability in FAIR resources (that is, recall, on enabling computational agents to find relevant datasets and resources and to take “appropriate action” when they find them) gives Semantic Web vocabularies/models/registries a substantial advantage over other (non-Semantic Web native) standards in the fields of linguistics and language resources, such as the Text Encoding Initiative (TEI) guidelines33 [164], the Lexical Markup Framework (LMF) [68] or the Morpho-syntactic Annotation Framework (MAF) [40].

For a start, none of these other standards possess a ‘native’, widely-used, widely supported and broadly applicable formal knowledge representation (KR) language for describing the semantics of vocabulary terms in a machine-readable way, or at least nothing as powerful as the Web Ontology Language (OWL)44 or the Semantic Web Rule Language (SWRL).55 This means that in effect there is no standardised way of, for instance, describing the meanings of terms such morpheme, or lemma, etc. in TEI in a machine actionable way. KR languages like OWL allow for precise, axiomatic definitions to be given to terms in a way that permits reasoning to be carried out on them (in the case of OWL there exist numerous, freely available reasoning engines such as Pellet [157]); more generally, they allow for much richer machine actionable metadata descriptions. Furthermore, the use of KR languages like OWL can be allied with already established conceptual modelling techniques and best practises – including the use of top level ontologies such as DOLCE66 or BFO,77 both of which are available in OWL, and ontology validation methodologies such as OntoClean [83] which help to clarify what we mean when we say that one concept is a subtype of another – in order to define vocabularies and models which further enhance the interoperability and machine actionability of linguistic datasets.

Moreover, thanks to the use of a shared data model with a powerful native linking mechanism, LLD datasets can easily be integrated with, and therefore enriched by, linked data datasets belonging to other domains, for instance, geographical and historical datasets or gazetteers and authority lists. Indeed, OWL vocabularies, such as PROV-O,88 make it straightforward to add complex, structured information describing when something happened or to make hypotheses explicit99 – all of which contributes towards the creation of ever richer and more machine actionable metadata for linked data language resources.

The pursuit of the FAIR ideal has in fact encouraged the definition of new ways of publishing linked data datasets, which offer additional opportunities for the re-use and integration of such datasets in an automatic or semi-automatic way. These include nanopublications, cardinal assertions and knowlets.1010 The potential of these new approaches for discovering new facts as well as for comparing different concepts together and tracking how single concepts change and evolve is well described in [128].

When it comes to language resources we are faced with a rich array of highly structured datasets arranged into different types (lexica, corpora, treebanks, etc) according to a series of widely shared conventions – something that would seem to lend itself well to making such resources FAIR in the machine-oriented spirit of the original description of those principles. However, in order to ensure the continued effectiveness of linked data and the Semantic Web in facilitating the creation of FAIR resources, it is critical that pre-existing vocabularies/models/data registries be re-used whenever possible in the modelling of language resources. In many instances, these models will not have sufficient coverage to capture numerous kinds of use cases, in which case we will have to define new extensions to these models (an ongoing process and one which is a major theme of this article, see for instance Section 5.1), in other cases it may be necessary to create training materials suitable for different groups of users. Part of the intention of this article, together with the foundational work carried out in [9], is to provide an overview of what exists in terms of LLD-focused models, to look at those areas and use-cases which have so far gained the most attention and to highlight those which are so far underrepresented.

2.2.The importance of projects and community initiatives in LLD

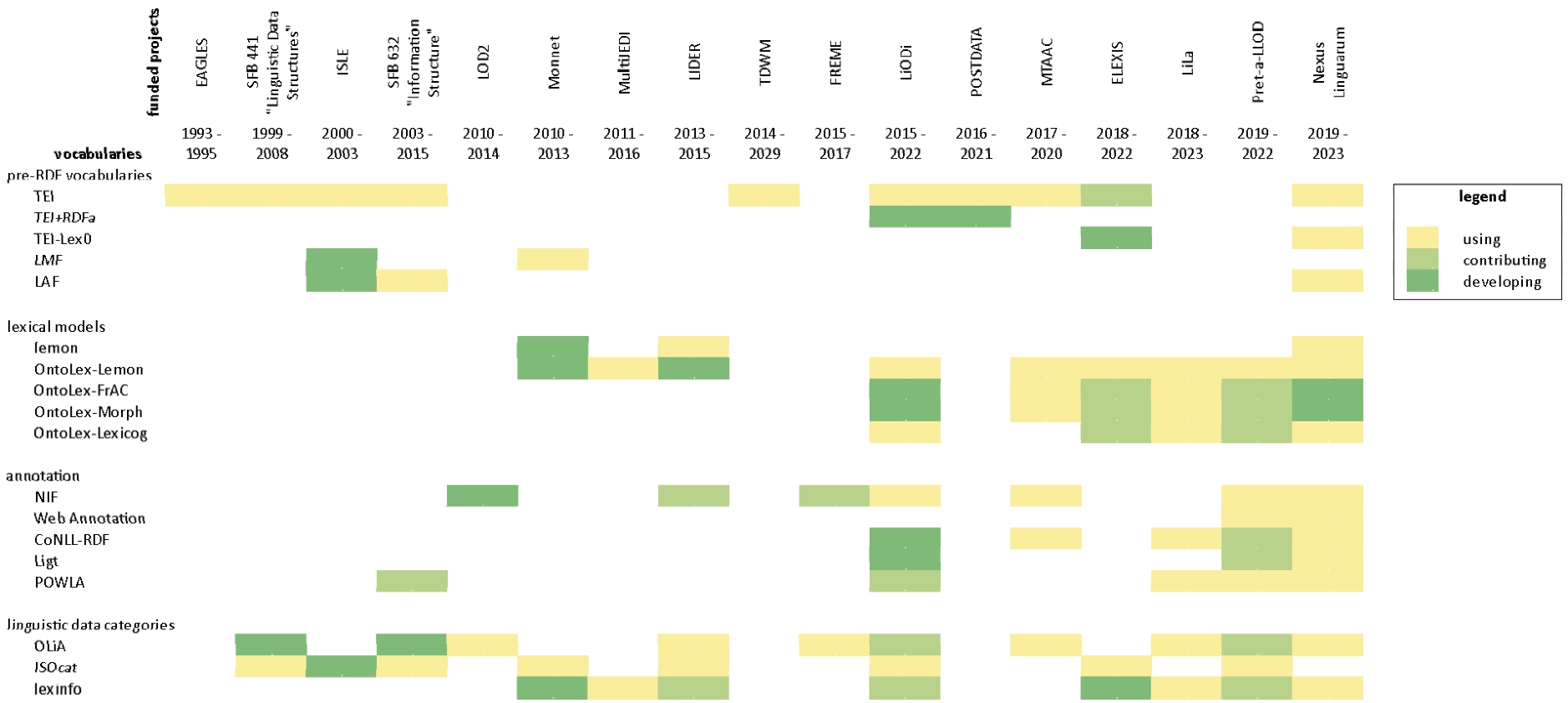

One significant indicator of the success which LLD has enjoyed in the last few years is the variety of newly funded projects which have emerged in this period, and which have included the publication of linguistic datasets as linked data as a core theme. These include projects both at a continental or transnational level – notably European H2020 projects,1111 ERCs1212 and COST actions1313 – as well as at the national and regional levels. Arguably, this recent success in obtaining project funding reflects a wider recognition of the usefulness of linked data as a means of ensuring the interoperability and accessibility of language resources. It also demonstrates the ongoing maturation of the field, as LLD continues to be successfully applied to new domains and use cases within the context of such projects. In addition, these projects also offer us numerous examples of the application of some LLD vocabularies and models, which we look at in this article in the creation of medium to large-scale language resources.

We have therefore decided to dedicate a whole section of the present article, Section 6, to a detailed discussion of the current situation as regards research projects and LLD models and vocabularies. This includes a detailed overview of the area, Section 6.1, along with an extended description of a number of projects which we regard as the most significant from the point of view of LLD models and vocabularies. These are (in order of appearance): the Linked Open Dictionaries (LiODi) project (Section 6.2.1); the Poetry Standardization and Linked Open Data (POSTDATA) project (Section 6.2.2); the European Lexicographic Infrastructure (ELEXIS) project Section 6.2.3; the LiLa: Linking Latin ERC project (Section 6.2.4); the Prêt-à-LLOD project (Section 6.2.5); the European network for Web-centred linguistic data science (NexusLinguarum) COST action (Section 6.2.6). A list of all the projects described in Section 6 can be found in Table 3.

Note, however, that although the projects which we discuss in Section 6 have, in many cases, set the agenda for the development of LLD models and vocabularies, much of the actual work on the definition of these resources was carried out – and is being carried out – within community groups, such as the W3C OntoLex group. We therefore include an update on community standards and initiatives in Section 5. These include a subsection on the latest activities in the OntoLex group (Section 5.1); a discussion of recent work on LLD models for corpora and annotation (Section 5.2); and similarly for what concerns models and vocabularies for LLD resource metadata (Section 5.3). Section 6.1.2 features a discussion of the relationship between community initiatives and projects.

2.3.The relationship of LLD to the digital humanities

Several of the projects discussed in this article fall under the umbrella of the Digital Humanities (DH). For this and other reasons this is the third major trend which we want to highlight here, since it represents a move away (or more precisely a branching off) from LLD’s beginnings in computational linguistics and natural language processing (although these latter two still perhaps represent the majority of applications of LLD), and this we claim is something that is leading to a shift in emphasis in the definition and coverage of LLD models. The overlap between LLD and DH is especially apparent in the modelling of corpora annotation (Section 5.2) and in the context of linked data lexicographic use cases (see Section 5.1.1 and Section 6.2.3).

One use case which clearly highlights these shared concerns is the publication of retro-digitised dictionaries as LLD lexica (a major theme of the ELEXIS project, see Section 6.2.3). This use case confronts us with the challenge of formally modelling both the content of a lexicographic work, that is, the linguistic descriptions which it contains, and those aspects which pertain to it as a physical text to be represented in digital form. In the latter case, this includes the representation of (elements of) the form of the text, i.e., its structural layout and overall visual appearance;1414 we may also wish to model different aspects of the history of the lexicographic work as a physical text.1515 In fact, as we touch upon in our description of the OntoLex-Lemon Lexicography module in (Section 5.1.1), the structural division of lexicographic works into textual units such as entries and senses is not always isomorphic to the representation of the lexical content of those units using OntoLex-Lemon classes such as LexicalEntry and LexicalSense.

All of this calls for a much richer provision of metadata categories than has been considered up till now for LLD lexica: both at the level of the whole work and at the level of the individual entry. It also requires the capacity to model salient aspects of the same artefact or resource at different levels of description (something which is indeed offered by the OntoLex-Lemon Lexicography module, see Section 5.1.1). We discuss metadata challenges in humanities use cases more generally in Section 5.3. A related topic is the relationship between notions such as word taken from the lexical/linguistic and philological points of view and, more broadly, the relationship between linguistic and philologically motivated annotations of text. This latter topic which is just starting to gain attention within the context of LLD is being studied both at the level of community initiatives (see Section 5.2) and in projects such as LiLa (see Section 6.2.4) and POSTDATA (Section 6.2.2).

An additional series of challenges arises in the consideration of resources for classical and historical languages, or indeed, historical stages of modern languages. For instance in the case of lexical resources for historical languages we often come up against the necessity of having to model attestations (discussed in Section 5.1.3) and these can sometimes cite reconstructed texts, something that underscores the desirability of being able to represent different scholarly and philological hypotheses. This is a need which also arises in the context of modelling of word etymologies. The LiLa project [134] (Section 6.2.4 for a more detailed description) provides a good example of the challenges and opportunities of adopting the LLD model to represent linguistic (meta)data for both lexical and textual resources for a classical language (Latin).

One extremely important (non RDF-based) standard for encoding documents in the Digital Humanities is TEI/XML. We discuss in this article the relationship between TEI and RDF-based annotation approaches (in Section 5.2.1), introduce the new lexicographic TEI-based standard TEI Lex-0, and describe current work on a crosswalk between OntoLex-Lemon and the latter (in Section 6.2.3).

Finally, see Section 6.1.1 for an overview of a number of projects combining DH and LLD.

3.Related work

This article is intended, among other things, to both complement and to update a previous general survey on models for representing LLD, published by Bosque-Gil et al. in 2018 [9]. Although we are now only four years on from the publication of that work, we feel that enough has happened in the intervening time period to justify a new survey article. In addition, our intention is to cover a much wider range of topics than the previous article. We also feel that our overall focus is quite different. Broadly speaking, that previous work offered a classification of various different LLD vocabularies according to the different levels of linguistic description that they covered. The current paper concentrates more on the use of LLD vocabularies in practise and on their availability (this is very much how we have approached the survey in Section 4). Moreover, the present article includes a detailed discussion of recent work in the use of LLD models and vocabularies in corpora and annotation, Section 5.2, as well as an extensive section on metadata, Section 5.3, neither of which were given the same detailed level of coverage in [9]. Additionally, we also cover the following initiatives which were not discussed in the previous article because they had not yet got underway:

4.LLD models: An overview

The current section gives an overview of some of the most well known and widely used models and vocabularies in LLD. A summary of the models discussed in the current section (and in the whole article) can be found in Tables 1 and 2 (with Table 1 dealing with published LLD models/vocabularies and 2 with models/vocabularies that are currently unavailable or no longer updated). An account of some of the latest developments with regard to these models, on the other hand, can be found in Section 5. We classify each of the models described in this section according to the scheme given in the linguistic LOD cloud diagram1616 (the cloud itself is described in [28]). These are:

Table 1

Summary of published LLD vocabularies

| Summary | |||||

| Name | Other vocabularies/models used | LLD category | Licenses | Versions (at time of writing 26/07/21) | Extended coverage in current article |

| OntoLex-Lemon | CC, DC, FOAF, SKOS, XSD | Lexica and Dictionaries | CC0 1.0 | Version 1.0, 2016 (but this is closely based on the prior lemon model [121]) | Section 4.2, Section 5.3.3 and Appendix x |

| Lexicog (OntoLex-Lemon) | DC, LexInfo, SKOS, VOID, XSD | Lexica and Dictionaries | CC0 | Version 1.0, (2019-03-08) | Section 4.2 and Section 5.1.1 |

| MMoOn | DC, FOAF, GOLD, LexVo, OntoLex-Lemon, SKOS, XSD | Terminologies, Thesauri and KBs (Morphology) | CC-BY 4.0 | Version 1.0, 2016 | Section 4.3 |

| Web Annotation Data Model (OA) | AS, FOAF, PROV, SKOS, XSD | Corpora and Linguistic Annotations | W3C Software and Document Notice and License | Version “2016-11-12T21:28:11Z” | Section 4.1 and Section 5.2.3 |

| NLP Interchange Format (NIF Core) | DC, DCTERMS, ITSRDF, levont, MARL, OA, PROV, SKOS, VANN, XSD | Corpora and Linguistic Annotations | Apache 2.0 and CC-BY 3.0 | Version 2.1.0 | Section 4.1 and Section 5.2.2 |

| POWLA | FOAF, DC, DCT, | Corpora and Linguistic Annotations | NA | Last Updated 2018-04-03 | Section 5.2 |

| CoNLL-RDF | DC, NIF Core, XSD | Corpora and Linguistic Annotations | Apache 2.0 and CC-BY 4.0 | Last Updated 2020-05-26 | Section 5.2.4 |

| Ligt | DC, NIF Core, OA | Corpora and Linguistic Annotations | NA | Version 0.2 (2020-05-26) | Section 5.2.4 |

| META-SHARE | CC, DC, DCAT, FOAF, SKOS, XSD | Linguistic Resource Metadata | CC-BY 4.0 | Version 2.0 (pre-release) | Section 4.4 and Section 5.3.2 |

| OLiA | DCT, FOAF, SKOS | Linguistic Data Categories | CC-BY-SA 3.0 | Version last updated 27/02/20 | Section 4.5 |

| LexInfo | CC, Ontolex, TERMS, VANN | Linguistic Data Categories | CC-BY 4.0 | Version 3.0, 14/06/2014 | Section 4.5 |

| LexVo | FOAF, SKOS, SKOSXL, XSD | Typological Databases | CC-BY-SA3.0 | Version 2013-02-09 | Section 4.6 |

Table 2

Other LLD vocabularies discussed in this paper

| Summary | |||

| Name | LLD category | Status (at time of writing 26/07/21) | Extended coverage in current article |

| OntoLex-Lemon: FrAC | Lexica and Dictionaries | Under Development | Section 5.1.3 |

| OntoLex-Lemon: Morphology | Lexica and Dictionaries | Under Development | Section 5.1.2 |

| PHOIBLE | Terminologies, Thesauri and KBs | Unavailable | Section 4.3 |

| FRED | Corpora and Linguistic Annotations | Project Specific Vocabulary | Section 5.2 |

| NAF | Corpora and Linguistic Annotations | Project Specific Vocabulary | Section 5.2 |

| GOLD | Linguistic Data Categories | No Longer Updated | Section 4.5 |

We describe our methodology for the rest of the section below. In Section 4.7 we discuss tools and platforms for the publication of LLD.

Our approach to classification As this section is intended to be an overview we will not give detailed descriptions of single models or vocabularies here (several of these models and vocabularies are described in more detail in the rest of the article, or in the case of OntoLex-Lemon in the appendix, and others receive a more detailed treatment in [9] and [36]). Instead, we describe them on the basis of a number of criteria, many of which are related to their status as FAIR models and vocabularies. In doing so we refer to a recent survey on FAIR Semantics [88], the result of a dedicated brainstorming workshop and subsequently an evaluation session of the FAIRsFAIR project.1717 This report outlines a number of recommendations and best practices for FAIR semantic artefacts where the latter are defined as “machine-actionable and -readable formalisation[s] of a conceptualisation enabling sharing and reuse by humans and machines”; this term is intended to include taxonomies, thesauri and ontologies.

Even though all the recommendations listed in [88] are important, for reasons of space, we have selected the following subset on the basis of their salience to the set of models and vocabularies under discussion:

– (P-Rec 2) Globally Unique, Persistent, and Resolvable Identifiers must be used for Semantic Artefact Metadata Records. Metadata and data must be published separately, even if it is managed jointly;

– (P-Rec 4) Semantic Artefact and its content should be published in a trustworthy semantic repository;

– (P-Rec 6) Build semantic artefact search engines that operate across different semantic repositories;

– (P-Rec 10) Foundational Ontologies may be used to align semantic artefacts;

– (P-Rec 13) Crosswalks, mappings and bridging between semantic artefacts should be documented, published and curated;

– (P-Rec 16) The semantic artefact must be clearly licensed for use by machines and humans.

We use (P-Rec 16) as a guide in analysing the resources covered in the article. So that we point out cases where licensing information is available as machine actionable metadata, using properties like DCT:license and URI’s such as https://creativecommons.org/publicdomain/zero/1.0/ as this practice enhances the re-usability of those resources. Recommendations (P-Rec 4) and (P-Rec 6), on the other hand, alert us to the value of being able to find models and vocabularies on specialised search engines/archives (findability being one of the pillars of FAIR). As we will see, several of the models discussed below are listed on the Linked Open Vocabulary (LOV)1919 search engine2020 [170] and the DBpedia archivo ontology archive.2121

In addition to the textual descriptions of different LLD models given in the rest of this section, we also give a tabular summary of the most well-known/stable/widely available2222 of these models in Table 1; this table also refers, in relevant cases, to sections of the paper where more details about a model are given.

Every one of the models listed in the table uses the RDFS vocabulary, and each one of them is an OWL ontology. We also list the additional models/vocabularies which they make use of in the table on a case by case basis. These include the following well known ones: XML Schema Definition2323 (XSD); the Friend of a Friend Ontology2424 (FOAF); the Simple Knowledge Organisation System2525 (SKOS); Dublin Core2626 (DC); Dublin Core Metadata Initiative (DCMI) Metadata Terms;2727 the Data Catalog Vocabulary2828 (DCAT), described also in Section 5.3; and the PROV Ontology2929 (PROV-O).

In addition, the table also mentions the following vocabularies.

– Activity Streams(AS): a vocabulary for activity streams.3030

– GOLD: an ontology for describing linguistic data, which is described in Section 4.5.

– MARL: a vocabulary for describing and annotating subjective opinions.3131

– ITSRDF: an ontology used within the Internationalization Tag Set.3232

– VANN: a vocabulary for annotating vocabulary descriptions.3434

– SKOS-XL: an extension of SKOS with extra support for “describing and linking lexical entities”.3535 SKOS and SKOS-XL are, along with lemon and its successor OntoLex-Lemon, amongst the most well known ways of enriching linked data taxonomies and conceptual hierarchies with linguistic information. We will look at the use of a SKOS-XL vocabulary in the context of a project on the classification of folk tales in Section 6.

4.1.Vocabularies and models for corpora and linguistic annotations

Linguistic annotation for the purposes of creating digital editions, corpora, and linking texts with external resources etc, has long been a topic of interest within the context of RDF and linked data. Coexisting with relational databases, XML-based formats (most notably, TEI, see Section 5.2) or simply text-based formats, RDF-based annotation models have been steadily undergoing development and are increasingly being taken up in research and industry.

Currently there are two primary RDF vocabularies which are being widely used for annotating texts. These are NLP Interchange Format (NIF),3636 used mostly in the language technology sector and Web Annotation,3737 formerly known as Open Annotation (abbreviated here as OA), used in digital humanities, life sciences and bioinformatics. Each vocabulary has its own particular advantages and shortcomings, and a number of proposals to extend them have been proposed. Above all, however, there is a need for synchronization between the two. Both are listed in LOV3838 and archivo3939 (the NIF core in the case of NIF4040). The Web Annotation model, although it is covered by a W3C software and document notice and license, does not express this information as machine actionable metadata; while NIF does with its licensing information. More details about both models and their recent developments are given in Section 5.2.

Other vocabularies described in that section include POWLA, CoNLL-RDF and Ligt. The first of these, POWLA,4141 is available on archivo,4242 the only one of the three that has been made available in this way. CoNLL-RDF4343 expresses version info as a string using the owl:versionInfo property and is covered by a CC-BY 4.0 license as specified in the LICENSE.data page.4444

4.2.Lexica and dictionaries

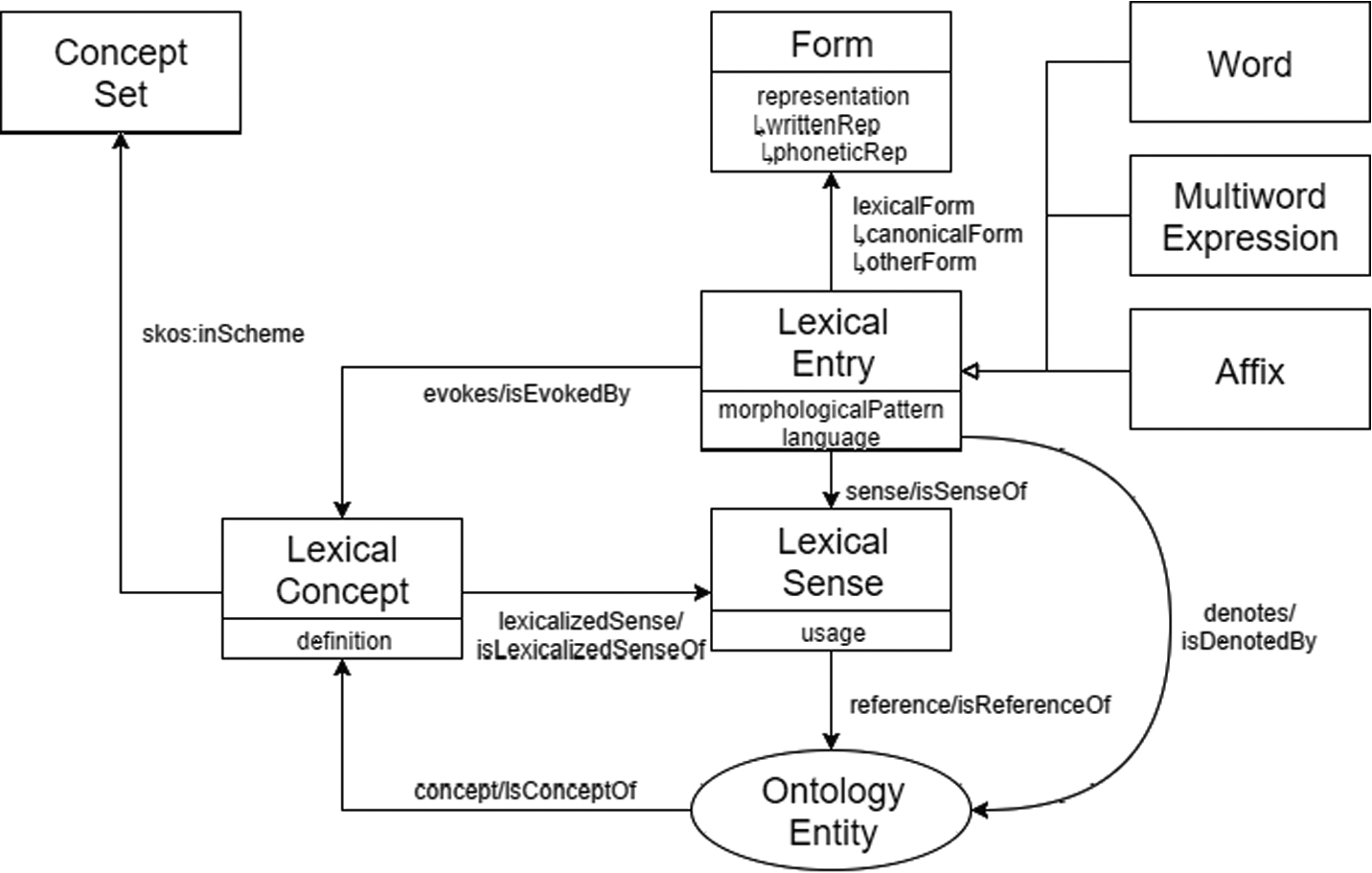

The most well known model for the creation and publication of lexica and dictionaries as linked data is OntoLex-Lemon4545 [39] (see Appendix x for an introduction to the model with examples, and Section 5.1 for extensions and further developments). This was an output of the W3C Ontology-Lexica working group (we will refer to this as the OntoLex group in what follows) which also manages its ongoing development along with the publication of further extensions. OntoLex-Lemon is based on a previous model, the LExicon Model for ONtologies (lemon) [121] and as was the case with its predecessor, it is intended as a model for enriching ontologies with linguistic information and not for modelling dictionaries and lexica per se. Thanks to its popularity, however, it has come to take on the status of a de facto standard for the modelling and codification of lexical resources in RDF in general. Resources which have been modelled using OntoLex-Lemon include the LLD version of the Princeton Wordnet,4646 DBnary (the linked data version of Wiktionary) [155], and the massive multilingual knowledge graph Babelnet [60].

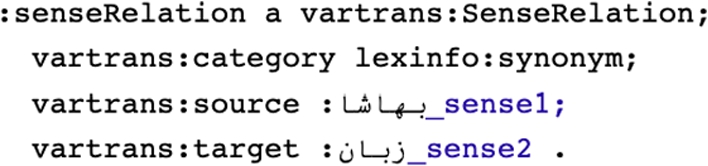

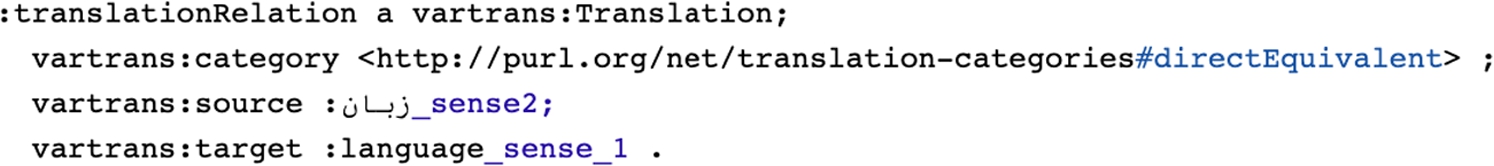

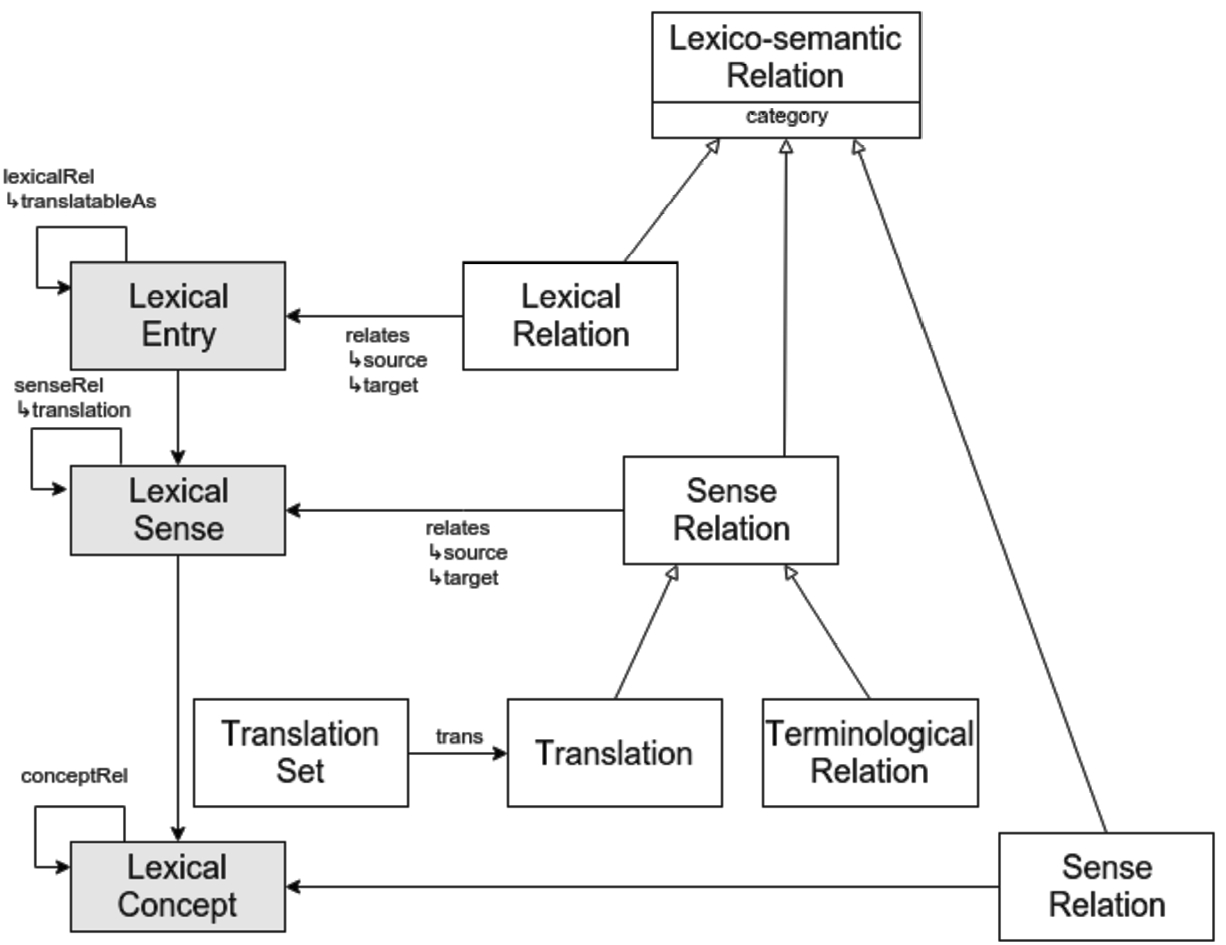

The OntoLex-Lemon model is modular and consists of a core module along with modules for Syntax and Semantics,4747 Decomposition,4848 and Variation and Translation,4949 as well as a dedicated metadata module, lime5050 (all of which are described in Appendix x, except for lime which is described in Section 5.3.3).

OntoLex-Lemon is available on LOV as is its predecessor lemon.5151 All of its individual modules are listed separately: the core;5252 lime;5353 vartrans;5454 synsem;5555 the decomp module.5656 Three of its modules are available on archivo, the core:5757 the lime metadata module5858 and the Variation and Translation module.5959 All the OntoLex-Lemon modules have their licensing information (they are all CC0 1.0) described with RDF triples using the CC vocabulary6060 with a URI as an object. Version information is described using owl:versionInfo.

The OntoLex-Lemon Lexicography module,6161 described in more detail in Section 5.1.1, was published separately from OntoLex-Lemon. It is not available on LOV yet, but it is available on archivo.6262 Its licensing information (CC-Zero) is described with RDF triples using the CC and DC vocabularies.6363 Version information is described using owl:versionInfo.

4.3.Vocabularies for terminologies, thesauri and knowledge bases

The Simple Knowledge Organisation System (SKOS) is a W3C recommendation for the creation of terminologies and thesauri, or more broadly speaking, knowledge organisation systems.6464 We will not discuss it in any depth here since it is a general purpose vocabulary which has applications well beyond the domain of language resources.

In terms of specialised vocabularies or models for the modelling of linguistic knowledge bases – and aside from linguistic data category registries, which will be discussed in Section 4.5 – we can list two prominent ones here. The first is MMoOn ontology6565 which was designed for the creation of detailed morphological inventories [104]. It does not currently seem to be available on any semantic repositories/archives/search engines, but it does have its own dedicated website6666 which offers a SPARQL endpoint.6767 Its licensing information (it has a CC-BY 4.0 license) is available as triples using dct:license with a URI as an object.

PHOIBLE is an RDF model for creating phonological inventories [9]. As of the time of writing, PHOIBLE data was no longer available as a complete RDF graph, but only in its native (XML) format from which RDF fragments are dynamically generated. The original data remains publicly available,6868 but on the PHOIBLE website, it is only possible to browse and export selected content into RDF/XML.6969 Since it no longer provides resolvable URIs for its components, PHOIBLE data does not fit within the narrower scope of LLD vocabularies anymore. It does, however, maintain a non-standard way of linking, as it has been absorbed into the Cross-Linguistic Linked Data infrastructure [67, CLLD] (along with other resources from the typology domain). CLLD datasets and their RDF exports continue to be available as open data under https://clld.org/.7070

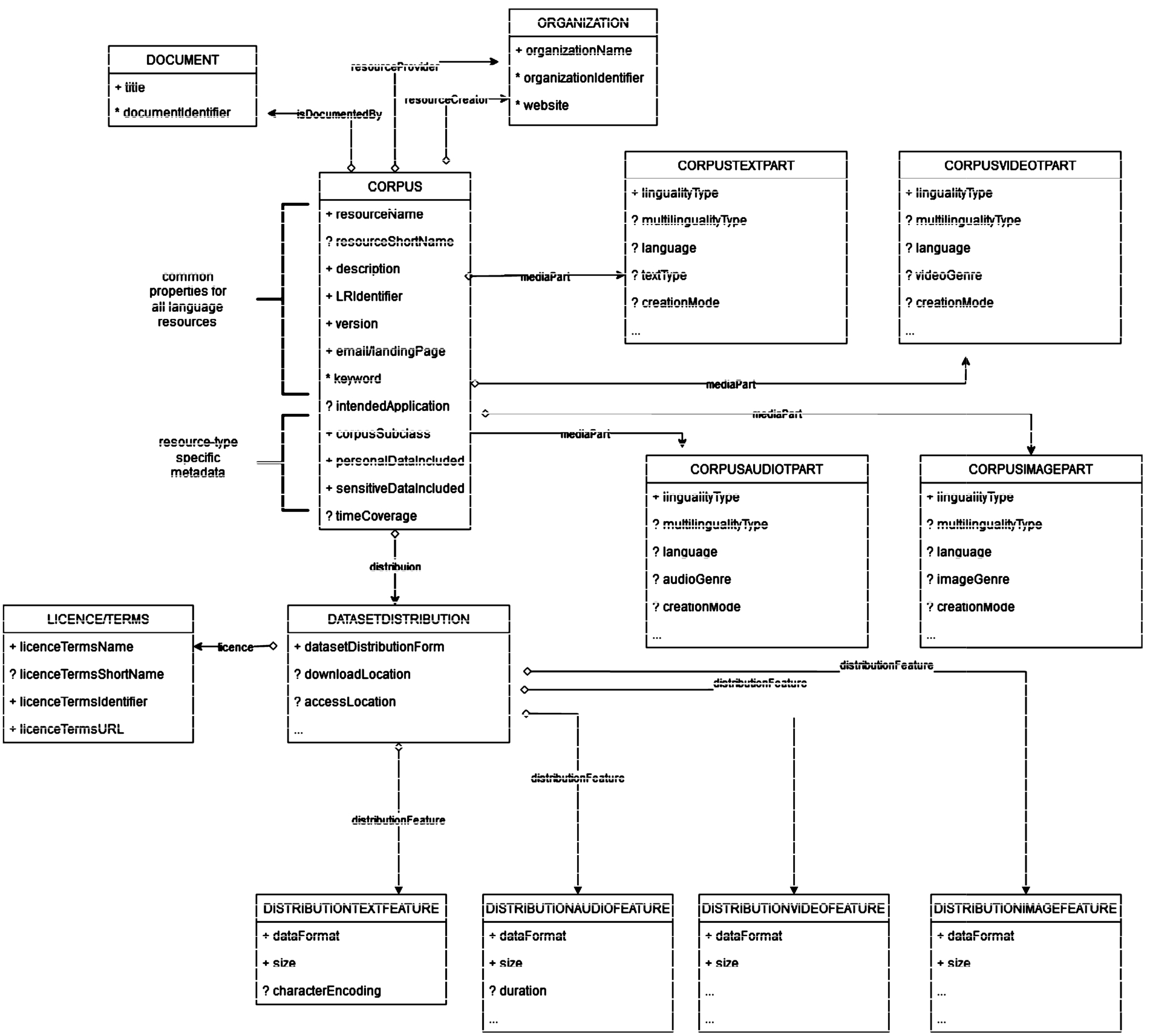

4.4.Linguistic resource metadata

Due to the importance of this topic, we give a more detailed overview in Section 5.3. Here, we consider only accessibility issues for the two models for language resource metadata, which are described in Section 5.3: The METASHARE ontology7171 and lime. The latter has been previously introduced and is described in more detail in Section 5.3.3. The former is currently in its pre-release version 2.0 (the last update being 2020-03-20). Its license information (it has a CC-BY 4.0 license) is available as triples using dct:license with a URI as an object.

4.5.Linguistic data categories

History Looking back to 2010, two major registries were in widespread use by different communities for addressing the harmonization and linking of linguistic resources via their data categories.

In computational lexicography and language technology, the most widely applied terminology repository was ISOcat [98] which provided human-readable and XML-encoded information about linguistic data categories that were applicable to tasks such as linguistic annotation, the encoding of electronic dictionaries and the encoding of language resource metadata via persistent URIs.

In the field of language documentation and typology, the General Ontology of Linguistic Description (GOLD) emerged in the early 2000s [61], having been originally developed in the context of the project Endangered Metadata for Endangered Languages Data (E-MELD, 2002–2007).7272 GOLD stood out in particular because of its excellent coverage of low resource languages. In the RELISH project, a curated mirror of GOLD-2010 was incorporated into ISOcat [3]. Unfortunately, since then, GOLD development has stalled and, while the resource is still being maintained by the LinguistList (along with the data from related projects) and still remains accessible,7373 it has not been updated since [110] (and for this reason we have not included it in our summary table). In parts, its function seems to have been taken over by ISOcat, but it is worth pointing out here that the ISOcat registry exists only as a static, archived resource, and is no longer an operational system.

The current situation The ‘official’ successor of ISOcat, the CLARIN Concept Registry is briefly discussed in Section 5.3 below, but it is not strictly speaking a linked data vocabulary. Another successor of ISOcat is the LexInfo ontology,7474 the data category register used in OntoLex-Lemon and which has re-appropriated many of the concepts contained in ISOcat for use within the domain of lexical resources. Currently in its third version, LexInfo can be found both on the LOV search engine7575 and on archivo,7676 it appears both times however in its second version. Version 3.0 has been under development since late 2019 in a community-guided process via GitHub,7777 and is not registered with either service, yet. LexInfo has a (CC-BY 4.0) license, which is described with RDF triples using the CC vocabulary and DCT, with a URI as an object in both cases. Version information is described using owl:versionInfo.

A separate terminology repository for linguistic data categories in linguistic annotation exists: the Ontologies of Linguistic Annotation [35, OLiA].7878 OLiA has been in development since 2005 in an effort to link community-maintained terminology repositories such as GOLD, ISOcat or the CLARIN Concept Registry with annotation schemes and domain- or community-specific models such as LexInfo or the Universal Dependencies specifications by means of an intermediate “Reference Model”. OLiA consists of a set of modular, interlinked ontologies and is designed as a native linked data resource. Its primary contributions are to provide machine-readable documentation of annotation guidelines and to link together other terminology repositories. It has been suggested that such a collection of linking models, developed in an open source process via GitHub, may be capable of circumventing some of the pitfalls of earlier, monolithic solutions of the ISOcat era [24]. At the moment, OLiA covers annotation schemes for more than 100 languages, for morpho-syntax, syntax, discourse and aspects of semantics and morphology. OLiA has a (CC-BY 4.0) license; this is described using the Dublin Core property license with a URI as an object.

4.6.Vocabularies for typological datasets

Relevant resources and initiatives Linguistic typology is commonly defined as the field of linguistics that studies and classifies languages based on their structural features [63]. The field of linguistic typology has natural ties with language documentation, and accordingly, considerable work on linguistic typology and linked data has been conducted in the context of the GOLD ontology (see above, Section 4.5). We can identify the following relevant datasets.

One of the main contributors and advisors to the scientific study of typology is the Association for Linguistic Typology (ALT).7979 They facilitate the description of the typological patterns underlying datasets. One of the most well-known resources that ALT makes available is the World Atlas of Language Structures (WALS)8080 [59,85] which is a large database of phonological, grammatical, and lexical properties of languages gathered together from various descriptive materials. This resource can both be used interactively online and is also downloadable. The CLLD8181 (Cross-Linguistic Linked Data) project integrates WALS, thus, offering a framework that structures this typological dataset using the Linked Data principles.

Another collection that provides web-based access to a large collection of typological datasets is the Typological Database System (TDS) [57,126,127]. The main goals of TDS are to offer users a linguistic knowledge base and content metadata. The knowledge base includes a general ontology and dictionary of linguistic terminology, while the metadata describes the content of the term ontology databases. TDS supports a unified querying across all the typological resources hosted with the help of an integrated ontology. The Clarin Virtual Language Observatory (VLO)8282 incorporates TDS among its repositories.

Finally, another group of datasets relevant for typological research include large-scale collections of lexical data, as provided, for example, by PanLex8383 and Starling.8484 An early RDF edition of PanLex was described by [177] and was incorporated in the initial version of the Linguistic Linked Open Data cloud diagram; at the time of writing, however, this version does not seem to be accessible anymore. Instead, CSV and JSON dumps are being provided from the PanLex website. On this basis, [25] describe a fresh OntoLex-Lemon edition of PanLex (and other) data as part of the ACoLi Dictionary Graph.8585 However, they currently do not provide resolvable URIs, but rather redirect to the original PanLex page. The authors mention that linking would be a future direction, and in preparation for this, they provide a TIAD-TSV edition of the data along with the OntoLex-Lemon edition, with the goal to adapt techniques for lexical linking developed in the context of, for example, the ongoing series of shared tasks on translation inference across dictionaries (TIAD).8686 As for the specific modelling requirements of lexical datasets in linguistic typology, these are not fundamentally different from other forms of lexical data. They do, however, require greater depth with respect to identifying and distinguishing language varieties. This was one of the driving forces behind the development of Glottolog (see Section 5.3.4 below).

Vocabularies for typological datasets In terms of linked data vocabularies and models which are relevant for the creation of typological databases, we can identify LexVo8787 [51]. This vocabulary bridges the gap between linguistic typology and the LOD community and brings together language resources and linked data entity relationships. Indeed, the project behind LexVo has managed to link a large variety of resources on the Web, besides providing global IDs (URIs) for language-related objects. LexVo is available on archivo8888 but is not yet available on LOV. Further discussion of this vocabulary can be found in Section 5.3.4

4.7.Excursus: Tools and platforms for the publishing of LLD

The availability of tools and platforms for the editing, conversion and publication of LLD resources, on the basis of the models which we discuss in this article, is critical for the adoption of those models amongst a wider community of end users. It can be especially important for users who are unfamiliar with the technical details of linked data and the Semantic Web, and yet who are highly motivated to create and/or make use of linked data resources. Such tools/platforms are helpful, for instance, when it comes to the validation and post-editing by domain experts of language resources which have been generated automatically or semi-automatically.

In terms of existing tools or software which offer dedicated provision for the models which we look at in this article, we can mention VocBench and LexO for OntoLex-Lemon. Both of these are web-based platforms which allow for the collaborative development of computational lexical resources by a number of users. In the case of the VocBench platform, currently in its third release [160], users can also develop OWL ontologies and SKOS thesauri as well as OntoLex-Lemon lexica. LexO focuses on assisting users in the creation of OntoLex-Lemon lexical resources and was originally developed in the context of DitMaO a project on the medico-botanical terminology of the Old Occitan language [5]. A first generic (i.e., non-project specific) version of LexO, LexO-lite, is available at https://github.com/andreabellandi/LexO-lite.

Finally, we should mention LLODifier8989 a suite of tools for creating and working with LLD which is currently being developed by the Applied Computational Linguistics Lab of the Goethe University Frankfurt. These include the vis visualization routines9090 for working with NIF and unimorph which works with CoNLL-RDF.

5.An overview of developments in LLD community standards and initiatives

Summary and overview The current section comprises an extensive overview of recent developments in various different LLD community standards and initiatives as they relate to LLD models and vocabularies. In particular, it focuses on three areas that we believe have either been the most active or most prominent over the last few years. These are lexical resources (Section 5.1), annotation and corpora (Section 5.2), and metadata (Section 5.3). We have referred to these as community standards/initiatives because they have been pursued or developed as community efforts rather than within a single research group or project. Membership in these communities is (often) open to all, rather than being limited to members of a specific project or to experts nominated by a standards body. The intention being to allow for the participation of a wider range of stakeholders, as well as encouraging the collection of a wider variety of use-cases than might otherwise be possible.

One of the most notable community efforts in the context of LLOD is the Open Linguistics Working Group (OWLG) of Open Knowledge International.9191 It was OWLG which first introduced the vision of a Linguistic Linked Open Data cloud in 2011 [27], and it was OWLG’s activities, most notably the organization of the long-standing series of international Workshops on Linked Data in Linguistics (LDL, since 2012), as well as the publication of the first collected volume on the topic of Linked Data in Linguistics [34], which ultimately led to the implementation of LLOD cloud in 2012 (something which was celebrated with a special issue of the Semantic Web Journal published in 2015 [125]). The LLOD cloud, now hosted under http://linguistic-lod.org/, has been enthusiastically embraced, with the Linguistics category becoming a top-level category in the 2014 LOD cloud diagram, and since 2018, it has represented the first LOD domain sub-cloud.

Around the same time, a number of more specialized initiatives emerged for which the Open Linguistics Working Group acted and continues to act as an umbrella organisation, facilitating information exchange among them and between these initiatives and the broader circles of linguists interested in linked data technologies and knowledge engineers interested in language. Currently, the main activities of the OWLG are the organization of workshops on Linked Data in Linguistics (LDL), the coordination of datathons such as Multilingual Linked Open Data for Enterprises (MLODE 2012, 2013) and the Summer Datathon in Linguistic Linked Open Data (SD-LLOD, 2015, 2017, 2019), maintaining the Linguistic Linked Open Data (LLOD) cloud diagram9292 and continued information exchange via a shared mailing list9393

Over the years, the focus of discussion has shifted from the OWLG to more specialized mailing lists and communities. At the time of writing, particularly active community groups concerned with data modelling include

– the W3C Community Group Ontology-Lexica,9494 originally working on ontology lexicalization, the group extended their activities after the publication of the OntoLex-Lemon vocabulary (May 2016) and now represents the main locus for discussing the modelling of lexical resources with web standards and in LL(O)D. See Section 5.1.

– the W3C Community Group Linked Data for Language Technology,9595 with a focus on language resource metadata and linguistic annotation with W3C standards

A discussion of the relationship between community initiatives and projects can be found in Section 6.1.2 below.

5.1.Lexical resources: OntoLex-Lemon and its extensions

Summary In this section we describe some of the most recent work that has been carried out on the OntoLex-Lemon model,9696 both within and outside of the ambit of the W3C OntoLex group. With regard to the former case, we discuss three of the latest extensions to the model (the first of which has been published with the other two are still currently under development) in Sections 5.1.1, 5.1.2, and 5.1.3. In Section 5.1.4 we look at a number of new extensions to OntoLex-Lemon which have emerged independently of the W3C OntoLex group over the last two years and which moreover have not been discussed in [9] (for an in-depth discussion of such developments prior to 2018 please refer to the latter paper).

Note that the use of OntoLex-Lemon in a number of different projects is described in Section 6.

5.1.1.The OntoLex-Lemon lexicography module (lexicog)

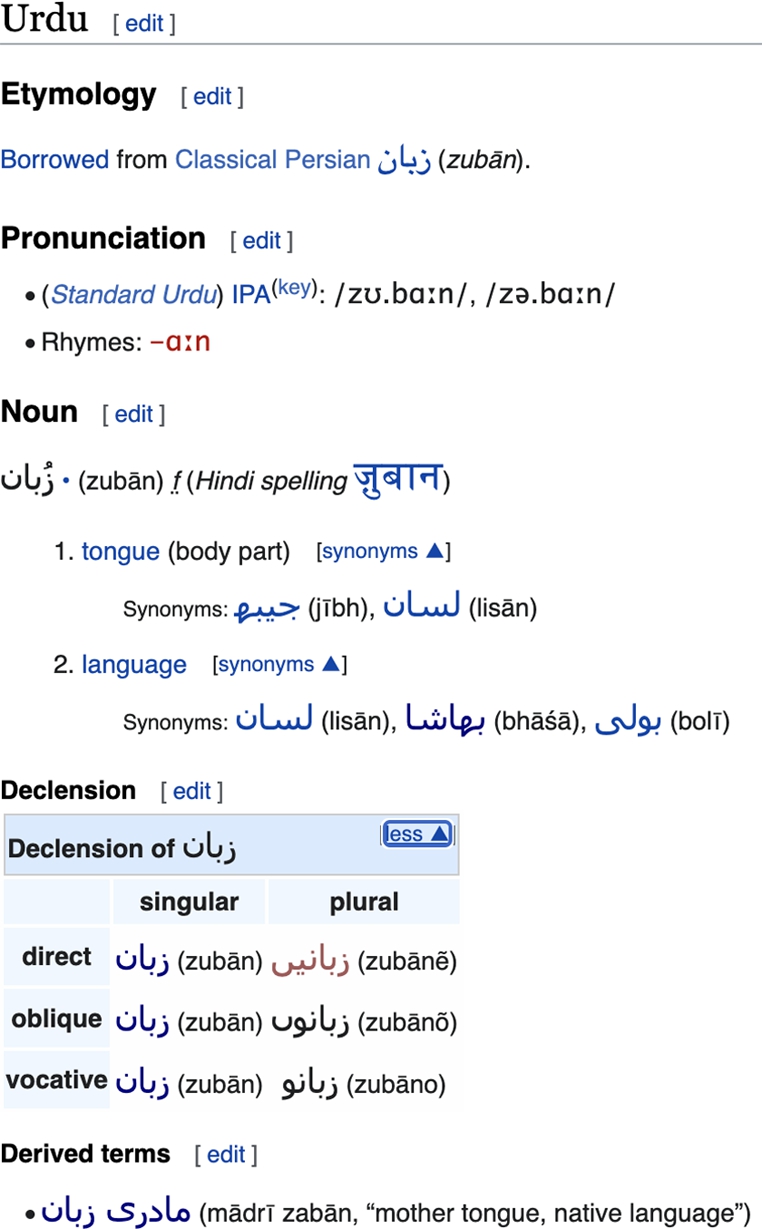

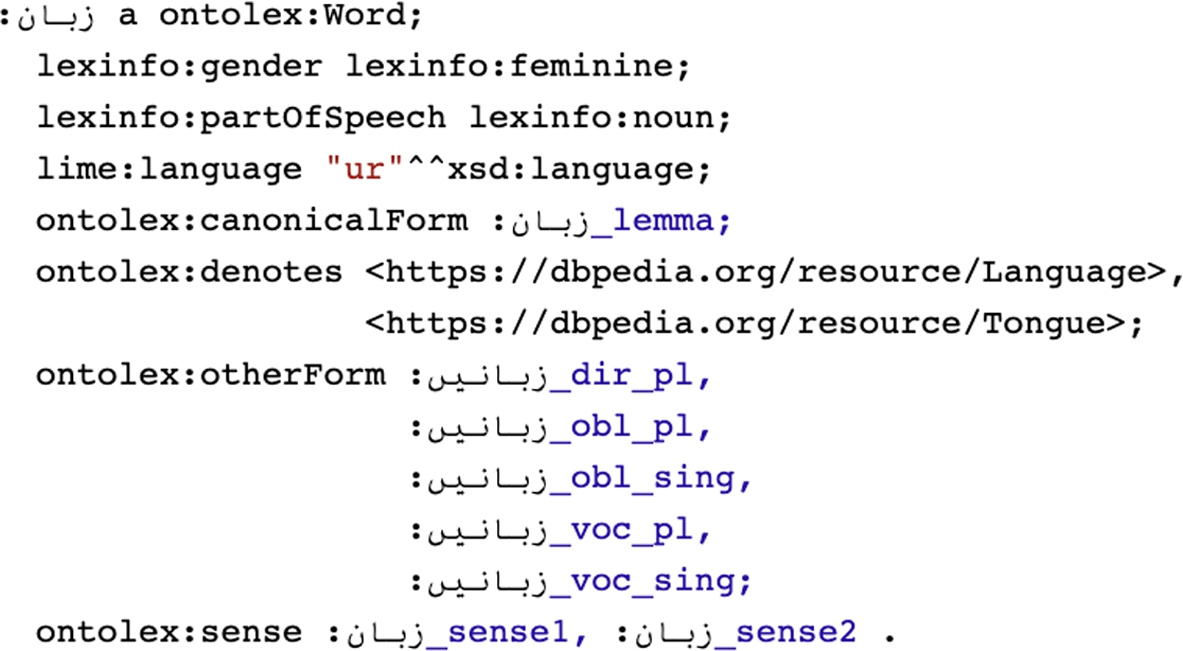

As mentioned previously, lemon and its successor OntoLex-Lemon have been widely adopted for the modelling and publishing of lexica and dictionaries as linked data. Both of them have proven to be reasonably effective in capturing many of the most typical kinds of lexical information contained in dictionaries and in lexical resources in general (e.g., [1,8,82,102,105]). However, there are some fairly common situations in which the model falls short, and most notably in the representation of certain specific elements of dictionaries and other lexicographic datasets [7]. This is not surprising, given that (as we have mentioned above) lemon was initially conceived as a model for a somewhat different use case (grounding ontologies with linguistic information).

In order to adapt OntoLex-Lemon to the modelling necessities and particularities of dictionaries and other lexicographic resources, the W3C OntoLex community group developed a new OntoLex-Lemon Lexicography Module (lexicog).9797 This module was the result of collaborative work with contributions from lexicographers, computer scientists, dictionary industry practitioners, and other stakeholders and was first released in September 2019. As stated in the specification, the lexicog module “overcome[s] the limitations of lemon when modelling lexicographic information as linked data in a way that is agnostic to the underlying lexicographic view and minimises information loss”.

The idea is to keep purely lexical content separate from lexicographic (textual) content. For that purpose, new ontology elements have been added that reflect the dictionary structure (e.g., sense ordering, entry hierarchies, etc.) and complement the OntoLex-Lemon model. The lexicog module have been validated with real enterprise-level dictionary data [10] and a final set of guidelines have been published as an output of the W3C OntoLex group. We give a description of the main classes and properties of the model below9898

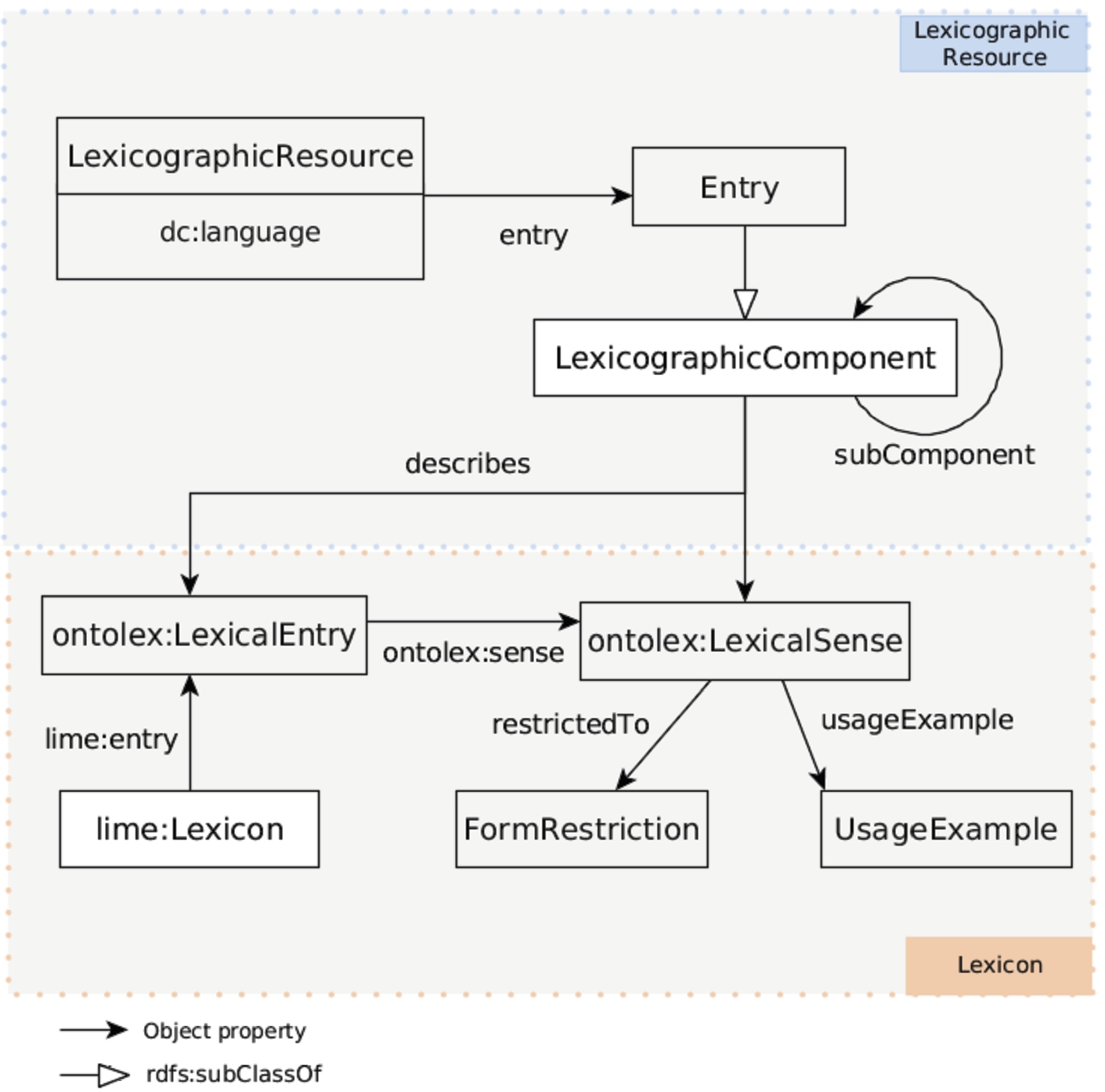

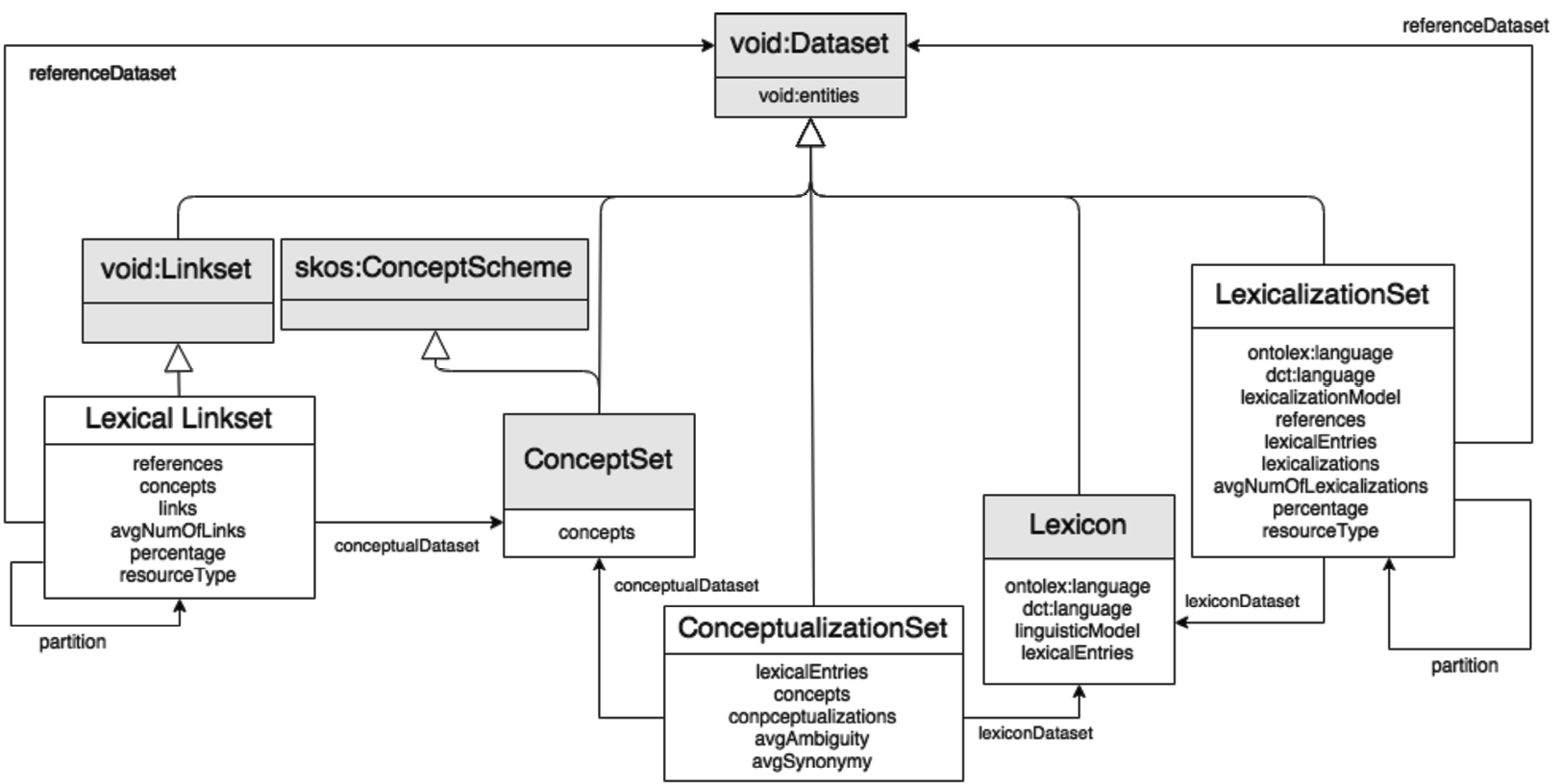

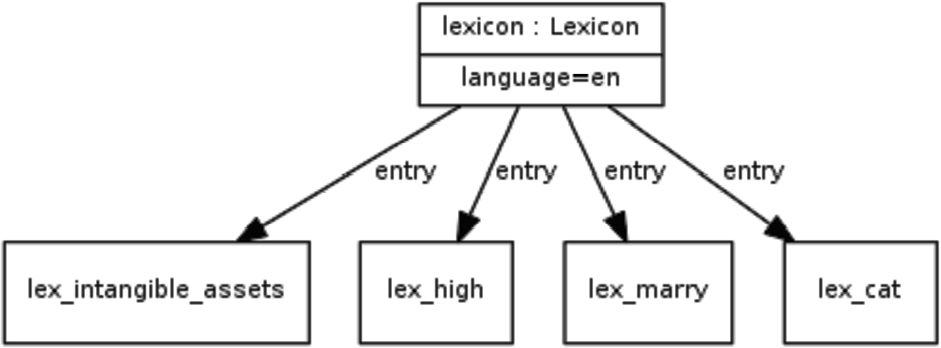

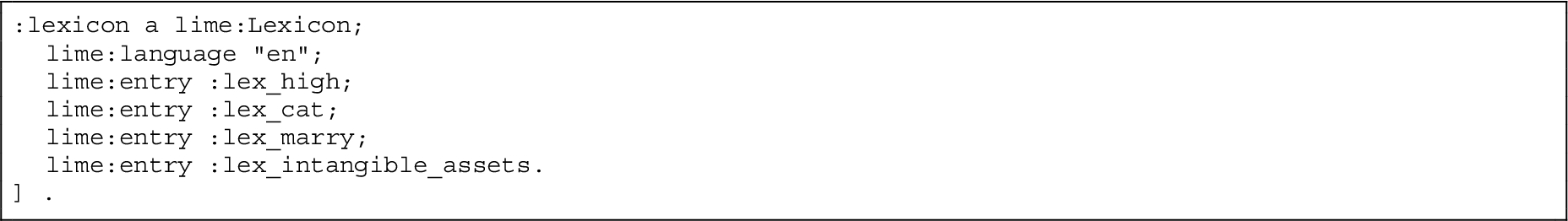

In lexicog the structural organisation of a lexicographic resource is now associated with the class Lexicographic Resource (a subclass of the VoID9999 class Dataset) whereas the lexical content is (as previously) associated with the lime class Lexicon (see Section 5.3.3). The former is described as representing “a collection of lexicographic entries[...]in accord with the lexicographic criteria followed in the development of that resource”.100100

These lexicographic entries are represented in their turn by another new lexicog class, namely, the class Entry, which is defined as being a “structural element that represents a lexicographic article or record as it arranged in a source lexicographic resource”101101 (emphasis ours). An Entry furthermore is related to its source Lexicographic Resource via the object property entry.

The class Entry is a subclass of the more general class Lexicographic Component, defined as “a structural element that represents the (sub-)structures of lexicographic articles providing information about entries, senses or sub-entries”, members of this class “can be arranged in a specific order and/or hierarchy”.102102 That is, Lexicographic Component allows for the representation of the ordering of senses in an entry (and even potentially entries if this is required), the arrangement of senses and sub-senses in a hierarchy, etc. in a published lexicographic resource (by making use of the classes and properties we have looked at above, along with the lexicog object property subComponent) separately from the representation of the same resource as lexical content.

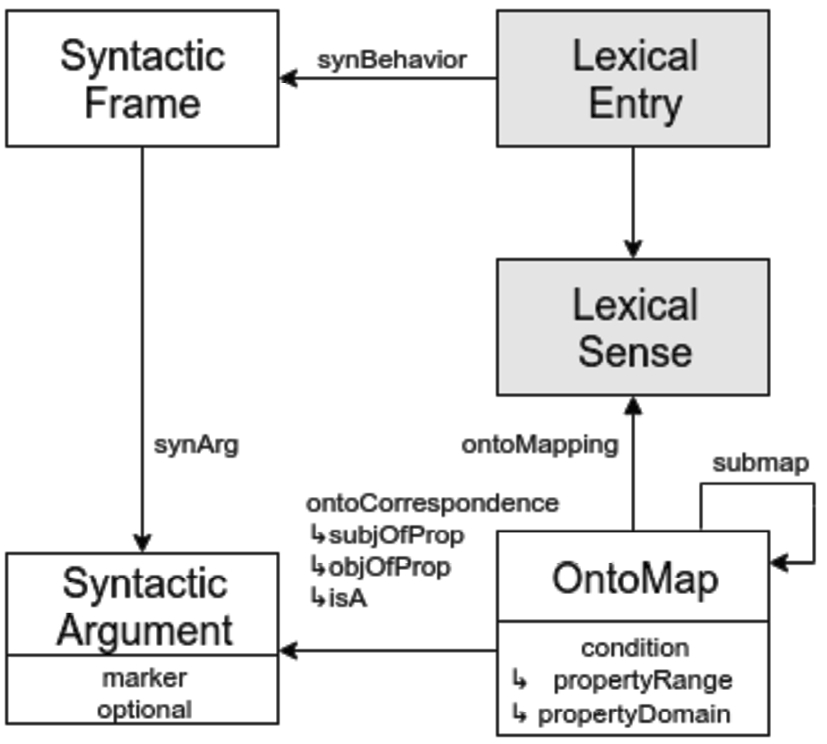

Finally, we need some way of linking together these two levels of representation. This is provided by the lexicog object property describes which relates individuals of class Lexicographic Component, which belong to a specific lexicographic resource, “to an element that represents the latest information provided by that component in the lexicographic resource”.103103 These elements are described in Fig. 1.

Fig. 1.

The lexicog module (taken from the guidelines).

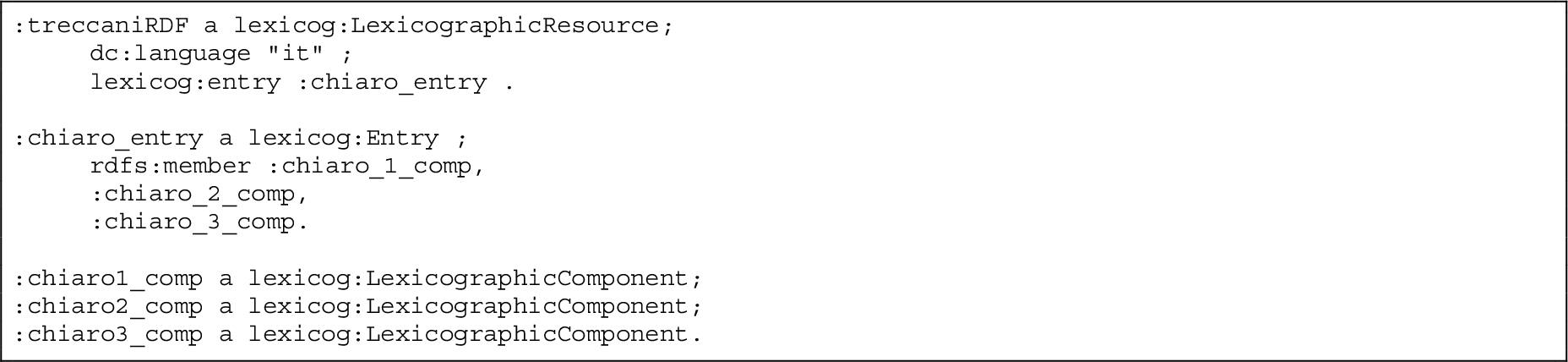

As an example, let’s look a lexicog encoding for the entry for the Italian word chiaro ‘clear’ in the popular Italian language dictionary Treccani.104104 This latter lists the word an adjective, a masculine noun and an adverb. It also lists the adverb chiaramente ‘clearly’ as a related entry, along with the diminutive chiaretto.

More precisely, the first two of the (four) subsenses of the entry are classed as adjectives, the third as a noun, and the fourth as an adverb. We will simplify this for the purposes of exposition by assuming that the first subsense is an adjective, the second a noun, and the third an adverb. This can be represented as follows. First, we represent the encoding of the Treccani dictionary structure itself, and the different sub-components of the entry for chiaro:

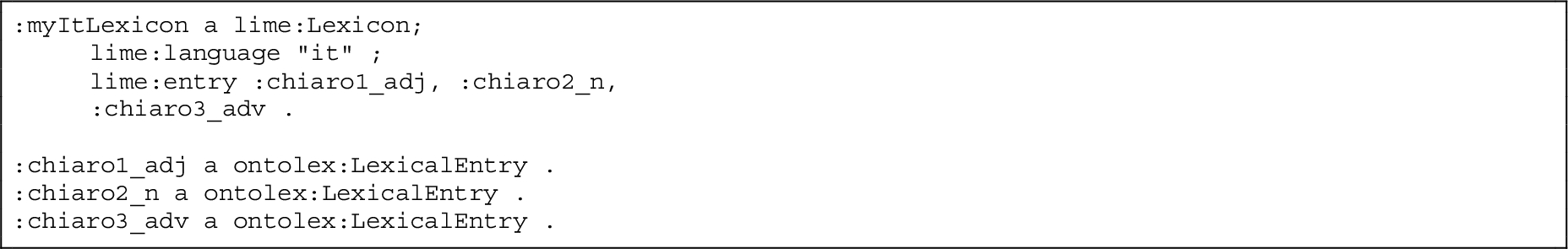

Next we encode a lexicon which represents the content of the resource in the last listing.

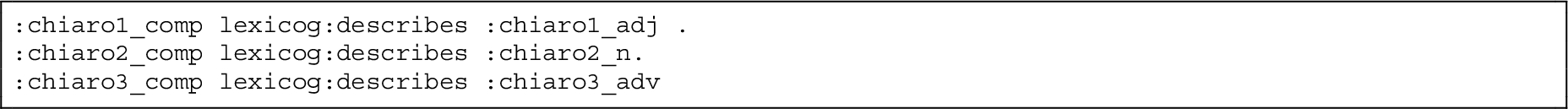

Finally, we bring the two resources together using the describes property.

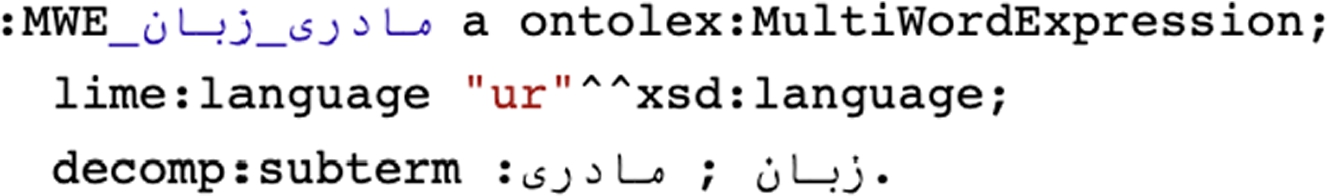

5.1.2.OntoLex-Lemon morphology module

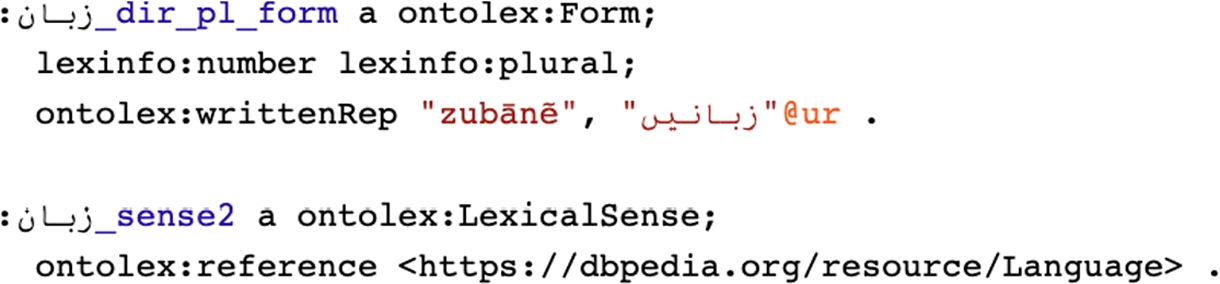

Morphology often an important role in the description of languages in lexical resources, even if the extent of its presence in can often vary, ranging from the sporadic indication of certain specific forms in a dictionary (e.g. plural form for some nouns) to electronic resources which provide tables with entire inflectional paradigms for every word.105105 Consequently, the W3C OntoLex community group since November 2018 has been developing another extension of the original model that would allow for better representation of morphology in lexical resources.

The original OntoLex-Lemon model, together with LexInfo (see Section 4.5), provides the means of encoding basic morphological information. For lexical entries, morpho-syntactic categories such as part of speech can be provided and basic inflection information (i.e., the morphological relationship between a lexical entry and its forms) can be modelled by creating additional inflected forms with corresponding morpho-syntactic features (e.g. case, number, etc.). However, this only covers a small portion of the morphological data to be modelled in many lexical resources. Neither derivation (i.e. morphological relationships between lexical entries) nor additional inflectional information (e.g. declension type for Latin nouns) can be properly modelled with the original model. The new OntoLex-Lemon Morphology module has been proposed to address these limitations. The scope of the module is threefold:

– Representing derivation: for a more sophisticated description of the decomposition of lexical entries;

– Representing inflection: introducing new elements to represent paradigms and wordform-building patterns;

– Providing means to create wordforms automatically based on lexical entries, their paradigms and inflection patterns.

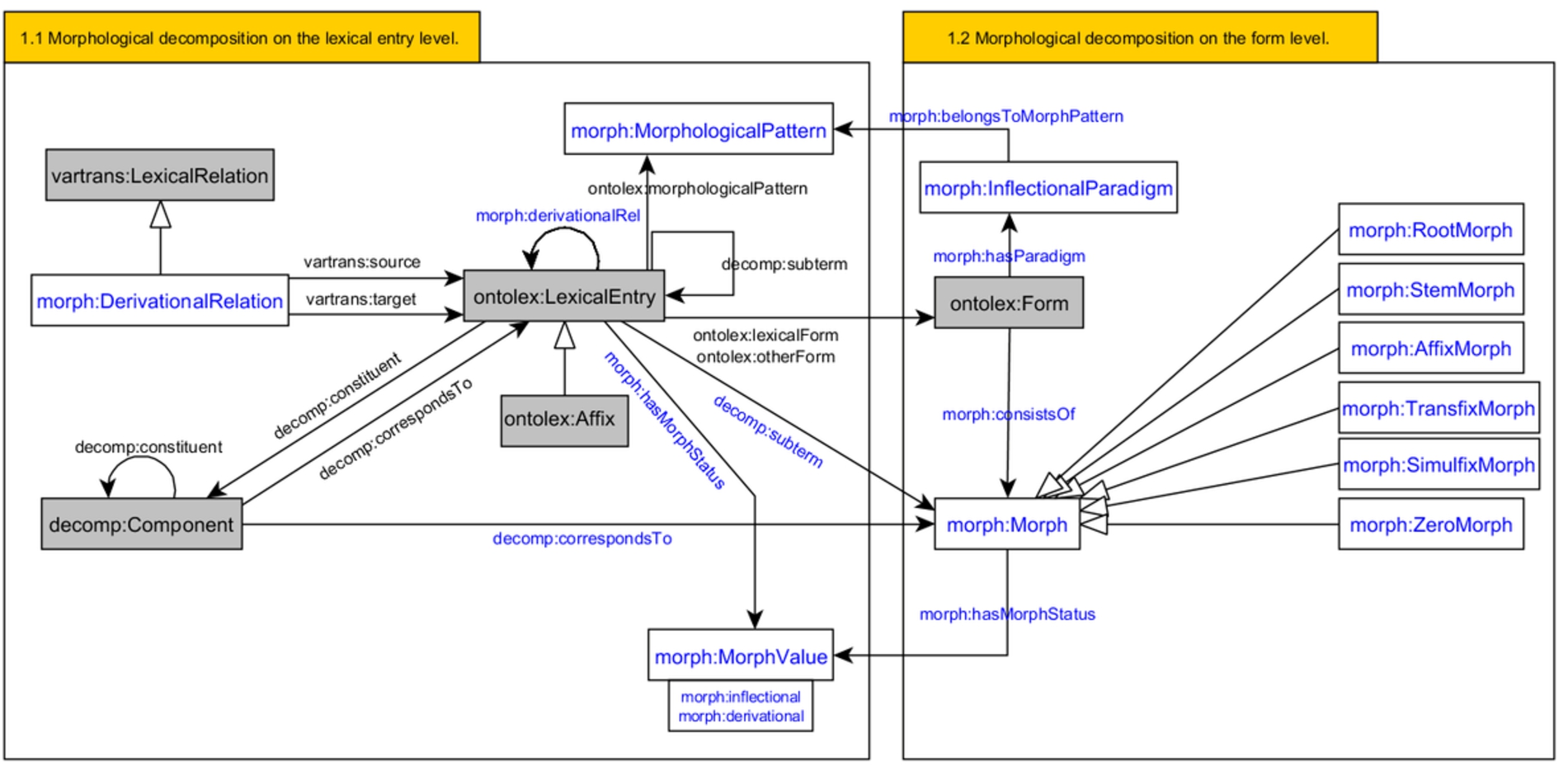

Figure 2 presents a diagram for the module.

Fig. 2.

Preliminary diagram for the morphology module.

The central class of the module, used in the representation of both derivation and inflection, is Morph with subclasses for different types of morphemes.

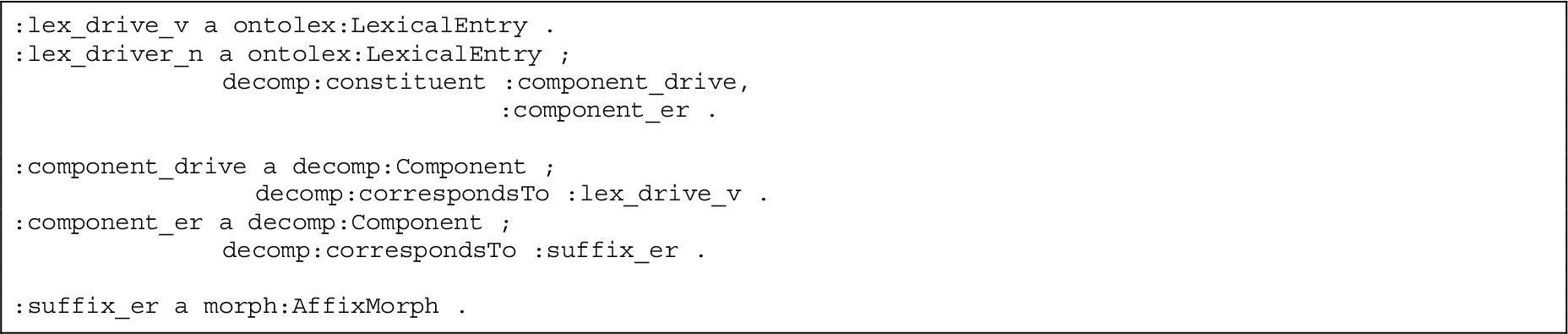

For derivation, elements from the decomp module are reused. A derived lexical entry has Components for each of the morphemes of which it consists. A stem corresponds to a different lexical entry whereas morphemes, which do not correspond to any headwords, correspond to an object of a Morph class. A derived lexical entry has constituent properties pointing to objects of the Component class:

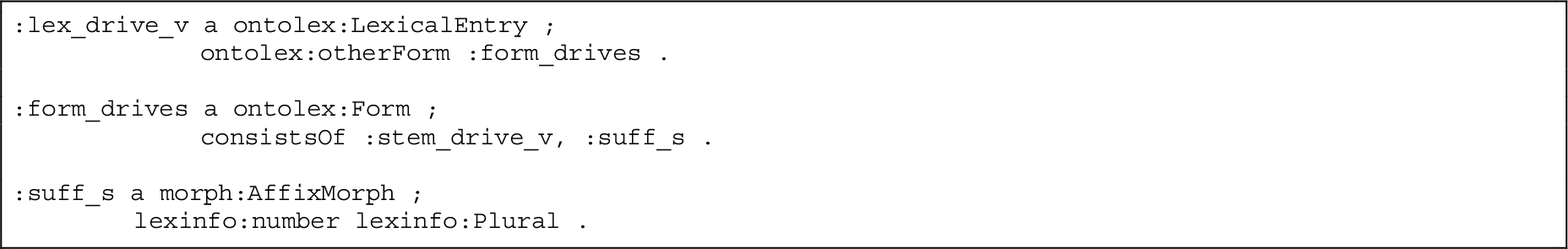

Inflection is modelled as follows: every instance of Form has properties morph:consistsOf which point to instances of morph:Morph.106106 These instances can have morpho-syntactic properties expressed by linking to an external vocabulary, e.g. LexInfo:

The module107107 has not yet been published and is still very much under development by the W3C group. At the time of writing, a consensus was reached on the first two parts of the module, and an overview of these has been published in [106]. The third part, which concerns the automatic generation of forms, is currently being discussed, and the next step will be validating the model by creating resources using the module.

5.1.3.OntoLex-FrAC: Frequency, attestations, corpus information

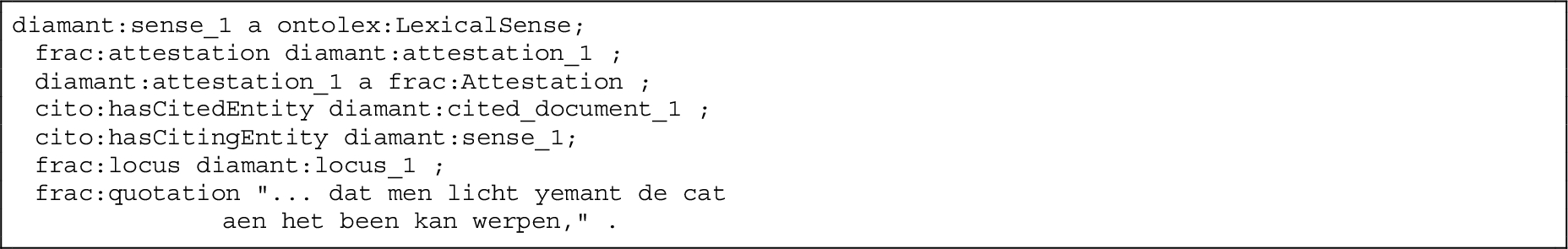

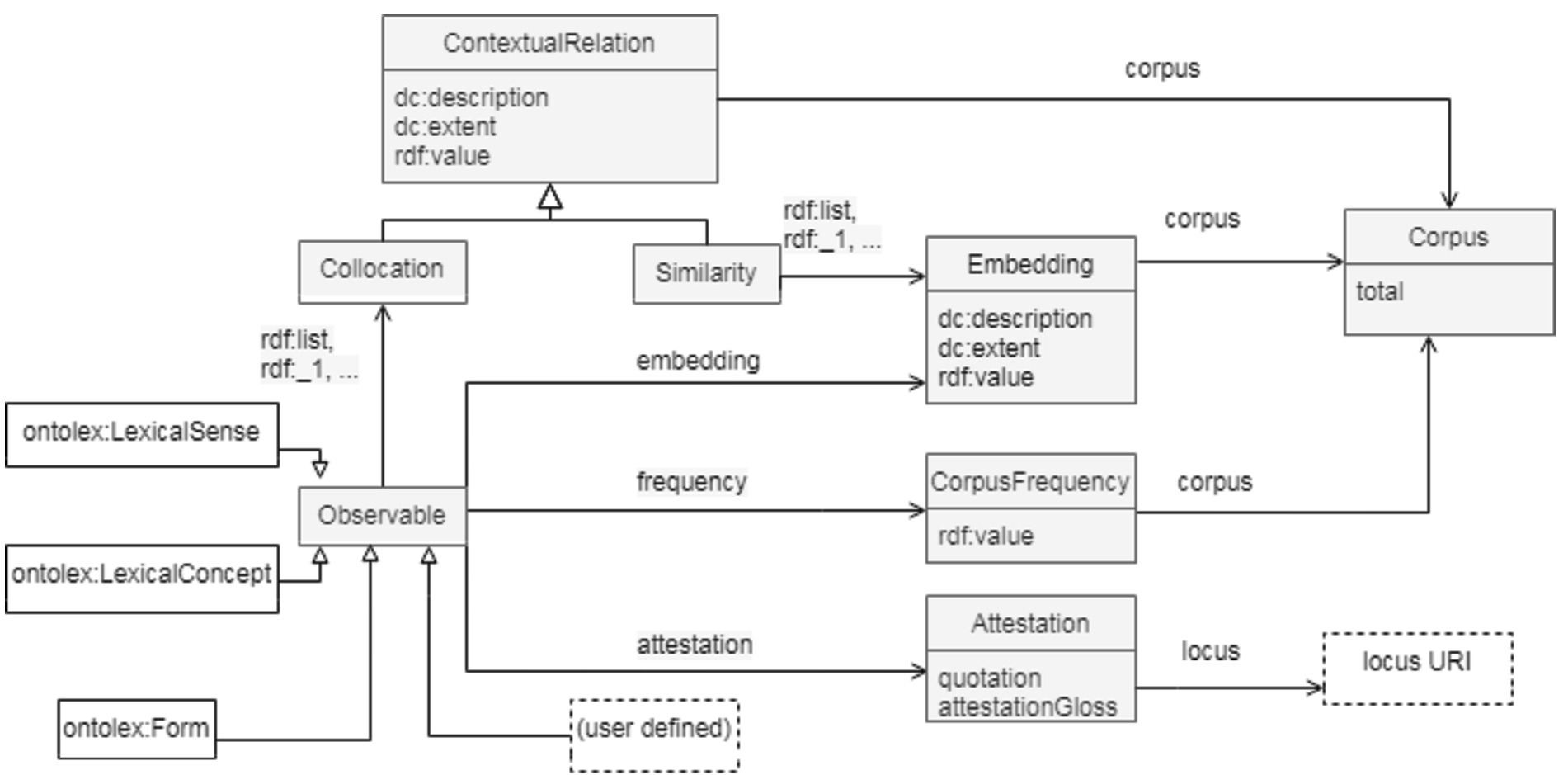

In parallel with the development of the Morphology Module, the OntoLex W3C group has also started developing a separate module that would allow for the enrichment of lexical resources with information drawn from corpora. Most notably, this includes the representation of attestations (often used as illustrative examples in a dictionary). These latter were originally discussed within lexicog (See 5.1.1), but this discussion quickly outgrew the confines of computational lexicography/e-lexicography alone. Furthermore, it was observed that OntoLex-Lemon lacked any support for corpus-based statistics, a cornerstone not only of empirical lexicography, but also of computational philology, corpus linguistics and language technology, and thus, again, beyond the scope of the lexicog module. Finally, the OntoLex community group felt the need to specifically address the requirements of modern language technology by extending its expressive power to corpus-based metrics and data structures like word embeddings, collocations, similarity scores and clusters, etc.

The development of the module has been use-case-based, which has dictated the order and development for various parts of the FRaC module. The stable parts of the module include the representation of (absolute) frequencies and attestations, and, by analogy, any use case that requires pointing from a lexical resource into an annotated corpus or other forms of external empirical evidence [30]. We will limit ourselves to describing these stable parts in what follows.

The central element which has been introduced in FRaC e is frac:Observable defined as “an abstract superclass for any element of a lexical resource that frequency, attestation or corpus-derived information can be expressed about”.108108 Since the type of elements for which corpus-based information can be provided is not limited to an entry, form, sense, or concept but can be any of these, Observable was introduced as a superclass for all these classes, among others to be potentially defined by the user.

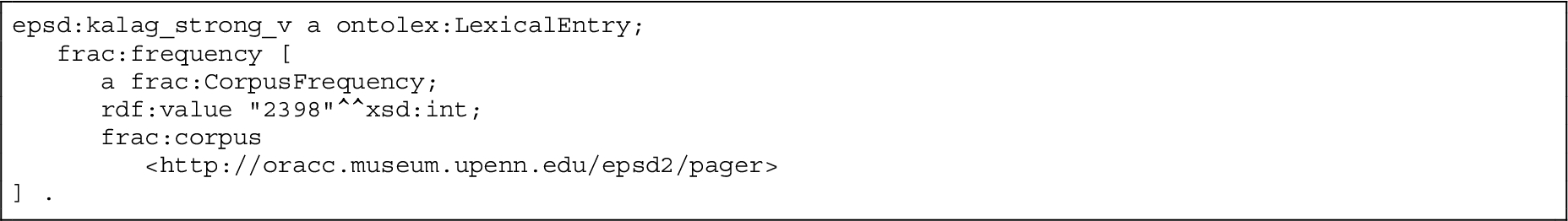

The module provides means to model only absolute frequency, because “relative frequencies can be derived if absolute frequencies and totals are known” [30, p. 2]. To represent frequency, a property frequency with an instance of CorpusFrequency as an object should be defined. This instance must implement the properties corpus and rdf:value:109109

The usage recommendation is to define a subclass of CorpusFrequency for a specific corpus when representing frequency information for many elements in the same corpus.

In FRAC corpus attestations, i.e. corpus evidence in FrAC, are defined as “a special form of citation that provides evidence for the existence of a certain lexical phenomenon; they can elucidate meaning or illustrate various linguistic features”.110110 As with frequency, there is a class Attestation, an instance of which should be an object of a property attestation. This class is associated with two properties: attestationGloss – the text of the attestation – and locus – the location where the attestation can be found:

The FrAC module does not provide an exhaustive vocabulary and instead promotes reuse of external vocabularies, such as CITO [136] for a citation object and NIF or WebAnnotation (see 5.2) to define a locus.

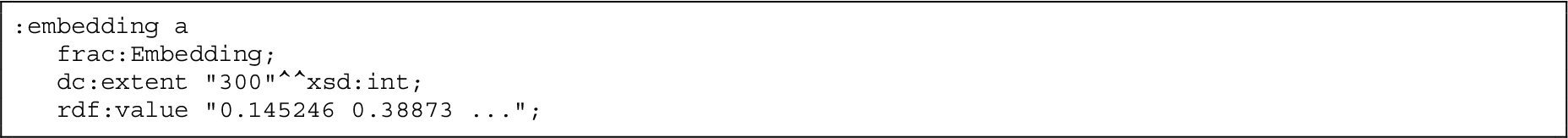

Another, more recent paper focused on representing embeddings in lexical resources is [20]. It should be noted that the term embedding is used here in a broader sense than is usual in the field of natural language processing, namely as a morphism Y (

The main motivation to model embeddings as a part of this module is to provide metadata as RDF for pre-computed embeddings, therefore a word vector itself is stored as a string with an embedding vector:

As with modelling frequency, the recommendation is to define a subclass for the specific type of embedding concerned in order to make the RDF less verbose.

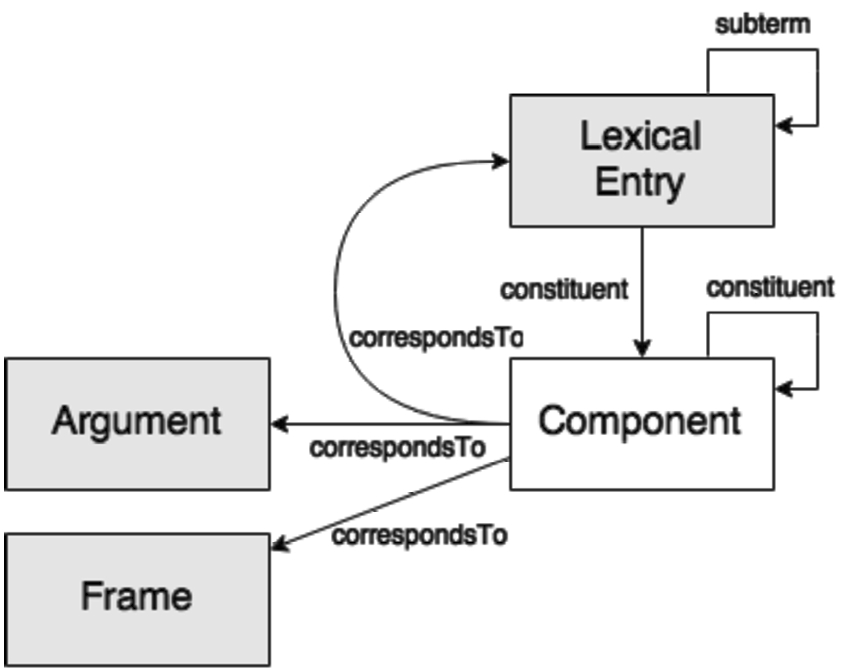

Figure 3 presents a diagram of the latest version of the module. Note that we will not go into detail on the classes Similarity, Collocation and ContextualRelation here, since the definitions of these classes and their related properties is still under discussion. However, we leave them in the diagram to give the reader an idea of the current progress of the model.

Fig. 3.

Preliminary diagram for the FrAC module.

At the time of writing, module development is focused on collecting and modelling various use-cases. Among the many use-cases that were proposed during this phase, one stood out in particular and seemed to be more challenging than the others: this was related to the modelling of sign language data. Given the nature of the data (video clips with signs and/or time series of key coordinates for preprocessed data), it was decided that although the use-case was out of the scope of the FrAC module, it did indeed raise serious interest within the community, and therefore discussion on whether it will be developed as a separate module in the future, is now underway. The question of the scope of this new module and, more generally, its connection to OntoLex-Lemon, is currently subject to discussion.

5.1.4.Selected individual contributions

‘Unofficial’ OntoLex-Lemon extensions developed outside the W3C OntoLex Community Group are manifold, and while these are not yet being pursued as candidates for future OntoLex-Lemon modules by the group, they may represent a nucleus and a cumulation point for future directions.

Selected recent extensions include lemon-tree [161], an OntoLex-Lemon and SKOS based model for publishing topical thesauri, where the latter are defined as lexical resources which are organised on the basis of meanings or topics.112112 The use of the lemon-tree model to publish the Thesaurus of Old English [162] reveals the flexibility of the OntoLex-Lemon/LLD approach in modelling more specialised kinds of linguistic information. As indeed does lemonEty [100] another ‘unofficial’ extension of the OntoLex-Lemon model, which has been proposed as a means of encoding etymological information contained both in lexica and dictionaries as well in other kinds of resources (such as articles or monographs). The lemonEty model does this by exploiting the graph-based structure of RDF data and by rendering explicit the status of etymologies as linguistic hypotheses.

In both of these cases, the RDF data model together with the various different standards and technologies which make up the Semantic Web stack as a whole, allows for the structuring of data that is strongly heterogeneous and integrates together temporal,113113 geographical and historical information.

5.2.Annotation and corpora

Summary In this section, we give an overview of a number of LLD vocabularies for the annotation of texts. Section 5.2.1 constitutes a detailed introduction and general overview of this topic. Then we focus on the two most popular LLD vocabularies for text annotation, the NLP Interchange Format (Section 5.2.2) and Web Annotation (Section 5.2.3). Next, in Section 5.2.4 we look at two domain specific vocabularies, Ligt and CoNLL-RDF. Finally, in Section 5.2.5, we look at the prospects of a convergence between the vocabularies which we have discussed. (Note that in this section, we only discuss vocabularies that define data structures for linguistic annotation by NLP tools and in annotated corpora. Linguistic categories and grammatical features, as well as other information that represents the content of an annotation, are assumed to be provided by a(ny) repository of linguistic data categories (see above))

5.2.1.Introduction and overview

Linguistic annotation of corpora by NLP tools in a way that integrates Semantic Web standards and technologies has long been a topic of discussion within LLD circles, with different proposals grounded in traditions from natural language processing [14], web technologies [173], knowledge extraction [86], but also from linguistics [120], philology [2], and the development of corpus management systems [17,55].

A practical introduction to the various different vocabularies used (by various different communities, for different purposes and according to different capabilities) for linguistic annotation in RDF today is given over the course of several chapters in [36]. In brief, the RDF vocabularies which are most widely used for this purpose are the NLP Interchange Format (NIF, in language technology) and Web Annotation (OA, in bioinformatics and digital humanities), as well as customizations of these. We describe NIF in Section 5.2.2 and Web Annotation in Section 5.2.3.

In the current section we give an overview of the relationship between RDF and two other pre-RDF vocabularies, then we will touch upon some platform specific RDF vocabularies for annotations that have been developed over the years. Aside from software- or platform-specific formats, a number of vocabularies has been developed that address specific problems or user communities.

Pre-RDF vocabularies Developed by the ISO TC37/SC4 Language Resource Management group, the Linguistic Annotation Framework (LAF) vocabulary represents “universal” data structures shared by the various, domain- and application specific ISO standards [96]. Following the earlier insight that a labelled directed multigraph can represent any kind of linguistic annotation, LAF produces concepts and definitions for four main aspects of linguistic annotation: anchors and regions elements in the primary data that annotations refer to; markables (nodes) elements that constitute and define the scope of the annotation by reference to anchors and regions; values (labels) elements that represent the content of a particular annotation; and relations (edges) links (directed relations) that hold between two nodes and can be annotated in the same was as markables.

Note that in relation to Web Annotation anchors roughly correspond to Web Annotation selectors (or target URIs); markables roughly correspond to annotation elements; values to the body objects of Web Annotation. In Web Annotation, relations as data structures are not foreseen.114114 As for NIF, its relation with LAF is more complex. Like Web Annotation, NIF does not provide a counterpart of LAF relations, but more importantly, the roles of regions and markables are conflated in NIF: Every markable must be a string (character span), and for every character span, there exists exactly one potential markable (URI, or, a number of URIs with different schemes that are owl:sameAs).

At the moment, direct RDF serializations of LAF do not seem to be widely used in an LLOD context. The reason is certainly that the dominant RDF vocabularies for annotations, despite their deficiencies, cover the large majority of use cases. One notable RDF serialisation of LAF however is POWLA [19], an OWL2/DL serialization of PAULA, a standoff-XML format that implemented the LAF as originally described by [91]. POWLA complements LAF core data structures with formal axioms and slightly more refined data structures that support, for example, effective navigation of tree annotations. On current applications of POWLA see the CoNLL-RDF Tree Extension below.115115

It is also worth mentioning TEI/XML in the context of this discussion. The standard, widely used in the digital humanities and in computational philology, only comes with partial support for RDF and does not represent a publication format for Linked Data. Traditionally there has been an acknowledgement on the part of the TEI community of the value in being able to link from a digital edition (or another TEI/XML document) to a knowledge graph.116116 Interlinking between (elements of) electronic editions created with TEI was addressed by means of specialised XML attributes with narrowly defined semantics. Accordingly, electronic editions in TEI/XML do not normally qualify as Linked Data, even if they use and provide resolvable URIs (TEI pointers).117117

The annotation of rather than within TEI documents, however, has been pursued by Pelagios/Pleiades, a community interested in the annotation of historical documents and maps with geographical identifiers and other forms of geoinformation (though this does not yet run to linguistic annotations). One result of these efforts is the development of a specialised editor called Recogito, and its extension to TEI/XML. In this case the annotation is not part of the TEI document, but stored as standoff annotation in a JSON-LD format, and thus, is in compliance with established web standards and re-usable by external tools and addressable as Linked Data. However, this approach is restricted to cases in which the underlying TEI document is static and no longer changes.118118 Therefore, there is a need for encoding RDF triples directly inline in a TEI document. Happily, it has been demonstrated that this can be done in a W3C- and XML-compliant way by incorporating RDFa attributes into TEI [150,167]. As a result and after more than a decade of discussions, the TEI started in May 2020 to work on a customization that allowed the use of RDFa in TEI documents.119119

Platform specific RDF vocabularies Over the years, several platforms, projects and tools have come up with their own approaches for modelling annotations and corpora as linked data. Notable examples include the RDF output of machine reading and NLP systems such as FRED [74], NewsReader [174] or the LAPPS Grid [90]. We discuss these below.

FRED provides output based on NIF or EARMARK [135], with annotations partially grounded in DOLCE [73], but enriched with lexicalized ad hoc properties for aspects of annotation covered by these.120120 The NewsReader Annotation Format (or NLP Annotation Format) NAF, is an XML-standoff format for which an NIF-inspired RDF export has been described [66], and LIF, the LAPPS Interchange Format [172], a JSON-LD format used for NLP workflows by the LAPPS Grid Galaxy Workflow Engine [92].121121

Both LIF and NAF-RDF are, however, not generic formats for linguistic annotations but rather, provide (relatively rich) inventories of vocabulary items for specific NLP tasks.122122 Neither seem to have been used as a format for data publication, and we are not aware of their use independently of the software they have originally been created for or are being created by.

5.2.2.NLP interchange format

The NLP Interchange Format (NIF),123123 developed at AKSW Leipzig, was designed to facilitate the integration of NLP tools in knowledge extraction pipelines, as part of the building of a Semantic Web tool chain and a technology stack for language technology on the web [86]. NIF provides support for a broad range of frequently occurring NLP tasks such as part of speech tagging, lemmatization, entity linking, coreference resolution, sentiment analysis, and, to a limited extent, syntactic and semantic parsing. In addition to providing a technological solution for integrating NLP tools in semantic web annotations, NIF also provides specifications for web services.

A core feature of NIF is that it is grounded in a formal model of strings and that it makes the use of String URIs as fragment identifiers obligatory for anything annotable by NIF. Every element that can be annotated in NIF has to be a string.124124 NIF does support different fragment identifier schemes, e.g., the offset-based scheme defined by RFC 5147. [178] As a consequence, any two annotations that cover the same string are bound to the same (or owl:sameAs) URI. While this has the advantage of being able to implicitly merge the output of different annotation tools, this limits the applicability of NIF to linguistically annotated corpora.

As an example, NIF does not allow us to distinguish multiple syntactic phrases that cover the same token. Consider the sentence “Stay, they said.”125125 The Stanford PCFG parser126126 analyzes Stay as a verb phrase contained in (and only constituent of) a sentence. In NIF, both would be conflated. Likewise, zero elements in syntactic and semantic annotation cannot be expressed. Another limitation of NIF is its insufficient support for annotating the internal structure of words. It is thus largely inapplicable to the annotation of morphologically rich languages.

Overall, NIF fulfills its goals to provide RDF wrappers for off-the-shelf NLP tools, but it is not sufficient for richer annotations such as are frequently found in linguistically annotated corpora. Nevertheless, NIF has been used as a publication format for corpora with entity annotations.127127 It also continues to be a popular component of the DBpedia technology stack. At the same time, active development of NIF seems to have slowed down since the mid-2010s, whereas limited progress on NIF standardization has been achieved. A notable exception in this regard is the development of the Internationalization Tag Set [64, ITS] that aims to facilitate the integration of automated processing of human language into core Web technologies. A major contribution of ITS 2.0 has been to add an RDF serialization into NIF as part of the standard.

More recent developments of NIF include extensions for provenance (NIF 2.1, 2016) and the development of novel NIF-based infrastructures around DBpedia and Wikidata [72]. In parallel to this, NIF has been the basis for the development of more specialised vocabularies, e.g., CoNLL-RDF for linguistic annotations originally provided in tabular formats, see Section 5.2.4.

5.2.3.Web annotation

The Web Annotation Data Model is an RDF-based approach to standoff annotations (in which annotations and the material to be annotated are stored separately) proposed by the Open Annotation community.128128 It is a flexible means of representing standoff annotation for any kind of document on the web. Although the most common use case of Web Annotation is the attaching of a piece of text to a single web resource, it is intended to be applicable across different media formats. So far, Web Annotation has been primarily applied to linguistic annotations in the biomedical domain, although other notable applications include NLP [173] or Digital Humanities [95]. Web Annotation recommends the use of JSON-LD to add a layer of standoff annotations to documents and other resources accessible over the web, with primary data structures defined by the Web Annotation Data Model, formalised as an OWL ontology.

The core data structure of the Web Annotation Data Model is the annotation, i.e., instances of oa:Annotation that have an oa:hasTarget property that identifies the element that carries the annotation, and the oa:hasSource property that – optionally – provides a value for the annotation, e.g., as a literal. The target can be a URI (IRI) or a selector, i.e., a resource that identifies the annotated element in terms of its contextual properties, formalised in RDF, e.g., its offset or characteristics of the target format. By supporting user-defined selectors and a broad pool of pre-defined selectors for several media types, Web Annotation is applicable to any kind of media on the web. Targets can also be more compact string URIs, as introduced, for example, by NIF. NIF data structures can thus be used to complement Web Annotation [86].

Web Annotation can be used for any labelling or linking task, e.g., POS tagging, lemmatization, entity linking. It does, however, not support relational annotations such as syntax and semantics, nor (like NIF) the annotation of empty elements. The addition of such elements from LAF has been suggested [173], but does not seem to have been adopted, as labelling tasks dominate the current usage scenarios of Web Annotation.

Unlike NIF, Web Annotation is ideally suited for the annotation of multimedia content or entities that are manifested in different media simultaneously (e.g., in audio and transcript). As a result, it has become popular in the digital humanities, e.g., for the annotation of geographical entities with tools such as Recogito [156], especially since support for creating standoff annotations for static TEI/XML documents was added (around March 2018 [37, p.247]).

5.2.4.Domain-specific solutions: Ligt and CoNLL-RDF

Interlinear glossed text (IGT) is a notation where annotations are placed, as the name suggests, between the lines of a text with the purpose of helping readers to understand and interpret linguistic phenomena. The notation is frequently used in education and various language sciences such as language documentation, linguistic typology, and philological studies (for instance, it is commonly used to gloss linguistic examples). Moreover, IGT data can consist of different layers, including translation and transliteration layers, and usually contains layers for ensuring morpheme-level alignment. IGT is not supported by any established vocabularies for representing annotations on linguistic corpora. And although there exist several specialised formats which are specifically designed for the storage and exchange of IGT, these formats are not re-used across different tools, limiting the reusability of annotated data.