Linked Open Images: Visual similarity for the Semantic Web

Abstract

This paper presents ArtVision, a Semantic Web application that integrates computer vision APIs with the ResearchSpace platform, allowing for the matching of similar artworks and photographs across cultural heritage image collections. The field of Digital Art History stands to benefit a great deal from computer vision, as numerous projects have already made good progress in tackling issues of visual similarity, artwork classification, style detection, and gesture analysis, among others. Pharos, the International Consortium of Photo Archives, is building its platform using the ResearchSpace knowledge system, an open-source semantic web platform that allows heritage institutions to publish and enrich collections as Linked Open Data through the CIDOC-CRM and other ontologies. Using the images and artwork data of Pharos collections, this paper outlines the methodologies used to integrate visual similarity data from a number of computer vision APIs, allowing users to discover similar artworks and generate canonical URIs for each artwork.

1.Introduction

The matching of visually similar artworks across cultural heritage image collections, in particular when applied to two-dimensional works, has been a topic of growing interest to scholars and institutions [12,18]. Various conferences, symposia, and workshops have been organized over the years to explore the usefulness of applying Computer Vision (CV) technology to the arts.¹ Although this paper reports on several CV APIs and their applicability to the cultural heritage domain, its main focus is a Semantic Web application that enables institutions to link artworks across collections and generate canonical URIs for each work. The platform makes these tools available to non-technical users and provides open access to artwork similarity data through a SPARQL endpoint for interpretation and reuse. The canonical URIs can then be integrated into any number of external artwork collection platforms, and be used by researchers to cite and identify a specific artwork in their publications. As the platform provides SPARQL access to both its CV APIs and existing similarity data between images, these can also be used to provide training data to new machine learning models to refine notions of visual similarity between artworks. The platform uses the image collections of Pharos,² the International Consortium of Photo Archives. This collaboration between fourteen European and North American art historical research institutes aims to create an open and freely accessible digital research platform to provide consolidated access to their collections of photographs and associated scholarly documentation. The institutions collectively own an estimated 20 million photographs documenting works of art and the history of photography itself, forming the largest repository of images for the field of art history.
The platform is built using the ResearchSpace platform, a Semantic Web knowledge representation research environment designed by the British Museum to support a move away from traditional static and narrow data indexes to dynamic and richer contextualized information patterns [15]. The data are published using the CIDOC-CRM ontology, the methodology of which will be outlined in forthcoming articles following the publication of the platform in 2022.

The ArtVision platform, published at https://vision.artresearch.net, provides a user interface for institutional staff from participating Pharos institutions to log in and perform a supervised matching of artworks across collections. By matching up artworks, the platform generates a canonical, de-referenceable URI for each artwork, allowing these to be reused across the web and in scholarly publications. On the main Pharos platform, these records are then merged and users can visualize data from multiple providers related to a single artwork. Provenance information for each piece of data allows users to compare and contrast often conflicting data related to a single artwork. Additionally, through SPARQL federation across REST APIs, the ArtVision application allows users to upload an image to the platform and find visually similar artworks from the Pharos collections in real-time.

2.Background

During the greater part of the 20th century, photo archives and slide libraries served as the primary source of images for art historians prior to a transition to a digital medium [21]. Institutions that held these archives have sought to digitize and catalog these images, providing valuable insights into the histories of these objects. Historical data pertaining to conservation, provenance, copies, and preparatory studies is contained on the backs of the photographs in the form of annotations written by scholars over the last century and more [1]. Art historians have a long tradition of writing about images, one could say, transcribing a visual language to a textual one. As with all translation activities, there is a loss of meaning in this process. While the perception of images is instantaneous and universal, writing is bound by time and cultural bias [5]. This bias becomes especially evident when comparing the annotations on the backs of some historical photographs, as art historians and catalogers have often attributed these works to different artists, artistic schools, or time periods. Although also biased, Computer Vision has emerged as a powerful tool for the field of Digital Art History with the potential to bridge some of these gaps between images and text, allowing images to dialog with one another without the mediation of, or dependence on, texts. While the analytical capacity of CV generally cannot surpass that of an art historian, the ability to perform specific tasks at a scale otherwise unattainable by humans makes it attractive to certain use-cases for art historians [13]. For the image collections of the Pharos Consortium, CV tools that can match artworks across collections are especially promising as they can provide an initial set of results to curators, who can then verify them through a manual process.

3.Related work

A good deal of work has already been done in applying CV to images of artworks: attempts to automatically classify paintings [19], recognize style [10], detect objects in artworks [2,3,6], evaluate influence [4], and analyze broad concepts within artworks at large scale, such as gesture [8,9]. The opportunities offered by this technology are numerous but the solutions are generally ad-hoc and are rarely made available to non-technical users. John Resig³ and Seguin et al. [16,17] have used CV to match artwork images across collections to compare metadata at scale, and have the most similar use case. This application builds on and extends some of this work by integrating it with semantic technologies, providing a user interface for collection curators to verify results through a supervised process. Additionally, similarity data are stored in an openly-accessible knowledge graph for interpretation and reuse and a canonical URI for each artwork is generated on the main Pharos platform. This URI provides the foundation for artwork records that allow users to visualize consolidated data from multiple partners.

4.Visual search for cultural heritage

A range of off-the-shelf Computer Vision and Machine Learning APIs were tested for their ability to find visually similar artworks. To gauge their usefulness, each tool was tested with specific use-cases. As outlined in Table 1, three forms of visual search were evaluated: exact image match, visually similar, and partial image match. Although Google Cloud Vision did not allow for the loading of a custom image index, it was included in this study as a future integration could include the matching of Pharos images across the web using data provided by Google.

Table 1

CV/ML tools tested for visual search

| Tool | Exact image match | Visually similar | Partial image match | Custom image index | Custom classifiers |
|---|---|---|---|---|---|
| Google Cloud Vision | x | x | | | |
| Clarifai | x | x | x | x | |
| Pastec.io | x | x | x | x | x |
| Match | x | x | | | |
| InceptionV3 | x | x | x | x | |

An exact image match, meaning that two images are nearly identical, proved to be the most broadly supported task. In this case, it is assumed that the crop and content of images would be nearly identical, or with minor variations. The implementation that proved to be the most robust and easy to deploy was Match,⁴ a reverse image search based on ascribe/image-match.⁵ This API provides a signature for each image which is stored in ElasticSearch for quick retrieval. It scales very well to billions of images, a functionality that few other tools can claim. The use case for a near or exact image search is limited, however, as different images of artworks will almost always exhibit some level of variation in crop, color, or angle when photographed. Nevertheless, these results can provide an additional layer of verification to other tools that offer a partial image match, and can be useful to find historical photographs that are prints made from the same negative, or multiple scans of the same photograph.
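The idea of a per-image signature can be illustrated with a deliberately simple stand-in. The sketch below uses an average hash, which is not the algorithm used by Match or image-match; it only shows why a compact signature lets near-identical scans be compared cheaply. The toy 4x4 pixel grids and the function names are assumptions made for illustration, and a real pipeline would first downsample each photograph to a small grayscale thumbnail.

```python
from typing import List, Sequence


def average_hash(pixels: Sequence[Sequence[int]]) -> List[bool]:
    """Return one bit per pixel: True where the pixel is brighter than the mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [value > mean for value in flat]


def hamming_distance(a: Sequence[bool], b: Sequence[bool]) -> int:
    """Count the positions at which two signatures differ."""
    if len(a) != len(b):
        raise ValueError("signatures must have the same length")
    return sum(x != y for x, y in zip(a, b))


# Toy grayscale thumbnails (values 0-255), invented for this example.
original = [[10, 10, 200, 200] for _ in range(4)]
brighter = [[value + 30 for value in row] for row in original]  # same picture, lighter scan
different = [[200] * 4, [200] * 4, [10] * 4, [10] * 4]          # unrelated composition

same_scan = hamming_distance(average_hash(original), average_hash(brighter))
other_image = hamming_distance(average_hash(original), average_hash(different))
```

A uniform brightness shift leaves the signature unchanged, while a different composition flips many bits. A change of crop or viewing angle would also flip many bits, which is consistent with the limitation of exact matching described above.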

APIs that support searches for images with differing crops, color reproduction, angle of view, or physical alterations of a work were less consistent. This work expanded on and corroborated earlier tests by John Resig⁶ on behalf of the Pharos consortium that showed that Pastec.io,⁷ an open-source image similarity search API, can provide a very usable set of results for historical photographs.

Tools that were not accessible through an API (free or commercial), did not allow for searching within a set of images provided by the user, or were too outdated were not tested. These include Visual Search by Machine Box,⁸ Deep Video Analytics,⁹ Bing Visual Search API,¹⁰ the Replica project,¹¹ and ArtPi.¹²

Among the APIs or models that did allow for an internal index of images, Clarifai and InceptionV3 provided the least useful results. A sampling of the highest similarity scores produced by these tools showed false positives in over fifty percent of the results. This is likely because they were trained on images of real world objects and use Convolutional Neural Networks rather than the bag-of-words methodology [20] employed by Pastec or the pHash¹³ library used by Match.

The Pastec API was able to produce the most useful results that were applicable to the ArtVision use case as it was able to find identical and visually similar artworks even where the images were significantly different from one another. Matches were found between images even when the frame was removed, when the crop was different, where one image was in grayscale and another in color, with variations in the angle in which the photograph was taken, or when one artwork was a copy of another.

5.System architecture

The ArtVision ResearchSpace instance stores a minimal subset of data pertaining to each artwork image in its Blazegraph database backend. To add image data into the Pastec internal index (or any other CV API), an ArtVision API¹⁴ was developed to handle the process of downloading the image URLs from the Blazegraph endpoint and loading them into a CV API.

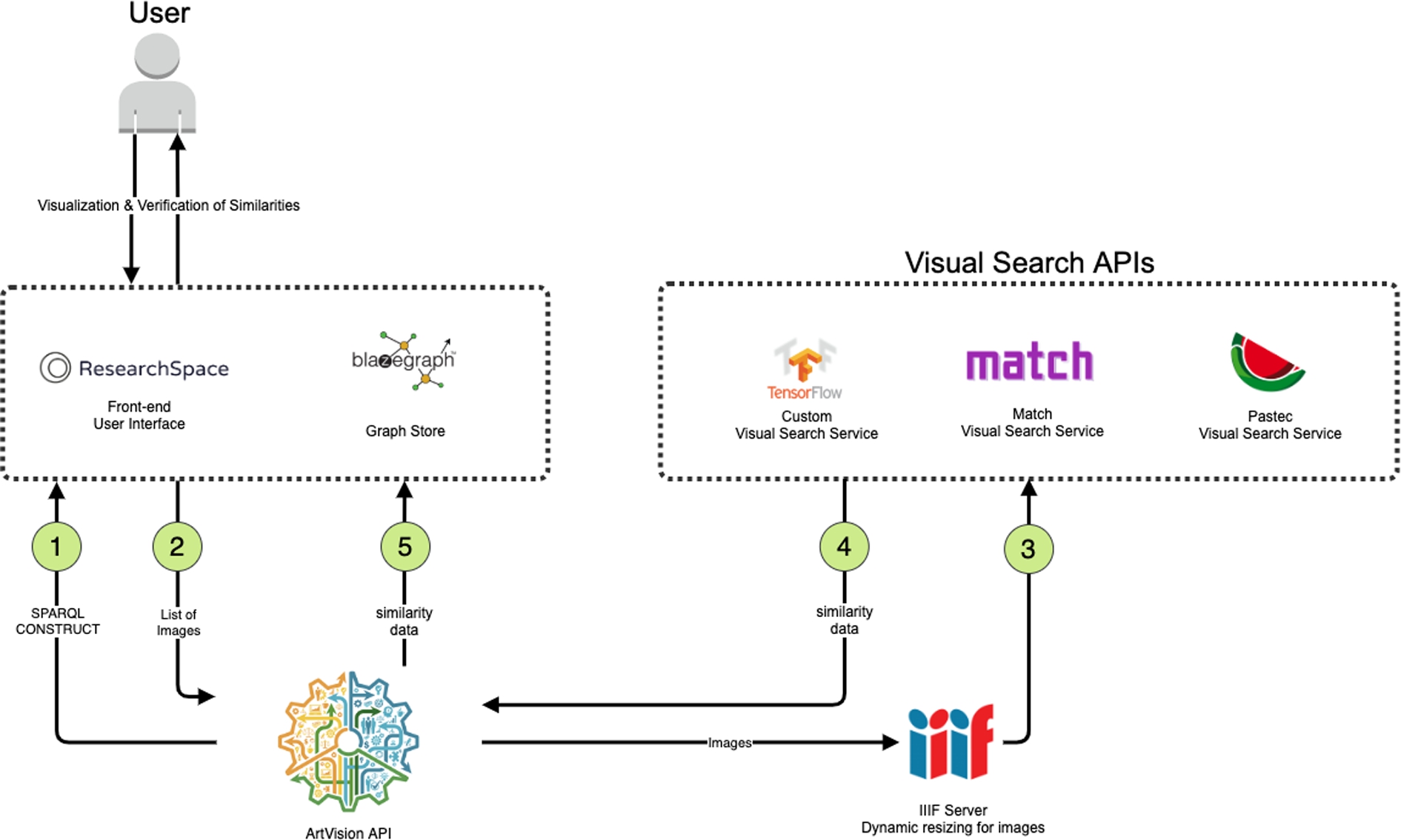

Fig. 1.

Software architecture for the ArtVision API.

First, the Blazegraph endpoint is queried through a SPARQL construct query (Fig. 1). This process returns a list of images (2) and saves them according to a predefined data model in a Turtle file. The ArtVision API then iterates through this list of images and passes them to each CV API (3) for indexing after resizing them through a IIIF server.¹⁵ After indexing is complete, another query is sent to retrieve similar images (4), the results of which are transformed according to the predefined data model and inserted via SPARQL (5) into the Blazegraph database. When an image is indexed by a specific CV API, the identifier within that index is materialized to the image URI node within the Pharos dataset, ensuring that when the original construct query is re-run, that image does not get re-indexed by that API. Each CV API is added through a configuration file, where Java classes can be added or modified to handle different API parameters. The data model is completely modular to serve a wide range of use cases. The ArtVision ResearchSpace platform then renders these data in a user interface, allowing collection curators to review results and perform matches. The ArtVision API is built as a Java application, where the architecture is agnostic to the visual search tool being used, allowing for the system to grow with additional services over time.
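The incremental indexing behaviour described above can be sketched in a few lines. This is not the ArtVision Java implementation; it is a minimal Python illustration in which `VisionApi`, `ImageRecord`, `index_new_images`, and `FakeApi` are hypothetical names, and the SPARQL round trips are replaced by an in-memory list. It shows only how recording each API's index identifier against an image prevents re-indexing on a later run, and how a tool-agnostic interface lets further services be plugged in.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Protocol


class VisionApi(Protocol):
    """Anything that can index an image URL and return its own identifier."""
    name: str

    def index(self, image_url: str) -> str: ...


@dataclass
class ImageRecord:
    url: str
    # Maps a CV API name to the identifier that API assigned to this image.
    index_ids: Dict[str, str] = field(default_factory=dict)


def index_new_images(images: List[ImageRecord], api: VisionApi) -> List[str]:
    """Index only images that this API has not seen; return the URLs just indexed."""
    newly_indexed = []
    for image in images:
        if api.name in image.index_ids:
            continue  # identifier already materialized, skip re-indexing
        image.index_ids[api.name] = api.index(image.url)
        newly_indexed.append(image.url)
    return newly_indexed


class FakeApi:
    """Stand-in for a real CV service, used only to exercise the loop."""
    name = "fake"

    def __init__(self) -> None:
        self.calls = 0

    def index(self, image_url: str) -> str:
        self.calls += 1
        return f"fake-{self.calls}"
```

Calling `index_new_images` twice with the same records and the same API indexes every image on the first call and nothing on the second, mirroring the re-run of the construct query.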

6.Data model

The underlying data model was instrumental in ensuring the interoperability and extensibility of similarity results from multiple CV APIs. At the most basic level, the requirement was to describe a level of similarity between two target images according to a specific association method (CV API) and have a similarity score for that methodology. When searching for either image in a given pair, results from CV APIs should always yield the same similarity score, resulting in a bidirectional similarity.

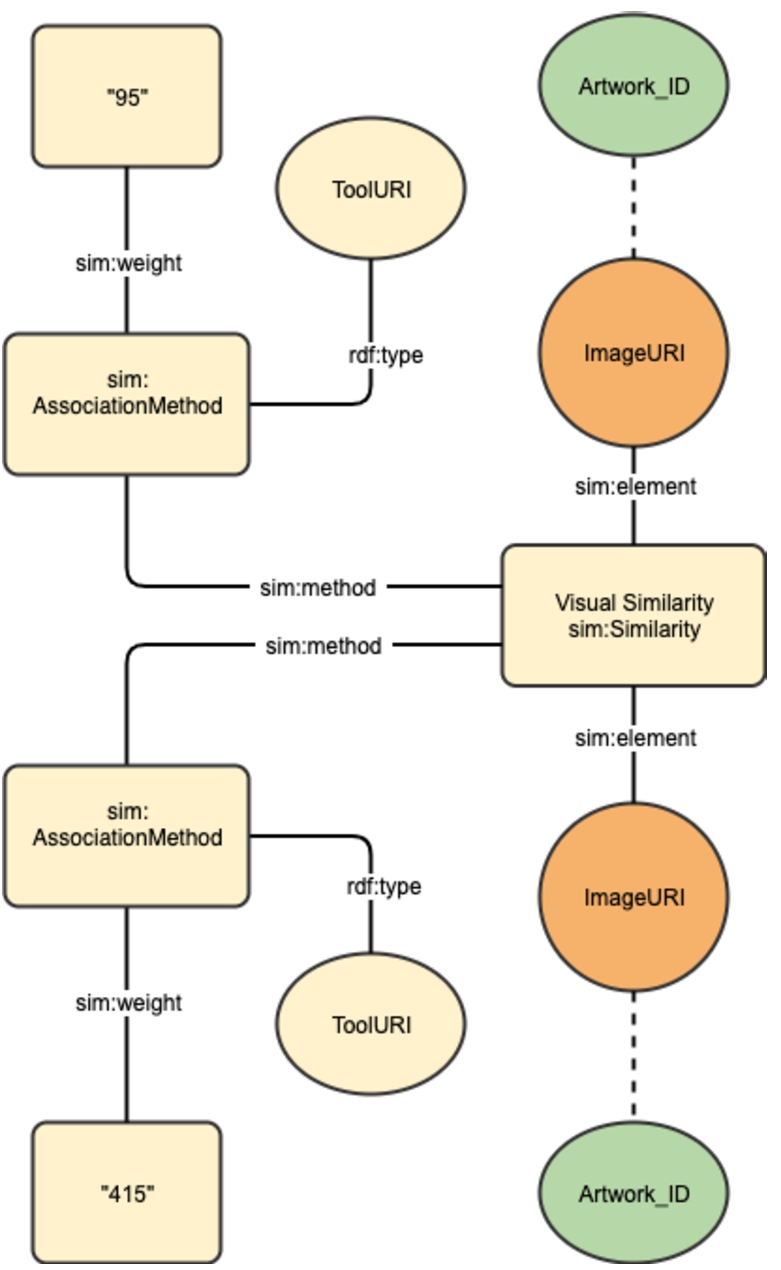

Fig. 2.

ArtVision data model.

As shown in Fig. 2, the Similarity Ontology,¹⁶ originally designed for music but applicable to any domain, provided the necessary ontological framework to encode similarities for any pair of images. A single similarity node can have multiple association methods, each with its own weight. Although the website documenting the ontology is no longer available (it was last modified in 2010), the Internet Archive has a copy and the ontology itself is available on Linked Open Vocabularies.¹⁷
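The shape of this model, one similarity node per unordered pair of images carrying one weight per association method, can be mirrored in a small in-memory structure. The sketch below is an illustration only: it does not use the Similarity Ontology's actual terms, and `Similarity`, `SimilarityStore`, and the method names in the usage note are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, Optional


@dataclass
class Similarity:
    """One node per unordered image pair, with a score for each association method."""
    images: FrozenSet[str]
    scores: Dict[str, float] = field(default_factory=dict)


class SimilarityStore:
    def __init__(self) -> None:
        self._pairs: Dict[FrozenSet[str], Similarity] = {}

    def record(self, image_a: str, image_b: str, method: str, score: float) -> Similarity:
        """Attach a method's score to the pair, creating the node on first use."""
        key = frozenset((image_a, image_b))
        if len(key) != 2:
            raise ValueError("a similarity needs two distinct images")
        node = self._pairs.setdefault(key, Similarity(key))
        node.scores[method] = score
        return node

    def lookup(self, image_a: str, image_b: str) -> Optional[Similarity]:
        """Return the same node regardless of the order of the arguments."""
        return self._pairs.get(frozenset((image_a, image_b)))

    def __len__(self) -> int:
        return len(self._pairs)
```

Recording a score for (A, B) from one method and for (B, A) from another yields a single node holding both scores, which is the bidirectional behaviour the data model requires.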

7.URI hashing

One challenge with having a single similarity node connecting each pair of images was generating this URI programmatically without having to perform a lookup to see if one already existed. To allow for additional CV APIs to be added at a later date, the URI of the similarity node was generated as a hash based on the URL of the two connected images. The ability to calculate the URI of this node through SPARQL was a requirement in order to allow for the creation of these nodes through the ResearchSpace infrastructure.

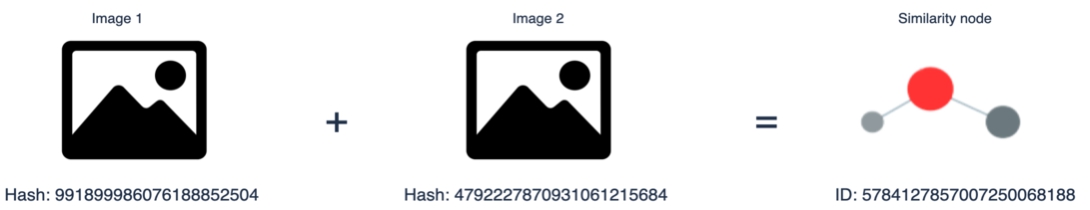

Fig. 3.

URI hashing for ArtVision.

As seen in Fig. 3, the URI of the similarity node is derived from the sum of the hashes of the two image URLs. Because addition is commutative, the sum for Image 1 + Image 2 (or the inverse, Image 2 + Image 1) always yields the same similarity node. This was implemented by obtaining a SHA1 hash of each image URL, removing all characters that are not digits through a regular expression, and then adding the two resulting integers together.

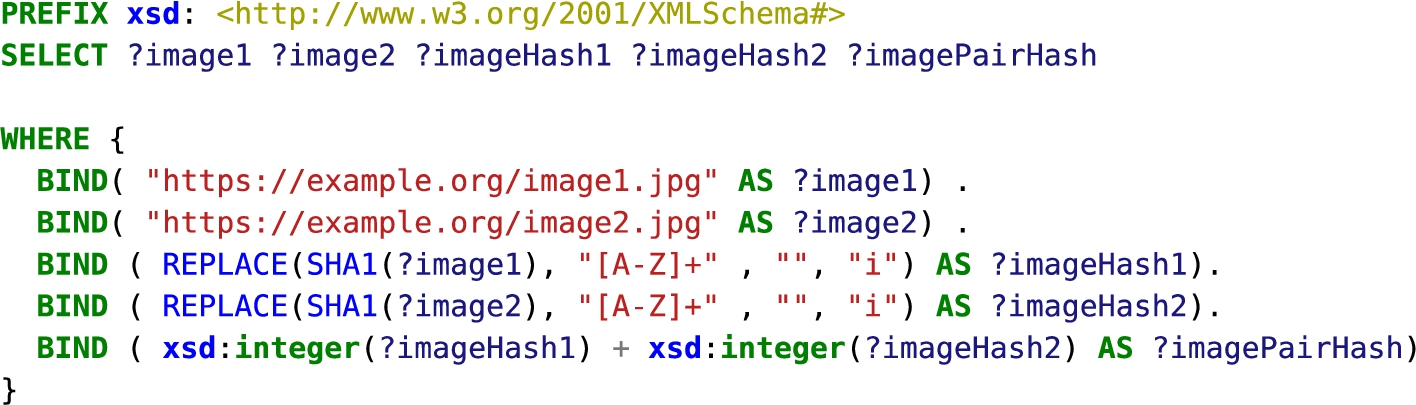

Fig. 4.

URI hashing.

Figure 4 illustrates how this hashing can be calculated through SPARQL, allowing for this to be implemented both in the separate Java application of the ArtVision API as well as through ResearchSpace via SPARQL. Depending on the number of images in the dataset, other hashing functions such as SHA256 or SHA512 could also be implemented to reduce the possibility of clashing URIs.
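The same order-independent calculation can be sketched outside SPARQL. The following Python version follows the steps described in this section (hash each image URL, keep only the digits, add the two integers); the base URI `https://example.org/similarity/` and the function names are assumptions for illustration and are not the identifiers used by ArtVision.

```python
import hashlib
import re

# Hypothetical namespace for similarity nodes; the real one is not given in the text.
BASE_URI = "https://example.org/similarity/"


def digits_of_hash(url: str, algorithm: str = "sha1") -> int:
    """Hash the URL, drop every non-digit character of the hex digest, read the rest as an integer."""
    hex_digest = hashlib.new(algorithm, url.encode("utf-8")).hexdigest()
    digits = re.sub(r"[^0-9]", "", hex_digest)
    return int(digits or "0")  # guard against a digest with no digits at all


def similarity_uri(url_a: str, url_b: str, algorithm: str = "sha1") -> str:
    """Build a URI that is identical for (a, b) and (b, a) because addition is commutative."""
    total = digits_of_hash(url_a, algorithm) + digits_of_hash(url_b, algorithm)
    return f"{BASE_URI}{total}"
```

Passing `algorithm="sha256"` or `"sha512"` swaps the hash function as suggested above. Note that a sum does not record which digits came from which URL, so two different pairs could in principle produce the same total; a longer digest lowers that risk but does not remove it.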

8.SPARQL federation across REST APIs

Leveraging the RDF4J Storage and Inference Layer (SAIL) libraries, a SPARQL to REST API federation service within ResearchSpace enables end-users to paste an image URL into a webform and retrieve matched artworks from the Pharos dataset in real-time. This federation service was developed by researchers at the Harvard Digital Humanities Lab¹⁸ with help from the ResearchSpace team and was generalized to allow for the querying of a wide range of REST endpoints. Each REST endpoint is registered as a SPARQL repository, and mapping files translate the API results according to a predefined ontology. This functionality is especially useful for art historians aiming to find the corresponding record in the Pharos dataset when they are unable to find the record through a metadata search, do not know who the artist is, or simply want to find images that are visually similar.
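The role of a mapping file can be pictured as a translation from the fields of a REST response to the variables a query expects. The sketch below is a loose Python analogy and not the ResearchSpace federation service, which is built on RDF4J: the JSON field names, the variable names, and the `results` key are all hypothetical, and the sample response in the usage note is invented.

```python
from typing import Any, Dict, List

# Hypothetical mapping: JSON field returned by a CV API -> variable name exposed to the query.
MAPPING = {"image_id": "image", "score": "weight"}


def to_bindings(
    response: Dict[str, Any],
    mapping: Dict[str, str],
    results_key: str = "results",
) -> List[Dict[str, Any]]:
    """Translate each result object into a row of variable bindings, ignoring unmapped fields."""
    rows = []
    for item in response.get(results_key, []):
        rows.append({var: item[name] for name, var in mapping.items() if name in item})
    return rows
```

For an invented response such as `{"results": [{"image_id": "img-7", "score": 0.82, "debug": "x"}]}`, the function returns one row binding `image` and `weight` and drops the unmapped `debug` field. Registering a further REST endpoint would then amount to supplying another mapping.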

9.ArtVision user interface

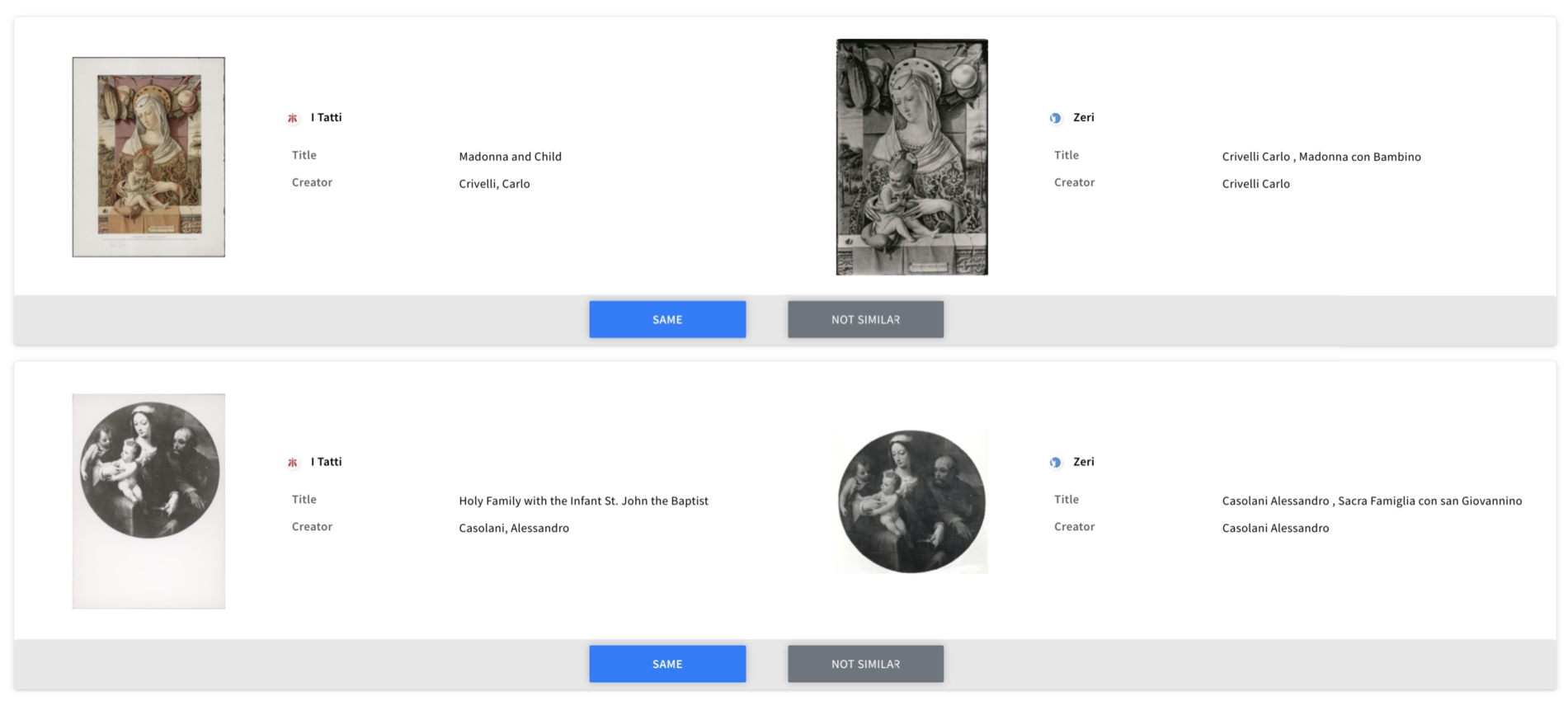

Two user interfaces were developed within the ArtVision ResearchSpace instance to allow collection curators to log in and match up artwork records across institutions. In a more simplified view as illustrated in Fig. 5, staff are presented with a pair of visually similar images and a limited subset of metadata to quickly compare two artworks. In the Pharos dataset, each artwork as cataloged by a particular institution is stored in a named graph that allows for the tracking of the provenance of these data. Each artwork is represented by both an institutional URI as well as a canonical Pharos URI. By clicking the “same” button in the ArtVision platform, one of the Pharos URIs is marked as being deprecated and owl:sameAs links are created between the two institutional URIs and the remaining Pharos URI. These owl:sameAs links allow multiple artwork records to be merged into one on the main Pharos platform, enabling public users to compare and contrast data from multiple sources.
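The effect of the “same” button can be sketched as a small set of triples. This is an assumption-laden illustration: the text does not say which of the two Pharos URIs is retained or how deprecation is encoded, so the sketch arbitrarily keeps the first Pharos URI and flags the other with `owl:deprecated`. The function name and all URIs in the usage note are hypothetical.

```python
from typing import List, Tuple

OWL_SAME_AS = "http://www.w3.org/2002/07/owl#sameAs"
# Assumption: deprecation is shown here with owl:deprecated; the platform may encode it differently.
OWL_DEPRECATED = "http://www.w3.org/2002/07/owl#deprecated"

Triple = Tuple[str, str, str]


def merge_artworks(
    institution_a: str, pharos_a: str, institution_b: str, pharos_b: str
) -> List[Triple]:
    """Keep pharos_a as the canonical URI, deprecate pharos_b, and link both institutional URIs."""
    return [
        (pharos_b, OWL_DEPRECATED, "true"),
        (institution_a, OWL_SAME_AS, pharos_a),
        (institution_b, OWL_SAME_AS, pharos_a),
    ]
```

Calling `merge_artworks("inst1:work/12", "pharos:work/a", "inst2:obj/98", "pharos:work/b")` yields three triples: one deprecating `pharos:work/b` and two linking the institutional records to `pharos:work/a`, which is what allows the records to be merged on the main platform.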

Fig. 5.

Simplified view: comparing images and metadata from Pharos collections.

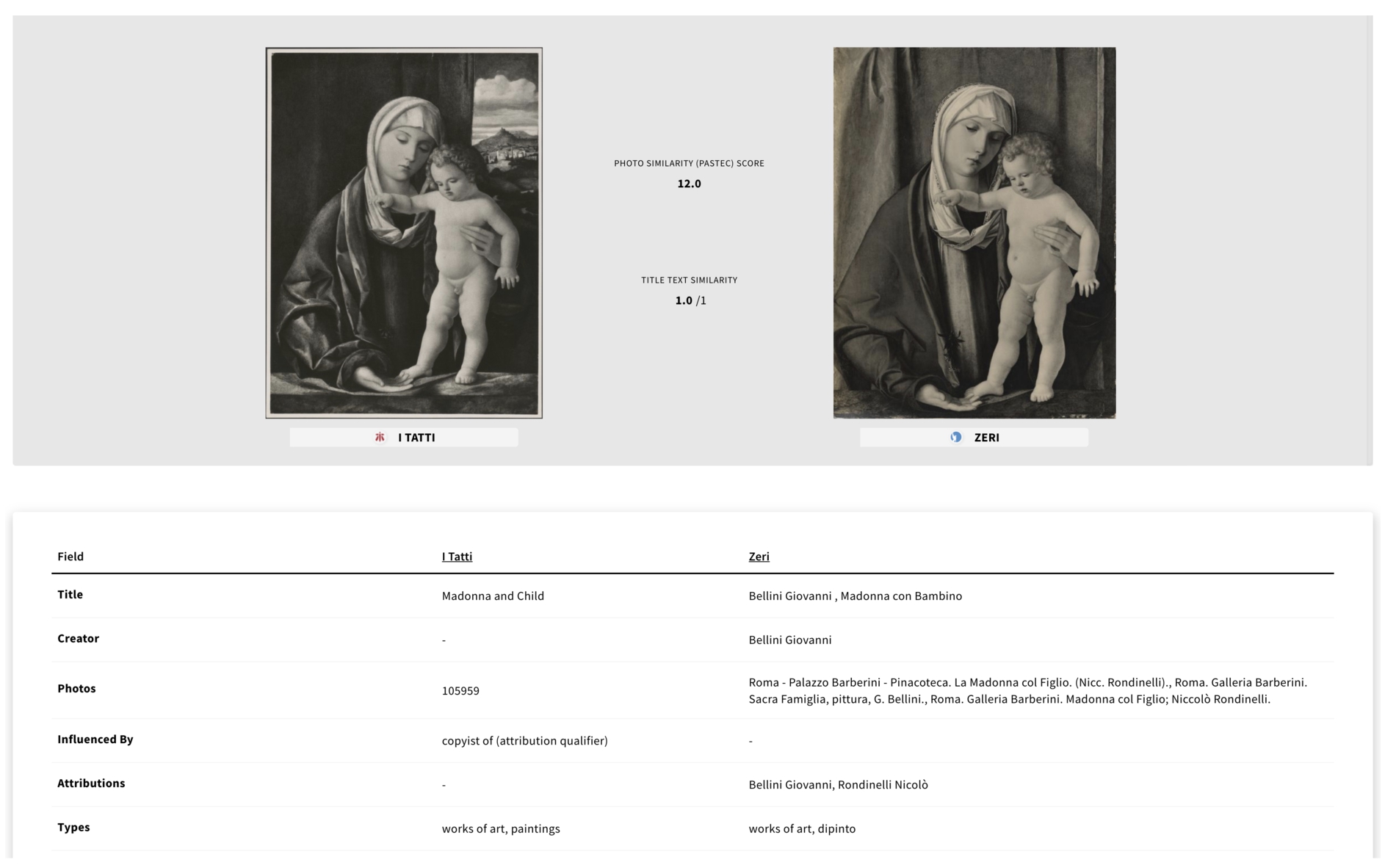

A second interface, as shown in Fig. 6, allows staff to view both artwork records side-by-side with more detailed metadata. When a user clicks on an image, both images are shown in a IIIF viewer that allows users to zoom in and perform an in-depth comparison of the two images. Visual similarity scores for each CV API are shown between the two images, allowing users to visualize the raw results from multiple CV APIs. This interface also allows users to make assertions about different types of similarity using the CIDOC CRMInf Argumentation Model. Here, an assertion is created that describes the relationship between two images as one being a copy of another, one a preparatory drawing, or two photographs derived from the same negative.

Fig. 6.

Detailed view: comparing images and metadata from Pharos collections.

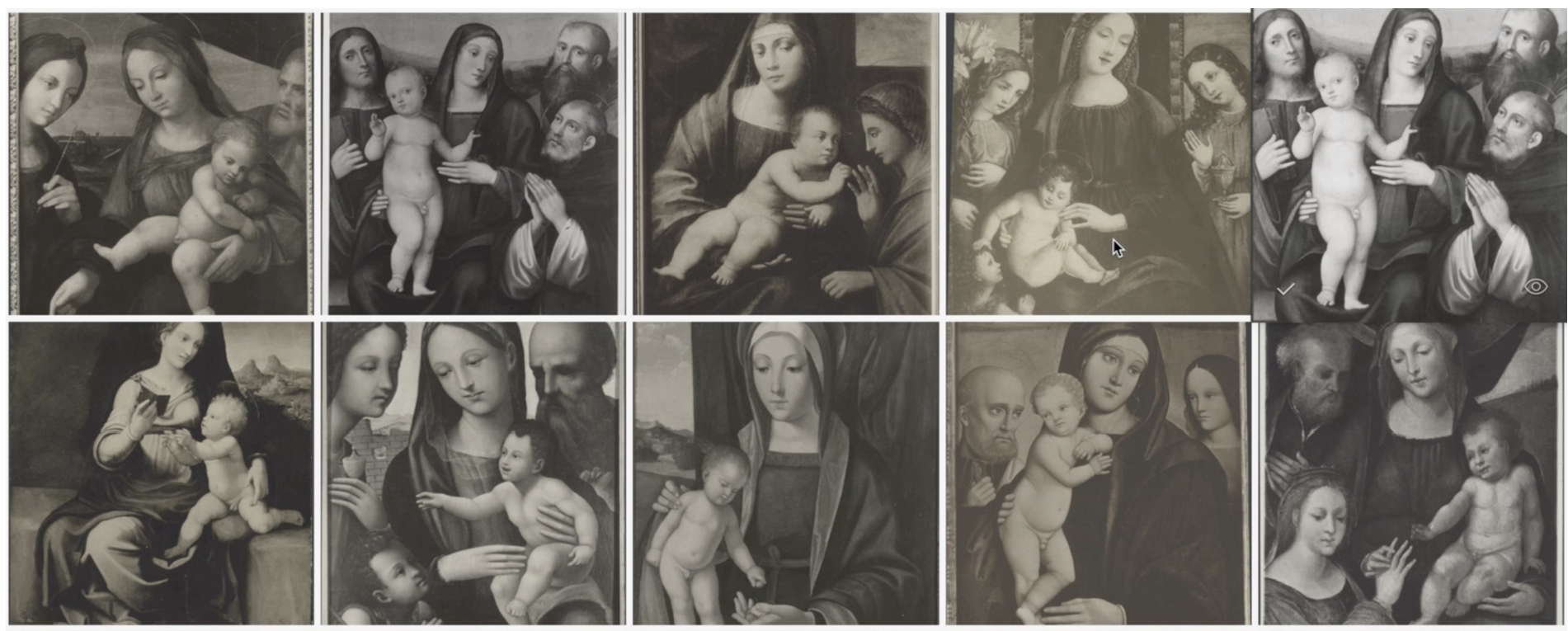

Fig. 7.

Matching iconographic themes.

10.Visual cataloging

Another (future) use case, which could prove disruptive for libraries and archives that need to catalog images of artworks, is to leverage partial or similar image matching to assist in cataloging images in batches. As seen in Fig. 7, the ability to use images to search for others with the same iconographic theme presents a powerful tool for institutions to apply metadata to vast numbers of images. This is particularly useful in situations where image metadata are not available, potentially encouraging institutions to publish photograph collections that have no metadata.

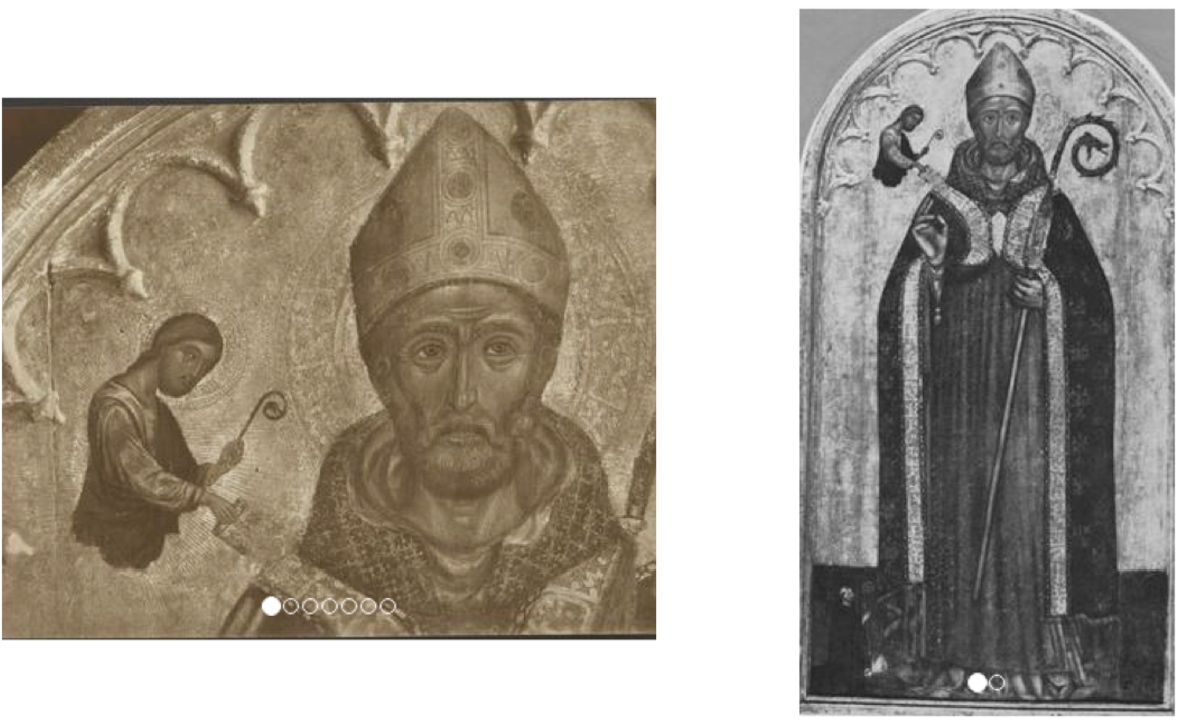

Fig. 8.

Matching details of artworks to their whole.

Partial image match allows for cropped images or details of works to be found within images that contain the whole. There are numerous use cases for this kind of functionality, especially images of artworks that have been split up into pieces, as is often the case with altarpieces and triptychs. As seen in Fig. 8, a small detail of an artwork was matched with the Pastec API. With partial image match, visual cataloging can be implemented to search the verso of photographs. As illustrated by Fig. 9, this could be applied to photographer or institution stamps. These kinds of searches could be very useful for scholars researching the history of photography, tracking the works of particular photographers across collections that have not published these data. Visual cataloging can be implemented by allowing users to leverage the IIIF API to select a portion of an image and query the CV API to retrieve images with similar details in real-time. Users could then select groups of images to which they would like to apply metadata in batches.
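Selecting a portion of an image through IIIF comes down to building an Image API request URL whose region segment holds the pixel coordinates of the selection. The sketch below follows the IIIF Image API URL pattern `{base}/{region}/{size}/{rotation}/{quality}.{format}`; the function name and the example base URL in the usage note are hypothetical, and how the resulting crop is handed to a CV API is left out.

```python
def iiif_region_url(
    base: str,
    x: int,
    y: int,
    width: int,
    height: int,
    size: str = "max",
    rotation: int = 0,
    quality: str = "default",
    fmt: str = "jpg",
) -> str:
    """Build an IIIF Image API URL for a rectangular pixel region of an image."""
    if width <= 0 or height <= 0:
        raise ValueError("region width and height must be positive")
    if x < 0 or y < 0:
        raise ValueError("region origin must not be negative")
    region = f"{x},{y},{width},{height}"
    return f"{base.rstrip('/')}/{region}/{size}/{rotation}/{quality}.{fmt}"
```

For example, `iiif_region_url("https://example.org/iiif/photo-123", 100, 250, 400, 300)` returns `https://example.org/iiif/photo-123/100,250,400,300/max/0/default.jpg`. A user interface could generate such a URL from a rectangle drawn on a stamp or detail and submit it as the query image.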

Fig. 9.

Matching stamps and handwritten text on the verso of photographs.

11.Impact and future work

The ArtVision platform can be used to find discrepancies in metadata, identify visually similar images, and merge records of artworks that are the same. A single artwork that was documented in two different photographs may have been cataloged as being by two separate artists or having different production dates. This is a fairly common practice when cataloging images of artworks that are lost, where the only data a cataloger has to work with is the photograph itself. Using visual similarity search, data about lost artworks can be easily merged and these histories can be reconstructed.

The ability to generate canonical URIs for artworks across collections is a first for the field of art history. While many vocabularies are available to disambiguate and provide identifiers for artists (ULAN¹⁹), places (TGN,²⁰ GeoNames²¹), institutions (VIAF²²), and bibliographic entities (OCLC²³) across the web of data, the challenge of providing identifiers to artworks has never been properly tackled. The Getty CONA vocabulary²⁴ has made some efforts on this front but has not seen much development over the last ten years [7]. ArtVision seeks to overcome these challenges by serving as a linking mechanism between institutions that are owners of artworks, institutions that hold reproductions, and scholars who seek to reference them through Linked Data services.

In addition to providing artwork disambiguation services to individuals and institutions seeking to match up images of artworks across the web, ArtVision enables the linking of visually similar artworks, copies, restorations, preparatory drawings and their subsequent works, cropped images of larger artworks, or two photographic prints that have been produced from the same negative. The platform aims to democratize the accessibility of CV functionality to non-technical users. Comparing similarity data generated by CV APIs with similarity data obtained through a supervised process will also enable the creation of Machine Learning models that are more fine-tuned to works of art. As the dataset of similarity data on artworks grows, new classification models can be trained to refine different notions of visual similarity.

The platform will also serve as an attractive tool for institutions that need to digitize image collections but do not have the capacity to catalog them, as is the case with many Pharos partners. Over time, image collections from museums and archives can be integrated, allowing for the linking of archival records to museum collection websites across the web. The platform could prove to be transformative for scholars who typically need to search through collections data from multiple siloed repositories. These data would otherwise be spread out in various databases across the web, and in some cases could be tracked down only through visual search. While Google image search has been useful to scholars when searching for copies of similar images, most images from institutional repositories are not indexed [11].

ArtVision continues to evolve in parallel to the main public-facing Pharos platform. As curators from collections perform matches through a supervised process, these data will provide a set of measurable metrics to evaluate the quality of results from CV APIs. Questionnaires will be administered to gather qualitative feedback on the user interface, and heuristics will capture possible issues with non-predefined tasks [14]. Given the 20th century practice of collecting photographs that depict works of art, it is very likely that a sizeable portion of the consolidated Pharos collections will have some overlap. Once a critical mass of artwork matches has been completed through the supervised process, this will lead to new insights into the field of photography and the history of collecting.

Although the ArtVision platform was developed specifically to address the needs of institutions that publish data and images about artworks, the methodology is by no means restricted to this field. The open-source software could be reused by heritage institutions to match images across manuscript collections or historical documents that have some type of visual similarity. The broader scientific community could also stand to benefit where there is a need to match images in other domains. As many artworks are lacking in titles and unique identifiers, visual search has proven to be a manageable process through which to reconcile these records across collections. The platform has already made a substantial impact on the publishing efforts of the Pharos consortium, automating a task that would otherwise be impossible for humans. By exposing the APIs publicly and allowing non-technical users to perform visual searches across Pharos collections, ArtVision facilitates collection interoperability and the cross-pollination of collections data, disrupting barriers posed by proprietary databases where information is kept in silos. It opens new doors to performing large-scale analysis on artworks, providing unprecedented opportunities to individuals, institutions and the field of Digital Art History more broadly.

Notes

1 Searching Through Seeing: Optimizing Computer Vision Technology for the Arts | The Frick Collection. (n.d.). Retrieved March 10, 2019, from https://www.frick.org/interact/video/searching_seeing.

2 “PHAROS: The International Consortium of Photo Archives.” Accessed March 15, 2021. http://pharosartresearch.org/.

3 John Resig – Building an Art History Database Using Computer Vision. https://johnresig.com/blog/building-art-history-database-computer-vision/. Accessed 10 Mar. 2019.

4 Crystal ball: Scalable Reverse Image Search Built on Kubernetes and Elasticsearch: Dsys/Match. 2016. Distributed Systems, 2019. GitHub, https://github.com/dsys/match.

5 EdjoLabs/Image-Match: Quickly Search over Billions of Images. https://github.com/EdjoLabs/image-match. Accessed 10 Mar. 2019.

6 John Resig – Italian Art Computer Vision Analysis. https://johnresig.com/research/italian-art-computer-vision-analysis/. Accessed 10 Mar. 2019.

7 Pastec, the Open Source Image Recognition Technology for Your Mobile Apps. http://pastec.io/. Accessed 10 Mar. 2019.

8 Hernandez, David. “Visual Search by Machine Box.” Machine Box, 7 Oct. 2017, https://blog.machinebox.io/visual-search-by-machine-box-eb30062d8abe.

9 Deep Video Analytics. https://www.deepvideoanalytics.com/ Akshay Bhat from Cornell University. Accessed 10 Mar. 2019.

10 Bing Visual Search Developer Platform. https://www.bingvisualsearch.com/docs. Accessed 10 Mar. 2019.

11 Diamond. https://diamond.timemachine.eu/. Accessed 10 Mar. 2019.

12 ArtPi – Artrendex. http://www.artrendex.com/artpi Accessed 10 Mar. 2019.

14 The ArtVision API architecture was designed by Lukas Klic and implemented by Manolis Fragiadoulakis of Advance Services. https://github.com/ArtResearch/vision-api.

17 Linked Open Vocabularies (LOV). https://lov.linkeddata.es/dataset/lov/vocabs/sim. Accessed 10 Mar. 2019.

18 Gianmarco Spinaci, https://github.com/researchspace/researchspace/pull/187.

24 Cultural Objects Name Authority (Getty Research Institute). http://www.getty.edu/research/tools/vocabularies/cona/. Accessed 10 Mar. 2019.

Acknowledgements

The Pharos platform is generously supported by the Andrew W. Mellon Foundation to work on a first phase of publishing six of the fourteen collections.²⁵ The ArtVision Java API was implemented by Manolis Fragiadoulakis, and the ResearchSpace user interface was implemented by Andreas Michelakis together with Nikos Minadakis of Advance Services. A special thanks to Gianmarco Spinaci and Remo Grillo of the Harvard Digital Humanities Lab for their contributions, Dominic Oldman and Artem Kozlov from the British Museum, Louisa Wood Ruby of the Frick Collection, as well as all of the Pharos institutions that contributed images and data that made this work possible.

References

[1] | C. Caraffa, E. Pugh, T. Stuber and L.W. Ruby, PHAROS: A digital research space for photo archives, Art Libraries Journal 45: (1) ((2020) ), 2–11, http://www.cambridge.org/core/journals/art-libraries-journal/article/pharos-a-digital-research-space-for-photo-archives/AC7D9F996BDA0526AF7EF4072A16C364. doi:10.1017/alj.2019.34. |

[2] | E.J. Crowley and A. Zisserman, In Search of Art, in: Computer Vision – ECCV 2014 Workshops, L. Agapito, M.M. Bronstein and C. Rother, eds, Lecture Notes in Computer Science, Springer International Publishing, (2015), pp. 54–70. ISBN 978-3-319-16178-5. doi:10.1007/978-3-319-16178-5_4. |

[3] | E.J. Crowley and A. Zisserman, The Art of Detection, ECCV Workshops. doi:10.1007/978-3-319-46604-0_50. |

[4] | A. Elgammal and B. Saleh, Quantifying Creativity in Art Networks, http://arxiv.org/abs/1506.00711. |

[5] | J. Elkins and M. Naef, What is an image? ISBN 978-0-271-05064-5. |

[6] | N. Gonthier, Y. Gousseau, S. Ladjal and O. Bonfait, Weakly supervised object detection in artworks, in: Computer Vision – ECCV 2018 Workshops, L. Leal-Taixé and S. Roth, eds, Lecture Notes in Computer Science, Springer International Publishing, (2019) , pp. 692–709. ISBN 978-3-030-11012-3. doi:10.1007/978-3-030-11012-3_53. |

[7] | P. Harpring, Development of the getty vocabularies: AAT, TGN, ULAN, and CONA 29: (1) ((2010) ), 67–72. doi:10.1086/adx.29.1.27949541. |

[8] | L. Impett, Analyzing gesture in digital art history, in: The Routledge Companion to Digital Humanities and Art History, Routledge, (2020) . 22. ISBN 978-0-429-50518-8. |

[9] | L. Impett and S. Süsstrunk, Pose and Pathosformel in Aby Warburg’s Bilderatlas, in: Computer Vision – ECCV 2016 Workshops, G. Hua and H. Jégou, eds, Lecture Notes in Computer Science, Vol. 9913, Springer International Publishing, (2016), pp. 888–902, ISBN 978-3-319-46603-3 978-3-319-46604-0. doi:10.1007/978-3-319-46604-0_61. |

[10] | S. Karayev, M. Trentacoste, H. Han, A. Agarwala, T. Darrell, A. Hertzmann and H. Winnemoeller, Recognizing Image Style, http://arxiv.org/abs/1311.3715. |

[11] | I. Kirton and M. Terras, Where Do Images of Art Go Once They Go Online? A Reverse Image Lookup Study to Assess the Dissemination of Digitized Cultural Heritage | MW2013: Museums and the Web 2013, https://perma.cc/CT5E-QZ77. |

[12] | S. Lang and B. Ommer, Attesting similarity: Supporting the organization and study of art image collections with computer vision 33: (4) ((2018) ), 845–856, https://academic.oup.com/dsh/article/33/4/845/4964861. doi:10.1093/llc/fqy006. |

[13] | L. Manovich, Computer vision, human senses, and language of art. doi:10.1007/s00146-020-01094-9. |

[14] | J. Nielsen, Usability inspection methods, in: Conference Companion on Human Factors in Computing Systems, CHI ’94, Association for Computing Machinery, (1994) , pp. 413–414. ISBN 978-0-89791-651-6. doi:10.1145/259963.260531. |

[15] | D. Oldman, D. Tanase and S. Santschi, The problem of distance in digital art history: A ResearchSpace case study on sequencing Hokusai print impressions to form a human curated network of knowledge, International Journal for Digital Art History 4: ((2019) ), 5.29–5.45, https://journals.ub.uni-heidelberg.de/index.php/dah/article/view/72071. doi:10.11588/dah.2019.4.72071. |

[16] | B. Seguin, The replica project: Building a visual search engine for art historians 24: (3) ((2018) ), 24–29. doi:10.1145/3186653. |

[17] | B. Seguin, C. Striolo, I. diLenardo and F. Kaplan, Visual link retrieval in a database of paintings, in: Computer Vision – ECCV 2016 Workshops, G. Hua and H. Jégou, eds, Lecture Notes in Computer Science, Springer International Publishing, pp. 753–767. ISBN 978-3-319-46604-0. |

[18] | X. Shen, A.A. Efros and M. Aubry, Discovering visual patterns in art collections with spatially-consistent feature learning, in: 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, (2019) , pp. 9270–9279. https://ieeexplore.ieee.org/document/8954148/. ISBN 978-1-72813-293-8. doi:10.1109/CVPR.2019.00950. |

[19] | W.R. Tan, C.S. Chan, H.E. Aguirre and K. Tanaka, Ceci n’est pas une pipe: A deep convolutional network for fine-art paintings classification, in: 2016 IEEE International Conference on Image Processing (ICIP), (2016) , pp. 3703–3707. doi:10.1109/ICIP.2016.7533051. |

[20] | J. Yang, Y.-G. Jiang, A.G. Hauptmann and C.-W. Ngo, Evaluating bag-of-visual-words representations in scene classification, in: Proceedings of the International Workshop on Workshop on Multimedia Information Retrieval, MIR ’07, Association for Computing Machinery, (2007) , pp. 197–206. ISBN 978-1-59593-778-0. doi:10.1145/1290082.1290111. |

[21] | D. Zorach, Kress Foundation | Transitioning to a Digital World: Art History, Its Research Centers, and Digital Scholarship, http://www.kressfoundation.org/research/transitioning_to_a_digital_world/. |