Guidelines for collaborative development of sustainable data treatment software

Abstract

Software development for data reduction and analysis at large research facilities is increasingly professionalized, and internationally coordinated. To foster software quality and sustainability, and to facilitate collaboration, representatives from software groups of European neutron and muon facilities have agreed on a set of guidelines for development practices, infrastructure, and functional and non-functional product properties. These guidelines have been derived from actual practices in software projects from the EU funded consortium ‘Science and Innovation with Neutrons in Europe in 2020’ (SINE2020), and have been enriched through extensive literature review. Besides guiding the work of the professional software engineers in our computing groups, we hope to influence scientists who are willing to contribute their own data treatment software to our community. Moreover, this work may also provide inspiration to scientific software development beyond the neutron and muon field.

1.Introduction

1.1.Software needs at user facilities

Large research facilities, such as neutron, x-ray or muon sources, provide researchers across a range of disciplines (including physics, chemistry, biology and engineering) with unique opportunities to explore properties of materials. To achieve this, they operate a broad range of highly specialised instruments, each targeting a particular area of investigation. The structure of the generated datasets reflects the specific operation modes of the instruments, and therefore requires bespoke data treatment software.

In most cases, the data treatment is conceptually and practically divided in two stages: reduction and analysis. Typical data reduction steps are background subtraction, normalization to incident flux, detector efficiency correction, axis calibration, unit conversions and histogram rebinning. In a good first approximation, the outcome is a function such as the static structure factor

The major sources in our field are user facilities: They operate instruments primarily for external users, with different degrees of in-house research. In the early days many users had a special interest in the experimental method, and analysed their data with their own tools. Into the 1990s, it was quite normal for a PhD thesis to consist of a few scattering experiments on a single sample and a detailed analysis supported by some new software. With increasing maturity of the experimental techniques, users are less interested in methodological questions; they rightfully expect an instrument to just work, with the results obtained often contributing to a wider study. This is reflected in more recent PhD theses and journal publications, where experiments at large facilities are increasingly just one of several methods that are used to gain a complete understanding of the system under study.

Laboratory instruments bought from specialized manufacturers, such as the popular physical property measurement systems used for magnetic, thermal and electrical characterisation of samples, come with fully integrated software for experiment control and, to a varying degree, for data analysis. Well designed graphical interfaces minimize training needs. When developing software for large facilities we should strive for no lesser quality standard – even if experimentalists are exceptionally forbearing with unstable software, counter-intuitive interfaces and idiosyncratic commands, as long as they somehow succeed in treating their precious data.

In the past, much of the data treatment software in our field has been contributed by engaged scientists, who developed it primarily for their own needs, then shared or published it. In particular, many instrument scientists have increased the utility of their instrument by providing software tailored for the needs of their users. While this software contains invaluable application-domain expertise, it often raises concerns about its long-term maintenance. There have already been cases where outdated computers had to be kept in operation because source code was lost, or could not be recompiled. Therefore the development and maintenance of data treatment software needs to be professionalised.

Most instruments have a lifetime of decades, with occasional refurbishments or upgrades. Therefore software development is a long-term endeavor. Solid engineering should facilitate maintenance and future updates. Most instruments embody a basic design that is shared with a number of similar instruments at different facilities. This opens opportunities to share software between similar instruments and across facilities. By sharing the development, better use is made of limited resources, bugs are found earlier, the confidence in the correctness grows and the scientific end users are better served.

1.2.From computer programming to software engineering

Software engineering has been defined as the “systematic application of scientific and technological knowledge, methods, and experience to the design, implementation, testing, and documentation of software to optimize its production, support, and quality” [145]. Software engineering goes beyond mere computer programming in that it is concerned with size, time, and cost [321].

Size concerns the code base as well as the data to be processed. In either respect, programming techniques and development methods that are fully sufficient at small scale may prove inadequate at large scale. With a growing code base comes the need for developer documentation, version control and continuous integration. With big data comes the need for optimization and parallelization. With involvement of more than one developer comes the need for coordination and shared technical choices.

Time refers to both the initial development and the total life span of a software. No engineering overhead is needed for code that is written in a few hours and run only once. However, for projects where the code will be used for a considerable period, one needs to consider what kinds of changes shall be sustained. To support incremental development, version control may already pay off after a couple of days. To react to changed requirements without breaking extant functionality, good tests are essential. To keep a software running for years and decades, one must cope with changes in external dependencies like libraries, compilers, and hardware [133].

Cost as the limiting factor of any large-scale or long-term effort means that we have to live with compromises, and constantly decide about trade-offs [190, p. 15].

To become a good programmer and software engineer, one needs theoretical knowledge and understanding as well as experience. Any programming course therefore involves exercises. Academic exercises, however, cannot be scaled to the size, complexity and longevity that are constitutive for engineering tasks. Engineering training therefore is mostly done on the job, but needs to be supported by continuous reading and other forms of self-education [10, rule 2.2]. Professionalisation of scientific software development is now a strong movement, expressed for instance in the take up of the job designation “research software engineer” (RSE), coined by the UK Software Sustainability Institute [273] and adopted by a growing number of RSE associations [156].

1.3.The case for shared engineering guidelines

In engineering, established rules and available technologies still leave vast freedom for the practitioner to do things in different ways. However, freedom in secondary questions can be more distracting than empowering. Arbitrary, seemingly inconsequential, choices can become the root of long-standing incompatibilities. Any organisation, therefore, needs to complement universal rules of the profession with more detailed in-house guidance [260]. In our field, the minimum organisational level for agreeing on software engineering rules is either the software project or the developers’ group. Groups for scientific software, or specifically for data reduction and analysis software, have been created at most large facilities. As some projects involve several computing groups and some developers contribute to several projects, it is desirable to agree on basic guidelines across groups and across projects.

Software groups from five major European neutron and muon facilities [73,125,143,144,212] came together in the SINE2020 consortium [267] to define a set of guidelines for collaborative development of data treatment software. This collaboration is continuing, including through the League of advanced European Neutron Sources (LENS) [167]. The authors of this paper include software engineers and project managers at the aforementioned five sources. The guidelines presented in the following are based on our combined experience. They improve and update on material collected in SINE2020 [181].

1.4.Scope of these guidelines

This paper has been compiled with a broad audience in mind. We hope that our guidelines, beyond governing work in our own groups, will also be taken into consideration by instrument responsibles and other scientists who are contributing to data treatment software, as this would facilitate future collaborations. Aspects of our work may also be of interest to RSEs from other application fields and to managers who want to get a better understanding for the scope of software engineering and the challenges of long-term maintenance.

Our guidelines are meant not only for new projects but also for the maintenance, renovation, and extension of extant code. In any case, over the years, the distinction between new and old projects blurs. In our field, where projects have a lifetime of decades, most of us are working most of the time on software initiated by somebody else. Conversion to the tools and practices recommended here need not be sudden and complete, but can be done in steps, and in parallel with work on new functionality.

This paper is at the same time descriptive and prescriptive. It documents our current best practices, gives mandatory guidance to our own staff, explains our choices to external collaborators and invites independent developers to consider certain tools and procedures. In the following, each subsection starts with a brief guideline in a text box, which is then discussed in detail. Section 3 describes the procedures and infrastructure that should be set up by a software group, Section 4 addresses the functionality that is expected from data treatment software, and Section 5 gives recommendations for non-functional choices.

1.5.Reproducible computation, FAIR principles and workflow preservation

While many of the points discussed in this paper hold true for software engineering or data treatment in general, one concern is particularly pertinent for research code: reproducibility. Computational reproducibility is one of several requirements for replication, and “replication is the ultimate standard by which scientific claims are judged” [214].

In research with scattering methods, there is no tradition of explicit replication studies. However, breakthrough experiments on novel materials or discoveries of unusual material behavior motivate many follow-up studies on similar samples. Erroneous conclusions would soon lead to inconsistencies, which would provoke further experiments and deeper analysis until full clarification is reached. Potential sources of error include sample preparation, hardware functioning, instrument control, and data treatment. Disentangling these is much easier if experimental data are shared and data treatment is made reproducible. As experiments are quite expensive, replicating and validating data reduction and analysis should be among the first steps in scrutinizing unusual results.

Open data is encouraged, and increasingly requested, by research organizations. Broad consensus has been reached that research data should be findable, accessible, interoperable, and reusable (FAIR) [17,45,316]. Software is data as well, albeit of a very special kind. The FAIR principles, suitably adapted, can provide guidance on how to share and preserve software [150,157,166]. Functional aspects of software, on the other hand, are outside the scope of these principles.

To make software findable and accessible, we recommend version control (Section 3.2.1), numbered releases and digital object identifiers (Section 3.2.6), provenance information in treated data (Section 4.3), rich online documentation (Section 5.9), and possibly the deployment in containers (Section 5.10.4). For interoperability, we recommend versatile interfaces (Section 4.1), standard data formats (Section 4.2) and code modularization (Section 5.4.1). To make code reusable, we emphasize the need for a clear and standard open-source license (Section 5.1), and recommend the use of standard programming languages (Section 5.2.2) and of plain American English (Section 5.3.2).

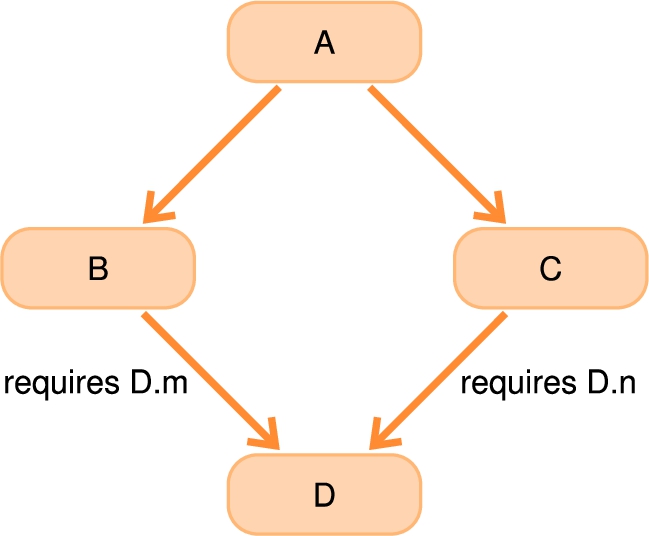

In the long term, the biggest risk to software reuse, and thereby to computational reproducibility, comes from interface breaking changes in lower software or hardware layers [133]. To give a recent example, numeric code that relied on long double numbers to be implemented with 80 bits no longer works on new Macintosh machines as Apple replaced the x86 processor family by the arm64 architecture, with compiler backends falling back to the minimum length of 64 bits required by the C and C++ standards. Strategies to reduce the risk of such software collapse [133] include: Keep your software simple and modular (Section 5.4.1); wherever possible, use standard components (Section 5.4.2); ideally, depend on external tools or libraries that have several independent embodiments (in our example, long double never had more than 64 bits under the Visual Studio compiler, which should have warned us not to rely on a non-standard extension); run nightly tests to detect defects as soon as possible (Section 5.8.2). Backward compatibility can help to replicate old data analyses even if old software versions no longer work (Section 5.10.2).
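The long double pitfall can be turned into an explicit check that runs at start-up or in the nightly test suite. The following is a minimal sketch, assuming that NumPy is available; the function names and the default threshold of 64 bits are illustrative and are not taken from any of the packages discussed in this paper.

```python
import numpy as np


def long_double_mantissa_bits() -> int:
    """Return the significand precision (in bits) of the platform's long double.

    Typical values are 64 for the x87 80-bit extended format and 53 on
    platforms where long double is the same as double.
    """
    # nmant excludes the leading significand bit, so add one to get the precision.
    return int(np.finfo(np.longdouble).nmant) + 1


def has_extended_precision(required_bits: int = 64) -> bool:
    """True if long double offers at least `required_bits` of significand."""
    return long_double_mantissa_bits() >= required_bits


if __name__ == "__main__":
    bits = long_double_mantissa_bits()
    if has_extended_precision():
        print(f"long double has {bits} significand bits: extended precision available")
    else:
        print(f"long double has only {bits} significand bits: "
              "code that relies on the 80-bit format will lose accuracy")
```

A check of this kind does not remove the dependency on a non-standard extension, but it makes the assumption visible and lets a test fail loudly on a new platform instead of silently producing less accurate numbers.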

To replicate a data treatment, one needs to retrieve the data, the software, and the full parameterization of the workflow (Section 4.1, Fig. 2). We must therefore enable our users to preserve enough machine readable information so that entire data reduction and analysis workflows can be replayed. While this is relatively straightforward for script-based programs (Sections 4.1.2–4.1.5), extra functionality must be provided if software is run through a graphical user interface (Sections 4.1.1, 4.3).
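As an illustration of what "enough machine readable information" might look like, the sketch below records a workflow as a JSON document and replays it later. The record layout, the field names and the two toy reduction steps are assumptions made for this example; they do not describe the format used by any existing package.

```python
import hashlib
import json


# Hypothetical reduction steps, standing in for real instrument-specific code.
def subtract_background(data, level):
    return [y - level for y in data]


def normalize(data, monitor):
    return [y / monitor for y in data]


# Registry that maps step names found in a record to callables.
STEPS = {"subtract_background": subtract_background, "normalize": normalize}


def checksum(data):
    """Fingerprint the input so that a replay can verify it uses the same data."""
    return hashlib.sha256(json.dumps(data).encode()).hexdigest()


def apply_steps(data, steps):
    """Apply the named steps in order, each with its recorded parameters."""
    result = data
    for step in steps:
        result = STEPS[step["name"]](result, **step["params"])
    return result


def run_and_record(data, steps, software_version):
    """Run the workflow and return the result together with a JSON record."""
    record = {
        "software_version": software_version,
        "input_sha256": checksum(data),
        "steps": steps,
    }
    return apply_steps(data, steps), json.dumps(record, indent=2)


def replay(data, record_json):
    """Re-run a recorded workflow, refusing to run on different input data."""
    record = json.loads(record_json)
    if checksum(data) != record["input_sha256"]:
        raise ValueError("input data differ from the recorded run")
    return apply_steps(data, record["steps"])
```

A graphical user interface could write such a record whenever the user triggers a computation; replaying it then requires only the raw data, the record, and a software version that still understands the recorded step names.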

2.How we wrote this paper

To get an overview of received practices in our field, we started this work with a questionnaire targeted at five neutron software packages that were under active development and were partly funded by SINE2020. The packages considered were Mantid [9,178], ImagingSuite [32,154,155], McStas [188,317,318], BornAgain [23,228] and SasView [254]. Questions and answers are fully documented in a project report [181], which also contains some statistics and a summary of the commonalities. The empirical guidelines that emerged from this study became a deliverable of work package 10 of the European Union sponsored consortium SINE2020 (Science & Innovation with Neutrons in Europe) [267]. For the present publication we updated and extended the guidelines, based on evidence from research and practice.

For each section of this paper, we searched for literature, using both Google Scholar and standard Google search to discover academic papers as well as less formal work. We also (re)read a number of books [40,76,84,127,134,183,191,246,248,290,323] and style guides [115,286,297], and looked through many years of the “Scientific Programming” department in the journal Computing in Science & Engineering [46]. Other formative influences include the question and answer site Stack Overflow [278], mailing lists or discussion forums of important libraries (e.g. SciPy or Qt), conferences (or videorecorded conference talks) on software engineering or on single programming languages, initiatives such as CodeRefinery [44] and the carpentries of the Software Sustainability Institute [273]. Insights from these sources are reflected in numerous places below. References attest that our views and recommendations are mainstream. Much good advice has been found in blogs, and in books that risk strong opinions. Most of these sources are about software engineering in general, not specifically about research software.

Each guideline in the final text has been approved by each of us. Even so, we do not claim that we are already following all guidelines in all our own software efforts. During the years that led to the compilation of this paper we learned a lot from each other and from the literature, and we continue to bring the gained insights to our own work.

3.Procedures and infrastructure

3.1.Development methodology

3.1.1.Ideas from agile

Over the past twenty years, the discourse on software development methodology has been dominated by ideas from the Agile Manifesto [20,196]. Subsets of these ideas have been combined and expanded into fully fledged methods like Extreme Programming (XP) [19], Scrum [258,259], Lean [227], Kanban [6], Crystal [42] and hybrids of these like Scrumban [165] and Lean-Kanban [49]. For a critical review, we recommend the book by Bertrand Meyer [191].

Many organizations that claim to be agile do not strictly adhere to one of the more formal methods, but have adopted and adapted some agile ideas. This is also the case for our facilities: We all embrace agile but do not consider it to be a panacea. To varying extents, our groups practice concepts like short daily meetings, retrospectives, pair programming, and user stories. Refactoring (Section 3.1.3), iterative workflows and continuous integration (Section 3.2.3) have become second nature to our teams. Indeed, good ideas from agile permeate much of this paper.

3.1.2.Project management and stakeholder involvement

Scrum introduced the notion of a product owner who represents the customers, “who champions the product, who facilitates the product decisions, and who has the final say about the product” [222]. At our facilities, we have no dedicated full-time person for this role. Regardless, it is an important insight that developers need a counterpart who forcefully represents the interests of end users.

To identify such representatives in our application domain, the distinction of data reduction versus analysis software (Section 1.1) comes into play. For data reduction, the instrument responsibles are the natural mediators between the user community at large and the software developers; they have full knowledge of the instrument physics, and they get direct feedback from their users when there are any problems with the software. Indeed, we have had excellent results with instrument scientists guiding our work and testing our software prototypes. Some facilities also have staff (e.g. instrument data scientists) whose job includes the task to liaise with users, instrument teams, and developers. For a multi-facility software like Mantid, formal governance bodies are required [179]. While instrument scientists can help with the customization to their respective instruments, they may have quite different requirements for the common parts of the software; these divergent wishes need to be bundled, reviewed, and reconciled.

In contrast, many software projects in the realm of data analysis are steered by a scientist who acts as product owner and chief maintainer in one person. This is essentially the “benevolent dictator” management model [92,142,247], which has proven viable for large and important open-source projects like Linux, Emacs, Python, and many more [313]. SasView, governed by a committee [255], seems a rare exception in our field. Many owners of analysis software are scientists who originally wrote the software for their own research needs, then started to support a growing user community. If such a creator retires, and maintenance is taken over by software engineers without domain knowledge, then the loss of application-domain knowledge must somehow be compensated from within the user community. The community must be empowered to articulate their needs, for instance through online forums, user meetings, webinars, not to mention essentials like an issue tracker and a contact email. In addition, users can participate in formal or de facto steering committees or user reference groups.

3.1.3.Refactoring

“Refactoring is the process of changing a software system in such a way that it does not alter the external behavior of the code yet improves its internal structure” [84]. We refactor before and after changing external behavior, both to lay the groundwork and to clean up the code. Continual refactoring prevents the accumulation of technical debt and keeps the code readable, modular and maintainable. Through refactoring, code from different sources is made more homogeneous, and brought in line with received design principles (Section 5.4.3). Typical targets of refactoring are known as code smell [84,183,288], which include inaccurate names, code duplication, overly large classes, overlong functions, more than three or four arguments to a function, chains of getter calls, etc.

Even trivial refactorings, like the renaming of variables, are error-prone. Automatic editing tools can help to avoid certain errors, at the risk of introducing errors of other kinds. Each single refactoring should be separately committed to the version control (Section 3.2.1) so that it can easily be reverted if needed. Good test coverage is a precondition; often, it is appropriate to add a new test to the regression suite (Section 5.8.1) before undertaking a specific refactoring step.
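A minimal sketch of this practice: a regression test pins the current behavior of a function before it is refactored, and the same test is run again afterwards. The rebinning functions and the test cases are invented for illustration and do not come from any of the packages mentioned in this paper.

```python
import unittest


def rebin_legacy(counts, factor):
    """Original implementation: sum groups of `factor` adjacent bins."""
    out = []
    i = 0
    while i < len(counts):
        s = 0
        j = 0
        while j < factor and i + j < len(counts):
            s = s + counts[i + j]
            j = j + 1
        out.append(s)
        i = i + factor
    return out


def rebin(counts, factor):
    """Refactored implementation with the same external behavior."""
    return [sum(counts[i:i + factor]) for i in range(0, len(counts), factor)]


class RebinRegressionTest(unittest.TestCase):
    # Inputs chosen to cover an exact fit, a trailing partial group,
    # an empty histogram, and a factor larger than the input.
    CASES = [([1, 2, 3, 4], 2), ([1, 2, 3, 4, 5], 2), ([], 3), ([7], 4)]

    def test_refactoring_preserves_behavior(self):
        for counts, factor in self.CASES:
            self.assertEqual(rebin(counts, factor), rebin_legacy(counts, factor))

    def test_known_values(self):
        self.assertEqual(rebin([1, 2, 3, 4, 5], 2), [3, 7, 5])
```

The tests can be run with `python -m unittest`. Once the refactored version has replaced the legacy one, the comparison test can be dropped, while the test with known values stays in the regression suite.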

In private enterprises, it is often hard for developers to assert the need for refactoring while management presses for new functionality. In our non-profit setting, we must be no less wary of the opposite danger of procrastinating in refactoring without adding value to our product.

At times, the complexity of a software feels so overwhelming that developers will propose to rewrite big parts or all of the “legacy” code [183, p. 5]. We have had bad experiences with such initiatives. Legacy code, for all its ugliness and complexity, “often differs from its suggested alternative by actually working and scaling” [285]. There is considerable risk, well-known as the second-system effect [29], that the new project, in response to all lessons learned from the previous one, is so heavily overengineered that it will never be finished. On the other hand, if there is need for architectural improvements, then piecemeal refactoring without sufficient upfront design is also “terrible advice, belonging to the ‘ugly’ part of agile” [191]. We therefore recommend that major changes to a software framework be proposed in writing, and reviewed, before substantial time is invested into implementation. Innovative ideas may require development of a prototype or a minimum viable product, or some other proof of concept.

For significant renovations, or for writing code from scratch, work should be broken down into packages and iterations such that tangible results are obtained every few months at most, and not much work is lost in case a developer departs. For critical work, more than one developer should be assigned from the outset if possible.

3.2.Development workflow and toolchain

3.2.1.Version control

Source code, and all other human-created artifacts that belong to a software project, should be put under version control [215,275]. Version control (also called revision control or source code management) is supported by dedicated software that preserves the full change history of a file repository. With each commit, the timestamp, the author name and a message are logged. Any previous state of any file can be restored. This is useful when some modification of the code either did not meet expectations or broke functionality. For particularly difficult bug hunts, bisection on the history can help.
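The idea behind bisection can be sketched in a few lines. The function below is not Git's implementation; it only illustrates the binary search that `git bisect` automates, assuming a linear history in which the first commit is known to be good, the last one is known to be bad, and every commit after the first bad one is also bad. The commit labels and the `is_good` predicate are hypothetical.

```python
from typing import Callable, Sequence


def first_bad_commit(commits: Sequence[str],
                     is_good: Callable[[str], bool]) -> str:
    """Return the earliest commit for which is_good() is False.

    Assumes commits[0] is good and commits[-1] is bad, so that neither
    of them needs to be tested again.
    """
    lo, hi = 0, len(commits) - 1  # invariant: commits[lo] good, commits[hi] bad
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if is_good(commits[mid]):
            lo = mid   # the defect was introduced after mid
        else:
            hi = mid   # the defect was introduced at mid or earlier
    return commits[hi]


if __name__ == "__main__":
    history = [f"c{i}" for i in range(10)]
    # Stand-in for "check out the commit, build it, and run the failing test".
    print(first_bad_commit(history, lambda c: int(c[1:]) < 6))
```

Because the search interval is halved in every step, the number of builds to be tested grows only logarithmically with the length of the history.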

Working habits can and should change once version control is ensured. One no longer needs to care about safety copies. One can take more risks, such as using automatic editing tools, as it is easy to revert failed operations. Since deleted code can easily be restored, there is hardly a good reason left for commenting out unused code or for maintaining unused files [172]. And most importantly, version control makes it safe and easy for developers to work in parallel on branches of the code. Conflicting edits have to be resolved before a branch is merged back into the common trunk.

We recommend the version control software Git [35,43,95], written by Linux founder Linus Torvalds [298], which is the de facto standard for new projects in many domains, including ours. The dominance of Git is cemented by repository managers like GitHub and GitLab (Section 3.2.2). For a curated list of Git resources, see [13].

3.2.2.Repository manager

It is straightforward to install and run a Git server on any internet facing computer. The non-trivial restriction is “internet facing”, which requires a permanent URL and appropriate firewall settings. For collaborative software development it is convenient to use a web-based repository manager that combines Git with an issue tracker and additional functionality for work planning, continuous integration (CI, Section 3.2.3), code review (Section 3.2.5), and deployment (Section 3.2.6).

The best known of these services is GitHub [96]. As for many other cloud services, it has a “freemium” business model, offering basic services for free, especially for open-source projects, and charging money for closed projects or when additional features are required. An interesting alternative is GitLab which, beyond offering freemium hosting [100], also releases their own core software under a freemium model. New features first appear in an Enterprise edition, but after some time they all go into an open-source release [101]. Some important creators of open-source software like Kitware (CMake, ParaView, VTK [107]) are running their own GitLab instance, and so do several of our institutions [105,106,108]. For collaborative projects it may be argued that hosting on a “neutral ground” cloud service is preferable for avoiding perceived ownership by a single institution.

In spite of some differences in terminology and in recommended work flows, GitHub and GitLab are very similar. Each of them is used through a terse, no-nonsense web interface that appeals to power users but presents a certain barrier to newcomers [98,102]. As external users are more likely to be familiar with GitHub, it may help to mirror GitLab repositories at GitHub [103].

3.2.3.Workflow; continuous integration, delivery, and deployment

In a small or very stable software project, where no edit conflicts are to be expected, all development may take place in one single main (or master) branch. If more than one developer is contributing at the same time, then work is usually done in distinct feature branches of the code. At some point, successful developments are merged back into a common branch. Before the merger is enacted, possible edit conflicts must be resolved, integration tests must pass, and the changes should be reviewed.

Fig. 1.

(a) Development workflow according to the Git Flow model [65,186]. Feature branches are merged into develop. At some point, a release branch is started, which is frozen for new features. The release (yellow disk) is published after the release branch is merged into main. Occasionally, a hotfix is applied to main, and merged back into develop. (b) Development workflow with Continuous Integration (shortlived feature branches, frequent mergers) and Continuous Delivery (frequent releases, taken directly from the develop branch). (c) Ditto, but without develop branch; all changes go directly into main. This is promoted under the name Trunk Based Development [120]. (d) Workflow with Continuous Deployment, where the application is deployed after each successful merger (colored disks). If a critical bug is detected (red exclamation mark), and attributed to a recent merger (red disk), then it is easy to reset main to the last unaffected software state, and deploy that one.

![Fig. 1](https://content.iospress.com:443/media/jnr/2022/24-1/jnr-24-1-jnr220002/jnr-24-jnr220002-g007.jpg)

The project specific workflow regulates the details of this. For quite a while, best practice was a workflow proposed in 2010 [65] and later termed Git Flow [186], summarized in Fig. 1a. This workflow is still appropriate if there is need to freeze a release branch during a period of manual testing.

More recently, the trend has gone towards shorter lived side branches, and more frequent integration into the common trunk. This is called Continuous Integration (CI) [224]. It heavily depends on fast and automated build and test procedures, discussed in Section 3.2.4. If CI procedures and developer discipline ensure that the common trunk is at any time in a usable state, then it takes little additional automation to build all executables after each merger, including installers, and other deliverables that would be needed to deliver a new software version to the users. This is called Continuous Delivery (CD) [224], sketched in Fig. 1b. Automation of release procedures should also extend to the documentation, though human written content still needs to be kept up to date by humans.

With CI/CD, the old open-source advice “release early, release often” [246] is no longer thwarted by tedious manual release procedures, and we can “deploy early and often” [22]. On the other hand, our end users who have to install the data analysis software on their own computers may perceive frequent releases more as an annoyance than as a helpful service. Each project needs to find the right balance.

When there are no more feature freezes and release branches, then there is no strong reason left to maintain separate develop and main branches. Rather, all changes can be merged directly into main, as sketched in Fig. 1c. This is promoted under the name Trunk Based Development [120].

Confusingly, the abbreviation CD is also used for Continuous Deployment [224], which goes beyond Continuous Delivery in that there are no releases anymore. Instead, new software versions are automatically deployed with high frequency, possibly after each single successful merger into the common trunk, and with no human intervention. One advantage is the ability to react swiftly and minimize damage after a critical bug is discovered (Fig. 1d). Arguably, Continuous Deployment is the best choice whenever deployment is under our own control. This is the case for cloud services (Section 5.2.1), or when data reduction software is only run on local machines.

3.2.4.Build and test automation

Continuous Integration (Section 3.2.3) depends on automation of build and test procedures. To control these procedures, some projects still use dedicated CI software like Jenkins. Nowadays, essential CI control is also provided by repository management software (RMS, Section 3.2.2). When a developer submits a Merge Request (GitLab) or Pull Request (GitHub), then the RMS (or other CI controller) launches build-and-test processes on one or several integration servers. Only if each of them has terminated successfully will the submitted change set be passed on for code review (Section 3.2.5) and then for merger into the common trunk (develop or main, Section 3.2.3).

Usually, the modified software has to be built and tested under all targeted operating systems, using as many integration servers, either on distinct hardware or in containers (e.g. Docker containers [63]). On each integration server a light background process runs, called the runner [97,104]. The runner frequently queries the RMS for instructions. Upon a Merge (Pull) Request, the RMS finds an available runner for each target platform and sends each of these runners the instruction to launch a new job. This then causes the runners to fetch the submitted change set, compile and build the software, run tests and possibly perform further actions according to a customized script. The progress log is continuously sent back to the RMS. Upon completion of the job, the runner sends the RMS a green or red flag that allows or vetoes the requested merger.
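The interplay between RMS and runners can be illustrated with a toy model. The following sketch is not the API of GitLab, GitHub or any other RMS; the names `Runner`, `dispatch_merge_request` and `steps_for` are hypothetical, and real runners poll the RMS over the network instead of being called directly. It only shows the gating logic: every target platform must report a green flag before the merger is allowed.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Runner:
    """Toy stand-in for a CI runner bound to one target platform."""
    platform: str
    log: List[str] = field(default_factory=list)

    def run_job(self, steps: Dict[str, Callable[[], bool]]) -> bool:
        """Run the steps in order; return False (red flag) at the first failure."""
        for name, step in steps.items():
            ok = step()
            self.log.append(f"{self.platform}: {name} {'ok' if ok else 'FAILED'}")
            if not ok:
                return False
        return True  # green flag


def dispatch_merge_request(runners, steps_for) -> bool:
    """Launch one job per runner; allow the merger only if all flags are green."""
    flags = [runner.run_job(steps_for(runner.platform)) for runner in runners]
    return all(flags)


def steps_for(platform: str) -> Dict[str, Callable[[], bool]]:
    """Hypothetical job script; here the test step fails on one platform."""
    return {
        "fetch": lambda: True,
        "build": lambda: True,
        "test": lambda: platform != "windows",
    }


runners = [Runner("linux"), Runner("macos"), Runner("windows")]
merge_allowed = dispatch_merge_request(runners, steps_for)
```

In this example the merger is vetoed because one of the three platforms fails its tests, and the log of that runner records which step failed.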

Groups or projects need to decide whether to run CI on their own hardware or in the cloud. For small projects the workstations under our desks may suffice. If there are several developers in a project, more fail-safe hardware is in order; if builds or tests are taking more than a few minutes, it is desirable to use a powerful multi-processor machine.

Alternatively, we could run our CI/CD processes on preconfigured cloud servers that are typically offered as freemium services [312]. Specifically, at the time of writing this paper, both GitHub and GitLab offer a practically unlimited number of build/integration/deployment jobs to be run on their free accounts. This may save us from buying and maintaining hardware, but at the expense of less flexibility and occasional processing delays, as free services are queued with low priority when computing centers are busy with paid jobs. Whether local or cloud based CI is less onerous to set up and keep running is an important question, which we must leave open.

Since the tests are an important part of a project’s code base, we discuss them in more detail below, in the section on software requirements (Section 5.8).

3.2.5.Change review

Projects should define rules for reviewing proposed changes. Typically, a review is required for each Merge (Pull) Request. It takes place after all tests passed and before the merger is enacted. Repository management software (RMS, Section 3.2.2) provides good support for annotations and discussions.

Usually, the invitation to review a changeset goes to the other members of the team. The main purpose of code review [14,253] is quality assurance: prevent accidents, discover misunderstandings, maintain standards, improve idiom, keep the code readable and consistent. In some cases, the reviewer should build and run the changed code to check its validity, performance or other technical aspects.

Reviewers must be mindful not to hurt human feelings, the more so as all comments in our open-source repository management systems will remain worldwide readable indefinitely. What language is appropriate is very much culture dependent, and should be attuned to the reviewee. Improper bluntness can start undesirable power games [60]. Questions are better than judgements [122]. As soon as general principles, esthetic concerns or emotions get involved, it is advisable to change the medium, talk with each other, and possibly ask other developers or superiors for pertinent rules or specific guidance.

Besides quality assurance and education of the submitter, another purpose of code review is knowledge transfer from the submitter to the reviewer who is to be kept informed about changes in the code.

The RMS can be configured such that explicit approval from authorized parties is needed before a merger can be enacted. In this respect, habits and opinions vary across our groups and projects. Some enforce a strict four-eyes principle, either through RMS configuration or convention, while others entrust experienced developers with the discretion to merge trivial or recurring changes without waiting for formal approval.

3.2.6.Releases

A well-known adage from the early days of the open-source movement recommends “Release early, release often.” [232,246]. If a software stagnates without fresh releases for more than a year, users will wonder whether the project is still alive. In contrast, frequent releases can be inconvenient for users who can end up spending considerable time reinstalling the software and adapting to its changes. Typically, our projects are aiming for two to four releases per year. The frequency might increase if software is deployed as a cloud service (Section 3.2.3). Release previews may be sent out to special users for extended manual testing.

Avoid changes that break other people’s software or analysis pipelines [132,133,232]. If such changes become inevitable, discuss them in advance with your users, communicate them clearly, bundle them in one single release, and mark them by an incremented major version number [231]. If backward compatibility cannot be maintained it might still be possible to provide conversion scripts for user inputs.
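The version numbering rule can be made explicit in a few lines. The sketch below assumes a three-part MAJOR.MINOR.PATCH scheme; the function name `bump_version` and the change labels 'breaking', 'feature' and 'fix' are chosen for this illustration only. The one rule taken from the text above is that a compatibility-breaking release increments the major number.

```python
def bump_version(version: str, change: str) -> str:
    """Return the next version number in MAJOR.MINOR.PATCH form.

    change is 'breaking', 'feature' or 'fix' (labels chosen for this sketch).
    """
    major, minor, patch = (int(part) for part in version.split("."))
    if change == "breaking":
        # Breaking changes are bundled in one release with a new major number.
        return f"{major + 1}.0.0"
    if change == "feature":
        return f"{major}.{minor + 1}.0"
    if change == "fix":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change!r}")
```

A user who sees the major number change then knows to read the change log before upgrading an analysis pipeline.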

It is good practice for a project to have a low-traffic moderated mailing list. In particular, this list should be used for announcing new releases. The announcement should contain an accurate but concise description of changes. The same information should also be aggregated in a change log (e.g. a file called “CHANGELOG” in the top-level directory). It “should be easily understandable by the end user, and highlight changes of importance, including changes in behavior and default settings, deprecated and new parameters, etc. It should not include changes that, while important, do not affect the end user, such as internal architectural changes. In other words, this is not simply the annotated output of git log” [118].

To facilitate citation, it is advisable to mint a digital object identifier (DOI) for each release. This can be accomplished using a free cloud service [77]. The most frequently used service, Zenodo [330], can be automatically triggered from GitHub.

4.Functional requirements

Functional requirements specify how the software shall transform input into output [281]. Obviously, these requirements are different for each application. Nonetheless, some fairly generic requirements can be formulated with respect to the more formal aspects of software control, data formats, logging, and plotting.

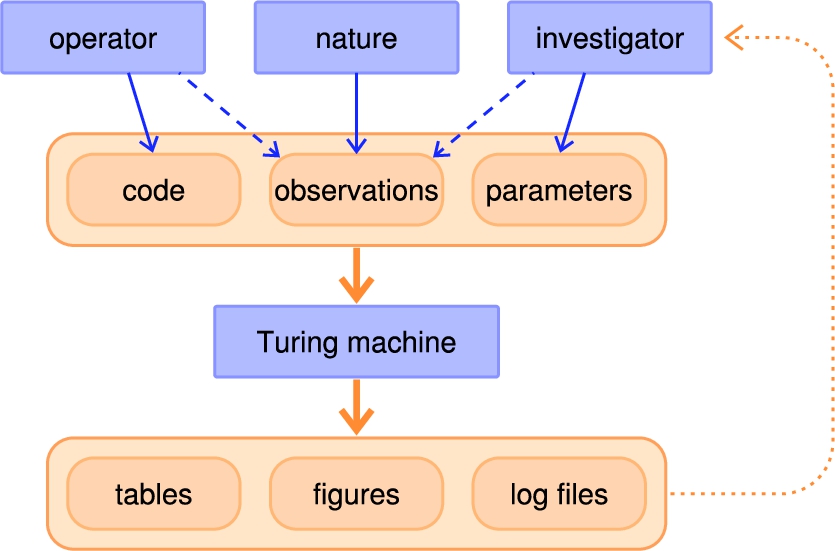

4.1.User interfaces

From the standpoint of theoretical computer science there is no fundamental difference between software, data, and parameters: they are all input to a Turing machine [134, Section 3.2], as outlined in Fig. 2. In practice, however, it matters that code, data, and parameters have a different origin and variability. Software for data reduction and analysis is ideally provided by the instrument operators, and is long-term stable with occasional updates. Data sets are immutable once generated; their format is defined by the instrument operators, their content comes from an experiment that is steered by an instrument user. Parameterization is chosen, often iteratively, by the investigators who thereby adapt the generic software to their specific needs. There are different ways to let users control the software, notably graphical user interface (GUI), shell commands, command-line interface (CLI), application programming interface (API) for scripting, and configuration files, to be discussed in the following subsections.

Fig. 2.

Agents (blue) and data (orange) in experiment analysis. While code, experimental data, and parameters are exchangeable from the standpoint of theoretical computer science, they have different lifetime and are under control of different agents (instrument operator vs instrument user). The dotted feedback arrow indicates that the investigator may refine the parameterization iteratively in response to the output.

4.1.1.Graphical user interface

A well-designed GUI gently guides a user through a workflow, organizes options in a clear hierarchy of menus, and provides a natural embedding for data visualization and parameter control. A GUI tends to have a flatter learning curve than a text-based interface, to the point that users familiarize themselves with a GUI through trial and error rather than by reading documentation.

These advantages are so strong that almost all modern consumer software is steered through a GUI. Most industry built laboratory instruments are also provided with some kind of GUI. This conditions the expectations of our users, who tend to regard a text-based interface as outdated and impractical.

On the downside, serial data processing through a GUI can be tedious. Developers should be prepared to meet user demands for automating repetitive workflows. When there is need for high flexibility and for programmatic constructs like loops and conditionals, then it is not advisable to stretch the limits of what a GUI can do; rather we should provide a scripting interface – either instead of a GUI, or complementary to it.

Be warned that a GUI is difficult and time-consuming to develop and maintain, the more so if developers from a science background are not trained for this kind of work. One hard problem is designing views and workflows to provide a good user experience, another one is getting the software architecture right. As several data representations (views) may need to change in response to user actions or external events, a naive use of the signalling mechanism provided by a GUI toolkit like Qt (Section 5.4.2) can result in hopeless spaghetti [268]. Cyclic dependences can stall or crash the GUI in ways that are difficult to debug. GUI tests take extra effort to automate (Section 5.8.6).

Therefore, we recommend thinking carefully before allocating scarce resources to a GUI. A GUI is clearly a useful tool for supporting interactive, heuristic work, especially if the user is to interact with visualized data, e.g. by setting masks in histograms. For more involved visualization tasks, however, it may be best to refer users to some of the outstanding dedicated tools that exist already, especially so for imaging applications.

4.1.2.Shell commands

If a program for inspecting or transforming data does not require interactive control, then it should be packaged as an independent application that can be launched from the operating system’s command prompt. Parameters, including paths of input and output files, can be supplied as command-line arguments.

Use cases include tabulating metadata or statistics from a set of raw data, summarizing contents of a binary file and transforming data between file formats. A number of such tools are available for the HDF5 binary format [124], and more specifically for the NeXus format [304, Ch. 7].
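As an illustration, a small statistics tool of this kind could be structured as follows. This is a minimal sketch using the `argparse` module of the Python standard library; the tool name `colstats`, its options and the plain-text input format are hypothetical and do not refer to any existing HDF5 or NeXus utility.

```python
import argparse
import statistics
import sys


def build_parser() -> argparse.ArgumentParser:
    """Declare the command-line arguments (all names are hypothetical)."""
    parser = argparse.ArgumentParser(
        prog="colstats",
        description="Print row count and column means of a numeric text table.")
    parser.add_argument("infile",
                        help="path of the whitespace-separated input table")
    parser.add_argument("-o", "--output", default=None,
                        help="path of the output file (default: standard output)")
    parser.add_argument("--skip", type=int, default=0,
                        help="number of header lines to skip")
    return parser


def summarize(lines, skip=0):
    """Return (row count, list of column means) for the given text lines."""
    rows = [[float(x) for x in line.split()]
            for line in lines[skip:] if line.strip()]
    means = [statistics.fmean(column) for column in zip(*rows)]
    return len(rows), means


def main(argv=None) -> int:
    """Entry point; argv defaults to the arguments given at the command prompt."""
    args = build_parser().parse_args(argv)
    with open(args.infile) as f:
        nrows, means = summarize(f.readlines(), args.skip)
    means_text = " ".join(f"{m:g}" for m in means)
    report = f"rows: {nrows}\nmeans: {means_text}\n"
    if args.output:
        with open(args.output, "w") as f:
            f.write(report)
    else:
        sys.stdout.write(report)
    return 0
```

Keeping the computation (`summarize`) separate from argument parsing and file handling (`main`) makes the core functionality testable without touching the file system. To turn the module into a command, the installer would register `main` as entry point, or the file would end with `sys.exit(main())`.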

4.1.3.Command-line interface

An application with command-line interface (CLI) is associated with a terminal window and prompts the user for keyboard input. It can take the form of a dialogue where the user has to answer questions, or it provides a single prompt where users enter commands defined by the software. Hybrid forms also exist. A CLI is much easier to develop than a GUI, and therefore is appropriate for prototypes and niche applications.

A CLI in dialogue form (and equivalently, a GUI based dialogue with multiple popup windows) is acceptable for configuration work that rarely needs to be repeated. As a simpler alternative, however, consider using instead a configuration file that can be modified in a text editor (Section 4.1.5).

A CLI with menu prompt can be a powerful tool that gives users full control through concise commands. The disadvantages are a steep learning curve and the risk of feature creep: To save users from repetitious inputs, the concise commands tend to evolve into a Turing-complete ad-hoc language “that has all the architectural elegance of a shanty town” [201, p. 161]. It is therefore preferable to base a CLI on an established general-purpose scripting language, as discussed in the next subsection.

4.1.4.Python interface

Python is the most widely known programming language in large areas of science [216]. It is taught in many universities, and comes with lots of libraries and modules for scientific applications (Section 5.4.2). Python scripts can also be run from the popular Jupyter notebook [75,116,217]. Such a notebook is able to display a large variety of graphical elements like charts, plots and tables. Notebooks are usually accessed through a web browser, and are therefore natural candidates for “software as a service” (Section 5.2.1). As many data treatment programs already support Python, consistent choice of this language favors interoperability so that data acquisition, data reduction, analysis and visualization can be entirely controlled through Python.

For these reasons, it is a good choice to equip data treatment software with an interface that can be called from a Python script, interpreter, or notebook. To this end, we package our software (potentially written in another, lower-level language like C, C++ or Fortran) as a module, which can be imported by Python. The functionality of our software is exposed through class and function calls that form an application programming interface (API). Technically, our users take the role of application programmers. To get them started, it is advisable to provide tutorials with lots of example scripts.
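The following sketch indicates what such an API could look like from the user's side. To keep the example self-contained, the class `Histogram` and the functions `normalize` and `subtract` are written in pure Python; they are hypothetical stand-ins for functionality that a real package would typically implement in a compiled module.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Histogram:
    """Hypothetical data container exposed to user scripts."""
    counts: List[float]
    monitor: float  # incident-flux monitor count


def normalize(h: Histogram) -> Histogram:
    """Normalize counts to the incident flux."""
    return Histogram([c / h.monitor for c in h.counts], 1.0)


def subtract(sample: Histogram, background: Histogram) -> Histogram:
    """Subtract a background bin by bin (both inputs must be normalized)."""
    return Histogram(
        [s - b for s, b in zip(sample.counts, background.counts)], 1.0)


# A user script: the same reduction is applied to a series of runs.
background = normalize(Histogram([2.0, 2.0, 4.0], monitor=2.0))
runs = [
    Histogram([10.0, 20.0, 30.0], monitor=10.0),
    Histogram([4.0, 6.0, 8.0], monitor=2.0),
]
reduced = [subtract(normalize(run), background) for run in runs]
```

The last line is the kind of loop over many data sets that is tedious in a GUI (Section 4.1.1) and trivial in a script.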

4.1.5.Configuration files

Parameterizing software through configuration files is cheap for developers and convenient for users, provided it is restricted to parameters that change only rarely. Good application cases are the customization of a software to local needs or personal taste.

An initial configuration should be provided by the software. It should be a text file in a simple format like INI or TOML, where each parameter is specified by a key/value pair. It is important that the format allows for ample comments for each parameter. Examples for configuration files with rich comments include the Doxyfile that steers the documentation extractor Doxygen [64], and many of the files in the /etc directory of a Unix system.
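A commented INI file can be read with the `configparser` module of the Python standard library, as in the following minimal sketch. The section names, keys and values are invented for illustration.

```python
import configparser

# Initial configuration as it might be shipped with the software.
# All sections and keys are hypothetical.
DEFAULT_CONFIG = """\
# Lines starting with '#' are comments that explain each parameter.

[paths]
# Directory searched for raw data files.
data_dir = /data/raw

[plot]
# Color map used for two-dimensional histograms.
colormap = viridis
# Use a logarithmic intensity scale (yes/no).
log_scale = yes
"""


def load_config(text: str) -> configparser.ConfigParser:
    """Parse configuration text given in INI format."""
    config = configparser.ConfigParser()
    config.read_string(text)
    return config


config = load_config(DEFAULT_CONFIG)
data_dir = config["paths"]["data_dir"]
log_scale = config["plot"].getboolean("log_scale")
```

In an application, the text would of course come from a file in the user's configuration directory (for example through `config.read(path)`) rather than from a string constant.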

4.2.Data formats and metadata

Interoperable data formats are a long-standing concern in our community. Considerable effort has gone into the NeXus standard [162], used for raw data storage at many neutron, x-ray, and muon instruments. While NeXus is built on top of the binary HDF5 file format [123], some subcommunities prefer human-readable text formats. Reflectometry experts, for example, have recently agreed on a hybrid format for reduced data [203] that combines metadata in YAML [326] with a traditional plain table of q, I,

As developers of downstream data treatment software, we can adapt our loader functions to whatever raw data format is being used. Our concern is not so much the file format, but the completeness, unambiguousness, and correctness of metadata. If a data treatment step needs metadata that are not contained in the input data, then we must prompt the user to manually supply the missing information. This is tedious and error-prone. Therefore we should liaise with the developers of upstream instrument control and data acquisition software to ensure that all necessary metadata are provided.

Occasionally, automatically acquired metadata are incomplete or incorrect, perhaps because some state parameters were not electronically captured, or were captured incorrectly. Should data treatment programs provide means to correct such errors? We rather recommend that metadata correction and enrichment be implemented as a separate step that precedes data reduction and analysis. Our rationale is that data correction is a one-time action, whereas data reduction and analysis software may be run repeatedly as users are working towards reliable fits and informative plots; it would be inconvenient for them to enter the same raw data corrections again and again when rerunning reduction and analysis. Furthermore, if experimental data are to be published under FAIR principles (Section 1.5), then both the automatically acquired and the corrected or enriched metadata need to be findable.

File formats should have a version tag so that downstream software recognizes when the input data structure has changed. We may need version-dependent switches to support all input of any age. Modern self-documenting formats make it possible to add new data or metadata fields without breaking loaders. However, once these new entries are expected by our software, we need a fallback mechanism for older files.
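A loader with a version-dependent switch and a fallback for older files might look as follows. This is a sketch under stated assumptions: the JSON-based file layout, the field names and the convention that untagged files count as version 1 are all invented for this example.

```python
import json

CURRENT_FORMAT_VERSION = 2


def load_reduced(text: str) -> dict:
    """Parse a (hypothetical) JSON data file of any supported format version."""
    raw = json.loads(text)
    # Assumption of this sketch: files without a tag predate versioning.
    version = raw.get("format_version", 1)
    if version > CURRENT_FORMAT_VERSION:
        raise ValueError(
            f"file format version {version} is newer than this loader")
    data = {"q": raw["q"], "intensity": raw["intensity"]}
    if version >= 2:
        # Field introduced in format version 2.
        data["resolution"] = raw["resolution"]
    else:
        # Fallback for older files: mark the resolution as unknown.
        data["resolution"] = [None] * len(raw["q"])
    return data
```

Rejecting files that are newer than the loader is a deliberate choice here: silently ignoring unknown structure could produce wrong results that look valid.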

4.3.Log files vs provenance and warnings

Decades ago, it was quite standard for batch processing software to deliver not only numeric results but also a file that logged the actions of the software and associated output during data treatment. With modern GUI or scripting interfaces, writing these log files has somewhat fallen into disuse, and their original purpose is now frequently better served by other mechanisms.

As discussed in connection with Fig. 2, data treatment is conditioned by software, data, and parameters. These inputs make up the provenance [211] of the output data. To document provenance efficiently, parameters ought to be stored verbatim, whereas software and data should be pointed to by persistent identifiers. Instead of writing provenance information to a log file, it is far better to store it as metadata in each output artifact. Downstream software reads these metadata along with data input, adds further provenance information, and stores the enriched metadata with its output. Thereby FAIR archival is possible after each data processing step.
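The chaining of provenance records through successive processing steps can be sketched as follows. The function name `add_provenance`, the metadata keys and the identifiers starting with `example:` are placeholders invented for this illustration; a real implementation would use the metadata schema of the chosen file format and genuine persistent identifiers.

```python
import json


def add_provenance(metadata: dict, software: str, software_id: str,
                   input_ids: list, parameters: dict) -> dict:
    """Return a copy of metadata with one more provenance record appended."""
    record = {
        "software": software,
        "software_id": software_id,      # persistent identifier of the release
        "inputs": list(input_ids),       # persistent identifiers of input data
        "parameters": dict(parameters),  # stored verbatim
    }
    enriched = dict(metadata)
    enriched["provenance"] = list(metadata.get("provenance", [])) + [record]
    return enriched


# Two consecutive processing steps, each enriching the metadata it received.
raw_meta = {"instrument": "hypothetical reflectometer"}
reduced_meta = add_provenance(raw_meta, "reducer", "example:reducer-1.2",
                              ["example:raw-0001"], {"rebin": 0.01})
fitted_meta = add_provenance(reduced_meta, "fitter", "example:fitter-3.0",
                             ["example:reduced-0001"], {"model": "slab"})
serialized = json.dumps(fitted_meta, indent=2)
```

Since each step returns a new dictionary, the metadata of upstream artifacts remain unchanged, and the final output carries the full processing history.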

Alternatively or additionally, provenance information can also be preserved in the form of a script that allows the entire data treatment to be replayed. Such a mechanism has been successfully implemented in Mantid, in BornAgain, and in the tomography reconstruction packages MuhRec [154] and Savu [310]. Maintaining a step-by-step history of user actions can also help to implement undo/redo functionality, which is an important requirement for a user-friendly GUI.

Data treatment usually involves physical assumptions and mathematical approximations that are justified for certain ranges of control parameters and certain types of experimental data. Hopefully, code functionality will have been validated for a representative suite of input data, but it should be recognised that it may fail when applied to data that were not anticipated by the developers. This is a particularly serious problem in a research context where versatile instruments are used to investigate new physical systems outside of the domain envisaged by the designer. As long as a data treatment software executes seamlessly, its output will be believed by most users. Software authors therefore bear a heavy responsibility. We need to make our software robust in the hands of basic or intermediate users [168]. We need to detect invalid or questionable computations and warn users of the problem. Warnings written to a log file or a verbose monitor window are easily overlooked. We recommend clear and strong signalling, for instance by green-yellow-red flags, to mark output in a GUI as safe, questionable, or invalid.
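One possible way to attach such flags to computed results is sketched below. The names `Validity`, `check_range` and `worst`, as well as the idea of a trusted and a tolerated parameter range, are assumptions made for this illustration; how validity is actually determined depends on the physics of each application.

```python
from enum import Enum


class Validity(Enum):
    """Traffic-light flag attached to an output."""
    SAFE = "green"
    QUESTIONABLE = "yellow"
    INVALID = "red"


def check_range(value, trusted, tolerated) -> Validity:
    """Classify a control parameter against two (low, high) ranges."""
    low, high = trusted
    if low <= value <= high:
        return Validity.SAFE
    low, high = tolerated
    if low <= value <= high:
        return Validity.QUESTIONABLE
    return Validity.INVALID


def worst(flags) -> Validity:
    """Combine several flags; the most severe one determines the result."""
    severity = [Validity.SAFE, Validity.QUESTIONABLE, Validity.INVALID]
    return max(flags, key=severity.index, default=Validity.SAFE)
```

A GUI could then color each output according to `worst(...)` of all checks that contributed to it, so that a single questionable approximation is not lost among many safe ones.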

Finally, the log files mentioned earlier can be the dump for background information that does not belong to the regular output artifacts. A good example is the log file written by the text processor TeX. Normally, it is safe to ignore such logs; however, users can consult them if some problem needs closer investigation.

4.4.Plots

Whenever a software includes some means of data visualization, there will be user demand to preserve plots for internal documentation or for publication. If this is not properly supported by software, users will fall back on desperate means such as taking a screen copy, which is likely to be a crudely rasterized image that will deteriorate in subsequent image processing. We therefore recommend that all software provide an export mechanism for every displayed plot. The export options should include a standard vector graphics format to enable later rescaling or editing. Also consider providing an option to export displayed data as numbers to a simple tabular text file so that users can replot the data using a program of their own choice. Another export option could be a Python script that draws a figure by sending commands to a plot API such as Matplotlib [140], Bokeh [151], or Plotly [280]; users can then edit the script to adapt the figure to their needs.
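Two of these export options, the tabular text file and the editable plot script, can be sketched with the standard library alone. The function names `export_table` and `export_script` are hypothetical. The generated script assumes that the user has Matplotlib installed; it saves the figure in a vector format chosen through the file extension.

```python
def export_table(x, y, x_label="x", y_label="y") -> str:
    """Return the plotted data as a tab-separated text table."""
    lines = [f"# {x_label}\t{y_label}"]
    lines += [f"{xi!r}\t{yi!r}" for xi, yi in zip(x, y)]
    return "\n".join(lines) + "\n"


def export_script(x, y, x_label="x", y_label="y",
                  outfile="figure.svg") -> str:
    """Return the text of a Matplotlib script that redraws the plot."""
    return "\n".join([
        "import matplotlib.pyplot as plt",
        "",
        f"x = {list(x)!r}",
        f"y = {list(y)!r}",
        "fig, ax = plt.subplots()",
        'ax.plot(x, y, "o-")',
        f"ax.set_xlabel({x_label!r})",
        f"ax.set_ylabel({y_label!r})",
        f"fig.savefig({outfile!r})  # format inferred from the file extension",
        "",
    ])
```

Embedding the data in the script keeps the export self-contained; for large data sets it would be more sensible to let the script read the exported table instead.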

Certain software products boast of their ability to export “publication-grade graphics.” Given the high variability of graphics quality in actual publications, this is a rather empty promise. Users who strive for above-average quality [300] may request (among other things) the following capabilities: free selection of plot symbols and colors [163]; free selection of aspect ratios [2]; sublinear scaling of supporting typefaces with image size; labels with Greek and mathematical symbols, subscripts and superscripts; free placement of a legend and other annotations in a plot; various ways to combine several plots into one figure. Clearly, it would be wasteful to implement all this functionality for each specialized piece of data reduction and analysis software. Instead, it is better to link our specialized software with a generic plotting library (possibly one of the aforementioned [140,151,280]) that is flexible enough to meet all the above demands and more.

5.Non-functional requirements

5.1.License

Choosing a license should be among the very first steps in a software project. Changing the license after the first code preview has gone public would seed confusion if not conflict. Changing the license after the first external contribution has been merged into the code can prove outright impossible. An example of extreme precaution is given by the Python Software Foundation [238], where new contributors need to sign a license agreement and to prove their identity before their patches are considered. While this would seem exaggerated in our field, it nonetheless gives a correct idea of how essential it is for the juridical safety of a software that all contributions be made under the same, clearly communicated licensing terms [171].

We take for granted that all institutionally supported projects in our field will be published as free and open source software (FOSS), as the advantages for the facilities, the users, the scientific community, and the developers, are overwhelming [16,93,141,150,198,206,232]. Licenses must not include popular “academic” clauses like “non profit use” or “for inspection only”, which nullify “the many significant benefits of community contribution, collaboration, and increased adoption that come with open source licensing” [198]. Choose the license among those approved by the Open Source Initiative (OSI) [204]. Only OSI-approved licenses will give free access to certain services [307]. Moreover, to minimize interpretive risks [248, Ch. 19.4] and to facilitate future reuse of software components by other projects, avoid the more exotic ones of the many OSI approved licenses. Preferably choose a license that is already widely used in our field. We have no common position on the long-standing debate as to whether or not the GNU General Public License [109] with its “viral” [90,283] precautions against non-free re-use is a good choice. Working group 4 of LENS [167] and UK Research and Innovation [302] are recommending the BSD 3-clause license [205]. For textual documentation (as opposed to executable computer code), the Creative Commons licenses [54,283] are widely used.

The software license should be clearly communicated in the documentation and in a file called “COPYING” or “LICENSE” in the top-level source directory. Additionally, some software projects insist that each source file have a standardized header with metadata such as copyright, license and homepage.

5.2.Technology choices

5.2.1.Cross-platform development vs software as a service

Before starting code development, decide which operating system(s) shall be supported, since this has influence upon the choice of programming languages, tools and libraries. The decision depends on the expected workflow. For some instruments, data reduction is always carried out in the facility, during or immediately after the experiment. In this case, it suffices to support just a single platform preferred by the facility. Otherwise, and especially for later data analysis, users will run the software either on their own equipment or remotely on a server of the experimental facility. To support installation on user computers, we usually need to develop cross-platform, and provide installers or packages for Windows, MacOS and Linux alike (Sections 5.10.3, 5.10.4).

However, the global trend in research, as in other fields, goes towards cloud computing. In the “software as a service” (SaaS) model, a user runs a “thin client”, most often just a web browser, to access an application that is executed in the host facility or a third-party server farm. Where raw data sets become too large to be taken home by the instrument users, SaaS is the only viable alternative to on-site data reduction. Once set up, SaaS will facilitate the data treatment in several ways, with users no longer needing to install the software locally or to download their raw data. In this configuration, software versions are controlled and updated centrally, and we no longer need to assist users in debugging unresolved dependencies and incompatible system libraries. Computations can be made faster by using powerful shared servers with a fast connection to the data source.

Until a few years ago, the user experience with SaaS for data analysis suffered from random delays in interactive visualization. Thanks to faster internet connections and to optimised client-side JavaScript libraries [136,177], this difficulty is essentially solved. A remaining difficulty is the administrative overhead for server operation and load balancing, for authentication and authorisation, and for managing access rights to input and output data. These topics are, for example, being addressed by the European Open Science Cloud [72] and by the joint photon and neutron projects ExPaNDS [74] and PaNOSC [210].

5.2.2.Programming languages

After deciding about the target platforms, and before starting to code, one needs to choose the programming language(s) to be used for the new software. In making this decision, intrinsic qualities of a language such as expressive power or ease of use play a relatively minor role. More important is the interoperability with extant products, social compatibility with the developer and user communities and the ease of recruiting.

Frequently used implementation languages in our field are C, C++, Fortran, Matlab, IDL and Python. Computing groups may need to support all of these, and are reluctant to add any new language to this portfolio. For new projects, the choice is even narrower as most of us would exclude Matlab and IDL for not being open source. The preferred scripting language in our community is Python. Hence, choosing Python as scripting language will facilitate collaboration and interoperability as already discussed in Section 4.1.4 with regard to a user API.

When it comes to the choice between C, C++, and Fortran as languages for speed-critical core computations, our preference goes to C++ mostly because as an object oriented general-purpose language it also is an excellent platform for managing complex data hierarchies and for writing a GUI. For small libraries of general interest, plain C is still worth considering.

To balance the conservatism of this guideline, we need to stay open to emerging alternative languages. Promising candidates include Rust, to deliver us from the technical debt and memory ownership issues of C++ [219], and Julia, which addresses various shortcomings of Python [66,218,329].

5.2.3.Keeping up with language evolution

Programming languages evolve in time. Compatibility-breaking disruptions like the transition from Python2 to Python3 are very rare. More typical are revisions that fix unclear points in the language standard, introduce new constructs and provide new functionality in the standard library.

Developers need to keep up with this evolution. Projects that are under active development should encourage the use of new features once a language revision is supported on all target platforms. In the short term, it may seem wiser to freeze mature code at a certain language version; however, in the longer term, this may impair productivity and will be unattractive for developers. Inevitably then, any long-standing code base will be stylistically heterogeneous. Modularisation and encapsulation can help to keep different strata apart, and as long as a class or a library provides stable functionality through a well documented interface there is no need to modernize the internals.

5.3.Coding style

Here and in the next two sections we collect guidelines that apply to both C++ and Python, and possibly to other languages. Language specific guidelines follow in Sections 5.6 and 5.7.

5.3.1.Code formatting

Code formatting refers to layout decisions that affect the visual appearance of source files, but not the outcome of compilation. In most programming languages, the main degree of freedom is the insertion of white space (blank character and newline) between lexical tokens. White space governs indentation, line lengths, vertical alignment, and the placement of parentheses and braces. A coding style is a set of layout rules for a specific programming language. In C and C++, different combinations of rules for various syntax elements yield a huge number of possible coding styles, some of which have been popularised by books, corporate style guides and important open source projects. In Python, early adoption of the authoritative style guide PEP8 [306] eliminated some variability of layout, but left many finer points open.

Within a project, a binding coding style should be adopted in order to minimize distraction in reading and writing, and to prevent friction between developers. It should be chosen early, and modified only for very good reasons, in order to keep the git history clean. The formatting can and should [260,305] be done automatically by tools like clang-format [38] for C and C++, or yapf [327] for Python, with project-wide parameterization in the top-level files .clang-format and .style.yapf, respectively. To ensure that code changes respect the chosen style, the formatting tools should be run as part of the test suite or from a pre-commit hook [35, Ch. 8.3].
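A pre-commit hook that checks the formatting of staged files could be written along the following lines. This is a sketch, not a tested hook for any particular project: it assumes that `git`, `yapf` and a `clang-format` of version 10 or later (for the `--dry-run` and `--Werror` options) are installed, and it relies on both formatters returning a nonzero exit status when a file deviates from the configured style. The `run` argument exists only so that the logic can be exercised without these tools.

```python
import subprocess
import sys


def check_command(path: str):
    """Return the formatter command that checks path, or None if not covered."""
    if path.endswith(".py"):
        return ["yapf", "--diff", path]
    if path.endswith((".c", ".h", ".cpp", ".hpp")):
        return ["clang-format", "--dry-run", "--Werror", path]
    return None


def staged_files(run=subprocess.run):
    """List the files staged for commit (added, copied or modified)."""
    result = run(
        ["git", "diff", "--cached", "--name-only", "--diff-filter=ACM"],
        capture_output=True, text=True, check=True)
    return result.stdout.splitlines()


def main(run=subprocess.run) -> int:
    """Return 0 if all staged files respect the style, 1 otherwise."""
    failed = []
    for path in staged_files(run):
        command = check_command(path)
        if command is not None and run(command).returncode != 0:
            failed.append(path)
    for path in failed:
        print(f"formatting check failed: {path}", file=sys.stderr)
    return 1 if failed else 0
```

To activate the hook, the script would end with `sys.exit(main())` and be installed as an executable file `.git/hooks/pre-commit`; git aborts the commit when the hook returns a nonzero status.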

5.3.2.English style

For ease of international collaboration, all our writing should be in English. This concerns user-facing artifacts (such as graphical interface, scripting commands, log files and error messages, manual and website) as well as the code base (such as filenames, identifier names and comments) and internal documentation. As our users and developers need reading proficiency in technical English anyway, we would rather not spend scarce resources on translations into other languages. For consistency with external resources (such as operating system, libraries and literature) the spelling should be American [314]. Avoid slang and puns. Allusions to popular and high culture alike are not understood by everybody [286, NL.4].

5.3.3.File and identifier names

Expressive file and identifier names are of prime importance for the readability of large code bases. Use a dictionary and thesaurus to find accurate English designations. Employ grammatical categories consistently, as suggested in this recommendation: “Variables and classes should be nouns or noun phrases; Class names are like collective nouns; Variable names are like proper nouns; Procedure names should be verbs or verb phrases; Methods used to return a value should be nouns or noun phrases” [117].

It happens that a name was initially correct, but intention, scope or function of the designated object have drifted. Code review and refactoring rounds are occasions to get names right. Discussing naming rules and problematic cases can be a rewarding team exercise.

Camel case or connecting underscores can help to keep composite names readable. It is good practice to use typography for distinguishing categories of identifiers. For C++ and Python alike, we recommend the widespread convention that class names are in camel case (SpecularScan), and variables, including function arguments, in snake case (scan_duration) [115,301,306]. For function names, there is no consensus; projects have to choose between snake case and dromedary case (executeScan). Use these typographic devices with moderation. Don’t split words that may be written together in regular text: Prefer wavenumber and Formfactor over wave_number and FormFactor. Keep things simple: Rawfile is better than RawDataFile, Sofa better than MultiButtSupporter [48]. While Hungarian Notation (type designation by prefixes) is now generally discouraged [286, NL.5], [183,194,297], a few prefixes (or postfixes) may be helpful (e.g. m_ for C++ class member variables [301]).
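The typographic conventions can be illustrated by a short Python fragment. Apart from the class name SpecularScan and the variable scan_duration, which are taken from the text above, all names are hypothetical, and the choice of snake case for the method name is just one of the two options a project might adopt.

```python
class SpecularScan:                       # class name: camel case
    """Hypothetical example class, used only to show the naming style."""

    def __init__(self, scan_duration, wavenumber):  # arguments: snake case
        self.scan_duration = scan_duration          # seconds
        self.wavenumber = wavenumber                # one word, as in prose

    def count_rate(self, total_counts):   # returns a value: noun phrase
        return total_counts / self.scan_duration
```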

Directory and namespace hierarchies can help to avoid overlong names. Short names are in order for variables with local scope [286, NL.7], [297]: In a loop, i and j are better than horizontal_pixel_index and vertical_pixel_index. Consider names in context; if names are too similar then they will be confused in cursory reading [286, NL.19], [71,152].

General advice goes against abbreviations [115]: createRawHistogramFile is better than crtRwHstgrFil. However, some domain-specific abbreviations for recurrent complex terms are fine: Prefer SLD and EISF over numerous repetitions of ScatteringLengthDensity or ElasticIncoherentStructureFactor.

5.3.4.Source comments and developer documentation

Documentation is difficult, and hardly ever completely satisfactory. This holds even more for developer-oriented than for user-oriented material. In the short term, developers neglect documentation because it does not help them in coding. In the long run, developer documentation is notoriously unreliable because there are no automatic tests to enforce that it stays in tune with the executable code. This well-known fundamental problem [274] has no single comprehensive solution. We can however recommend the following strategies.

To keep documentation and code synchronized, generate documentation out of the code [190, p. 54]. To document an API, be it user or developer facing, use a standard tool like Doxygen for C++ [64] and Sphinx for Python [26].
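As a minimal sketch of documentation that lives in the code, a Python function may carry a docstring with reStructuredText field lists, which the Sphinx autodoc extension can render into API documentation. The function `rebin` and its parameters are invented for this example.

```python
def rebin(counts, factor):
    """Sum adjacent histogram bins.

    :param counts: bin contents; the length must be a multiple of ``factor``
    :param factor: positive number of adjacent bins merged into one
    :returns: new list with ``len(counts) // factor`` entries
    :raises ValueError: if ``factor`` is not positive or does not divide
        the number of bins
    """
    if factor <= 0 or len(counts) % factor != 0:
        raise ValueError("number of bins must be a multiple of factor")
    return [sum(counts[i:i + factor]) for i in range(0, len(counts), factor)]
```

Since the docstring sits directly above the implementation, a change of the function signature is more likely to be accompanied by a change of its description.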

Strive for the classic documentation style of Unix manual pages: telegraphic but complete. Equally avoid incomprehensibility and condescension. Assume an active reader who is able to deduce obvious consequences [248, Ch. 18.2]. Most importantly, don’t say in comments what can be said in code [286, NL.1], [115,128].

General advice is that comments should explain the intent of code segments rather than their internal workings [286, NL.2]. If the code is structured into files, classes and functions that have one clear purpose (single responsibility principle, Section 5.4.3), and have consistent, accurate and expressive names (Section 5.3.3), then no comment may be needed. The section on comments in the Google C++ style guide [115] gives valuable hints as to which information should be provided in function and class comments.

Developers who are new to a project should seize this opportunity to improve the documentation while familiarizing themselves with extant code [184].

Fig. 3.

Include dependencies between some library components (red frames, blue box) and subdirectories (within the blue box) of BornAgain. Such images, automatically generated by Doxygen [64] and therefore always up to date, provide documentation of a software’s architecture. They are most useful if care is taken to organize directory dependencies as a directed acyclic graph.

![Fig. 3](https://content.iospress.com:443/media/jnr/2022/24-1/jnr-24-1-jnr220002/jnr-24-jnr220002-g028.jpg)

For projects of a certain complexity, a software architecture document is helpful or even indispensable. It “is a map of the software. We use it to see, at a glance, how the software is structured. It helps you understand the software’s modules and components without digging into the code. It’s a tool to communicate with others – developers and non-developers – about the software” [309]. It needs to stay high-level. “It will quickly lose value if it’s too detailed (comprehensive). It won’t be up to date, it won’t be trusted, and it won’t be used” [309]. Any more detailed view of the software architecture should be automatically generated, for instance in the form of the various diagrams produced by Doxygen, like the one shown in Fig. 3.

5.4.Architecture

5.4.1.Modularization

“Decomposition driven by abstraction is the key to managing complexity. A decomposed, modular computer program is easier to write, debug, maintain and manage. A program consisting of modules that exhibit high internal cohesion and low coupling between each other is considered superior to a monolithic one” [294]. If a component has limited scope and complexity, chances increase that users are able to extend its functionality [206].

Large projects should organize their code into several object libraries (or Python modules). A good library structure may reduce the time spent on recompiling and linking, and thereby accelerate development cycles. Functions and classes that handle file, database or network access should be isolated from application specific code by, for instance, putting them into a separate library [170].

As already mentioned in Section 4.1.1, GUI code has its own difficulties. Choose an established design pattern like Model-View-Presenter (MVP) [230] (used by Mantid [180]) or one of its variations (MVC, MVVM [56,271]). These patterns describe a layered architecture where the screen “view” forms a thin top layer with minimal logic. The “model” component must not be confused with the data model of the underlying physics code. GUI and “business logic” (physics) should be strictly separated. The “model” component of the GUI design patterns is only meant as a thin layer that exposes the data structures and functions from the GUI-independent physics module to the “view”.
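The layering may be sketched without reference to any GUI toolkit. In the following hypothetical example, all class and method names are assumptions; a console view stands in for a Qt widget, and a one-line normalization stands in for the physics module.

```python
class ReductionModel:
    """Thin layer exposing GUI-independent physics code to the presenter."""

    def normalized(self, counts, monitor):
        # Stand-in for a call into the GUI-independent physics library.
        return [c / monitor for c in counts]


class ConsoleView:
    """Passive view: displays what it is told to display, no logic."""

    def show_result(self, values):
        print("normalized:", values)

    def show_error(self, message):
        print("error:", message)


class ReductionPresenter:
    """Mediates between view and model; holds the presentation logic."""

    def __init__(self, model, view):
        self.model = model
        self.view = view

    def on_normalize_requested(self, counts, monitor):
        if monitor <= 0:
            self.view.show_error("monitor count must be positive")
            return
        self.view.show_result(self.model.normalized(counts, monitor))
```

Because the presenter only relies on the two `show_` methods, the view can be replaced by a recording stub in unit tests, so that presentation logic is testable without opening a window.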

There should be no cyclic dependencies [170]; this holds equally for linking libraries [185, Ch. 14], for including C/C++ headers from different source directories, and for Python module imports [251].
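Whether a dependency graph is free of cycles can be checked automatically, for instance in a test. The following sketch assumes that the dependencies have already been extracted into a dictionary that maps each component to the components it depends on; the function name and the data layout are choices made for this example. It uses a depth-first search and reports the first cycle found.

```python
def find_cycle(dependencies):
    """Return one dependency cycle as a list of names, or None if acyclic.

    dependencies maps each component to the components it depends on.
    """
    WHITE, GRAY, BLACK = 0, 1, 2   # unvisited, on current path, finished
    color = {}
    path = []

    def visit(node):
        color[node] = GRAY
        path.append(node)
        for dep in dependencies.get(node, ()):
            state = color.get(dep, WHITE)
            if state == GRAY:      # back edge: dep is already on the path
                return path[path.index(dep):] + [dep]
            if state == WHITE:
                cycle = visit(dep)
                if cycle:
                    return cycle
        path.pop()
        color[node] = BLACK
        return None

    for node in dependencies:
        if color.get(node, WHITE) == WHITE:
            cycle = visit(node)
            if cycle:
                return cycle
    return None
```

The recursive formulation is adequate for the few dozen libraries or directories of a typical project; for very deep graphs an iterative search would avoid Python's recursion limit.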

Sometimes, part of our code solves a specific scientific problem and therefore may also be of interest for reuse in a concurrent project or in other application domains. In this case, we recommend configuring the code as a separate library (or Python module), moving it to a separate repository and publishing it as a separate project. For code that includes time-consuming tests this has the additional advantage that it saves testing time in dependent projects.

5.4.2.External components

Wherever possible, we reuse existing code. Preferably, we choose solutions from well established, widely used components that can be treated as trusted modules [311, Section 8.4] for which we need not write our own unit tests. For compiled languages, external components are typically used in the form of libraries that must be linked with our own application; the equivalent in Python are modules that are included through the import statement. In either case a dependency is created that adds to the burden of maintenance (Section 5.10.1) and deployment (Section 5.10.3).

For “dependency hygiene”, it “can be better to copy a little code than to pull in a big library for one function” [223]. Header-only C++ libraries may seem a good compromise in that they create a dependency only for maintenance, not for deployment, but they come at an expense in compile time.

Standard C or C++ libraries for numeric computations include BLAS [315] and Eigen [70] for linear algebra, FFTW for Fourier transform [86,87], and GSL (GNU Scientific Library) [82,110] for quadrature, root finding, fitting and more. In Python, we consistently use NumPy [121,199] for array data structures and related fast numerical routines. The library SciPy [262], built on top of NumPy, provides algorithms “for optimization, integration, interpolation, eigenvalue problems, algebraic equations, differential equations and many other classes of problems” [308].

As for a GUI library, the de facto standard in our community is Qt [243], which started as a widget toolkit and has become a huge framework. It is written in C++, but wrappers are available for a number of other languages, among them Python [244], Go [291] and Rust [252]. Qt, as well as all libraries mentioned in the previous paragraph, is open source. It can be freely used in free and open source projects. As commercial licenses are also available, a broad industrial user base, especially from the automotive sector, will sustain Qt for many years to come.

5.4.3.Design principles

The object-oriented programming paradigm suggests ways of organizing data and algorithms in hierarchies of classes. As object-oriented languages came into wider use, it was learned that additional design principles are needed to achieve encapsulation, cohesion and loose coupling, and thereby arrive at a clear and maintainable code architecture [57,182,185,190,293]. In the C++ community, some principles became known by the mnemonic SOLID [185]:

single responsibility: a class should have one and only one reason to change;

open/closed: classes should be open for extension, closed for modification;

Liskov substitution: class method contracts must also be fulfilled in derived classes;

interface segregation: classes should be coupled through thin interfaces;

dependency inversion: high-level modules and low-level implementation should both depend on abstract interfaces.
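To make the last of these principles more concrete, here is a minimal Python sketch of dependency inversion. All names are hypothetical. The high-level function `total_counts` and the low-level class `InMemorySource` both depend on the abstract interface `HistogramSource`, but not on each other.

```python
from abc import ABC, abstractmethod


class HistogramSource(ABC):
    """Abstract interface on which both layers depend."""

    @abstractmethod
    def read_counts(self):
        """Return the raw counts as a list of numbers."""


class InMemorySource(HistogramSource):
    """Low-level implementation; a file or network reader would look alike."""

    def __init__(self, counts):
        self._counts = list(counts)

    def read_counts(self):
        return list(self._counts)


def total_counts(source: HistogramSource):
    """High-level code: knows the interface, not the implementation."""
    return sum(source.read_counts())
```

A reader for another data format can then be added as a further subclass without touching `total_counts`, which also illustrates the open/closed principle.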

5.4.4.Design patterns

Patterns “are simple and elegant formulations on how to solve recurring problems in different contexts” [296]. A catalog of 23 design patterns for object-oriented programming has been established in the widely known “gang-of-four book” [91]. They go by names like adapter, command, decorator, factory, observer, singleton, strategy and visitor pattern. Developers should know these patterns sufficiently well to recognize when they can be used. When a pattern is used this should be made transparent in the code by, for instance, choosing class names that contain the pattern name.
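As an example of how a pattern can be made visible through naming, the following sketch implements the observer pattern for progress reporting. The classes are invented for this illustration; the fit itself is omitted.

```python
class ProgressObserver:
    """Observer interface; the pattern name is part of the class name."""

    def update(self, fraction_done):
        raise NotImplementedError


class LoggingProgressObserver(ProgressObserver):
    """Concrete observer that collects progress messages."""

    def __init__(self):
        self.messages = []

    def update(self, fraction_done):
        self.messages.append(f"{fraction_done:.0%} done")


class FitJob:
    """Subject: notifies its observers, knows nothing else about them."""

    def __init__(self):
        self._observers = []

    def attach(self, observer):
        self._observers.append(observer)

    def run(self, n_steps):
        for step in range(1, n_steps + 1):
            # ... one iteration of the (omitted) fit would go here ...
            for observer in self._observers:
                observer.update(step / n_steps)
```

A GUI progress bar and a log file writer could both be attached to the same `FitJob` without the job knowing about either.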

Design patterns are not always the optimal choice because they are meant to lay ground for later generalization. We have seen code bloated by premature generalization for hypothetical use cases that never came into being. Design patterns, therefore, should not be used just because they can be used; they should be preferred over equivalent, self-invented, solutions, but not over obviously simpler designs [11,12,30,126,295].

5.4.5.Error handling

A policy for handling errors and non-numbers (Section 5.5.1) should be laid out early in the development or renovation of a software module, as it can be hard to revise this later in the project [286, E.1]. When integrating modules that have different policies, it may be necessary to wrap each cross-module function call to catch errors or non-numbers. This applies in particular to calls to the C standard library and to other mathematical libraries of C or Fortran legacy.
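Such wrapping can be sketched in Python with a decorator. The exception class `NumericError`, the decorator `checked` and the function `legacy_ratio` are hypothetical; the latter stands in for a legacy routine that signals failure by returning a non-number. The wrapper translates both foreign exceptions and non-finite return values into the one exception type that the calling module is assumed to have adopted.

```python
import functools
import math


class NumericError(ArithmeticError):
    """Hypothetical project-wide exception for invalid numeric results."""


def checked(func):
    """Wrap a foreign function so that its failures follow our own policy."""

    @functools.wraps(func)
    def wrapper(*args):
        try:
            result = func(*args)
        except (ValueError, OverflowError, ZeroDivisionError) as err:
            raise NumericError(f"{func.__name__}{args}: {err}") from err
        if not math.isfinite(result):   # catches NaN and +/- infinity
            raise NumericError(f"{func.__name__}{args} returned {result}")
        return result

    return wrapper


def legacy_ratio(a, b):
    """Stand-in for a legacy routine that returns NaN upon failure."""
    return a / b if b != 0 else float("nan")


safe_ratio = checked(legacy_ratio)
safe_log = checked(math.log)
```

With these definitions, `safe_ratio(1.0, 0.0)` and `safe_log(-1.0)` both raise `NumericError`, although the underlying functions fail in different ways.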

There are different ways to signal that a function cannot perform its assigned task:

The ultimate reaction to an exception depends on the control mode (Section 4.1). Batch processing programs should terminate upon error. In interactive sessions, immediate termination should be avoided if it is possible to return to the main menu without risk of data corruption. This gives users the opportunity to continue their work or at least to save some intermediate results before quitting the program.

To avoid data corruption when a computation terminates abnormally, subroutines should transform data out-of-place rather than in-place. We note, however, that in-place transformation can be simpler and faster when very large data blocks (e.g. image volumes of tens of GBytes) are handled.
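The difference can be seen in a small example with hypothetical function names. Both functions divide counts by monitor values and fail if a monitor value is zero; only the out-of-place variant leaves the input intact in that case.

```python
def normalize(counts, monitors):
    """Out-of-place: build a new list; the input is never modified."""
    return [c / m for c, m in zip(counts, monitors)]


def normalize_in_place(counts, monitors):
    """In-place: saves memory, but a failure leaves counts half-converted."""
    for i, m in enumerate(monitors):
        counts[i] = counts[i] / m


raw = [10.0, 20.0, 30.0]
try:
    normalize(raw, [2.0, 0.0, 5.0])
except ZeroDivisionError:
    pass                      # raw still holds the original data

scratch = [10.0, 20.0, 30.0]
try:
    normalize_in_place(scratch, [2.0, 0.0, 5.0])
except ZeroDivisionError:
    pass                      # scratch[0] has already been overwritten
```

After the failed in-place call, the first element has been normalized while the others have not, so the data set can no longer be trusted without being reloaded.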

5.4.6.Assertions

In our code we use assertions to check invariants and other implicit conditions that need to be true for a program to work. A failed assertion always reveals a bug. Function arguments can be checked by assertions if the caller is responsible for preventing invalid arguments. Invalid user input must be handled by other mechanisms. Assertions help to detect bugs early on, and to prevent software from delivering incorrect results. Furthermore, assertions are valuable as self-testing documentation [84,320]: Assert(x>0) is much better than a comment that says x is expected and required to be a nonzero positive number. Assertions are particularly useful for checking whether input and output values satisfy the implicit contract between a function and its callers [67]. In languages that lack strong typing, assertions can be used to check and document constraints on the supported type of function arguments; in Python, however, the standard approach for this is to use native type hints combined with one of the many tools for static or dynamic checking [50,94].
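The use of assertions for an implicit contract, together with type hints, might look as follows in Python. The function is a hypothetical example based on the standard relation q = (4π/λ) sin θ; it assumes that the caller, not the end user, is responsible for passing valid arguments.

```python
import math


def scattering_angle(q: float, wavelength: float) -> float:
    """Return the scattering angle 2*theta in radians."""
    # Preconditions: guaranteed by the caller, hence checked by assertions.
    assert wavelength > 0, "wavelength must be positive"
    assert q >= 0, "q must be non-negative"
    sin_theta = q * wavelength / (4 * math.pi)
    assert sin_theta <= 1, "q is not reachable at this wavelength"
    two_theta = 2 * math.asin(sin_theta)
    # Postcondition: documents and checks the range of the result.
    assert 0 <= two_theta <= math.pi
    return two_theta
```

Note that Python removes `assert` statements when run with the `-O` option, which is one more reason why invalid user input must be handled by other mechanisms.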