An efficient technique for CT scan images classification of COVID-19

Abstract

Coronavirus disease (COVID-19) is considered one of the most critical pandemics on Earth because of its ability to spread rapidly between humans as well as animals. COVID-19 is expected to spread around the world, and around 70% of the world's population might be infected in the coming years. An accurate and efficient diagnostic tool is therefore highly required, and providing one is the main objective of our study. Manual classification has mainly been used to detect different diseases, but it takes too much time and is prone to human error. Automatic image classification reduces doctors' diagnostic time, which could save lives. We propose an automatic classification architecture based on a deep neural network, called the Worried Deep Neural Network (WDNN) model, with transfer learning. Comparative analysis shows that the proposed WDNN model performs strongly with three pre-trained models (InceptionV3, ResNet50, and VGG19) in terms of various performance metrics. Because COVID-19 data sets are scarce, data augmentation was used to increase the number of images in the positive class, and normalization was then used to give all images the same size. Experiments were carried out on a COVID-19 dataset collected from different cases, with 2623 images in total (1573 training, 524 validation, 524 test). Our proposed model achieved 99.046%, 98.684%, 99.119%, and 98.90% in terms of accuracy, precision, recall, and F-score, respectively. The results are compared with both traditional machine learning methods and those using Convolutional Neural Networks (CNNs). The results demonstrate that our classification model could be used as an alternative to the current diagnostic tool.

1 Introduction

COVID-19 is an infectious disease caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2). The disease was first identified in 2019 in Wuhan, the capital of Hubei province in central China, and spread globally, leading to the 2019–20 coronavirus pandemic [1]. The outbreak of the novel coronavirus infection has posed significant threats to international health and the economy. Common symptoms include fever, cough, and shortness of breath, whereas muscle pain, sputum production, diarrhea, and sore throat are less common. Computer-aided diagnostic (CAD) systems have been developed to help doctors reach a rapid diagnosis using information from CT scan images [2]. CAD systems may provide the diagnosis based on the unique attributes present in the medical images [3, 4]. These systems typically use pre-processing, attribute extraction, selection, and classification steps to categorize CT scan images as Infected or Not Infected. In the literature, several methods have been proposed that employ classical machine learning algorithms to classify CT scan images [5, 6]; other studies have suggested solutions based on deep learning algorithms. Chest CT diagnosis of COVID-19 cases is based on detecting opacity and peripheral lung consolidation [7–9]. These findings are quite different from those of other lung-related cases, in which radiologists look for either lung nodules or effusion [10]. The characteristic COVID-19 lung lesions are destructive to the lung parenchyma and are seen radiologically as white patches extending from the lung edges toward the center. Artificial intelligence techniques simulate the idea of the human brain to classify 2D images by encoding object orientations to distinguish many objects. Recently, Machine Learning (ML) and Deep Learning (DL) algorithms have greatly improved automatic disease diagnosis [11] and made diagnostics cheaper and more accessible [12]. DL algorithms can learn to see samples similarly to the way doctors see them.

They need many reliable inputs to learn a task such as diagnosing COVID-19 from CT scans. DL models can analyze many cases to detect whether a chest CT reveals any abnormalities in the lung [13]. Computer vision and DL are solving many vision problems in medicine and in other fields. Lack of data is a common challenge, especially when deep Convolutional Neural Networks (CNNs) are used on small data sets, and this challenge makes working with 2D CT scan images of COVID-19 more complex. Computed Tomography (CT) images are an important tool in medical imaging for diagnosing several diseases [14, 15]. Physicians have usually relied on manual or semi-automatic techniques to study anomalies in organ shape and texture in CT scans. Since the main aim of this work is classifying COVID-19, the primary diagnosis of the lung using CT is very important. Deep learning starts overfitting when the data size is small. Pre-trained DCNN models with transfer learning were developed to address this overfitting problem, and so far only a few works have experimented with them on CT scan images [16–18]. This paper examines the capability of pre-trained DCNN models with transfer learning on different versions of COVID-19 cases.

The main contributions of this paper are:

1. A Worried Deep Neural Network with transfer learning (WDNN) is proposed to classify patients as COVID Infected or Not Infected.

2. The proposed model extracts features by using weights learned on the ImageNet dataset along with a convolutional neural architecture.

3. Various deep convolutional network models are compared and deployed for CT scan image classification using transfer learning, achieving a robust improvement on the COVID-19 dataset.

This paper is organized as follows. Section 2 reviews related works. Section 3 introduces the methods. Section 4 describes the proposed work. Section 5 covers implementation and evaluation. Section 6 discusses the results. Section 7 presents conclusions and future work.

2 Related works

We compare the performance of the proposed model with related deep learning studies on the classification of COVID-19 CT images, as shown in Table 1. Classifying infection in images involves a sequence of operations: image preprocessing, feature extraction, and classification. Preprocessing removes undesired noise present in the acquired CT image for better visualization and further analysis. Feature extraction derives informative attributes, such as color, from the image [19]. The extracted features are then used to classify images as infected or non-infected with deep learning algorithms. Different methods have been proposed for preprocessing CT images to enhance them, especially particular parts of the images [20].

Gao et al. [4] developed a Dual-branch Combination Network (DCN) for COVID-19 diagnosis; their work reached a classification accuracy of 92.87%. Wang et al. [14] presented a deep learning method that extracts COVID-19's specific graphical features to provide a clinical diagnosis ahead of the pathogenic test. They tested their model on 453 collected CT images of COVID-19 patients, of which 217 were used as the training set; validation achieved a total accuracy of 82.9%, with a specificity of 80.5% and a sensitivity of 84%. The results showed that this work saves critical time for diagnosis. Jin et al. [16] applied typical data augmentation techniques (randomly flipped, panned, and zoomed images) to increase the diversity of the data, which has been shown to improve the generalization ability of the trained model. Ying et al. [17] developed a deep learning lung CT classification model to detect cases of COVID-19. They automatically extracted radiographic features of ground-glass opacity from radiographs and built a pneumonia architecture using 100 bacterial pneumonia and 88 COVID-19 cases. They reached an AUC of 0.91 at the image level and an AUC of 0.95 at the human level. Chao et al. [21] used holistic patient information, including both imaging and non-imaging data, for outcome prediction. The experimental results demonstrated that adding non-imaging features can significantly improve prediction performance, achieving accuracy up to 0.884 and sensitivity of 96.1%. Chen et al. [22] applied two data augmentation operations. In the first, they rotated each image by 0°, 90°, 180°, and 270°. In the second, horizontal flipping, vertical flipping, and channel flipping were used. Ying et al. [23] proposed a model that could identify the main lesion characteristic, ground-glass opacity, which can greatly assist doctors in diagnosis. The diagnosis for a patient may be done in 30 seconds, and the Tianhe-2 supercomputer implementation enables simultaneous execution of thousands of tasks.

Table 1

Related studies for diagnosis of COVID-19

| Literature | Method | Accuracy | Sensitivity | Specificity | AUC |
|---|---|---|---|---|---|
| Gao et al. [4] | DCN | 95.99% | 89.14% | 98.04% | 0.9755 |
| Wang et al. [14] | CNN | 83% | 84% | 80.5% | - |
| Jin et al. [16] | U-Net+ | 82.9% | 97.4% | 92.2% | - |
| Ying et al. [17] | DCNN | 90.1% | - | - | 0.976 |
| Chao et al. [21] | CNN | - | 96.1% | - | 0.884 |
| Chen et al. [22] | CNN | 98.61% | - | - | - |
| Ying et al. [23] | CNN | - | 93% | - | 0.99 |
| Coudray et al. [2] | CNN | - | - | 0.97 | |
| Gozes et al. [29] | CNN | - | 98.2% | 92.2% | 0.996 |
| Ko et al. [26] | CNN | 99.87% | 99.58% | 100% | - |

Xu et al. [24] suggested a model for distinguishing COVID-19 pneumonia from Influenza-A viral pneumonia and healthy cases in pulmonary CT images using deep learning techniques. The candidate infection regions were first segmented from a set of pulmonary CT images using a 3-dimensional deep learning model. Coudray et al. [2] proposed a deep learning model for detecting COVID-19, called COV Net, in which visual characteristics are extracted from volumetric chest CT scans of COVID-19. To check the model's robustness, Community Acquired Pneumonia (CAP) and other non-pneumonia CT tests were used. Gozes et al. [25] evaluated the ability to distinguish COVID-19 from non-COVID-19 cases at the case level. They applied image classification to Chinese patients, using 56 cases with COVID-19 CT images together with non-COVID-19 cases. Using the positive ratio as a decision feature, they achieved a sensitivity of 98.2% with a specificity of 92.2%. Ko et al. [26] presented a model drawing on radiologists' performance in distinguishing COVID-19 from other viral pneumonia; the sensitivity and specificity were 83% and 96.5%, respectively. They aimed to rapidly develop an AI technique using all available CT images from their institution as well as publicly available data. Zhang et al. [27] analyzed CT scans of 2460 COVID-19 patients using an AI-assisted analysis architecture that accurately delineates various anatomical models of the lung to detect lung infection and calculate the percentage of infection in the lungs. Applied to the CT scans, the model showed that 2215 patients had multiple lesions, of which 36 and 50 patients had left and right lung infections, respectively.

This paper presents a Worried Deep Neural Network (WDNN) that uses different algorithms and techniques to automatically classify 2D CT scan images of COVID-19 with a high accuracy rate and a low error rate. It uses a deep convolutional neural network for the automated classification of COVID-19 CT scan images and applies transfer learning to three different pre-trained models [28–30].

3 Methods

A CNN is built from several layers. The two main layer types used in a deep network are usually convolutional and pooling layers [31, 32]. The depth of each filter in the network increases from left to right. The layers after the convolutional layers and before the classification layer usually consist of one or more fully connected layers. A pre-trained model can be used in different ways: as a feature extractor, by replacing a number of layers of the pre-trained network and fine-tuning all layers, or by freezing the initial layers and training only the replaced layers [21, 33].

3.1 Transfer learning

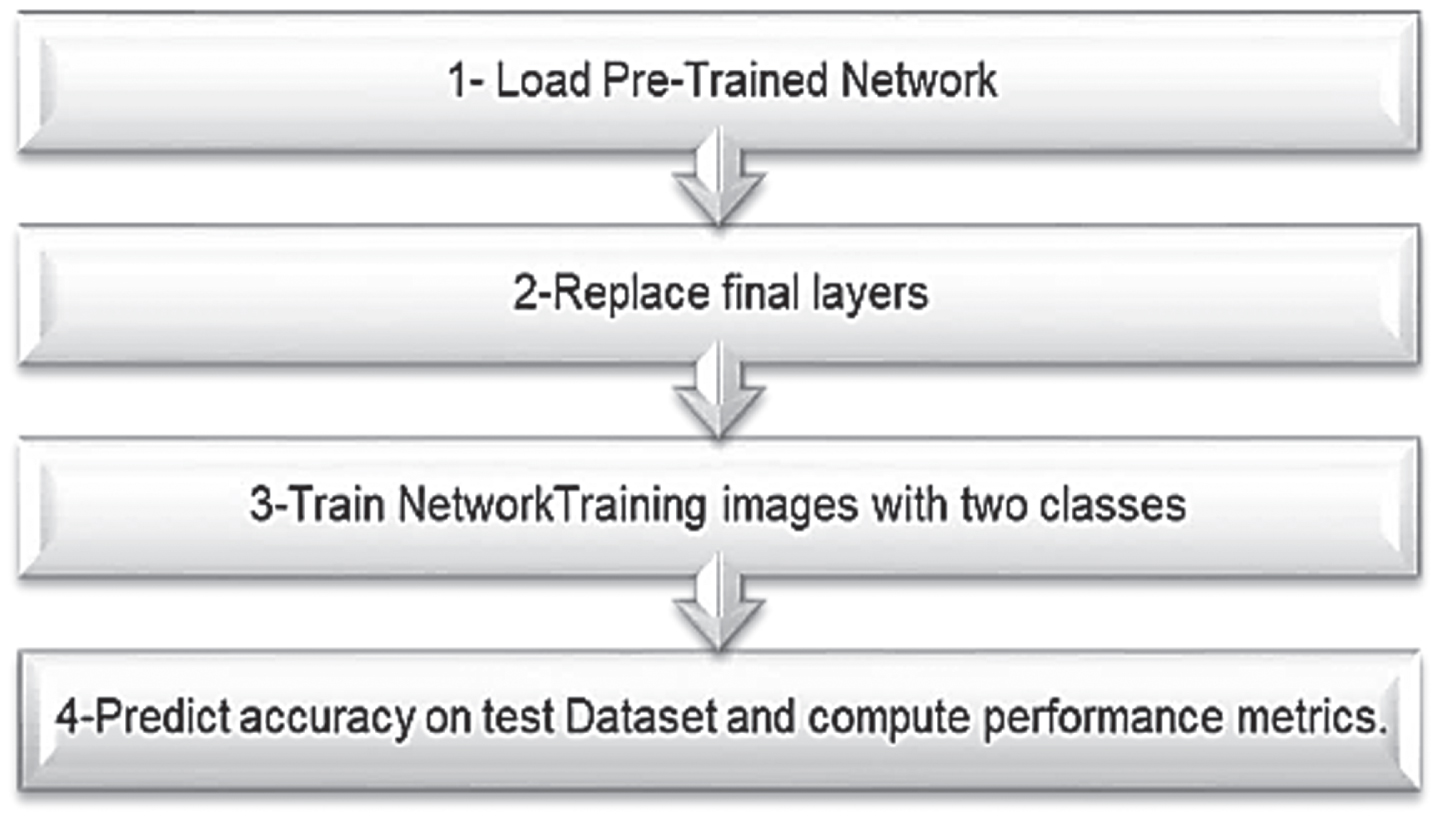

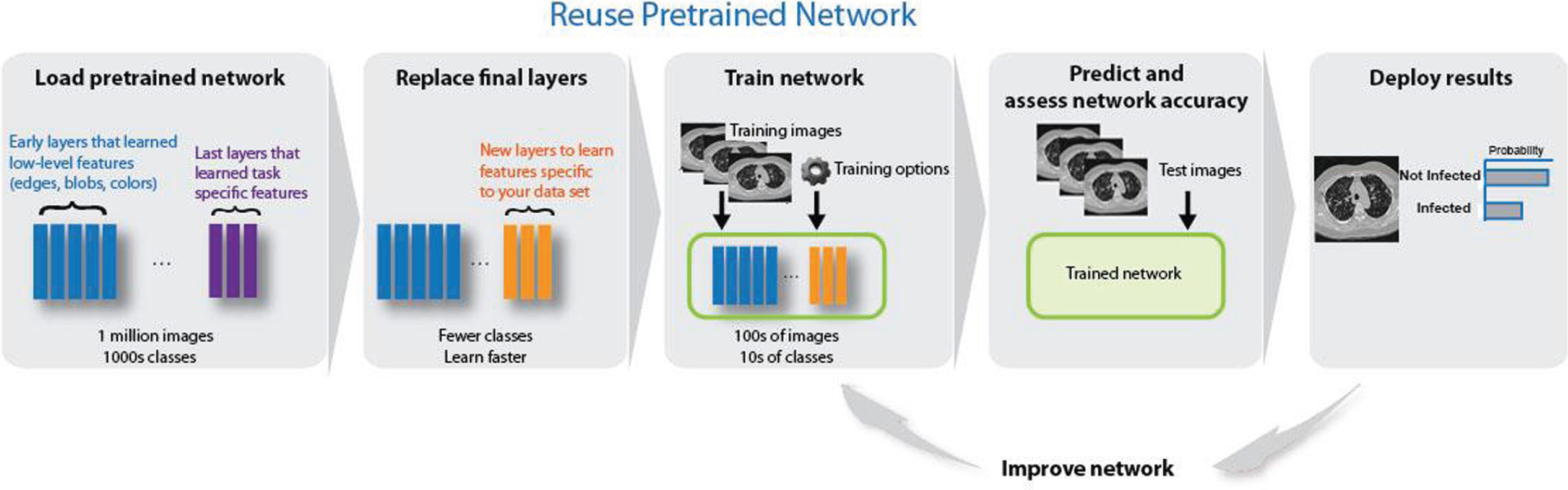

In computer vision, transfer learning is a good way to reuse pre-trained models instead of building a deep model from scratch. A pre-trained model can be used with a different dataset in the same domain [34]. In this work, transfer learning is applied to three common pre-trained models (InceptionV3, ResNet50, and VGG19) to classify CT scan images, accelerating the training and testing processes, improving the overall accuracy, and reducing the error rate, as shown in Fig. 1.

Fig. 1

A brief illustration of the mechanism for transfer learning.

4 The proposed work

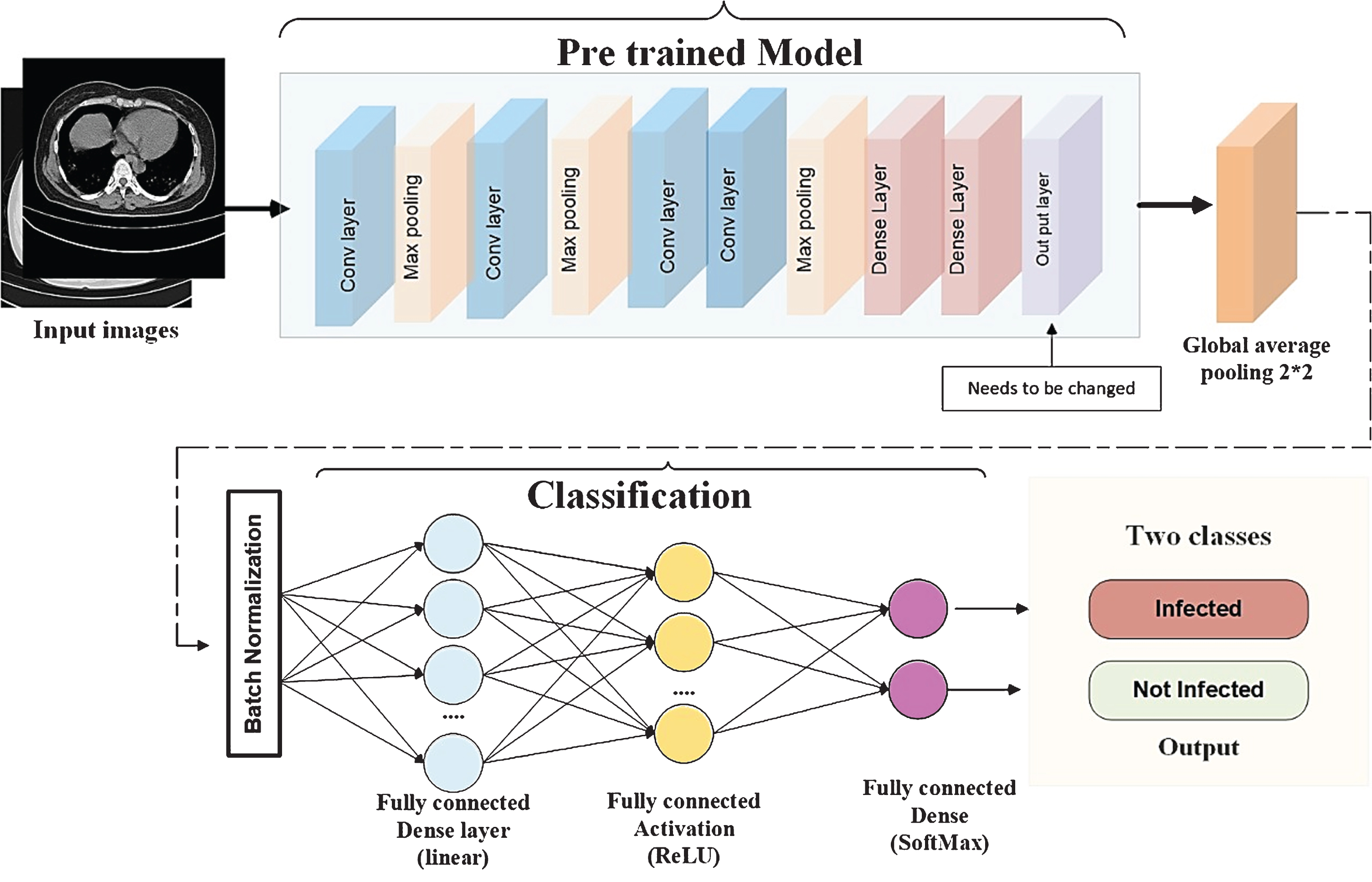

DL has achieved notable success in computer vision challenges, including image classification. This paper uses DL algorithms to diagnose COVID-19 from 2D CT scan images. Figure 3 illustrates the proposed WDNN model, which is composed of four main steps: (i) Data Preparation (DP), (ii) Pre-Trained model (PT), (iii) Feature Extraction (FS), and (iv) Classifier and loss function (CL). During DP, the data are prepared for use in training and testing through pre-processing and data augmentation. PT addresses the lack of training data and reduces the total training time. FS selects the most effective features for detection, which reduces the complexity of the detection model as well as the dimensionality of the input data. CL minimizes the error for each training sample during the learning process [35]. The proposed model is based on transfer learning and applies the WDNN model to classify CT scan images of COVID-19. The four steps of the proposed WDNN are explained in more detail below. The outline of the proposed architecture is shown in Fig. 3.

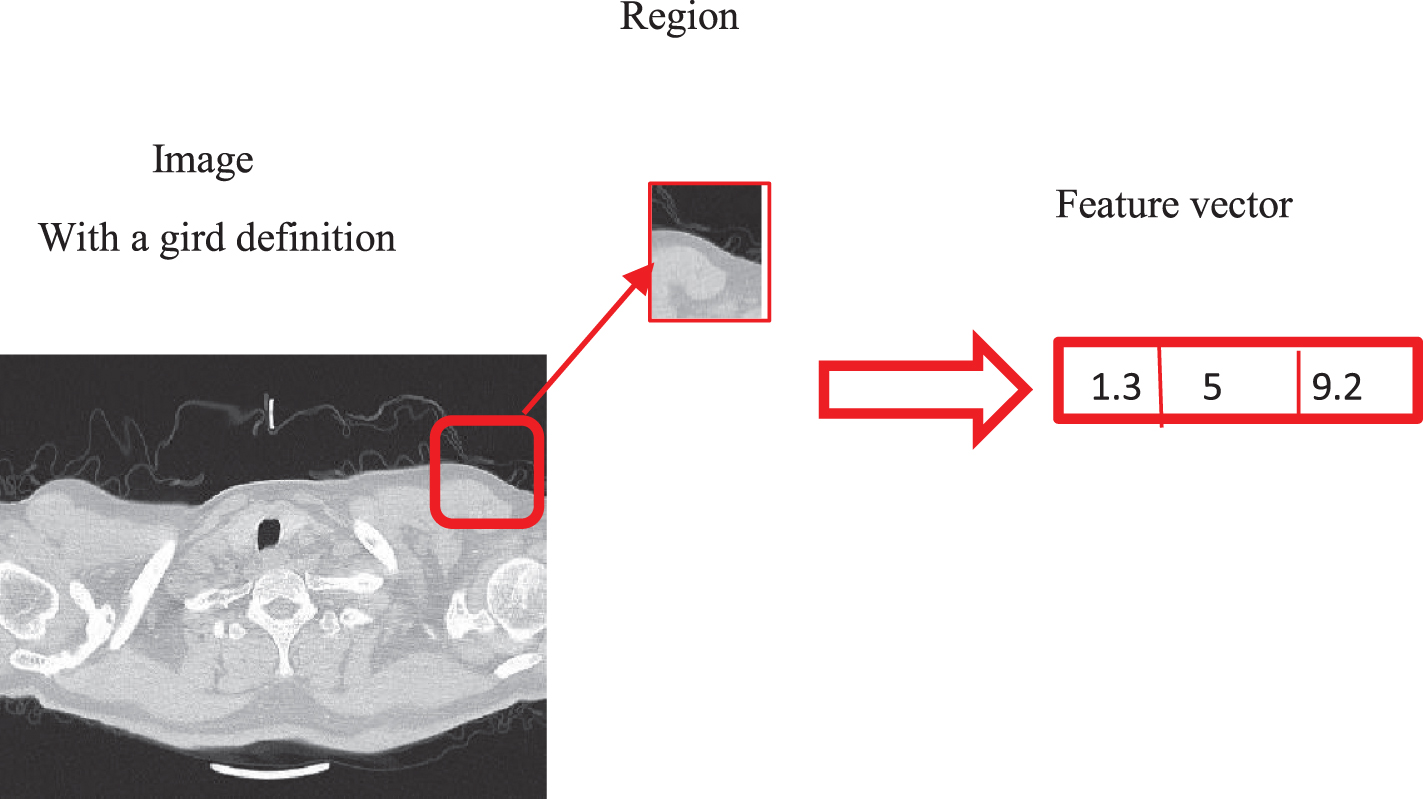

Fig. 2

A general example of feature extraction from a CT scan image.

Fig. 3

Outline of the steps of the proposed work, from the input layer after preprocessing, through reuse of the pre-trained model (VGG-19, ResNet-50, or InceptionV3) with its final layers replaced according to its type, to global average pooling, which minimizes overfitting by reducing the spatial dimensions of a 3-dimensional tensor and hence the total number of parameters in the model. Batch normalization after the fully connected layers helps the model train faster and more stably.

4.1 Data preparation (DP)

In the Data Preparation (DP) step, the data are prepared for use during model training and testing. The collected data are saved in a CSV file [12]. Further analysis is then done to prepare the data for the next stages. This analysis includes (i) Data Augmentation, which is used to artificially expand the dataset and to avoid overfitting of the model, and (ii) Data Normalization, which converts non-numeric data elements into a standardized numeric representation. The effects of Data Preparation are shown in Fig. 4, and Algorithm 1 describes its steps.
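
As a rough illustration of the augmentation and normalization rounds, the following sketch applies simple flips and 90° rotations to a tiny grayscale image stored as a nested list, then rescales pixel values to [0, 1]. The function names, the particular set of flips and rotations, and the division by 255 are assumptions for illustration; the text does not specify which augmentation operations or which normalization formula were used.

```python
def flip_horizontal(img):
    """Mirror each row left to right."""
    return [row[::-1] for row in img]


def flip_vertical(img):
    """Reverse the order of the rows."""
    return [row[:] for row in img[::-1]]


def rotate_90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def augment(img):
    """Return the original image plus flipped and rotated variants (assumed set)."""
    r90 = rotate_90(img)
    r180 = rotate_90(r90)
    r270 = rotate_90(r180)
    return [img, flip_horizontal(img), flip_vertical(img), r90, r180, r270]


def normalize(img, max_value=255.0):
    """Scale pixel intensities from [0, max_value] to [0, 1]."""
    return [[pixel / max_value for pixel in row] for row in img]


# Toy 2x2 "image"; real CT slices would be far larger.
image = [[0, 255],
         [51, 102]]
variants = [normalize(v) for v in augment(image)]
```

Each input image here yields six training samples; in practice the variants would be generated on the fly rather than stored.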

Fig. 4

Samples of the data preparation before the training stage.

4.2 Pre-trained model (PT)

A pre-trained model has already been trained on a large data set (ImageNet) with many diverse image classes. Given this fact, the model is assumed to have learned a robust feature hierarchy that is spatially, rotationally, and translationally invariant with respect to the features that CNN models learn. Hence, after learning a good feature representation from over a million images belonging to 1,000 different categories, the model can serve as a good feature extractor for new images in computer vision problems. These new images may not be present at all in the ImageNet dataset or may be from completely different classes, but the model should still be able to extract the relevant features from them. Fig. 5 outlines the main steps of reusing pre-trained models for image classification. The proposed model loads pre-trained models that were trained on a large number of images [36]. The final layers of each pre-trained model are replaced so that the new layers learn features specific to the COVID-19 dataset. The model is then trained with the chosen training options, after which predictions are made and the performance of the model is tested [37].

Fig. 5

The main steps of reusing the models on image classification.

4.2.1 Inception V3

Inception V3 is used as a pre-trained model that was trained on 1000 classes. The idea of transfer learning is to reuse the feature extraction section and retrain the classification section with a new dataset. In this paper, the WDNN based on the InceptionV3 model classifies COVID-19 CT scan images as Infected or Not Infected. In addition, the transfer learning technique, realized using ImageNet data, is applied to overcome insufficient data and long training time. CT images are taken as input, Inception V3 is applied, and convolution, pooling, softmax, and fully connected operations are performed. On completing these steps, the images are classified according to the different training modules.
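
The softmax operation turns the raw scores of the final layer into class probabilities. A minimal sketch, assuming two output scores ordered as (Infected, Not Infected) and a cross-entropy loss; the score values, the class order, and the choice of loss are assumptions for illustration, not details taken from the paper.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, true_index):
    """Negative log-probability assigned to the true class (assumed loss)."""
    return -math.log(probs[true_index])


CLASSES = ["Infected", "Not Infected"]  # assumed ordering
scores = [2.0, 0.5]                      # hypothetical network outputs
probs = softmax(scores)
predicted = CLASSES[probs.index(max(probs))]
loss = cross_entropy(probs, true_index=0)
```

The larger score always receives the larger probability, so the predicted class is unchanged by softmax; the probabilities matter mainly for the loss and for reporting confidence.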

4.2.2 Residual neural network (ResNet50)

Residual blocks are used to reparametrize features using the identity function. Adding residual blocks increases the function complexity in a well-defined manner. The proposed model trains an effective deep neural network by having residual blocks pass data across layers. The skip connection in ResNet50 relieves the vanishing gradient problem by adding an alternate shortcut path for the gradient to flow through [38]. The identity function ensures that a higher layer performs at least as well as a lower layer, and not worse. ResNet50 has 5 stages, each with a convolution block and an identity block. Each convolution block contains 3 convolution layers, and each identity block also has 3 convolution layers. It has more than 23 million trainable parameters.
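
The skip connection can be written as y = F(x) + x, so a block whose learned transformation F outputs zeros simply passes its input through. A minimal sketch with plain Python lists; the toy transformation `scale_layer` stands in for the convolution layers of a real block and is purely hypothetical.

```python
def relu(values):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in values]


def scale_layer(values, weight):
    """Hypothetical stand-in for the convolution layers F(x) of a block."""
    return [weight * v for v in values]


def residual_block(x, weight):
    """Compute relu(F(x) + x): the input is added back via the skip path."""
    fx = scale_layer(x, weight)
    return relu([f + xi for f, xi in zip(fx, x)])


x = [1.0, 2.0, 3.0]
identity_out = residual_block(x, weight=0.0)  # F(x) = 0, so the block acts as identity
shifted_out = residual_block(x, weight=0.5)   # F(x) = 0.5 * x is added to x
```

With `weight=0.0` the block reproduces its (non-negative) input, which is the sense in which a deeper stack can do at least as well as a shallower one.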

4.2.3 Visual geometry group (VGG19)

VGG19 usually refers to a deep convolutional network for object recognition. It is used as a good classification architecture for many other datasets. Since the authors made it publicly available, it can be modified for other similar tasks. The weights of VGG are readily available in frameworks such as Keras. The proposed architecture depends on VGG19, which achieves deeper and faster automatic classification [15].

4.3 Feature extraction (FS)

The network requires input images of size 512-by-512-by-3, but the images in the image data stores have different sizes. To resize the training and test images automatically before they are input to the network, augmented image data stores are created with the desired image size and used as input arguments to activations [39, 40]. The network constructs a hierarchical representation of input images. Deeper layers contain higher-level features, constructed from the lower-level features of earlier layers. To get the feature representations of the training and test images, activations are computed on the global pooling layer at the end of the network. The global pooling layer pools the input features over all spatial locations, giving 512 features in total.

FS is used to select the most effective features for detection, and accordingly reduces the complexity of the detection model as well as the dimensionality of the input data. The pre-trained model may be used as a standalone program to extract features from CT scan images. Specifically, the extracted features of an image may be a vector of numbers that the model uses to describe specific features in the image. These features can then be used as input in the development of a new model. The last few layers of the VGG16 model are fully connected layers prior to the output layer [41]. These layers provide a complex set of features to describe a given input. Region feature extractors process square image neighborhoods and represent the central pixel of each by the resulting feature vector. This is useful to account for local spatial information and structure in images, as shown in Fig. 2.
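
Global average pooling collapses each feature map to a single number, so a stack of C feature maps becomes a vector of C features regardless of spatial size. A minimal sketch, assuming feature maps stored as a nested list indexed [row][column][channel]; the tiny 2×2×3 input is illustrative only (the text describes 512 features).

```python
def global_average_pool(feature_maps):
    """Average an H x W x C nested list over H and W, returning C values."""
    height = len(feature_maps)
    width = len(feature_maps[0])
    channels = len(feature_maps[0][0])
    sums = [0.0] * channels
    for row in feature_maps:
        for pixel in row:
            for c, value in enumerate(pixel):
                sums[c] += value
    return [s / (height * width) for s in sums]


# Toy 2 x 2 spatial grid with 3 channels.
maps = [
    [[1.0, 10.0, 0.0], [3.0, 20.0, 0.0]],
    [[5.0, 30.0, 0.0], [7.0, 40.0, 4.0]],
]
features = global_average_pool(maps)
```

Because the pooled vector has one entry per channel, it carries no trainable parameters of its own, which is why it is often preferred over flattening before the classifier.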

Algorithm 1 introduces the proposed transfer model in detail.

    Let D = {Inception V3, ResNet (50), VGG19} be the set of transfer models.
    Each deep transfer model is fine-tuned with the COVID-19 CT Images dataset (X, Y),
    where X is the set of N input data, each of size 512 (length) × 512 (width),
    and Y the corresponding class, Y = {y | y ∈ {Infected; Not Infected}}.

    Input data: COVID-19 CT Images (X, Y), where Y = {y | y ∈ {Infected; Not Infected}}
    Output data: The transfer model that detected the COVID-19 CT image x ∈ X

    Begin:
    // Pre-processing steps:
    {
        modify the CT input to dimension 512 height × 512 width
        generate CT images using data preparation operations
        download and reuse transfer models D = {Inception V3 or ResNet (50) or VGG (19)}
    }
    Replace the last layer of each transfer model by a (4×1) layer dimension.
    For each d ∈ D do
        μ = 0.0001
        for epochs = 1 to 50 do
            for each mini-batch (Xi; Yi) ∈ (Xtrain; Ytrain) do
                modify the coefficients of the transfer d(·)
            end
        end
        if the error rate is increased for five epochs then
            μ = μ × 0.01
    End
    // Testing steps:
    for each x ∈ Xtest do
        the outcome of all transfer architectures, d ∈ D
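
The learning-rate rule in Algorithm 1 (start at μ = 0.0001 and multiply by 0.01 once the error rate has increased for five epochs) can be sketched as below. How "increased for five epochs" is counted is not stated; this sketch assumes five consecutive epoch-to-epoch increases, and the error values are invented to exercise the rule.

```python
def schedule_learning_rate(error_rates, initial_rate=0.0001,
                           factor=0.01, patience=5):
    """Return the learning rate in effect after each epoch.

    The rate is multiplied by `factor` whenever the error rate has risen
    for `patience` consecutive epochs (an assumed reading of Algorithm 1).
    """
    rate = initial_rate
    rises = 0
    history = []
    previous = None
    for error in error_rates:
        if previous is not None and error > previous:
            rises += 1
        else:
            rises = 0
        if rises == patience:
            rate *= factor
            rises = 0
        history.append(rate)
        previous = error
    return history


# Hypothetical per-epoch error rates: falling, then rising five times in a row.
errors = [0.30, 0.25, 0.20, 0.21, 0.22, 0.23, 0.24, 0.25, 0.24]
rates = schedule_learning_rate(errors)
```

In this run the rate stays at its initial value until the fifth consecutive rise, then drops by the factor of 0.01 and remains there.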

5 Implementation and evaluation

5.1 Experimentation setup

Experiments are performed using a DELL computer equipped with a Core i7 processor and 8 GB of RAM. Python 3.5 is used to execute the code in a TensorFlow-Keras environment.

5.2 COVID-19 CT scan image dataset

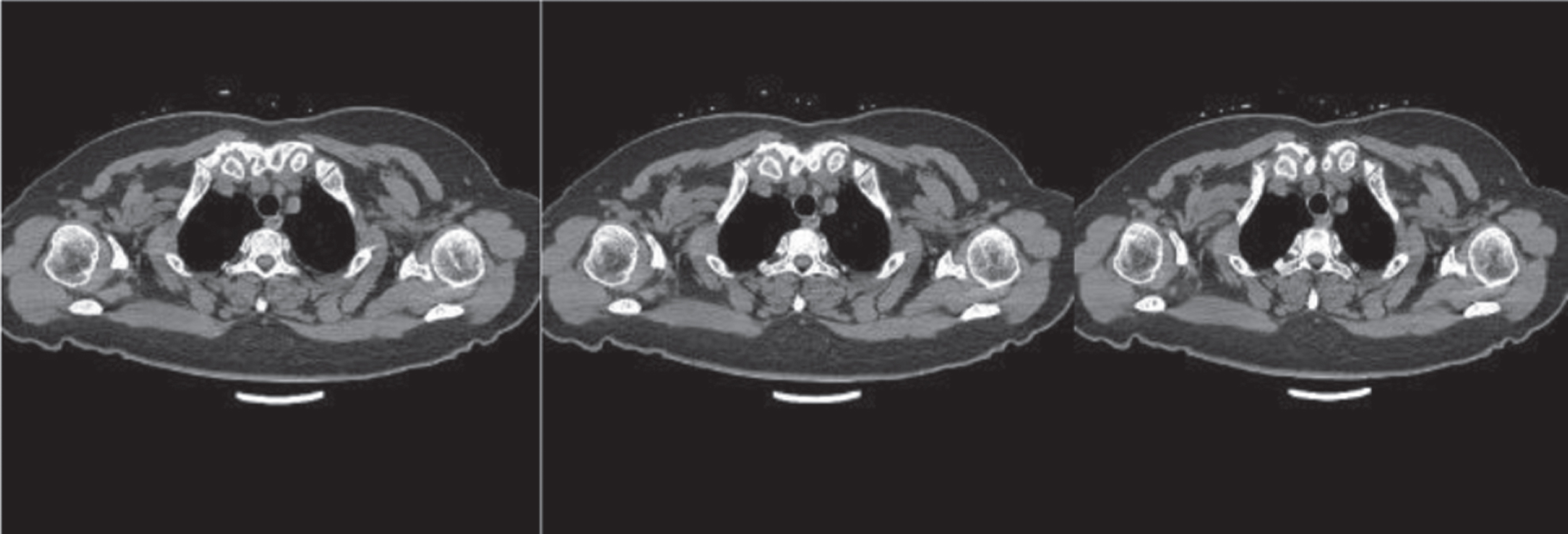

CT is currently the best medical imaging procedure for the correct diagnosis of COVID-19. Computer-aided diagnosis can play a crucial role in controlling the pandemic. A CNN is affected by the amount of data available in the training stage, and for diagnosing COVID-19 (Infected, Not Infected), as shown in Fig. 6, datasets on the Internet are lacking. The Kaggle site provides a COVID chest CT scan dataset, which was organized into two folders for the two classes (Infected, Not Infected). It is dedicated to solving the automatic classification of 2D CT images to diagnose diseases and to guide the imaging and visualization of medical data. The dataset contains 2623 unlabeled CT scan images, split into 1573 training, 524 validation, and 524 test images, a ratio of 60:20:20.
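
A 60:20:20 split of 2623 images can be sketched as follows. Integer floor division reproduces the reported counts of 1573, 524, and 524 and leaves two images unassigned; how that remainder was handled, and whether the images were shuffled first, is not stated, so the shuffling and the seed here are assumptions.

```python
import random


def split_dataset(items, train_pct=60, val_pct=20, test_pct=20, seed=0):
    """Shuffle and split items by percentage using floor division."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # assumed: random split, fixed seed
    n = len(shuffled)
    n_train = n * train_pct // 100
    n_val = n * val_pct // 100
    n_test = n * test_pct // 100
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:n_train + n_val + n_test]
    leftover = shuffled[n_train + n_val + n_test:]
    return train, val, test, leftover


image_ids = range(2623)  # stand-ins for image file names
train, val, test, leftover = split_dataset(image_ids)
```

Keeping the three subsets disjoint matters more than the exact counts, since any overlap between training and test images would inflate the reported accuracy.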

Fig. 6

Sample slices of CT scans of COVID-19 cases.

5.3 Performance measures

Several performance measures are used to validate the effectiveness of the pre-trained DCNN models with transfer learning; sensitivity, specificity, and accuracy are the most commonly chosen. Classification quality is described in terms of true positives (TP), true negatives (TN), false negatives (FN), and false positives (FP). TP is the number of abnormal images that are classified as abnormal, TN is the number of normal images that are classified as normal, FP is the number of normal images that are labeled abnormal, and FN is the number of abnormal images that are labeled normal. Mathematically, sensitivity and specificity are given as

(1)

(2)

(3)

(4)

The measures outlined above are effective only in the balanced dataset scenario. Unbalanced data sets require further testing by means of additional performance metrics. Additional indices were calculated from the generated confusion matrix, including precision, false positives, error, and F-score; the method suggested in [45] was used for the calculation.
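
The standard textbook definitions of these measures in terms of TP, TN, FP, and FN can be sketched as follows. They are offered as an assumption about what the numbered equations in this section express, and the counts in the example are invented.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute common binary-classification measures from confusion counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    sensitivity = tp / (tp + fn)   # also called recall
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    f_score = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f_score": f_score,
    }


# Hypothetical counts, not results from the paper.
metrics = classification_metrics(tp=90, tn=80, fp=20, fn=10)
```

On an unbalanced test set, accuracy alone can look high while sensitivity or specificity is poor, which is why the additional indices are reported.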

5.4 Training using several pre-trained architectures

In this section, the WDNN architecture is compared across the common Inception, Residual, and VGG neural network architectures using 2624 CT scan slice images from 10 COVID-19 cases.

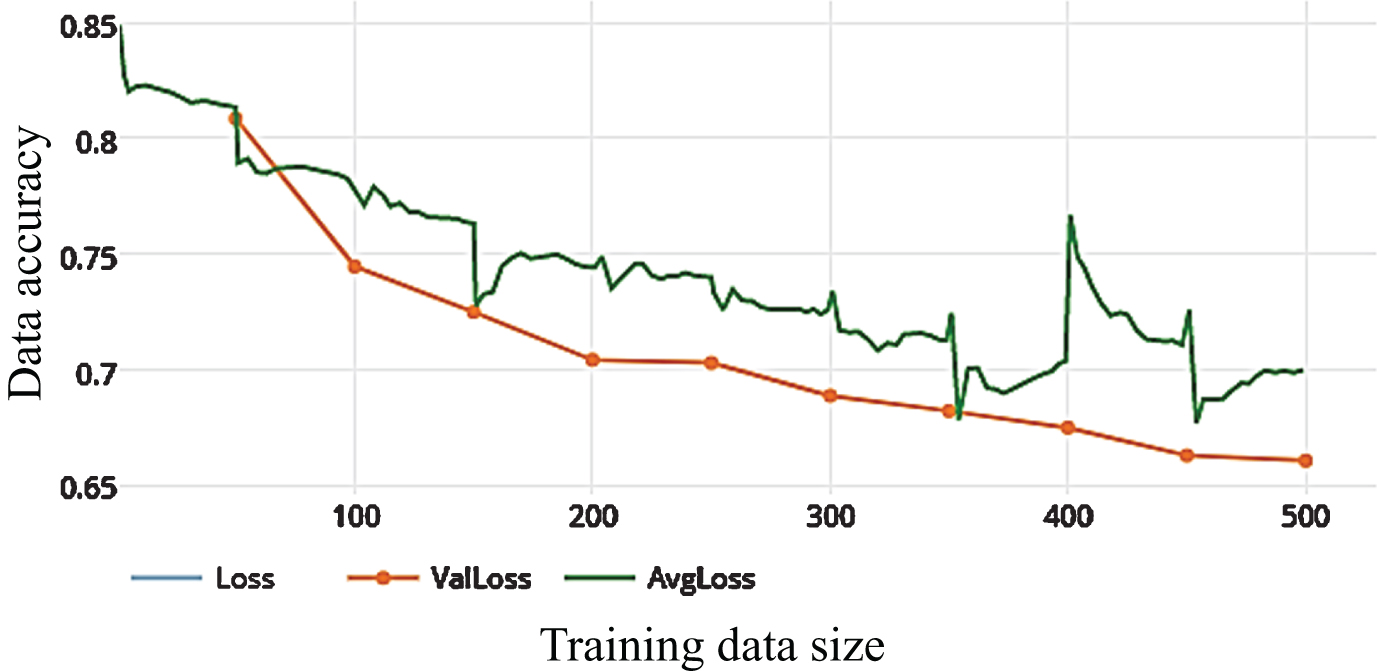

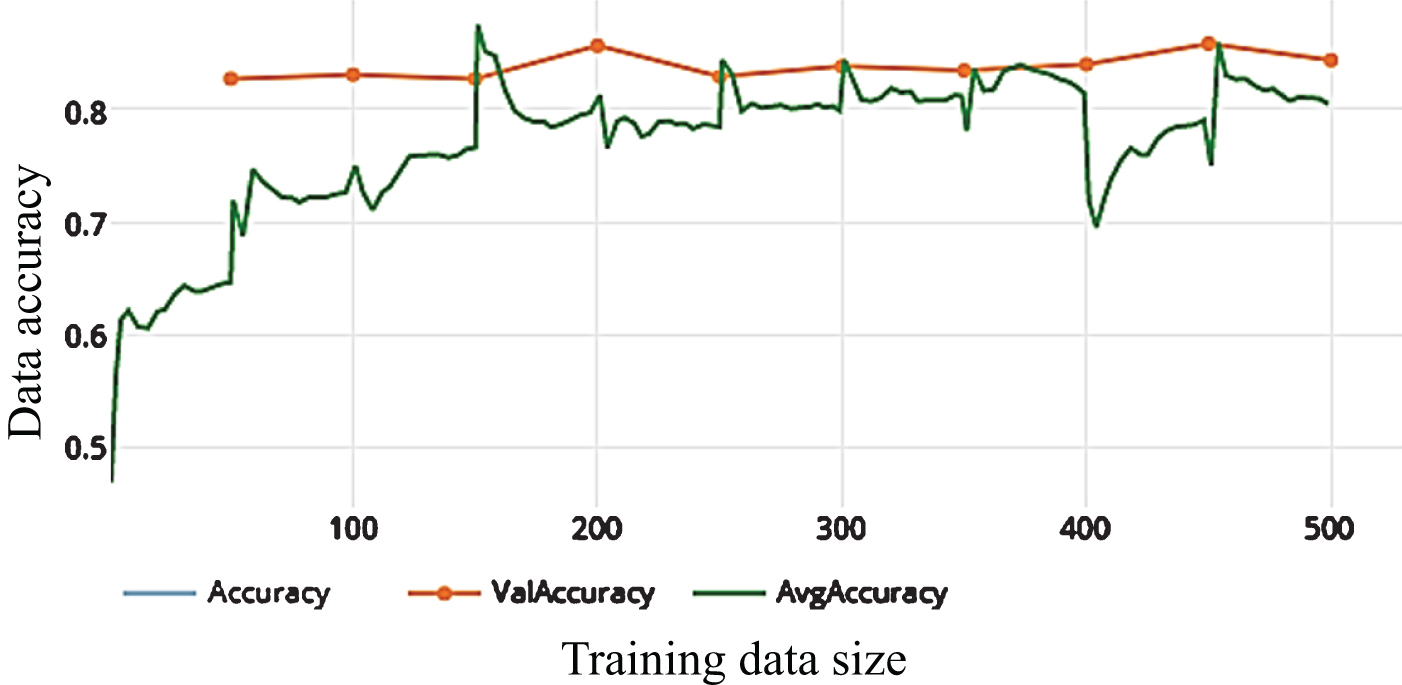

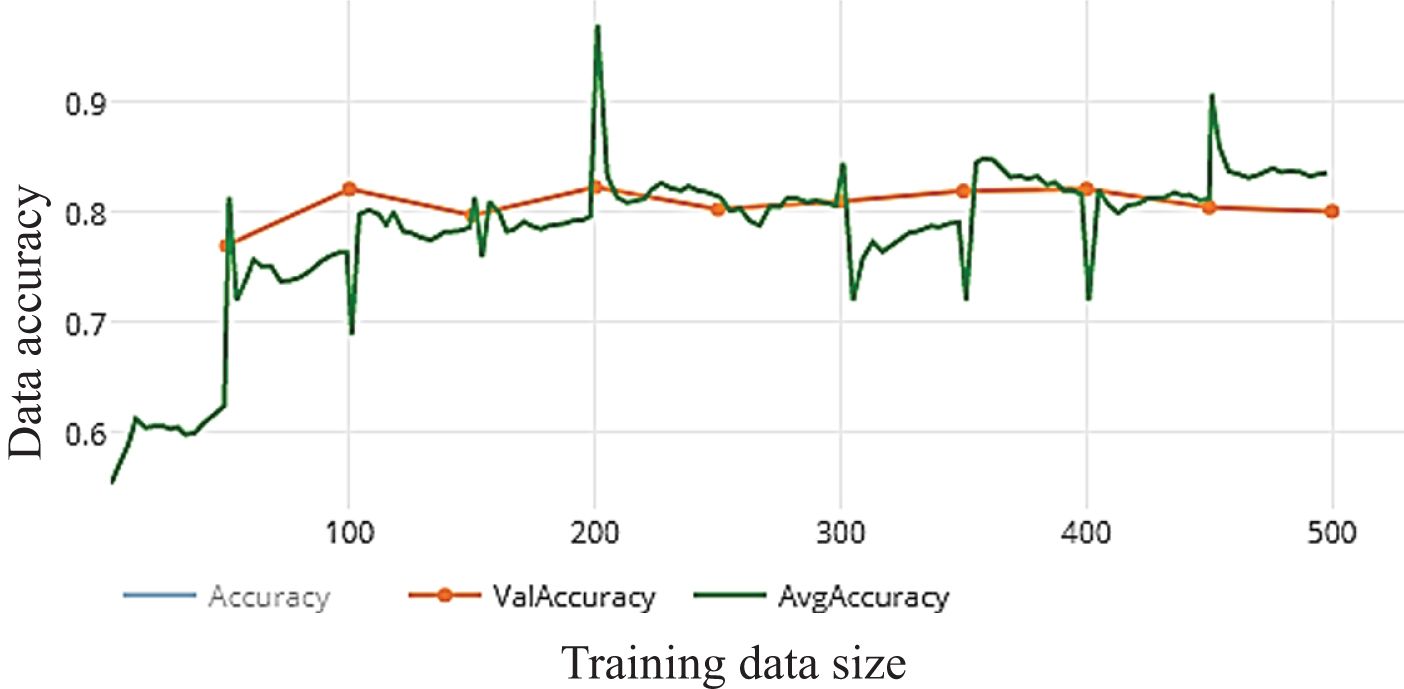

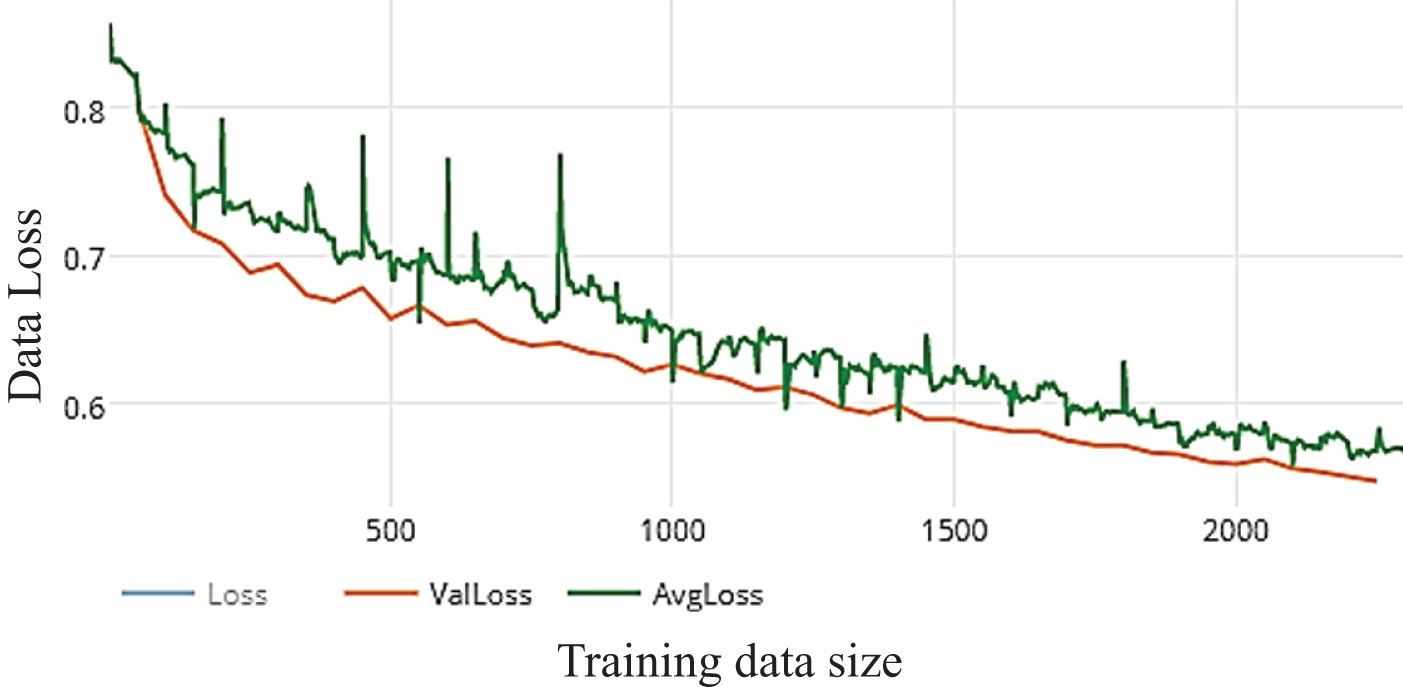

5.4.1 Inception V3

In this paper, we use Inception V3, which focuses on salient parts of the image that may vary greatly in size. The auxiliary classifiers did not contribute much until near the end of the training process, when precision was nearing saturation, so the Adadelta optimizer and batch normalization were added to the auxiliary classifiers to improve the network. After training the WDNN model based on the Inception V3 pre-trained model for only 10 epochs, the average accuracy is not satisfactory, as shown by the loss and accuracy results in Fig. 7.a and Fig. 7.b. It reached 86.26%, 84.34%, 84.5%, and 82.03% for accuracy, precision, recall, and F-score, respectively. To improve the overall accuracy, the number of epochs was increased to 50 and the results were observed. The performance increased to 96.19%, 95.28%, 96.8%, and 96.03% for accuracy, precision, recall, and F-score, respectively, as shown in Fig. 8.a and Fig. 8.b. The best performance of the model using Inception V3 was obtained after 100 epochs, reaching 98.47%, 98.68%, 97.83%, and 98.25% for accuracy, precision, recall, and F-score, respectively. Increasing the number of training epochs is an efficient way to enhance the performance of the model without overfitting, as shown in Fig. 9.a and Fig. 9.b.

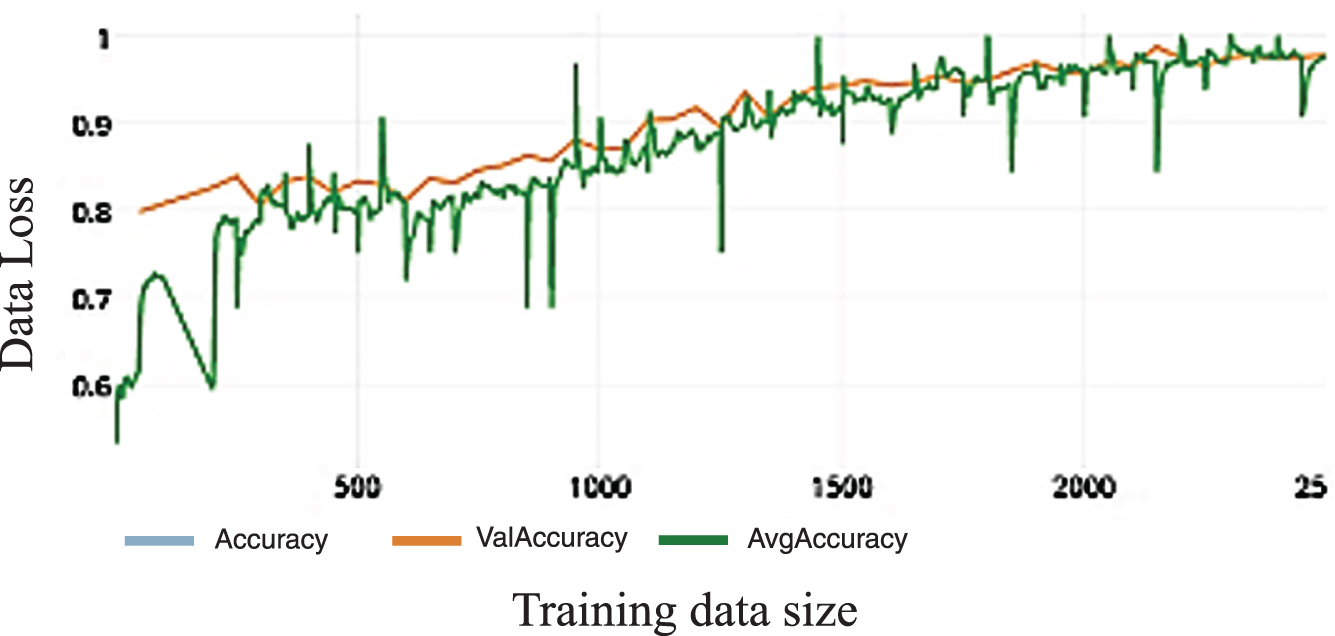

Fig. 7.a

Loss of the WDNN model based on Inception V3 after 10 training epochs.

Fig. 7.b

Average accuracy of the WDNN model based on Inception V3 after 10 training epochs.

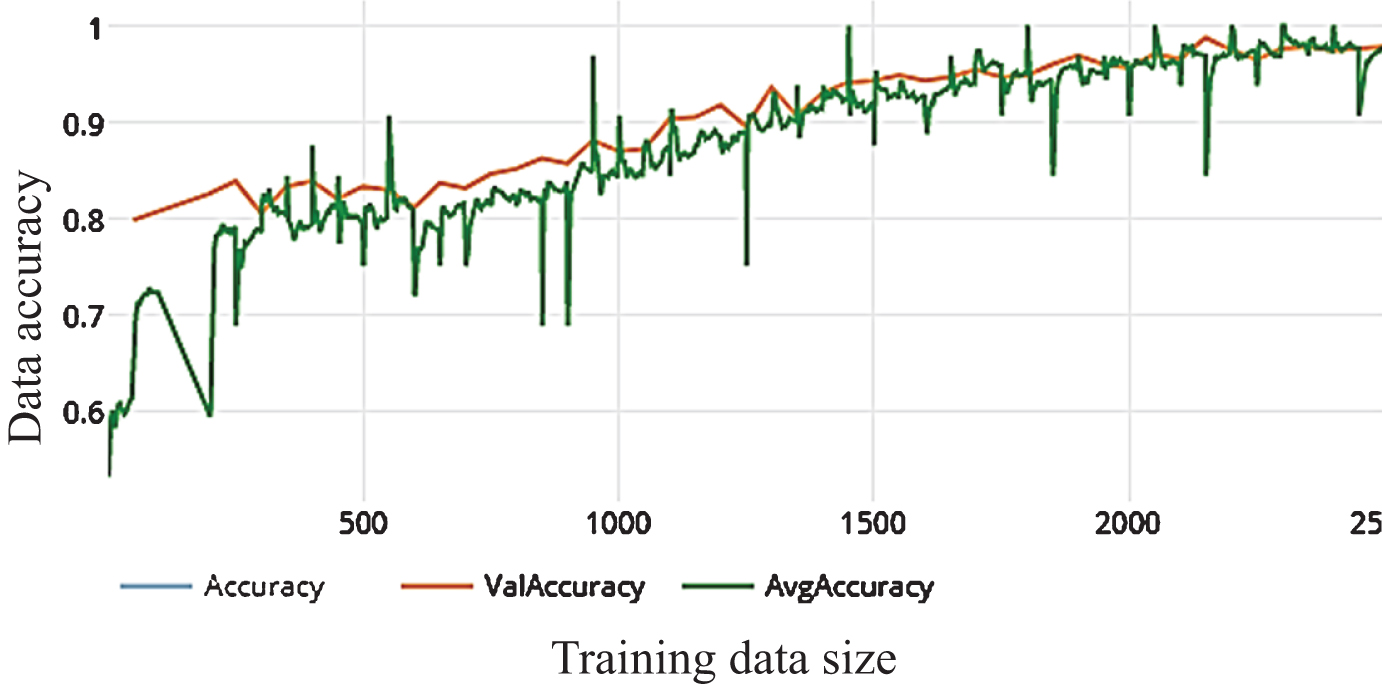

Fig. 8.a

Loss of the WDNN model based on Inception V3 after 50 training epochs.

Fig. 8.b

Average accuracy of the WDNN model based on Inception V3 after 50 training epochs.

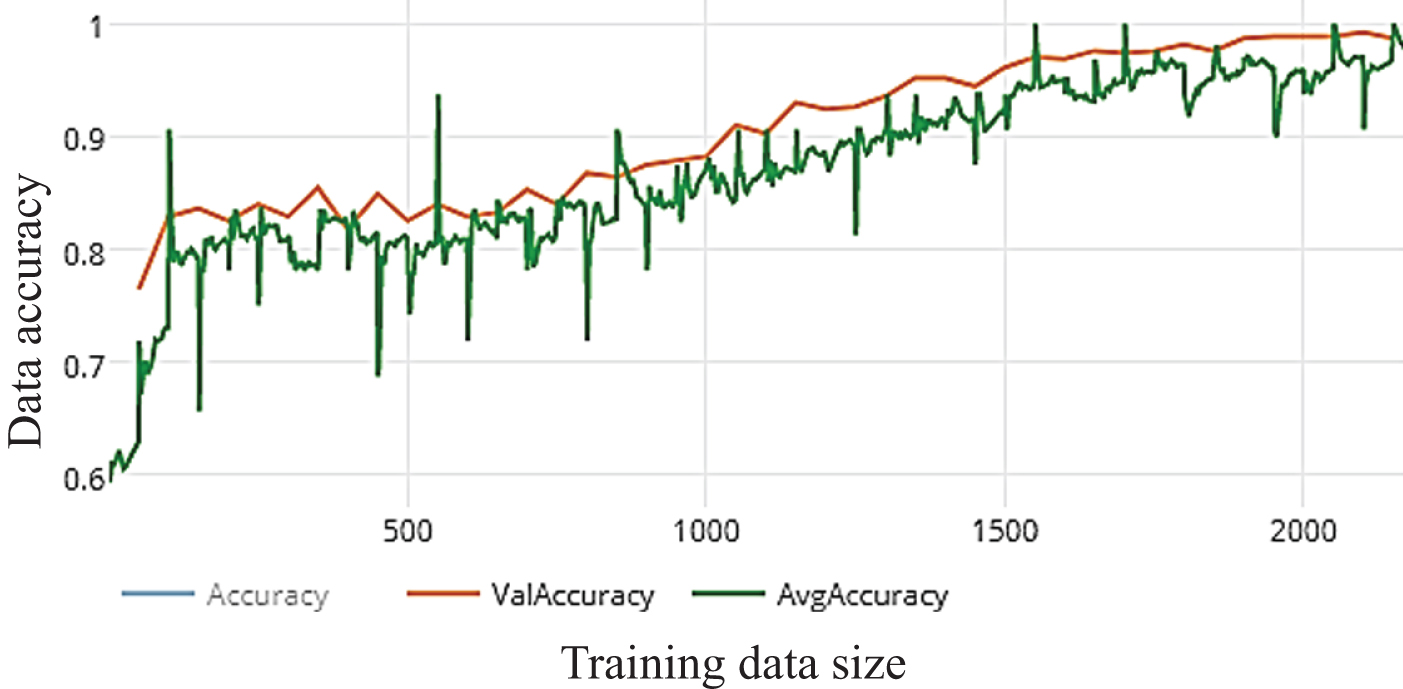

Fig. 9.a

Loss of the WDNN model based on Inception V3 after 100 training epochs.

Fig. 9.b

Average accuracy of the WDNN model based on Inception V3 after 100 training epochs.

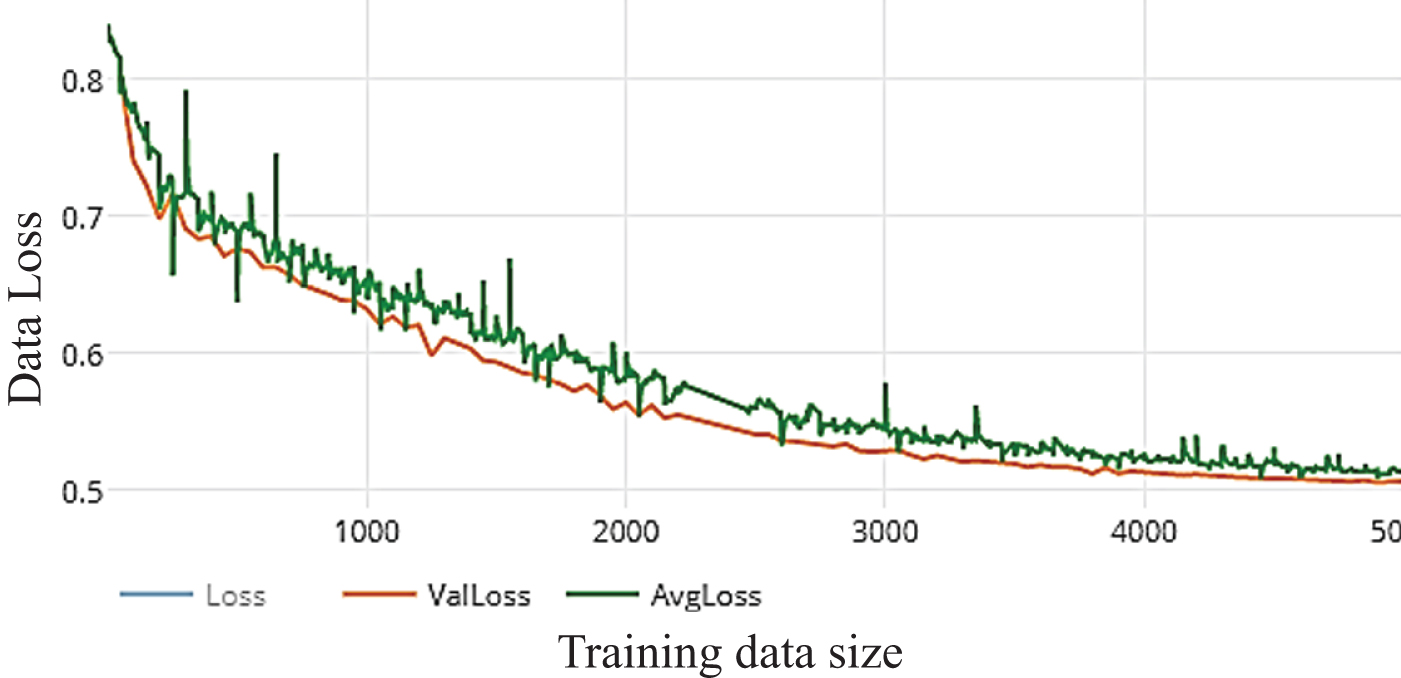

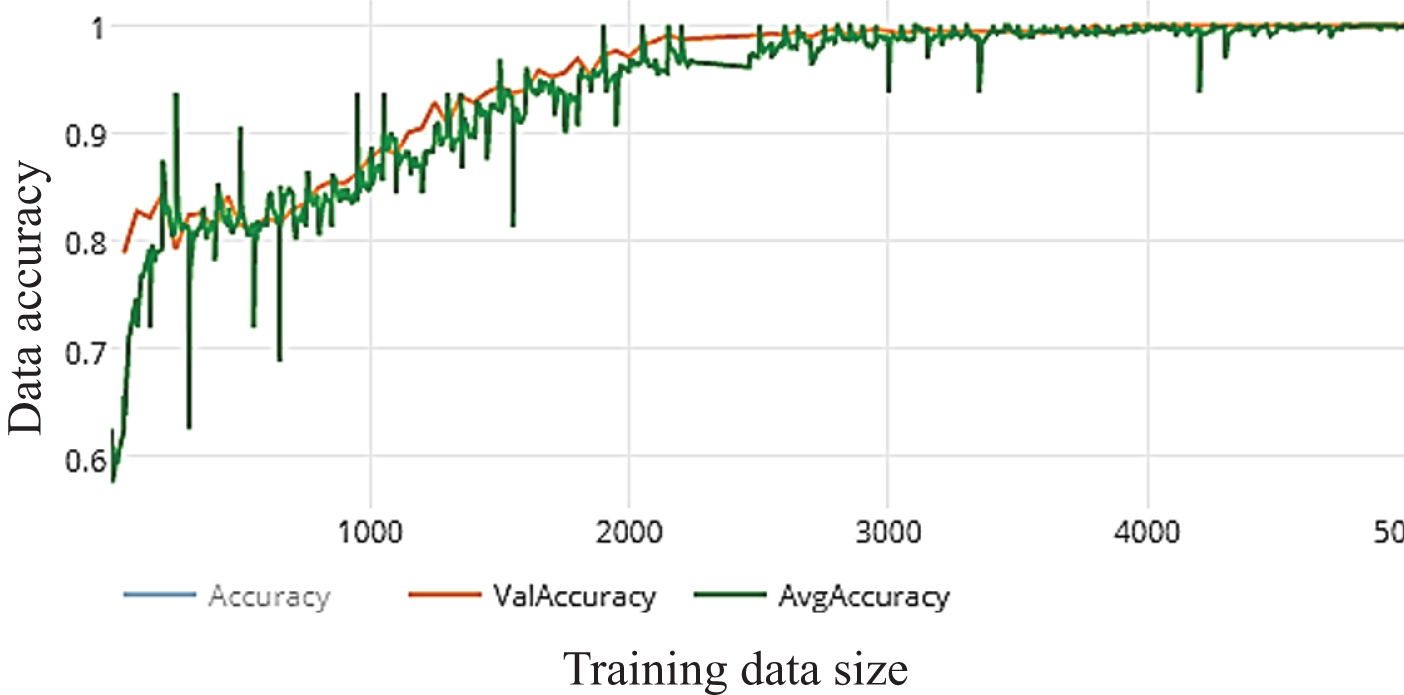

5.4.2 Residual Neural Network (ResNet50)

The residual (skip connection) idea is a very interesting extension to CNNs that has been shown empirically to increase performance in ImageNet classification. These layers can be used in other tasks requiring deep networks as well as in image classification. After training the WDNN model based on the ResNet50 pre-trained model for only 10 epochs, the average accuracy is not satisfactory, as shown by the loss and accuracy results in Fig. 10.a and Fig. 10.b. It reached 86.83%, 84.34%, 87.5%, and 87.5% for accuracy, precision, recall, and F-score, respectively. To improve the overall accuracy, the number of epochs was increased to 50 and the results were observed, as shown in Fig. 11.a and Fig. 11.b. The performance increased to 96.95%, 96.8%, 97.19%, and 96.99% for accuracy, precision, recall, and F-score, respectively. The best performance of the model using ResNet50 was obtained after 100 epochs, reaching 98.85%, 99.12%, 98.69%, and 98.91% for accuracy, precision, recall, and F-score, respectively. Increasing the number of training epochs is an efficient way to enhance the performance of the model without overfitting, as shown in Fig. 12.a and Fig. 12.b.

Fig. 10.a

Loss of the WDNN model based on ResNet (50) after 10 training epochs.

Fig. 10.b

Accuracy of the WDNN model based on ResNet (50) after 10 training epochs.

Fig. 11.a

Loss of the WDNN model based on ResNet (50) after 50 training epochs.

Fig. 11.b

Accuracy of the WDNN model based on ResNet (50) after 50 training epochs.

Fig. 12.a

Loss of the WDNN model based on ResNet (50) after 100 training epochs.

Fig. 12.b

Accuracy of the WDNN model based on ResNet (50) after 100 training epochs.

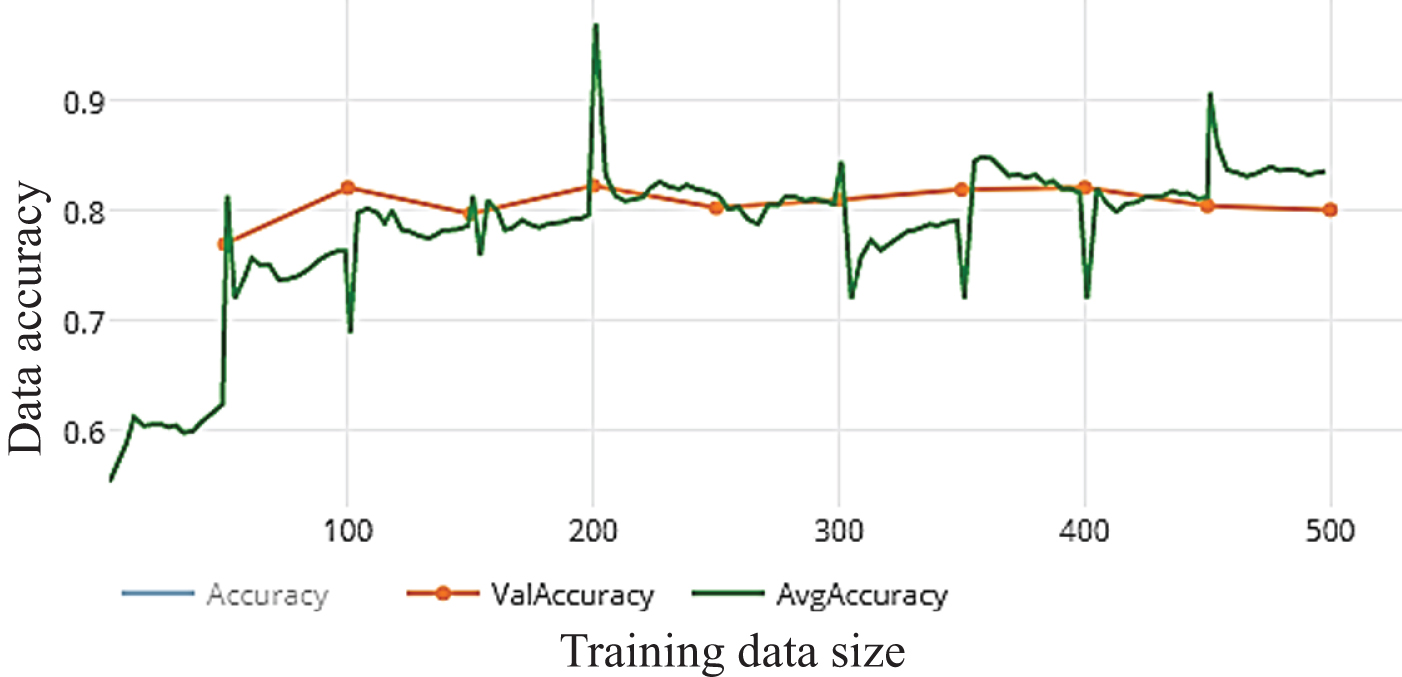

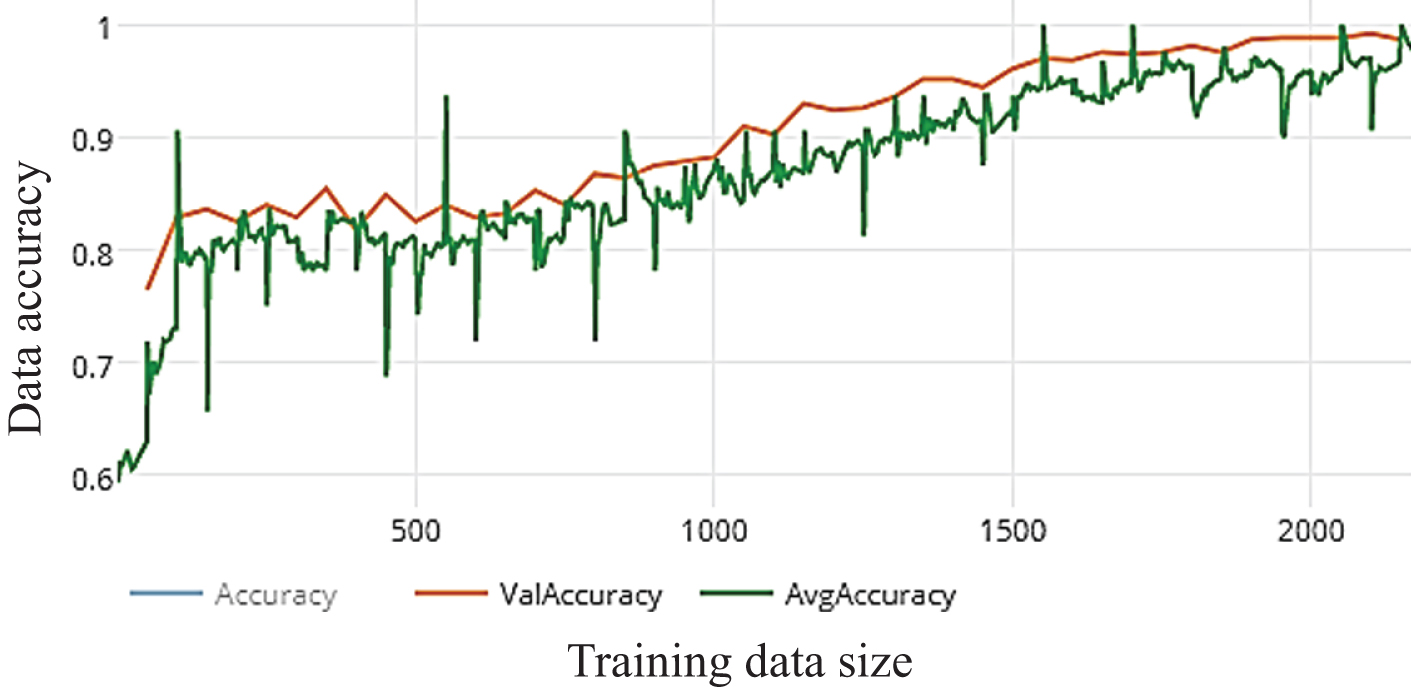

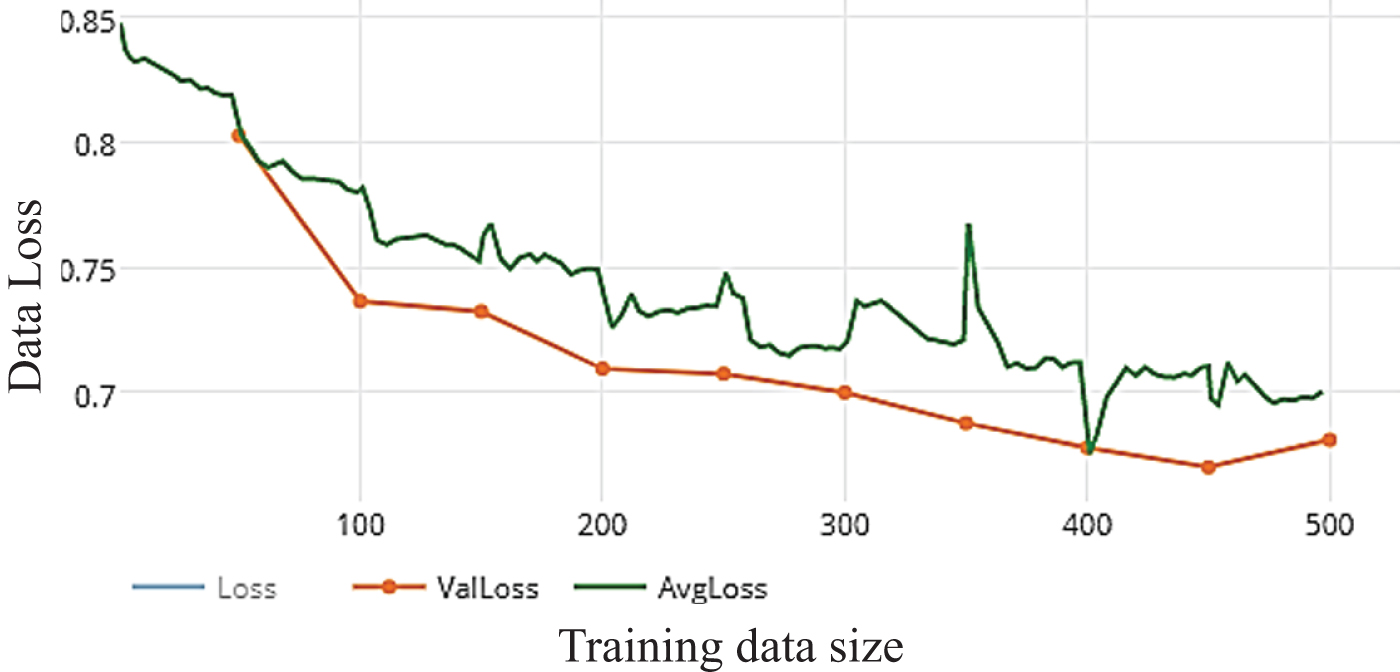

5.4.3 Visual geometry group (VGG19)

VGG focuses on smaller window sizes and strides in the first convolutional layer; the overall architecture is described above. VGG-19 has many weight layers, which leads to improved performance. After 10 epochs, it reached 87.79%, 87.5%, 87.5%, and 86.78% for accuracy, precision, recall, and F-score, respectively (values as listed in Table 2), as shown in Fig. 13.a and Fig. 13.b.

Fig. 13.a

Loss of the WDNN model based on VGG (19) after 10 training epochs.

Fig. 13.b

Accuracy of the WDNN model based on VGG (19) after 10 training epochs.

To improve the overall accuracy, the number of epochs was increased to 50 and the results were observed. The performance increased to 97.33%, 97.45%, 97.45%, and 97.45% for accuracy, precision, recall, and F-score, respectively, as shown in Fig. 14.a and Fig. 14.b. The best performance of the model using VGG19 was obtained after 100 epochs, reaching 99.046%, 98.684%, 99.12%, and 98.90% for accuracy, precision, recall, and F-score, respectively, as shown in Fig. 15.a and Fig. 15.b. Increasing the number of training epochs is an efficient way to enhance the performance of the model without overfitting.

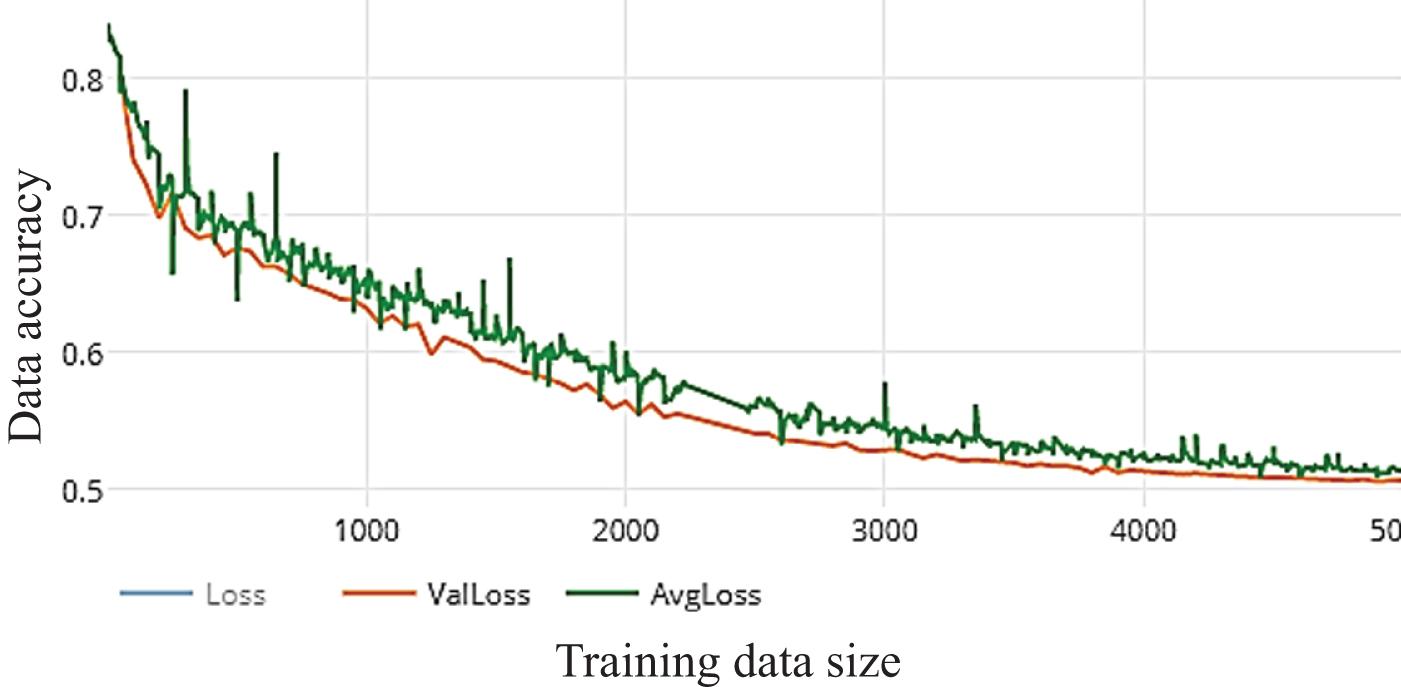

Fig. 14.a

Loss of the WDNN model based on VGG (19) after 50 training epochs.

Fig. 14.b

Accuracy of the WDNN model based on VGG (19) after 50 training epochs.

Fig. 15.a

Loss of the WDNN model based on VGG (19) after 100 training epochs.

Fig. 15.b

Accuracy of the WDNN model based on VGG (19) after 100 training epochs.

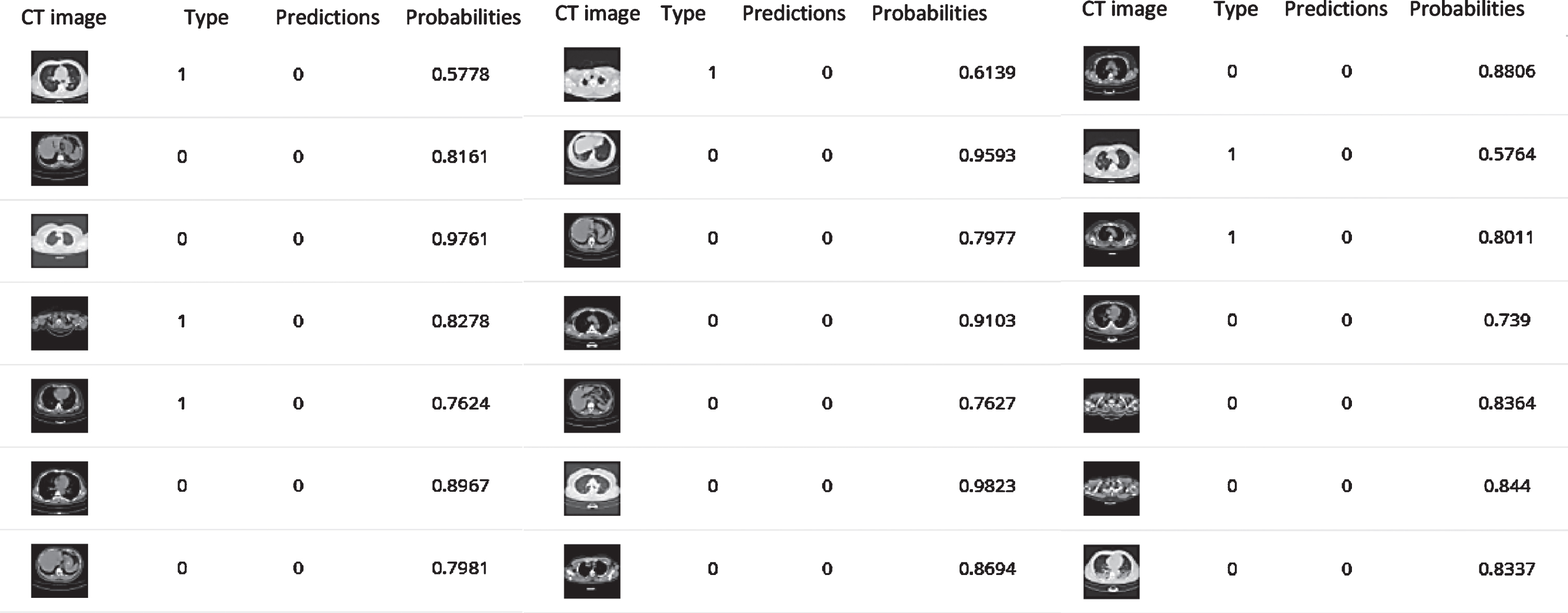

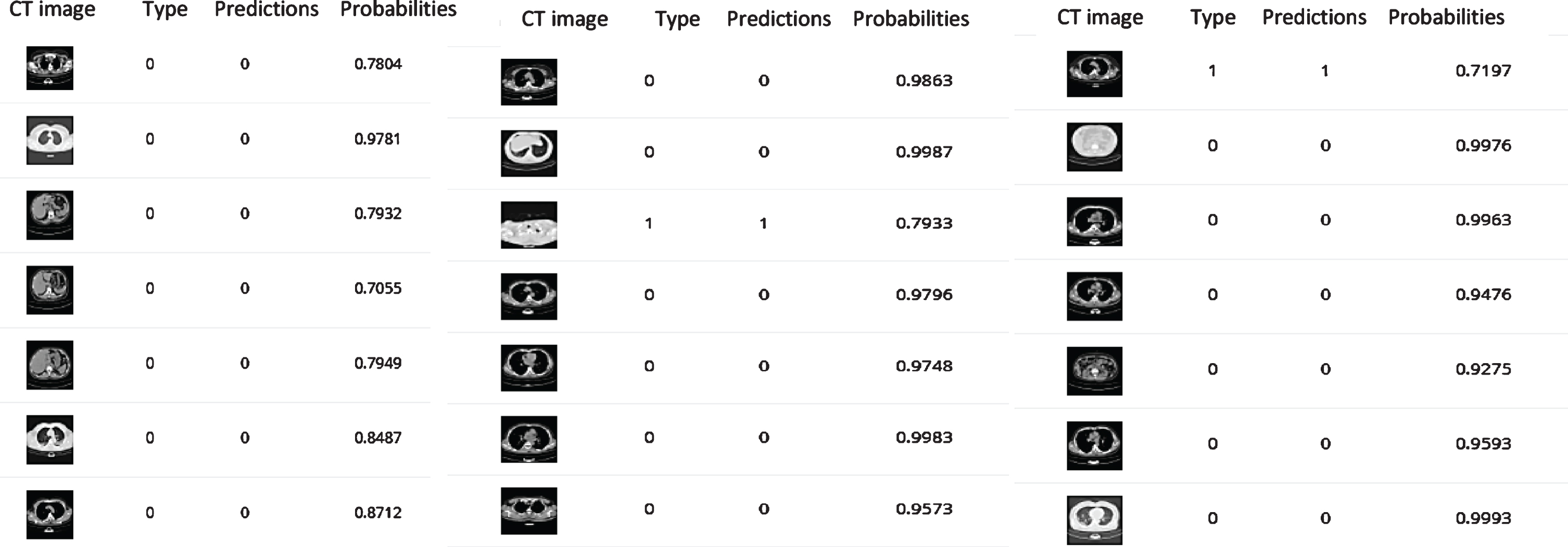

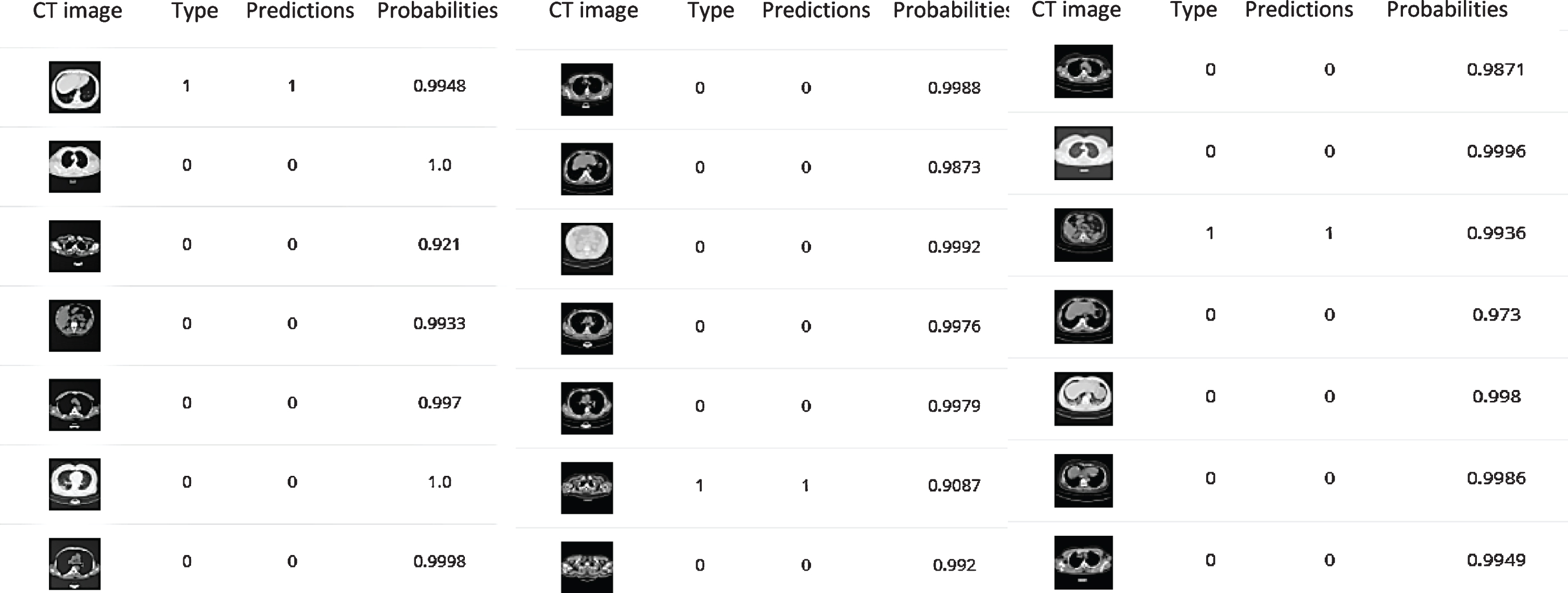

5.5 Confusion matrix

The confusion matrix supports predictive analysis by showing how a classifier has performed [42]. Confusion matrices are used to visualize important predictive measures such as accuracy, precision, recall, and F-score. They are useful because they give direct comparisons of values like true positives, false positives, true negatives, and false negatives. In contrast, a single classification metric like accuracy gives less information, as accuracy is simply the number of correct predictions divided by the total number of predictions. The predicted class probabilities of Inception V3, ResNet (50), and VGG (19) after 10 epochs of training are shown in Fig. 16, where the three pre-trained models differ somewhat in their predictions. The predicted class probabilities of Inception V3, ResNet (50), and VGG (19) after 50 epochs of training are shown in Fig. 17, where they improve on the first 10 epochs. Finally, the WDNN model was trained for 100 epochs on CT scan images of COVID-19, making it more robust and effective, as shown in Fig. 18, which gives the predicted class probabilities of Inception V3, ResNet (50), and VGG (19) after 100 epochs of training; accuracy, precision, recall, and F-score were calculated as mentioned above.
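
Counting the four cells of a binary confusion matrix from true and predicted labels can be sketched as below. Treating "Infected" as the positive class, and the label lists themselves, are assumptions for illustration.

```python
def confusion_counts(y_true, y_pred, positive="Infected"):
    """Return (TP, FP, TN, FN) for a binary problem."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            if truth == positive:
                tp += 1
            else:
                fp += 1
        else:
            if truth == positive:
                fn += 1
            else:
                tn += 1
    return tp, fp, tn, fn


# Hypothetical labels, not data from the paper.
y_true = ["Infected", "Infected", "Not Infected", "Not Infected", "Infected"]
y_pred = ["Infected", "Not Infected", "Not Infected", "Infected", "Infected"]
tp, fp, tn, fn = confusion_counts(y_true, y_pred)
accuracy = (tp + tn) / len(y_true)
```

The four counts separate the two kinds of error (a missed infection versus a false alarm), which a single accuracy figure cannot do.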

Fig. 16

The predicted class probabilities of Inception V3, ResNet (50), and VGG (19), respectively, after 10 epochs of training.

Fig. 17

The predicted class probabilities of Inception V3, ResNet (50), and VGG (19), respectively, after 50 epochs of training.

Fig. 18

The predicted class probabilities of Inception V3, ResNet (50), and VGG (19), respectively, after 100 epochs of training.

Table 2

| Pre-trained model | ACC (10 epochs) | Precision (10 epochs) | Recall (10 epochs) | F-score (10 epochs) | ACC (50 epochs) | Precision (50 epochs) | Recall (50 epochs) | F-score (50 epochs) |
|---|---|---|---|---|---|---|---|---|
| InceptionV3 | 86.26 | 84.34 | 84.5 | 82.03 | 96.19 | 95.28 | 96.8 | 96.03 |
| ResNet50 | 86.83 | 84.34 | 87.5 | 87.5 | 96.95 | 96.8 | 97.19 | 96.99 |
| VGG19 | 87.79 | 87.5 | 87.5 | 86.78 | 97.33 | 97.45 | 97.45 | 97.45 |

6 Discussion

Currently, machine learning, especially deep neural networks, has become more reliable and robust for detecting, diagnosing, and classifying COVID-19 cases. In this work, we used Inception V3 as well as ResNet50 and VGG19 to train and validate on the input features extracted from COVID-19 CT images. From the above results, we can conclude that the accuracy of the proposed WDNN model is determined by both loss and accuracy measures, which depend on the error on the data, including the loss function for the training, testing, and validation data. We found that the loss over 100 epochs indicated a more stable proposed model. The model achieved 99.046%, 98.684%, 99.119%, and 98.90% in terms of accuracy, precision, recall, and F-score, respectively. In this paper, we present the ability of AI to help in the detection of COVID-19. To our knowledge, this is a robust report among the studies developed to detect and characterize the progression of COVID-19. A rapidly developed AI-based automated CT image model can achieve high accuracy in the detection of COVID-19 cases. Using the deep learning image analysis algorithms developed, we achieved classification results for Infected vs. Not Infected cases per CT image on the dataset.

Table 3

Overall comparison between the pre-trained models and the WDNN architecture for automatic classification of the 2D CT scan COVID-19 dataset with and without preprocessing

| Pre-trained model (100 epochs) | ACC (%) | Precision (%) | Recall (%) | F-score (%) |
| --- | --- | --- | --- | --- |
| InceptionV3 | 98.47 | 98.68 | 97.83 | 98.25 |
| ResNet50 | 98.85 | 99.12 | 98.69 | 98.91 |
| VGG19 | 99.046 | 98.684 | 99.12 | 98.90 |
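As a consistency check on the 100-epoch results, the F-score column can be recomputed from the precision and recall columns. This is a sketch that assumes the F-score is the standard F1 measure (the harmonic mean of precision and recall); the helper name `f_score` and the dictionary layout are illustrative choices, while the numbers are copied from the table above.

```python
def f_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F-score) in percent, from the 100-epoch table.
table_100_epochs = {
    "InceptionV3": (98.68, 97.83, 98.25),
    "ResNet50": (99.12, 98.69, 98.91),
    "VGG19": (98.684, 99.12, 98.90),
}

# Recompute F1 for each model, rounded to two decimals like the table.
recomputed = {
    name: round(f_score(p, r), 2)
    for name, (p, r, _) in table_100_epochs.items()
}

# Largest absolute gap between recomputed and reported F-scores.
max_gap = max(
    abs(recomputed[name] - reported)
    for name, (_, _, reported) in table_100_epochs.items()
)
```

Under this assumption, the recomputed values agree with the reported F-scores to within about 0.01 percentage points, a gap that is consistent with rounding of the inputs.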

7 Conclusions

COVID-19 behaves similarly to viral pneumonia, and the spreading rate of the virus has made the situation hard to bring under control. According to other clinical studies, CT imaging of COVID-19 shows a variety of findings, and CT images help to diagnose COVID-19. This paper presented the WDNN model for classifying COVID-19 from CT images, built on the pre-trained models InceptionV3, ResNet50, and VGG19, which have proved to be effective and promising tools for extracting and classifying image features. The results were compared with both traditional machine learning methods and methods using CNNs, which reflects the feasibility of image classification based on deep learning. The related studies are generally medical studies, whereas this paper studied COVID-19 images from the classification perspective. To this end, the dataset needs to be enlarged. Machine learning methods should be applied more widely to abdominal CT images once such data are shared in the literature.

References

[1] | Huang C. , et al., Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China, Lancet 395: (10223) ((2020) ), 497–506. doi: 10.1016/S0140-6736(20)30183-5 |

[2] | Coudray N. , et al., Classification and mutation prediction from non–small cell lung cancer histopathology images using deep learning, Nat Med 24: (10) ((2018) ), 1559–1567. doi: 10.1038/s41591-018-0177-5 |

[3] | Dabeer S. , Khan M.M. and Islam S. , Cancer diagnosis in histopathological image: CNN based approach, Informatics Med Unlocked 16: ((2019) ), 100231. doi: 10.1016/j.imu.2019.100231 |

[4] | Gao K. , et al., Dual-branch combination network (DCN): towards accurate diagnosis and lesion segmentation of COVID-19 using CT images, Med Image Anal 67: ((2020) ), 101836. doi: 10.1016/j.media.2020.101836 |

[5] | Shi F. , et al., Large-Scale Screening of COVID-19 from Community Acquired Pneumonia using Infection Size-Aware Classification, (2020), [Online]. Available: http://arxiv.org/abs/2003.09860. |

[6] | Anthimopoulos M. , Christodoulidis S. , Ebner L. , Christe A. and Mougiakakou S. , Lung Pattern Classification for Interstitial Lung Diseases Using a Deep Convolutional Neural Network, IEEE Trans Med Imaging 35: (5) ((2016) ), 1207–1216. doi: 10.1109/TMI.2016.2535865 |

[7] | Li L. , et al., Using Artificial Intelligence to Detect COVID-19 and Community-acquired Pneumonia Based on Pulmonary CT: Evaluation of the Diagnostic Accuracy, Radiology 296: (2) ((2020) ), E65–E71. doi: 10.1148/radiol.2020200905 |

[8] | Yamashita R. , Nishio M. , Do R.K.G. and Togashi K. , Convolutional neural networks: an overview and application in radiology, Insights Imaging 9: (4) ((2018) ), 611–629. doi: 10.1007/s13244-018-0639-9 |

[9] | Shams M.Y. , Elzeki O.M. , Abd Elfattah M. , Medhat T. and Hassanien A.E. , Why Are Generative Adversarial Networks Vital for Deep Neural Networks? A Case Study on COVID-19 Chest X-Ray Images. Springer International Publishing, ((2020) ). |

[10] | Hoi S.C.H. , Jin R. , Zhu J. and Lyu M.R. , Batch mode active learning and its application to medical image classification, ACM Int Conf Proceeding Ser 148: ((2006) ), 417–424. doi: 10.1145/1143844.1143897 |

[11] | Hosny K.M. , Kassem M.A. and Foaud M.M. , Skin Cancer Classification using Deep Learning and Transfer Learning, 2018 9th Cairo Int. Biomed. Eng. Conf. CIBEC 2018 - Proc., (2019), 90–93. doi: 10.1109/CIBEC.2018.8641762 |

[12] | “Deep learning for computer-aided medical diagnosis,” Multimed. Tools Appl 79: 21–22 ((2020) ), 15073–15073. doi: 10.1007/s11042-020-08940-4 |

[13] | Song Y. , et al., Deep learning Enables Accurate Diagnosis of Novel Coronavirus (COVID-19) with CT images, (2020). doi: 10.1101/2020.02.23.20026930 |

[14] | Wang S. , et al., A deep learning algorithm using CT images to screen for Corona Virus Disease (COVID-19), (2020), 1–19. doi: 10.1101/2020.02.14.20023028 |

[15] | Christodoulidis S. , Anthimopoulos M. , Ebner L. , Christe A. and Mougiakakou S. , Multisource Transfer Learning with Convolutional Neural Networks for Lung Pattern Analysis, IEEE J Biomed Heal Informatics 21: (1) ((2017) ), 76–84. doi: 10.1109/JBHI.2016.2636929 |

[16] | Jin S. , et al., AI-assisted CT imaging analysis for COVID-19 screening: Building and deploying a medical AI system in four weeks, (2020), 1–22. doi: 10.1101/2020.03.19.20039354 |

[17] | Zheng C. , et al., Deep Learning-based Detection for COVID-19 from Chest CT using Weak Label, (2020), 1–13. doi: 10.1101/2020.03.12.20027185 |

[18] | Li X. , Shen H. , Zhang L. , Zhang H. , Yuan Q. and Yang G. , Recovering quantitative remote sensing products contaminated by thick clouds and shadows using multitemporal dictionary learning, IEEE Trans Geosci Remote Sens 52: (11) ((2014) ), 7086–7098. doi: 10.1109/TGRS.2014.2307354 |

[19] | Rashad M.Z. , Shams M.Y. and Nomir O. , Iris Recognition Based on LBP and, 3: (5) ((2011) ), 43–52. |

[20] | Shams M.Y. , Sarhan S.H. and Tolba A.S. , Adaptive deep learning vector quantisation for multimodal authentication, J Inf Hiding Multimed Signal Process 8: (3) ((2017) ), 702–722. |

[21] | Chao H. , et al., Integrative Analysis for COVID-19 Patient Outcome Prediction, Med Image Anal 67: ((2020) ), 101844. doi: 10.1016/j.media.2020.101844 |

[22] | Xue D. , et al., An Application of Transfer Learning and Ensemble Learning Techniques for Cervical Histopathology Image Classification, IEEE Access 8: ((2020) ), 104603–104618. doi: 10.1109/ACCESS.2020.2999816 |

[23] | Song Y. , et al., Deep learning Enables Accurate Diagnosis of Novel Coronavirus (COVID-19) with CT images, no. February, (2020), doi: 10.1101/2020.02.23.20026930 |

[24] | Qin J. , Pan W. , Xiang X. , Tan Y. and Hou G. , A biological image classification method based on improved CNN, Ecol Inform 58: ((2020) ), 101093. doi: 10.1016/j.ecoinf.2020.101093 |

[25] | Gozes O. , Frid M. , Greenspan H. and Patrick D. , Rapid AI Development Cycle for the Coronavirus (COVID-19) Pandemic: Initial Results for Automated Detection & Patient Monitoring using Deep Learning CT Image Analysis, arXiv:2003.05037, (2020), [Online]. Available: https://arxiv.org/ftp/arxiv/papers/2003/2003.05037.pdf. |

[26] | Ko H. , et al., COVID-19 pneumonia diagnosis using a simple 2d deep learning framework with a single chest CT image: Model development and validation, J Med Internet Res 22: (6) ((2020) ), 1–13. doi: 10.2196/19569 |

[27] | tao Zhang H. , et al., Automated detection and quantification of COVID-19 pneumonia: CT imaging analysis by a deep learning-based software, Eur J Nucl Med Mol Imaging 47: (11) ((2020) ), 2525–2532. doi: 10.1007/s00259-020-04953-1 |

[28] | Sajana T. and Narasingarao M.R. , Machine learning techniques for malaria disease diagnosis - A review, J Adv Res Dyn Control Syst 9: (6) ((2017) ), 349–369. |

[29] | Zhang J. , Xie Y. , Wu Q. and Xia Y. , Medical image classification using synergic deep learning, Med Image Anal 54: ((2019) ), 10–19. doi: 10.1016/j.media.2019.02.010 |

[30] | Hashmi M.R. , Riaz M. and Smarandache F. , m-Polar Neutrosophic Topology with Applications to Multi-criteria Decision-Making in Medical Diagnosis and Clustering Analysis, Int J Fuzzy Syst 22: (1) ((2020) ), 273–292. doi: 10.1007/s40815-019-00763-2 |

[31] | El-Rashidy N. , El-Sappagh S. , Islam S.M.R. , El-Bakry H.M. and Abdelrazek S. , End-To-End Deep Learning Framework for Coronavirus (COVID-19) Detection and Monitoring, Electronics 9: (9) ((2020) ), 1439. doi: 10.3390/electronics9091439 |

[32] | Jinia A.J. , et al., Review of Sterilization Techniques for Medical and Personal Protective Equipment Contaminated with SARS-CoV-2, IEEE Access 8: ((2020) ), 111347–111354. doi: 10.1109/ACCESS.2020.3002886 |

[33] | Mohamed A.R. , Dahl G.E. and Hinton G. , Acoustic modeling using deep belief networks, IEEE Trans Audio Speech Lang Process 20: (1) ((2012) ), 14–22. doi: 10.1109/TASL.2011.2109382 |

[34] | Wu C. , Li Y. , Zhao Z. and Liu B. , Image classification method rationally utilizing spatial information of the image, Multimed Tools Appl 78: (14) ((2019) ), 19181–19199. doi: 10.1007/s11042-019-7254-8 |

[35] | Cen F. and Wang G. , Boosting Occluded Image Classification via Subspace Decomposition-Based Estimation of Deep Features, IEEE Trans. Cybern 50: (7) ((2020) ), 3409–3422. doi: 10.1109/TCYB.2019.2931067 |

[36] | He K. , Zhang X. , Ren S. and Sun J. , Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition, IEEE Trans Pattern Anal Mach Intell 37: (9) ((2015) ), 1904–1916. |

[37] | Meng Q. , et al., Weakly Supervised Estimation of Shadow Confidence Maps in Fetal Ultrasound Imaging, IEEE Trans Med Imaging 38: (12) ((2019) ), 2755–2767. doi: 10.1109/TMI.2019.2913311 |

[38] | Rajaraman S. , et al., Pre-trained convolutional neural networks as feature extractors toward improved malaria parasite detection in thin blood smear images, Peer J 2018: (4) ((2018) ), doi: 10.7717/peerj.4568 |

[39] | Sedik A. , et al., Deploying machine and deep learning models for efficient data-augmented detection of COVID-19 infections, Viruses 12: (7) ((2020) ), 1–29. doi: 10.3390/v12070769 |

[40] | Elaziz M.A. , Hosny K.M. , Salah A. , Darwish M.M. , Lu S. and Sahlol A.T. , New machine learning method for image-based diagnosis of COVID-19, PLoS One 15: (6) ((2020) ), doi: 10.1371/journal.pone.0235187 |

[41] | Within the Lack of Chest COVID-19 X-ray Dataset: A Novel Detection Model Based on GAN and Deep Transfer Learning, Symmetry ((2020) ). |

[42] | Sarhan S. , Nasr A.A. and Shams M.Y. , Multipose Face Recognition-Based Combined Adaptive Deep Learning Vector Quantization, Comput Intell Neurosci (2020). doi: 10.1155/2020/8821868 |