A creative prototype illustrating the ambient user experience of an intelligent future factory

Abstract

This article introduces user experience research that has been carried out by evaluating a video-illustrated science fiction prototype with process control workers. Essentially, the prototype ‘A remote operator’s day in a future control center in 2025’ was aimed at discovering opportunities for new interaction methods and ambient intelligence for the factories of the future. The theoretical objective was to carry out experience design research, which was based on explicit ambient user experience goals in the nominated industrial work context. This article describes the complete creative prototyping process, starting from the initial user research that included evaluations of current work practices, technological trend studies and co-design workshops, and concluding with user research that assessed the final design outcome, the science fiction prototype. The main contribution of the article is on the ambient user experience goals, the creation process of the video-illustrated science fiction prototype, and on the reflection of how the experience-driven prototype was evaluated in two research setups: as video sequences embedded in a Web survey, and as interviews carried out with expert process control workers. For the science fiction prototyping process, the contribution demonstrates how the method may employ video-illustration as a means for future-oriented user experience research, and how complementary user-centered methods may be used to validate the results.

1.Introduction

Enabled by advanced digitalization, industrial internet and intelligent technologies, such as ambient intelligence (AmI), it is expected that the 4th industrial revolution, often referred to as Industry 4.0, will soon be on its way [28,31]. In general, it is expected that Industry 4.0 will benefit from the AmI technologies and result e.g. in shorter development periods, individualization in demand for the customers, flexibility, decentralization and resource efficiency. In the production processes, there will be significantly greater demands made of all members of the workforce, in terms of managing complexity, abstraction and problem-solving [19]. For the industrial workers, the revolution is expected to provide opportunities through the qualitative enrichment of the factory work: a more interesting working environment, greater autonomy and opportunities for self-development. Subsequently, the employees are likely to act much more on their own initiative, to possess excellent communication skills and to organize their personal work flow; i.e. in the future factories they are expected to act as strategic decision-makers and flexible problem-solvers [10].

The industry transformation is anticipated to be most relevant in the manufacturing industry, but it will also affect such industrial sectors as process control work, which is the main context of this article. The design research introduced here presents the idea that future control workers will monitor and supervise AmI systems with new interaction methods, and that the work tasks will be shared flexibly between the systems and human workers. The research has taken the stance that, in order to succeed, Industry 4.0 requires more than merely introducing new technologies on the factory floor. In essence, developing sustainable solutions calls for a shared vision of the future, which requires a clear and extensive view of how the new technologies will be utilized and what kind of work roles and practices will emerge as a result.

So as to create a shared vision of the future process control work, the research has focused on user experience (UX) design and employed a method called science fiction prototyping (SFP) [17] in delivering its design outcome. The article explains in detail the UX investigations that have followed the SFP framework [12,13], yet the main focus is on illustrating how the UX research has been implemented in a video-illustrated science fiction prototype, entitled ‘A remote operator’s day in a future control center in 2025’, in which AmI, computing and design play a critical role.

For the AmI community, this contribution is essential, as it demonstrates UX research resulting from the user-centered design (UCD) approach to ambient intelligence, which is an important topic raised by Aarts and De Ruyter [1] in their call for new research perspectives on AmI. In addition, the design research contributes to the second order ambient intelligence, which advocates new forms of experience, curiosity and engagement in AmI solutions [5]. Essentially, the article tackles a challenging problem in the barrier reduction for Industry 4.0 in the inclusion of smart intuitive systems explicitly targeted at process control work in future process plants. The main objective is to provide a practical example of the creative UX design methodology for early engagement with participants in future AmI systems.

2.Background of the study and key literature

The user experience (UX) research was seen to be timely and relevant in this case study, as, overall, the UX approach is currently receiving growing attention in the development of industrial working environments and services [18,44]. Basically, the UX approach suggests that, in contrast to problem- or technology-driven design, user experience should be the main force in driving the design [9,24,39]. In general, the aim is to guide the design towards positive and satisfying experiences that help in communicating important objectives, as proposed e.g. by [9,15,40,45]. In an industrial work context, the investigations have usually focused on a thorough understanding of what the employees want to achieve in their work, and how this can best be supported. Accordingly, in this domain, UX has been interpreted explicitly as: “The way a person feels about using a product, service, or system in a work context, and how this shapes the image of oneself as a professional” [18]. So far, the main difficulty in adopting the UX-driven design approach in a technology-driven industry has been the fact that the technological skills in a company often dictate the design space [38]. Therefore, the core proposition in UX design, ‘experience before product’ [15, p. 63], has not been realized. That is the reason why the research in this article has encouraged the idea that UX design investigations and outcomes should initially be in balance with the brand and image of the company and involve the characteristics of the services that are generally valued by its customers. To achieve this, the important experiences have been pursued by defining explicit ambient UX goals to which the industrial partner and the research group have committed themselves.

As for the primary means to create, deliver and evaluate the UX goals, the study has employed the science fiction prototyping (SFP) method that B.D. Johnson originally introduced as a tool for intelligent environment (IE) research [16,17]. After the method launch, the great majority of the SF-prototypes were published within the IE domain, although the method has later been widely adopted also by other fields, such as futures studies, foresight and business studies (a full literature synthesis on the SF-prototype topics can be found in [25]). It should be noted that the relation between science fiction and science fact has also been identified simultaneously by scholars, technology designers and researchers from diverse disciplines, e.g. by [4,6,7,14,30,37,41,42].

Principally, the prototypes created by the SFP method are stories grounded in current science and engineering research that are written for the purpose of acting as prototypes for people to explore a wide variety of futures [17]. In order to justify the use of the method in a UX research context, reference is made here to Forlizzi and Battarbee [9], who have confirmed that stories and storytelling provide a solid basis for UX research. They explain that, as a repository of experience, stories contain almost everything that is required for a deep, appreciative understanding of the strengths and weaknesses of a service, as well as what needs to be redesigned for the future. In this context, the SFP method seemed particularly expedient, since, as a design tool, it allows one to study alternative, potential futures and illustrate how to interact with AmI and emerging technologies after the transformation of the industrial work environment.

The earlier SF-prototypes that have referred to UX design can be found e.g. in the work of Egerton et al. [8] and Graham [11], but the role of the UX design has not been systematically described in either of those. Correspondingly, many other prototypes make reference to the broader concerns of UCD, e.g. [36,43,47], but do not explain in detail how these findings are converted into prototype creation. The most suitable previous example of a UX-driven SFP process was found in [23]. The approach in that UX research has nevertheless been introduced in a very different research context, and as it does not include the critical involvement of the company brand in the process, the background for the design process was sought from elsewhere, namely business sciences. In that domain, Wu has introduced “imagination workshops” [46] and Zheng & Callaghan “Diegetic Innovation Templating” (DiT) [49] as potential SFP creation processes. The disadvantage of those for this research, however, seemed to be that they employed existing science fiction as their primary source of inspiration. Ultimately, the research for this article found solid ground for the UX-driven process in the framework introduced by Graham et al. [12,13]. This SFP framework intensifies the method by “expanded consumer experience development”, which seemed to be the most convenient approach to ambient UX design research.

3.Methodology – Design of the study and used methods

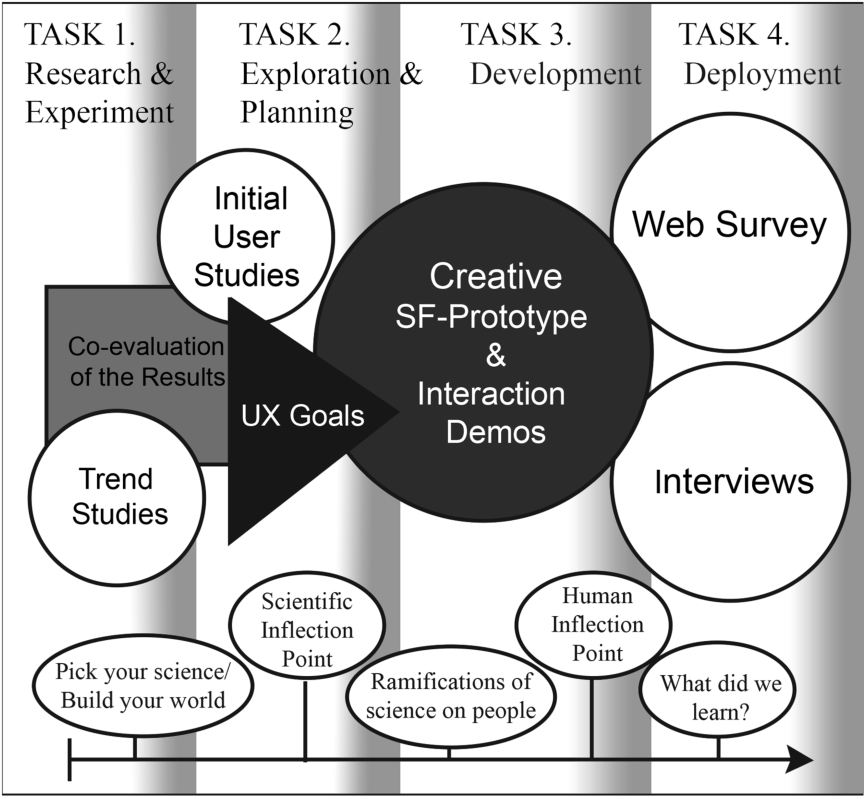

Accordingly, the research for this article has employed the SFP framework by Graham et al. for its UX design process and Johnson’s SFP method for creating its main design outcome, the SF-prototype. In brief, the SFP framework includes four tasks:

– Research and experimentation (what)

– Exploration and planning (who)

– Development (how), and

– Deployment

The first task of the framework is intended to support the world building process of the prototype by research and experimentation. In the practical UX research, during this task the research group investigated trend studies (business, technology and societal trends) and organized co-design workshops for selecting the most suitable technologies and topics to be explored in the prototype. The second task involves people in the process by continuing the exploration and planning with that focus. In order to determine the specific people and locations in the nominated process control work, the research group conducted preliminary user studies on location. In the framework, this task concludes with an experience specification, which in the study has been understood as the defining of explicit ambient UX goals for the process control work.

In contemplating the third task, the development of the SF-prototype, the research group arranged several iterative workshops, in which the manuscript for the prototype was accomplished. The creation process rigorously followed Johnson’s SFP method, which consists of five fundamental steps [16]:

1. Select a technology, science or issue to be explored using the prototype. Set up the world in question; introduce people and locations.

2. Introduce the scientific inflection point.

3. Explore the science’s implications and ramifications for the world.

4. Introduce the human inflection point with the technology; modifications or fixing the problem; a new area of experimentation.

5. Explore the implications, solution or lessons learnt.

In general, the use of the method results in an SFP that takes a written form, but in this case it was decided that the outcome would consist of video sequences and supportive interaction demos. The final step of the method, “exploring the implications, solution or lessons learnt”, may also be understood as the last, fourth task in the SFP framework, the deployment. In the study, this part received special attention, as two complementary user evaluation setups were organized for evaluating the SFP: a Web survey and expert interviews.

To summarize, in the pursuit of creating, delivering and evaluating the SFP the design process was constructed from the following actions (see Table 1).

Table 1

A summary of how the study was designed

| Framework task | Actions in the study |
| --- | --- |
| Task 1. Research and experimentation | Trend studies; co-design workshops |
| Task 2. Exploration and planning | User studies in the nominated industrial work context; defining of the ambient UX goals |
| Task 3. Development | Workshops for creating the manuscript; creating of the SFP video; creating the supportive interaction demos |
| Task 4. Deployment | User evaluations by a Web survey; user evaluations by expert interviews |

Figure 1 explicates how the timeline of the case study is attached to the SFP framework and SFP method.

Fig. 1.

Design process: on top are the tasks of the SFP framework, in the middle the case study settings, and at the bottom, the steps of the SFP method.

As the objective of the research was to carry out UX investigations by creative prototyping, the research questions were formed around this problem-space. Consequently, the design research was pursued in order to answer the following questions:

– How to carry out UX-driven research by employing the SFP method and SFP framework

– How to create and deliver video-illustrated SFP with ambient UX goals

– How to evaluate the ambient UX goals embedded in the SFP and thereby validate the research

The remainder of this article will follow the structure of the SFP framework, at first, with a detailed description of the process, methods used and data sets of each of the tasks, and subsequently, by delivering the results and outcome of the process.

3.1.Research and experimentation

In the initial phase of the process, the research group arranged a series of co-design workshops for deliberating the world building procedure of the first framework task. The process began with a trend analysis that studied business, technology and the general societal trends of the nominated process control work domain. After that, the results were shared within the first co-design workshop, which involved researchers and company representatives. The participants assessed the importance of the trends presented, and the most popular of them were used as the basis for a discussion in groups that identified important themes relating to user experiences and possible technical solutions that supported them. In the concluding workshops, the most interesting concepts, from academic and business perspectives, were further developed into usage scenarios. As a reference, in the SFP framework, conceptual prototyping is encouraged as the outcome of the first task.

3.2.Exploration and planning

The first user research in an actual location focused on what kinds of experiences people currently had during the process control work, and what kinds of positive experiences they expected to have in the future. The research was carried out in an oil refinery focusing on the production of advanced, low-emission traffic fuels in Finland (details omitted to guarantee participant anonymity), in the autumn of 2012. The research was conducted in situ in the control center where the operators worked; 23 operators participated in the research (20 male/3 female; with work experience ranging from 1–15 years). The research consisted of a contextual inquiry [2], a user experience significance questionnaire, and a critical experience interview [32]. There were altogether six contextual inquiry sessions and five critical experience interviews. The research methods were selected so that the user and work experiences were handled both directly and indirectly during the information gathering.

3.3.Development

During the workshops, the research group considered alternative means for describing and illustrating the UX goals by using the SFP method. To support this aim, the means were selected primarily to be video sequences and, secondarily, interaction demos, which were hypothesized to provide an effective delivery of the content for the evaluation participants. Videos were chosen to illustrate, in particular, experiences relating to AmI, new technical possibilities for remote control work, the remote presence of employees and new collaboration practices. The videos were also considered to be an effective way to communicate the overall design, which was intended to be a comfortable and flexible working space that supported collaboration. The interactive demo was created for speech- and gesture-based interaction. For this work, the fundamental framework came from [29]. The screenplay was collaboratively developed by means of visual scripts and early test videos, as these techniques were identified as being relevant during the trend analysis.

The video-illustrated SFP ‘A remote operator’s day in a future control center in 2025’ included altogether six video sequences. The iterative work was carried out by a team comprising researchers, company representatives and video production professionals. To make the videos, the research group first organized a workshop for creating the screenplays for the video sequences. For this work, the grounding framework came from [35]. The sequences were filmed in an interactive collaborative environment that was staged with a set of monitors, wall-sized projected displays and large touchscreens. The imaginary visual content was exclusively created for all the displays seen on the video. Technically, the videos were shot by using a combination of minimally interactive prototypes and a green screen technique, where envisioned screen content was added to the video at the post production stage. Display contents were added by using digital compositing, and animations, e.g. an on-screen cursor following hand pointing, were synchronized to the filmed material. Spoken dialogue and a narrator’s voice were added into the video at post production.

The speech- and gesture-based interaction demo was implemented by adding new interaction modalities into an existing process automation system and, consequently, the prototype did not exactly match the interactions seen in the video-illustrated SFP. However, the same automation system was in use in the organization where the on-site evaluations took place, so the participants were already familiar with the system and could, therefore, focus simply on the new interaction techniques. Technically, the supportive interaction demos were implemented by using Microsoft Kinect and Microsoft Speech Recognition. A wireless clip-on microphone attached to the user’s clothes was used for the speech input. The prototype enabled window and view manipulation; participants could point at windows using hand gestures and, using an on-screen pointer, grab and move windows by closing a pointing hand into a fist, resize windows by grabbing with two hands, close and resize windows with speech, and point at and change views and open new ones by using speech commands. The actual process control operations were omitted from the supported functionality.
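The mapping from gestures and speech to window operations described above can be pictured with a minimal sketch. It does not reproduce the demo's implementation and does not use the Kinect or speech recognition APIs; the `HandState` structure, the function names, the window identifiers and the spoken phrases are all hypothetical and introduced here only for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class HandState:
    """Hypothetical per-frame summary of what a hand tracker reports."""
    left_closed: bool            # left hand closed into a fist
    right_closed: bool           # right hand closed into a fist
    pointing_at: Optional[str]   # id of the window under the on-screen pointer


def gesture_command(hands: HandState) -> Optional[Tuple[str, str]]:
    """Translate a hand state into a (action, window id) pair."""
    if hands.pointing_at is None:
        return None  # nothing under the pointer, so no window operation
    if hands.left_closed and hands.right_closed:
        return ("resize", hands.pointing_at)  # two-handed grab resizes
    if hands.left_closed or hands.right_closed:
        return ("move", hands.pointing_at)    # one-handed fist grabs and moves
    return ("point", hands.pointing_at)       # open hand only points


# Assumed example phrases; the actual vocabulary of the demo is not given.
SPEECH_COMMANDS = {
    "close window": "close",
    "resize window": "resize",
    "change view": "change_view",
    "open view": "open_view",
}


def speech_command(utterance: str, target: str) -> Optional[Tuple[str, str]]:
    """Translate a recognized utterance into a (action, target) pair."""
    action = SPEECH_COMMANDS.get(utterance.strip().lower())
    return None if action is None else (action, target)
```

In this sketch, process control operations are deliberately absent from both mappings, mirroring the restriction of the demo to window and view manipulation.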

3.4.Deployment

For evaluating the video-illustrated SFP, two complementary user research setups with expert process control operators and workers were established. In the first setup, the participants were introduced to the SFP via the videos uploaded to YouTube and embedded in a Web questionnaire. The questionnaire included a discussion space that was active for a two-month period, in late 2014. In all, 58 experts participated in the Web survey, 16 of whom were active commentators. The participants were selected from among the customer companies of the project’s participating company. The participants’ background in process control work was diverse, as the domains related to the chemical industry, energy distribution, energy production, food industry, forest industry, manufacturing and nuclear power. The participants had work experience of up to 41 years; all were interested or very interested in new technologies.

The second evaluation setup included interviews conducted in situ in a municipal power plant in a city in southern Finland (details omitted to guarantee participant anonymity), during October 2015. In addition to seeing the SFP via YouTube videos, the participants were also able to try out the speech- and gesture-based interaction demo. The evaluations included six operators (all male) aged 27–34, who described their occupational titles as: automation manager, process operator, power plant operative, service engineer, automation engineer, and electricity instrument manager. The participants had experience of working in a control center environment ranging from 1–7 years; all were interested or very interested in new technologies.

The Web survey consisted of both closed and open-ended questions; the interview setup consisted of a video interview with user analysis [48] and a semi-structured interview [22]. In both groups, the participants assessed six video scenes, one at a time; the main difference between the evaluation setups was that, in the Web survey, the participants could choose which of the six scenes they wanted to see and comment on first. In order to gain quantitative data about the UX goals, the users were, after seeing each video scene, requested to assess whether they could identify with the UX goals by answering a UX significance questionnaire (using a 5-point Likert scale) specifically created for this project. The users in both research setups answered the same open-ended questions relating to the SFP; in addition, they were requested to analyze the new interaction methods and deliver new ideas. As a final part, the participants were allowed to provide overall feedback on the presented future control environment. The Web survey and interview data were transcribed and qualitatively analyzed.
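As an illustration of how ratings from such a 5-point questionnaire could be summarized per UX goal, a minimal sketch follows. The function name, the data layout and the choice of summary statistics (median and share of ratings of 4 or 5) are assumptions made for this example; they are not taken from the study, and any ratings passed to the function would be illustrative rather than study data.

```python
from statistics import median
from typing import Dict, List


def summarize_likert(responses: List[Dict[str, int]]) -> Dict[str, Dict[str, float]]:
    """Summarize 5-point Likert ratings per UX goal.

    Each response maps a UX goal name to a rating from 1 to 5;
    participants may skip goals, so each goal is counted separately.
    """
    by_goal: Dict[str, List[int]] = {}
    for response in responses:
        for goal, rating in response.items():
            if not 1 <= rating <= 5:
                raise ValueError(f"rating out of range for {goal!r}: {rating}")
            by_goal.setdefault(goal, []).append(rating)

    summary = {}
    for goal, ratings in by_goal.items():
        agreeing = sum(1 for r in ratings if r >= 4)  # ratings of 4 or 5
        summary[goal] = {
            "n": len(ratings),
            "median": median(ratings),
            "share_agree": agreeing / len(ratings),
        }
    return summary
```

The median is used here because Likert ratings are ordinal; a study with different analytic aims might prefer full response distributions instead.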

4.Analysis and findings

4.1.Results of the research and experimentation

The aim of the first workshops was to identify and discuss the results of the trend studies, to analyze the user research results and to create future control center usage concepts for the SFP. Visions of the new user interaction tools were of particular interest, as it was seen that they may improve the work processes and support new ways of working. In the first workshop, the following ideas concerning business renewal and related development possibilities were identified for further consideration:

– Distributed production: smart mobile interaction tools,

– Highly automated production: a centralized remote expert competence center,

– Temporary plant with less well educated users: extremely intuitive control with safety ensured,

– Novice operators: help from a social network of other operators, intelligent agents and knowledge management,

– Quickly changing production plans: co-creation with the customer.

In addition, during the first co-design workshops, potential ambient UX goals were developed on the basis of the trend analysis and the current understanding of the operator work in the participating company.

4.2.Results of the exploration and planning

The initial user research focused on defining the building blocks of the user experiences in the nominated process control work environment. The contextual inquiry focused on work characteristics, as defined in the core-task analysis method [20,33,34]. The analysis was thorough; it included the definition of the work environment by inspecting special occasions, events, feelings and experiences related to it. The current state of UX was also polled with a user experience significance questionnaire, especially created for this case study, which requested the operators to rate 19 user experience goals developed in the co-design workshops.

The critical experience interview [32] was developed by adapting the critical decision method by Klein et al. [21]. While the method in general focuses on decisions and elements of decision-making, the critical experience interviews focused on the feelings and experiences and their development during the participants’ work activities. The structure of the critical experience interview mainly followed the structure of the critical decision method, i.e. obtaining an unstructured incident account, constructing an incident timeline, experience moment identification, and experience moment probing. In addition, the operators were asked about the tools used in different phases of the incident, possibilities of new technologies for improving the operators’ work during similar incidents, and information sharing needs and practices relating to the incidents.

The rich field research data was further analyzed by identifying operators’ remarks concerning the UX goals and their categorization. Altogether, 216 excerpts were identified and all of them, excluding 7 general product improvement suggestions, were also classified into 19 predefined UX goals. The UX goals were again analyzed and their dynamics, context of emergence and building blocks described. Consequently, as a result of the initial user studies, there was a relatively extensive list of relevant UX goals for the explicit AmI domain; however, the information was not yet focused enough to guide the concept video phase. Therefore, it was essential to narrow the list down into more distinct ambient UX goals.

4.3.Results leading to SFP development

Together with the user study findings, the results of the initial workshops were employed in determining the UX goals and for inspiring further ideation for the SFP. Subsequently, it was defined that the one primary, higher level ambient UX goal in the process control work should be “Peace of mind”, which was interpreted as: “The operator knowing what is going on in the production process and how to intervene when needed”. In essence, “Peace of mind” included the following seven broader-spectrum UX goals that guided the final SFP creation:

– Sense of control

– Trust in human-automation cooperation

– Sense of freedom

– Ownership of the process

– Relatedness to the work community

– Meaningfulness of the work

– Success and achievement.

The ideas gradually began to form into scenarios that all related to the topic: ‘A remote operator’s day in a future control center in 2025’. The selected usage scenarios introduced a future plant where:

– The control center changed into a flexible space, which could be shaped to support different work tasks and responsibilities, such as group or individual work;

– The AmI system was able to predict the process disturbances through simulations; and, as a result, many disturbances could be prevented before they took place;

– The AmI system enabled proactive and future-oriented process control work;

– User interfaces provided visual and situation-aware descriptions of current and upcoming process events;

– The AmI system enabled collaborative problem solving and continuous development.

Consequently, each scenario was written as a UX-driven scene: the starting point was the targeted user experience, and essentially the scene described how the experience was facilitated by design.

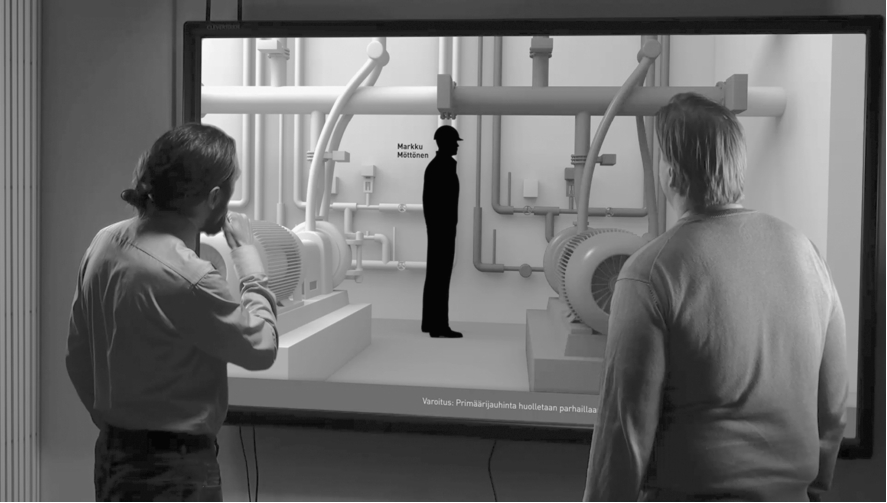

Fig. 2.

Production monitoring.

5.The science fiction prototype

As a storyline, the SFP video “A remote operator’s day in a future control center in 2025” has been built from six scenes that take place during an operator’s work shift. The live action scenes and voice-over explanations deliberately aimed to be provocative in order to stimulate discussion between the participants. In what follows, there is a brief explanation of each SFP scene and a link to the YouTube videos (presented in Finnish, the original language, with English subtitles). The scenes introduce: routine production supervision, operator guidance, preparation for a production change, carrying out the production change, incident management, and a concluding shift change.

5.1.Production monitoring

‘Production monitoring’ depicts process control operation settings where an operator monitors the status of the process from their personal workstation (see Fig. 2). The AmI system is presented as a responsive and intelligent partner assisting the operator in predicting and reacting to changes in process status. The goal is to emphasize the user’s sense of freedom and control by allowing them to use interaction methods of choice. These qualities are exemplified through speech-based interactions, which allow the operator to issue commands to retrieve information and enable intelligent agents to monitor the process on behalf of the operator. This frees the operator to focus on other tasks.

Link to the scene: https://www.youtube.com/watch?v=wy-3AwfiY-A.

5.2.Guidance

‘Guidance’ focuses on portraying how new interaction techniques enable operators to move freely around the control center and collaborate with one another (see Fig. 3). Their tasks are supported by the intelligent AmI system. In the scene, an experienced operator provides guidance to a novice operator on a specific part of the production process. The guidance is given by using gestural and spoken interaction on a wall-sized display. The use of the system blends in as a natural element of the collaboration.

Fig. 3.

Guidance.

Fig. 4.

Preparation for a production change.

Link to the scene: https://www.youtube.com/watch?v=JRjRJIpwQN8.

5.3.Preparation for a production change

Major production changes are procedures that require careful planning in order to avoid disturbances or unnecessary deviations in production quality. This scene illustrates how the future control center facilitates collaboration between the center and field personnel through improved situational awareness, such as personnel locations and ongoing maintenance operations (see Fig. 4). The personnel are going through a production change plan, which they verify by communicating with the field personnel. The technologies include speech-based interaction, large touchscreen displays, synchronous voice communication and live video with augmented reality and 3D elements.

Link to the scene: https://www.youtube.com/watch?v=9rnB96tq_QY.

5.4.Production change

Fig. 5.

Production change.

Fig. 6.

Disturbance management.

‘Production change’ focuses on the operator’s tasks during an ongoing production change (see Fig. 5) and the use of internal social media tools. The main focus is on the role of the AmI system, which, as an active partner, supports the human operator in situations that arise during the production change. The system is able to anticipate potential disturbances, records the operator’s troubleshooting activities, and suggests various solutions that the operator can choose from. Successful operations shared by operators allow the system to make future recommendations.

The new technologies demonstrated in the scene include speech commands and intelligent and proactive information collection, prediction and recommendation functionalities.

Link to the scene: https://www.youtube.com/watch?v=guMgYnPoI1Y.

5.5.Disturbance management

‘Disturbance management’ illustrates how mobile interaction technologies may be used to access the automation system remotely, outside of the control center, and enable collaboration on- and off-site (see Fig. 6).

The scene demonstrates how dialogue with the AmI system helps operators manage troubleshooting and disturbance situations. By tracking best practices used in operating the automation system, operators can be connected to available resources and other – even remotely located – operators to enable collaborative analysis and the resolution of problems. The troubleshooting actions are automatically stored by the system so that they can be of use should the same situation arise in the future.

Fig. 7. Shift change.

Link to the scene: https://www.youtube.com/watch?v=8BiNS8dA-xo.

5.6.Shift change

The final scene portrays the opportunities for mobile work afforded by new technologies (see Fig. 7). The shift change between operators is facilitated by the automation system, which automatically tracks operators’ locations within the facility. The system ensures that responsibility for the process is seamlessly transferred without a face-to-face handover.

The new technologies illustrated in the SFP include the use of mobile devices to monitor and control the process, new sensor technologies that recognize and track people, and the functionality to monitor operators’ activities and facilitate the handover process – without compromising safety or operators’ control over the system.

Link to the scene: https://www.youtube.com/watch?v=fz2wyn6Xzgw.

6.Results of the deployment

In Johnson’s method, the SFP creation process concludes with an exploration of the implications and revisits the lessons learnt. In this article, the reflection part presents the main findings of the user research and UX goal evaluations, and, as such, it also demonstrates the final step of the SFP framework. What follows presents the most important findings from both evaluation setups in detail. The findings are based on the written feedback (Web survey) and verbal discussions (interviews) that took place after the participants had seen each of the video scenes.

6.1.Scene 1: Production monitoring

This scene illustrates the use of speech commands, the smart automation system and its user interface. The AmI system was described as supporting freedom of choice, and, according to the participants, the system presented certainly provided interesting opportunities for interaction. In general, the control center was stated to be pleasant in appearance, and the user interface color scheme well composed.

Speech commands were generally seen to be suitable for navigation, opening appropriate views, and monitoring of plans and predictions, but not for operations, except in critical situations. Especially in fieldwork, speech commands were considered helpful for searching relevant information. The commands were expected to be very simple and customizable to each operator’s preferences. One respondent in the Web questionnaire nevertheless speculated critically: “As speech-based interaction doesn’t work very well between humans, it could be even worse in the communication between humans and machines”. The potential for speech recognition errors also raised some concerns. Still, it was believed that the smart automation system would be able to pick out some important keywords from conversations and provide relevant, context-aware information. In general, the AmI system was considered to make practical work more effortless and efficient, and consequently reduce the probability of human error in operation work.

The greatest value resulting from the AmI was expected to be achieved in fieldwork. According to the participants, automation is currently not at the level illustrated in the video, and quite a number of the tasks presented are carried out manually. One concern was that, when the AmI offered information about alarms, it only presented the extremes, although there would also be a need for more comprehensive alarm information. In the interviews, some participants stated that they were used to “fixed” alarm notifications, but even currently some preferred to customize them. In the Web survey, some respondents cautioned that too much automation might erode the professional skills of the workforce; nevertheless, this could be compensated for with simulation training.

6.2.Scene 2: Guidance

This scene introduced gesture control and the use of different types of displays. In general, gesture control and large screen displays were considered suitable for operations and training. Gesture control seemed to work efficiently, especially for zooming in on images and for finding trends quickly. One critical interviewee commented that, in all of the cases presented, he would rather use a mouse. Another critical interviewee remarked that gesture control worked better in the video than in the demonstration. In general, there was some concern about how the system would react to unintended gestures.

The use of different kinds of displays in the control center was seen to be a positive thing, although it was stated that, even currently, some operators preferred printed paper instructions. The use of tablet devices is not yet common in process operations; their use would seem especially appropriate in fieldwork. The trustworthiness of the mobile displays was seen to be a key factor in their deployment; namely, that the displays presented up-to-date information. The participants stated that it was not evident from the scene whether the displays could be used for field operations; this triggered opinions both for and against such use. Some concerns were related to the fact that, in the work environment, there were often similar devices – e.g. a pump and a spare-pump – and their exact location was often of critical importance.

Guidance seemed to work in the video scene, although some participants commented that the tutoring situation is not very different from the present case, where new settings are taught by watching them together on a computer screen. It was also considered that a larger display would have been advantageous, especially in problematic situations. One respondent to the Web questionnaire speculated: “The best way to guide and rehearse actions for troublesome situations would be based on utilizing simulations”. For training simulations, the participants highlighted the need for flexible and efficient data-mining operations.

6.3.Scene 3: Preparation for a production change

This scene illustrated the use of augmented reality (AR) and 3D models in the process control work. Participants commented that the AR features and the detailed 3D model of the plant would provide valuable and useful information for operators and maintenance personnel. An AR interface was considered to be especially valuable in critical situations. In fieldwork, one could use AR glasses for observing schemes and diagrams, and this would support the feeling of being in control. 3D images were stated to be valuable in the previously mentioned guidance and simulation situations. One participant speculated: “In a team meeting, it [the system] could offer a possibility to inspect the model together and combine values from the process control system”. If the process data were linked to a 3D model that utilizes AR, e.g. for demonstrating local measurements (such as temperature and ventilator states), the improvement on the current state would be enormous. Also, nowadays a large proportion of the information is tacit; maintenance personnel simply follow the pipelines to find the source of a problem.

The participants were unsure how the system would respond to false notifications, and how the information presented was selected from the huge amount of data. One participant mentioned that, as an improvement, AR information could present the location of groups of people working on-site, as there might often be dozens of groups in the working area simultaneously. The capability of the system to detect the location of individual persons was seen to violate privacy, and consequently dilute the UX goal “Sense of freedom”. On the other hand, the feature provided valuable information to the control center operators, as one of them mentioned: “There was a constant challenge of knowing the accurate location of the fieldworkers; e.g. who is closest to the problematic location”. In essence, the scene was considered to support the relatedness to the work community.

6.4.Scene 4: Production change

The ‘Production change’ scene illustrated AmI as an intelligent partner and how colleagues may be contacted remotely via internal social media tools. AmI as an intelligent partner was a well-received concept, especially because currently the list of alerts requires completely independent decisions by the operator. It was, however, stressed that, when the system acts as such a partner, it should mainly focus on informing the operator about forthcoming situations and give the operator the authority to decide what to do. In some of the situations presented, the solutions suggested by the AmI system seemed to work quite well. Participants debated how many alternatives can be shown to an operator: should other alternatives be shown only when the situation is new or infrequent? The system was considered advantageous for inexperienced operators, and even more experienced operators might welcome the possibility of learning new procedures. For some, the scene demonstrated a lack of operator initiative, which is seen to be of critical importance in process control work, since there is a constant need to execute personally defined procedures. For advancing the SFP, the participants stated that, if the AmI system suggests a process change, it should be based on reliable statistics, e.g. how the last five shifts have operated in similar situations. The system could employ big data and historical statistics from a long time period, and thus the operator could better trust the estimations.

The main disagreement among the responses concerned the use of social media, with some considering this to be inappropriate for professional process control work. One participant nevertheless speculated that this might represent the viewpoint of only some 5%–10% of operators, with younger personnel being more open to social media use. Another participant reported that the use of social media (as a separate system) had been investigated in another plant, and was rarely used. Nevertheless, it was reflected that social media might offer a means of learning from the previous process control tasks and actions of other operators. Some encouraged the use of social media in more of a diary-keeping context: “The system could create a log of definite alterations and tasks in the process; more trivial data could be handled as a group of information”.

6.5.Scene 5: Disturbance management

This scene illustrated real-time access control and remote communication. These issues, and especially the location information, were considered to be useful from a safety point of view. Remote communication was considered to be important in situations when the distance between locations was large. There was, however, criticism that, in the SFP, none of the operators or maintenance personnel went to the actual location to verify that there really was a problem; “Having an alarm is only part of the problem”, they stated, “There is a need for the presence of some personnel in the field, all the time”. Based on the video scene, the participants highlighted that contacting different experts seemed to be a practical solution: it advances the idea that “the portable device is carried around even during the lunch hour”. Contacting a person through remote video communication was considered helpful, especially in the quiet hours (e.g. during weekends), as it would allow displaying the live video feed to personnel who are not on duty. The participants highlighted that a person should not, however, have to be alert and available all the time.

In this scene, the AmI was illustrated as being highly advanced (as compared to the current situation), and this led to considering that the system would contain a huge amount of data. The justification for increasing the level of automation was stated to be the fact that, in the control center environments, similar situations arise all the time. An essential concern in disturbance control is that the data must be up to date. In order to better facilitate this, the system should analyze earlier scenarios and process descriptions and find comparisons. There was criticism that the current system does not include all devices (e.g. analogue devices and local meters); it was advised that these would also have to be included in the system. It was pointed out that the license to operate certain machinery is only with the operators, but in some cases, there would certainly be a need for sharing responsibilities (e.g. in emergency situations) and then the system might allocate work, for example, based on the operators’ work experience.

6.6.Scene 6: Shift change

This scene focuses on security issues and shift briefing. Shift change reports were automatically generated, which was stated to be a very useful feature. According to the operators, the real-world problem was the huge number of operations during a shift. It was anticipated that, especially in new process plants, the amount of data would become intolerable. The participants speculated about how, in general, the AmI system selects the main operations and actions during a shift. They highlighted that the system should not be left to decide that alone; at least the operator or the manager should give the final confirmation about the shift briefing.

Regarding personnel identification, the participants reported that effortless identification was very important, as currently all the software is password-protected. Otherwise, security issues were seen to be more important at the plant entrance, as unauthorized persons rarely have access to a control center. The participants speculated on how the identification of multiple personnel influenced the system. They severely criticized the fact that the control center was left unoccupied; this should never happen in current or future control centers. The participants also criticized the fact that there was no real face-to-face communication, and requested that the AmI system should support human-human communication, especially during critical situations.

6.7.New ideas

After seeing the SFP, the participants were requested to express and elaborate on their own opinions, expectations and new ideas aroused by the future concept. Customization – e.g. setting personal alarm limits or personal desktop settings (colors, stroke thickness, scaling possibilities) – was described as being a useful feature in several responses. One participant explained: “As the number of displays increases all the time, there is a need to personalize their layouts and, for example, combine trends in different ways. The settings for each individual operator should further be convertible to different devices (e.g. portable tablets). Also, the station in the control center should automatically be adjusted based on the identification of a user. The same user could also create several profiles for different situations (such as ramp-up, ramp-down, etc.)”. Another participant explained that these customizations could be retrieved by using voice commands. One participant described that, in the troubleshooting process (namely, with the paper machine): “It would be useful to receive data from the automation system to support what you see and sense in the environment”.

In many of the responses, new ideas were stimulated by Scene 3, “Preparation for a production change”, with respect to the video communication/camera surveillance of field workers. The participants discussed different camera-related solutions that could benefit their work. In the same scene, it was suggested that the virtual 3D model be used as an interface with which operators could point at and select the physical objects. Furthermore, gamification was mentioned as likely to play a strong role in motivating young process operators in the future. The participants speculated that the gamification features could be exploited especially in guidance, simulation and when introducing new tools. Some would also be willing to use these features in fieldwork, in situations where teams are, in some manner, competing against each other.

6.8.Speech and gesture interaction demos

In the power plant interviews, the participants were introduced to a speech and gesture interaction demo, as the opportunity for hands-on experience was considered important when assessing the new interaction techniques. The participants were given this opportunity as the last part of the evaluation, so that their experience with the prototype would not affect their feedback on the videos. The evaluation situation was semi-structured and the tasks included were implicit.

Participants’ feedback on the prototype varied greatly depending on how the system operated at the time. Speech recognition rates, in particular, varied from unusable to almost perfect between sessions. The failures in operation were attributed to a badly placed microphone, which distorted the audio signal. The limited precision that hand pointing permitted was also considered to be a restriction, particularly since it was used to operate a system that was designed to be used with a mouse. Still, for many participants the concrete, working example of speech and gesture control provided confidence that such new interaction techniques could be part of a future control center. In particular, speech recognition was seen to have more potential than in the cases presented in the videos. Having direct access to a large number of screens was considered efficient and potentially useful even as part of a traditional desktop interface.

Table 2

Mean results of the UX Goal questionnaires. Each of the six scenes of the concept video has two columns, presenting the mean results of the Web questionnaire (Web) and the interviews (Int.); each x̄ row gives the mean of these two values for the scene. The sum variables were calculated using a five-level scale (5 = strongly experiencing the UX goal).

| UX Goal | Scene 1 Web | Scene 1 Int. | Scene 2 Web | Scene 2 Int. | Scene 3 Web | Scene 3 Int. | Scene 4 Web | Scene 4 Int. | Scene 5 Web | Scene 5 Int. | Scene 6 Web | Scene 6 Int. |

| Sense of control | 2.6 | 3.83 | 2.0 | 3.67 | 3.86 | 4.67 | 3.2 | 4.0 | 3.45 | 4.17 | 3.44 | 4.33 |

| x̄ | 3.215 | | 2.835 | | 4.265 | | 3.6 | | 3.81 | | 3.885 | |

| Trust in human-automation cooperation | 4.1 | 3.83 | 4.25 | 3.5 | 4.71 | 4.5 | 4.2 | 3.33 | 4.44 | 4.0 | 4.33 | 4.33 |

| x̄ | 3.965 | | 3.875 | | 4.605 | | 3.765 | | 4.22 | | 4.33 | |

| Sense of freedom | 4.2 | 3.0 | 4.0 | 3.67 | 4.71 | 4.33 | 4.2 | 3.5 | 4.33 | 4.0 | 4.22 | 4.17 |

| x̄ | 3.6 | | 3.835 | | 4.52 | | 3.85 | | 4.165 | | 4.195 | |

| Ownership of the process | 3.8 | 4.17 | 4.25 | 4.0 | 4.0 | 3.17 | 3.8 | 3.17 | 4.2 | 3.83 | 4.22 | 4.17 |

| x̄ | 3.985 | | 4.125 | | 3.585 | | 3.485 | | 4.015 | | 4.195 | |

| Relatedness to the work community | 4.2 | 3.17 | 4.0 | 3.33 | 4.14 | 3.83 | 3.8 | 3.17 | 4.1 | 4.0 | 4.22 | 3.67 |

| x̄ | 3.685 | | 3.665 | | 3.985 | | 3.485 | | 4.05 | | 3.945 | |

| Meaningfulness of the work | 2.9 | 3.0 | 4.13 | 3.33 | 4.43 | 4.0 | 3.8 | 2.83 | 4.56 | 3.83 | 4.44 | 4.33 |

| x̄ | 2.95 | | 3.73 | | 4.215 | | 3.315 | | 4.195 | | 4.385 | |

| Success and achievement | 3.7 | 3.83 | 4.38 | 3.83 | 4.43 | 3.83 | 4.6 | 3.5 | 4.44 | 3.67 | 4.44 | 4.0 |

| x̄ | 3.765 | | 4.105 | | 4.13 | | 4.05 | | 4.055 | | 4.22 | |

| Peace of mind | 4.3 | 3.83 | 4.38 | 4.0 | 4.71 | 4.0 | 4.6 | 3.5 | 4.56 | 4.17 | 4.44 | 4.33 |

| x̄ | 4.065 | | 4.19 | | 4.355 | | 4.05 | | 4.365 | | 4.385 | |

6.9.Assessment of the UX goals

After seeing each of the SFP scenes, the Web questionnaire and interview participants were requested to fill in a UX goal evaluation questionnaire. Table 2 presents the mean results of the questionnaires, which were similar in both user groups. As the Web user group could choose which scenes to watch (or not watch them at all), the number of responses varies between scenes.

In essence, the most interesting finding in the UX goal evaluations concerned the inclusive ambient UX goal “Peace of mind”. In the SFP creation phase, “Peace of mind” was nominated to be the higher level UX goal, but in the evaluations, it was treated as one of the eight experiences. However, as Table 2 shows, the participants unanimously scored “Peace of mind” highest in all the video scenes, which demonstrates that the participants identified the ambient UX goal in all of the scenes. Table 2 also reveals that scenes 3, “Preparation for production change”, and 6, “Shift change”, were the most successful scenes, as they received the highest scores in three to four UX goals.
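The x̄ values printed in Table 2 are consistent with an unweighted mean of the Web-questionnaire mean and the interview mean for each scene. The following is a minimal Python sketch of that arithmetic for the “Peace of mind” row; the function name `combined_means` and the dictionary layout are illustrative assumptions, not part of the original study materials.

```python
from statistics import mean

# Group means for "Peace of mind", copied from Table 2:
# one (Web questionnaire, interviews) pair per scene.
PEACE_OF_MIND = {
    "Scene 1": (4.3, 3.83),
    "Scene 2": (4.38, 4.0),
    "Scene 3": (4.71, 4.0),
    "Scene 4": (4.6, 3.5),
    "Scene 5": (4.56, 4.17),
    "Scene 6": (4.44, 4.33),
}


def combined_means(pairs):
    """Return the unweighted mean of the two group means for each scene.

    Assumption: both groups count equally, regardless of how many
    respondents answered in each group.
    """
    return {scene: round(mean(values), 3) for scene, values in pairs.items()}


if __name__ == "__main__":
    for scene, value in combined_means(PEACE_OF_MIND).items():
        print(f"{scene}: {value}")
```

Because the number of Web responses varies between scenes, such an unweighted mean of group means is not the same as a pooled mean over all individual respondents; computing the latter would require the per-scene response counts, which Table 2 does not report.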

6.10.Overall experience

Before the participants of the Web survey and interviews were introduced to the SFP, they were asked to express, in their own words, their expectations regarding the future control center environment. In answering the question “How would you describe your overall experience of the future control center?”, the participants spontaneously used the following terms: sense of control, skillfulness, happiness, confidence, motivation, enthusiasm, interactivity, situational awareness, easiness, flow, peacefulness, effortlessness, high visual quality, high intelligence and advanced automation.

After seeing the SFP, the participants were asked to choose the experience that best described their overall experience relating to the future concept; Table 3 presents the results. In the open-ended questions, the participants confirmed that the future concept presented in the SFP was well received overall. According to the participants, the SFP was, for the most part, believable, and certainly desirable. The technological concepts were seen to be attractive, as were the visual appearances. The selected interaction methods were stated to be inclusive, as they covered new methods, from identification and location to proactive process control and quantified-self data, that supported the control center work with innovative means. According to the participants, the level of automation and communication was described convincingly: the machine was left to do what it is good at, namely retrieving and analyzing information in order to support human decision-making. Some objected that the future technological advances presented would not be accomplished by 2025, but rather by 2035.

Wearable electronics and head-mounted displays were seen to be the key interaction technologies in future process control work although, according to the participants, these were not described appropriately in the video concepts. Overall, the illustrated concepts were seen to be useful, especially for learning and simulation purposes.

Table 3

The overall experience

| Overall experience | Web survey | Interviews |

| Wow | 8 | – |

| Very good | 4 | 2 |

| Pleasant | 22 | 4 |

| Conventional | 2 | – |

| Unpleasant | 2 | – |

| Very bad | 1 | – |
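The counts in Table 3 can be summarized as a share of favourable responses per group. The sketch below is only an illustration of that arithmetic: treating “–” as zero and grouping “Wow”, “Very good” and “Pleasant” as favourable are assumptions made here, and the names `OVERALL`, `POSITIVE` and `positive_share` are hypothetical.

```python
# Response counts copied from Table 3 as (Web survey, interviews).
# Assumption: a "–" entry in the table means zero responses.
OVERALL = {
    "Wow": (8, 0),
    "Very good": (4, 2),
    "Pleasant": (22, 4),
    "Conventional": (2, 0),
    "Unpleasant": (2, 0),
    "Very bad": (1, 0),
}

# Assumption: these three categories are counted as favourable.
POSITIVE = ("Wow", "Very good", "Pleasant")


def positive_share(counts, group_index):
    """Return (favourable count, total count, favourable share) for one group.

    group_index 0 selects the Web survey, 1 selects the interviews.
    """
    total = sum(row[group_index] for row in counts.values())
    positive = sum(counts[name][group_index] for name in POSITIVE)
    return positive, total, positive / total


if __name__ == "__main__":
    for label, index in (("Web survey", 0), ("Interviews", 1)):
        positive, total, share = positive_share(OVERALL, index)
        print(f"{label}: {positive}/{total} favourable ({share:.0%})")
```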

7.Discussion

The science fiction prototype presented a future control center where, despite the increased automation, human-to-human contact is still seen as important. An illustrative comment from a participant was: “When the automation system suggests to all the alternatives how to proceed, there should always be ‘an operator’s choice’ among the alternatives”. This point of view is justified when considering that the operator is the responsible party in the operation.

In the future, the role of AmI as a supporting component in the operations is expected to increase. In this, the user research participants expected the information visualization to play a key role, and because of this they embraced the integrated system that the SFP presented. The AmI system was stated to be coherent, consistent and pleasant, specifically because all the information – reports, trends, process measurements, phone calls, etc. – passed through a single interface. Overall, the SFP was still criticized for not containing enough production-related issues, such as safety and security (warnings), economic objectives (raw materials, stock, energy, waste) or quality objectives. These were seen as the most important issues for the process control workers, whom the participants described as “dignified personnel”, “dedicated to their work”, and “who expected all the safety precautions to be taken care of”. The participants also criticized the fact that the SFP did not describe how the responsibilities between the employees were shared during the process, as, in this line of work, the duties should always be clearly indicated.

For most participants, the SFP presented valuable novelties, but some speculated that their implementation in future factories might fail because of external reasons or design tensions that relate e.g. to the inflexibility of the existing systems, security problems, lack of resources or simple resistance to change. The participants considered it important that the operating organization participates in the development of the system, especially as regards the information presentation and automatic operations. This was nevertheless expected to pose a serious challenge for the system designers: how to identify the appropriate needs and requirements, especially from parties in the organization that are not automation-oriented? Another important concern was associated with the competence transformation, i.e. how to communicate the previously learned knowledge and skills – the deep understanding of the processes – that only the experienced operators possessed, and how this “silent knowledge” is collected, stored and retrieved. This was expected to be partly taken care of by the new skills of the next generation, the “diginatives”, who nevertheless created another problem for the system designers, as one interviewee mentioned: “How to engage the novelty requirements of the young employees to the operation tasks?”

In general, the best part of the SFP was stated to be the fact that it supported the most important current experience the employees had regarding their present-day environment. They described the control center as “a crossroads” or “a node in the process control work”: “All workers, including the maintenance personnel, gather there to drink their coffee and receive their new work assignments”, as one interviewee said. Although the current control centers were seen as an important place for face-to-face communication, the need for all kinds of communications was expected to increase in future working environments. It was stated that, in the future factories, face-to-face communication should be supported even more, as: “The contact with other operators and personnel is extremely important in this line of work”.

8.Conclusion

The UX goal-driven SFP “A remote operator’s day in a future control center in 2025” illustrated vividly how future knowledge workers could act and collaborate in future “Industry 4.0” factories. The six scenes highlighted the role of the workers as strategic decision-makers and flexible problem-solvers in a global work community where the workers can obtain support and constantly develop their competences. It is expected that this line of work may also be applicable to other similar industrial expert work environments, and that the creative approach could be adopted to assist in engaging the early participation of users and thus gain better insight into the needs of the potential smart AmI system users of the future. In theory, this could lead to a more successful design and installation of new smart AmI systems that can be used more effectively in workplaces.

As the main objective of the research was to carry out UX investigations through the creative prototyping activity, the research questions were formed around this problem-space. In conclusion, the article made three contributions to the development of the SFP method and SFP framework. First, the article explained in a concrete manner how the SFP framework may be employed for the ambient UX design process. The second contribution was to demonstrate how the SFP may employ video-illustrated means as a formal method of inquiry. The third contribution was to demonstrate in detail how SFP can be employed for validating the (ambient) UX goals through user research. With respect to the latter task, the article highlighted the role of reflection, which is normally the final task in the prototyping process. This line of work has been introduced earlier, e.g. in [26,27], and the validation of SFPs has been demonstrated in [3,8]. Compared to this earlier research, this article nevertheless emphasized the user research results, thus allowing the process control workers’ opinions to be justifiably heard. Consequently, the SFP offered the participating process control workers a platform to share their experiences and expectations concerning their future working environments: to identify the benefits and disadvantages, elaborate on its concepts, and suggest new ideas.

In essence, the participants’ responses emphasized the importance of human decision-making, even when the intelligence of the control system increases with the new technological advances. With respect to the technologies to be supported in future process control work, the participants highlighted e.g. increasing mobility, wearable electronics and AR headsets. In the evaluations, the following potential benefits of the new technologies were revealed:

– Speech commands can be used for navigation, opening appropriate views and monitoring;

– Gesture control with wide screen displays is advantageous for training and simulation;

– Real-time access control and location information may improve work safety;

– Game-like experiences may be expected to have a role in motivating future “digital native” operators.

In addition to the results presented, the industrial partner found the video-illustrated SFP to be useful for demonstration and discussion purposes between the different stakeholders within the organization. In general, sharing the SFP within the organization is expected to help in committing the company to UX goals and keeping the users’ perspective in mind when shifting design and development towards Industry 4.0. According to the company representative of the case study: “Seeing the video-illustrated SFP and feeling the tangible interaction tools facilitated a personal experience of the future potentials of the new technologies. Videos for demonstrating purposes develop common understanding about the user experience of our future products”.

Consequently, one lesson learned from the case study was that the UX-driven science fiction prototype offered a powerful tool for illustrating and sharing the future-oriented technology vision. For purposes of further discussion, the research group also created a shorter version of the SFP, which can be found at: https://youtu.be/kgLiCR6jCf0.

Acknowledgements

The work was carried out under the UXUS (User Experience and Usability in Complex Systems) program, supported by the Finnish Metals and Engineering Competency Cluster (FIMECC), which studied new interaction concepts and innovative practices when developing user and customer experience excellence. We thank Intopalo, especially Antti Sinnemaa, for their creative contribution to the work and Katariina Tiitinen and Sari Yrjänäinen for contributing the voice-overs for the videos.

References

[1] E. Aarts and B. De Ruyter, New research perspectives on ambient intelligence, Journal of Ambient Intelligence and Smart Environments 1(1) (2009), 5–14.

[2] H.R. Beyer and K. Holtzblatt, Apprenticing with the customer, Communications of the ACM 38(5) (1995), 45–52. doi:10.1145/203356.203365.

[3] T. Birtchnell and J. Urry, 3D, SF and the future, Futures 50 (2013), 25–34. doi:10.1016/j.futures.2013.03.005.

[4] J. Bleecker, Design Fiction, A short essay on design, science, fact and fiction, Near Future Laboratory, 2009.

[5] M. Böhlen, Second order ambient intelligence, Journal of Ambient Intelligence and Smart Environments 1(1) (2009), 63–67.

[6] P. Dourish and G. Bell, Resistance is futile: Reading science fiction alongside ubiquitous computing, Personal and Ubiquitous Computing 18(4) (2014), 769–778. doi:10.1007/s00779-013-0678-7.

[7] A. Dunne, Hertzian Tales: Electronic Products, Aesthetic Experience and Critical Design, RCACRD Research Publications, London, 1999.

[8] S. Egerton, M. Davies, B.D. Johnson and V. Callaghan, Jimmy: Searching for free-will (a competition), in: Workshop Proceedings of the 7th International Conference on Intelligent Environments, IOS Press, 2011, pp. 128–141.

[9] J. Forlizzi and K. Battarbee, Understanding experience in interactive systems, in: Proceedings of Designing Interactive Systems DIS, ACM, 2005, pp. 261–268.

[10] D. Gorecky, M. Schmitt, M. Loskyll and D. Zühlke, Human-machine-interaction in the industry 4.0 era, in: 12th IEEE International Conference on Industrial Informatics (INDIN), IEEE, July 2014, pp. 289–294. doi:10.1109/INDIN.2014.6945523.

[11] G. Graham, Interaction space, in: Workshop Proceedings of the 7th International Conference on Intelligent Environments, IOS Press, 2011, pp. 145–154.

[12] G. Graham, A. Greenhill and V. Callaghan, Creative prototyping, Technological Forecasting and Social Change 84 (2014).

[13] G. Graham, A. Greenhill, G. Dymski, E. Coles and P. Hennelly, The science fiction prototyping framework: Building behavioural, social and economic impact and community resilience, Future City & Community Resilience Network, 2015.

[14] A. Greenfield, Everyware – The Dawning Age of Ubiquitous Computing, New Riders, USA, 2006.

[15] M. Hassenzahl, Experience Design – Technology for All the Right Reasons, Morgan and Claypool Publishers, 2010.

[16] B.D. Johnson, Science fiction prototypes. Or: How I learned to stop worrying about the future and love science fiction, in: Proceedings of the 5th International Conference on Intelligent Environments, IOS Press, 2009, pp. 3–8.

[17] B.D. Johnson, Science Fiction Prototyping: Designing the Future with Science Fiction, Morgan and Claypool Publishers, 2011.

[18] | E. Kaasinen, V. Roto, J. Hakulinen, J.P. Jokinen, H. Karvonen et al., Defining user experience goals to guide the design of industrial systems, Behaviour & Information Technology 3001: ((2015) ), 976–991. doi:10.1080/0144929X.2015.1035335. |

[19] | H. Kagermann, W. Wahlster and J. Helbig, Securing the future of German manufacturing industry. Recommendations for implementing the strategic initiative INDUSTRIE 4.0, Final report of the Industrie 4.0 Working Group, Forschungsunion, 2013. |

[20] | H. Karvonen, I. Aaltonen, M. Wahlström, L. Salo, P. Savioja and L. Norros, Hidden roles of the train driver: A challenge for metro automation, Interacting with Computers 23 (2011). |

[21] | G. Klein, R. Calderwood and D. MacGregor, Critical decision method for eliciting knowledge, IEEE Transactions on Systems, Man and Cybernetics 19: ((1989) ), 462–472. doi:10.1109/21.31053. |

[22] | M. Kuniavsky, Observing the User Experience, a Practitioner’s Guide to User Research, Morgan Kaufmann, (2003) , pp. 419–437. |

[23] | T. Kymäläinen, Science Fiction Prototypes as Design Outcome of Research – Reflecting Ecological Research Approach and Experience Design for the Internet of Things, Aalto ARTS Books, Aalto University, (2015) . |

[24] | T. Kymäläinen, The design methodology for studying smart but complex do-it-yourself experiences, Journal of Ambient Intelligence and Smart Environments ((2015) ), IOS Press. |

[25] | T. Kymäläinen, Science fiction prototypes as a method for discussing socio-technical issues within emerging technology research and foresight, Athens Journal of Technology & Engineering (ATINER) (2016). |

[26] | T. Kymäläinen, P. Perälä, J. Hakulinen, T. Heimonen, J. James and J. Perä, Evaluating a future remote control environment with an experience-driven science fiction prototype, in: 11th International Conference on Intelligent Environments, IEEE Computer Society, (2015) , pp. 81–88. |

[27] | T. Kymäläinen, E. Kaasinen, M. Aikala, J. Hakulinen, T. Heimonen, P. Mannonen, H. Paunonen, J. Ruotsalainen and L. Lehtikunnas, Evaluating future automation work in process plants with an experience-driven science fiction prototype, in: 12th International Conference on Intelligent Environments, IEEE Computer Society, (2016) . |

[28] | H. Lasi, P. Fettke, H.G. Kemper, T. Feld and M. Hoffmann, Industry 4.0. Business & Information Systems Engineering 6(4) (2014). |

[29] | L. Lehtikunnas, Puhe- ja eleohjaus prosessinhallinnassa (Speech and gesture interaction in process control work, in Finnish), Diploma thesis, Tampere University of Technology, Tampere, Finland, 2014. |

[30] | C. Linehan, B.J. Kirman, S. Reeves, M.A. Blythe, J.G. Tanenbaum, A. Desjardins and R. Wakkary, Alternate endings: Using fiction to explore design futures, in: CHI’14 Extended Abstracts on Human Factors in Computing Systems, ACM, (2014) , pp. 45–48. |

[31] | W. MacDougall, Industrie 4.0: Smart manufacturing for the future, Germany Trade & Invest (2014). |

[32] | P. Mannonen, M. Aikala, H. Koskinen and P. Savioja, Uncovering the user experience with critical experience interviews, in: Proceedings of the 26th Australian Computer-Human Interaction Conference on Designing Futures: The Future of Design (OzCHI ’14), ACM, New York, NY, USA, (2014) , pp. 452–455. |

[33] | L. Norros, Acting under uncertainty, the core-task analysis in ecological study of work, Espoo, VTT, Finland, 2004. |

[34] | M. Nuutinen and L. Norros, Core task analysis in accident investigation: Analysis of maritime accidents in piloting situations, Cognition, Technology & Work 11: ((2007) ), 129–150. doi:10.1007/s10111-007-0104-x. |

[35] | H. Paunonen, Roles of informating process control systems, Ph.D. Dissertation, Tampere University of Technology, Tampere, Finland, Publication 225, 1997. |

[36] | R. Peldszus, Surprise payload rack: A user scenario of a conceptual novelty intervention system for isolated crews on extended space exploration missions, in: Workshop Proceedings of the 7th International Conference on Intelligent Environments, IOS Press, (2011) , pp. 290–300. |

[37] | P. Purdy, From science fiction to science fact: How design can influence the future, User Experience Magazine 13(2) (2013). |

[38] | V. Roto, Y. Lu, H. Nieminen and E. Tutal, Designing for user and brand experience via company-wide experience goals, in: Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems, ACM, (2015) , pp. 2277–2282. |

[39] | E.B.N. Sanders and U. Dandavate, Design for experiencing: New tools, in: Proceedings of the First International Conference on Design and Emotion, C.J. Overbeeke and P. Hekkert, eds, Delft University of Technology, Delft, The Netherlands, (1999) , pp. 87–91. |

[40] | N. Shedroff, Experience Design, New Riders Publishing, Indianapolis, (2001) . |

[41] | N. Shedroff and C. Noessel, Make It So: Interaction Design Lessons from Science Fiction, O’Reilly Media Inc., (2012) . |

[42] | J. Tanenbaum, Design fictional interactions: Why HCI should care about stories, Interactions 21: (5) ((2014) ), 22–23. doi:10.1145/2648414. |

[43] | K. Tassini, The magician’s assistant, in: Workshop Proceedings of the 7th International Conference on Intelligent Environments, IOS Press, (2011) , pp. 267–278. |

[44] | H. Väätäjä, T. Olsson, P. Savioja and V. Roto, UX goals – How to utilize user experience goals in design, Tampere University of Technology, Department of Software Systems Tampere, Finland, 2012. |

[45] | P. Wright and J.J. McCarthy, Technology as Experience, MIT Press, Cambridge, (2007) . |

[46] | H.Y. Wu, Imagination workshops: An empirical exploration of SFP for technology-based business innovation, Futures 50: ((2013) ), 44–55. doi:10.1016/j.futures.2013.03.009. |

[47] | H.Y. Wu and V. Callaghan, The spiritual machine, in: Workshop Proceedings of the 7th International Conference on Intelligent Environments, IOS Press, (2011) , pp. 155–166. |

[48] | S. Ylirisku and J. Buur, Designing with Video, Focusing the User-Centred Design Process, Springer Science & Business Media, (2007) . |

[49] | P. Zheng and V. Callaghan, Diegetic innovation templating, in: Workshop Proceedings of the 12th International Conference on Intelligent Environments, IOS Press, (2016) . |