The Dementia Knowledge Assessment Scale, the Knowledge in Dementia Scale, and the Dementia Knowledge Assessment Tool 2: Which Is the Best Tool to Measure Dementia Knowledge in Greece?

Abstract

Background:

Measuring dementia knowledge can be a valuable tool for assessing the effectiveness of dementia awareness activities, identifying the potential benefits of dementia training programs, and breaking down common myths and stereotypes about dementia.

Objective:

To compare the psychometric properties of three widely used dementia knowledge tools, the Dementia Knowledge Assessment Tool 2 (DKAT2-G), the Dementia Knowledge Assessment Scale (DKAS-G), and the Knowledge in Dementia Scale (KIDE-G) in the Greek adult population.

Methods:

A convenience sample of 252 participants from the general population completed the survey online. Statistical analyses included internal consistency (Cronbach’s alpha), test-retest reliability, factor analysis, concurrent and construct validity, and floor and ceiling effects.

Results:

The DKAS-G showed the highest reliability (Cronbach’s α = 0.845; retest reliability = 0.921), whereas the DKAT2-G had satisfactory indices (Cronbach’s α = 0.760; retest reliability = 0.630). The KIDE-G showed unsatisfactory reliability (Cronbach’s α = 0.419; retest reliability = 0.619). Construct validity was confirmed for all questionnaires, as all of them distinguished participants with pre-existing knowledge of dementia. Confirmatory factor analysis supported a four-factor model for the DKAS-G and suggested the removal of five items. Floor and ceiling effects were found for the DKAT2-G and the KIDE-G, mainly among participants who had previously attended dementia training.

Conclusions:

The DKAS-G showed the highest levels of reliability and validity. These results indicate that the DKAS-G meets the requirements for measuring dementia knowledge and evaluating dementia training programs in health professionals, caregivers, and the general population.

INTRODUCTION

Dementia is a chronic condition whose global prevalence has increased, particularly over the last decade, and is expected to rise further with population aging. According to the Alzheimer’s Association (2023), the number of Americans aged 65 and older living with Alzheimer’s disease (AD) is projected to reach approximately 13.8 million by 2060 [1]. A 2023 World Health Organization report [2] states that, by 2050, 80% of older adults will be living in low- and middle-income countries (LMICs). Lian et al. (2017) [3] highlight that the diagnosis rate in LMICs is only 5–10%, well below the rates in higher-income countries. Dementia care can therefore be considered a global health priority that requires interdisciplinary collaboration among health professionals, researchers, family members, and the general population.

Dementia prevalence is increasing despite a decline in age-specific incidence in European populations; this decline is most likely due to preventive measures and improved pharmacological treatment of cardiovascular and cerebrovascular risk factors [4]. Promoting knowledge about dementia is therefore necessary for several purposes, such as facilitating early detection [5] and subsequent treatment [6]. Indeed, Wang et al. (2017) [7] reported that lack of dementia knowledge among health professionals is one of the main reasons for the unmet needs of people with dementia (PwD). Increasing dementia knowledge can also help reduce stigma and stereotypes about dementia and increase community support. Young adults, such as college students, are a particularly relevant target group for dementia awareness programs aimed at reducing stigma. In this context, the Dementia Friends program, originally developed by the Alzheimer’s Society in the UK [8], has been widely used to promote dementia friendliness, dispel common myths, and improve attitudes toward dementia. Finally, increasing knowledge about dementia may also improve the quality of life of PwD [1], with consequent benefits in reducing the burden of dementia.

Accordingly, dementia awareness has been incorporated into national strategic plans around the world, including in Greece, and training activities that increase public knowledge about dementia and promote action and involvement are integral parts of national dementia strategies. Dementia care services therefore play a crucial role in adapting training programs to educate the general population about dementia. Such training would also help health professionals and caregivers feel more confident and better able to provide care and support for PwD. According to the literature [3, 7], difficulty in recognizing early signs of dementia and facilitating access to diagnosis, as well as limited awareness of post-diagnostic management among health professionals, are the main consequences of inadequate dementia knowledge. Underdiagnosis of dementia is, in turn, inextricably linked to inadequate treatment, management of behavioral disorders, and dementia care [9, 10].

In addition, measuring dementia knowledge among health care professionals and the general population is a critical step in evaluating the effectiveness of dementia awareness programs. Since dementia education plays an essential role in national and global dementia plans [11], identifying effective ways to promote dementia knowledge can reduce knowledge deficits, increase confidence in dementia care, and improve dementia friendliness in the community.

In summary, lack of knowledge about dementia is strongly related to negative attitudes toward dementia, with subsequent negative effects on its early diagnosis and treatment. Conversely, increased knowledge about dementia is strongly associated with positive attitudes toward dementia [12, 13] and with higher levels of self-confidence and perceived competence [14].

Studies conducted in Greece have estimated the prevalence of dementia at 5%, with the vast majority of cases attributed to AD [15, 16]; however, a study with a sample three times larger estimated the prevalence at 10.8%, with a higher percentage among people aged 80–84 years [17]. Dementia specialists self-report a lack of knowledge for distinguishing cognitive impairment due to normal aging from that due to AD [18]. This may explain why dementia is diagnosed earlier in urban than in rural areas [19]. Given the limited knowledge of the epidemiologic and clinical profile of PwD, especially in rural areas [20], there is a growing need for structured educational programs for health professionals and the public to facilitate early diagnosis and appropriate treatment. Additional benefits include improved quality of life and well-being in PwD, as well as targeted support for their caregivers [19]. In a Greek study measuring attitudes toward dementia, dementia experts, caregivers, and the general population broadly agreed that the government should play a crucial role in providing dementia knowledge to the public, with subsequent benefits for caregivers and PwD.

Regarding existing dementia knowledge tools, seven widely used measures were identified. The Alzheimer’s Disease Knowledge Test (ADKT) [21] and the Dementia Quiz (DQ) [22] appear to be more suitable for family members [10]. The Knowledge of Aging and Memory Loss and Care (KAML-C) [23], the University of Alabama Alzheimer’s Disease Knowledge Test for Health Professionals (UAB-ADKT) [24], and the Alzheimer’s Disease Knowledge Scale (ADKS) [25], the updated version of the ADKT, all have acceptable reliability and validity. Owing to these adequate psychometric properties, the ADKS is a popular measure of knowledge about AD among health professionals, caregivers, the general population, and PwD. Prokopiadou et al. (2013) [26] validated the Greek version of the ADKS, reporting marginal internal consistency (α = 0.65) but perfect repeatability (α = 1.0) and a satisfactory factor structure. In contrast, the Dementia Quiz [22] is mainly administered to caregivers and PwD and focuses on problems arising in dementia care, so its results are not generalizable; the KAML-C [23] places less emphasis on the early stages of dementia; and the UAB-ADKT has questionable construct validity [24].

The Dementia Knowledge Assessment Tool version 2 (DKAT2) [27] has been widely used to measure dementia knowledge in health professionals, family members, and people living with dementia. It has also been validated in Greek by Gkioka et al. (2020) [28] in psychology students, showing acceptable reliability. The Knowledge in Dementia (KIDE) Scale, developed by Elvish et al. (2014) [29], was used to evaluate staff training and showed good internal consistency, face validity, and content validity. Finally, we used the Dementia Knowledge Assessment Scale (DKAS), designed by Annear et al. (2016) [30] to address the shortcomings of previous tools; it is more up to date and was developed through a Delphi study, so expert consensus was taken into account [30]. The DKAS was also significantly related to the ADKS [30], indicating that both measure a related construct; however, the DKAS has better construct validity and performs better when administered in large cohorts worldwide [30]. The systematic review by Thu-Huong and Huang (2020) [31], which included articles from 2009–2017, identified four main dementia knowledge scales, the ADKS, the DKAS, the DK-20 [32], and the DKAT2, based on their psychometric properties (reliability and validity) and feasibility. According to their results, the ADKS and the DKAS demonstrate good psychometric properties, whereas the DK-20 and the DKAT2 have moderate psychometric properties.

Given that knowledge of dementia is key to raising awareness, an appropriate tool is needed to measure it. The aim of this study is therefore to determine which of three psychometric tools, the DKAT2, the DKAS, and the KIDE, is most appropriate for assessing dementia knowledge in the Greek adult population. To this end, we assessed the psychometric properties of these tools. The best-performing tool will be used to evaluate the effectiveness of dementia awareness programs in the general population, health professionals, and caregivers.

METHODS

Design

The current study is a quantitative, cross-sectional validation of three tools measuring dementia knowledge, assessing their psychometric properties: internal reliability, test-retest reliability, construct and concurrent validity, item analysis, factor analysis, and floor and ceiling effects. The study is reported according to the EQUATOR guidelines, using the “Strengthening the Reporting of Observational Studies in Epidemiology” (STROBE) checklist (Supplementary Material 1).

Participants

Participants were recruited from the general population between March and June 2023, from different regions of Greece, including urban and non-urban areas and the Greek islands, through notifications on the researchers’ social media accounts, personal contacts, announcements at public universities and dementia care centers across Greece, and the social media accounts of the Greek Association of Alzheimer’s Disease and Related Disorders (GAADRD). The only inclusion criterion was a minimum age of 18 years. The minimum sample size was determined using the rule of thumb of 10 participants per item [33]; since the DKAS is the longest scale, with 25 items, at least 250 participants were required. The final sample consisted of 252 individuals, a subset of whom (n = 72) completed the three tools again one month after the first administration to assess test-retest reliability.

Data collection and procedure

The tools were administered online via Google Forms, together with a sociodemographic questionnaire covering demographics, educational level, occupation, previous experience with PwD (yes/no), experience as a caregiver of PwD (yes/no), work experience with PwD (yes/no), and previous participation in dementia training programs (yes/no). Participants who agreed to take part a second time were asked to provide their e-mail address so that they could receive a reminder for the retest. Completing the tools took approximately 15–20 min.

There was no missing data, as Google Forms only accepted completed sets of data.

Instruments

Dementia Knowledge Assessment Tool 2 (DKAT2)

The Dementia Knowledge Assessment Tool version 2 (DKAT2), a 21-item questionnaire, was developed by Toye et al. (2013) [27] to measure basic dementia knowledge in the adult population, mainly among care workers and family members [13]. Although its content covers two conceptual domains, ‘dementia and its progression’ and ‘support and care’, it is treated as a unidimensional tool. The DKAT2 was adapted and validated in Greek by Gkioka et al. (2020) [28] in psychology students (Cronbach’s α = 0.68). Items are statements about dementia and its subtypes, with response options “Yes”, “No”, and “Don’t know”. Thirteen items are true statements, and eight items (5, 6, 7, 8, 12, 16, 18, and 20) are false statements and are therefore reverse scored. Correct answers score one point, and incorrect or “Don’t know” answers score zero. The total score is the sum of correct answers (range 0–21), with higher scores indicating greater knowledge about dementia; scores of 0–7 are considered poor, 8–14 average, and 15–21 good.
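The DKAT2 scoring rules above can be expressed as a short function. This is a minimal sketch, not the authors’ scoring procedure: the function names `score_dkat2` and `dkat2_band`, and the assumption that each answer is stored as the literal string “Yes”, “No”, or “Don’t know” keyed by item number, are our own.

```python
# Hypothetical DKAT2 scoring helper; item numbers follow the description above.
# Assumption: answers are stored as "Yes", "No" or "Don't know" per item (1-21).
DKAT2_FALSE_ITEMS = {5, 6, 7, 8, 12, 16, 18, 20}  # false statements: correct answer is "No"


def score_dkat2(responses):
    """Return the DKAT2 sum score (0-21) from a dict {item number: answer}."""
    if set(responses) != set(range(1, 22)):
        raise ValueError("expected answers to items 1-21")
    total = 0
    for item, answer in responses.items():
        correct = "No" if item in DKAT2_FALSE_ITEMS else "Yes"
        # Wrong answers and "Don't know" both score zero.
        total += 1 if answer == correct else 0
    return total


def dkat2_band(total):
    """Map a sum score to the poor (0-7) / average (8-14) / good (15-21) bands."""
    if total <= 7:
        return "poor"
    if total <= 14:
        return "average"
    return "good"
```

For example, answering “Yes” to every item yields 13 points (all true statements correct, all false statements incorrect), which falls in the average band.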

Dementia Knowledge Assessment Scale (DKAS)

The DKAS [30, 34] is a 25-item measure of general dementia knowledge consisting of statements about dementia that are either factually correct or incorrect. The original version has four subscales: “Causes and Characteristics”, “Communication and Behaviors”, “Care Considerations”, and “Risks and Health Promotions”. Each item is rated on a modified Likert scale with five response options: “False”, “Probably false”, “Probably true”, “True”, and “Don’t know”. A correct answer scores 2 points, a “probably” correct answer scores 1 point, and all other answers, including “Don’t know”, score zero. Items 1, 3, 4, 5, 6, 7, 9, 12, 13, 14, 15, 16, 19, and 20 are false statements and are therefore reverse scored. As with the DKAT2, higher scores indicate better knowledge of dementia. The Greek version of the DKAS (DKAS-G) was provided by Demosthenous and Constantinidou (2023) [35]. After the initial translation, it was reviewed by a bilingual dementia expert, and the resulting translation was sent to the developers of the scale, where another bilingual expert made the final suggestions. To date, the DKAS-G has not been validated.
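A comparable sketch for the DKAS applies the 2/1/0 rule and the list of false statements given above, assuming the same item-number-to-response mapping as for the DKAT2. The helper name `score_dkas` is hypothetical, and the subscale item ranges are taken from the description of the original DKAS in the Structural validity subsection.

```python
# Hypothetical DKAS scoring helper following the rules described above.
DKAS_FALSE_ITEMS = {1, 3, 4, 5, 6, 7, 9, 12, 13, 14, 15, 16, 19, 20}

# Points per response option; anything else ("Don't know", wrong direction) scores 0.
TRUE_KEY = {"True": 2, "Probably true": 1}
FALSE_KEY = {"False": 2, "Probably false": 1}

# Item ranges of the four original subscales (items 1-7, 8-13, 14-19, 20-25).
SUBSCALES = {
    "Causes and Characteristics": range(1, 8),
    "Communication and Behavior": range(8, 14),
    "Care Considerations": range(14, 20),
    "Risks and Health Promotion": range(20, 26),
}


def score_dkas(responses):
    """Return (total score, subscale scores) from a dict {item number: answer}."""
    if set(responses) != set(range(1, 26)):
        raise ValueError("expected answers to items 1-25")
    points = {}
    for item, answer in responses.items():
        key = FALSE_KEY if item in DKAS_FALSE_ITEMS else TRUE_KEY
        points[item] = key.get(answer, 0)
    subscores = {name: sum(points[i] for i in items) for name, items in SUBSCALES.items()}
    return sum(points.values()), subscores
```

Because each of the 25 items contributes at most 2 points, the total ranges from 0 to 50; a respondent who answers “Probably true” to every item scores 11, one point for each of the 11 true statements.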

Knowledge in Dementia Scale (KIDE)

The KIDE was developed by Elvish et al. (2014) [29] and contains 16 items answered with “Agree” or “Disagree”. As with the previous tools, correct answers score one point and incorrect answers zero, with higher scores indicating better knowledge about dementia. The KIDE was translated into Greek (KIDE-G) by Gkioka (unpublished data, 2020), but because Cronbach’s alpha was below 0.60, those data were not published. The KIDE has been validated in a German population, showing poor reliability and a tendency toward a ceiling effect [36]; to our knowledge, it has not been validated in other languages.

Statistical analysis

Statistical analyses were initially performed in IBM SPSS Statistics for Windows, Version 27.0 (IBM Corp., Armonk, NY, USA) to assess internal consistency, using Cronbach’s alpha coefficient, and test-retest reliability, using the intraclass correlation coefficient (ICC). Cronbach’s alpha typically ranges from 0 to 1, with values between 0.70 and 0.95 considered acceptable; higher values indicate greater item homogeneity, i.e., higher internal consistency [37, 38]. Test-retest reliability was calculated to assess the stability of the tools over time. As with Cronbach’s alpha, ICC values between 0.70 and 0.95 are considered acceptable, with higher values indicating better retest reliability.
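For reference, Cronbach’s alpha for k items is α = k/(k − 1) · (1 − Σ sᵢ² / s_T²), where sᵢ² is the variance of item i and s_T² the variance of the total score. The sketch below computes it, together with the “alpha if item deleted” values reported in the Results, from a respondents × items matrix of scored answers. It is an illustration using NumPy, not the SPSS procedure; the function names are ours.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for a 2-D array (rows = respondents, columns = item scores)."""
    x = np.asarray(items, dtype=float)
    k = x.shape[1]
    if k < 2:
        raise ValueError("alpha needs at least two items")
    sum_item_var = x.var(axis=0, ddof=1).sum()  # sum of the individual item variances
    total_var = x.sum(axis=1).var(ddof=1)       # variance of respondents' sum scores
    return k / (k - 1) * (1 - sum_item_var / total_var)


def alpha_if_item_deleted(items):
    """Alpha recomputed with each item removed in turn (needs at least three items)."""
    x = np.asarray(items, dtype=float)
    return [cronbach_alpha(np.delete(x, j, axis=1)) for j in range(x.shape[1])]
```

`alpha_if_item_deleted` is the quantity behind statements such as “removing item 5 would raise α to 0.763”. Because the same `ddof` appears in the numerator and denominator of the variance ratio, its choice does not affect α.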

Construct validity was assessed with the known-groups method, i.e., by testing whether people with experience of dementia and previous dementia training would show greater dementia knowledge on the three tools. We hypothesized that participants with previous experience with dementia, as well as those with previous dementia training, would have greater dementia knowledge, as reflected in higher scores on the dementia knowledge tests. A Kolmogorov–Smirnov test was first applied to determine whether the sum scores of the DKAT2-G, DKAS-G, and KIDE-G were normally distributed. Because the scores were not normally distributed, the Wilcoxon–Mann–Whitney test was used to test the hypothesis. In addition, concurrent validity was assessed with Pearson’s correlations among the three tools to determine whether they all measured the same construct, namely dementia knowledge.
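The known-groups comparison relies on the Mann–Whitney U test with a normal approximation. The sketch below computes U, a tie-corrected z, and a two-sided p-value with NumPy and SciPy. It illustrates the technique rather than reproducing the SPSS output: we assume that SPSS reports the smaller of the two U statistics (which would explain the negative z values in the Results) and applies no continuity correction. The function name `mann_whitney_u_z` is ours.

```python
import numpy as np
from scipy.stats import norm, rankdata


def mann_whitney_u_z(group_a, group_b):
    """Mann-Whitney U with a tie-corrected normal approximation (no continuity correction).

    Returns (U, z, two-sided p), where U is the smaller of U_a and U_b, so z <= 0.
    """
    a = np.asarray(group_a, dtype=float)
    b = np.asarray(group_b, dtype=float)
    n1, n2 = len(a), len(b)
    n = n1 + n2
    pooled = np.concatenate([a, b])
    ranks = rankdata(pooled)  # tied sum scores receive their average rank
    u_a = ranks[:n1].sum() - n1 * (n1 + 1) / 2
    u = min(u_a, n1 * n2 - u_a)
    # Tie correction for the variance of U.
    _, tie_counts = np.unique(pooled, return_counts=True)
    tie_term = (tie_counts**3 - tie_counts).sum() / (n * (n - 1))
    sd_u = np.sqrt(n1 * n2 / 12 * ((n + 1) - tie_term))
    z = (u - n1 * n2 / 2) / sd_u
    p = 2 * norm.sf(abs(z))
    return u, z, p
```

SciPy’s `scipy.stats.mannwhitneyu` with `method="asymptotic"` and `use_continuity=False` should give the same p-value; computing U by hand is mainly useful here for recovering the z statistic that the Results report.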

To test the structural validity of the tools, i.e., whether they retained the factor structure of their original English versions, we conducted confirmatory factor analysis (CFA) with the structural equation modeling program AMOS 20.0 [39]. Structural validity refers to the degree to which a tool’s scores reflect the dimensional structure of the construct it was originally designed to measure. The CFA used maximum likelihood estimation based on the covariance matrix of the items [40, 41]. Model fit was assessed according to the criteria proposed by Hu and Bentler (1999) [42].

To report the CFA results, we followed the recommendations of Schreiber et al. (2006) [43]. More specifically, CFA was performed for the DKAS-G as well as for the KIDE-G, which has a controversial factor structure, as mentioned by the authors of the original version [29, 44]. Since the DKAT2 is unidimensional, as mentioned previously, no CFA was performed for this scale.

Item analysis was also conducted for the three tools to estimate the difficulty of each item and its ability to discriminate between respondents with different levels of dementia knowledge. A further aim was to determine the extent to which incorrect response options attracted respondents with insufficient knowledge. The difficulty index of each item (percentage of correct answers) was categorized into six clusters: very easy (> 90%), easy (75.1%–90%), somewhat easy (50.1%–75%), somewhat difficult (25.1%–50%), difficult (10.1%–25%), and very difficult (< 10%). Because the DKAT2-G and DKAS-G include an “I don’t know” option, the percentage of such responses was also calculated and labeled “item ignorance”. Both item difficulty and item ignorance were estimated separately for participants who had attended dementia training and those who had not. We also estimated the corrected item-total correlation, i.e., the correlation between each item and the total score of the remaining items, to identify items that relate only weakly to the total score. Inter-item correlations examine item redundancy, i.e., the extent to which each item is related to the other items of an instrument, and therefore reflect the extent to which all items assess the same content. Inter-item correlations between 0.2 and 0.4 indicate that each item contributes meaningfully to the total score, whereas higher values suggest that some items are redundant and add little information to the tool.
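The item statistics described above can be computed from a respondents × items matrix of dichotomously scored answers (1 = correct, 0 = incorrect or “I don’t know”). This sketch assumes such 0/1 scoring, which fits the DKAT2-G and KIDE-G; for the 0–2 DKAS-G items, difficulty would need a different definition (for example, the mean item score divided by 2, which is our assumption, not the authors’). The function name and return layout are illustrative.

```python
import numpy as np


def item_analysis(scored, dont_know=None):
    """Item statistics for a 0/1 matrix (rows = respondents, columns = items).

    dont_know: optional boolean/0-1 matrix of the same shape marking "I don't know".
    Returns (difficulty %, ignorance % or None, corrected item-total r, mean inter-item r).
    """
    x = np.asarray(scored, dtype=float)
    n_items = x.shape[1]
    difficulty = 100 * x.mean(axis=0)  # % answering each item correctly
    ignorance = None
    if dont_know is not None:
        ignorance = 100 * np.asarray(dont_know, dtype=float).mean(axis=0)
    total = x.sum(axis=1)
    # Corrected item-total correlation: each item vs. the sum of the *other* items.
    # Items with zero variance (e.g., everyone correct) give NaN correlations.
    item_total = np.array(
        [np.corrcoef(x[:, j], total - x[:, j])[0, 1] for j in range(n_items)]
    )
    r = np.corrcoef(x, rowvar=False)  # item-by-item Pearson correlation matrix
    mean_inter_item = r[np.triu_indices(n_items, k=1)].mean()
    return difficulty, ignorance, item_total, mean_inter_item
```

Splitting the input matrix by dementia-training status and calling the function on each part gives the group-specific difficulty and ignorance indices.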

Finally, floor and ceiling effects were calculated. These effects are considered present when more than 10–15% of participants obtain the lowest (floor) or highest (ceiling) possible sum score, which skews the score distribution. Floor and ceiling effects were also calculated separately for participants who had previously attended dementia training programs.
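A minimal check for floor and ceiling effects counts the share of respondents at the minimum and maximum possible sum score. The 15% flagging threshold below is an assumption taken from the upper end of the 10–15% range mentioned above, and the function name is hypothetical.

```python
import numpy as np


def floor_ceiling(sum_scores, min_score, max_score, threshold=15.0):
    """Percent of respondents at the minimum/maximum possible score, with flags.

    threshold: percentage above which an effect is flagged (assumed 15% here).
    """
    s = np.asarray(sum_scores)
    floor_pct = 100 * np.mean(s == min_score)
    ceiling_pct = 100 * np.mean(s == max_score)
    return {
        "floor_%": floor_pct,
        "ceiling_%": ceiling_pct,
        "floor_effect": floor_pct > threshold,
        "ceiling_effect": ceiling_pct > threshold,
    }
```

For the DKAT2-G, `min_score=0` and `max_score=21`; for the DKAS-G, the possible range under the 2/1/0 scoring rule is 0–50.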

In addition, descriptive statistics (means and SDs for continuous variables; frequencies and percentages for categorical variables) were calculated to describe respondents’ demographic data, experience with dementia (“I know a person with dementia”, “I care for a person with dementia”, and “I work with people with dementia”), and previous participation in dementia training (“I have already attended a dementia training program”). Pearson correlations and chi-square tests were used to examine whether scores on the dementia knowledge tools were associated with demographics, experience with dementia, and previous participation in dementia training programs. The significance level was set at p < 0.05.

Ethics

Before administering the questionnaires, participants read the information sheet, including the research purpose, and signed the consent form about data protection and anonymity. The study was approved by the Scientific and Ethics Committee of the GAADRD (Approved Meeting Number: 87-9/3/2023). The approval follows the new General Data Protection Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data. Additionally, the approval aligns with the principles outlined in the Declaration of Helsinki.

RESULTS

The final sample consisted of 252 adults, all native speakers. Participants’ characteristics are shown in Table 1. The majority of participants were female (84.1%), with a mean age of 45.56 years. The most common educational level was a master’s degree (38.9%). Most participants knew a PwD (82.5%) but did not care for a PwD (71.4%) or work with PwD (85.7%). Regarding previous dementia training, most participants (61.9%) had not attended a dementia awareness course.

Table 1

Participants’ demographics and various characteristics

| Characteristics | Full sample (N = 252), n | Full sample, % | Subgroup¹ (n = 72), n | Subgroup¹, % |
|---|---|---|---|---|
| Age | | | | |
| Mean | 45.56 | | 43.97 | |
| SD | 13.28 | | 13.81 | |
| Gender | | | | |
| Male | 40 | 15.9% | 14 | 20.0% |
| Female | 212 | 84.1% | 56 | 80.0% |
| Education | | | | |
| Less than 12 years | 7 | 2.8% | 1 | 1.4% |
| 12 years | 32 | 12.7% | 9 | 12.9% |
| Vocational training | 27 | 10.7% | 5 | 7.1% |
| Bachelor | 70 | 27.8% | 20 | 28.6% |
| Master | 98 | 38.9% | 30 | 42.9% |
| PhD | 18 | 7.1% | 5 | 7.1% |
| Occupation | | | | |
| College student | 17 | 6.7% | 6 | 8.6% |
| Unemployed | 23 | 9.1% | 8 | 11.4% |
| Retiree | 25 | 9.9% | 7 | 10.0% |
| Nurses | 7 | 2.7% | 2 | 2.9% |
| Therapeutic profession | 31 | 12.3% | 11 | 15.7% |
| Doctor | 7 | 2.7% | 2 | 2.9% |
| Others | 142 | 56.3% | 34 | 48.6% |
| Experience with PwD (yes/no) | | | | |
| I know one or more persons with dementia | 208 | 82.5% | 61 | 87.1% |
| I care for a person with dementia | 72 | 28.6% | 21 | 30.0% |
| I work with PwD | 36 | 14.3% | 12 | 17.1% |
| Participation in a program about dementia | | | | |
| Yes, I participated | 96 | 38.1% | 38 | 54.3% |
| I did not participate | 156 | 61.9% | 32 | 45.7% |

¹Subgroup: participants who completed the three tools again one month later (retest).

DKAT2

Reliability

The internal consistency of the 21-item DKAT2-G, measured by Cronbach’s alpha, was acceptable (Cronbach’s α = 0.760). Each item was positively and significantly correlated with the sum of the other items of the scale (p < 0.0001). No item deletion would increase Cronbach’s α, except for item 5, whose removal would raise it slightly to 0.763.

Test-retest reliability after one month was also acceptable (Cronbach’s α = 0.630); specifically, the average-measure ICC was 0.76, with a 95% confidence interval of 0.62 to 0.85 [F(69,69) = 4.220, p < 0.001]. However, Cronbach’s alpha was questionable (α = 0.610) among respondents who had previous dementia training. This may be because items 1 and 2 were answered correctly by more than 91% of participants, leaving little variance in the answers to these two items. Further details are given in Table 2.
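The reported average-measure ICCs can be cross-checked against their F statistics. The degrees of freedom F(69, 69) are what a two-way analysis of variance gives for 70 retest pairs and two occasions, and for the consistency definition of the ICC the average-measure coefficient equals 1 − 1/F. The text does not name the ICC model, so the two-way consistency model below is our inference from the reported numbers rather than a statement of the authors’ SPSS settings; the function names are illustrative.

```python
import numpy as np


def icc_consistency(scores):
    """Two-way ICC, consistency definition, from an (n_subjects x k_occasions) array.

    Returns (single-measure ICC, average-measure ICC, F, df1, df2).
    """
    x = np.asarray(scores, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_subjects = k * ((x.mean(axis=1) - grand) ** 2).sum()   # between respondents
    ss_occasions = n * ((x.mean(axis=0) - grand) ** 2).sum()  # test vs. retest shift
    ss_error = ((x - grand) ** 2).sum() - ss_subjects - ss_occasions
    df1, df2 = n - 1, (n - 1) * (k - 1)
    ms_subjects = ss_subjects / df1
    ms_error = ss_error / df2
    f = ms_subjects / ms_error
    icc_single = (ms_subjects - ms_error) / (ms_subjects + (k - 1) * ms_error)
    icc_average = (ms_subjects - ms_error) / ms_subjects  # equals 1 - 1/F
    return icc_single, icc_average, f, df1, df2


def icc_average_from_f(f):
    """Average-measure consistency ICC recovered from a reported F ratio."""
    return 1 - 1 / f


print(round(icc_average_from_f(4.220), 2))   # DKAT2-G
print(round(icc_average_from_f(12.646), 2))  # DKAS-G
```

Applied to the reported F values, `icc_average_from_f` gives 0.76 for the DKAT2-G (F = 4.220) and 0.92 for the DKAS-G (F = 12.646), matching the average-measure ICCs reported in the text.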

Table 2

Psychometric properties of the DKAT2-G, DKAS-G, and KIDE-G

| Tool | Cronbach’s α (total sample) | Cronbach’s α (NoDT) | Cronbach’s α (DT) | Mean item-total correlation (total sample) | Mean inter-item correlation (total sample) | Mean item difficulty (total sample) | Mean item ignorance (total sample) |
|---|---|---|---|---|---|---|---|
| DKAT2-G | 0.760 | 0.730 | 0.610 | 0.313 | 0.122 | 61.70% | 38.20% |
| DKAS-G | 0.845 | 0.807 | 0.752 | 0.392 | 0.182 | 64.90% | 35.02% |
| KIDE-G | 0.419 | 0.415 | 0.323 | 0.140 | 0.053 | 71.50% | 28.41% |

NoDT, has not participated in dementia training; DT, has participated in dementia training.

Validity

Following the previous validation of the DKAT2-G by Gkioka et al. (2020) [28], structural validity was not assessed because the tool is unidimensional.

Construct validity was assessed with the known-groups method by comparing DKAT2-G sum scores between participants with and without previous experience with dementia and between those with and without previous dementia training. Because the DKAT2-G sum score was not normally distributed, the non-parametric Mann-Whitney test was used to compare dementia knowledge between groups. Participants with previous experience with dementia had higher DKAT2-G sum scores (“I know a person with dementia”: U = 2527.5, z = -4.681, p < 0.001; “I care for a person with dementia”: U = 4116.5, z = -4.539, p < 0.001; “I work with a PwD”: U = 1470.0, z = -5.995, p < 0.001), as did those with previous dementia training (U = 3449.5, z = -7.215, p < 0.001).

Concurrent validity, one of the main types of criterion validity, was assessed by testing whether DKAT2-G scores were significantly correlated with those of the DKAS-G and the KIDE-G, which also measure dementia knowledge. As shown in Table 3, the DKAT2-G was positively correlated with both the DKAS-G (r = 0.779) and the KIDE-G (r = 0.157). This suggests that the scales measure a related construct, although the correlation with the KIDE-G was weak.

Table 3

Correlation between DKAT2-G, DKAS-G and KIDE-G

| Screening tests | 1 | 2 | 3 |
|---|---|---|---|
| 1. DKAT2-G | – | 0.779** | 0.157* |
| 2. DKAS-G | | – | 0.245** |
| 3. KIDE-G | | | – |

Item analysis

To determine the difficulty of the DKAT2-G items, two indices were extracted: the percentage of correct answers and the level of ignorance, i.e., the percentage of participants who answered “I don’t know” [45]. Difficulty and ignorance levels for the total sample and by previous attendance at dementia training programs are presented in Table 4. For the total sample, three items were very easy, six were easy, six were somewhat easy, four were somewhat difficult, one was difficult, and one was very difficult. Overall, nine items were answered correctly by at least 70% of participants, while three items were answered “I don’t know” by 65% of them. Participants who had previously attended dementia training programs gave more correct answers overall than those without such training, especially on items 4, 14, and 21.
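The six difficulty bands defined in the Methods can be applied mechanically to the total-sample difficulty indices in Table 4. This sketch treats the band limits as “greater than” cut-offs (e.g., 90.1% is very easy and 90.0% is easy) and places a value of exactly 10% in the very difficult band, since the Methods leave that boundary undefined; both choices are our assumptions.

```python
from collections import Counter


def difficulty_band(pct_correct):
    """Six difficulty clusters from the Methods, keyed on % answering correctly."""
    if pct_correct > 90:
        return "very easy"
    if pct_correct > 75:
        return "easy"
    if pct_correct > 50:
        return "somewhat easy"
    if pct_correct > 25:
        return "somewhat difficult"
    if pct_correct > 10:
        return "difficult"
    return "very difficult"


# Total-sample difficulty indices (%) for DKAT2-G items 1-21, copied from Table 4.
DKAT2_G_DIFFICULTY = {
    1: 91.7, 2: 96.0, 3: 33.7, 4: 54.0, 5: 69.0, 6: 83.3, 7: 13.1,
    8: 52.4, 9: 59.9, 10: 63.1, 11: 88.1, 12: 7.3, 13: 90.1, 14: 75.8,
    15: 81.3, 16: 41.7, 17: 40.1, 18: 60.3, 19: 87.7, 20: 27.4, 21: 78.6,
}

counts = Counter(difficulty_band(p) for p in DKAT2_G_DIFFICULTY.values())
print(counts)
```

Run on the Table 4 values, the counts are 3, 6, 6, 4, 1, and 1 items from very easy to very difficult, with item 7 (13.1%) in the difficult band and item 12 (7.3%) in the very difficult band.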

Table 4

DKAT2-G: Item analysis

| Item | Total sample (N = 252): difficulty index % | Total sample: ignorance index % | Participation in dementia training programs (n = 96): difficulty index % | Participation: ignorance index % | No participation in dementia training programs (n = 156): difficulty index % | No participation: ignorance index % |
|---|---|---|---|---|---|---|
| True statements | | | | | | |
| 1 | 91.7 | 8.3 | 96.1 | 3.1 | 88.5 | 11.5 |
| 2 | 96.0 | 4.0 | 97.9 | 2.1 | 94.9 | 5.1 |
| 3 | 33.7 | 66.3 | 46.9 | 53.1 | 25.6 | 74.4 |
| 4 | 54.0 | 46.0 | 75.0 | 25.0 | 41.0 | 59.0 |
| 9 | 59.9 | 40.1 | 68.8 | 31.3 | 54.5 | 45.5 |
| 10 | 63.1 | 36.9 | 70.8 | 29.2 | 58.3 | 41.7 |
| 11 | 88.1 | 11.9 | 93.8 | 6.3 | 84.6 | 15.4 |
| 13 | 90.1 | 9.9 | 97.9 | 2.1 | 85.3 | 14.7 |
| 14 | 75.8 | 24.2 | 89.6 | 10.4 | 67.3 | 32.7 |
| 15 | 81.3 | 18.7 | 91.7 | 8.3 | 75.0 | 25.0 |
| 17 | 40.1 | 59.9 | 51.0 | 49.0 | 33.3 | 66.7 |
| 19 | 87.7 | 12.3 | 92.7 | 7.3 | 84.6 | 15.4 |
| 21 | 78.6 | 21.4 | 94.8 | 5.2 | 68.6 | 31.4 |
| Reverse-coded (false) statements | | | | | | |
| 5 | 69.0 | 31.0 | 78.1 | 21.9 | 63.5 | 36.5 |
| 6 | 83.3 | 16.7 | 91.7 | 8.3 | 78.2 | 21.8 |
| 7 | 13.1 | 86.9 | 18.8 | 81.3 | 9.6 | 90.4 |
| 8 | 52.4 | 47.6 | 66.7 | 33.3 | 43.6 | 56.4 |
| 12 | 7.3 | 89.7 | 10.3 | 92.7 | 12.2 | 87.8 |
| 16 | 41.7 | 58.3 | 57.3 | 42.7 | 32.1 | 67.9 |
| 18 | 60.3 | 39.7 | 77.1 | 22.9 | 50.0 | 50.0 |
| 20 | 27.4 | 72.6 | 39.6 | 60.4 | 19.9 | 80.1 |

On average, participants in the total sample answered 61.7% of items correctly and chose the “I don’t know” option for 38.2%. Those who had received dementia training answered 63% of items correctly and were unsure on 37%, whereas those who had not participated in dementia training answered 55.4% correctly and were unsure on 44.6%. The mean item-total correlation was 0.313 (range 0.093–0.503), and the mean inter-item correlation was 0.122. Item 12 had the lowest item-total correlation (0.093), suggesting that it contributes little to the overall measure of dementia knowledge in the DKAT2-G.

Floor and ceiling effects

Floor and ceiling effects, which relate to content validity, indicate the presence of extreme scores and are considered present when more than 10–15% of participants score at the lowest or highest level of the scale [46]. Of the 252 participants, none obtained the maximum score of 21, two scored 20, and two gave only three correct answers. Thus, no floor or ceiling effects were observed for the DKAT2-G. Similarly, in the dementia training group, no participant scored 21, two scored 20, and none scored lower than 9. In summary, no considerable percentage of participants obtained the highest or lowest possible score, indicating that the DKAT2-G total score was neither too easy nor too difficult in either the total sample or the dementia training group.

We used chi-square tests and Spearman correlations to determine whether DKAT2-G scores were associated with gender, age, education level, previous experience with dementia, and previous participation in dementia training. The DKAT2-G was not associated with gender [χ2(18) = 22.337, p = 0.217], age (r = -0.089, p = 0.161), or education (r = 0.056, p = 0.402), but it was significantly associated with previous dementia experience (“I know a person with dementia”: χ2(18) = 36.870, p = 0.005; “I care for a person with dementia”: χ2(18) = 45.518, p < 0.001; “I work with a person with dementia”: χ2(18) = 48.298, p < 0.001) and previous dementia training [χ2(18) = 60.580, p < 0.001].

DKAS-G

Reliability

The DKAS-G showed a high Cronbach’s alpha (α = 0.845), indicating good internal reliability without evidence of redundancy. Retest reliability after one month was excellent: the average-measures ICC was 0.921 (95% CI 0.87–0.95; F(69,69) = 12.646, p < 0.001). As with the DKAT2-G, deleting any item would not increase Cronbach’s alpha, with the exception of item 4, whose removal would raise α to 0.852.
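Cronbach's alpha and the “alpha if item deleted” check described above follow the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of the total score). The sketch below illustrates it on hypothetical data; it is not the authors' code.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a participants x items score matrix.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))
    """
    k = len(responses[0])
    items = list(zip(*responses))
    sum_item_var = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum_item_var / total_var)


def alpha_if_item_deleted(responses):
    """Alpha recomputed with each item left out in turn (needs >= 3 items)."""
    k = len(responses[0])
    return [cronbach_alpha([list(row[:j]) + list(row[j + 1:]) for row in responses])
            for j in range(k)]


# Hypothetical 4-participant, 3-item example.
data = [[2, 3, 3], [4, 4, 5], [3, 5, 4], [5, 5, 5]]
print(round(cronbach_alpha(data), 3))  # → 0.894
print([round(a, 3) for a in alpha_if_item_deleted(data)])
```

An item whose deletion raises alpha above the full-scale value, as item 4 does for the DKAS-G, is a candidate for removal.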

Validity

Structural validity

The original DKAS consists of four constructs: Causes and Characteristics (items 1–7), Communication and Behavior (items 8–13), Care Considerations (items 14–19), and Risks and Health Promotion (items 20–25). CFA was performed on the covariance matrix of the DKAS-G items using maximum likelihood estimation [39, 42].

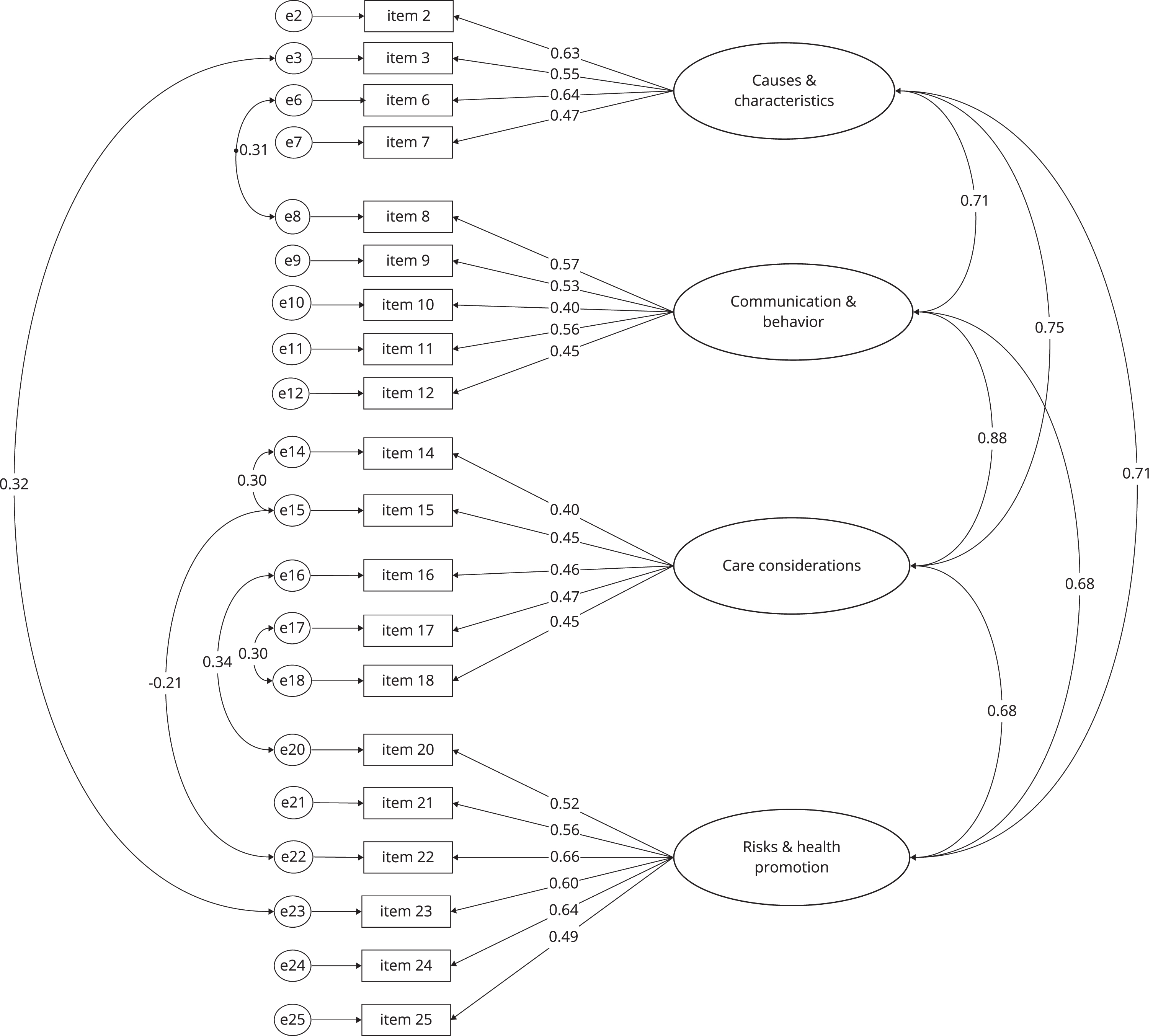

The DKAS-G CFA model included 20 items and provided an acceptable fit. As expected with a large sample, the χ² test was significant (χ² = 253.99, df = 158, p < 0.001; CFI = 0.913, RMR = 0.031, TLI = 0.90, GFI = 0.912, AGFI = 0.883, RMSEA = 0.049). The CFI fell below the recommended threshold of 0.95, and the TLI only reached the threshold of 0.90. According to the model fit criteria [43], the RMSEA indicated a good fit, with the upper bound of its confidence interval below 0.06, and the RMR also indicated a good fit, being below 0.08. Based on the standardized regression weights, items 1, 4, 5, 13, and 19 were excluded from the model because their weights were all below 0.4. In addition, items 1, 4, and 19 had the lowest mean inter-item correlations, all below 0.1, supporting their removal. The error variances of items 3 and 23, items 6 and 8, item 15 with items 14 and 22, items 16 and 20, and items 17 and 18 were allowed to covary because of their high covariance. No item showed a high loading; in particular, all loadings on Factor 3 were low (0.40–0.47). Nevertheless, the four-factor model showed an acceptable fit to the DKAS-G data, suggesting that the DKAS-G has a factor structure similar to that of the original English scale. The CFA model of the DKAS-G and the item loadings are presented in Fig. 1.
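The reported degrees of freedom and RMSEA can be checked by hand. The sketch below assumes a simple-structure model (each retained item loads on one factor, factor variances fixed at 1, all factor covariances free) plus the six error covariances listed above. Under these assumptions it reproduces df = 158 and RMSEA ≈ 0.049 for N = 252. It only checks the arithmetic and does not refit the model.

```python
from math import sqrt


def cfa_df(n_items, n_factors, n_error_covariances):
    """Degrees of freedom of a simple-structure CFA model.

    Assumes each item loads on exactly one factor, factor variances are
    fixed to 1, all factor covariances are free, and the listed error
    covariances are the only additional free parameters.
    """
    moments = n_items * (n_items + 1) // 2      # unique variances + covariances
    free = (n_items                              # factor loadings
            + n_items                            # error variances
            + n_factors * (n_factors - 1) // 2   # factor covariances
            + n_error_covariances)
    return moments - free


def rmsea(chi2, df, n):
    """Point estimate of RMSEA (uses N - 1; some software uses N)."""
    return sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


# 20 items, four factors, six correlated error pairs
# (3-23, 6-8, 14-15, 15-22, 16-20, 17-18).
df = cfa_df(n_items=20, n_factors=4, n_error_covariances=6)
print(df)                                # → 158
print(round(rmsea(253.99, df, 252), 3))  # → 0.049
```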

Fig. 1

Confirmatory factor analysis of the DKAS-G without Items 1, 4, 5, 13, and 19.

Construct validity

In the known-groups analysis, the DKAS-G successfully discriminated between participants with and without previous experience with dementia (“I know a person with dementia”: U = 3048, z = -3.481, p < 0.001; “I care for a person with dementia”: U = 4781, z = -3.253, p = 0.001; “I work with a person with dementia”: U = 1470.0, z = -5.995, p < 0.001) and between those with and without previous dementia training (U = 1627, z = -5.587, p < 0.001).
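The known-groups comparisons rely on the Mann–Whitney U test. The following Python sketch reports the smaller of the two U values with its normal-approximation z, which matches the negative z values shown above. As a simplifying assumption, the variance has no tie correction. With many tied total scores, the |z| produced here is therefore slightly smaller than a tie-corrected value. The example groups are hypothetical.

```python
from math import sqrt


def average_ranks(values):
    """1-based ranks, with tied values sharing the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def mann_whitney(group_a, group_b):
    """Smaller Mann-Whitney U and its normal-approximation z (no tie correction)."""
    n1, n2 = len(group_a), len(group_b)
    ranks = average_ranks(list(group_a) + list(group_b))
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2  # U for group_a
    u = min(u1, n1 * n2 - u1)
    mu = n1 * n2 / 2
    sigma = sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return u, (u - mu) / sigma


# Hypothetical total scores of trained vs. untrained participants.
print(mann_whitney([30, 34, 28, 40, 36], [22, 25, 30, 27, 19, 24]))
```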

Item analysis

We used item analysis to calculate item difficulty. Grouping the items by difficulty, we found that two items were very easy, nine were easy, nine were somewhat easy, two were somewhat difficult, and three were difficult. The difficulty and ignorance indices are presented in Table 5. Participants who had previously attended dementia training programs gave more correct answers overall than those who had not, particularly on items 4, 6, and 16.

Table 5

DKAS-G: Item analysis

| Item | Total sample (N = 252): difficulty index % | Total sample: ignorance index % | Participation in dementia training (n = 96): difficulty index % | Participation in dementia training: ignorance index % | No participation in dementia training (n = 156): difficulty index % | No participation in dementia training: ignorance index % |
|---|---|---|---|---|---|---|
| *Correct answers* | | | | | | |
| 1 | 71.8 | 28.2 | 76.0 | 24.0 | 69.2 | 30.8 |
| 3 | 83.4 | 16.7 | 94.8 | 5.2 | 76.3 | 23.7 |
| 4 | 57.5 | 42.5 | 87.5 | 37.5 | 54.5 | 45.5 |
| 5 | 90.9 | 9.1 | 97.9 | 2.1 | 86.5 | 13.5 |
| 6 | 31.0 | 69.0 | 51.1 | 49.0 | 18.6 | 81.4 |
| 7 | 57.9 | 42.1 | 70.9 | 29.2 | 50.0 | 50.0 |
| 9 | 59.1 | 40.9 | 70.8 | 29.2 | 51.9 | 48.1 |
| 12 | 84.1 | 15.9 | 85.4 | 14.6 | 83.4 | 16.7 |
| 13 | 15.5 | 84.5 | 25.0 | 75.0 | 9.6 | 90.4 |
| 14 | 34.9 | 65.1 | 47.9 | 52.1 | 26.9 | 73.1 |
| 15 | 25.0 | 75.0 | 38.6 | 61.5 | 16.7 | 83.3 |
| 16 | 66.3 | 33.7 | 84.3 | 15.6 | 55.1 | 44.9 |
| 19 | 25.0 | 75.0 | 32.3 | 67.7 | 20.5 | 79.5 |
| 20 | 84.6 | 15.5 | 92.7 | 7.3 | 79.5 | 20.5 |
| *Reverse coded answers* | | | | | | |
| 2 | 77.8 | 22.2 | 85.4 | 14.6 | 73.1 | 26.9 |
| 8 | 51.6 | 48.4 | 66.7 | 33.3 | 42.3 | 57.7 |
| 10 | 75.4 | 24.6 | 86.5 | 13.5 | 68.6 | 31.4 |
| 11 | 91.2 | 8.7 | 97.9 | 2.1 | 87.2 | 12.8 |
| 17 | 62.7 | 37.3 | 71.9 | 28.1 | 57.0 | 42.9 |
| 18 | 60.8 | 39.3 | 66.6 | 33.3 | 57.0 | 42.9 |
| 21 | 72.6 | 27.4 | 78.2 | 21.9 | 69.2 | 30.8 |
| 22 | 88.5 | 11.5 | 96.9 | 3.1 | 83.3 | 16.7 |
| 23 | 86.9 | 13.1 | 92.7 | 7.3 | 83.3 | 16.7 |
| 24 | 87.6 | 12.3 | 91.7 | 8.3 | 85.2 | 14.7 |
| 25 | 82.5 | 17.5 | 88.6 | 11.5 | 78.9 | 21.2 |

On average, 64.9% of responses were correct (either correct, scored 2 points, or possibly correct, scored 1 point), and 35% were “I don’t know” responses. In the dementia training group, 75.1% of responses were correct and 24.9% were uncertain, whereas among those who had not participated in dementia training, 59.3% were correct and 40.6% were uncertain.
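The 2/1/0 scoring described above and the per-item indices of Table 5 can be sketched as follows. The response codes are hypothetical labels for the DKAS answer options. The ignorance index is computed here as the complement of the difficulty index, consistent with how the paired columns in Table 5 add up to about 100%. This is an interpretation, not the authors' stated definition.

```python
# Hypothetical response codes: "T" true, "PT" probably true,
# "PF" probably false, "F" false, "DK" don't know.
POINTS = {
    True: {"T": 2, "PT": 1},   # items whose correct answer is "true"
    False: {"F": 2, "PF": 1},  # reverse-coded items (correct answer "false")
}


def score_item(response, correct_is_true):
    """2 = correct, 1 = possibly correct, 0 = wrong or don't know."""
    return POINTS[correct_is_true].get(response, 0)


def item_indices(responses, correct_is_true):
    """Difficulty index (% scoring 1 or 2) and its complement for one item."""
    n = len(responses)
    correct = sum(score_item(r, correct_is_true) > 0 for r in responses)
    difficulty = 100 * correct / n
    return difficulty, 100 - difficulty


def total_score(answers, key):
    """Sum of item points; with 25 items the maximum is 50."""
    return sum(score_item(a, k) for a, k in zip(answers, key))


print(item_indices(["T", "PT", "DK", "F"], True))           # → (50.0, 50.0)
print(total_score(["T", "PF", "DK"], [True, False, True]))  # → 3
```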

Floor and ceiling effects

The lowest observed score was 5, and no participant achieved the maximum possible score of 50; the highest observed score, 48, was obtained by one person. Thus, no floor or ceiling effects were observed for the DKAS-G. In the dementia training group, as in the total sample, one participant scored 48, the highest score observed in the current study, while no participant scored lower than 17.

Chi-squared tests and Spearman correlations were used to determine whether DKAS-G scores were associated with gender, age, education level, and other variables such as previous experience with dementia and previous participation in dementia training. The DKAS-G was not associated with gender [χ²(41) = 35.158, p = 0.727] or with previous personal experience with dementia (“I know a person with dementia”: χ²(41) = 55.505, p = 0.065; “I care for a person with dementia”: χ²(41) = 14.570, p = 0.446). However, significant relationships were found between the DKAS-G and age (r = -0.196, p = 0.002), education (r = 0.141, p = 0.034), previous work experience with dementia (“I work with a person with dementia”: χ²(41) = 71.520, p = 0.002), and previous dementia training [χ²(41) = 89.541, p < 0.001].
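The degrees of freedom reported for these chi-squared tests follow from the table dimensions. A binary grouping variable cross-tabulated against total score, treated as a categorical variable with m distinct values, gives df = (2 - 1)(m - 1). For example, 42 distinct DKAS-G scores would give the 41 df reported. This reading of the analysis is an assumption, because the contingency tables are not shown. With many sparse cells, some expected counts fall below 5, and the chi-squared approximation becomes less reliable. The sketch computes the Pearson statistic and df only, on hypothetical counts.

```python
def chi_square_independence(table):
    """Pearson chi-squared statistic and df for an r x c table of counts.

    Expected count for cell (i, j) = row_total_i * col_total_j / n.
    Every row and column must have a non-zero total.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


# Rows: trained / not trained; columns: hypothetical score bands.
print(chi_square_independence([[10, 20], [20, 10]]))
```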

KIDE-G

Reliability

The Cronbach’s alpha of the 16-item KIDE-G was 0.419, indicating unacceptable internal reliability. Retest reliability was also low: the ICC after the retest period was 0.763, and the average-measures ICC was 0.619 (95% CI 0.44–0.74; F(69,69) = 4.216, p < 0.001). Deleting item 1, 6, 9, or 13 would increase Cronbach’s α to 0.450, 0.461, 0.423, or 0.425, respectively.
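The retest coefficients can be related to the reported F statistics. Assume a two-way, consistency-type ICC with k = 2 occasions. This is an assumption, because the ICC model is not stated. Under it, df of (69, 69) correspond to 70 participants tested twice, and the average-measures ICC equals 1 - 1/F. F = 12.646 then gives 0.921, matching the DKAS-G retest value, while F = 4.216 gives about 0.763 for the KIDE-G. The sketch below computes both single-measure and average-measures ICCs from hypothetical test-retest scores.

```python
def icc_consistency(*occasions):
    """Two-way consistency ICCs from k repeated measurements of n participants.

    Returns (single-measure ICC(3,1), average-measures ICC(3,k), F for rows).
    """
    data = list(zip(*occasions))  # one tuple of k scores per participant
    n, k = len(data), len(occasions)
    grand = sum(sum(r) for r in data) / (n * k)
    ss_rows = k * sum((sum(r) / k - grand) ** 2 for r in data)
    ss_cols = n * sum((sum(c) / n - grand) ** 2 for c in occasions)
    ss_total = sum((x - grand) ** 2 for r in data for x in r)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    f = ms_rows / ms_error
    single = (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)
    average = (ms_rows - ms_error) / ms_rows  # algebraically 1 - 1/F
    return single, average, f


def average_icc_from_f(f):
    """Average-measures consistency ICC implied by the between-subjects F."""
    return 1 - 1 / f


test = [1, 2, 3, 4, 5]      # hypothetical first administration
retest = [2, 2, 4, 4, 6]    # hypothetical second administration
print(icc_consistency(test, retest))
print(round(average_icc_from_f(12.646), 3))  # → 0.921
print(round(average_icc_from_f(4.216), 3))   # → 0.763
```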

Validity

Structural validity

According to the original study [29] and the study by Melchior and Teichmann (2023) [36], the KIDE has no coherent factor structure. Therefore, structural validity was not assessed for the KIDE-G.

Construct validity

In contrast to the other tools, the KIDE-G did not discriminate between participants with and without previous personal experience with dementia (“I know a person with dementia”: U = 4266.5, z = -0.712, p = 0.476; “I care for a person with dementia”: U = 6393, z = -0.168, p = 0.866). However, those who had work experience with PwD (“I work with a person with dementia”: U = 2871.5, z = -2.538, p = 0.011) or previous dementia training (U = 5414, z = -3.732, p < 0.001) scored significantly higher on the KIDE-G.

Item analysis

In the total sample, four items were very easy, four were easy, four were somewhat easy, and four were somewhat difficult; no item was difficult or very difficult. Participants who had previously attended dementia training programs gave more correct answers overall, although the mean difference was less than 10%. The largest differences (approximately 10–12%) between those who had and had not received dementia training were found for items 3, 7, and 9.

In the total sample, the average percentage of correct responses was 71.6%, and the average percentage of “I don’t know” responses was 28.3%. Among those who had received dementia training, 75.5% of answers were correct and 24.4% were unsure, whereas among those who had not, 69.1% were correct and 30.8% were unsure. The mean inter-item correlation was 0.053 (range -0.164 to 0.497), and the mean item-total correlation was 0.140, ranging from -0.05 to 0.029. Item 6 had the lowest correlation with the total scale.

The inter-item correlation matrix showed many small negative correlations between items, especially for item 6, which correlated negatively with about 10 items. In addition, each item had at most five correlations that were not close to zero, suggesting that the items do not fit the construct they were originally intended to measure. Given that the mean inter-item correlation of a scale should ideally lie between 0.20 and 0.40, the observed value of 0.053 indicates that the items are not reasonably homogeneous; that is, they share too little common variance to measure a single construct. The negative correlations further reduce the reliability of the tool.

Floor and ceiling effects

The lowest observed score, 5, was obtained by two participants, and, in contrast to the other tools, three participants reached the maximum score of 16. Because these participants represented only 0.8% and 1.2% of the sample, respectively, no floor or ceiling effects were observed for the KIDE-G. In the dementia training group, no participant scored less than 8, while two participants scored 16.

Chi-squared tests and Spearman correlations were used to determine whether KIDE-G scores were associated with gender, age, education level, and other variables such as previous experience with dementia and previous participation in dementia training. The KIDE-G was associated with gender [χ²(11) = 116.498, p < 0.001] and age (r = -0.232, p < 0.001), but not with education (r = 0.091, p = 0.173). Significant relationships were also found between the KIDE-G and previous dementia experience (“I know a person with dementia”: χ²(1) = 108.466, p < 0.001; “I care for a person with dementia”: χ²(1) = 45.614, p < 0.001; “I work with a person with dementia”: χ²(1) = 127.653, p < 0.001) and previous dementia training [χ²(1) = 13.869, p < 0.001].

To date, several studies [21, 24, 32] have used scales such as the ADKT, DK-20, and UAB-ADKT to measure dementia knowledge in family carers, health professionals, and students. However, only a few studies have validated dementia knowledge tools in the general population, which is the key population for evaluating dementia training seminars. To our knowledge, apart from the ADKS [26] and the DKAT2 [28], which have been validated in Greek among general practitioners and college students, respectively, no validation studies of dementia knowledge tools have been conducted in Greece, particularly in the general population.

DISCUSSION

The aim of this cross-sectional study was to validate three tools for measuring dementia knowledge in the Greek population: DKAT2, DKAS, and the KIDE, in order to determine which is the most appropriate tool to use.

The results showed that all tools had acceptable to good internal reliability, except for the KIDE-G. Notably, Cronbach’s alpha for each of the three tools was slightly lower among those who had received dementia training than among those who had not; this may reflect low variance on specific items with a high percentage of correct responses. Regarding stability over time, retest reliability ranged from good to very good, with the DKAS-G showing the most satisfactory ICC, similar to the German validation study of the DKAS-D and DKAT2-D. The KIDE-G [36] had similar levels of consistency over time; however, the initial Greek validation study by Gkioka (personal communication) did not calculate internal reliability, so our results cannot be compared with it. Factor analysis was performed only for the DKAS-G, because the DKAT2-G is unidimensional and no coherent structure has been found for the KIDE. According to our results, the DKAS-G retains the factor structure of the original version.

Regarding validity, all three tools discriminated those who had previously attended dementia training programs, and the DKAT2-G and DKAS-G also discriminated those with previous experience of dementia. Moreover, Spearman analysis showed statistically significant correlations among the three tools, indicating satisfactory concurrent validity; that is, the tools appear to measure the same construct. Finally, regarding content validity, no floor or ceiling effects were observed in any of the three tools for the total sample.

For the DKAT2-G, the mean score was 12.97 (SD 3.5; possible range 0–21), slightly lower than in other versions [47, 48]. Cronbach’s alpha (0.760) was good and higher than in the first Greek validation study by Gkioka et al. (2020) [28]. This difference may be explained by the fact that our study was conducted in the general population, whereas Gkioka’s research team recruited undergraduate students, a more homogeneous sample. According to the literature [28], Cronbach’s alpha of the Greek DKAT2 was 0.68. This value is lower than those of the Spanish version administered to nurses [47], the German version (0.78) [36, 48], and the original version (0.86) [30]. Among the non-Greek versions, the Australian version had the lowest Cronbach’s alpha (0.70) [49]. The DKAT2 has also been translated into Brazilian Portuguese [50] and administered in English to a Nigerian population [51], but these studies report no psychometric properties other than item analysis. To our knowledge, the DKAT2 has not been validated in other countries.

Regarding item analysis, the DKAT2-G had the lowest percentage of correct responses (61.7%) compared with the previously mentioned validation studies [27, 47–50]. Notably, our study revealed three very easy items, namely items 1, 2, and 13, consistent with the study of Teichmann et al. (2022) [48]. Like ours, their study was an online survey with similar sample characteristics, which may explain the similarity in item analysis results. Item 1 was also the easiest item for Nigerian physiotherapists, as reported by Onyekwuluje et al. (2023) [51]. The Spanish version by Parra-Anguita et al. (2018) [47] revealed six very easy items, the highest number among existing studies. Item 12 (“Sudden increase in confusion is a characteristic of dementia”) was the most difficult item in our study, in the Greek study by Gkioka et al. [28], and in the Spanish DKAT2 [47]; fewer than 10% of our total sample and of the dementia training group answered it correctly. Since item 12 had the lowest item-total correlation, it should probably be removed from the DKAT2-G; however, it still falls within the acceptance rate. Item 7 also had a low item-total correlation, and because previous studies [36, 48] have found conspicuous scores for item 7, it remains controversial whether it is actually a faulty item. Notably, item 2 was answered correctly by more than 95% of both the total sample and the dementia training group, while item 13 was answered correctly by the vast majority of the dementia training group. Finally, items 4, 16, 18, and 21 were answered correctly by those who had received dementia training.

In contrast to our results, the study by Gkioka et al. (2020) [28] found no very easy items; most items were somewhat easy or somewhat difficult, consistent with the Spanish study [47], specifically its subgroup of nursing college students. A possible explanation is that both studies recruited college students. In our study, the level of ignorance was lower among those with previous knowledge about dementia for all items except item 12. This pattern was observed in the Australian version [49] but not in the Spanish [47] or German [48] versions. Regarding floor and ceiling effects, and in contrast to the study by Melchior and Teichmann (2023) [36], we found no aggregation of scores, especially near the maximum score, in either the total sample or the subgroup that had previously participated in a dementia training program. About half of the total sample (51.1%) scored between 10 and 15 points, while 63.3% of those who had received dementia training scored between 14 and 18.

In summary, the DKAT2-G has good internal reliability and appears to be consistent over time. It also has good validity indices for measuring dementia knowledge and identifying those with previous dementia experience and dementia training. Furthermore, the DKAT2-G is well suited for administration in the general population, in which responses are more likely to be heterogeneous. Hence, it can be used in diverse populations to assess dementia knowledge and measure the effectiveness of dementia awareness programs.

The mean DKAS-G score was 25.87 (SD 8.6; possible range 0–50), again slightly lower than in the German [38] and Spanish versions, specifically among professional caregivers and students [36, 52], but the same as in the Chinese version [53]. Additionally, the mean DKAS-G score of our participants was higher than that of Spanish non-professional caregivers [54]. The Japanese version [54] validated the 18-item DKAS, so our results are not comparable. The DKAS-G had a very good Cronbach’s alpha (0.84) for the total sample, comparable with previous versions from Japan [54], Spain [52], China [53], Turkey [55], and Germany [36], whose Cronbach’s alpha values were 0.79, 0.81, 0.93, 0.83, and 0.87, respectively. In terms of structural validity, our study confirmed the four-factor structure of the original version [30]. The item loadings of the DKAS-G were overall lower than those of the German version; comparable loadings were not reported in the original study by Annear et al. (2016) [30]. Specifically, our CFA model excluded items 1, 4, 5, 13, and 19, whose removal improved the fit of the DKAS-G model. Item 4 is also excluded in the German [36] and Turkish [55] versions, which supports the view that it does not contribute to the factor structure of the DKAS. In agreement with our findings, item 5 is excluded in the Turkish version [55], and item 19 was removed from the German version, supporting the exclusion of these two items from the DKAS-G.

In our model, the CFI and GFI exceeded 0.90 and the TLI reached 0.90, while the RMSEA was 0.049, indicating a good fit. In addition, all retained factor loadings were > 0.30. Compared with other versions, the Turkish version consisting of 17 items [55] shows a better fit. The goodness of fit of our CFA model is similar to that of the Chinese version of the DKAS [53], which retains all 25 original items, and better than that of the German version [36]. Nevertheless, differences between the samples of these studies may partially explain the discrepancies; for example, the Turkish version recruited nurses, professionals, and students, whereas the Chinese participants had previous experience with people with dementia as caregivers. Therefore, greater dementia knowledge due to dementia training and previous experience could explain the better fit of those CFA models.

Regarding item analysis, items 1 and 4 of the DKAS-G correlated poorly with the total score and had low inter-item correlations, consistent with our CFA results indicating that these items should be removed. In addition, item 13 was answered correctly by only 15.5% of respondents, and because it did not improve the fit of the CFA model, it was considered problematic and was therefore removed from the DKAS-G.

In contrast to the German version of the DKAS [36], the DKAS-G had several items with low inter-item correlations, indicating limited coherence among its items. Regarding difficulty, the mean percentage of correct responses on the DKAS-G was higher than on the DKAT2-G, but both were lower than on the KIDE-G. Items 5 and 11 were answered correctly by about 90% of participants and are therefore considered very easy items. The percentage of correct answers in our study was lower than in the German version [36]. The Chinese version by Sung et al. (2021) [53] and the Turkish study by Akyol et al. (2021) [55] do not report item difficulty levels, so our results cannot be compared with theirs. In addition, the percentage of correct responses on the DKAS-G was higher among those with previous knowledge of dementia for all items. Finally, similar to previous studies, the DKAS-G appears able to discriminate groups expected to have greater dementia knowledge, specifically those with previous experience of dementia and those with dementia training, and therefore has good construct validity.

In conclusion, the DKAS-G has very good internal reliability and appears to be stable over time. It also has satisfactory validity indices, providing a coherent four-factor model similar to the original version. The DKAS-G consists of 20 items instead of 25, which improves the fit of the factor model. Three of the five excluded items are considered very easy, while item 13 proved very difficult for our sample. Overall, the DKAS-G has very satisfactory psychometric properties and can be used to measure dementia knowledge in the general population, health professionals, college students, and caregivers. Additionally, in line with the study by Annear et al. (2016) [30], the DKAS-G can be considered an improved version of the DKAT2-G, a view also supported by Melchior and Teichmann (2023) [36], who found that the German DKAS-D has better psychometric properties than the DKAT2-D.

Regarding the KIDE-G, Cronbach’s alpha was unacceptable, in contrast to the original study by Elvish et al. (2014) [29], which found good internal reliability. This low internal reliability reflects low consistency between the items of the scale, meaning that the KIDE-G is not internally consistent as a measure of dementia knowledge. Although the KIDE-G showed good stability in the current study, the German version had low stability, and no other studies have reported retest reliability. The mean inter-item correlation of the KIDE-G (0.053) and its mean item-total correlation (0.140) are both low, whereas the recommended range for the mean inter-item correlation is 0.15 to 0.50. Therefore, its items appear to be poorly correlated and do not measure the construct of dementia knowledge well. Owing to the lack of previous research, no clear comparisons can be made regarding the item analysis of the KIDE-G. However, many KIDE-G items appear to contribute little to the total score because of their poor inter-item correlations. As for average item difficulty, the KIDE-G had the highest percentage of correct responses. This finding is consistent with previous studies in the field [36, 56], which suggest that the main reason for very high scores is the absence of an “I don’t know” response option. Without this option, participants are forced to guess when they do not know the correct answer, which introduces substantial error into the tool. In addition, unlike the other tools, the KIDE-G did not discriminate between those with and without previous personal experience of dementia, although it did discriminate those with previous dementia training. Evidence on the ability of the KIDE to discriminate between those with and without previous knowledge of dementia remains inconsistent.

On the one hand, Elvish et al. (2014, 2016) [29, 44] and Jack-Waugh et al. (2018) [57] were able to identify those with dementia training. On the other hand, Schneider et al. (2020) [56] found no differences between those who had and had not participated in relevant dementia training. Examining floor and ceiling effects of the KIDE-G, we found an aggregation of scores: 25% of both the total sample and the dementia training group scored between 14 and 16. The score distribution showed that one in three participants in the total sample, and almost half of those in the dementia training group, achieved very high scores between 13 and 16, which implies ceiling effects and reduces the accuracy of the scale in measuring dementia knowledge. Consistent with previous findings [36], those who had received dementia education showed a significant ceiling effect, in line with the study by Gamble et al. (2022) [58], who observed significant ceiling effects across different samples, mainly among those with previous dementia knowledge gained through participation in dementia training groups.

Conclusion

The aim of this study was to identify the most appropriate tool to assess dementia knowledge in the Greek adult population by examining the psychometric properties of the DKAT2-G, DKAS-G, and KIDE-G administered to members of the general population, health professionals, and caregivers.

We suggest that the DKAS-G, especially its 20-item short version, has very satisfactory psychometric properties and a very good model fit. We therefore recommend it for assessing dementia knowledge in the general public, for subsequent use in dementia awareness programs and research protocols, and for evaluating the efficacy of psychoeducational interventions implemented in the general population and among caregivers.

Limitations

Despite recruiting a large sample with diverse demographic, socioeconomic, and professional characteristics, our study population is still subject to selection bias. Specifically, the education level was much higher than in the general population, which probably affected our results. Another limitation is the high percentage of participants who knew someone with dementia. Finally, all measurement tools should be evaluated across cultures and languages to determine the best tool for assessing knowledge about dementia.

ACKNOWLEDGMENTS

This study is independent research. The views expressed in this publication are those of the authors. We want to thank Constantina Demosthenous and Fofi Constantinidou from the University of Cyprus, Center for Applied Neuroscience, for providing the already translated DKAS-G, and Mara Gkioka for providing the translated KIDE-G as well as information regarding her unpublished validation study. Finally, we also want to thank Baysalova Taisiya for providing English proofreading of the manuscript.

FUNDING

The authors have no funding to report.

CONFLICT OF INTEREST

The authors have no conflict of interest to report.

DATA AVAILABILITY

The data supporting the findings of this study are available on request from the corresponding author. The data are not publicly available due to privacy or ethical restrictions.

SUPPLEMENTARY MATERIAL

[1] The supplementary material is available in the electronic version of this article: https://dx.doi.org/10.3233/ADR-230161.

REFERENCES

[1] | Rajan KB , Weuve J , Barnes LL , McAninch EA , Wilson RS , Evans DA ((2021) ) Population estimate of people with clinical AD and mild cognitive impairment in the United States (2020-2060). Alzheimers Dement 17: , 1966–1975. |

[2] | World Health Organization ((2023) ) Ageing and health. https://www.who.int/news-room/fact-sheets/detail/ageing-and-health |

[3] | Lian Y , Xiao LD , Zeng F , Wu X , Wang Z , Ren H ((2017) ) The experiences of people with dementia and their caregivers in dementia diagnosis. J Alzheimers Dis 59: , 1203–1211. |

[4] | Frisoni GB , Altomare D , Ribaldi F , Villain N , Brayne C , Mukadam N , Abramowicz M , Barkhof F , Berthier M , Bieler-Aeschlimann M , Blennow K , Brioschi Guevara A , Carrera E , Chételat G , Csajka C , Demonet J-F , Dodich A , Garibotto V , Georges J , Hurst S , Jessen F , Kivipelto M , Llewellyn DJ , McWhirter L , Milne R , Minguillón C , Miniussi C , Molinuevo JL , Nilsson PM , Noyce A , Ranson JM , Grau-Rivera O , Schott JM , Solomon A , Stephen R , van der Flier W , van Duijn C , Vellas B , Visser LNC , Cummings JL , Scheltens P , Ritchie C , Dubois B ((2023) ) Dementia prevention in memory clinics: Recommendations from the European task force for brain health services. Lancet Reg Health Eur 26: , 100576. |

[5] | Knopman D , Donohue JA , Gutterman EM ((2000) ) Patterns of care in the early stages of Alzheimer’s disease: Impediments to timely diagnosis. J Am Geriatr Soc 48: , 300–304. |

[6] | Edwards RM , Plant MA , Novak DS , Beall C , Baumhover LA ((1992) ) Knowledge about aging and Alzheimer’s disease among baccalaureate nursing students. J Nurs Educ 31: , 127–135. |

[7] | Wang Y , Xiao LD , Ullah S , He G-P , Bellis A de ((2017) ) Evaluation of a nurse-led dementia education and knowledge translation programme in primary care: A cluster randomized controlled trial. Nurse Educ Today 49: , 1–7. |

[8] | Alzheimer’s Society ((2023) ) Dementia-friendly communities, https://www.alzheimers.org.uk/get-involved/dementia-friendly-communities, Last updated October 12, 2023, Accessed October 12, 2023. |

[9] | León-Salas B , Olazarán J , Cruz-Orduña I , Agüera-Ortiz L , Dobato JL , Valentí-Soler M , Muñiz R , González-Salvador MT , Martínez-Martín P ((2013) ) Quality of life (QoL) in community-dwelling and institutionalized Alzheimer’s disease (AD) patients. Arch Gerontol Geriatr 57: , 257–262. |

[10] | Spector A , Orrell M , Schepers A , Shanahan N ((2012) ) A systematic review of ‘knowledge of dementia’ outcome measures. Ageing Res Rev 11: , 67–77. |

[11] | Eccleston C , Doherty K , Bindoff A , Robinson A , Vickers J , McInerney F ((2019) ) Building dementia knowledge globally through the Understanding Dementia Massive Open Online Course (MOOC). NPJ Sci Learn 4: , 3. |

[12] | Zimmerman S , Williams CS , Reed PS , Boustani M , Preisser JS , Heck E , Sloane PD ((2005) ) Attitudes, stress, and satisfaction of staff who care for residents with dementia. Gerontologist 45 Spec No 1: , 96–105. |

[13] | Teichmann B , Gkioka M , Kruse A , Tsolaki M ((2022) ) Informal caregivers’ attitude toward dementia: The impact of dementia knowledge, confidence in dementia care, and the behavioral and psychological symptoms of the person with dementia. A cross-sectional study. J Alzheimers Dis 88: , 971–984. |

[14] Hughes J, Bagley H, Reilly S, Burns A, Challis D (2008) Care staff working with people with dementia. Dementia 7, 227–238.

[15] Kosmidis MH, Vlachos GS, Anastasiou CA, Yannakoulia M, Dardiotis E, Hadjigeorgiou G, Sakka P, Ntanasi E, Scarmeas N (2018) Dementia prevalence in Greece: The Hellenic Longitudinal Investigation of Aging and Diet (HELIAD). Alzheimer Dis Assoc Disord 32, 232–239.

[16] Fountoulakis KN, Tsolaki M, Mohs RC, Kazis A (1998) Epidemiological dementia index: A screening instrument for Alzheimer’s disease and other types of dementia suitable for use in populations with low education level. Dement Geriatr Cogn Disord 9, 329–338.

[17] Zaganas IV, Simos P, Basta M, Kapetanaki S, Panagiotakis S, Koutentaki I, Fountoulakis N, Bertsias A, Duijker G, Tziraki C, Scarmeas N, Plaitakis A, Boumpas D, Lionis C, Vgontzas AN (2019) The Cretan Aging Cohort: Cohort description and burden of dementia and mild cognitive impairment. Am J Alzheimers Dis Other Demen 34, 23–33.

[18] Tsolaki M, Paraskevi S, Degleris N, Karamavrou S (2009) Attitudes and perceptions regarding Alzheimer’s disease in Greece. Am J Alzheimers Dis Other Demen 24, 21–26.

[19] Jelastopulu E, Giourou E, Argyropoulos K, Kariori E, Moratis E, Mestousi A, Kyriopoulos J (2014) Demographic and clinical characteristics of patients with dementia in Greece. Adv Psychiatry 2014, 36151.

[20] Kontodimopoulos N, Nanos P, Niakas D (2006) Balancing efficiency of health services and equity of access in remote areas in Greece. Health Policy 76, 49–57.

[21] Dieckmann L, Zarit SH, Zarit JM, Gatz M (1988) The Alzheimer’s disease knowledge test. Gerontologist 28, 402–407.

[22] Gilleard C, Groom F (1994) A study of two dementia quizzes. Br J Clin Psychol 33, 529–534.

[23] Kuhn D, King SP, Fulton BR (2005) Development of the knowledge about memory loss and care (KAML-C) test. Am J Alzheimers Dis Other Demen 20, 41–49.

[24] Barrett JJ, Haley WE, Harrell LE, Powers RE (1997) Knowledge about Alzheimer’s disease among primary care physicians, psychologists, nurses and social workers. Alzheimer Dis Assoc Disord 11, 99–106.

[25] Carpenter BD, Balsis S, Otilingam PG, Hanson PK, Gatz M (2009) The Alzheimer’s Disease Knowledge Scale: Development and psychometric properties. Gerontologist 49, 236–247.

[26] Prokopiadou D, Papadakaki M, Roumeliotaki T, Komninos ID, Bastas C, Iatraki E, Saridaki A, Tatsioni A, Manyon A, Lionis C (2015) Translation and validation of a questionnaire to assess the diagnosis and management of dementia in Greek general practice. Eval Health Prof 38, 151–159.

[27] Toye C, Lester L, Popescu A, McInerney F, Andrews S, Robinson AL (2014) Dementia Knowledge Assessment Tool Version Two: Development of a tool to inform preparation for care planning and delivery in families and care staff. Dementia (London) 13, 248–256.

[28] Gkioka M, Tsolaki M, Papagianopoulos S, Teichmann B, Moraitou D (2020) Psychometric properties of dementia attitudes scale, dementia knowledge assessment tool 2 and confidence in dementia scale in a Greek sample. Nurs Open 7, 1623–1633.

[29] Elvish R, Burrow S, Cawley R, Harney K, Graham P, Pilling M, Gregory J, Roach P, Fossey J, Keady J (2014) ‘Getting to Know Me’: The development and evaluation of a training programme for enhancing skills in the care of people with dementia in general hospital settings. Aging Ment Health 18, 481–488.

[30] Annear MJ, Eccleston CE, McInerney FJ, Elliott K-EJ, Toye CM, Tranter BK, Robinson AL (2016) A new standard in dementia knowledge measurement: Comparative validation of the Dementia Knowledge Assessment Scale and the Alzheimer’s Disease Knowledge Scale. J Am Geriatr Soc 64, 1329–1334.

[31] Thu-Huong PT, Huang T-T (2019) The psychometric properties of dementia knowledge scales: A systematic review. J Nurs Health Care 4, doi: 10.5176/2345-7198_4.1.119.

[32] Shanahan N, Orrell M, Schepers AK, Spector A (2013) The development and evaluation of the DK-20: A knowledge of dementia measure. Int Psychogeriatr 25, 1899–1907.

[33] Polit DF (2008) Nursing research: Generating and assessing evidence for nursing practice, 8th ed., Wolters Kluwer Health/Lippincott Williams & Wilkins, Philadelphia, PA.

[34] Annear MJ, Toye CM, Eccleston CE, McInerney FJ, Elliott K-EJ, Tranter BK, Hartley T, Robinson AL (2015) Dementia Knowledge Assessment Scale: Development and preliminary psychometric properties. J Am Geriatr Soc 63, 2375–2381.

[35] Demosthenous C, Constantinidou F (2023) Exploring illness perceptions and knowledge of disease in informal dementia caregivers [Invited Symposium]. 18th European Congress of Psychology: Psychology: Uniting communities for a sustainable world, Brighton, United Kingdom, 3–6 July 2023.

[36] Melchior F, Teichmann B (2023) Measuring dementia knowledge in German: Validation and comparison of the Dementia Knowledge Assessment Scale, the Knowledge in Dementia Scale, and the Dementia Knowledge Assessment Tool 2. J Alzheimers Dis 94, 669–684.

[37] Tavakol M, Dennick R (2011) Making sense of Cronbach’s alpha. Int J Med Educ 2, 53–55.

[38] Taber KS (2018) The use of Cronbach’s alpha when developing and reporting research instruments in science education. Res Sci Educ 48, 1273–1296.

[39] Arbuckle JL, Amos, IBM SPSS, Chicago.

[40] Arbuckle JL, Wothke W (1999) AMOS 4.0 User’s Guide. Smallwaters Corp, Chicago, IL.

[41] Thompson B, Daniel LG (1996) Factor analytic evidence for the construct validity of scores: A historical overview and some guidelines. Educ Psychol Meas 56, 197–208.

[42] Hu L, Bentler PM (1999) Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Struct Equ Modeling 6, 1–55.

[43] Schreiber JB, Nora A, Stage FK, Barlow EA, King J (2006) Reporting structural equation modeling and confirmatory factor analysis results: A review. J Educ Res 99, 323–338.

[44] Elvish R, Burrow S, Cawley R, Harney K, Pilling M, Gregory J, Keady J (2016) ‘Getting to Know Me’: The second phase roll-out of a staff training programme for supporting people with dementia in general hospitals. Dementia (London) 17, 96–109.

[45] Waltz CF (2010) Measurement in nursing and health research, 4th ed., Springer Pub, New York.

[46] Terwee CB, Bot SDM, Boer MR de, van der Windt DAWM, Knol DL, Dekker J, Bouter LM, Vet HCW de (2007) Quality criteria were proposed for measurement properties of health status questionnaires. J Clin Epidemiol 60, 34–42.

[47] Parra-Anguita L, Moreno-Cámara S, López-Franco MD, Pancorbo-Hidalgo PL (2018) Validation of the Spanish Version of the Dementia Knowledge Assessment Tool 2. J Alzheimers Dis 65, 1175–1183.

[48] Teichmann B, Melchior F, Kruse A (2022) Validation of the Adapted German Versions of the Dementia Knowledge Assessment Tool 2, the Dementia Attitude Scale, and the Confidence in Dementia Scale for the General Population. J Alzheimers Dis 90, 97–108.

[49] Robinson A, Eccleston C, Annear M, Elliott K-E, Andrews S, Stirling C, Ashby M, Donohue C, Banks S, Toye C, McInerney F (2014) Who knows, who cares? Dementia knowledge among nurses, care workers, and family members of people living with dementia. J Palliat Care 30, 158–165.

[50] Piovezan M, Miot HA, Garuzi M, Jacinto AF (2018) Cross-cultural adaptation to Brazilian Portuguese of the Dementia Knowledge Assessment Tool Version Two: DKAT2. Arq Neuropsiquiatr 76, 512–516.

[51] Onyekwuluje CI, Willis R, Ogbueche CM (2023) Dementia knowledge among physiotherapists in Nigeria. Dementia 22, 378–389.

[52] Carnes A, Barallat-Gimeno E, Galvan A, Lara B, Lladó A, Contador-Muñana J, Vega-Rodriguez A, Escobar MA, Piñol-Ripoll G (2021) Spanish-dementia knowledge assessment scale (DKAS-S): Psychometric properties and validation. BMC Geriatr 21, 302.

[53] Sung H-C, Su H-F, Wang H-M, Koo M, Lo RY (2021) Psychometric properties of the dementia knowledge assessment scale-traditional Chinese among home care workers in Taiwan. BMC Psychiatry 21, 515.

[54] Annear MJ, Otani J, Li J (2017) Japanese-language Dementia Knowledge Assessment Scale: Psychometric performance, and health student and professional understanding. Geriatr Gerontol Int 17, 1746–1751.

[55] Akyol MA, Gönen Şentürk S, Akpınar Söylemez B, Küçükgüçlü Ö (2021) Assessment of Dementia Knowledge Scale for the nursing profession and the general population: Cross-cultural adaptation and psychometric validation. Dement Geriatr Cogn Disord 50, 170–177.

[56] Schneider J, Schönstein A, Teschauer W, Kruse A, Teichmann B (2020) Hospital staff’s attitudes toward and knowledge about dementia before and after a two-day dementia training program. J Alzheimers Dis 77, 355–365.

[57] Jack-Waugh A, Ritchie L, MacRae R (2018) Assessing the educational impact of the dementia champions programme in Scotland: Implications for evaluating professional dementia education. Nurse Educ Today 71, 205–210.

[58] Gamble CM (2022) Dementia Knowledge – Psychometric Evaluation in Healthcare Staff and Students, Doctoral Thesis.