An overview of the NFAIS Conference: Artificial intelligence: Finding its place in research, discovery, and scholarly publishing

Abstract

This paper offers an overview of the highlights of the NFAIS Conference, Artificial Intelligence: Finding Its Place in Research, Discovery, and Scholarly Publishing, that was held in Alexandria, VA from May 15–16, 2019. The goal of this conference was to explore the application and implication of Artificial Intelligence (AI) across all sectors of scholarship. Topics covered were, among others: the amount of data, computing power, and the technical infrastructure required for AI and Machine Learning (ML) processes; the challenges to building effective AI and ML models; how publishers are using AI and ML in order to improve discovery and the overall user search experience; what libraries and universities are doing to foster an awareness of AI in higher education; and an actual case study of using AI and ML in the development of a recommendation engine. There was something for everyone.

1.Introduction

“Just as electricity transformed almost everything 100 years ago, today I actually have a hard time thinking of an industry that I don’t think AI (Artificial Intelligence) will transform in the next several years [1]”.

The scientific publishing industry is no exception to the above quote. This conference provided a glimpse as to how the use of Artificial Intelligence (AI) and Machine Learning (ML) are allowing innovative publishers to mine their data and provide information seekers with knowledge rather than a list of answers to queries. Fortunately for me, this was not a conference for the AI computer-savvy, rather it was for non-techies who wanted to learn how ML and AI are being used within the Information Community - by publishers, librarians, and vendors - and as you read this article I hope that you will agree with me that the goal was met.

But listening to the speakers and subsequently writing this article motivated me to learn more about AI and ML simply because I needed to understand the jargon and how it was being used. How old are the technologies? Do they differ and if so, how? I found the answers and will briefly share them with you before you move on to the meat of this paper.

The concept of AI was captured in a 1950’s paper by Alan Turing, “Computing Machinery and Intelligence [2]”, in which he considered the question “can machines think”. It is well-written, understandable by a non-techie like me, and worth a read. Five years later a proof-of-concept program, Logic Theorist [3], was demonstrated at the Dartmouth Summer Research Project on Artificial Intelligence [4] where the term “Artificial Intelligence” was coined and the study of the discipline was formally launched (for more on the history and future of AI, see, “The History of Artificial Intelligence [5]”).

A concise one-sentence definition of AI is as follows: “Artificial Intelligence is the science and engineering of making computers behave in ways that, until recently, we thought required human intelligence [6]”. The article from which that definition was extracted went on to define “Machine Learning” as “the study of computer algorithms that allow computer programs to automatically improve through experience [7]”. ML is one of the tools with which AI can be achieved and the article goes on to explain why the two terms are so often incorrectly interchanged (ML and AI are not the same. ML, as well as Deep Learning, are subsets under the overarching concept of AI). That same article defines a lot of the ML jargon and is worth a read as a complement to some of the more technical papers that appear elsewhere in this issue of Information Service and Use.

AI and ML are critical to scholarly communication in general. On January 16, 2019 the Forbes Technology Council posted the top thirteen industries soon to be revolutionized by AI, one of which is business intelligence;

“Enterprises are overwhelmed by the volume of data generated by their customers, tools and processes. They are finding traditional business intelligence tools are failing. Spreadsheets and dashboards will be replaced by AI-powered tools that explore data, find insights and make recommendations automatically. These tools will change the way companies use data and make decisions [8]”.

The term “enterprises” can easily be replaced by “publishers”, “funding agencies” research labs”, “universities”, etc. One of the conference speakers, Rosina Weber, stated that the richest source of data is the proprietary information held within scientific publications. With access to this information AI can generate the most accurate information with which to make informed decisions - including research decisions for the advancement of science. So read on learn about some of the more recent AI-related initiatives in publishing. Enjoy!

2.Opening keynote

The conference opened with a presentation by Christopher Barbosky, Senior Director, Chemical Abstracts Service (CAS; see: https://www.cas.org/). The point of his presentation was that Machine Learning (ML) has always required high quality data and lots of it. However, data alone is not enough. Successful implementation of AI technologies also requires significant investments in talent, technology, and the underlying processes essential to maximizing the value of all that data.

He began by giving some examples of the amount of data required. It takes four million facial images to obtain 97.35% accuracy in facial recognition; eight hundred thousand grasps are required to train a robotic arm; fifty thousand hours of voice data is required for speech recognition; and it takes one hundred and fifty thousand hours to train an ML model to recognize a hot dog. To get the data, clean it, and maintain it requires a great deal of computing power.

And why is so much data required? He said that in the past the process was rules (human devised algorithms) plus data gave answers. Today it is answers plus data creates the rules - computers can now learn functions by discovering complex rules through training. He suggested that those interested read the book, Learning from Data - a Short Course [9]. I took a quick look at the book and it is technical, but it also has excellent examples of how to build ML models and provides understandable definitions of the jargon used in the field (and by some of the speakers at this conference; e.g., supervised modelling vs. unsupervised modelling, overfitting, etc.). Worth a look!

Barbosky said that ML, AI, and computing power are necessary to keep up with the amount of information being generated today. The volume of discovery and intellectual property is increasing dramatically. He added that 90% of the world’s data was created in the past two years at a rate of 2.5 quintillion bytes per day [10]. In addition 73% of the world’s patent applications are not written in the English language and they are increasing both in their number and in their complexity. He said that today publishers not only must invest in building a robust data collection, but they must also take the time to structure and curate that data. In addition, they must allot appropriate resources to support ongoing data maintenance. Once the “factual” data collection has been structured they must create “knowledge” from those facts to generate understanding, and the third step is to apply AI and ML to create search and analytic services that ultimately supply the user with “reasoning.” The goal is to minimize the time and effort required by the user to discover, absorb, and make “sense” of the information that is delivered to them.

Using CAS as an example he noted that they gather journals, patents, dissertations, conference materials, and technical reports. They then perform concept indexing, chemical substance indexing, and reaction indexing to gather facts. And then, using machines and humans, perform analytics in order to provide the users with insights. The machines handle the low-hanging fruit and the humans handle the more complex material. Using AI and ML, CAS builds knowledge graphs and is able to discern increased connections across scientific disciplines.

Barbosky said that one of the biggest problems today is finding people with the right skills as there is a shortage of data scientists. Indeed, 27% of companies cite a skills gap as the major impediment to data-related initiatives. He compared the data scientist to a chef who needs a pool of talented people to support him/her. The expertise of the team must cover: data acquisition and curation; data governance stewards; data modelers; data engineers; data visualizers (reports/descriptive analytics); and those who develop and maintain the requisite infrastructure. But in addition to the skilled team, a technology “stack” is required to make it all happen. He compared the stack to a pyramid: the foundation is the hardware, the next layer Hadoop [11], the third layer NoSQL [12], the next layer data analytics software; with the resulting Peak being the value provided to users. The peak is where the business opportunities appear: in product data features, in better search results, and in value from unstructured data.

Barbosky summarized as follows: (1) Big data technologies offer vast potential for innovation and efficiency gains across a wide array of industries; (2) AI/ML projects require high quality and varied data; (3) Scientific information will continue to grow in size and complexity; and (4) Invest in a data platform with support from technical and domain experts.

He closed with the following quote:

“The computer is incredibly fast, accurate and stupid. Man is unbelievably slow, inaccurate, and brilliant. The marriage of the two is a challenge and opportunity beyond imagination”.

Barbosky used the quote when showing a photo of a painting entitled Edmond de Belamy [14]. It is believed to be the first piece of artwork created by Artificial Intelligence to be auctioned at Christie’s. It sold for a whopping $432,500!!!

Barbosky’s slides are not available on the NFAIS web site.

3.Building AI and machine learning models

The second speaker was Dr. Daniel Vasicek, Data Scientist and Engineer, from Access Innovations, Inc. (see: https://www.accessinn.com/) who discussed how the application of artificial intelligence (AI) to the indexing process can make discovery services more valuable (although he primarily focused on the challenges faced when building ML models). He noted that Machine Learning (ML) and AI have great potential in every field, including publishing, as text is simply one type of data. Potential applications include optical character recognition (OCR), sentiment analysis, trend analysis, concept extraction, entity extraction, topic assignment, quality analysis, and more.

Vasicek talked about neural networks - a set of algorithms modeled loosely after the human brain - that are designed to recognize patterns. They interpret sensory data through a kind of machine perception, labeling or clustering raw input. He uses them for analysis to fit complex models to the high dimensional data that often occurs in the publishing industry. High dimensionality arises in publishing because we are usually interested in a large number of concepts; for example, a moderate thesaurus will contain thousands of concepts. Useful text will have a large number of words. Therefore, the utilization of AI and ML for the discovery of ideas, sentiments, tendencies, and context requires that the algorithms be aware of many different features such as the words themselves, the length of sentences (and paragraphs), word frequency counts, phrases, punctuation, number of references, and links.

He noted that all data has noise - even publishing data - and that large numbers of adjustable parameters can result in the “overfitting” of analysis models such as those used in ML and AI. Overfitting occurs when a proposed predictive model becomes so complex that it fits the noise in the data and this is a very common problem encountered in ML. Two critical factors lead to overfitting - data uncertainty and model uncertainty. To reinforce these factors he added that that real data has measurement errors and that only the use of quantum mechanics guarantees uncertainty. He noted that even if a room was built to be a perfect square it probably is not “perfect”, just as the orbits of the planets are not perfect ellipses because they are perturbed by Jupiter, Saturn, etc. The point is, models are never perfect!

He said that AI goes back at least two hundred years. In support of this fact he talked about the asteroid Ceres (originally thought to be a planet) that was discovered January 1, 1801 by Giuseppe Piazzi. Piazzi followed it every day as it moved, but had to stop due to an illness. When he returned to his efforts in February of that same year he could not find it (Ceres was “lost” due to the glare of the sun). One of his students, Carl Friedrich Gauss, using mathematics to predict its location, rediscovered Ceres on December 31, 1801 [15]. In his attempt to find it, Piazzi used a circular orbit as a model while Gauss used ellipses as the orbit model. This is an example of how “regularization” methods offer techniques that will reduce the tendency of the model to overfit the data. As a result of regularization, models may do a much better job on new data that is not part of the original training data set. It can force the model to be smoother and reduce the impact of noise.

Vasicek then went on to provide detailed examples that clarified the concepts of “overfitting” and “regularization”. He gave examples of how the same data can be matched perfectly by different models and asked which is correct? He answered that technically all of them are correct, but that the simpler model is most likely to be the better one.

He said that models are always imperfect and that data is always noisy and that we must be aware that both of these problems can affect analysis and reduce the usefulness of our predictions. You need to really “know” the data that you are using and its level of uncertainty. It is important to remember that models that are complex enough to fit noise are not useful and can prove to be useless. When we have an imperfect model and erroneous data, we need to balance the cost associated with the imperfect model against the cost of the erroneous data. He gave the following example. A publisher has five million articles in its databases and wants to develop author profiles for peer review. They apply ML to develop author profiles. Unfortunately, overfitting of the model used leads the AI to assign specific concepts to authors; e.g., “Quantum entanglement” rather than the general term “Physics”. The generation of very specific topics can lead the machine to not suggest an author for peer review because they are not an expert in broader topics such as “Physics”.

In closing, Vasicek said that overfitting is a constant challenge with any ML task as most ML applications may generate very complex models which will actually fit the data “better”, but are actually fitting the noise. He added that the model can learn the noise as well as it can learn the real information and that using concept identification rules for text helps mitigate overfitting. He suggested that those interested take a look at Google TensorFlow. This is an open source ML tool with tutorials and the software is ready to use out of the box. It can be used to build multi-layer neural networks and is good tool with which to experiment with deep learning [16].

Vasicek’s slides are available on the NFAIS website and an article based upon his presentation appears elsewhere in this issue of Information Services and Use.

4.AI trends around the globe

The next speaker was Dr. Bamini Jayabalasingham, Senior Analytical Product Manager at Elsevier (see: https://www.elsevier.com/), who spoke on the use of A rtificial Intelligence to create, transfer and use knowledge in addition to highlighting the current trends for the use of AI around the globe. She noted that AI is often used as an umbrella term to describe computers applying judgment just as a human being would. Yet, there is no universally agreed-upon definition of AI as the term is frequently interchanged (incorrectly, I must add) with Machine Learning (ML). She believes that such a definition is required to ensure that policy objectives are correctly translated into research priorities, that student education matches job market needs, and that the media can compare knowledge being developed. She said that Elsevier is the first to characterize the field of AI in a comprehensive, structured manner using extensive datasets from their own and public sources. These datasets were examined by Elsevier’s data scientists through the application of ML principles and the results were validated by domain experts from around the world.

The body of data was extracted from textbooks, funding information, patents, and media reports and resulted in eight hundred thousand keywords. Eight hundred query terms were mapped against six million articles in Scopus and the output was filtered based on metadata, resulting in six hundred thousand documents that were then analyzed to identify topics.

The results showed that globally, research in the field of AI clusters around seven topics: search and optimization; fuzzy systems; planning and decision making; natural language processing and knowledge representation; computer vision; neural networks; and ML and probabilistic reasoning. The data that they analyzed showed that there are more than sixteen million active authors in the field who are affiliated with more than seventy-thousand organizations, and that there are more than seventy thousand articles, conference papers, and book records related to AI. The number of AI research papers is growing at 12% per year globally. China is the most prolific, followed by the USA, India, Germany, Japan, Spain, Iran, France, and Italy. The share of world publications on AI is growing. The data from 2013 to 2017 show that China has 24%, Europe 30%, the USA 17%, and all other 29%.

She noted that China is very focused on the applied side of AI, with publications targeted towards the topics of computer vision, neural networks, planning and decision making, fuzzy systems, natural language processing, and knowledge representation. Europe is the most diverse, and the USA is very strong in the corporate sector, with a lot of research coming out of IBM and Microsoft. Also, the USA is attracting a lot of talent from overseas to both the corporate and academic sectors.

She said that Elsevier plans to continue its research in the hopes of answering the following questions:

How can we further improve the AI ontology and field definition?

Is there a relationship between research performance in AI and research performance in the more traditional fields that support AI (such as computer science, linguistics, mathematics, etc.)?

How does AI research translate into real-life applications, societal impact, and economic growth? How sustainable is the recent growth in publications, and how will countries and sectors continue to compete and collaborate?

The results of the first stage of research has been published in a report, Artificial Intelligence: How Knowledge is Created, Transferred, and Used: Trends in China, Europe, and the United States and is freely-available to download as a full report [17] and as an Executive Summary [18].

Jayabalasingham’s slides are available on the NFAIS website and her article that discusses global AI trends appears elsewhere in this issue of Information Services and Use. Note that her article has links to many AI reports and studies that you may find to be of interest. Also, another Elsevier speaker at the conference, Ann Gabriel, has a paper in this issue on a similar topic.

5.Reinforcing the need for publishers to evolve

The next speaker was Sam Herbert, Co-founder, 67 Bricks (see: https://www.67bricks.com), who spoke on how publishers need to change in order to remain relevant in an era of Artificial Intelligence. He opened with a quote from Sergey Brin, Co-Founder of Google:

“AI is the most significant development in computing in my lifetime. Every month, there are stunning new applications and transformative new techniques [19]”.

Herbert noted that the time it takes to do an AI analysis has gone from weeks to hours. He mentioned an organization, Open AI (see: https://openai.com), whose charter is to see that Artificial Intelligence benefits all of humanity and the resource section of their website offers free software for training, benchmarking, and experimenting with AI - definitely worth taking a look. He gave the example of how medical researchers today are able to generate data from brain scans (Note: the importance of this data was discussed at the 2019 NFAIS Annual Conference by Dr. Daniel Barron, a resident psychiatrist at Yale University. An overview of the conference will be published in Information Services and Use this fall [20]. I was fascinated by the use of AI to decode brain data and found an article that discusses software developed in 2017 that allows for ‘decoding digital brain data’ to reveal how neural activity gives rise to learning, memory, and other cognitive functions [21].)

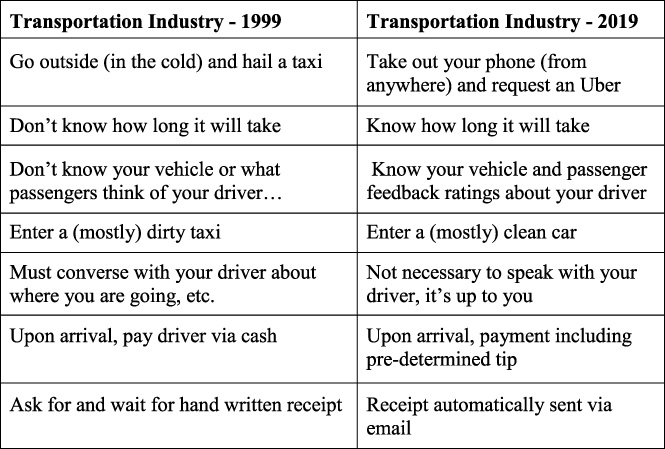

Herbert went on to say that if publishers want to remain relevant, they have to provide a better user experience. He gave Uber, Google, and Amazon as examples of major innovators who have revised traditional industries by doing just that. The following is an example that he provided of how the transportation industry has been transformed:

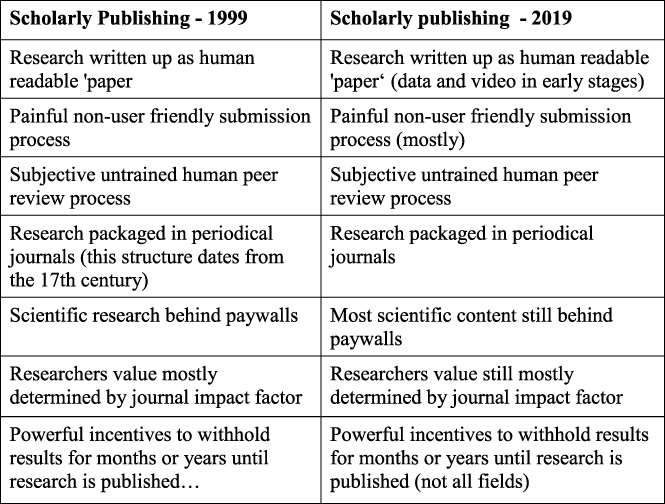

In comparison, he said that in his opinion, the user experience offered by scholarly publishers has not really changed much since 1999, and offered the following example:

In comparison, he said that in his opinion, the user experience offered by scholarly publishers has not really changed much since 1999, and offered the following example:

He said that the importance of ease, speed, and access to content is as important as the quality of the information, and noted that academic publishers can deliver better user experiences by developing a product mindset underpinned by data “maturity” and new software paradigms. He said that data maturity is defined by the publisher’s ability to store, manage, create, and use data to deliver value to their users. He said that “low” maturity (e.g., document-based storage and access), requires much effort on the part of the user to access, digest, and use the data. On the other hand “high” maturity (/e.g., information in context, insights (both predictive and prescriptive) offers an experience that requires far less effort on the part of the user. He added that users now expect customized knowledge and tailored recommendations delivered digitally. Data is at the heart of everything and by increasing their data maturity publishers will be able to develop the innovative products and that users demand. (This echoes the comments made during the opening keynote!)

He said that the importance of ease, speed, and access to content is as important as the quality of the information, and noted that academic publishers can deliver better user experiences by developing a product mindset underpinned by data “maturity” and new software paradigms. He said that data maturity is defined by the publisher’s ability to store, manage, create, and use data to deliver value to their users. He said that “low” maturity (e.g., document-based storage and access), requires much effort on the part of the user to access, digest, and use the data. On the other hand “high” maturity (/e.g., information in context, insights (both predictive and prescriptive) offers an experience that requires far less effort on the part of the user. He added that users now expect customized knowledge and tailored recommendations delivered digitally. Data is at the heart of everything and by increasing their data maturity publishers will be able to develop the innovative products and that users demand. (This echoes the comments made during the opening keynote!)

Herbert gave an example of how his organization was able to help SAGE Publishing improve their customer relations. Their sales team worked to create tailored value reports containing detailed information about how SAGE products fit each customer’s information needs. In academic institutions this involved examining course curricula together with research profiles of each institution and analyzing them against SAGE content - a highly-manual, time-consuming effort. SAGE asked 67 Bricks to undertake an R&D project to create a working prototype tool that would take a description of an educational course and return a list of SAGE content relevant to the course. Using Neuro-linguistic Programming Techniques, a working prototype was developed and has since been rolled-out to members of the sales, marketing, and editorial teams [22]. This has allowed SAGE to save time and money and has removed some tedious manual tasks from the editorial staff.

In closing, Herbert noted that the publishing industry is one of many that is rapidly changing as a result of AI applications. Scholarly publishers need to focus on delivering new and improved user experiences and he believes that by becoming a data-driven product company publishers will be well- positioned in this changing environment. He concluded by saying that the future of data-driven products is as follows:

| ∙ Predicting high impact research | ∙ Understanding the links between |

| ∙ Improving marketing communications | research objects |

| ∙ Delivering customized/personalized | ∙ Augmenting/automating peer review |

| experiences | ∙ Predicting emerging subject areas |

| ∙ Helping researchers discover content | ∙ Identifying peer reviewer |

| ∙ Delivering information services rather | ∙ Adaptive learning products Cross selling |

| than documents | across content domains |

| ∙ Selling data to machine learning | ∙ Unlocking value in legacy content |

| companies | ∙ Automated creation of marketing |

| ∙ Creating automated or semi-automated | materials Improve internal content |

| content | discovery and research |

Herbert’s slides are available on the NFAIS website and a brief article based upon his presentation appears elsewhere in this issue of Information Services and Use.

6.Managing manuscript submissions and Peer review

The next speaker was Greg Kloiber, Senior User Experience Designer, ScholarOne Manuscripts, at Clarivate Analytics (see: https://clarivate.com/), who spoke about a pilot project that they implemented that uses Artificial Intelligence (AI) to help authors submit higher-quality papers and also speeds up the editorial evaluation process by providing insightful statistics. ScholarOne Manuscript is an online tool that allows hundreds of publishers to manage their submission and peer-review workflows and currently has ten million active users. He noted that the volume of papers going through the publishing workflow continues to rise and that there has been a 6.1% annual growth of submissions since 2013. In the 2015–2017 time period 5.5million new papers have been submitted to ScholarOne journals. 33% were accepted after review; 27% were rejected after review; and 40% were rejected without review. While screening is important, it takes a lot of time and effort for editors [23]. He noted that 2.3 reviewers are needed for each submission and that it takes five hours for each review [24]. In addition, it takes a little over sixteen days (16.4) to turnaround a review [25]. He referred the audience to another in which similar statistics were shown [26].

What ScholarOne wanted to do was to reduce the time required to check and screen every paper and also reduce the number of papers that are rejected after peer review. They partnered with UNISILO (see: https://unsilo.ai), a company in Denmark that provides AI tools for publishers, and entered into a pilot project using UNISILO’s Evaluate, a suite of APIs (Application Programming Interfaces) that help publishers screen and evaluate incoming manuscript submissions, assisted by Artificial Intelligence (AI) and Neuro-Linguistic Programming tools.

The six-month pilot consisted of seven major publishers with forty-three participating editors from around the world. More than twenty qualitative interviews were conducted before, during, and after the pilot. The tool had six screening widgets: key statements, key words (topics), technical checks, related papers, journal matching, and reviewer finder (the latter was not used as ScholarOne already had a similar tool). More widgets are planned and include industry trends and a language qualifier. The initial response from editors was the following statement: “Usually people have a suspicion that something might need to be checked, not the other way around. This (tool) changes that!”

Kloiber said that as a result of the pilot they learned that the title “editor” does not always translate into the same job function - those participating had multiple roles: Editor, Associate Editor, Senior Editor, Managing Editor, Executive Editor, Editor-in-Chief, Clinical Editor, Development Editor, Section Editor, Publisher, Publishing Editor, Senior Publishing Editor, Head of Education, International Audience Editor, and Journal Manager/Manager Online Submission Systems. They found the widgets to be valuable and believe that authors also might benefit by the tool to help with abstracts, keywords, and the identification of journals to which they should submit their manuscript. He said that ScholarOne will begin with the key statement and keyword widgets as reviewers said that the combination of those two were sufficient to make a decision for accepting/rejecting a manuscript. They also used “key statements” in preparing rejection letters.

He said that an interesting side fact learned was that 25% of the editors fear that AI will eliminate their jobs and he had to reinforce the fact that this was to assist them and improve productivity, not take away positions. Some AI lessons learned were that:

Can a keyword have two or more words? The answer was “yes” and AI had to determine if it was a sentence or what.

The AI needs to address row/page numbering within a PDF proof.

The AI needs to consider when a dot (.) is a decimal point or a period.

The AI needs to be able to handle the various taxonomies within a research field.

Kloiber said that the next steps include using authors and editors to “train” the AI and to roll out the keyword and key statement widgets. He recommended the following additional reading material for those interested in learning more:

Using AI to solve business problems in scholarly publishing Author: Michael Upshall, UKSG, https://insights.uksg.org/articles/10.1629/uksg.460/, accessed September 12, 2019.

Mythbusting AI: What is all the fuss about? Author: Michael Upshall, Wiley Online Library, https://www.onlinelibrary.wiley.com/doi/full/10.1002/leap.1211, accessed September 12, 2019.

Video: The Silverchair Universe Presents: UNSILO, available at: https://www.youtube.com/watch?v=u4U5TM7APLs, accessed September 12, 2019.

Kloiber’s slides are available on the NFAIS website.

The final speaker of the day was Donald Samulack, President, U.S. Operations, Editage/Cactus Communications (see: https://www.editage.com), who also discussed a decision-making tool for manuscript evaluation, entitled Ada (see: https://www.editage.com/Ada-by-editage.html).

Samulack opened with a description of the evolution of the modern information era as follows:

The Internet: If only I had access to more information

Computing Power: If only I could process more information

Semantic Search: If only I could extract the right information easier/faster

Machine Learning/Artificial Intelligence: If only I was presented with information that was relevant, digested, and tailored to my needs

Wearable/Implanted Technologies: If only I was integrated with the knowledge and world around me

Editage serves both authors and publishers - the former by providing diverse editing services and the latter by providing a manuscript evaluation tool that can be integrated into their workflow systems. They also have a Data Science lab, a Natural Language Processing lab, and an Artificial Intelligence lab.

He noted that the challenge that they address for publishers is that it can take up to four months from the time that an author submits their manuscript to the time when they are informed if the manuscript has been accepted or rejected. Also, during that process the screening by editors is largely manual and subjective. Their goal was to provide editors with an objective tool with which to make their decisions and, as mentioned by Kloiber earlier, to reduce the processing time. He reinforced the prior speaker’s comments by saying that the scientific research output doubles every six years; that editorial screening is increasingly critical, but time-consuming; that one out of five manuscripts are rejected during “desk review”; and that there is a downward pressure on publishing time and costs. As a result of these trends the mission of Editage’s Ada is to be “an industry-first, automated, customizable, manuscript screening solution that saves publication costs and time downstream for academic publishers”. “Ada” stands for Automated Document Assessment”, but the name is also in honor of Augusta Ada King, Countess of Lovelace, who was an English mathematician and writer, chiefly known for her work on Charles Babbage’s proposed mechanical general-purpose computer, the Analytical Engine [27].

One of their key focuses was being able to perform a readability assessment of manuscripts. They defined readability as the ease with which a reader can understand a written text. Samulack said that according to Peter Thrower, PhD, Editor-in-Chief of Carbon, one of the top reasons of manuscript rejection is language comprehension [28]. Editage started with public algorithms, but soon realized that none of them provided the fine-tuning required for academic content. In addition, the accuracy was very low and inconsistent with human assessment. So they went back to the drawing board with their own in-house linguists and studied hundreds of manuscript - a effort that resulted in a proprietary formula that looks at more than thirty-plus different metrics of language structure, composition, spelling, sentence and paragraph lengths, and writing complexity (think back to Vasicek’s comments about the ML and AI problems that are related to modelling large numbers of variables). Their algorithm has four components: manuscript assessment, publication ethics, peer review assistance, and post-acceptance assistance.

The output is a very clear, visual “report card” that ranks a document’s overall readability and compliance with the various elements checked; e.g. spelling, reference structure, potential plagiarism, etc.

He noted that Ada has a secure RESTful API (Application Programming Interface) that can be integrated into any publisher manuscript management system, and that it offers a secure web-based portal that can be used by a publisher’s editorial desk to upload/bulk upload and get assessment reports of the manuscript(s). They have now partnered with Frontiers, an open access publisher, who currently uses the system as part of their own workflow [29]. It has been integrated into their manuscript management workflow and intercepts manuscript pre-editorial desk workflow via an API. More than twenty thousand manuscripts were processed in the first three months.

Samulack said that other enhancements are coming down the road. These include:

Automated discoverability using concept tagging and meta tagging; a custom report to verify specific publisher checks (computer assisted - expert led; an expert verifies automated checks, performs some manual ones, and creates a custom report that allows journal editors to reach quicker decision); the provision of badges for readability, publication ethics, reproducibility, statistical, image integrity, presentation quality, research quality, and data availability; and an outsourced, expert-led technical check/peer review. He noted that some alternate use cases for the system are: (1) Instant feedback to authors on submission quality (an editing service recommender); (2) Automatic identification of required copyedit level; and (3) Automated copyedit quality assessment to improve efficiency and reduce manual error through a pre/post quality comparison.

He added that a demonstration of the system can be requested at: https://www.editage.com/Ada-by-editage.html.

Samulack’s slides are available on the NFAIS website.

7.AI and the science & technology cycle

The second day of the conference began with a plenary session given by Dr. Rosina O. Weber, Associate Professor, Department of Information Science, College of Computing and Informatics, Drexel University, who spoke about the challenges and potential benefits of applying Artificial Intelligence in scholarship. She opened her presentation with a definition of Artificial intelligence as follows:

“A field of study dedicated to the design and development of software agents that exhibit rational behavior when making decisions.”

She added that AI agents make decisions and exhibit rational behavior as they execute complex tasks such as classification, planning, design, natural language, prediction, image recognition, etc. She added that there are three waves of AI: describe, categorize, and explain. The first wave describes represented knowledge (intelligent help desks, ontologies, content-based recommenders, etc.). But she added that as a Society we have accumulated a great deal of data and it changes quickly, so that the original methods of converting data into information and letting humans make decisions no longer work effectively. The second wave, “categorize”, is based on statistical learning and, according to Weber, is where AI and Machine Learning (ML) are today (image and speech recognition, sentiment analysis, neural networks, etc.). The data deluge can only be managed with automated decision-making agents. But again, this wave has limitations, with the major problem being that humans do not want a machine to make decisions for them [30]. The third wave, “explain”, is where software agents and humans become partners, with humans serving as “managers” of the work performed by multiple decision-making agents. It is in this wave that the AI systems are capable of interacting with humans, explaining themselves, and adapting to difference contexts.

Weber then went on to describe the Science and Technology Cycle (S&T Cycle) in which legislators identify societal demands and then provide funding agencies with the resources with which to support the requisite research. Researchers then use the funds for the efforts required to create novel scientific output that ultimately will be summarized in scientific publications. Researchers teach new scientists, disseminate their research results, and transfer the copyright of their manuscripts in order to be published in peer reviewed journals. The new knowledge that is an outcome of the funded research is then expected to be converted into wealth as it provides the predicted solutions to societal demands.

Weber noted that the S&T Cycle has problems:

Neither the U.S. Congress nor the Funding Agencies really know exactly how much money is required to obtain the outcomes needed to meet specific societal demands.

A great deal of money supports the administration of funding agencies and their processes.

Education itself is expensive. Teachers continually design, deliver, and revise existing and new courses, design new assignments, grade them, and provide repetitive feedback.

Textbooks are continually being revised and updated and new ones created - often being out of date by the time they make it through the publishing infrastructure.

The production of scientific knowledge is inefficient - much research is actually redundant.

Scientific publishing, like other industries, are at risk to losing their niche markets to the big Tech Giants such as Microsoft, Google, Amazon, etc. (e.g. Microsoft has 176 million papers; Elsevier has 75 million papers!)

No one knows how many or which specific scientific contributions actually fulfill the desired needs.

Weber said that the use of Artificial Intelligence can solve these problems. It can be used to automate literature reviews [31]. Doing this would have the added benefit of being able to answer the following questions: What are the open research questions? Which research contributions are redundant? And which research contributions are contradictory? AI can be used to determine the effectiveness and efficiency of funding allocations by associating scientific publications to funding projects and analyzing the outcomes. What is the impact of funded vs. non-funded research? An automated manipulation of the contents of scientific publications will ultimately enable studies to determine how much wealth is driven by federally-funded research. AI can help to populate expert-locator systems by analyzing the most recent projects of researchers. AI can help identify the true cost of research. The data will reveal patterns in expenditures combined with scientific contributions. AI can provide the basis for textbook content, curricula, etc. based upon an analysis of the most recent scientific contributions and job market predictions.

Weber said that leveraging the combination of scientific data and AI will have many benefits. It will allow humans to maximize their expertise while managing AI agents to do the work. New automated services will emerge to help all those working in the S&T Cycle. Aligning education content to the job markets will increase the effectiveness and efficiency of education in populating requisite workforces. Indeed, applying AI to the S&T cycle will;

Increase the understanding of the Cycle itself

Make the Cycle truly transparent

Identify research gaps

Identify redundant work

Identify conflicting results

Provide easy access to scientific facts

Facilitate better planning and budgeting of scientific research

Identify which scientific disciplines generate wealth and which do not

According to Weber the future of AI is the third wave, explainable AI (XAI). Humans will supervise their partners, the AI agents, who can explain their decisions, adapt to specific contexts, learn from experience, adopt ethical principles, and comply with regulations. The challenges to the realization of the third wave are: the change in the nature of work - are humans ready to be the “boss” of AI agents; the need for humans to be able to trust the agents; and collaboration among publishers.

In closing Weber said that the S&T Cycle is a cycle of data and of users in need of services. The richest source of data is the proprietary information held in scientific publications - all of the information, not only the abstracts. Without this data AI cannot generate the best information that will inform the best decisions and she made a plea to the publishers in the audience to create alliances to make the third wave of AI a reality. She also suggested the following reading materials for those interested in learning more:

• Qazvinian, V., Radev, D. R., Mohammad, S. M., Dorr, B., Zajic, D., Whidby, M., Moon, T., “Generating Extractive Summaries of Scientific Paradigms,” Journal of Artificial Intelligence Research, Vol. 46, pp. 165–201, February 20, 2013, available at: https://www.jair.org/index.php/jair/article/view/10800/25780, accessed September 13, 2019.

• Weber, R. O., Johs, A. J., Li, J., Huang, K., “Investigating Textual Case-Based XAI,” the 26th International Conference on Case-based Reasoning, Stockholm, Sweden, July 9–12, 2018, available at: https://www.researchgate.net/publication/327273653_Investigating_Textual_Case-Based_XAI, accessed September 13, 2019.

• Weber, R. O., Gunawardena, S., “Designing Multifunctional Knowledge Management Systems,” Proceedings of the 41st Annual Hawaii International Conference on System Sciences (HICSS-41), Jan. 2008 Page(s): 368–368.

• Weber, R. O., Gunawardena, S., “Representing Scientific Knowledge. Cognition and Exploratory Learning in the Digital Age,” Rio de Janeiro, Brazil. 6–8 November, 2011, available at: https://idea.library.drexel.edu/islandora/object/idea:4146, accessed September 13, 2019.

Weber’s slides are available on the NFAIS website and an article based upon her presentation appears elsewhere in this issue of Information Services and Use.

8.Adding value to the research process

The next speaker was Ann Gabriel, Senior Vice President Global Strategic Networks, Elsevier who discussed how Elsevier is using Artificial Intelligence (AI) and Machine Learning (ML) to bring new value to research. She said that Elsevier is attempting to evolve from a traditional publisher into a technology information company with AI included in its arsenal of technologies. (Note: her presentation complemented the earlier talk by Dr. Bamini Jayabalasingham).

She said that their goal is to provide their customers with the right insights based on Elsevier’s high quality content combined with deep data science and domain expertise. By 2021 they plan to deliver AI capabilities that are scalable, re-usable, and at the best quality possible through: enabling digital data enrichments and structuring at the earliest point and in the most automated ways; by moving from computer-assisted to human-assisted enrichment; by incorporating usage data for the best recommendations and personalizations; by identifying the best features and algorithms to be able to do state-of-the-art predictive analytics; and by offering AI as a service. They are using new capabilities (ML, AI and Natural Language Processing (NLP) to increase the utility of their content via text mining and data analytics and have built a data science team of approximately one hundred full time equivalent employees. Elsevier has about seven thousand employees around the globe, and one thousand of them are technologists. Why? Users want knowledge that is tailored to their exact needs of the moment in their workflow and their wants and expectations are technology-driven having been shaped by the emergence of AI, Knowledge Graphs, and new user experiences.

She noted that when users come across an unknown term in an article, they stop reading, open up Wikipedia, and look up the unknown term to get definitions and background information about the concept. What Elsevier has done via a combination of data, AI, and product development, is to make the top pages of ScienceDirect, Elsevier’s website that provides subscription-based access to a large database of scientific and medical research [32], far more user-friendly so users get exactly what they need when they need it - summarized article content, additional relevant, enriched content with links, and good definitions. She admitted that they faced challenges in doing this; e.g., the need to automatically identify good definitions from text; the fact that they are dealing with a large amount of data; that most sentences are not definitions and that sentences that look like definitions often are not; being able to rank definitional sentences; and the fact that many concepts are ambiguous. Elsevier relies on automation, strong predictive ML models; human feedback; and disambiguation tools. The automated processes make Topic Page creation and content enrichment scalable; about one hundred thousand Topic Pages are generated from ScienceDirect content and more than a million articles are being enriched with links to Topic Pages. They have plans to further expand the coverage to more domains. As of the conference there had been more than three million page views and it is freely-accessible to ScienceDirect customers. An article describing the technology and examples of output can be accessed at: https://www.elsevier.com/solutions/sciencedirect/topics/technology-content-a-better-research-process.

In addition, Elsevier is using technology to identify scientific topics that are gaining momentum and therefore are likely to be funded. Their ground-breaking, new technology takes into consideration 95% of the articles available in Elsevier’s abstract, indexing and citation database, Scopus [33] and clusters them into nearly ninety-six thousand global, unique research topics based on citation patterns. Aimed at portfolio analysis, Elsevier has identified these “Topics of Prominence” in science using direct citation analysis on the citation linkages in the full Scopus database. As a result, they have created an accurate model that is suitable for portfolio analysis (the methodology can be easily reproduced, but requires a full database). Gabriel added that they have created a topic-level indicator - Prominence - that is strongly correlated with future funding and noted that funding-per-author increases with increasing topic prominence. This tool [34] (Topics and the Prominence Indicator) enables stakeholders in the science system to have the knowledge necessary to make portfolio decisions.

Gabriel closed by saying that Elsevier has additional AI initiatives planned, and recommended that the audience take the time to read the Elsevier report on AI that was noted earlier by Dr. Bamini Jayabalasingham.

Gabriel’s slides are not on the NFAIS website, but a paper based upon her presentation appears elsewhere in this issue of Information Services and Use.

9.Using AI to connect content, concepts and people

The next speaker in this session was Bert Carelli, Director of Partnerships, TrendMD (see: https://www.trendmd.com), a company that uses Artificial Intelligence (AI) and Machine Learning (ML) to help publishers and authors expand their readership to very targeted audiences.

Carelli opened his presentation with a brief overview of TrendMD. The company was founded in 2014 by professionals from academic research, scholarly publishing, and digital technology. It was nurtured by Y Combinator - the startup accelerator that incubated Reddit, Dropbox, and Airbnb (see: https://www.ycombinator.com/). As of the conference the company had sixteen employees, with management in Toronto and California, and was being used by more than three hundred scholarly publishers on nearly five thousand websites.

He noted that more than 2.5 million scholarly articles are published each year - more than 8,000+ each day. Fifty percent of the articles are never read [36] and a much higher percentage are never cited [37]. He said that there are two sides to the problem: (1) researchers want to find papers that are most useful to their work and (2) publishers want to ensure that their journal articles are found and read. He said that a recent report [38] indicates that browsing is core to discovery. Whether it’s the Table of Contents of a journal or a search results page, browsing is something that we all do - information seekers are open to serendipity. That same report indicates that users find that the most useful feature on a publisher’s website is an indication of content that is related to the articles that they retrieve from a search (the “recommender” feature). TrendMD does just that: it allows users of a publisher website to discover new content, based on what they are reading; what other users like them have read; and on what they have read in the past. He added, however, that simply displaying “Related Content” is not particularly a new or unique idea; e.g. PubMed related content and other “More Like This” links have been around for many years.

TrendMD generates recommendations via collaborative filtering - similar to how Amazon product recommendations are generated. The Journal of Medical Internet Research (JMIR) performed a six-week A/B test comparing recommendations generated by the TrendMD service, incorporating collaborative filtering, with recommendations generated using the basic PubMed similar article algorithm, as described on the NCBI website [39]. The test showed that the quality of recommended articles generated by TrendMD outperforms PubMed related citations by 272% [40]. But it is not just a recommender system. What is truly unique (and what impressed me most) is that their system has created a cross-publisher network that helps to increase article readership significantly [41], and a system that is supported by the TrendMD credit system. Publishers earn credits when they recommend (sponsor) an article on another publisher’s site and “spend” credits when the reverse happens.

Carelli noted that recommendations are broken into two sections when displayed on the screen: internal links (non-sponsored articles on the publisher’s own site) on the left of the screen (or on top in a vertical widget) and sponsored links (recommended articles on another publisher’s site) on the right of the screen. Publishers earn one-half a traffic credit for each reader that they send to another website within the TrendMD network and spending one traffic credit gets them one new reader to their website from other websites within the TrendMD network (Note: is this perhaps the beginning of the potential collaboration across publishers for which Rosina Weber gave a plea during her presentation??)

Why do so many publishers use TrendMD? Carelli said that it boils down to one thing – the company helps them maximize their growth. The statistics that he quoted were impressive: five million monthly clicks by one hundred twenty-five million unique users around the world; eighty-five percent of U.S. academics are reached as well as ninety percent of U.S. doctors (note: usage is forty percent via mobile devices and sixty percent via desktops). But users are not limited to the USA. While almost forty-nine percent of users are U.S.-based, he noted that access is from twenty-three countries, including Malaysia, China, Indonesia, etc. He noted that the TrendMD traffic increase is correlated with a seventy-seven percent increase in Mendeley saves [42], and that TrendMD readers view more articles per visit than all other traffic sources. He also noted that TrendMD readers have the lowest bounce rate of all traffic sources.

Carelli added that TrendMD has increased the number of authors submitting to journals published by participants in the TrendMD network. JMIR Publications used TrendMD to drive author submissions and the TrendMD campaign resulted in twenty-six author submissions at a conversion rate of 0.26%. Their return on investment with TrendMD was nearly ten times and Trend MD outperformed Google Ads by more than two times and Facebook by more than five times.

In closing, Carelli added that while TrendMD identifies those readers most likely to be interested in a publisher’s content, finer targeting is available under three Enterprise Plans: (1) Country or region (Global); (2) Institutional (Global) - target hospital networks, universities, colleges, organizations; and (3) User-targeting (Global) - target specific types of researchers and/or Health Care Professionals in the network.

Carelli’s slides are not available on the NFAIS website, but an article based upon his presentation appears elsewhere in the issue of Information Services and Use.

10.AI and academic libraries

The next presentation was done jointly by Amanda Wheatley, Liaison Librarian for Management, Business, and Entrepreneurship, and Heather Hervieux, Liaison Librarian for Political Science, Religious Studies and Philosophy, both from McGill University (see: https://www.mcgill.ca/). The primary focus of their talk was to discuss the results of a survey that they initiated in order to determine what role the librarian will play in an Artificial Intelligence (AI)-dominated future, as well as to reinforce the importance of autonomous research skills.

They opened with a discussion of the role of reference librarians saying that information seekers are looking to find, identify, select, obtain, and explore resources. Libraries clearly enable those efforts - specifically the reference librarians who recommend, interpret, evaluate, and/or use information resources to help those information seekers. Wheatley and Hervieux put forth six literary concepts: (1) information has value; (2) information creation as a process; (3) authority as constructed and contextual; (4) research as inquiry; (5) searching as strategic exploration; and 6) scholarship as conversation [43]. Their discussion focused on “research as inquiry” and “searching as strategic exploration” within the context of the role of AI and the role of librarians.

With regards to “research as inquiry”, the practices of researchers are to: formulate questions based on information gaps; determine appropriate research; use various research methods; organize information in meaningful ways; deal with complex research by breaking complex questions into simple ones. Researchers consider research as an open-ended exploration; seek multiple perspectives during information gathering; value intellectual curiosity in the development of their questions; and they demonstrate intellectual humility.

Similarly, with regards to “searching as strategic exploration”, the practices of researchers are to: identify interested parties who might produce information; utilize divergent and convergent thinking; match information needs and search strategies; understand how information systems are organized; and use different types of searching language. Researchers exhibit mental flexibility and creativity; understand that first attempts at searching do not always produce the desired results; realize that information sources vary greatly; seek guidance from experts (such as librarians); and recognize the value in browsing and other serendipitous methods.

Wheatley and Hervieux then asked the following questions: Is AI prepared to allow researchers to continue their information literacy process? And is AI capable of being information-literate?

AI is used to a certain extent in libraries; e.g., agent technology is used to streamline digital searching and suggest articles; “conversational agents” or chatbots using natural language processing have been implemented; AI supports digital libraries and information retrieval techniques; and libraries’ use of radio frequency identification (RFID) tags in circulation. AI is also used to a certain extent in higher education; e.g. digital tutors and online immersive-learning environments; programs and majors dedicated to the study across disciplines; and student researchers utilizing AI hubs.

Wheatley and Hervieux noted that smart speaker ownership (e.g. Alexa, Siri, etc.) in the United States increased dramatically between 2017 and 2018, growing from 66.7 million to 118.5 million units, an increase of almost 78 percent [44]. Since humans are basically creatures of habit, they expressed concern that as younger people become more and more used to simply asking a question to these smart devices rather than doing their own research, not only will perhaps the quality of research inquiries and strategic searching diminish, but also the role of librarians may need to shift to ensure that the research quality is maintained. It is inevitable that as these devices become a common part of people’s everyday lives, their use will extend from personal space to the professional and academic environments. Asking Google for the news could soon become asking for the latest research on a given subject. The potential for Virtual Assistants to become pseudo-research assistants is a reality of which all information professionals should be aware. Their goal became to see if this type of AI has the potential to accurately provide research support at the level of an educated librarian with a Master’s degree.

By determining whether or not these devices are capable of providing high quality answers to reference questions, they hope to begin to understand how users might utilize them within the research cycle. Their research plan consists of six steps: (1) An environmental scan; (2) the identification of librarians’ perceptions of AI; (3) device testing (phase 1); (4) identification of student perceptions; (5) device testing (phase 2); and (6) an evaluation of the AI experience. Only the first step had been completed as of the conference.

The methodology used for the first step was to evaluate the university and university library websites of twenty-five research-intensive institutions in the USA and Canada. They searched for keywords such as Artificial Intelligence, Machine Learning, Deep Learning, AI hub, etc. And they looked at library websites to see if they could find mention of AI in strategic plans/mission/vision; in topic/research/subject guides; in programming; and in partnerships. Similarly, they looked at the University websites for mention of AI hubs, courses, and mention of major AI researchers.

Of the twenty-five universities reviewed not one mentioned Artificial Intelligence in their strategic plans. However, all did have some sort of AI presence (e.g., AI hubs or course offerings). Only one of the academic libraries has a subject guide on AI (Calgary University). Three libraries offer programming and activities related to AI. Sixty-eight percent of the universities have significant researchers in the field of AI. And although some libraries have digital scholarship hubs, these hubs are not involved with AI. Of the twenty-five academic libraries sampled, only two are collaborating with AI hubs.

Wheatley and Hervieux went on to discuss three case studies that were undertaken by three of the top universities actively looking at AI: the Massachusetts Institute of Technology, Stanford University, and Waterloo University.

MIT started a project in 2006 entitle SIMILE (see: http://simile.mit.edu/) with the goal to create “next-generation search technology using Semantic Web standards”. The site is no longer updated or supported.

Stanford has implemented a library initiative to “‘identify and enact applications of Artificial Intelligence [...] that will help us (the university) make our rich collections of maps, photographs, manuscripts, data sets and other assets more easily discoverable, accessible, and analyzable for scholars”. The site also sponsors talks and discussions about AI (see: https://library.stanford.edu/projects/artificial-intelligence). They also have an AI Studio (see: https://library.stanford.edu/projects/artificial-intelligence/sul-ai-studio) that applies AI in projects to make their collections more discoverable and analyzable. The studio is staffed by volunteer librarians and the library provides access to Yewno, discovery tool that provides a graphical display of the interrelationships between concepts (Note: Yewno was discussed by its founders at both the 2017 [45] and 2018 [46] NFAIS Annual conferences. It is a very interesting concept that uses AI to augment the information discovery process).

Waterloo University has established the Waterloo Artificial Intelligence Institute: Centre for Pattern Analysis and Machine Learning. While not affiliated with any library projects, current initiatives have great potential for impact on the research process (see: https://uwaterloo.ca/centre-pattern-analysis-machine-intelligence/research-areas/research-projects).

Wheatley and Hervieux said that their conclusions at this stage of their research are the following: (1) AI is already operating behind the scenes in libraries and the larger research process; (2) Universities and libraries are not doing enough to work together in this field; and (3) Research habits are indicative of personal habits and that the personal use of virtual assistants is growing exponentially.

They also raised the following questions:

As AI builds within the research process do these two concepts become one and the same?

How do we adjust our standards and frameworks for teaching to account for this change?

Are citation counts and impact factors still the preferred metric or does AI impact the way we perceive search results and scholarship?

What does this mean for reference librarians and job security going forward?

They hope to gain insights to these questions when they ultimately conclude their study. Their next step is to focus on the second phase of their plan - to identify the perceptions of librarians with regards to AI.

Wheatley and Hervieux’s slides are available on the NFAIS website and an article based upon their presentation appears elsewhere in this issue of Information Services and Use.

11.Increasing the awareness of AI in higher education

The next speaker was James J. Vileta, Business Librarian, University of Minnesota Duluth, who spoke passionately and articulately about the need to include the topic of Artificial Intelligence in diverse curricula. He was in total agreement with the prior speakers, Wheatley and Hervieux, who expressed concern that of the twenty-five universities that they surveyed, only one of the libraries (Calgary University) have a subject guide on AI. He then went on to mention three books:

Rise of the Robots: Technology and the Threat of a Jobless Future by Martin Ford

The End of Jobs: Money, Meaning and Freedom Without the 9-5, by Taylor Pearson

The Second Machine Age: Work, Progress and Prosperity in a Time of Brilliant Technologies by Erik Brynjolfsson and Andrew McAfee

He said that reading them raised a lot of questions for him regarding the impact of AI, such as: Is it just math, computer science, and engineering or does it touch all disciplines? How is it applied to professions, commerce, and industry? How is it applied to careers and personal life planning? How will it impact our social, political and economic world? Should young people, in particular, be more aware of its potential impact on their lives? He noted that the business school at his university does not address these issues at all. Their focus is on globalization since so much of U.S. business is conducted out of the United States. They give no attention at all to Artificial Intelligence. He believes that universities and libraries need to do more - that they should “AI & Robots” all feasible parts of the curriculum. Institutions of higher education have a duty - they need to prepare students for real life - a life that is even now being impacted by AI.

Vileta noted that Northeastern University’s President, Joseph E. Aoun, has written a book on how colleges need to reform their entire approach to education, Robot-Proof: Higher Education in the Age of Artificial Intelligence published by MIT Press. The book was highlighted in The Washington Post within the context of today’s job market and the article stated that the nearly nine in ten jobs that have disappeared since the year 2000 were lost to automation [47]. He said that Aoun lays out the framework for a new discipline, “humanics”, that builds on our innate strengths and prepares students to compete in a labor market in which smart machines work alongside human professionals (does this sound like Weber’s Third Wave of AI?).

Vileta agrees with Aoun, but said that we need to do more. We need to consider the following questions: What kind of world do we want to live in? How do we live without incomes from work? How do we achieve a balanced society? How do we find meaning without work? What do we do with our abundant leisure? What new laws and regulations do we want? He said that he himself wants to do more and, as a librarian, he wants to use his expertise to create an awareness of AI on his campus. So he first studied the landscape of the AI topic and then went out and bought some books!

He bought books on all sorts of subjects that touched on AI: universal basic income [48], AI in video games, autonomous cars, AI and robots in the military, AI and robots in healthcare, AI ethics, AI in management, AI in banking, the Internet of things, etc. What he found was that there are a number of recent books and articles related to Artificial Intelligence that touch on all aspects of work and play and that most people are unaware of the variety and quality of these books. Also, he noted that large libraries get most of these books automatically, while smaller libraries have to be mindful of budgets and be more selective in their purchases. He warned, however, that when you are developing new programs in the curriculum, you must be proactive and direct. You cannot be passive.

Taking his own advice he created a web page using Dreamweaver. The page currently has eighteen sections, over two hundred and eighty books and five hundred and fifty clickable links. The page was launched to positive reviews around the time of the conference and will ultimately be transformed into its own website. He has been advertising the page via selective dissemination of information, through Social Media Marketing, strategic viral marketing, and traditional marketing. In addition he has formed alliances with key faculty members at the university, set up special lectures and presentations, and testing in the university’s College Writing Courses.

Vileta added that there are many good AI-related books on diverse topics that can be brought into library collections, along with countless scholarly articles on all levels. There are many opportunities for libraries and librarians to bring awareness of AI into higher education.

Libraries can provide focused finding aids, and also initiate innovative projects, such as the AI Lab at the University of Rhode Island Library (subject of the following presentation). He said that librarians should help everyone prepare for the future and that this applies to all types of libraries - academic and public. Librarians need to take the initiative and do more - kudos to Wheatley and Hervieux for their call to action as well!! He added that organizations and vendors who work with libraries should help them in this work by providing more content, better indexing, and more AI-focused online products.

In closing, Vileta suggested that in addition to Auon’s book mentioned earlier, those in the audience read The Future of the Professions: How Technology Will Transform the Work of Human Experts [49].

Vileta’s slides are not available on the NFAIS website.

12.University launches a new AI laboratory

The final speaker of the morning was Karim Boughida, Dean of University Libraries at the University of Rhode Island, who spoke about the University’s Artificial Intelligence Laboratory. He opened by saying that a library’s brand is a book, but libraries are so much more than books! (Note: library identification with books was the subject of a presentation by Scott Livingston of OCLC at the 2019 NFAIS Annual Conference [50]).

The lab opened in the fall of 2018 and, unlike AI labs on other campuses that are usually housed in computer or engineering departments, this is housed in the library and it is believed to be the first one placed in such an environment. The location is strategic as the organizers hope that students majoring in diverse fields will visit the lab and use it to brainstorm about important social and ethical issues today and create cutting-edge projects. Also, when surveyed recently about topics they wished to see in their curriculum, AI was among the top requests from the university’s students. This was what motivated the university to create the lab and to put it where the majority of students would have access - the library.

The lab has two complementary goals: “On the one hand, it will enable students to explore projects on robotics, natural language processing, smart cities, smart homes, the Internet of Things, and big data, with tutorials at beginner through advanced levels. It will also serve as a hub for ideas - a place for faculty, students, and the community to explore the social, ethical, economic and even artistic implications of these emerging technologies [51]”.

The six-hundred square foot lab has three zones. The first zone has AI workstations where a student or a team of students can learn about AI and relevant subject areas. The second zone has a hands-on project bench where, after students receive basic training on AI and data science, they can move on to advanced tools to design hands-on projects in which they can apply AI algorithms to various applications such as deep learning robots, the Internet of things for smart cities, and big data analytics. Located in the center of the lab, the third zone is an AI hub where groups can get together for collaborative thinking.

The lab was funded by a $143,065 grant from the Champlin Foundation, one of Rhode Island’s oldest philanthropic organizations, and unlike AI labs focused on research, this lab is focused on providing students and faculty with the chance to learn new computing skills. Also, through a series of talks and workshops, it encourages them to deepen their understanding of AI and how it might impact their lives.

In closing, like Vileta, Wheatley, and Hervieux before him, Boghida stressed the importance of embedding AI in curricula and he referred to the fact that Finland is being aggressive in training its citizens in AI. In May of 2018, Finland launched Elements of AI (see: https://course.elementsofai.com), a first-of-its-kind online course that forms part of an ambitious plan to turn Finland into an AI powerhouse [52]. It’s free and anyone can sign up (I just did!). It is definitely work a look.

Boghida did not use slides for his presentation.

13.A call to publishers to act now

The lunch speaker was Michael Puscar, Founder and CEO of Oiga Technologies. He has partnered with Mike Hooey, CEO and Founder of Source Meridian, to provide their customers with early access to state-of-the-art technology (AI, Blockchain, Big Data analytics etc.), helping them to create and maintain their competitive advantage in the market. Puscar focuses on the publishing industry and Hooey focuses on the healthcare industry. Puscar spoke last year at the NFAIS conference on the use of Blockchain technology in scientific publishing [53].

Puscar opened by saying that Artificial Intelligence is no longer the future - it is the now! He created a sense of urgency by reminding the audience that the information industry is very dynamic. For publishers, content is the crown jewel, and they have spent the last decade refining how it is stored, processed, and transformed. He added that use of AI can provide competitive advantages and that publishers can either be a part of shaping the future or simply adjust and adapt after their competitors have already done so.

He noted that his organization already has had success in healthcare where much of the content is now in digital format. By applying AI to that content, their customers are working to find unexpected drug interactions; detect and eliminate insurance fraud; identify the best doctors, hospitals, and treatment plans; predict the outcome of clinical trials and reduce costs; conduct derivative clinical trials; more efficiently address R&D budgets in healthcare; and to create objective, quantitative predictive performance measures.

He added that most of us are unaware of what we don’t know and AI can bring that knowledge to the surface. Technology is ahead of industry applications, and the software already exists that publishers can implement now. It is not as expensive as one would think, but an understanding of how AI works is necessary to set realistic expectations (perhaps the online course from Finland will help?).

He pointed out that when a publisher’s content has defined data structures, they need to know the questions for which AI will be used to find the answers (echoing Vasicek and Ciufetti). He added that content does not necessarily need to be unified or normalized, and that AI algorithms often find trends where least expected, noting that human reinforcement is often, but not always, necessary.

Puscar used the example of the process of learning. Often we do not know exactly what we will learn, but we do so through trial and error, or reinforcement. When we achieve a goal, it is positively reinforced. With neural networks, machines can learn in the same way, building unique neural pathways. Machines learn to achieve a stated goal through trial and error. Each success and failure builds neural pathways that improve subsequent tries. Actions in an environment maximize a cumulative reward and optimality criterion governs success or failure.

In closing he said that there is no need to wait to get started. Open Source software is available, but the bad news is that computing time and expertise will require a substantial investment. He added that cloud-based services such as those available from Google, Microsoft, and Amazon all have Machine Learning platform services. For example take a look at Google’s offerings - AutoML Natural Language and Natural language API - at https://cloud.google.com/natural-language/. He added that whether you choose Google, Amazon, IBM or Microsoft Azure - they all have what is needed: horizontal scalabilities, API-based access, Machine Learning tools, out-of-the-box algorithms, examples, and supportive communities. (Note: the following speaker provides a perfect case study!)

Puscar’s slides are not available on the NFAIS website, but a brief article based upon his presentation appears elsewhere in this issue of Information Services and Use.

14.Case study: Using machine learning to build a recommendation engine

The next speaker was Peter Ciufetti, Director of Product Development at ProQuest, who discussed how ProQuest used Amazon’s SageMaker, a fully-managed Machine Learning system [54], to build a video recommendation system. He opened by saying that he is not giving a commercial for Amazon Web Services, although it may seem so at points, and that he will try not to be overly technical, though it’s a bit hard to avoid this given the topic, especially since his goal was to make Machine Learning concepts accessible and inspire those in the audience to try it on their own. He noted that he did receive a degree in computer science from Harvard a long time ago, but that he is not a data scientist, which is an important take-away for this presentation.