Reinforcement learning for personalization: A systematic literature review

Abstract

The major application areas of reinforcement learning (RL) have traditionally been game playing and continuous control. In recent years, however, RL has been increasingly applied in systems that interact with humans. RL can personalize digital systems to make them more relevant to individual users. Challenges in personalization settings may be different from challenges found in traditional application areas of RL. An overview of work that uses RL for personalization, however, is lacking. In this work, we introduce a framework of personalization settings and use it in a systematic literature review. Besides setting, we review solutions and evaluation strategies. Results show that RL has been increasingly applied to personalization problems and realistic evaluations have become more prevalent. RL has become sufficiently robust to apply in contexts that involve humans and the field as a whole is growing. However, it seems not to be maturing: the ratios of studies that include a comparison or a realistic evaluation are not showing upward trends and the vast majority of algorithms are used only once. This review can be used to find related work across domains, provides insights into the state of the field and identifies opportunities for future work.

1.Introduction

For several decades, both academia and commerce have sought to develop tailored products and services at low cost in various application domains. These reach far and wide, including medicine [5,71,77,84,178,179], human-computer interaction [61,100,118], product, news, music and video recommendations [163,164,217] and even manufacturing [39,154]. When products and services are adapted to individual tastes, they become more appealing, desirable and informative, i.e. more relevant to the intended user than one-size-fits-all alternatives. Such adaptation is referred to as personalization [55].

Digital systems enable personalization on a grand scale. The key enabler is data. While the software on these systems is identical for all users, the behavior of these systems can be tailored based on experiences with individual users. For example, Netflix’s digital video delivery mechanism includes tracking of views and ratings. These ease the gratification of diverse entertainment needs as they enable Netflix to offer instantaneous personalized content recommendations. The ability to adapt system behavior to individual tastes is becoming increasingly valuable as digital systems permeate our society.

Recently, reinforcement learning (RL) has been attracting substantial attention as an elegant paradigm for personalization based on data. For any particular environment or user state, this technique strives to determine the sequence of actions to maximize a reward. These actions are not necessarily selected to yield the highest reward now, but are typically selected to achieve a high reward in the long term. Returning to the Netflix example, the company may not be interested in having a user watch a single recommended video instantly, but rather aim for users to prolong their subscription after having enjoyed many recommended videos. Besides the focus on long-term goals in RL, rewards can be formulated in terms of user feedback so that no explicit definition of desired behavior is required [12,79].

RL has seen successful applications to personalization in a wide variety of domains. Some of the earliest work, such as [122,174,175] and [231] focused on web services. More recently, [107] showed that adding personalization to an existing online news recommendation engine increased click-through rates by 12.5%. Applications are not limited to web services, however. As an example from the health domain, [234] achieve optimal per-patient treatment plans to address advanced metastatic stage IIIB/IV non-small cell lung cancer in simulation. They state that ‘there is significant potential of the proposed methodology for developing personalized treatment strategies in other cancers, in cystic fibrosis, and in other life-threatening diseases’. An early example of tailoring intelligent tutor behavior using RL can be found in [124]. A more recent example in this domain, [74], compared the effect of personalized and non-personalized affective feedback in language learning with a social robot for children and found that personalization significantly impacts psychological valence.

Although the aforementioned applications span various domains, they are similar in solution: they all use traits of users to achieve personalization, and all rely on implicit feedback from users. Furthermore, the use of RL in contexts that involve humans poses challenges unique to this setting. In traditional RL subfields such as game-playing and robotics, for example, simulators can be used for rapid prototyping and in-silico benchmarks are well established [14,21,50,97]. Contexts with humans, however, may be much harder to simulate, and the deployment of autonomous agents in these contexts may come with different concerns regarding, for example, safety. When using RL for a personalization problem, similar issues may arise across different application domains. An overview of RL for personalization across domains, however, is lacking. We believe this is not to be attributed to fundamental differences in setting, solution or methodology, but stems from application domains working in isolation for cultural and historical reasons.

This paper provides an overview and categorization of RL applications for personalization across a variety of application domains. It thus aids researchers and practitioners in identifying related work relevant to a specific personalization setting, promotes the understanding of how RL is used for personalization and identifies challenges across domains. We first provide a brief introduction of the RL framework and formally introduce how it can be used for personalization. We then present a framework for classifying personalization settings. The purpose of this framework is to let researchers with a specific setting identify relevant related work across domains. We then use this framework in a systematic literature review (SLR). We investigate in which settings RL is used, which solutions are common and how they are evaluated: Section 5 details the SLR protocol, and results and analysis are described in Section 6. All data collected has been made available digitally [46]. Finally, we conclude with current trends and challenges in Section 7.

2.Reinforcement learning for personalization

RL considers problems in the framework of Markov decision processes or MDPs. In this framework, an agent collects rewards over time by performing actions in an environment as depicted in Fig. 1. The goal of the agent is to maximize the total amount of collected rewards over time. In this section, we formally introduce the core concepts of MDPs and RL and include some strategies for personalization without aiming to provide an in-depth introduction to RL. Following [188], we consider the related multi-armed and contextual bandit problems as special cases of the full RL problem where actions do not affect the environment and where observations of the environment are absent or present respectively. We refer the reader to [188,220] and [190] for a full introduction.

An MDP is defined as a tuple

With these definitions in place, we now turn to methods of finding

Having introduced RL briefly, we continue by exploring some strategies in applying this framework to the problem of personalizing systems. We return to our earlier example of a video recommendation task and consider a set of n users

An alternative approach is to define a single agent and MDP with user-specific information in the state space S and learn a single

A third category of approaches can be considered as a middle ground between learning a single

3.Algorithms

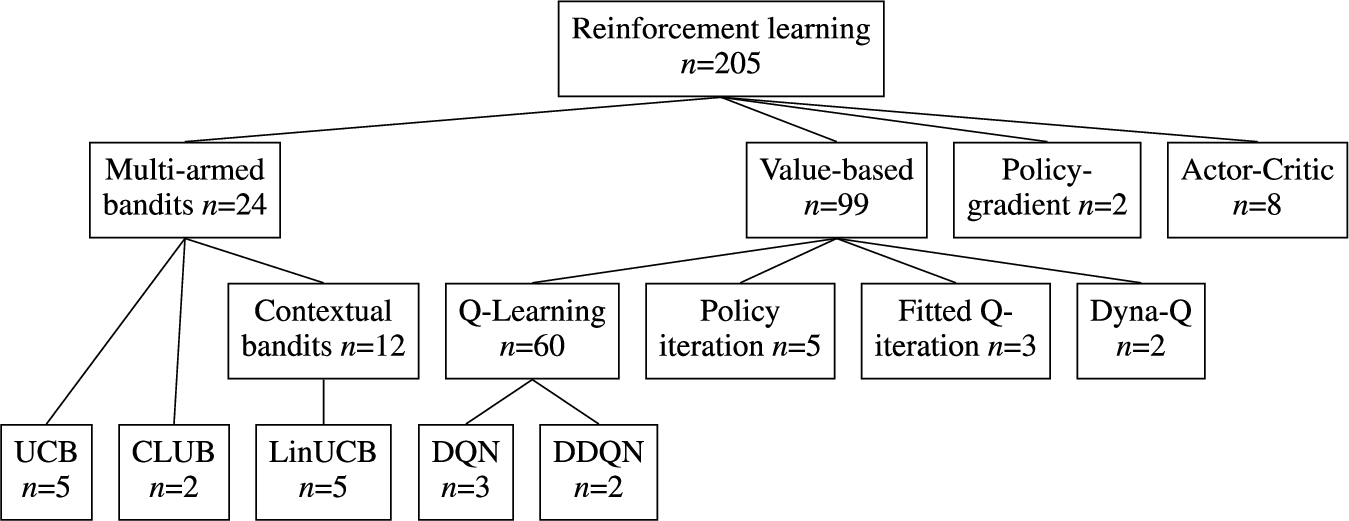

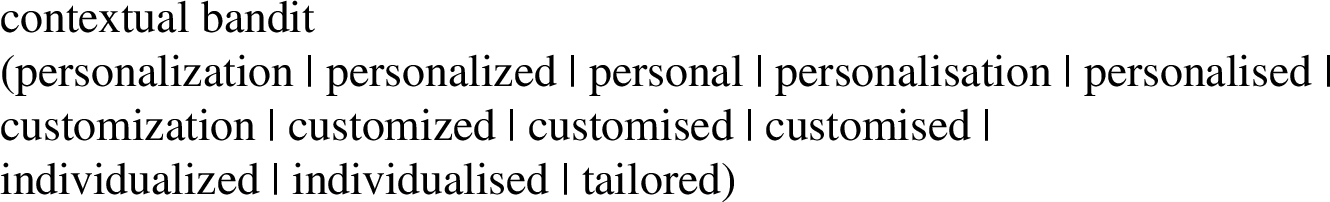

In this section we provide an overview of specific RL techniques and algorithms used for personalization. This overview is the result of our systematic literature review as can be seen in Table 4. Figure 2 contains a diagram of the discussed techniques. We start with a subset of the full RL problem known as k-armed bandits. We bridge the gap towards the full RL setting with contextual bandit approaches. Then, value-based and policy-gradient RL methods are discussed.

Fig. 2.

Overview of types of RL algorithms discussed in this section and the number of uses in publications included in this survey. See Table 4 for a list of all (families of) algorithms used by more than one publication.

3.1.Multi-armed bandits

The multi-armed bandit problem is a simplified setting of RL. As a result, it is often used to introduce basic learning methods that can be extended to full RL algorithms [188]. In the non-associative setting, the objective is to learn how to act optimally in a single situation. Formally, this setting is equivalent to an MDP with a single state. In the associative or contextual version of this setting, actions are taken in more than one situation. This setting is closer to the full RL problem yet it lacks an important trait of full RL, namely that the selected action affects the situation. Neither associative nor non-associative multi-armed bandit approaches take into account the temporal separation of actions and related rewards.

In general, multi-armed bandit solutions are not suitable when success is achieved by sequences of actions. Non-associative k-armed bandit solutions are only applicable when context is not important. This makes them generally unsuitable for personalization, as personalization typically uses the differing contexts of individual users to offer them different functionality. In some niche areas, however, k-armed bandits are applicable and can be very attractive due to formal guarantees on their performance. If context is of importance, contextual bandit approaches provide a good starting point for personalizing an application. These approaches hold a middle ground between non-associative multi-armed bandits and full RL solutions in terms of modeling power and ease of implementation. Their theoretical guarantees on optimality are less strong than those of their k-armed counterparts but they are easier to implement, evaluate and maintain than full RL solutions.

3.1.1.k-Armed bandits

In a k-armed bandit setting, one is constantly faced with the choice between k different actions [188]. Depending on the selected action, a scalar reward is obtained. This reward is drawn from a stationary probability distribution. It is assumed that an independent probability distribution exists for every action. The goal is to maximize the expected total reward over a certain period of time. Still considering the k-armed bandit setting, we assign a value

In a trivial problem setting, one knows the exact value of each action and selecting the action with the highest value would constitute the optimal policy. In more realistic problems, it is fair to assume that one cannot know the values of the actions exactly. In this case, one can estimate the value of an action. We denote this estimated value with

At each time step t, estimates of the values of actions are obtained. Always selecting the action with the highest estimated value is called greedy action selection. In this case we are exploiting the knowledge we have built about the values of the actions. When we select actions with a lower estimated value, we say we are exploring. In this case we are improving the estimates of values for these actions. In the balancing act of exploration and exploitation, we opt for exploitation to maximize the expected total reward for the next step, while opting for exploration could result in a higher expected total reward in the long run.
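The trade-off between exploiting and exploring can be illustrated with a small simulation. The following is a minimal sketch, not a reference implementation: the function name `run_bandit`, the Gaussian reward noise and the parameter `epsilon` (the probability of trying a random action instead of the greedy one) are assumptions made for illustration. Setting `epsilon=0.0` yields purely greedy action selection.

```python
import random


def run_bandit(true_means, epsilon, steps, seed=0):
    """Play a k-armed bandit; returns (value estimates, selection counts).

    true_means: hypothetical expected reward per action (unknown to the learner).
    epsilon:    probability of exploring, i.e. picking a uniformly random action.
    """
    rng = random.Random(seed)
    k = len(true_means)
    estimates = [0.0] * k  # estimated value of each action
    counts = [0] * k       # number of times each action was selected

    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(k)  # explore
        else:
            best = max(estimates)      # exploit; ties are broken at random
            action = rng.choice([a for a in range(k) if estimates[a] == best])

        # Stationary reward distribution per action (Gaussian noise is an assumption).
        reward = rng.gauss(true_means[action], 1.0)

        counts[action] += 1
        # Running average of observed rewards for the selected action.
        estimates[action] += (reward - estimates[action]) / counts[action]

    return estimates, counts
```

Calling `run_bandit([0.0, 2.0], epsilon=0.1, steps=2000)` returns the learned estimates together with how often each action was chosen, which makes it easy to compare different exploration rates on the same task.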

3.1.2.Action-value methods for multi-armed bandits

Action-value methods [188] denote a collection of methods used for estimating the values of actions. The most natural way of estimating the action-values is to average the rewards that were observed. This method is called the sample-average method. The value estimate

Greedy action selection only exploits knowledge built up using the action-value method and only maximizes the immediate reward. This can lead to incorrect action-value approximations because actions with e.g. low estimated but high actual values are not sampled. An improvement over this greedy action selection is to randomly explore with a small probability ϵ. This method is named the ϵ-greedy action selection. A benefit of this method is that, while it is relatively simple, in the limit

3.1.3.Incremental implementation

In Section 3.1.2 we discussed a method to estimate action-values using sample-averaging. To ensure the usability of this method in real-world applications, we need to be able to compute these values in an efficient way. Assume a setting with one action. At each iteration j a reward

Using this approach would mean storing the values of all the rewards to recalculate

3.1.4.UCB: Upper-confidence bound

The greedy and ϵ-greedy action selection methods were discussed in Section 3.1.2 and it was introduced that exploration is required to establish good action-value estimates. Although ϵ-greedy explores all actions eventually, it does so randomly. A better way of exploration would take into account the action-value’s proximity to the optimal value and the uncertainty in the value estimations. Intuitively, we want a selected action a to either provide a good immediate reward or else some very useful information in updating

k-Armed bandit approaches address the trade-off between exploitation and exploration directly. It has been shown that the difference between the obtained rewards and optimal rewards, or the regret, is at best logarithmic in the number of iterations n in the absence of prior knowledge of the action value distributions and in the absence of context [102]. UCB algorithms with a regret logarithmic in and uniformly distributed over n exist [7]. This makes them a very interesting choice when strong theoretical guarantees on performance are required.
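To make the idea of uncertainty-driven exploration concrete, the sketch below selects the action with the highest upper-confidence bound. It assumes one common textbook form of the bound, the value estimate plus `c * sqrt(ln t / N(a))`, where `N(a)` counts earlier selections of action `a`. The function names, the constant `c` and the Bernoulli rewards are illustrative assumptions rather than the exact formulation of any cited work.

```python
import math
import random


def ucb_select(estimates, counts, t, c=2.0):
    """Return the index of the action with the highest upper-confidence bound.

    t is the current time step (t >= 1); c scales the exploration bonus.
    """
    for action, n in enumerate(counts):
        if n == 0:
            return action  # untried actions are treated as maximally uncertain
    scores = [
        q + c * math.sqrt(math.log(t) / n)
        for q, n in zip(estimates, counts)
    ]
    return scores.index(max(scores))


def run_ucb(success_probs, steps, c=2.0, seed=0):
    """Simulate UCB on a bandit with Bernoulli rewards (an assumed toy task)."""
    rng = random.Random(seed)
    k = len(success_probs)
    estimates, counts = [0.0] * k, [0] * k
    for t in range(1, steps + 1):
        action = ucb_select(estimates, counts, t, c)
        reward = 1.0 if rng.random() < success_probs[action] else 0.0
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts
```

With equal estimates, the bonus term favors the action that has been tried less often, so exploration is directed rather than uniformly random.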

Whether these algorithms are suitable, however, depends on the setting at hand. If there is a large number of actions to choose from or when the task is not stationary, k-armed bandits are typically too simplistic. In a news recommendation task, for example, exploration may take longer than an item stays relevant. Additionally, k-armed bandits are not suitable when action values are conditioned on the situation at hand, that is: when a single action results in a different reward based on e.g. time-of-day or user-specific information such as in Section 2. In these scenarios, the problem formalization of contextual bandits and the use of function approximation are of interest.

3.1.5.Contextual bandits

In the previous sections, action-values were not associated with different situations. In this section we extend the non-associative bandit setting to the associative setting of contextual bandits. Assume a setting with n k-armed bandit problems. At each time step t one encounters a situation with a randomly selected k-armed bandit problem. We can use some of the approaches that were discussed to estimate the action values. However, this is only possible if the true action-values change slowly between the n different problems [188]. Add to this setting the fact that at each time t a distinctive piece of information is now provided about the underlying k-armed bandit, which is not the actual action value. Using this information we can learn a policy that associates each k-armed bandit with the best action to take. This approach is called contextual bandits. It uses trial-and-error to search for the optimal actions and associates these actions with the situations in which they perform optimally. This type of algorithm is positioned between k-armed bandits and full RL. The similarity with RL lies in the fact that a policy is learned, while the association with k-armed bandits stems from the fact that actions only affect immediate rewards. When actions are allowed to affect the next situation as well, we are dealing with RL.
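A minimal way to picture this setting is to keep a separate value estimate for every pair of context and action. The sketch below does so with a lookup table and explores with a small probability. The class name `TabularContextualBandit`, the context labels and the exploration parameter `epsilon` are hypothetical choices made for illustration.

```python
import random
from collections import defaultdict


class TabularContextualBandit:
    """Keeps one running-average value estimate per (context, action) pair."""

    def __init__(self, n_actions, epsilon=0.1, seed=0):
        self.n_actions = n_actions
        self.epsilon = epsilon                # probability of a random action
        self.rng = random.Random(seed)
        self.estimates = defaultdict(float)   # (context, action) -> estimate
        self.counts = defaultdict(int)        # (context, action) -> visits

    def select(self, context):
        """Choose an action for the observed context."""
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(self.n_actions)
        values = [self.estimates[(context, a)] for a in range(self.n_actions)]
        return values.index(max(values))

    def update(self, context, action, reward):
        """Fold an observed immediate reward into the running average."""
        key = (context, action)
        self.counts[key] += 1
        self.estimates[key] += (reward - self.estimates[key]) / self.counts[key]
```

Because rewards are credited only to the action taken in the current context, the learner captures which action suits which situation but not any effect of an action on later situations.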

3.1.6.Function approximation: LinUCB and CLUB

Despite the good theoretical characteristics of the UCB algorithm, it is not often used in the contextual setting in practice. The reason is that in practice, state and action spaces may be very large and although UCB is optimal in the uninformed case, we may do better if we use obtained information across actions and situations. Instead of maintaining isolated sample-average estimates per action or per state-action pair such as in Sections 3.1.2 and 3.1.5, we can estimate a parametric payoff function approximated from data. The parametric function takes some feature description of actions for k-armed bandit settings and state-action pairs for the contextual bandit setting and outputs some estimated

LinUCB (Linear Upper-Confidence Bound) uses linear function approximation to calculate the confidence interval efficiently in closed form [107]. Define the expected payoff for action a with the d-dimensional featurized state

Similar to LinUCB, CLUB (Clustering of bandits) utilizes the linear bandit algorithm for payoff estimation [69]. In contrast to LinUCB, CLUB uses adaptive clustering in order to speed up the learning process. The main idea is to use confidence balls of user models to estimate user similarity and share feedback across similar users. CLUB can thus be understood as a cluster-based alternative (see Section 2) to the LinUCB algorithm.
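The closed-form confidence bound that linear bandit methods rely on can be sketched without external libraries. The code below assumes the commonly presented variant with one independent ridge-regression model per action: each action keeps the inverse of a matrix `A` and a vector `b`, and its score is the predicted payoff plus `alpha` times the width `sqrt(x^T A^{-1} x)`. The class and function names, the parameter `alpha` and the rank-one inverse update are illustrative assumptions. The clustering step of CLUB is not shown.

```python
import math


class LinUCBArm:
    """Per-action linear payoff model with a closed-form confidence width."""

    def __init__(self, d):
        # A starts as the identity matrix, so its inverse is the identity too.
        self.A_inv = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]
        self.b = [0.0] * d

    def _a_inv_times(self, v):
        return [sum(row[j] * v[j] for j in range(len(v))) for row in self.A_inv]

    def ucb(self, x, alpha):
        """Predicted payoff for features x plus alpha times the confidence width."""
        theta = self._a_inv_times(self.b)  # ridge-regression coefficients
        a_inv_x = self._a_inv_times(x)
        mean = sum(t * xi for t, xi in zip(theta, x))
        width = math.sqrt(sum(xi * v for xi, v in zip(x, a_inv_x)))
        return mean + alpha * width

    def update(self, x, reward):
        """Add the observation (x, reward): A += x x^T and b += reward * x."""
        a_inv_x = self._a_inv_times(x)
        denom = 1.0 + sum(xi * v for xi, v in zip(x, a_inv_x))
        d = len(x)
        # Sherman-Morrison rank-one update keeps A_inv equal to inverse(A).
        for i in range(d):
            for j in range(d):
                self.A_inv[i][j] -= a_inv_x[i] * a_inv_x[j] / denom
        for i in range(d):
            self.b[i] += reward * x[i]


def select_arm(arms, x, alpha=1.0):
    """Return the index of the arm with the highest upper-confidence bound."""
    scores = [arm.ucb(x, alpha) for arm in arms]
    return scores.index(max(scores))
```

Because the model is linear in the features, an observation for one context also sharpens the estimate for similar contexts, which is what lets this family of methods generalize where per-context tables cannot.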

3.2.Value-based RL

In value based RL, we learn an estimate V of the optimal value function

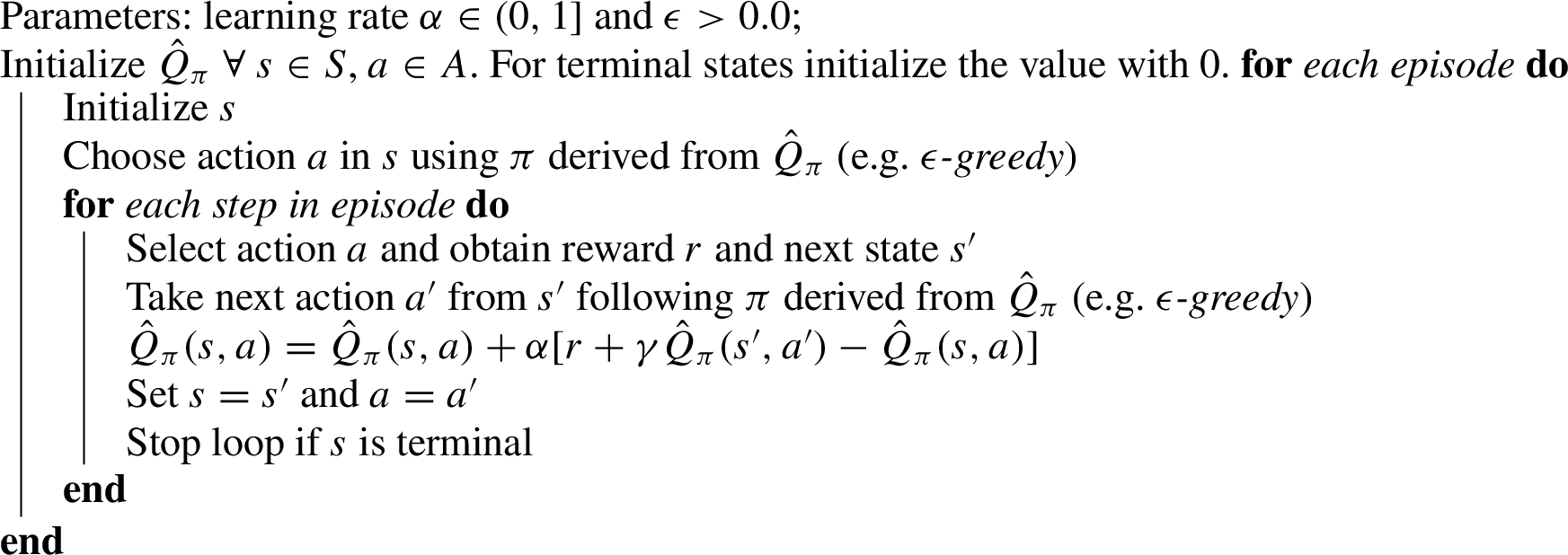

3.2.1.Sarsa: On-policy temporal-difference RL

Sarsa is an on-policy temporal-difference method that learns an action-value function [181,188]. Given the current behaviour policy π, we estimate

This update rule is applied after every transition from

Algorithm 1:

Sarsa – An on-policy temporal-difference RL algorithm
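As a hedged illustration, tabular Sarsa can be sketched in Python as follows. The sketch assumes the standard update in which the estimate for the current state-action pair moves towards the observed reward plus the discounted estimate of the pair that the behaviour policy actually selects next. The chain environment, the helper names and all hyperparameter values are hypothetical.

```python
import random

N_STATES = 5      # toy chain with states 0..4; state 4 is terminal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Deterministic chain: reward 1 on reaching the last state, else 0."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def epsilon_greedy(Q, state, epsilon, rng):
    """Behaviour policy derived from Q, with random tie-breaking."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(Q[state])
    return rng.choice([a for a in ACTIONS if Q[state][a] == best])


def sarsa(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s = 0
        a = epsilon_greedy(Q, s, epsilon, rng)
        done = False
        while not done:
            s2, r, done = step(s, a)
            a2 = epsilon_greedy(Q, s2, epsilon, rng)
            # On-policy target: uses the action a2 that will actually be taken.
            target = r if done else r + gamma * Q[s2][a2]
            Q[s][a] += alpha * (target - Q[s][a])
            s, a = s2, a2
    return Q
```

Calling `sarsa()` returns a table of action-value estimates for the toy chain; the estimates reflect the exploring behaviour policy itself, since the target depends on the action that policy selects.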

3.2.2.Q-learning: Off-policy temporal-difference RL

Q-learning was one of the breakthroughs in the field of RL [188,219]. Q-learning is classified as an off-policy temporal-difference algorithm for control. Similar to Sarsa, Q-learning approximates the optimal action-value function

Algorithm 2:

Q-Learning – An off-policy temporal-difference RL algorithm
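A minimal Python sketch of tabular Q-learning is given below for illustration. It assumes the standard update in which the target uses the maximum estimated value in the next state, regardless of which action the behaviour policy takes next. To emphasize the off-policy character, the behaviour policy here is uniformly random. The chain environment, function names and hyperparameters are hypothetical.

```python
import random

N_STATES = 5      # toy chain with states 0..4; state 4 is terminal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Deterministic chain: reward 1 on reaching the last state, else 0."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            a = rng.choice(ACTIONS)  # behaviour policy: uniformly random
            s2, r, done = step(s, a)
            # Off-policy target: greedy value of the next state.
            target = r if done else r + gamma * max(Q[s2])
            Q[s][a] += alpha * (target - Q[s][a])
            s = s2
    return Q


def greedy_policy(Q):
    """Greedy action per non-terminal state according to Q."""
    return [row.index(max(row)) for row in Q[:-1]]
```

Although the data is gathered by a random policy, the learned table describes the greedy policy, which in this toy chain always moves right.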

3.2.3.Value-function approximation

In Sections 3.2.1 and 3.2.2 we discussed tabular algorithms for value-based RL. In this section we discuss function approximation in RL for estimating state-value functions from a known policy π (i.e. on-policy RL). The difference with the tabular approach is that we represent

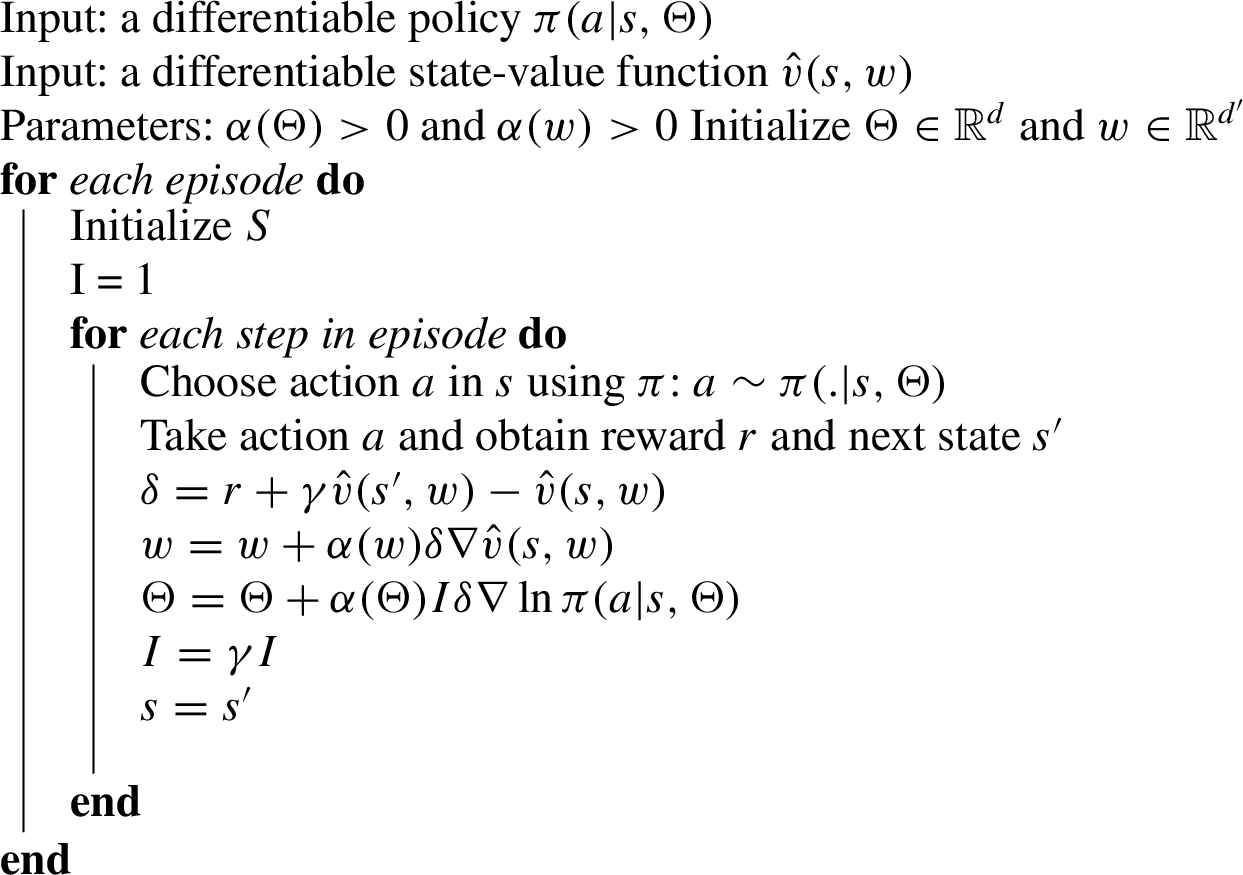

3.3.Policy-gradient RL

In value-based RL values of actions are approximated and then a policy is derived by selecting actions using a certain selection strategy. In policy-gradient RL we learn a parameterized policy directly [188,189]. Consequently, we can select actions without the need for an explicit value function. Let

Consider a function

Algorithm 3:

One-step episodic actor-critic
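For illustration, a one-step episodic actor-critic can be sketched in Python as below. The sketch assumes a tabular softmax policy as the actor and a tabular state-value estimate as the critic, both updated with the one-step temporal-difference error. The chain environment, the names `theta` and `w`, the step sizes and the cap on episode length are all assumptions made to keep the example small.

```python
import math
import random

N_STATES = 4      # toy chain with states 0..3; state 3 is terminal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Deterministic chain: reward 1 on reaching the last state, else 0."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def softmax(prefs):
    """Numerically stable softmax over action preferences."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def actor_critic(episodes=1000, alpha_theta=0.2, alpha_w=0.2,
                 gamma=0.9, max_steps=100, seed=0):
    rng = random.Random(seed)
    theta = [[0.0, 0.0] for _ in range(N_STATES)]  # actor: action preferences
    w = [0.0] * N_STATES                           # critic: state values
    for _ in range(episodes):
        s, discount = 0, 1.0
        for _t in range(max_steps):
            probs = softmax(theta[s])
            a = 0 if rng.random() < probs[0] else 1
            s2, r, done = step(s, a)
            # One-step TD error computed with the critic.
            target = r if done else r + gamma * w[s2]
            delta = target - w[s]
            w[s] += alpha_w * delta
            # Gradient of log softmax: indicator(b == a) - probs[b].
            for b in ACTIONS:
                grad = (1.0 if b == a else 0.0) - probs[b]
                theta[s][b] += alpha_theta * discount * delta * grad
            discount *= gamma
            s = s2
            if done:
                break
    return theta, w
```

The critic only serves to judge each transition: a positive error raises the preference for the action just taken, a negative error lowers it, so no explicit action-value table is needed to select actions.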

3.4.Actor-critic

In actor-critic methods [98,188] both the value and policy functions are approximated. The actor in actor-critic is the learned policy while the critic approximates the value function. Algorithm 3 shows the one-step episodic actor-critic algorithm in more detail. The update rule for the parameter vector Θ is defined as follows:

4.A classification of personalization settings

Personalization has many different definitions [30,55,165]. We adopt the definition proposed in [55] as it is based on 21 existing definitions found in literature and suits a variety of application domains: “personalization is a process that changes the functionality, interface, information access and content, or distinctiveness of a system to increase its personal relevance to an individual or a category of individuals”. This definition identifies personalization as a process and mentions an existing system subject to that process. We include aspects of both the desired process of change and existing system in our framework. Section 5.4 further details how this framework was used in an SLR.

Table 1 provides an overview of the framework. On a high level, we distinguish three categories. The first category contains aspects of suitability of system behavior. We differentiate settings in which suitability of system behavior is determined explicitly by users and settings in which it is inferred by the system after observing user behavior [172]. For example, a user can explicitly rate suitability of a video recommendation; a system can also infer suitability by observing whether the user decides to watch the video. Whether implicit or explicit feedback is preferable depends on availability and quality of feedback signals [89,143,172]. Besides suitability, we consider safety of system behavior. Unaltered RL algorithms use trial-and-error style exploration to optimize their behavior yet this may not suit a particular domain [78,92,136,153]. For example, tailoring the insulin delivery policy of an artificial pancreas to the metabolism of an individual requires trial insulin delivery actions, but these should only be sampled when their outcome is within safe certainty bounds [44]. If safety is a significant concern in the system’s application domain, specifically designed safety-aware RL techniques may be required; see [149] and [64] for overviews of such techniques.

Table 1

Framework for categorizing personalization settings

| Category | A# | Aspect | Description | Range |
|---|---|---|---|---|
| Suitability outcome | A1 | Control | The extent to which the user defines the suitability of behavior explicitly. | Explicit – implicit |
| | A2 | Safety | The extent to which safety is of importance. | Trivial – critical |
| Upfront knowledge | A3 | User models | The a priori availability of models that describe user responses to system behavior. | Unavailable – unlimited |
| | A4 | Data availability | The a priori availability of human responses to system behavior. | Unavailable – unlimited |
| New Experiences | A5 | Interaction availability | The availability of new samples of interactions with individuals. | Unavailable – unlimited |
| | A6 | Privacy sensitivity | The degree to which privacy is a concern. | Trivial – critical |
| | A7 | State observability | The degree to which all information to base personalization can be measured. | Partial – full |

Aspects in the second category deal with the availability of upfront knowledge. Firstly, knowledge of how users respond to system actions may be captured in user models. Such models open up a range of RL solutions that require less or no sampling of new interactions with users [81]. As an example, user pain models are used to predict suitability of exercises in an adaptive physical rehabilitation curriculum manager a priori [208]. Models can also be used to interact with the RL agent in simulation. For example, dialogue agent modules may be trained by interacting with a simulated chatbot user [47,95,105]. Secondly, upfront knowledge may be available in the form of data on human responses to system behavior. This data can be used to derive user models and can be used to optimize policies directly and provide high-confidence evaluations of such policies [111,202–204].

The third category details new experiences. Empirical RL approaches have proven capable of modelling extremely complex dynamics. However, this typically requires complex estimators that in turn need substantial amounts of training data. The availability of users to interact with is therefore a major consideration when designing an RL solution. A second aspect that relates to the use of new experiences is privacy sensitivity of the setting. Privacy sensitivity is of importance as it may restrict sharing, pooling or any other specific usage of data [9,76]. Finally, we identify the state observability as a relevant aspect. In some settings, the true environment state cannot be observed directly but must be estimated using available observations. This may be common as personalization exploits differences in mental [22,96,217] and physical state [67,125]. For example, recommending appropriate music during running involves matching songs to the user’s emotional state and e.g. running pace. Both mental and physical state may be hard to measure accurately [2,17,152].

Although aspects in Table 1 are presented separately, we explicitly note that they are not mutually independent. Settings where privacy is a major concern, for example, are expected to typically have fewer existing and new interactions available. Similarly, safety requirements will impact new interaction availability. Presence of upfront knowledge is mostly of interest in settings where control lies with the system as it may ease the control task. In contrast, user models may be marginally important if desired behavior is specified by the user in full. Finally, a lack of upfront knowledge and partial observability complicate adhering to safety requirements.

5.A systematic literature review

An SLR is ‘a form of secondary study that uses a well-defined methodology to identify, analyze and interpret all available evidence related to a specific research question in a way that is unbiased and (to a degree) repeatable’ [23]. PRISMA is a standard for reporting on SLRs and details eligibility criteria, article collection, screening process, data extraction and data synthesis [135]. This section contains a report on this SLR according to the PRISMA statement. This SLR was a collaborative work to which all authors contributed. We denote authors by abbreviation of their names, e.g. FDH, EG, AEH and MH.

5.1.Inclusion criteria

Studies in this SLR were included on the basis of three eligibility criteria. First, articles had to be published in a peer-reviewed journal or conference proceedings in English. Second, the study had to address a problem fitting our definition of personalization as described in Section 4. Finally, the study had to use an RL algorithm to address such a personalization problem. Here, we view contextual bandit algorithms as a subset of RL algorithms and thus included them in our analysis. Additionally, we excluded studies in which an RL algorithm was used for purposes other than personalization.

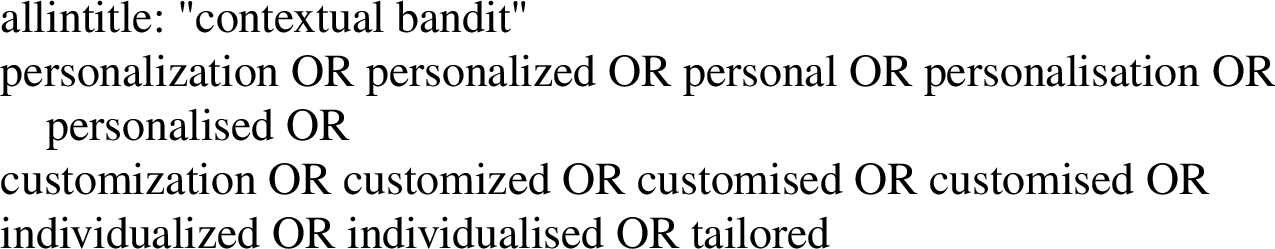

5.2.Search strategy

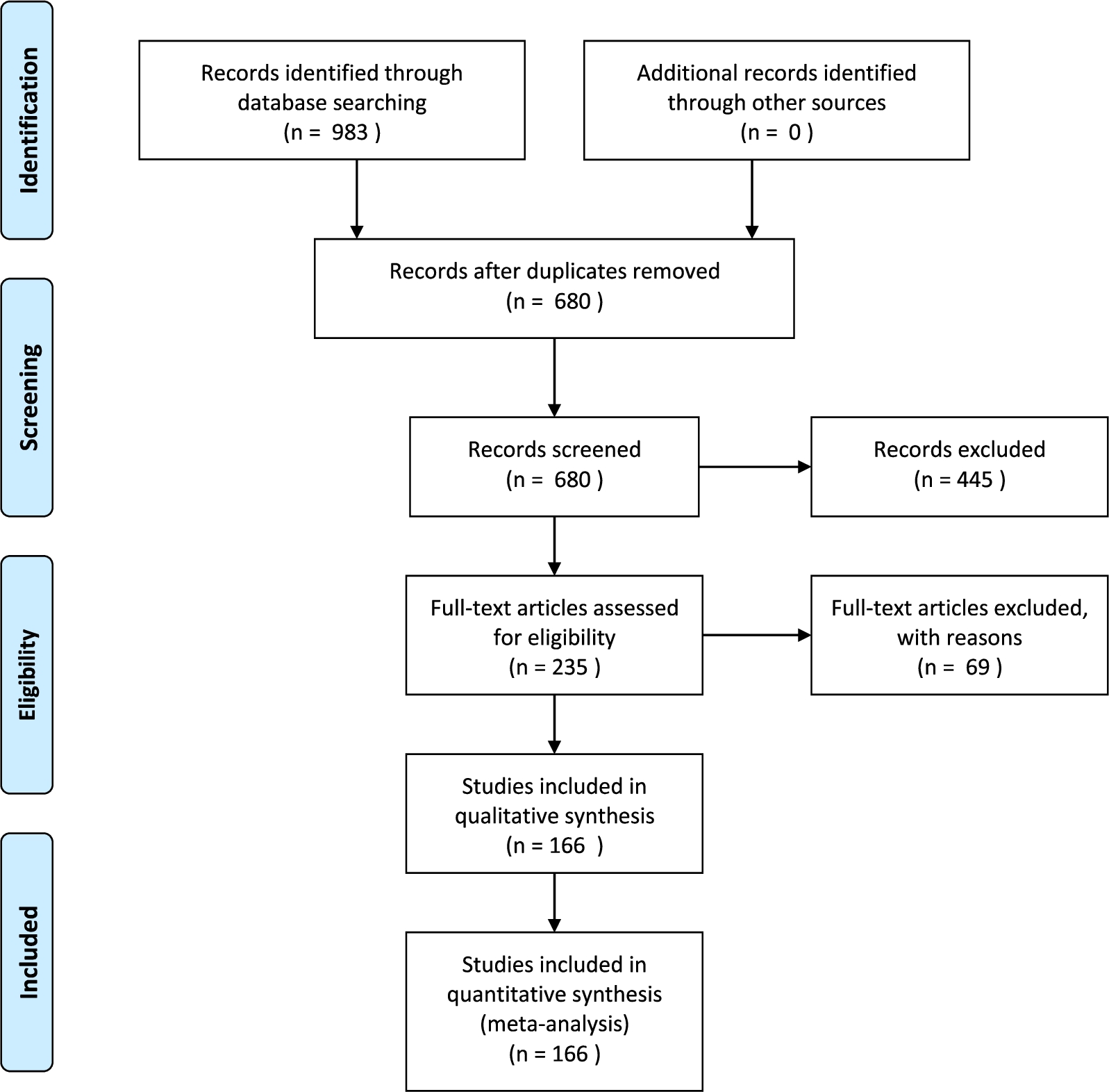

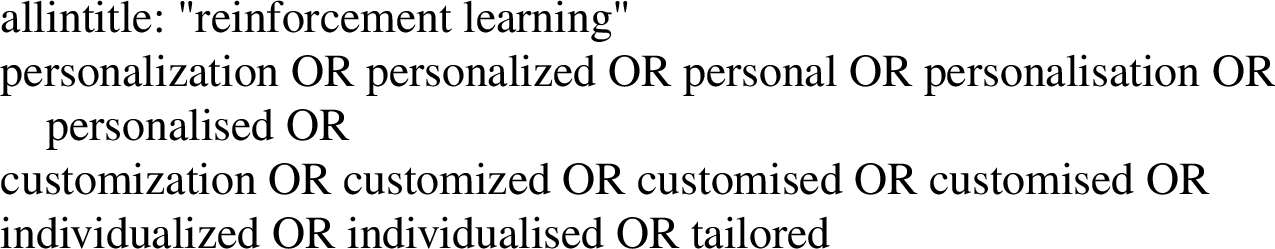

Fig. 3.

Overview of the SLR process.

Figure 3 contains an overview of the SLR process. The first step is to run a query on a set of databases. For this SLR, a query was run on Scopus, IEEE Xplore, ACM’s full-text collection, DBLP and Google Scholar on June 6, 2018. These databases were selected as their combined index spans a wide range, and their combined result set was sufficiently large for this study. Scopus and IEEE Xplore support queries on title, keywords and abstract. ACM’s full-text collection, DBLP and Google Scholar do not support queries on keywords and abstract content. We therefore ran two kinds of queries: we queried on title only for ACM’s full-text collection, DBLP and Google Scholar and we extended this query to keywords and abstract content for Scopus and IEEE Xplore. The query was constructed by combining techniques of interest and keywords for the personalization problem. For techniques of interest the terms ‘reinforcement learning’ and ‘contextual bandits’ were used. For the personalization problem, variations on the words ‘personalized’, ‘customized’, ‘individualized’ and ‘tailored’ were included in British and American spelling. All queries are listed in Appendix A. Query results were de-duplicated and stored in a spreadsheet.
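The way such a query combines technique terms with spelling variants can be sketched as follows. This is an illustrative reconstruction only: the generic `AND`/`OR` syntax, the function names and the restriction to the ‘-ized’ and ‘-ised’ forms are assumptions, and the queries actually used are the ones listed in Appendix A.

```python
TECHNIQUE_TERMS = ["reinforcement learning", "contextual bandits"]

# Stems that take both an American (-ized) and a British (-ised) ending.
SPELLING_STEMS = ["personal", "custom", "individual"]

# Terms spelled identically in both variants.
INVARIANT_TERMS = ["tailored"]


def personalization_terms():
    """Expand each stem into its American and British spelling."""
    terms = []
    for stem in SPELLING_STEMS:
        terms.append(stem + "ized")
        terms.append(stem + "ised")
    return terms + INVARIANT_TERMS


def or_group(terms):
    """Join quoted terms into a parenthesized OR group."""
    return "(" + " OR ".join('"{}"'.format(t) for t in terms) + ")"


def build_query():
    """Combine technique terms and personalization terms with AND."""
    return or_group(TECHNIQUE_TERMS) + " AND " + or_group(personalization_terms())
```

Generating the query from term lists makes it straightforward to apply the same terms to databases that differ in which fields they can search.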

5.3.Screening process

In the screening process, all query results are tested against the inclusion criteria from Section 5.1 in two phases. We used all criteria in both phases. In the first phase, we assessed eligibility based on keywords, abstract and title whereas we used full text of the article in the second phase. In the first phase, a spreadsheet with de-duplicated results was shared with all authors via Google Drive. Studies were assigned randomly to authors who scored each study by the eligibility criteria. The results of this screening were verified by one of the other authors, assigned randomly. Disagreements were settled in meetings involving those in disagreement and FDH if necessary. In addition to eligibility results, author preferences for full-text screening were recorded on a three-point scale. Studies that were not considered eligible were not taken into account beyond this point; all other studies were included in the second phase.

In the second phase, data on eligible studies was copied to a new spreadsheet. This sheet was again shared via Google Drive. Full texts were retrieved and evenly divided amongst authors according to preference. For each study, the assigned author then assessed eligibility based on full text and extracted the data items detailed below.

5.4.Data items

Data on setting, solution and methodology were collected. Table 2 contains all data items for this SLR. For data on setting, we operationalized our framework from Table 1 in Section 4. To assess trends in solution, algorithms used, number of MDP models (see Section 2) and training regime were recorded. Specifically, we noted whether training was performed by interacting with actual users (‘live’), using existing data or using a simulator of user behavior. For the algorithms, we recorded the name as used by the authors. To gauge maturity of the proposed solutions and the field as a whole, data on the evaluation strategy and baselines used were extracted. Again, we listed whether evaluation included ‘live’ interaction with users, existing interactions between systems and users or a simulator. Finally, publication year and application domain were registered to enable identification of trends over time and across domains. The list of domains was composed as follows: during phase one of the screening process, all authors recorded a domain for each included paper, yielding a highly inconsistent initial set of domains. This set was simplified into a more consistent set of domains which was used during full-text screening. For papers that did not fall into this consistent set of domains, two categories were added: a ‘Domain Independent’ and an ‘Other’ category. The actual domain was recorded for the five papers in the ‘Other’ category. These domains were not further consolidated as all five papers were assigned to unique domains not encountered before.

Table 2

Data items in SLR. The last column relates data items to aspects of setting from Table 1 where applicable

| Category | # | Data item | Values | A# |
|---|---|---|---|---|
| Setting | 1 | User defines suitability of system behavior explicitly | Yes, No | A1 |
| | 2 | Suitability of system behavior is derived | Yes, No | A1 |
| | 3 | Safety is mentioned as a concern in the article | Yes, No | A2 |
| | 4 | Privacy is mentioned as a concern in the article | Yes, No | A6 |
| | 5 | Models of user responses to system behavior are available | Yes, No | A3 |
| | 6 | Data on user responses to system behavior are available | Yes, No | A4 |
| | 7 | New interactions with users can be sampled with ease | Yes, No | A5 |
| | 8 | All information to base personalization on can be measured | Yes, No | A7 |
| Solution | 9 | Algorithms | N/A | – |
| | 10 | Number of learners | 1, 1/user, 1/group, multiple | – |
| | 11 | Usage of traits of the user | state, other, not used | – |
| | 12 | Training mode | online, batch, other, unknown | – |
| | 13 | Training in simulation | Yes, No | A3 |
| | 14 | Training on a real-life dataset | Yes, No | A4 |
| | 15 | Training in ‘live’ setting | Yes, No | A5 |
| Evaluation | 16 | Evaluation in simulation | Yes, No | A3 |
| | 17 | Evaluation on a real-life dataset | Yes, No | A4 |
| | 18 | Evaluation in ‘live’ setting | Yes, No | A5 |
| | 19 | Comparison with ‘no personalization’ | Yes, No | – |
| | 20 | Comparison with non-RL methods | Yes, No | – |

5.5.Synthesis and analysis

To facilitate analysis, reported algorithms were normalized using simple text normalization and key-collision methods. The resulting mappings are available in the dataset release [46]. Data was summarized using descriptive statistics and figures with an accompanying narrative to gain insight into trends with respect to settings, solutions and evaluation over time and across domains.
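The normalization step can be illustrated with a minimal sketch. The text does not specify which key-collision method was used, so the fingerprint-style key below (ASCII folding, lowercasing, punctuation removal, sorted unique tokens) is an assumption, and the function names and example spellings are hypothetical rather than taken from the released dataset.

```python
import re
import unicodedata
from collections import defaultdict


def fingerprint(name: str) -> str:
    """Build a collision key: ASCII-folded, lowercased, sorted unique tokens."""
    # Fold accented characters to ASCII so spelling variants collide.
    folded = unicodedata.normalize("NFKD", name).encode("ascii", "ignore").decode("ascii")
    # Lowercase and turn every run of non-alphanumeric characters into one space.
    cleaned = re.sub(r"[^a-z0-9]+", " ", folded.lower()).strip()
    # Sort and deduplicate tokens so that word order does not matter.
    return " ".join(sorted(set(cleaned.split())))


def cluster_names(names):
    """Group raw algorithm names whose fingerprints are identical."""
    clusters = defaultdict(list)
    for name in names:
        clusters[fingerprint(name)].append(name)
    return dict(clusters)


# Hypothetical raw spellings as they might appear across papers.
reported = ["Q-learning", "Q learning", "q-Learning", "SARSA", "Sarsa", "Learning, Q"]
clusters = cluster_names(reported)
for key, variants in clusters.items():
    print(key, "<-", variants)
```

Names that end up under the same key are candidates for merging. A manual check of each cluster remains advisable, since distinct algorithms can share tokens.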

6.Results

The quantitative synthesis and analyses introduced in Section 5.5 were applied to the collected data. In this section, we present insights obtained. We focus on the major insights and encourage the reader to explore the tabular view in Appendix B or the collected data for further analysis [46].

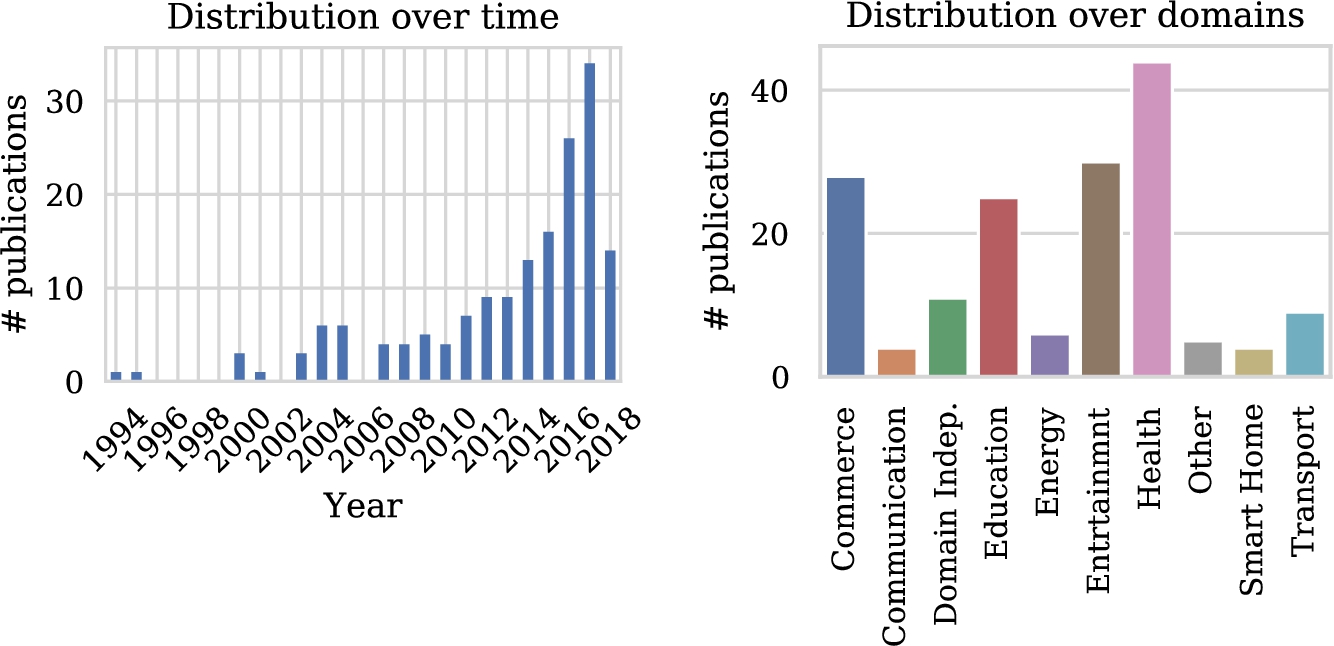

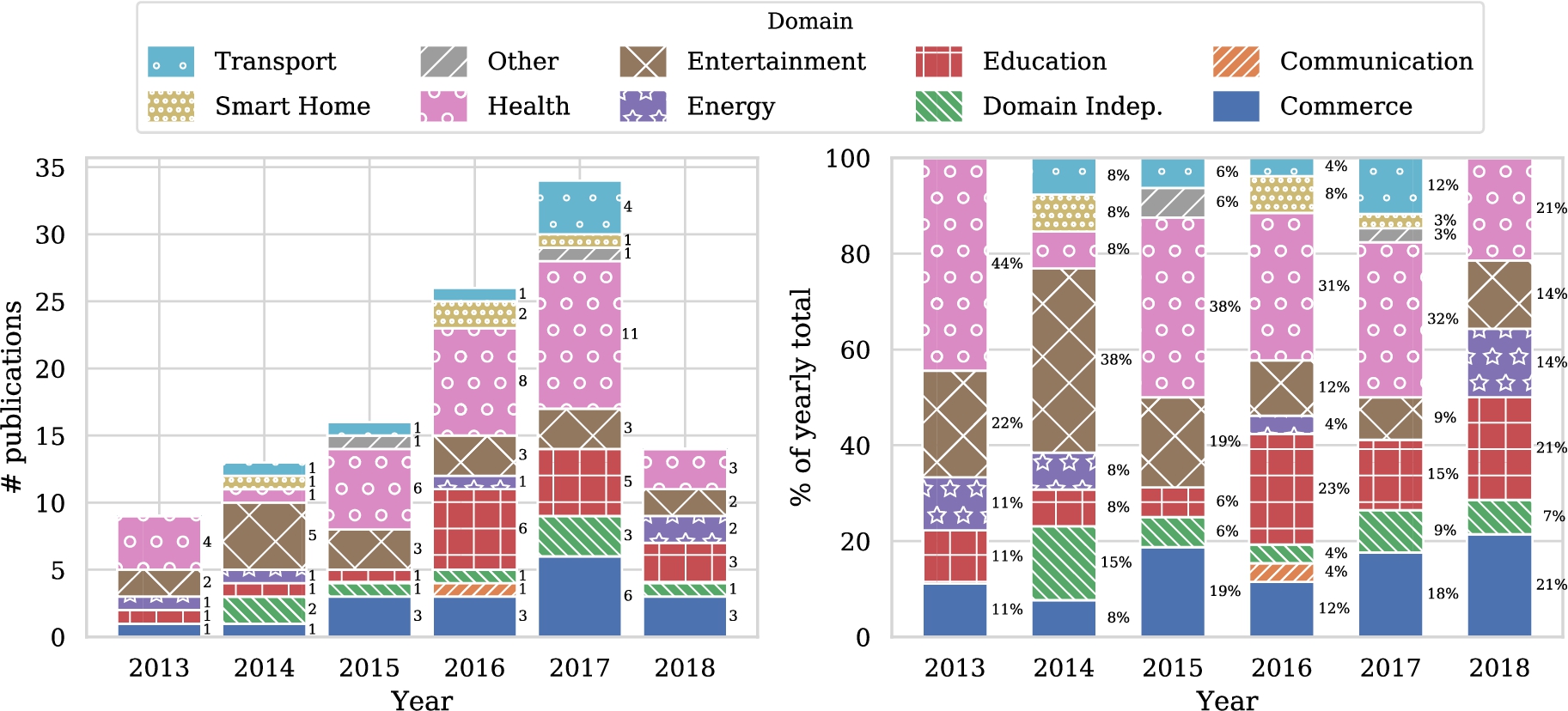

Before examining the results in light of the classification scheme we have proposed, let us first study some general trends. Figure 4 shows the number of publications addressing personalization using RL techniques over time. A clear increase can be seen. With over forty entries, the health domain contains by far the most articles, followed by entertainment, education and commerce, each with just over twenty-five entries. Other domains contain fewer than twelve papers in total. Figure 5(a) shows the popularity of domains for the five most recent years and seems to indicate that the number of articles in the health domain is steadily growing, in contrast with the other domains. Of course, these graphs are based on a limited number of publications, so drawing strong conclusions from these results is difficult. We do need to take into account that the popularity of RL for personalization is increasing in general. Therefore, Fig. 5(b) shows the relative distribution of studies over domains for the five most recent years. Now we see that the health domain is just following the overall trend and is not becoming more popular within studies that use RL for personalization. We fail to identify clear trends for other domains from these figures.

Fig. 4.

Distribution of included papers over time and over domains. Note that only studies published prior to the query date of June 6, 2018 were included.

Fig. 5.

Popularity of domains for the five most recent years.

6.1.Setting

Table 3 provides an overview of the data related to the setting in which the studies were conducted. The table shows that data on user responses to system behavior are available in a minority of cases (66/166). Additionally, models of user behavior are available in only around one quarter of all publications (41/166). The suitability of system behavior is much more frequently derived from data (130/166) than explicitly provided by users (39/166). Privacy is clearly not within the scope of most articles: only 9 out of 166 mention this issue explicitly. Safety concerns, however, are mentioned in a reasonable proportion of studies (30/166). Interactions can generally be sampled with ease (97/166) and the resulting information is frequently sufficient to base personalization of the system at hand on (132/166).

Table 3

Number of publications by aspects of setting

| Aspect | # |

| User defines suitability of system behavior explicitly | 39 |

| Suitability of system behavior is derived | 130 |

| Safety is mentioned as a concern in the article | 30 |

| Privacy is mentioned as a concern in the article | 9 |

| Models of user responses to system behavior are available | 41 |

| Data on user responses to system behavior are available | 66 |

| New interactions with users can be sampled with ease | 97 |

| All information to base personalization on can be measured | 132 |

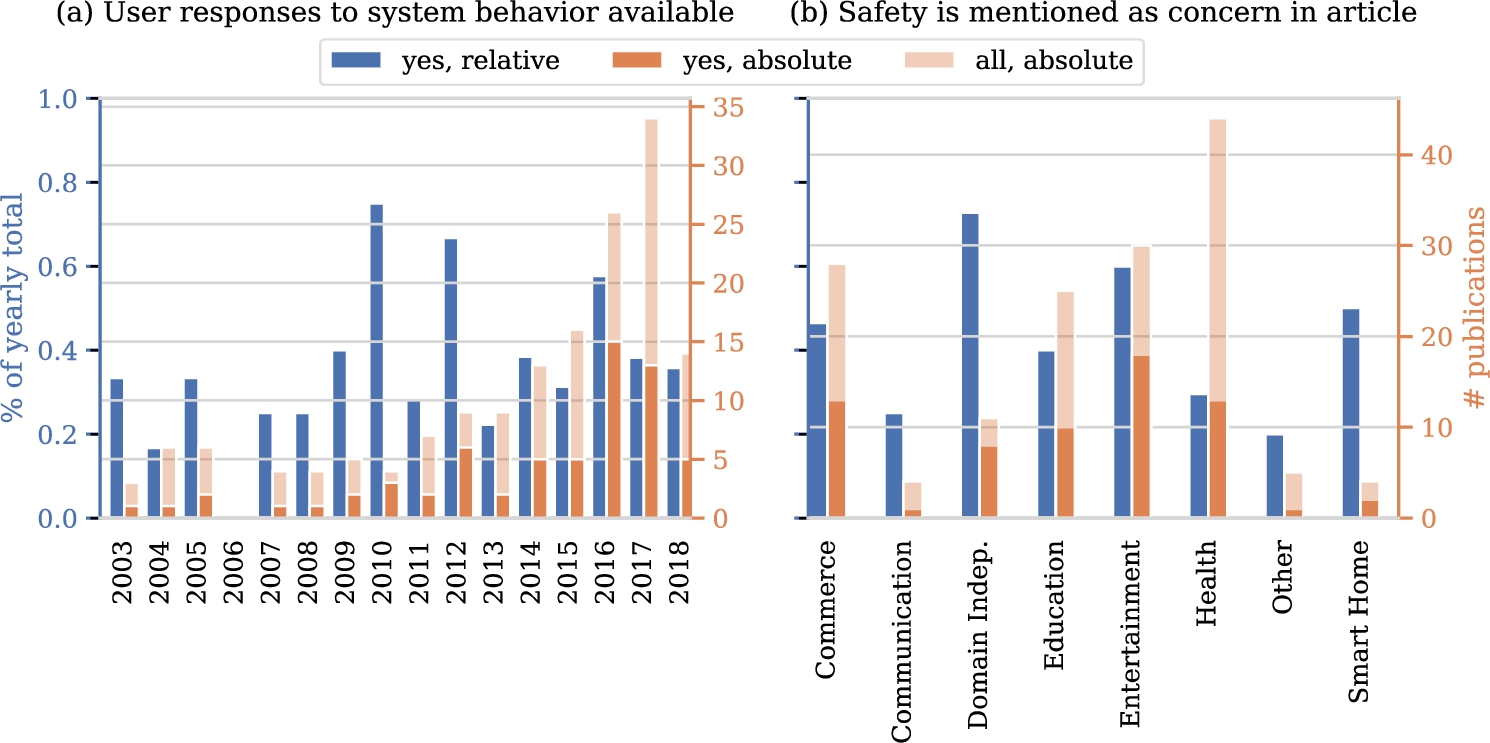

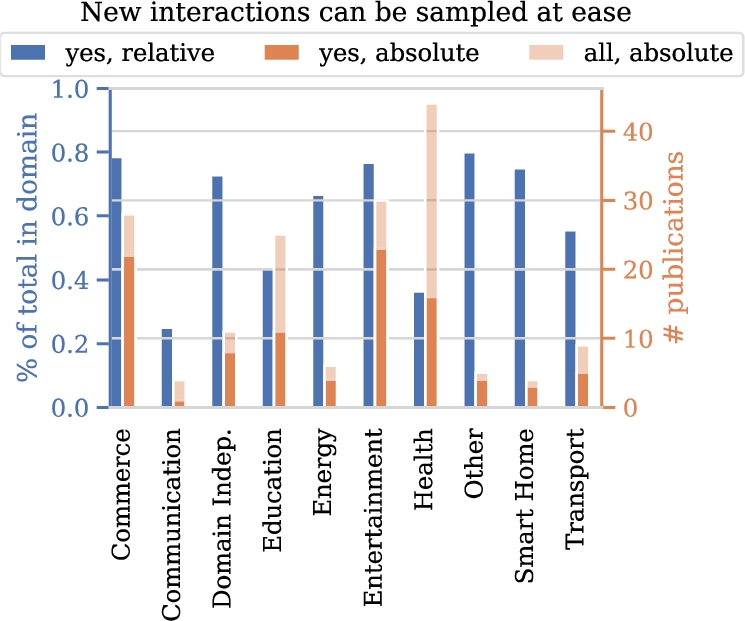

Let us examine some aspects in more detail. A first trend we anticipate is an increase in the fraction of studies working with real data on human responses over the years, considering the digitization trend and associated data collection. Figure 6(a) shows the fraction of papers for which data on user responses to system behavior are available over time. Surprisingly, this fraction does not show any clear trend over time. Another aspect of interest relates to safety issues in particular domains. We hypothesize that in certain domains, such as health, safety is more frequently mentioned as a concern. Figure 6(b) shows the fraction of papers per domain in which safety is mentioned. Indeed, we clearly see that certain domains mention safety much more frequently than other domains. Third, we explore the ease with which interactions with users can be sampled. Again, we expect to see substantial differences between domains. Figure 7 confirms our intuition. Interactions can be sampled with ease more frequently in studies in the commerce, entertainment, energy and smart home domains than in the communication and health domains.

Fig. 6.

Availability of user responses over time (a), and mentions of safety as a concern over domains (b).

Finally, we investigate whether upfront knowledge is available. In our analysis, we explore both real data and user models being available upfront. One would expect papers to have at least one of these two prior to starting experiments. User models and not real data were reported in 41 studies, while 53 articles used real data but no user model and 12 used both. We see that for 71 studies neither is available. In roughly half of these, simulators were used for training (38/71) and evaluation (37/71). In a minority, training (15/71) and evaluation (17/71) were performed in a live setting, i.e. while collecting data.

6.2.Solution

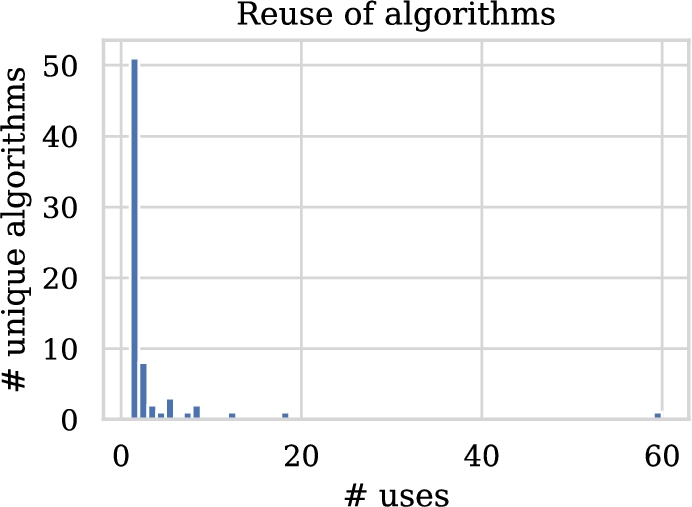

In our investigation into solutions, we first explore the algorithms that were used. Figure 8 shows the distribution of usage frequency. A vast majority of the algorithms are used only once, some techniques are used a couple of times and one algorithm is used 60 times. Note again that we use the algorithm names as reported by the authors as the basis for this analysis. Table 4 lists the algorithms that were used more than once. A significant number of studies (60/166) use the Q-learning algorithm. At the same time, a substantial number of articles (18/166) report the use of RL as the underlying algorithmic framework without specifying an actual algorithm. Contextual bandits, Sarsa, actor-critic and inverse RL (IRL) algorithms are used in 12, 8, 8 and 7 of the 166 papers, respectively. We also observe some additional algorithms from the contextual bandits family, such as UCB and LinUCB. Furthermore, we find various mentions that indicate the usage of deep neural networks: deep reinforcement learning, DQN and DDQN. In general, we find that some publications refer to a specific algorithm whereas others only report generic techniques or families thereof.

Fig. 7.

New interactions with users can be sampled with ease.

Fig. 8.

Distribution of algorithm usage frequencies.

Table 4

Algorithm usage for all algorithms that were used in more than one publication

| Algorithm | # of uses |

| Q-learning [219] | 60 |

| RL, not further specified | 18 |

| Contextual bandits | 12 |

| Sarsa [187] | 8 |

| Actor-critic | 8 |

| Inverse reinforcement learning | 7 |

| UCB [7] | 5 |

| Policy iteration | 5 |

| LinUCB [37] | 5 |

| Deep reinforcement learning | 4 |

| Fitted Q-iteration [166] | 3 |

| DQN [133] | 3 |

| Interactive reinforcement learning | 2 |

| TD-learning | 2 |

| DYNA-Q [186] | 2 |

| Policy gradient | 2 |

| CLUB [69] | 2 |

| Monte Carlo | 2 |

| Thompson sampling | 2 |

| DDQN [212] | 2 |

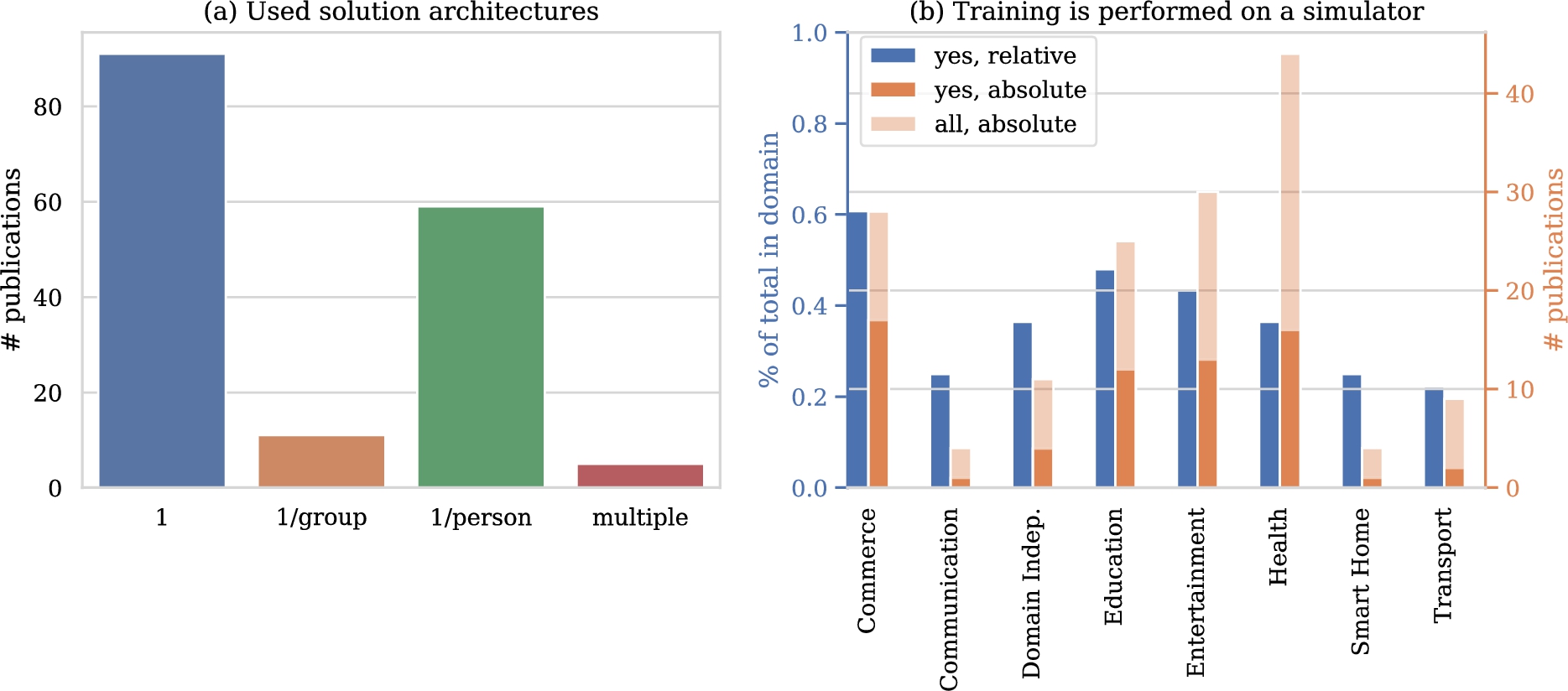

Fig. 9.

Occurrence of different solution architectures (a) and usage of simulators in training (b). For (a), publications that compare architectures are represented in the ‘multiple’ category.

Figure 9(a) lists the number of models used in the included publications. The majority of solutions rely on a single-model architecture. On the other end of the spectrum lies the architecture of using one model per person. This architecture comes second in usage frequency. The architecture that uses one model per group can be considered a middle ground between the former two. In this architecture, only experiences with relevant individuals can be shared. Comparisons between architectures are rare. We continue by investigating whether and where traits of the individual were used in relation to these architectures. Table 5 provides an overview. Out of all papers that use one model, 52.7% did not use the traits of the individuals and 41.8% included traits in the state space. Of the papers that use one model per person, 47.5% include the traits of the individuals in the state representation, while in 37.3% the traits were not included. In the remaining 15.3% of these cases (the ‘Other’ row in Table 5), this was not known.
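The shares quoted for each architecture are column percentages of the counts in Table 5. The following sketch recomputes them; the counts are copied from Table 5, while the dictionary layout and the function name `column_shares` are illustrative assumptions.

```python
# Counts copied from Table 5: one entry per architecture (column),
# split by how traits of users were used (row).
table5 = {
    "1": {"state": 38, "other": 5, "not used": 48},
    "1/group": {"state": 8, "other": 0, "not used": 3},
    "1/person": {"state": 28, "other": 9, "not used": 22},
    "multiple": {"state": 2, "other": 3, "not used": 0},
}


def column_shares(counts):
    """Return each row's share of the column total, in percent (one decimal)."""
    total = sum(counts.values())
    return {row: round(100 * n / total, 1) for row, n in counts.items()}


shares = {arch: column_shares(counts) for arch, counts in table5.items()}
for arch, result in shares.items():
    print(arch, sum(table5[arch].values()), result)
```

Computing the shares per column rather than over all 166 papers keeps architectures of very different sizes (91 versus 11 papers) comparable.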

Figure 9(b) shows the popularity of using a simulator for training per domain. We see that a substantial percentage of publications use a simulator and that simulators are used in all domains. Simulators are used in the majority of publications for the energy, transport, communication and entertainment domains. In publications in the first three of these domains, we typically find applications that require large-scale implementation and have a big impact on infrastructure, e.g. control of the entire energy grid or a fleet of taxis in a large city. This complicates the collection of a useful realistic dataset and training in a live setting. This is not the case for the entertainment domain, with 17 works using a simulator for training. Further investigation shows that nine of these 17 also include training on real data or in a ‘live’ setting. It seems that, in the entertainment domain, training on a simulator is part of the validation of the algorithm rather than the prime contribution of the paper.

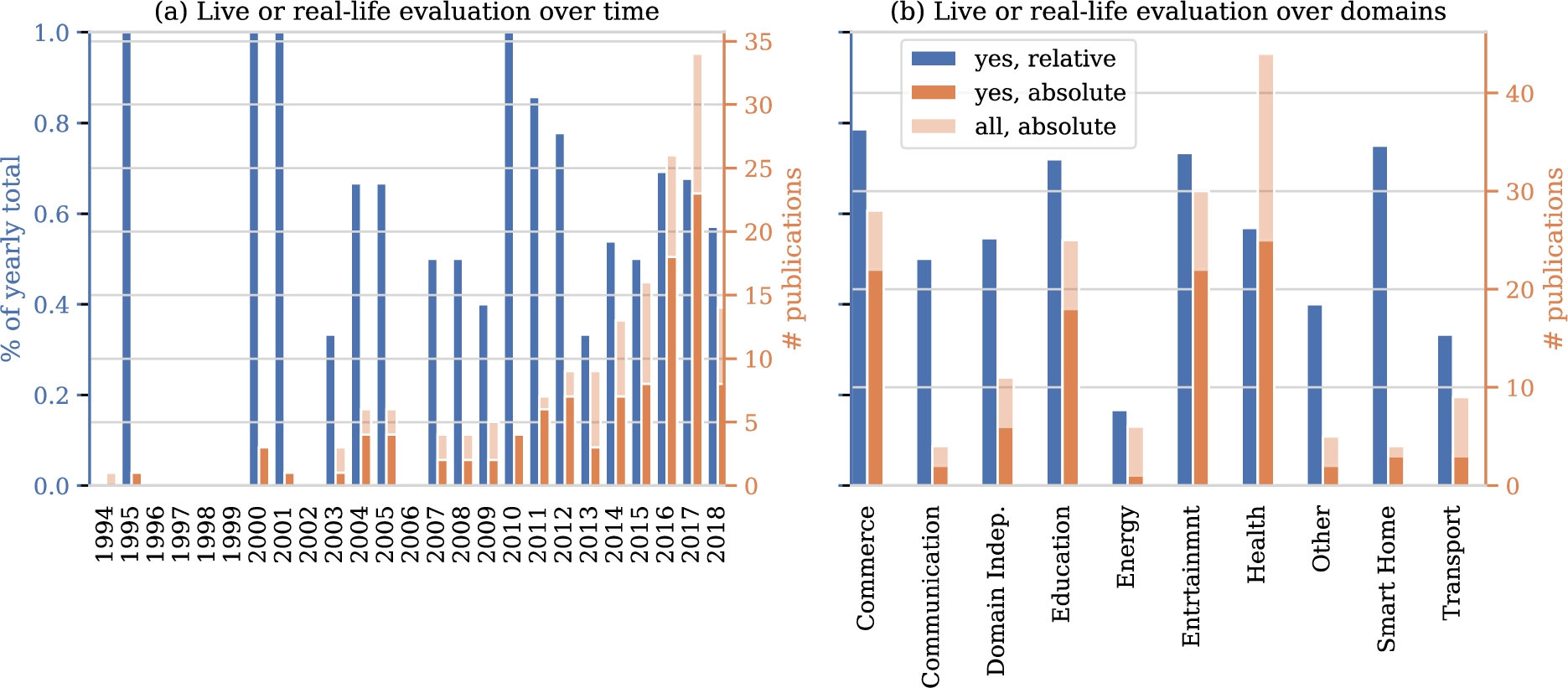

6.3.Evaluation

In investigating evaluation rigor, we first turn to the data on which evaluations are based. Figure 10 shows how many studies include an evaluation in a ‘live’ setting or using existing interactions with users. In the years up to 2007 few studies were done and most of these included realistic evaluations. In more recent years, the absolute number of studies shows a marked upward trend to which the relative number of articles that include a realistic evaluation fails to keep pace. Figure 10 also shows the number of realistic evaluations per domain. Disregarding the smart home domain, as it contains only four studies, the highest ratio of real evaluations can be found in the commerce and entertainment domains, followed by the health domain.

Table 5

Number of models and the inclusion of user traits

| Traits of users were used | 1 model | 1 model per group | 1 model per person | Multiple |

| In state representation | 38 | 8 | 28 | 2 |

| Other | 5 | 0 | 9 | 3 |

| Not used | 48 | 3 | 22 | 0 |

| Total | 91 | 11 | 59 | 5 |

Fig. 10.

Number of papers with a ‘live’ evaluation or evaluation using data on user responses to system behavior.

We look at possible reasons for a lack of realistic evaluation using our categorization of settings from Section 4. There are 62 studies with no realistic evaluation versus 104 with a realistic evaluation. Because these group sizes differ, we include ratios with respect to these totals in Table 6. The biggest difference between ratios of studies with and without a realistic evaluation is in the upfront availability of data on interactions with users. This is not surprising, as it is natural to use existing interactions for evaluation when they are available already. The second biggest difference between the groups is whether safety is mentioned as a concern. In relative terms, studies that refrain from a realistic evaluation mention safety concerns almost twice as often as studies that do a realistic evaluation. The third biggest difference can be found in the availability of user models. If a model is available, user responses can be simulated more easily. Privacy concerns are not mentioned frequently, so little can be said about their contribution to a lack of realistic evaluation. Finally and surprisingly, the ease of sampling interactions is comparable between studies with and without a realistic evaluation.

Table 6

Comparison of settings with realistic and other evaluation

| Aspect | Real-world evaluation: count | Real-world evaluation: % of column total | Other evaluation: count | Other evaluation: % of column total |

| Total | 104 | 100.0% | 62 | 100.0% |

| Data on user responses to system behavior are available | 57 | 54.8% | 9 | 14.5% |

| Safety is mentioned as a concern in the article | 14 | 13.5% | 16 | 25.8% |

| Models of user responses to system behavior are available | 21 | 20.2% | 20 | 32.3% |

| Privacy is mentioned as a concern in the article | 7 | 6.7% | 2 | 3.2% |

| New interactions with users can be sampled with ease | 60 | 57.7% | 37 | 59.7% |
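The ordering of differences discussed for Table 6 can be reproduced with a short sketch. The percentages are copied from Table 6 as reported; the shortened aspect labels and variable names are illustrative assumptions.

```python
# Column percentages as reported in Table 6:
# (studies with a real-world evaluation, studies with another evaluation).
table6 = {
    "data on user responses available": (54.8, 14.5),
    "safety mentioned": (13.5, 25.8),
    "user models available": (20.2, 32.3),
    "privacy mentioned": (6.7, 3.2),
    "interactions sampled with ease": (57.7, 59.7),
}

# Absolute gap in percentage points between the two groups, per aspect.
gaps = {
    aspect: round(abs(real - other), 1)
    for aspect, (real, other) in table6.items()
}

# Aspects ordered from the largest to the smallest gap.
ranking = sorted(gaps, key=gaps.get, reverse=True)
for aspect in ranking:
    print(f"{aspect}: {gaps[aspect]} percentage points")
```

Under these reported values, data availability shows by far the largest gap, while the gaps for safety and user models are close to each other.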

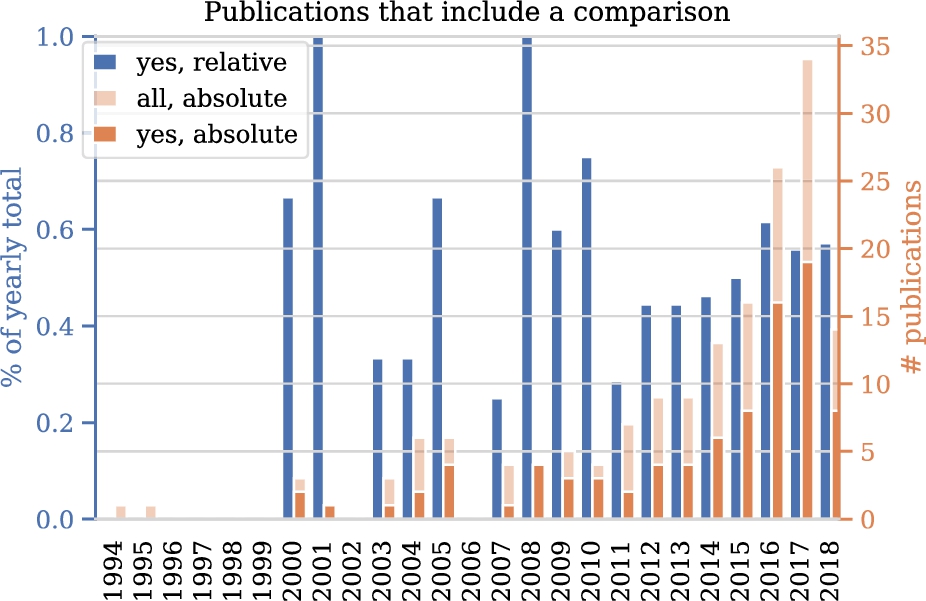

Figure 11 shows how many studies include any of the comparisons in scope of this survey, that is: comparisons between solutions with and without personalization, comparisons between RL approaches and other approaches to personalization and comparisons between different RL algorithms. In the first years, no papers include such a comparison. The period 2000–2010 contains relatively few studies in general and the absolute and relative numbers of studies with a comparison vary. From 2011 to 2018, the absolute number maintains its upward trend. The relative number follows this trend but flattens after 2016.

Fig. 11.

Number of papers that include any comparison between solutions over time.

7.Discussion

The goal of this study was to give an overview and categorization of RL applications for personalization in different application domains, which we addressed using an SLR on settings, solution architectures and evaluation strategies. The main result is the marked increase in studies that use RL for personalization problems over time. Additionally, techniques are increasingly evaluated on real-life data. RL has proven a suitable paradigm for adaptation of systems to individual preferences using data.

Results further indicate that this development is driven by various techniques, which we list in no particular order. Firstly, techniques have been developed to estimate the performance of a particular RL model prior to deployment. This helps in communicating risks and benefits of RL solutions to stakeholders and moves RL further into the realm of feasible technologies for high-impact application domains [200]. For single-step decision making problems, contextual bandit algorithms with theoretical bounds on decision-theoretic regret have become available. For multi-step decision making problems, methods that can estimate the performance of some policy based on data generated by another policy have been developed [37,90,204]. Secondly, advances in the field of deep learning have wholly or partly removed the need for feature engineering [53]. Feature engineering may be especially challenging for sequential decision-making problems, as different features may be of importance in different states encountered over time. Finally, research on safe exploration in RL has developed means to avoid harmful actions during exploratory phases of learning [64]. How any of these techniques is best applied depends on the setting. The collected data can be used to find suitable related work for any particular setting [46].
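To make the idea of estimating the performance of one policy from data generated by another more concrete, the sketch below uses ordinary trajectory-wise importance sampling. This is one standard estimator chosen for illustration and is not necessarily the method of the cited works. The function name, the `(state, action, reward)` layout and the toy data are assumptions.

```python
from typing import Callable, List, Sequence, Tuple

Step = Tuple[int, int, float]  # (state, action, reward)


def importance_sampling_value(
    trajectories: Sequence[List[Step]],
    target_prob: Callable[[int, int], float],
    behavior_prob: Callable[[int, int], float],
    gamma: float = 1.0,
) -> float:
    """Estimate the expected return of the target policy from logged data.

    Assumes behavior_prob(s, a) > 0 for every logged (s, a) pair.
    """
    total = 0.0
    for trajectory in trajectories:
        weight = 1.0  # product of per-step probability ratios
        ret = 0.0     # discounted return of the logged trajectory
        for t, (state, action, reward) in enumerate(trajectory):
            weight *= target_prob(state, action) / behavior_prob(state, action)
            ret += (gamma ** t) * reward
        total += weight * ret
    return total / len(trajectories)


# Toy log: the behavior policy picked one of two actions uniformly at random.
logged = [[(0, 1, 1.0)], [(0, 0, 0.0)], [(0, 1, 1.0)], [(0, 0, 0.0)]]
# Target policy to evaluate: always choose action 1.
estimate = importance_sampling_value(
    logged,
    target_prob=lambda s, a: 1.0 if a == 1 else 0.0,
    behavior_prob=lambda s, a: 0.5,
)
print(estimate)
```

In this toy log, action 1 always yields reward 1, and the estimate recovers that value for the always-choose-action-1 policy without deploying it. The variance of this estimator grows quickly with trajectory length, which is one reason refined estimators exist.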

Since the field of RL for personalization is growing in size, we investigated whether methodological maturity is keeping pace. Results show that the growth in the number of studies with a real-life evaluation is not mirrored by growth of the ratio of studies with such an evaluation. Similarly, results show no increase in the relative number of studies with a comparison of approaches over time. These may be signs that the maturity of the field fails to keep pace with its growth. This is worrisome, since the advantages of RL over other approaches or between RL algorithms cannot be understood properly without such comparisons. Such comparisons benefit from standardized tasks. Developing standardized personalization datasets and simulation environments is an excellent opportunity for future research [87,112].

We found that algorithms presented in the literature are reused infrequently. Although this phenomenon may be driven by various underlying dynamics that cannot be untangled using our data, we propose some possible explanations here in no particular order. Firstly, it might be the case that separate applications require tailored algorithms to the extent that these can only be used once. This raises the question of the scientific contribution of such a tailored algorithm and does not fit with the reuse of some well-established algorithms. Another explanation is that top-ranked venues prefer contributions that are theoretical or technical in nature, resulting in minor variations to well-known algorithms being presented as novel. Whether this is the case is out of scope for this research and forms an excellent avenue for future work. A final explanation we propose is the myriad axes along which any RL algorithm can be identified, such as whether and where estimation is involved, which estimation technique is used and how domain knowledge is encoded in the algorithm. This may yield a large number of unique algorithms, constructed out of a relatively small set of core ideas in RL. An overview of these core ideas would be useful in understanding how individual algorithms relate to each other.

In addition to algorithm reuse, we analyzed which RL algorithms were used most frequently. Generic and well-established (families of) algorithms such as Q-learning are the most popular. A notable entry in the top six most-used techniques is inverse reinforcement learning (IRL). Its frequent usage is surprising, as the only viable application area of IRL less than a decade ago was robotics [97]. Personalization may be one of the other useful application areas of this branch of RL and many existing personalization challenges may still benefit from an IRL approach. Finally, we investigated how many RL models were included in the proposed solutions and found that the majority of studies resort to using either one RL model in total or one RL model per user. Inspired by the common practice of clustering in related fields such as recommender systems, we believe that there exist opportunities in pooling data of similar users and training RL models on the pooled data.

Besides these findings, we contribute a categorization of personalization settings in RL. This framework can be used to find related work based on the setting of a problem at hand. In designing such a framework, one has to balance specificity and usefulness of aspects in the framework. We take the aspect of ‘safety’ as an example: any application of RL will imply safety concerns at some level, but they are more prominent in some application areas. The framework intentionally includes a single ambiguous aspect to describe a broad range of ‘safety sensitivity levels’ in order for it to suit its purpose of navigating literature. A possibility for future work is to extend the framework with other, more formal, aspects of problem setting such as those identified in [170].

Notes

Acknowledgements

The authors would like to thank Frank van Harmelen for useful feedback on the presented classification of personalization settings.

The authors declare that they have no conflict of interest.

Appendices

Appendix A.Queries

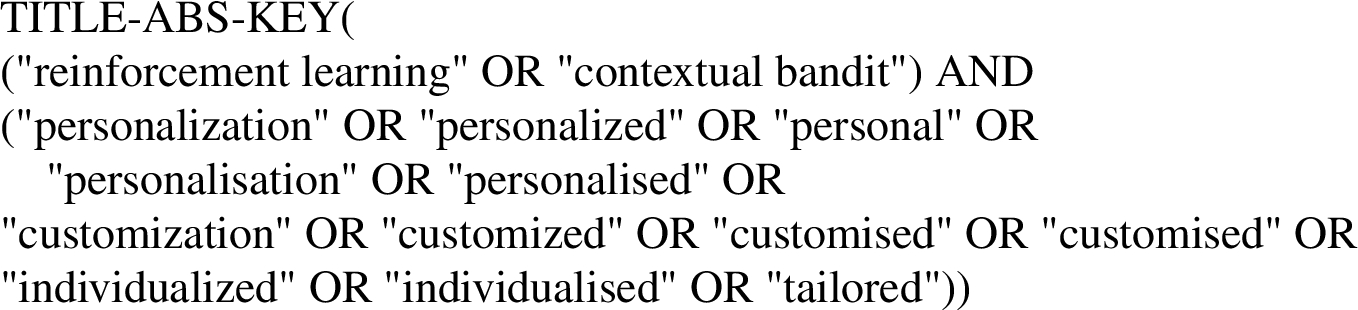

Listing 1.

Query for Scopus database

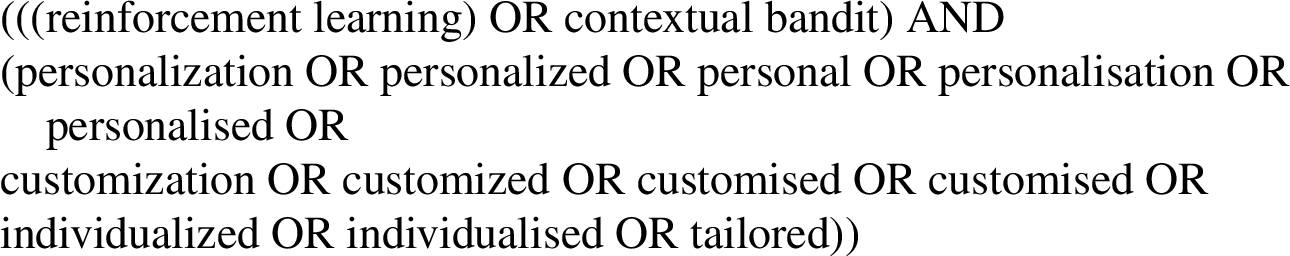

Listing 2.

Query for IEEE Xplore database command search

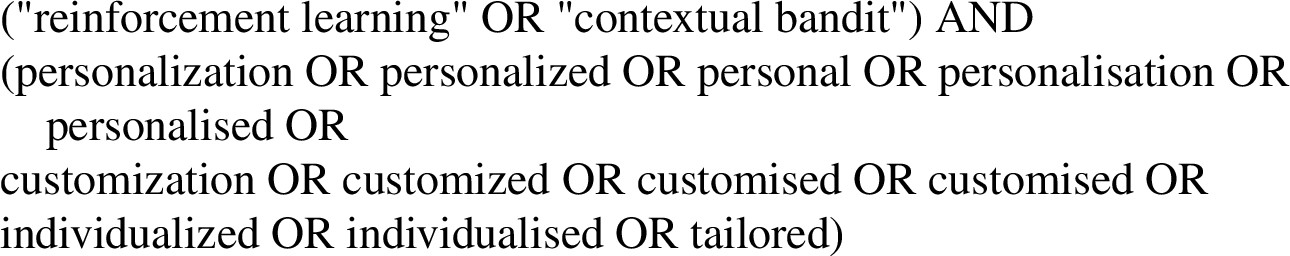

Listing 3.

Query for ACM DL database

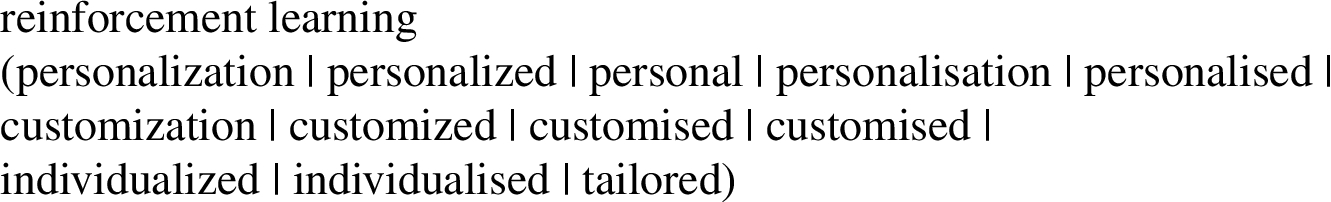

Listing 4.

First query for DBLP database

Listing 5.

Second query for DBLP database

Listing 6.

First query for Google Scholar database

Listing 7.

Second query for Google Scholar database

Appendix B.Tabular view of data

Table 7

Table containing all included publications. The first column refers to the data items in Table 2

References

[1] N. Abe, N. Verma, C. Apte and R. Schroko, Cross channel optimized marketing by reinforcement learning, in: Proceedings of the 2004 ACM SIGKDD International Conference on Knowledge Discovery and Data Mining – KDD ’04, 2004. doi:10.1145/1014052.1016912.

[2] G. Abowd, A. Dey, P. Brown, N. Davies, M. Smith and P. Steggles, Towards a better understanding of context and context-awareness, in: Handheld and Ubiquitous Computing, Springer, 1999, p. 319. doi:10.1007/3-540-48157-5_29.

[3] S. Ahrndt, M. Lützenberger and S.M. Prochnow, Using personality models as prior knowledge to accelerate learning about stress-coping preferences: (demonstration), in: AAMAS, 2016. doi:10.5555/2936924.2937221.

[4] G. Andrade, G. Ramalho, H. Santana and V. Corruble, Automatic computer game balancing, in: Proceedings of the Fourth International Joint Conference on Autonomous Agents and Multiagent Systems – AAMAS ’05, 2005. doi:10.1145/1082473.1082648.

[5] M.G. Aspinall and R.G. Hamermesh, Realizing the promise of personalized medicine, Harvard Business Review 85(10) (2007), 108. https://hbr.org/2007/10/realizing-the-promise-of-personalized-medicine.

[6] A. Atrash and J. Pineau, A Bayesian reinforcement learning approach for customizing human–robot interfaces, in: Proceedings of the 13th International Conference on Intelligent User Interfaces – IUI ’09, 2008. doi:10.1145/1502650.1502700.

[7] P. Auer, N. Cesa-Bianchi and P. Fischer, Finite-time analysis of the multiarmed bandit problem, Machine Learning 47(2–3) (2002), 235–256. doi:10.1023/A:1013689704352.

[8] S. Ávila-Sansores, F. Orihuela-Espina and L. Enrique-Sucar, Patient tailored virtual rehabilitation, in: Converging Clinical and Engineering Research on Neurorehabilitation, Biosystems & Biorobotics, Vol. 1, 2013, pp. 879–883. doi:10.1007/978-3-642-34546-3_143.

[9] N.F. Awad and M.S. Krishnan, The personalization privacy paradox: An empirical evaluation of information transparency and the willingness to be profiled online for personalization, MIS Quarterly 30(1) (2006), 13–28. doi:10.2307/25148715.

[10] N. Bagdure and B. Ambudkar, Reducing delay during vertical handover, in: 2015 International Conference on Computing Communication Control and Automation, 2015. doi:10.1109/ICCUBEA.2015.44.

[11] A. Baniya, S. Herrmann, Q. Qiao and H. Lu, Adaptive interventions treatment modelling and regimen optimization using sequential multiple assignment randomized trials (SMART) and Q-learning, in: IIE Annual Conference. Proceedings, Institute of Industrial and Systems Engineers (IISE), 2017, pp. 1187–1192. https://pdfs.semanticscholar.org/858e/ffd10b711ad6c86eff9c32cdc0bc320a6e1a.pdf.

[12] A.G. Barto, P.S. Thomas and R.S. Sutton, Some recent applications of reinforcement learning, 2017. https://people.cs.umass.edu/~pthomas/papers/Barto2017.pdf.

[13] A.L.C. Bazzan, Synergies between evolutionary computation and multiagent reinforcement learning, in: Proceedings of the Genetic and Evolutionary Computation Conference Companion – GECCO ’17, 2017. doi:10.1145/3067695.3075970.

[14] M.G. Bellemare, Y. Naddaf, J. Veness and M. Bowling, The arcade learning environment: An evaluation platform for general agents, Journal of Artificial Intelligence Research 47 (2013), 253–279. doi:10.1613/jair.3912.

[15] R.E. Bellman, Adaptive Control Processes: A Guided Tour, Vol. 2045, Princeton University Press, 2015. doi:10.1002/nav.3800080314.

[16] H. Bi, O.J. Akinwande and E. Gelenbe, Emergency navigation in confined spaces using dynamic grouping, in: 2015 9th International Conference on Next Generation Mobile Applications, Services and Technologies, 2015. doi:10.1109/NGMAST.2015.12.

[17] G. Biegel and V. Cahill, A framework for developing mobile, context-aware applications, in: Proceedings of the Second IEEE Annual Conference on Pervasive Computing and Communications, PerCom 2004, IEEE, 2004, pp. 361–365. doi:10.1109/PERCOM.2004.1276875.

[18] A. Bodas, B. Upadhyay, C. Nadiger and S. Abdelhak, Reinforcement learning for game personalization on edge devices, in: 2018 International Conference on Information and Computer Technologies (ICICT), 2018. doi:10.1109/INFOCT.2018.8356853.

[19] D. Bouneffouf, A. Bouzeghoub and A.L. Gançarski, Hybrid-ϵ-greedy for mobile context-aware recommender system, in: Pacific-Asia Conference on Knowledge Discovery and Data Mining, Lecture Notes in Computer Science, Vol. 7301, 2012, pp. 468–479. doi:10.1007/978-3-642-30217-6_39.

[20] J. Bragg, Mausam and D.S. Weld, Optimal testing for crowd workers, in: AAMAS, 2016. doi:10.5555/2936924.2937066.

[21] G. Brockman, V. Cheung, L. Pettersson, J. Schneider, J. Schulman, J. Tang and W. Zaremba, OpenAI Gym, Preprint, arXiv:1606.01540, 2016.

[22] P. Brusilovsky, A. Kobsa and W. Nejdl, The Adaptive Web: Methods and Strategies of Web Personalization, Vol. 4321, Springer, 2007. doi:10.1007/978-3-540-72079-9.

[23] D. Budgen and P. Brereton, Performing systematic literature reviews in software engineering, in: Proceedings of the 28th International Conference on Software Engineering, ACM, 2006, pp. 1051–1052. doi:10.1145/1134285.1134500.

[24] A.B. Buduru and S.S. Yau, An effective approach to continuous user authentication for touch screen smart devices, in: 2015 IEEE International Conference on Software Quality, Reliability and Security, 2015. doi:10.1109/QRS.2015.40.

[25] I. Casanueva, T. Hain, H. Christensen, R. Marxer and P. Green, Knowledge transfer between speakers for personalised dialogue management, in: Proceedings of the 16th Annual Meeting of the Special Interest Group on Discourse and Dialogue, 2015, pp. 12–21. doi:10.18653/v1/W15-4603.

[26] A. Castro-Gonzalez, F. Amirabdollahian, D. Polani, M. Malfaz and M.A. Salichs, Robot self-preservation and adaptation to user preferences in game play, a preliminary study, in: 2011 IEEE International Conference on Robotics and Biomimetics, 2011. doi:10.1109/ROBIO.2011.6181679.

[27] L. Cella, Modelling user behaviors with evolving users and catalogs of evolving items, in: Adjunct Publication of the 25th Conference on User Modeling, Adaptation and Personalization – UMAP ’17, 2017. doi:10.1145/3099023.3102251.

[28] B. Chakraborty and S.A. Murphy, Dynamic treatment regimes, Annual Review of Statistics and Its Application 1(1) (2014), 447–464. doi:10.1146/annurev-statistics-022513-115553.

[29] J. Chan and G. Nejat, A learning-based control architecture for an assistive robot providing social engagement during cognitively stimulating activities, in: 2011 IEEE International Conference on Robotics and Automation, 2011. doi:10.1109/ICRA.2011.5980426.

[30] R.K. Chellappa and R.G. Sin, Personalization versus privacy: An empirical examination of the online consumer’s dilemma, Information Technology and Management 6(2–3) (2005), 181–202. doi:10.1007/s10799-005-5879-y.

[31] J. Chen and Z. Yang, A learning multi-agent system for personalized information filtering, in: Proceedings of the 2003 Joint Fourth International Conference on Information, Communications and Signal Processing, 2003 and the Fourth Pacific Rim Conference on Multimedia, 2003. doi:10.1109/ICICS.2003.1292790.

[32] X. Chen, Y. Zhai, C. Lu, J. Gong and G. Wang, A learning model for personalized adaptive cruise control, in: 2017 IEEE Intelligent Vehicles Symposium (IV), 2017. doi:10.1109/IVS.2017.7995748.

[33] Z. Cheng, Q. Zhao, F. Wang, Y. Jiang, L. Xia and J. Ding, Satisfaction based Q-learning for integrated lighting and blind control, Energy and Buildings 127 (2016), 43–55. doi:10.1016/j.enbuild.2016.05.067.

[34] C.-Y. Chi, R.T.-H. Tsai, J.-Y. Lai and J.Y. Hsu, A reinforcement learning approach to emotion-based automatic playlist generation, in: 2010 International Conference on Technologies and Applications of Artificial Intelligence, 2010. doi:10.1109/TAAI.2010.21.

[35] M. Chi, K. VanLehn, D. Litman and P. Jordan, Inducing effective pedagogical strategies using learning context features, in: International Conference on User Modeling, Adaptation, and Personalization, Lecture Notes in Computer Science, Vol. 6075, 2010, pp. 147–158. doi:10.1007/978-3-642-13470-8_15.

[36] Y.-S. Chiang, T.-S. Chu, C.D. Lim, T.-Y. Wu, S.-H. Tseng and L.-C. Fu, Personalizing robot behavior for interruption in social human–robot interaction, in: 2014 IEEE International Workshop on Advanced Robotics and Its Social Impacts, 2014. doi:10.1109/ARSO.2014.7020978.

[37] W. Chu, L. Li, L. Reyzin and R. Schapire, Contextual bandits with linear payoff functions, in: Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, 2011, pp. 208–214. http://proceedings.mlr.press/v15/chu11a.

[38] M. Claeys, S. Latre, J. Famaey and F. De Turck, Design and evaluation of a self-learning HTTP adaptive video streaming client, IEEE Communications Letters 18(4) (2014), 716–719. doi:10.1109/LCOMM.2014.020414.132649.

[39] G. Da Silveira, D. Borenstein and F.S. Fogliatto, Mass customization: Literature review and research directions, International Journal of Production Economics 72(1) (2001), 1–13. doi:10.1016/S0925-5273(00)00079-7.

[40] M. Daltayanni, C. Wang and R. Akella, A fast interactive search system for healthcare services, in: 2012 Annual SRII Global Conference, 2012. doi:10.1109/SRII.2012.65.

[41] E. Daskalaki, P. Diem and S.G. Mougiakakou, Personalized tuning of a reinforcement learning control algorithm for glucose regulation, in: 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 2013. doi:10.1109/EMBC.2013.6610293.

[42] E. Daskalaki, P. Diem and S.G. Mougiakakou, An actor–critic based controller for glucose regulation in type 1 diabetes, Computer Methods and Programs in Biomedicine 109(2) (2013), 116–125. doi:10.1016/j.cmpb.2012.03.002.

[43] E. Daskalaki, P. Diem and S.G. Mougiakakou, Model-free machine learning in biomedicine: Feasibility study in type 1 diabetes, PLoS ONE 11(7) (2016), e0158722. doi:10.1371/journal.pone.0158722.

[44] M. De Paula, G.G. Acosta and E.C. Martínez, On-line policy learning and adaptation for real-time personalization of an artificial pancreas, Expert Systems with Applications 42(4) (2015), 2234–2255. doi:10.1016/j.eswa.2014.10.038.

[45] M. De Paula, L.O. Ávila and E.C. Martínez, Controlling blood glucose variability under uncertainty using reinforcement learning and Gaussian processes, Applied Soft Computing 35 (2015), 310–332. doi:10.1016/j.asoc.2015.06.041.

[46] F. den Hengst, E. Grua, A. el Hassouni and M. Hoogendoorn, Release of the systematic literature review into reinforcement learning for personalization, Zenodo, 2020. doi:10.5281/zenodo.3627118.

[47] | F. den Hengst, M. Hoogendoorn, F. van Harmelen and J. Bosman, Reinforcement learning for personalized dialogue management, in: IEEE/WIC/ACM International Conference on Web Intelligence, (2019) , pp. 59–67. doi:10.1145/3350546.3352501. |

[48] | K. Deng, J. Pineau and S. Murphy, Active learning for personalizing treatment, in: 2011 IEEE Symposium on Adaptive Dynamic Programming and Reinforcement Learning (ADPRL), (2011) . doi:10.1109/ADPRL.2011.5967348. |

[49] | A.A. Deshmukh, Ü. Dogan and C. Scott, Multi-task learning for contextual bandits, in: NIPS, (2017) . doi:10.5555/3295222.3295238. |

[50] | Y. Duan, X. Chen, R. Houthooft, J. Schulman and P. Abbeel, Benchmarking deep reinforcement learning for continuous control, in: International Conference on Machine Learning, (2016) , pp. 1329–1338. http://proceedings.mlr.press/v48/duan16.html. |

[51] | A. Durand and J. Pineau, Adaptive treatment allocation using sub-sampled Gaussian processes, in: 2015 AAAI Fall Symposium Series, (2015) . https://www.aaai.org/ocs/index.php/FSS/FSS15/paper/view/11671. |

[52] | M. El Fouki, N. Aknin and K.E. El Kadiri, Intelligent adapted e-learning system based on deep reinforcement learning, in: Proceedings of the 2nd International Conference on Computing and Wireless Communication Systems – ICCWCS’17, (2017) . doi:10.1145/3167486.3167574. |

[53] | A. el Hassouni, M. Hoogendoorn, A.E. Eiben, M. van Otterlo and V. Muhonen, End-to-end personalization of digital health interventions using raw sensor data with deep reinforcement learning: A comparative study in digital health interventions for behavior change, in: 2019 IEEE/WIC/ACM International Conference on Web Intelligence (WI), IEEE, (2019) , pp. 258–264. doi:10.1145/3350546.3352527. |

[54] | A. el Hassouni, M. Hoogendoorn, M. van Otterlo and E. Barbaro, Personalization of health interventions using cluster-based reinforcement learning, in: International Conference on Principles and Practice of Multi-Agent Systems, Springer, (2018) , pp. 467–475. doi:10.1007/978-3-030-03098-8_31. |

[55] | H. Fan and M.S. Poole, What is personalization? Perspectives on the design and implementation of personalization in information systems, Journal of Organizational Computing and Electronic Commerce 16: (3–4) ((2006) ), 179–202. doi:10.1207/s15327744joce1603&4_2. |

[56] | J. Feng, H. Li, M. Huang, S. Liu, W. Ou, Z. Wang and X. Zhu, Learning to collaborate, in: Proceedings of the 2018 World Wide Web Conference on World Wide Web – WWW ’18, (2018) . doi:10.1145/3178876.3186165. |

[57] | B. Fernandez-Gauna and M. Graña, Recipe tuning by reinforcement learning in the SandS ecosystem, in: 2014 6th International Conference on Computational Aspects of Social Networks, (2014) . doi:10.1109/CASoN.2014.6920422. |

[58] | S. Ferretti, S. Mirri, C. Prandi and P. Salomoni, Exploiting reinforcement learning to profile users and personalize web pages, in: 2014 IEEE 38th International Computer Software and Applications Conference Workshops, (2014) . doi:10.1109/COMPSACW.2014.45. |

[59] | S. Ferretti, S. Mirri, C. Prandi and P. Salomoni, User centered and context dependent personalization through experiential transcoding, in: 2014 IEEE 11th Consumer Communications and Networking Conference (CCNC), (2014) . doi:10.1109/CCNC.2014.6940520. |

[60] | S. Ferretti, S. Mirri, C. Prandi and P. Salomoni, Automatic web content personalization through reinforcement learning, Journal of Systems and Software 121: ((2016) ), 157–169. doi:10.1016/j.jss.2016.02.008. |

[61] | S. Ferretti, S. Mirri, C. Prandi and P. Salomoni, On personalizing web content through reinforcement learning, Universal Access in the Information Society 16: (2) ((2017) ), 395–410. doi:10.1007/s10209-016-0463-2. |

[62] | L. Fournier, Learning capabilities for improving automatic transmission control, in: Proceedings of the Intelligent Vehicles ’94 Symposium, (1994) . doi:10.1109/IVS.1994.639561. |

[63] | A.Y. Gao, W. Barendregt and G. Castellano, Personalised human–robot co-adaptation in instructional settings using reinforcement learning, in: IVA Workshop on Persuasive Embodied Agents for Behavior Change: PEACH 2017, August 27, Stockholm, Sweden, (2017) . http://www.diva-portal.org/smash/get/diva2:1162389/FULLTEXT01.pdf. |

[64] | J. García and F. Fernández, A comprehensive survey on safe reinforcement learning, Journal of Machine Learning Research 16: (1) ((2015) ), 1437–1480. http://jmlr.org/papers/v16/garcia15a.html. |

[65] | A. Garivier and E. Moulines, On upper-confidence bound policies for switching bandit problems, in: International Conference on Algorithmic Learning Theory, Springer, (2011) , pp. 174–188. doi:10.1007/978-3-642-24412-4_16. |

[66] | A.E. Gaweda, Improving management of anemia in end stage renal disease using reinforcement learning, in: 2009 International Joint Conference on Neural Networks, (2009) . doi:10.1109/IJCNN.2009.5179004. |

[67] | A.E. Gaweda, M.K. Muezzinoglu, G.R. Aronoff, A.A. Jacobs, J.M. Zurada and M.E. Brier, Individualization of pharmacological anemia management using reinforcement learning, Neural Networks 18: (5) ((2005) ), 826–834. doi:10.1016/j.neunet.2005.06.020. |

[68] | A.E. Gaweda, M.K. Muezzinoglu, G.R. Aronoff, A.A. Jacobs, J.M. Zurada and M.E. Brier, Incorporating prior knowledge into Q-learning for drug delivery individualization, in: Fourth International Conference on Machine Learning and Applications (ICMLA’05), (2005) . doi:10.1109/ICMLA.2005.40. |

[69] | C. Gentile, S. Li and G. Zappella, Online clustering of bandits, in: International Conference on Machine Learning, (2014) , pp. 757–765. http://proceedings.mlr.press/v32/gentile14.html. |

[70] | B.S. Ghahfarokhi and N. Movahhedinia, A personalized QoE-aware handover decision based on distributed reinforcement learning, Wireless Networks 19: (8) ((2013) ), 1807–1828. doi:10.1007/s11276-013-0572-2. |

[71] | G.S. Ginsburg and J.J. McCarthy, Personalized medicine: Revolutionizing drug discovery and patient care, Trends in Biotechnology 19: (12) ((2001) ), 491–496. doi:10.1016/S0167-7799(01)01814-5. |

[72] | D. Glowacka, T. Ruotsalo, K. Konyushkova, K. Athukorala, S. Kaski and G. Jacucci, Directing exploratory search: Reinforcement learning from user interactions with keywords, in: Proceedings of the 2013 International Conference on Intelligent User Interfaces – IUI ’13, (2013) . doi:10.1145/2449396.2449413. |

[73] | Y. Goldberg and M.R. Kosorok, Q-learning with censored data, The Annals of Statistics 40: (1) ((2012) ), 529–560. doi:10.1214/12-AOS968. |

[74] | G. Gordon, S. Spaulding, J.K. Westlund, J.J. Lee, L. Plummer, M. Martinez, M. Das and C. Breazeal, Affective personalization of a social robot tutor for children’s second language skills, in: Thirtieth AAAI Conference on Artificial Intelligence, (2016) . doi:10.5555/3016387.3016461. |

[75] | E.M. Grua and M. Hoogendoorn, Exploring clustering techniques for effective reinforcement learning based personalization for health and wellbeing, in: 2018 IEEE Symposium Series on Computational Intelligence (SSCI), IEEE, (2018) , pp. 813–820. doi:10.1109/SSCI.2018.8628621. |

[76] | X. Guo, Y. Sun, Z. Yan and N. Wang, Privacy-personalization paradox in adoption of mobile health service: The mediating role of trust, in: PACIS 2012 Proceedings, (2012) , p. 27. https://aisel.aisnet.org/pacis2012/27. |

[77] | M.A. Hamburg and F.S. Collins, The path to personalized medicine, New England Journal of Medicine 363: (4) ((2010) ), 301–304. doi:10.1056/NEJMp1006304. |

[78] | A. Hans, D. Schneegaß, A.M. Schäfer and S. Udluft, Safe exploration for reinforcement learning, in: ESANN, (2008) , pp. 143–148. http://www.elen.ucl.ac.be/Proceedings/esann/esannpdf/es2008-36.pdf. |

[79] | F.M. Harper, X. Li, Y. Chen and J.A. Konstan, An economic model of user rating in an online recommender system, in: International Conference on User Modeling, Lecture Notes in Computer Science, Vol. 3538, (2005) , pp. 307–316. doi:10.1007/11527886_40. |

[80] | J. Hemminghaus and S. Kopp, Adaptive behavior generation for child–robot interaction, in: Companion of the 2018 ACM/IEEE International Conference on Human–Robot Interaction – HRI ’18, (2018) . doi:10.1145/3173386.3176916. |

[81] | T. Hester and P. Stone, Learning and using models, in: Reinforcement Learning, M. Wiering and M. Van Otterlo, eds, Adaptation, Learning, and Optimization, Vol. 12, Springer, (2012) , p. 120. doi:10.1007/978-3-642-27645-3_4. |

[82] | D.N. Hill, H. Nassif, Y. Liu, A. Iyer and S.V.N. Vishwanathan, An efficient bandit algorithm for realtime multivariate optimization, in: Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining – KDD ’17, (2017) . doi:10.1145/3097983.3098184. |

[83] | T. Hiraoka, G. Neubig, S. Sakti, T. Toda and S. Nakamura, Learning cooperative persuasive dialogue policies using framing, Speech Communication 84: ((2016) ), 83–96. doi:10.1016/j.specom.2016.09.002. |

[84] | L. Hood and M. Flores, A personal view on systems medicine and the emergence of proactive P4 medicine: Predictive, preventive, personalized and participatory, New Biotechnology 29: (6) ((2012) ), 613–624. doi:10.1016/j.nbt.2012.03.004. |

[85] | Z. Huajun, Z. Jin, W. Rui and M. Tan, Multi-objective reinforcement learning algorithm and its application in drive system, in: 2008 34th Annual Conference of IEEE Industrial Electronics, (2008) . doi:10.1109/IECON.2008.4757965. |

[86] | S. Huang and F. Lin, Designing intelligent sales-agent for online selling, in: Proceedings of the 7th International Conference on Electronic Commerce – ICEC ’05, (2005) . doi:10.1145/1089551.1089605. |

[87] | E. Ie, C. Hsu, M. Mladenov, V. Jain, S. Narvekar, J. Wang, R. Wu and C. Boutilier, RecSim: A configurable simulation platform for recommender systems, Preprint, arXiv:1909.04847, 2019. |

[88] | S. Jaradat, N. Dokoohaki, M. Matskin and E. Ferrari, Trust and privacy correlations in social networks: A deep learning framework, in: 2016 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (ASONAM), (2016) . doi:10.1109/ASONAM.2016.7752236. |

[89] | G. Jawaheer, M. Szomszor and P. Kostkova, Comparison of implicit and explicit feedback from an online music recommendation service, in: Proceedings of the 1st International Workshop on Information Heterogeneity and Fusion in Recommender Systems, (2010) , ACM, pp. 47–51. doi:10.1145/1869446.1869453. |

[90] | N. Jiang and L. Li, Doubly robust off-policy value evaluation for reinforcement learning, in: International Conference on Machine Learning, (2016) , pp. 652–661. http://proceedings.mlr.press/v48/jiang16.html. |

[91] | Z. Jin and Z. Huajun, Multi-objective reinforcement learning algorithm and its improved convergency method, in: 2011 6th IEEE Conference on Industrial Electronics and Applications, (2011) . doi:10.1109/ICIEA.2011.5976002. |

[92] | S. Junges, N. Jansen, C. Dehnert, U. Topcu and J.-P. Katoen, Safety-constrained reinforcement learning for MDPs, in: International Conference on Tools and Algorithms for the Construction and Analysis of Systems, Springer, (2016) , pp. 130–146. doi:10.1007/978-3-662-49674-9_8. |

[93] | A.A. Kardan and O.R.B. Speily, Smart lifelong learning system based on Q-learning, in: 2010 Seventh International Conference on Information Technology: New Generations, (2010) . doi:10.1109/ITNG.2010.140. |

[94] | I. Kastanis and M. Slater, Reinforcement learning utilizes proxemics, ACM Transactions on Applied Perception 9: (1) ((2012) ), 1–15. doi:10.1145/2134203.2134206. |

[95] | S. Keizer, S. Rossignol, S. Chandramohan and O. Pietquin, User simulation in the development of statistical spoken dialogue systems, in: Data-Driven Methods for Adaptive Spoken Dialogue Systems, Springer, (2012) , pp. 39–74. doi:10.1007/978-1-4614-4803-7_4. |

[96] | M.K. Khribi, M. Jemni and O. Nasraoui, Automatic recommendations for e-learning personalization based on web usage mining techniques and information retrieval, in: Eighth IEEE International Conference on Advanced Learning Technologies, ICALT’08, IEEE, (2008) , pp. 241–245. doi:10.1109/ICALT.2008.198. |

[97] | J. Kober and J. Peters, Reinforcement learning in robotics: A survey, in: Reinforcement Learning, M. Wiering and M. Van Otterlo, eds, Adaptation, Learning, and Optimization, Vol. 12, Springer, (2012) , pp. 596–597. doi:10.1007/978-3-642-27645-3_18. |

[98] | V.R. Konda and J.N. Tsitsiklis, Actor-critic algorithms, in: Advances in Neural Information Processing Systems, (2000) , pp. 1008–1014. doi:10.5555/3009657.3009799. |

[99] | I. Koukoutsidis, A learning strategy for paging in mobile environments, in: 5th European Personal Mobile Communications Conference 2003, (2003) . doi:10.1049/cp:20030322. |

[100] | R. Kozierok and P. Maes, A learning interface agent for scheduling meetings, in: Proceedings of the 1st International Conference on Intelligent User Interfaces, ACM, (1993) , pp. 81–88. doi:10.1145/169891.169908. |

[101] | E.F. Krakow, M. Hemmer, T. Wang, B. Logan, M. Arora, S. Spellman, D. Couriel, A. Alousi, J. Pidala, M. Last et al., Tools for the precision medicine era: How to develop highly personalized treatment recommendations from cohort and registry data using Q-learning, American Journal of Epidemiology 186: (2) ((2017) ), 160–172. doi:10.1093/aje/kwx027. |

[102] | T.L. Lai and H. Robbins, Asymptotically efficient adaptive allocation rules, Advances in Applied Mathematics 6: (1) ((1985) ), 4–22. doi:10.1016/0196-8858(85)90002-8. |

[103] | A.S. Lan and R.G. Baraniuk, A contextual bandits framework for personalized learning action selection, in: EDM, (2016) . http://www.educationaldatamining.org/EDM2016/proceedings/paper_18.pdf. |

[104] | G. Lee, S. Bauer, P. Faratin and J. Wroclawski, Learning user preferences for wireless services provisioning, in: Proceedings of the Third International Joint Conference on Autonomous Agents and Multiagent Systems, AAMAS 2004, (2004) , pp. 480–487. doi:10.5555/1018409.1018782. |

[105] | O. Lemon, Conversational interfaces, in: Data-Driven Methods for Adaptive Spoken Dialogue Systems, Springer, (2012) , pp. 1–4. doi:10.1007/978-1-4614-4803-7. |

[106] | K. Li and M.Q.-H. Meng, Personalizing a service robot by learning human habits from behavioral footprints, Engineering 1: (1) ((2015) ), 079. doi:10.15302/J-ENG-2015024. |

[107] | L. Li, W. Chu, J. Langford and R.E. Schapire, A contextual-bandit approach to personalized news article recommendation, in: Proceedings of the 19th International Conference on World Wide Web, ACM, (2010) , pp. 661–670. doi:10.1145/1772690.1772758. |

[108] | Z. Li, J. Kiseleva, M. de Rijke and A. Grotov, Towards learning reward functions from user interactions, in: Proceedings of the ACM SIGIR International Conference on Theory of Information Retrieval – ICTIR ’17, (2017) . doi:10.1145/3121050.3121098. |

[109] | E. Liebman and P. Stone, DJ-MC: A reinforcement-learning agent for music playlist recommendation, in: AAMAS, (2015) . doi:10.5555/2772879.2772954. |

[110] | J. Lim, H. Son, D. Lee and D. Lee, An MARL-based distributed learning scheme for capturing user preferences in a smart environment, in: 2017 IEEE International Conference on Services Computing (SCC), (2017) . doi:10.1109/SCC.2017.24. |