Administering Cognitive Tests Through Touch Screen Tablet Devices: Potential Issues

Abstract

Mobile technologies, such as tablet devices, open up new possibilities for health-related diagnosis, monitoring, and intervention for older adults and healthcare practitioners. Current evaluations of cognitive integrity typically occur within clinical settings, such as memory clinics, using pen-and-paper or computer-based tests. In the present study, we investigate, from an older adult perspective, the challenges associated with transferring such tests to touch-based mobile technology platforms. Problems may include individual variability in technical familiarity and acceptance, as well as various factors influencing the usability, acceptability, and response characteristics, and thus the validity per se, of a given test. For the results of mobile technology-based tests of reaction time to be valid and related to disease status rather than extraneous variables, it is imperative that the whole test process be investigated to identify potential effects before the test is fully developed. Researchers have emphasized the importance of including the ‘user’ in the evaluation of such devices; we therefore performed a focus group-based qualitative assessment of the processes involved in administering and performing a tablet-based version of a typical test of attention and information processing speed (a multi-item localization task) with younger and older adults. We report that although the test was regarded positively, indicating that using a tablet to deliver such tests is feasible, it is important for developers to consider factors surrounding user expectations, performance feedback, and physical response requirements, and to use this information to inform further research into such applications.

INTRODUCTION

The past five years have seen rapid growth in the number of people over the age of 65 using mobile devices. Almost one in five older adults in the United States owns a smart phone, with increased usage driven by factors such as the advanced capabilities of smart devices, the value placed on being able to communicate with relatives, and the perceived usability of touch screen technology [1, 2]. This trend opens new avenues for adjuncts to health-related diagnosis, monitoring, and intervention, and thus for delivering healthcare to a population that typically finds such services harder to access. This is particularly relevant for older adults, who are at increasing risk of developing dementia and associated disorders, often accompanied by reduced mobility and a reduced ability to access healthcare services. As older adults engage more with digital devices such as tablets and smart phones, mass healthcare monitoring in older adulthood becomes a real possibility. Furthermore, healthcare solutions that scale economically to large numbers of users are increasingly in demand.

Mobile healthcare technology (mHealth) has been applied to many different healthcare challenges, for example to help individuals living with chronic conditions such as diabetes [3]. Owing to the ‘connected’ nature of these devices and the growing availability of broadband internet, ‘information to support the user’ now extends beyond traditional medical sources, providing a platform for community-based solutions in which users share experiences and advice on managing a condition [4]. More advanced mHealth concepts include using on-body biometric sensors to monitor people’s health and to communicate these readings to their mobile device over wireless body area networks [5, 6]. Data gathered and disseminated through these means can augment diagnosis and monitoring processes [7]. In addition to its use in physical conditions [8], mHealth may also be applied to the management of cognitive health [9]. However, although research examining older adults’ use of various health-related apps is helping to identify the factors that affect such use [2, 8–10], there is a paucity of research investigating the use of touch screen tablets to assess information or cognitive processing in older adults, especially individuals living with cognitive impairment and dementia. Although adapting cognitive tests from PCs to touch screen technology sounds simple in theory, this platform can introduce new biases or effects related to the technology per se or to the technology/human interface. Such biases may, for example, reduce the accuracy, validity, sensitivity, and specificity of the test and the robustness and clinical relevance of its results, whether it is used at home or in a clinic and whether it is self-administered or given by another person.
An individual’s test score must reflect the integrity of the function being assessed and must not be contaminated by extraneous factors, such as physical (e.g., stimulus-related) effects arising from the test itself, the procedure, the platform from which it is administered, the test environment, or any administrator/patient interaction [11, 12].

Such factors are particularly pertinent when testing an individual’s reaction speed and its variability. Reaction time (RT) speed and its intra-individual variability (IIVRT) are regularly employed, in both research and clinical arenas, as behavioral indicators of information processing speed and of the integrity of cognitive and attention-related function in older adulthood; disproportionate slowing and raised variability are associated with mild cognitive impairment, Alzheimer’s disease, and vascular dementia [13–16]. Because RT speed and variability appear to index, at least in part, the integrity of white and grey matter [17] in older adulthood and in neurodegenerative dementias such as Alzheimer’s disease, such measures may be clinically useful.
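RT speed and IIVRT can be summarized per participant in several ways. The sketch below is one minimal version, assuming mean RT as the speed measure and, for variability, the intra-individual standard deviation (ISD) and the coefficient of variation (ICV = ISD / mean RT). The function name `rt_summary` and the example values are hypothetical illustrations, not the metrics or data of this study; published IIVRT work also differs in how RTs are trimmed or detrended before such statistics are computed.

```python
from statistics import mean, stdev


def rt_summary(rts_ms: list[float]) -> dict[str, float]:
    """Summarize one participant's RTs (hypothetical helper, illustration only).

    Assumes IIVRT is indexed by the intra-individual SD (ISD) and the
    coefficient of variation (ICV = ISD / mean RT); other indices exist.
    """
    if len(rts_ms) < 2:
        raise ValueError("need at least two RTs to estimate variability")
    m = mean(rts_ms)
    isd = stdev(rts_ms)  # sample SD across this participant's trials
    return {"mean_rt": m, "isd": isd, "icv": isd / m}


if __name__ == "__main__":
    # Hypothetical RTs in milliseconds; not data from this study.
    print(rt_summary([500.0, 600.0, 700.0]))
```

Dividing by the mean is a design choice worth noting for older or impaired groups: a participant who is uniformly slower will usually show a larger raw SD, and the ICV helps separate that general slowing from raised inconsistency.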

Arguably, RT and IIVRT tests appear particularly suited to delivery via a touch screen tablet, as tablets tend to be cheaper and simpler to use than laptops or desktop computers and can have multiple advantages over computers for testing information processing in older adults [9, 18, 19]. However, it is also increasingly clear that factors unrelated to brain structure and function or to a disease process can influence RT and IIVRT, and that it is vital to identify, investigate, and ameliorate such effects on the touch screen tablet platform in order to ensure test validity.

Evidence already reveals a number of challenges to be aware of when older adults use digital technologies, including physical issues such as declining manual dexterity and eyesight, decreasing cognitive capabilities, frustration, the need for specific training, age, gender, dry finger skin, and age-related changes in cognitive motor skills [2, 18–22]. All of these factors are likely to affect the performance of RT and IIVRT tests on a touch screen platform, and thus their clinical validity, usefulness, and robustness. Furthermore, RT research has revealed many participant- and methodology-related factors capable of significantly affecting RT study outcomes, including the test item, the environment, response requirements, the participant and tester, feedback, concurrent disease, medication, abnormal visual and attention-related processes, caffeine, depression, personality, and gender [11–13, 23–27].

Factors specific to the use of a touch screen tablet may also affect performance on such tasks. As a first step in investigating these factors, we took a novel approach using a simple, focus group-based paradigm. We [28] examined the experience of a group of younger and older adults while they performed the Multi-Item Localization (MILO) task, a touch screen tablet-based RT test typical of those that contribute to the clinical determination of cognitive integrity.

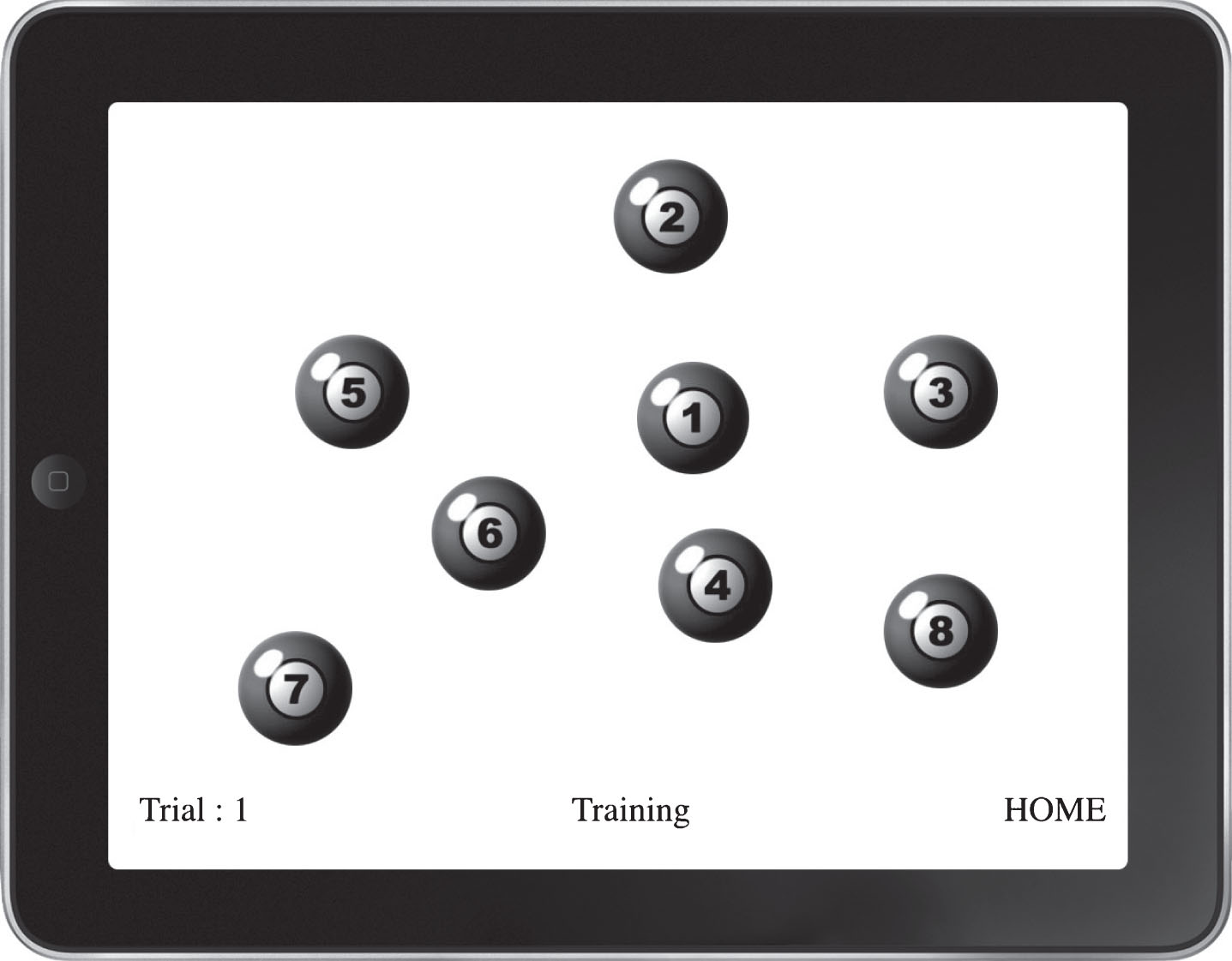

MILO has been used in previous objective research studies to explore the speed and accuracy with which participants can perform sequences of actions [29, 30]. Like other well-established paper-and-pencil tests (e.g., the Trail Making Test [31]) and computer-based cancellation tests [32], it requires a sequence of items to be identified in a specific order. Figure 1 presents a typical trial from the tablet implementation of MILO used in the current study [33]. The participant’s task was to touch each virtual pool ball in sequence, from one to eight.

The general advantages of computer-based presentation over paper-and-pencil tasks include recording RTs for each item, rather than simply overall completion time (e.g., [32]), and the ability to easily explore spatial patterns of search organization (e.g., [34]). In addition, the MILO task makes it easy to manipulate the sequence type (e.g., letters, digits, or both) and sequence behavior (e.g., items vanishing or remaining, or sequence positions remaining fixed or shuffling between responses) in order to explore the temporal context of visual search [29]. Such a task therefore represents the type that might be considered for clinical use, providing information about RT speed and variability, attention, and other aspects of higher-level cognitive processing.
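The contrast between per-item RTs and overall completion time can be made concrete. This is a minimal sketch, assuming each touch is timestamped in milliseconds, that the first RT is measured from display onset, and that each later RT is measured from the previous correct touch; this is a common convention but not necessarily the one MILO uses. The names `per_item_rts` and `completion_time` are hypothetical.

```python
def per_item_rts(onset_ms: float, touch_times_ms: list[float]) -> list[float]:
    """Interval preceding each correct touch, in milliseconds.

    Assumes the first interval starts at display onset and each later
    interval starts at the previous touch (hypothetical convention).
    """
    rts = []
    previous = onset_ms
    for t in touch_times_ms:
        if t < previous:
            raise ValueError("touch timestamps must be in chronological order")
        rts.append(t - previous)
        previous = t
    return rts


def completion_time(onset_ms: float, touch_times_ms: list[float]) -> float:
    """Overall completion time: essentially all a timed paper test yields."""
    if not touch_times_ms:
        raise ValueError("no touches recorded")
    return touch_times_ms[-1] - onset_ms


if __name__ == "__main__":
    # Hypothetical timestamps for a 3-item sequence, for illustration only.
    touches = [900.0, 1500.0, 2400.0]
    print(per_item_rts(0.0, touches))     # [900.0, 600.0, 900.0]
    print(completion_time(0.0, touches))  # 2400.0
```

The per-item RTs sum to the completion time, but only the per-item values show where in the sequence a participant slowed down, which is the extra information computer-based presentation provides.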

MATERIALS AND METHODS

For the purpose of the current study, we used a fixed sequence of the digits one to eight and configured the display so that items vanished when touched. Although this MILO configuration was not originally designed for older adults, we chose the task because its display layout and physical response demands were appropriate for this population [35–37]. Older adults face a number of challenges when using digital technologies, including declining manual dexterity, eyesight, and cognitive capabilities, all of which can hinder interaction with mobile platforms that are not adapted to their needs [18, 19, 22]. In the MILO task, target size and spacing were well within the limits suggested in this literature, and responses could be self-paced. More specifically, when the iPad was placed on a table 50 cm in front of participants, each 1.9 cm item subtended approximately 2° of visual angle, with gaps between items ranging from 0.8° to 8°. To complete a trial successfully, participants had to touch each object following the numeric sequence one to eight as quickly as possible; however, there were no specific time limits, so participants could calibrate their responses to take any motor limitations into account.
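The visual angle figures can be checked with the standard formula θ = 2·arctan(s / 2d) for an object of size s viewed from distance d. This minimal sketch assumes that the 50 cm table distance approximates the eye-to-screen distance (in practice it varies with posture). Under that assumption, a 1.9 cm item subtends about 2.18°, consistent with the ‘approximately 2°’ reported, and the 0.8° to 8° gaps correspond to roughly 0.7 cm to 7.0 cm on screen. The function names are hypothetical.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (degrees) subtended by an object of size_cm at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_for_angle_cm(angle_deg: float, distance_cm: float) -> float:
    """Inverse: on-screen extent (cm) needed to subtend angle_deg at distance_cm."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


if __name__ == "__main__":
    # Assumes a 50 cm viewing distance, taken from the table placement.
    print(round(visual_angle_deg(1.9, 50), 2))   # item size: about 2.18 degrees
    print(round(size_for_angle_cm(0.8, 50), 2))  # smallest gap: about 0.7 cm
    print(round(size_for_angle_cm(8.0, 50), 2))  # largest gap: about 6.99 cm
```

The inverse function is the more useful direction when designing a layout: it converts a minimum visual angle recommended for older users into a physical size on a particular screen.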

When an item was touched, it vanished from the screen, so that set size and search difficulty decreased with each response. Touching an item out of sequence (i.e., a mistake) terminated the trial and produced visual feedback in the form of a schematic sad face. There was a two-second inter-trial interval, and no feedback on speed or accuracy was provided for correct trials. Each participant completed 10 training trials and up to 10 experimental trials. At the start of each trial, the positions of all target items were randomized within the constraints of a virtual grid programmed to ensure that items did not overlap. Because our goal was to explore factors related to presenting an RT task in a touch screen tablet format per se, and because participants were allowed to comment on any aspect of the task while doing it, we did not record actual RT performance. Instead, as detailed below, we used a focus group design to make a qualitative assessment of individuals’ experiences and device usability.
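The trial rules described above can be expressed as a small state machine. The sketch below is a minimal illustration under stated assumptions: touched items vanish, an out-of-sequence touch ends the trial with sad-face feedback, a correct trial ends without feedback, and items are placed by sampling distinct cells of a virtual grid so that no two items overlap. The grid dimensions, the function `random_layout`, the class `Trial`, and its outcome labels are all hypothetical; the actual MILO implementation [33] may differ.

```python
import random

# Hypothetical virtual grid; the grid used in the study is not specified.
GRID_COLS, GRID_ROWS = 6, 4


def random_layout(n_items: int, rng: random.Random) -> dict[int, tuple[int, int]]:
    """Place items 1..n_items in distinct grid cells, so no two items overlap."""
    cells = [(col, row) for col in range(GRID_COLS) for row in range(GRID_ROWS)]
    chosen = rng.sample(cells, n_items)  # sampling without replacement
    return {item: cell for item, cell in zip(range(1, n_items + 1), chosen)}


class Trial:
    """Hypothetical MILO-style trial: touch items in order 1..n_items."""

    def __init__(self, n_items: int = 8) -> None:
        self.remaining = set(range(1, n_items + 1))  # items still on screen
        self.next_target = 1
        self.outcome = "in_progress"

    def touch(self, item: int) -> str:
        """Process one touch and return the trial outcome so far."""
        if self.outcome != "in_progress":
            return self.outcome  # the trial has already ended; ignore touches
        if item != self.next_target:
            self.outcome = "error_sad_face"  # out-of-sequence touch ends trial
            return self.outcome
        self.remaining.discard(item)  # touched item vanishes; set size shrinks
        self.next_target += 1
        if not self.remaining:
            self.outcome = "correct"  # no speed or accuracy feedback is shown
        return self.outcome


if __name__ == "__main__":
    print(random_layout(8, random.Random(1)))
    trial = Trial()
    print([trial.touch(i) for i in (1, 2, 4)])
    # ['in_progress', 'in_progress', 'error_sad_face']
```

Under these rules, a trial that ends in an error yields only a partial sequence of touches, so how errors are handled and signaled directly shapes what RT data a tablet version of the test can collect.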

In an interdisciplinary approach drawing on the Human Computer Interaction (HCI) and User Experience (UX) research traditions, we adopted a focus group design to determine, from the individuals themselves, potential issues relating to the use of mobile technology for cognitive testing that may influence RT results. Because MILO and similar computer-based tests of attention and cognition commonly provide on-screen feedback, such as a visual or auditory warning indicating an incorrect response, we also investigated the potential influence of this real-time feedback on task acceptability and performance, so as to provide information relevant to real-life test scenarios. Furthermore, the researcher or clinician administering the test typically sits close to the person taking it; anecdotally, this has been reported to be off-putting in research situations, although it may also be reassuring for some. We therefore also examined this factor with respect to task acceptability and performance.

We, Jenkins et al. [28], recruited eleven younger adults (18–30 years) and twelve older adults (65+ years) for a one-and-a-half-hour focus group. The younger adults were recruited via University block emails, electronic notices, and word of mouth. The older adults were recruited via the Older People and Ageing Research and Development Network (OPAN) and the local 50+ Networks. Exclusion criteria were poor general health, visual or dexterity limitations, and participation in similar research studies. Our research method is discussed in full in Jenkins et al. [28], but, to reiterate, this qualitative technique has limitations, which we acknowledged and addressed to ensure, as far as possible, that they did not introduce bias. For instance, the knowledge, skills, and experience of the researcher leading the focus group can bias the information generated by participants. To reduce this risk, two members of the research team were present, one leading and the other observing and taking notes. A semi-structured schedule was followed, while still encouraging participants to expand on the areas discussed. Qualitative analysis is rarely employed in computer science, so this approach offers a novel and rich perspective that extends what is already known in the research community.

The focus group was split into three parts. The first part centered on discussions of participants’ understanding of attention, the importance of attention, and changes in attention [28]. In the second part, participants performed the tablet-based MILO task in a separate room, with another member of the research team sitting beside them. In the third part, all participants reconvened as a focus group to discuss their experience of taking the tablet-based test. This paper focuses specifically on participants’ experience of using the tablet in the context of an RT test; during the debriefing session, participants were made aware that their actual RTs had not been examined.

The focus groups were audio-recorded, and a member of the research team took notes. A semi-structured, predetermined framework of open-ended questions was used to ensure that all aspects relating to the topic area were explored (Table 1). The focus group recordings were transcribed verbatim, and all identifiable information was either removed or consistently anonymized. A realist-driven, inductive, bottom-up thematic analysis [38] was applied to the focus group data. Two members of the research team read and re-read the transcripts, making initial comments and codes; this process was repeated twice more until individual codes were identified. These codes were then grouped into three major themes that emerged across both the younger and older participant groups, namely ‘views of test experience’, ‘testing situation and materials’, and ‘test performance’.

RESULTS

A number of themes and sub-themes were identified; these highlight categories rather than prevalence. The three major themes that emerged across both younger and older participant groups were ‘views of test experience’, ‘testing situation and materials’, and ‘test performance’. Below we describe each of these themes and contrast the attitudes of the younger and older groups, before presenting an amalgamated discussion of the results.

Views of test experience

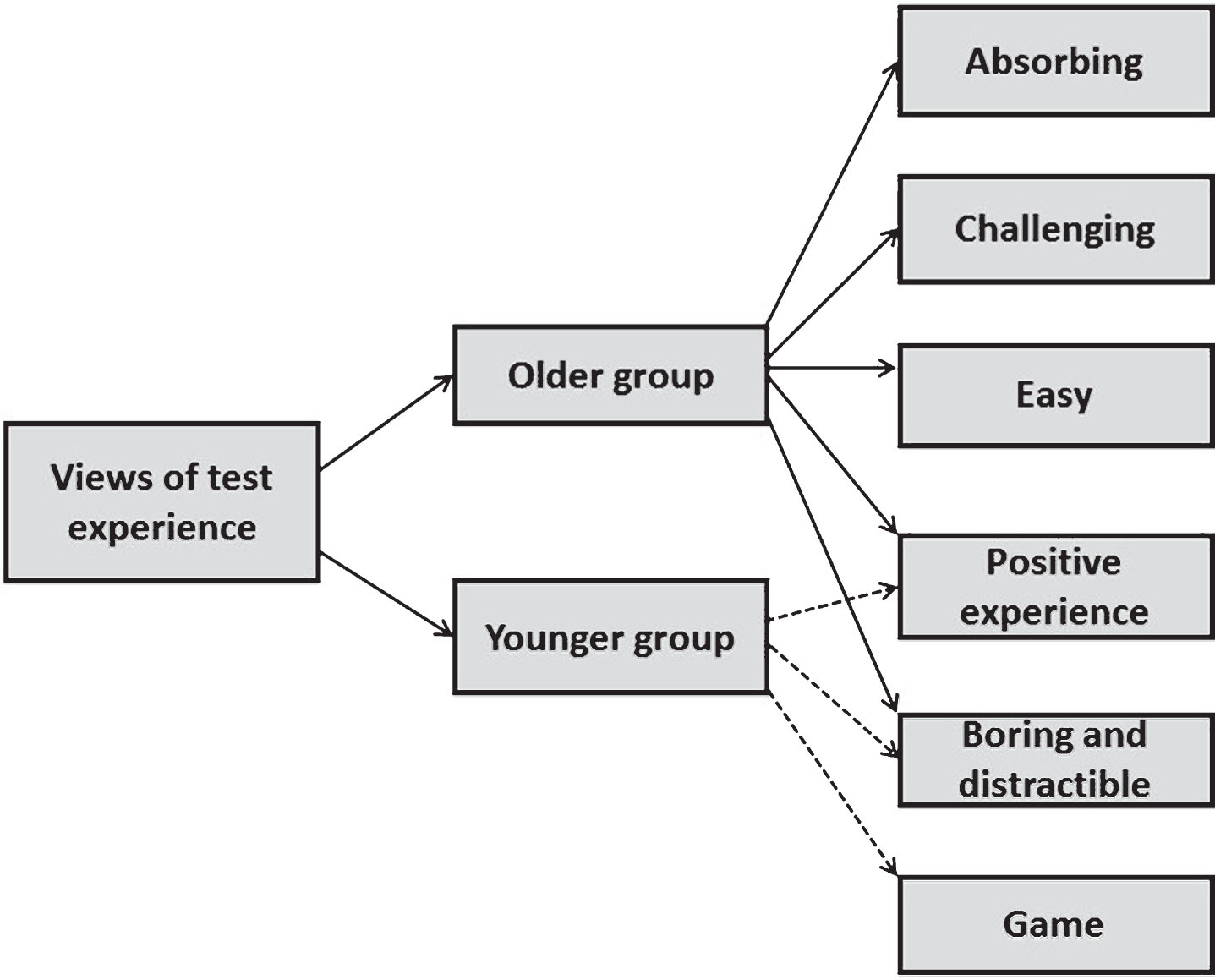

This theme represents the views that both the older and younger participants held of the iPad-based, attention-related RT task experience. Six sub-themes were identified: three unique to the older participants, one unique to the younger participants, and two to which both age groups contributed (Fig. 2).

The first sub-theme, ‘absorbing’, represents the view of some older participants that they were absorbed in the iPad test experience. For instance:

“[W]: I found it quite absorbing myself because you had to concentrate on what was in front of you and you have to pin point what the next number was. I have to say it occupied all my thoughts I was just trying to do it as quickly as I could, and as accurately as I could. I was totally absorbed by those 1–8 numbers. Which is strange for me because my mind does tend to wander and it didn’t wander on that occasion”.

The second sub-theme reflects some older participants’ view that the test was a ‘challenge’, and the third sub-theme the competing view that it was ‘easy’. For instance:

Challenge: “[J]: I found it absolutely entertaining. I found it quite a challenge [mumbling]. I was sort of trying to do it quite quickly, I failed a couple of times but I think that was these [pointing out his fingers]”.

Easy: “[RA]: I thought it was easier than I thought it would be. I thought ‘I have never used an iPad before!’ And sometimes when I go onto the computer I press something and it goes off, I have done that a few times actually. The iPad I made a few mistakes”

The sub-theme ‘positive experience’ was a shared view of both the older and younger groups. For instance:

Positive experience (older): “[P]: it was quite enjoyable. [W]: and I think the more you did it the more you wanted to do it somehow”.

Positive experience (younger): “[R]: fab, thank you. Did you enjoy doing the test? [A]: it makes me want one [iPad]. [P]: it was interesting but I wouldn’t use the word ‘enjoy’ [laughter] I was just counting dots but it was a little more engaging that some can be. [S]: it made me wonder if they were dots or pool balls [laughter] I think it was nice that it changed on each trial. Like in a paper pencil version of a trail making there is only one set way of doing it and I like having the variation that it is new every time you do it, maybe it is more accurate that way”.

The sub-theme ‘boring and distractible’ is also a shared view in opposition to the test being a positive experience. For instance:

Boring and distractible (older): “[R]: so how did you find the test? [G]: a bit boring I found it, sorry. Repetitively boring there was obviously a sequence for that. I said that to [researcher] I said ‘is this um could you memorise these if you had a good memory and numerative memory?’ The problem is going too fast and then thinking something more interesting may come up next time. It was the same numbers just in a different location. Yeah I found it boring towards the end. [R]: yes and that is perfectly fine, I want you to be as honest as you can. Thank you [G]”.

Boring and distractible (younger): “[R]: ok, so would you say then something like that could be used on a regular basis or would you say no? [L]: I think it was boring”.

The final sub-theme is unique to the younger group and represents the view that the test was like a ‘game’. For instance:

“[B]: it was like many games that you can get on the iPad already, like I have a few already that are similar. [R]: are there any that you think are similar to it? [S]: I wouldn’t know. [A]: not sure. [P]: when she was initially explaining it to me it did kind of remind me almost of like a word search type thing because you are obviously looking for like a 1 and then linking it. [B]: I have quite a few games where you have to link patterns between things and there is ummm well I have about 5 on here and there are millions available as well like [famous game]. [R]: yeah it is a similar thing isn’t it. [S]: see I was thinking well what the purpose of the game is, what it is going to be used as. For example, if it is something to do with cognitive training then I wondered what well if it would be of any use to have like a kind of positive feedback mechanism put in because I made a mistake and there was a little sad face and that was feedback too but you know to get people to play it maybe more regularly maybe it would have like increasing difficulty and a score. That would make them go back to it. I don’t know if I would play it regularly just for the sake of doing it as it is now because it is just like tapping the numbers and I want to know that I am doing good. [A]: yeah like in games you want to improve and beat your score. [S]: yeah like progression or how well I am doing. [B]: or different levels, like the next level could have like 10 numbers”.

Testing situation and materials

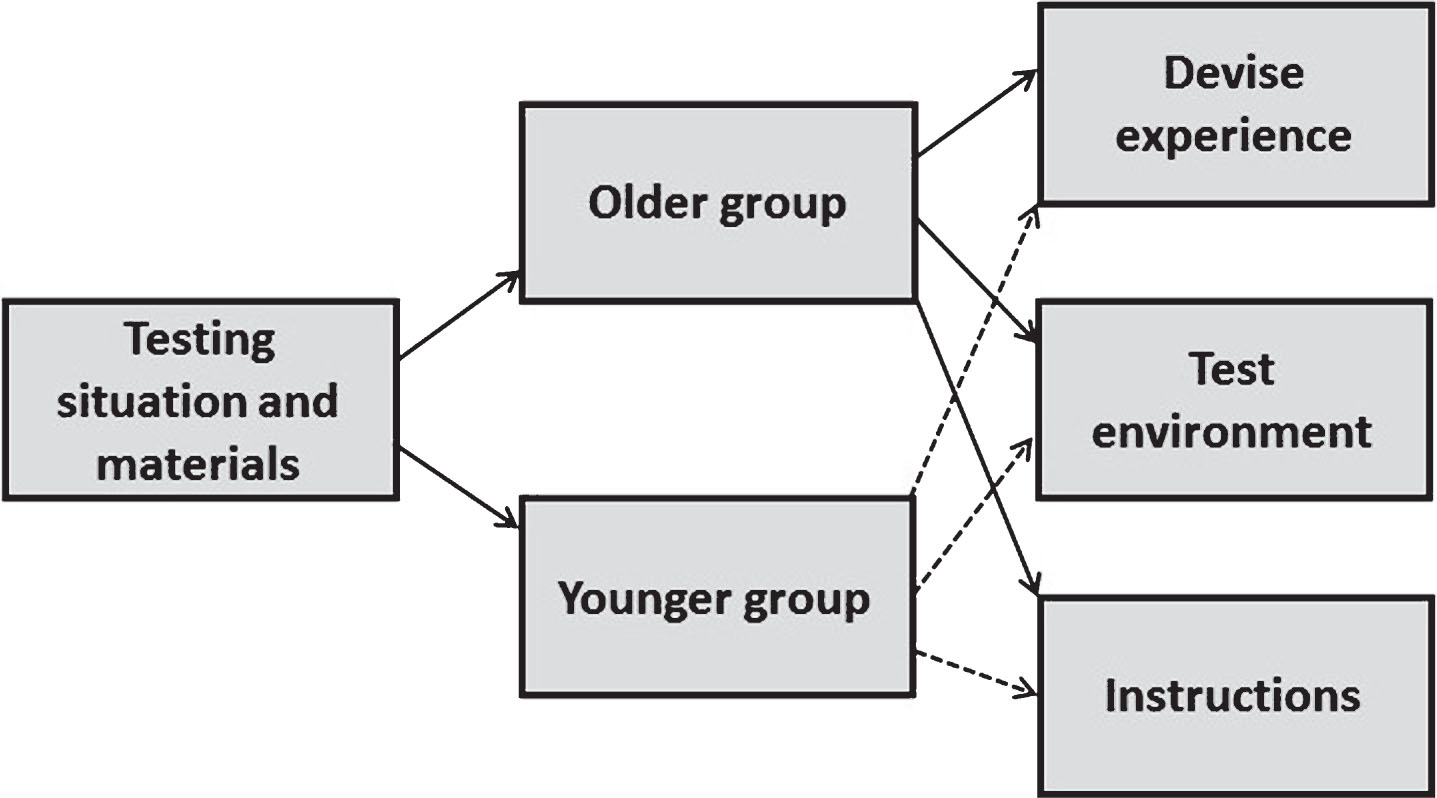

This second theme has three sub-themes developed from the findings of both the older and younger groups (Fig. 3). The first sub-theme, ‘device experience’, reflects both groups’ views of their experience of using the iPad. For instance:

Device experience (older): “[R]: yes but she won’t be giving scores, what’s more important to us is your feedback from the tests. Did you find the iPad easy to use? [A]: yeah. [G]: well I did and I don’t see very well but it was fine. [J]: I made two mistakes the same as you; as soon as I slowed down a bit I was more accurate. And these would slip down all the time [glasses], but it was ok once I pushed them back up. [R]: yeah ok so that was a challenge you found with your glasses. [J]: yeah. [G]: well if you have a problem with your sight it affects your mobility doesn’t it. [A]: yes I have to agree with you and varifocals; you have got to look over them. [G]: yes, I have to use my reading glasses so that I could see properly.”

Also: “[R]: does anyone have any experience of using a device like an iPad? [A]: yes I do. [Others]: no. [R]: do you think then that having that device and using it previously made an impact on it? [A]: yes I think so. I think when I first got my iPad I was very tentative. But now I sit there with my iPad and go ‘large then small’ [actions], that was news to me at first, I never knew you could do that [laughter]. [M]: so do you have any idea whether or not someone who either type or play the piano are quicker at that than people who are not? [R2]: at the moment we don’t for that test but from what we know of other things we wouldn’t be surprised if they were, absolutely. [A]: I think you’re right though, it’s like kids on mobile phones, they are so fast. Like when I text...well I am faster than I used to be but not as fast as they are. [J]: when using a keyboard I do try and type properly. My granddaughter goes so fast when typing but then has to go back to attend to her mistakes, where as I go slower but have less mistakes”.

Device experience (younger): “[R]: ok, thank you. How about the positioning of the iPad? [L]: fine. [P]: I moved it. [R]: where did you move it to? [P]: I just moved it closer. The angle was a bit well I didn’t move the angle. For me it would have been better flat but maybe because it was quite far into the table. [RB]: it would have been helpful to have one of those holders, what are they called? [P]: like a copy holder? [RB]: yeah, just to have it in front of you, I wonder what that would have been like. [P]: oh I know I like pushing down instead of forwards. [R]: yeah it’s so interesting that the position of it, where it is, the lighting, you have got to think of all these things when it comes to testing situations”

The second sub-theme, ‘test environment’, brings together views on the testing environment shared by both age groups. For instance:

Test environment (older group): “[N]: I was very conscious that [researcher] was watching me. [J]: yes and me. [N]: so I wasn’t quite relaxed doing it from that point of view. I was still conscious that someone is watching me doing this and you think ‘what are they thinking? Are they taking a note on how I am approaching this?’ so I was very conscious of that as well. [S]: yes that crossed my mind as well. [N]: I think it might have been slightly different if she had said ‘right just go in and do this. This is what you have got to do, sit down and do it and I am going out of the room’ I think I would have approached it slightly differently mentally”.

Test environment (younger group): “[L]: yeah so maybe that unhappy face could spur someone on to do better and faster but then other people will see that unhappy face and think ‘oh no!’. [P]: it put me off completely. [RB]: same [laughter]. I knew [researcher] was sat next to me and I didn’t want her to see the faces. [R]: do you think it would have made a difference if [researcher] was not in the room? [RB]: yeah, I didn’t want her to see it so I kept well at that angle she couldn’t have. [B]: it does show that the unhappy face does mean more”.

The final sub-theme relating to testing situation and materials concerns the ‘instructions’ given to participants for completing the iPad test. For instance:

Instructions (older group): “[M]: yes I am with him, I found it quite interesting and I am not a trained typist but I do use all my fingers on the keyboard and so I had all my right hand out. And at one point I thought ahhh maybe I could use my left hand too but I didn’t because I thought it may get confusing. I learned to look at the pattern before I started, but I wondered if you ever considered having one of these clever gadgets that they can put on your glasses or on your head or something now so that they can see where you are looking. Did you know that they are doing these things in supermarkets now to see where you look on the shelves? I don’t think the object of them doing it is a very good object but the technology is interesting, I didn’t know because she was sitting beside me I couldn’t tell if she could see where I was looking. But I thought that might be interesting because her introduction about looking for someone in the crowd, you know your first reaction is look at the whole thing first and before doing the numbers”.

Instructions (younger group): “[S]: yeah I was going to say that because initially it was not right in front of me it was over there [pointing further away] and I felt I needed to pull it in front of me and I think maybe if you have it on your lap it would be different. So I don’t know, again in terms of the instructions of the set way of doing the task maybe there has to be a certain distance from the screen or uh I don’t know, something that would make sure it is standardised for everyone”.

Test performance

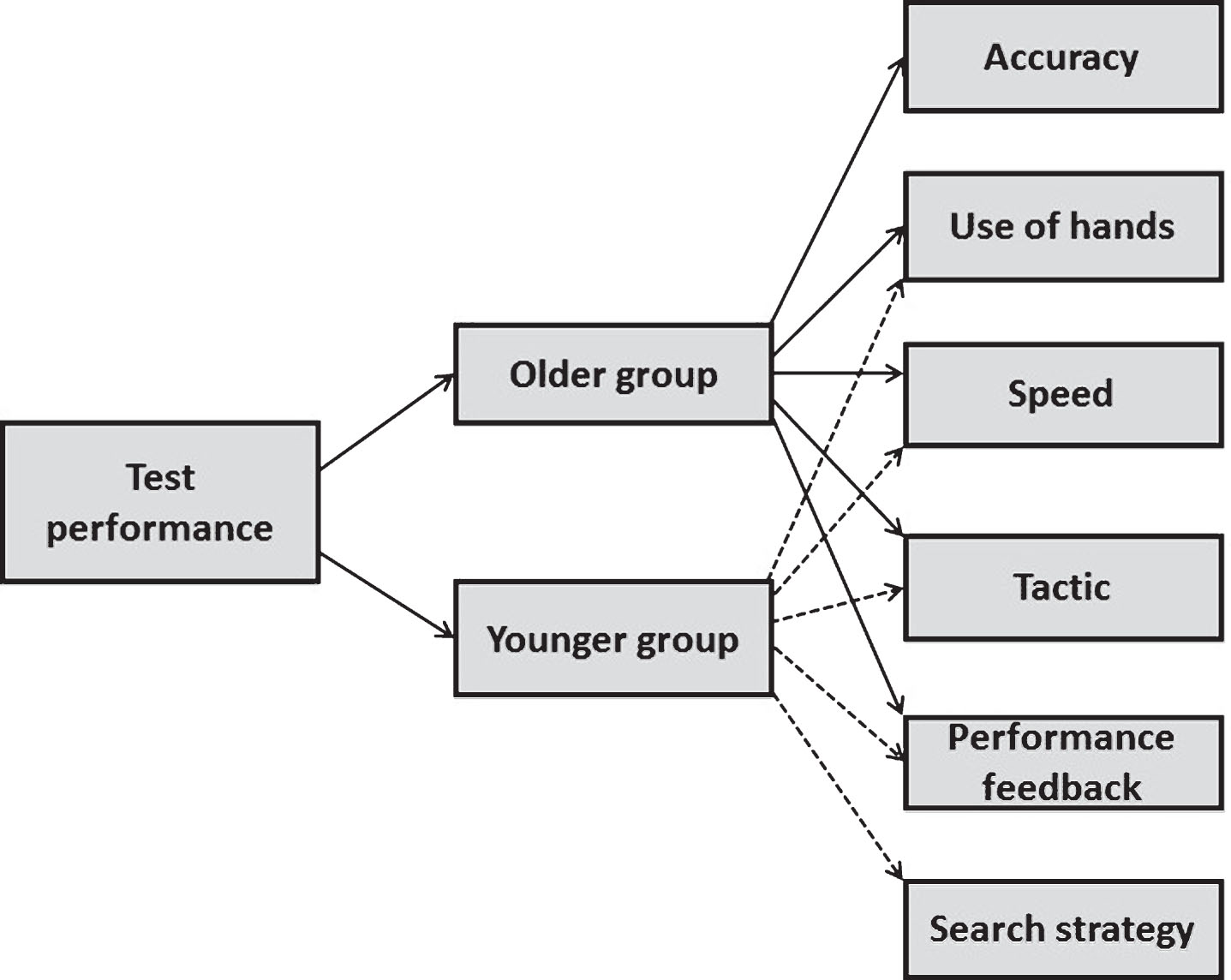

This theme has six sub-themes, four of which are shared between the two age groups, with one unique to each (Fig. 4). The theme relates to how participants felt they performed on the iPad test. The first sub-theme, ‘accuracy’, is based only on the older participants’ discussion. For instance:

“[R]: so what did you think? Was it due to more accuracy or speed? [N]: a combination of both I think. [P]: yeah it is no good going fast if you’re going to get it all wrong is there. [J]: I was disappointed with the number of mistakes I did make, obviously trying to go too fast. [P]: I made one but I think it was because I didn’t press hard enough on the screen. The face came up [showing sad face]”.

The second sub-theme, ‘use of hands’, concerns how participants used their hands when operating the iPad. For instance:

Use of hands (older group): “[A]: the only problem I had with the touch screen is my nails. I have this problem at home, and that’s why I use a [brand name] pen because I find you have to develop a certain technique of touching. You can’t just go like that [action] because your nail would touch it and that doesn’t work so you have to slide off rather than...and I found that at home. But as I said I do find it easier to just use a [brand name] pen”.

Also: “[J]: I found it absolutely entertaining. I found it quite a challenge [mumbling]. I was sort of trying to do it quite quickly, I failed a couple of times but I think that was these [pointing out his wide fingers]”.

Use of hands (younger group): “[R]: ok, that’s interesting. How did you use it? [RB]: oh just the one for me. [C]: two fingers. [L]: just one finger. [B]: one hand. [C]: one hand. [P]: that was one of the first questions I asked was ‘can I use both hands?’ [R]: did you just use the one finger? [RB]: yeah my index finger. [L]: yeah me too”.

The third sub-theme, ‘speed’, is also shared by the older and younger groups. It reflects how fast participants thought they were supposed to go, or actually went, when using the iPad. For instance:

Speed (older): “[G]: we know ultimately what the tests are about and that’s cognitive impairment. [A]: or is it speed. [G]: I don’t think speed matters; it’s a balance between speed and accuracy. [M]: I think accuracy. [R]: there are lots of factors, there’s speed and accuracy. [R]: so how do you feel (J)? [J]: I would say about 85%, I think it was ok”.

Speed (younger): “[R]: so did you find the test enjoyable? [L]: in the beginning. [C]: yeah with my competitive edge to it. [L]: yeah I was a bit competitive, I wish we was being timed and we could find out how we done. I get really competitive, I was thinking ‘I need to do this the quickest out of everybody’, I was going for it. [C]: it’s not all about rewards because a reward is obviously a motivator to do well but for me thinking that someone could see a bad kind of response, that would make me want to do even better because I would like ‘I don’t want to be the slow one’ [P]: I work better with positive reinforcement so something to say ‘that you’re doing well’ because if you show well you performed in the worst quartile well I would be like oh I cannot be bothered now, but that’s just me I don’t work very well with punishment. [L]: I am the same. [RB]: yeah like it kind of deflates you a little bit so maybe performance goes down with that as well maybe. [L]: yeah so maybe that unhappy face could spur someone on to do better and faster but then other people will see that unhappy face and think ‘oh no!’. [P]: it put me off completely. [RB]: same [laughter]. I knew [researcher] was sat next to me and I didn’t want her to see the faces. [R]: do you think it would have made a difference if [researcher] was not in the room? [RB]: yeah, I didn’t want her to see it so I kept well at that angle she couldn’t have. [B]: it does show that the unhappy face does mean more”.

The sub-theme ‘tactic’ refers to the tactics that both the older and younger participants used when completing the iPad test. For instance:

Tactic (older): “[JC]: I used the one finger all the time, I think I intuitively was picking out the first four numbers and then the other four. Also, I am very competitive, I was trying to go faster and faster so not much focus on being accurate so I had two errors.”

Tactic (younger): “[C]: yeah and also like how I went about it, like at the start I was just like looking 1, 2, 3, 4, as opposed to once I had an unhappy face it changed how I did it, like I was looking at groups so I would find 1, 2, then 3 and 4, then 5 and 6, and I found that I was quicker because it would take me an extra second to look but I tap quicker then because I already knew where the other one was. So I changed how I attended to it. [L]: changed your strategy. [C]: yeah”.

The final shared sub-theme is ‘performance feedback’, which relates to how much feedback participants would ideally have liked to receive after performing the iPad test. For instance:

Performance feedback (older): “[N]: I have to say I would love to know how well I did. I would like to have some feedback on it. I think most of us who have done a test would like that. And what I assume is looking at how many mistakes someone makes is information I would like to have in feedback you know”.

Performance feedback (younger): “[R]: fab ok, how did you find it? [B]: same here yeah and then I got an unhappy face then all of a sudden I was like “wow slow down”. [RB]: I didn’t get an unhappy face. [B]: I got two. [L]: I got two. [C]: I got two. [L]: but I think my finger accidently went too far next to the other ball, basically I shouldn’t have had the second unhappy face. [P]: do you want to appeal the judgement? [Laughter].: I do yes [laughter]. [P]: see you have got no excuse, I have, I have to hit the keys with my podgy fingers [laughter]. [C]: yeah it was like 6 and 8 for me that looked similar, that was the two that I noticed I got wrong. I went for an 8 instead of a 6 because they look so similar, but I knew straight away that I got it wrong”.

The final sub-theme ‘search strategy’ is unique to the younger group. It reflects the strategies employed by some of the younger participants to perform the iPad test. For instance:

“[P]: I suppose it depends on how you attend to the whole task whether you’re a linear searcher or whether you look at the holistic picture and I could generally sit back and look at the whole thing. And at that point you’re more susceptible to different shapes because I could just sit there with both hands and then if they were split between left and right I found it easier to go from one side of the screen to the other using two hands rather than if they were grouped around one area”.

DISCUSSION

To reiterate, the main aim of this study was to provide a focus group-based qualitative evaluation of administering a cognitive test on a mobile device, and to gauge its acceptability to both younger and older adults, particularly in relation to participants’ familiarity with tablet technology. The potential influence of real-time feedback and researcher presence on task performance was also examined.

Engagement level

Our results suggest that the mobile device-based cognitive test was both engaging and enjoyable for some older and younger adults, but not for many others. For instance, some older adults deemed it a ‘positive experience’; some said “[P]: it was quite enjoyable. [W]: and I think the more you did it the more you wanted to do it somehow”. However, other older adults found it ‘boring and distractible’; one said “[R]: so how did you find the test? [G]: a bit boring I found it, sorry. Repetitively boring there was obviously a sequence for that. I said that to [researcher] I said ‘is this um could you memorise these if you had a good memory and numerative memory?’ The problem is going too fast and then thinking something more interesting may come up next time. It was the same numbers just in a different location. Yeah I found it boring towards the end. [R]: yes and that is perfectly fine, I want you to be as honest as you can. Thank you [G]”. The younger participants also described the test experience as positive, for instance, “[R]: fab, thank you. Did you enjoy doing the test? [A]: it makes me want one [iPad]. [P]: it was interesting but I wouldn’t use the word ‘enjoy’ [laughter] I was just counting dots but it was a little more engaging than some can be”. However, others also deemed it ‘boring and distractible’, for example “[R]: ok, so would you say then something like that could be used on a regular basis or would you say no? [L]: I think it was boring”.

Feedback

In the MILO test, performance feedback was given in the form of an unhappy face icon when a mistake was made. However, the comments made in this study suggest that in practice, rather than providing a learning opportunity, such an icon can have a demoralizing effect, with some individuals reporting embarrassment if an observer could see the unhappy faces, i.e., their poor performance. These factors may detrimentally affect test results and make the individual less willing to repeat the task. Relatedly, some participants felt very self-conscious when being watched; again, the presence or absence of an observer may affect an individual’s test performance. A number of participants were embarrassed at the thought that the researcher present could see an unhappy face pop up. Although this may matter less if the tests are self-administered, it is a pertinent consideration when they are administered by another individual.

Participants who wanted feedback on their performance suggested implementing a score count or differing test levels; test levels could be signified by a change in the color of the balls. Adding subtle performance feedback to the test design could foster further interest and engagement. However, this may also affect performance: some people may rise to it, see it as a challenge, and be more motivated to do well, while others may feel demoralized and give up trying. Individual differences are therefore likely to play an important part in such considerations. A potential limitation of our study is that there was no positive (‘happy’) feedback; instead, the absence of a sad face signaled that performance was acceptable. Developers will therefore likely need to take into account that feedback per se, and how it is presented, may influence performance; participants in our focus groups clearly noticed and discussed this issue.

Time of day

The time of day at which one would best engage with the task is highly individual: some participants said they would be most alert and attentive early in the morning, others later at night. In a clinical setting, it would be difficult to reconcile a test user’s preferred time of day with the time at which the test is actually administered; realistically, allowances could be made only in exceptional circumstances, for example, where the test user is especially tired. When the test is used regularly as a cognitive monitoring tool, users could be advised to take it at their preferred time of day, and the time of each test could be recorded so that any substantial time-of-day effects can be identified.
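If test times are recorded, as suggested above, the Python sketch below shows one way they could be summarized. It is an illustration under stated assumptions, not part of the MILO software used in this study: the session format, the part-of-day bin edges, and the function names are all hypothetical. The median is used because reaction-time distributions are typically right-skewed.

```python
from collections import defaultdict
from datetime import datetime
from statistics import median


def part_of_day(ts: datetime) -> str:
    """Bin a session start time into a coarse part of day.

    The bin edges (05:00, 12:00, 17:00) are illustrative assumptions;
    anything from 17:00 to 04:59 is treated as "evening".
    """
    if 5 <= ts.hour < 12:
        return "morning"
    if 12 <= ts.hour < 17:
        return "afternoon"
    return "evening"


def median_rt_by_part_of_day(sessions):
    """Pool reaction times (ms) from each session into its part-of-day bin.

    sessions: iterable of (start_time, list_of_rts_ms) pairs (hypothetical format).
    Returns {part_of_day: median RT in ms}.
    """
    pooled = defaultdict(list)
    for start, rts in sessions:
        pooled[part_of_day(start)].extend(rts)
    return {part: median(rts) for part, rts in pooled.items() if rts}


if __name__ == "__main__":
    # Hypothetical sessions for one user, for illustration only.
    sessions = [
        (datetime(2016, 5, 3, 9, 15), [612, 640, 598]),
        (datetime(2016, 5, 4, 20, 40), [701, 689, 720]),
    ]
    print(median_rt_by_part_of_day(sessions))  # {'morning': 612, 'evening': 701}
```

Comparing such per-bin summaries within the same individual over time, rather than across individuals, would keep the analysis consistent with the highly individual time-of-day preferences the participants described.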

Test design and associated instructions

The participants in this study highlighted several issues pertinent to the development of tablet- or mobile-based tests of attention and reaction time typical of those used in the assessment of cognitive impairment.

One factor that may introduce bias and variability into test outcomes, and thus lower their validity, is the reported heterogeneity in response strategy, e.g., the use of one or two fingers on one or both hands. It is therefore important to recognize that, unless highly specific instructions are provided, test outcomes (e.g., speed and accuracy) may partly reflect an individual’s choice and execution of a particular search strategy. This must also be considered when the same test is repeated: if an individual does not adopt the same search and response strategy each time, task validity may be detrimentally affected. It was also apparent that individual differences in hand and finger mobility, and factors such as arthritis or long fingernails, may influence performance.

The focus group analysis indicated that the instructions need to be very specific about what the test user should treat as most important, i.e., speed or accuracy of performance, which fingers to use, which strategy to adopt, etc. There was much disparity regarding what the participants thought was most important, both in terms of strategy/technique and in terms of whether speed or accuracy mattered more, despite clear instructions given prior to the start of the test. The test’s validity could be compromised if this is not made clear, for instance, if users are not properly informed of the system’s purpose. Their lack of clarity could have been due to their preoccupation with the testing situation. If so, then it should be made a priority that they fully engage with the instruction process before the test begins, and a practice trial could be included in the future.

These issues suggest that some participants may have treated the test more like a video game than a cognitive test, adopting an approach that involved strategizing to maximize their score, possibly with an increased sense of motivation or competitiveness with other players to get a “high score”. Researchers have not examined the attitudes and motivations of people who engage with cognitive testing; however, the motivations for video game play are quite well understood. Engagement with video games can be intrinsically motivating, with reward derived from simple actions and immersion in the game [39], or motivation can be derived from a sense of challenge or competition in the game and the accomplishment that comes with it [40]. In conventional video games, these motivators can drive people to practice or play more and become extremely skilled, improving their scores and their visuospatial awareness [41]. The questions this raises for digital tests are, first, whether the test motivates practice in the same way a game does, and second, whether this practice invalidates the test. For example, if one becomes too practiced, then test-performance ceiling effects can be induced.

Physical challenges

Several participants also reported physical challenges that affected their performance, such as glasses slipping down their nose and difficulty with varifocals, because the iPad was positioned flat on the desk and they had to lean over it. The ergonomics of iPad positioning in relation to the required use of visual aids is therefore of great importance when developing such tests. Some participants suggested positioning the iPad on a tilted stand in front of them. This position might ameliorate the physical difficulties reported in this study, but it could affect test scores and might not be used consistently. The positioning of the iPad in relation to lighting in the room could also interfere with the ability to see the stimuli. Tilting the iPad on a stand could help reduce disruption from lighting, but researchers should also take lighting into account when selecting an appropriate environment.

Furthermore, long fingernails, large fingers, and arthritis in the wrists, hands, or fingers physically interfered with some users and affected their responses (see above). Some participants suggested using a pen/pointer instead of relying on the skin conductance of their fingers. This would also reduce the need to specify how many hands or fingers should be used, since participants would use only the pen/pointer. Developers of such tests should therefore consider that manual dexterity and concurrent illnesses may affect the physical ability to respond appropriately. As such, allowances need to be put in place for researchers and clinicians to control for the effects of physical disability on their results.

The physical challenges reported above are consistent with findings by Weilenmann [42] in the context of texting on mobile phones. The senior informants in that study entered text on mobile phones, which relied on sequential pressing of keys within a certain time frame. Participants reported issues with the timing and rhythm of key pressing: (1) sequential key pressing was not a straightforward task, (2) they tended to press too slowly or to hold one key press for longer than another, and (3) the rhythm of their hand movements was slow.

Although it has been argued that touch displays are easier and more intuitive for older adults to use [43], there is no robust evidence in the HCI literature supporting this commonly held belief. For example, Culén and Bratteteig [44] argue that touch displays are not an optimal choice, although they conclude that with customization and adaptation strategies they may become a better match.

In a multi-directional tapping task on Android tablets, Burkhard and Koch [45] asked 30 older adults (65+) to perform eleven single taps on eleven targets, starting at target one and finishing at target eleven, with the targets located in a random order around a circle. The authors used Fitts’ Law to compare measurements across Android tablets of different sizes. Their initial findings indicate that factors such as age and gender, as well as dry skin on the fingers and age-related differences in cognitive-motor skills, should be considered when designing interfaces for touch displays. In particular, their observations indicated that older people with dry or wrinkled fingertips had a significantly higher touch recognition error rate on some tablets, which could also be related to the layer types used in resistive touch-screen technology. Harada et al. [46] likewise reported problems with dry fingers and users’ frustration with unresponsive taps.
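Fitts’ Law relates the time needed to reach a target to the target’s distance and width. The source does not state which formulation Burkhard and Koch [45] used; the block below assumes the widely used Shannon formulation, and the distance, width, and constants a and b in the worked example are hypothetical values chosen only to illustrate the arithmetic.

```latex
% Shannon formulation of Fitts' Law (assumed here; not confirmed by [45])
% MT: movement time; D: distance to the target centre; W: target width;
% a, b: constants fitted empirically for a given device and user group.
\mathrm{MT} = a + b\,\mathrm{ID}, \qquad \mathrm{ID} = \log_2\!\left(\frac{D}{W} + 1\right)

% Worked example with hypothetical values D = 224 px, W = 32 px:
\mathrm{ID} = \log_2\!\left(\frac{224}{32} + 1\right) = \log_2 8 = 3~\text{bits}

% With hypothetical a = 0.2 s and b = 0.1 s/bit:
\mathrm{MT} = 0.2 + 0.1 \times 3 = 0.5~\text{s}
```

Under this formulation, fitting a and b separately for each tablet is one way that differences between devices of different sizes could be compared; touch-recognition errors, such as those linked to dry fingertips, would need to be recorded separately, since the model describes movement time only.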

CONCLUSION

Arguably, iPad-based tests may be an ideal basis for home testing, with potential benefits including increased compliance in clinical trials, longitudinal clinical and research follow-up, and the ability to signal deterioration and thus facilitate intervention. However, many factors need to be considered in their development if such tests are to be reliable, valid, and objective. The participants in this study highlighted several issues pertinent to the development of tablet- or mobile-based tests typical of those used in the assessment of cognitive function in older adults, which can then inform more specific development for testing individuals with cognitive impairment and dementia. To inform those considering developing tasks of RT and other aspects of cognitive function on touch screen tablets, we summarize the information gained from our focus groups in the following series of bullet points. This information makes clear that many factors that may not currently be taken into account when designing such tasks for touch screen tablets could, if not addressed, significantly influence task performance and thus adversely affect the clinical validity of such a test.

•Without highly specific instructions, response strategy to test components and stimuli can vary between individuals, despite clear instructions given prior to the start of the test. Variability in the use of one finger, or several fingers on the same or different hands, was common when participants were requested to touch the stimuli upon the screen. The instructions provided therefore need to be highly specific in order to preserve test validity and consistency of administration.

•Arthritis, long fingernails, and dry skin appeared to adversely affect performance, leading some participants to suggest using a pen/pointer instead of relying on the skin conductance of their fingers. Arguably, this would also alleviate concerns about potential variability in finger and hand use. Developers of such tests should therefore consider that manual dexterity and concurrent illnesses may affect the physical ability to respond appropriately. As such, allowances need to be put in place for researchers and clinicians to control for changes in physical ability affecting results.

•Some participants treated the test more like a video game than a cognitive test and thus appeared to adopt strategies to maximize their score, possibly with an increased sense of motivation or competitiveness with other ‘players’ (members of the focus group) to get a “high score”. Motivation related to video game play is relatively well understood. For example, engagement with video games can be intrinsically motivating, with reward derived from simple actions and immersion in the game [39], or motivation can be derived from a sense of challenge or competition in the game and the accomplishment that comes with it [40]. In conventional video games, these motivators can drive people to practice or play more and become extremely skilled, improving their scores and their visuospatial awareness [41]. The questions this raises for touch screen-based cognitive tests are whether the test motivates practice in the same way a game does (because of its similarity to a given game, or because tablets are commonly used for gaming), given that motivation can affect RT performance [47], and whether such practice invalidates the test. For example, if one becomes too practiced, test-performance ceiling effects can be induced; alternatively, such factors may help to improve or stabilize performance in those with cognitive decline.

•Feedback. In the MILO test, performance feedback was given in the form of an unhappy face icon when a mistake was made. However, the comments made in this study suggest that in practice, rather than providing a learning opportunity, such an icon can have a demoralizing effect, with some individuals reporting embarrassment if an observer could see the unhappy faces, i.e., evidence that their performance was poor. Some individuals clearly felt self-conscious when being watched; thus the presence or absence of a test administrator may affect an individual’s test performance. Although this may matter less if the tests are self-administered (e.g., take-home cognitive monitoring tests), it is a pertinent consideration if they are administered by others. A limitation of our study, however, is the lack of ‘happy feedback’; instead, the absence of a sad face signaled that performance was acceptable. Developers will therefore likely need to take into account that feedback per se, and how it is presented, may influence performance.

•Physical challenges that affected test performance included the wearing of glasses (e.g., slipping down the nose when the head was bent over the tablet, which was positioned flat upon a table), particularly varifocals. Therefore, the ergonomics of tablet positioning in relation to the required use of visual aids is of great importance when developing such tests, see also [42]. A suggestion from some of the participants was that the tablet should be placed in a tilted stand, and indeed some spontaneously tried to hold it in this position so they could see the stimuli. However, although this position may ameliorate some physical difficulties, it may affect performance in other ways not yet investigated, and thus, once again, consistency of positioning would be highly important. The positioning of the tablet in relation to lighting in the room can also interfere with the ability to see the stimuli; lighting is therefore an important consideration when selecting the testing environment.

There are, of course, limitations to our focus group study. For example, individuals living with dementia or cognitive impairment were not included, and it is possible that test administration, reactions to it, and performance vary with the integrity of cognitive function. Future studies should include a wider range of tests and their validation against other forms of computerized testing, and groups representative of a wider range of age-related changes, such as those found in relation to vision (e.g., cataracts, wearing glasses, color blindness), hearing, mobility and dexterity, memory function (what happens if individuals forget the instructions?), and levels of motivation and response confidence (e.g., examining the potential for guessing the response). Other pertinent factors for developers to consider in the future include the minimum time for each test (short enough not to induce fatigue, especially when a number of tests are presented in a battery, yet long enough to be reliable), practical usage and efficiency (both in time and cost) within a diagnostic workflow, test anxiety in relation to using the iPads and how this may affect performance, the potential influence of practice effects (which may be minimized through dynamic item generation or randomization), whether to build in checks that responses are valid with respect to the test instructions, and how negative effects of psychometric testing, such as those induced by performance feedback, might demotivate impaired participants or even disclose a diagnosis to them. Response strategies also need to be considered in greater detail, for example, in terms of verbalization, whether individuals always use the same response strategy throughout the test, and whether different people use different strategies.
Methodological considerations also include the conditions for optimal viewing and performance, such as fixed viewing distances (individuals may move the iPad closer or further away to compensate for changes in their visual function), the angle of the iPad during stimulus presentation (tilted or flat on a table), and lighting; technical aspects such as the display and operating systems [11]; the feasibility of using the internet to access the test or to upload test results [9]; how accustomed a person is to using the internet or tablet technology [9]; how to ensure the correct identification of the person taking the test [9]; and whether the intrinsic design of the iPad can affect performance [11]. Finally, it is important to recognize that for a test to be included in routine clinical, and indeed research, practice, the needs of all stakeholders (e.g., patients, clinicians, scientists, programmers/developers) need to be investigated and considered at the development stage, with the resultant development of quality criteria for the use of mHealth apps.
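Dynamic item generation, mentioned above as a possible way of reducing practice effects, could take the form of generating a fresh target layout for each session. The Python sketch below is hypothetical: it is not the layout algorithm of the MILO app used in this study, and the screen size (1024 × 768 points), target radius, minimum gap, and number of targets are all assumptions. Targets are placed by rejection sampling, and a per-session seed makes each layout reproducible for later scoring while differing across sessions.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    label: int  # number shown on the target (tapped in ascending order)
    x: float    # centre x, in screen points
    y: float    # centre y, in screen points


def generate_layout(seed, n_targets=8, width=1024.0, height=768.0,
                    radius=40.0, min_gap=20.0, max_attempts=10_000):
    """Place n_targets non-overlapping circular targets at random positions.

    Rejection sampling: draw a candidate centre uniformly inside the screen
    margins and keep it only if it is at least 2*radius + min_gap away from
    every target already placed. All default values are hypothetical.
    """
    rng = random.Random(seed)  # per-session seed: reproducible, yet distinct per session
    min_dist = 2 * radius + min_gap
    centres = []
    attempts = 0
    while len(centres) < n_targets:
        attempts += 1
        if attempts > max_attempts:
            raise RuntimeError("could not place all targets; reduce n_targets or radius")
        x = rng.uniform(radius, width - radius)
        y = rng.uniform(radius, height - radius)
        if all(math.hypot(x - cx, y - cy) >= min_dist for cx, cy in centres):
            centres.append((x, y))
    # Labels follow placement order, so their spatial arrangement is random too.
    return [Target(label=i + 1, x=x, y=y) for i, (x, y) in enumerate(centres)]
```

For example, generate_layout(seed=1) returns the same eight targets every time it is called, while generate_layout(seed=2) returns a different arrangement; storing the seed alongside each result would allow the layout a participant saw to be reconstructed. Randomizing layouts also changes the spatial difficulty of the task from session to session, which may itself add variability, so this trade-off would need to be evaluated rather than assumed.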

Our hope is that the results of this small study lead to greater investigation of such factors relevant to the validity of tablet-based tests of cognitive function. In particular, future work will need to focus on better understanding the impact of physical challenges, practice, and technical familiarity as the number of older adults who regularly engage with such technology rises.

ACKNOWLEDGMENTS

Ethical approval for this study was granted by the Department of Psychology at Swansea University. Funding was provided by the BTG Seedcorn award. Thank you to all the participants who were involved in this study.

Authors’ disclosures available online (http://j-alz.com/manuscript-disclosures/16-0545r2).

REFERENCES

[1] Smith A (2014) Older adults and technology use. Pew Research Centre, Washington DC. http://www.pewinternet.org/files/2014/04/PIP_Seniors-and-Tech-Use_040314.pdf

[2] Grindrod KA, Li M, Gates A (2014) Evaluating user perceptions of mobile medication management applications with older adults: A usability study. JMIR Mhealth Uhealth 2, e11.

[3] Georgsson M, Staggers N (2006) Quantifying usability: An evaluation of a diabetes mHealth system of effectiveness, efficiency, and satisfaction metrics with associated user characteristics. J Am Med Inform Assoc 1, 5–11.

[4] Peyton T, Poole E, Reddy M, Kraschnewski J, Chuang C (2014) Information, sharing and support in pregnancy: Addressing needs for mHealth design. In Proceedings of the Companion Publication of the 17th ACM Conference on Computer Supported Cooperative Work and Social Computing. ACM, pp. 213–216.

[5] Jones V, Gay V, Leijdekkers P (2010) Body sensor networks for mobile health monitoring: Experience in Europe and Australia. In Fourth International Conference on Digital Society (ICDS’10). IEEE, pp. 204–209.

[6] Cinaz B, Arnrich B, La Marca R, Tröster G (2012) A case study on monitoring reaction times with a wearable user interface during daily life. Int J Comput Healthc 1, 283–303.

[7] Alepis E, Lambrinidis C (2013) M-health: Supporting automated diagnosis and electronic health records. SpringerPlus 2, 1–9.

[8] Zapata BC, Fernández-Alemán JL, Idri A, Toval A (2015) Empirical studies on usability of mHealth apps: A systematic literature review. J Med Syst 39, 1.

[9] Rentz DM, Dekhtyar M, Sherman J, Burnham S, Blacker D, Aghjayan SL, Papp KV, Amariglio RE, Schembri A, Chenhall T, Maruff P, Aisen P, Hyman BT, Sperling RA (2016) The feasibility of at-home iPad cognitive testing for use in clinical trials. J Prev Alzheimers Dis 3, 8–12.

[10] Waters AJ, Li Y (2008) Evaluating the utility of administering a reaction time task in an ecological momentary assessment study. Psychopharmacology 197, 25–35.

[11] Schatz P, Ybarra V, Leitner D (2015) Validating the accuracy of reaction time assessment on computer-based tablet devices. Assessment 22, 405–410.

[12] Crabtree DA, Antrim LR (1988) Guidelines for measuring reaction time. Percept Mot Skills 66, 363–370.

[13] Eckner JT, Chandran S, Richardson JK (2011) Investigating the role of feedback and motivation in clinical reaction time assessment. PM R 3, 1092–1097.

[14] Firbank M, Kobeleva X, Cherry G, Killen A, Gallagher P, Burn DJ, Thomas AJ, O’Brien JT, Taylor JP (2015) Neural correlates of attention-executive dysfunction in Lewy body dementia and Alzheimer’s disease. Hum Brain Mapp 37, 1254–1270.

[15] Haworth J, Phillips M, Newson M, Rogers P, Torrens-Burton A, Tales A (2016) Measuring information processing speed in mild cognitive impairment: Clinical versus research dichotomy. J Alzheimers Dis 51, 263–275.

[16] Phillips M, Rogers P, Haworth J, Bayer A, Tales A (2013) Intra-individual reaction time variability in mild cognitive impairment and Alzheimer’s disease: Gender, processing load and speed factors. PLoS One 8, e65712.

[17] Fjell AM, Walhovd KB (2010) Structural brain changes in aging: Courses, causes and cognitive consequences. Rev Neurosci 21, 187–221.

[18] Werner F, Werner K, Oberzaucher J (2012) Tablets for seniors – An evaluation of a current model (iPad). In Ambient Assisted Living, Wichert R, Eberhardt B, eds. Springer Berlin Heidelberg, pp. 177–184.

[19] Culén AL, Bratteteig T (2013) Touch-screens and elderly users: A perfect match? In ACHI 2013, The Sixth International Conference on Advances in Computer-Human Interactions, pp. 460–465.

[20] Burkhard M, Koch M (2012) Evaluating touchscreen interfaces of tablet computers for elderly people. In Mensch and Computer, Reiterer H, Deussen O (Hrsg.), Workshopband: Interaktiv informiert – allgegenwärtig und allumfassend!? Oldenbourg Verlag, München, pp. 53–59.

[21] Harada S, Sato D, Takagi H, Asakawa C (2013) Characteristics of elderly user behavior on mobile multi-touch devices. In Human-Computer Interaction – INTERACT 2013, Kotze P, Marsden G, Lindgaard G, Wesson J, Winckler M, eds. Springer Berlin Heidelberg, pp. 323–341.

[22] Stone RG (2008) Mobile touch interfaces for the elderly. In Proceedings of ICT, Society and Human Beings.

[23] Smith AP, Nutt DJ (2014) Effects of upper respiratory tract illnesses, ibuprofen and caffeine on reaction time and alertness. Psychopharmacology 231, 1963–1974.

[24] Padilla-Medina JA, Prado-Olivarez J, Amador-Licona N, Cardona-Torres LM, Galicia-Resendiz D, Diaz-Carmona J (2013) Study on simple reaction and choice times in patients with type 1 diabetes. Comput Biol Med 43, 368–376.

[25] Yoonessi A, Yoonessi A (2011) Functional assessment of magno, parvo and konio-cellular pathways; current state and future clinical applications. J Ophthalmic Vis Res 6, 119–126.

[26] Chase HW, Michael A, Bullmore ET, Sahakian BJ, Robbins TW (2010) Paradoxical enhancement of choice reaction time performance in patients with major depression. J Psychopharmacol 24, 471–479.

[27] Hagger-Johnson GE, Shickle DA, Roberts BA, Deary IJ (2012) Neuroticism combined with slower and more variable reaction time: Synergistic risk factors for 7-year cognitive decline in females. J Gerontol B Psychol Sci Soc Sci 67, 572–581.

[28] Jenkins A, Eslambolchilar P, Lindsay S, Tales A, Thornton I (2016) Attitudes towards attention and ageing: What differences between younger and older adults tell us about mobile technology design. IJMHCI 8, 46–67.

[29] Thornton IM, Horowitz TS (2004) The Multi-Item Localization (MILO) task. Percept Psychophys 66, 38–50.

[30] Horowitz TS, Thornton IM (2008) Objects or locations in vision for action? Evidence from the MILO task. Vis Cogn 16, 486–513.

[31] Reitan RM (1958) Validity of the Trail Making Test as an indicator of organic brain damage. Percept Mot Skills 8, 271–276.

[32] Dalmaijer ES, Van der Stigchel S, Nijboer TC, Cornelissen TH, Husain M (2015) Cancellation tools: All-in-one software for administration and analysis of cancellation tasks. Behav Res Methods 47, 1065–1075.

[33] Tsui Y, Horowitz TS, Thornton IM (2013) Planning search for multiple targets using the iPad. Perception 42, 217.

[34] Woods AJ, Göksun T, Chatterjee A, Zelonis S, Mehta A, Smith SE (2013) The development of organized visual search. Acta Psychol 143, 191–199.

[35] Kobayashi M, Hiyama A, Miura T, Asakawa T, Hirose M, Ifukube T (2011) Elderly user evaluation of mobile touchscreen interactions. In Proceedings of the 13th IFIP TC 13 International Conference on Human-Computer Interaction (INTERACT’11), Campos P, Nunes N, Graham N, Jorge J, Palanque P, eds. Springer-Verlag, Berlin, Heidelberg, pp. 83–99.

[36] Leitao R, Silva PA (2012) Target and spacing sizes for smartphone user interfaces for older adults: Design patterns based on an evaluation with users. In Conference on Pattern Languages of Programs, Arizona, United States, pp. 19–21.

[37] Motti LG, Vigouroux N, Gorce P (2013) Interaction techniques for older adults using touchscreen devices: A literature review. 25ème conférence francophone sur l’Interaction Homme-Machine, IHM’13, Nov 2013, Bordeaux, France.

[38] Braun V, Clarke V (2006) Using thematic analysis in psychology. Qual Res Psychol 3, 77–101.

[39] Cox A, Cairns P, Shah P, Carroll M (2012) Not doing but thinking: The role of challenge in the gaming experience. In Proceedings of the 30th Annual ACM Conference on Human Factors in Computing Systems (CHI ’12). ACM, New York, pp. 79–88.

[40] Yee N (2006) Motivations for play in online games. Cyberpsychol Behav 9, 772–775.

[41] Green CS, Bavelier D (2006) Effect of action video games on the spatial distribution of visuospatial attention. J Exp Psychol Hum Percept Perform 32, 1465–1478.

[42] Weilenmann A (2010) Learning to text: An interaction analytic study of how seniors learn to enter text on mobile phones. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’10). ACM, New York, pp. 1135–1144.

[43] Werner F, Werner K, Oberzaucher J (2012) Tablets for seniors – An evaluation of a current model (iPad). In Ambient Assisted Living, Wichert R, Eberhardt B, eds. Springer Berlin Heidelberg, pp. 177–184.

[44] Culén AL, Bratteteig T (2013) Touch-screens and elderly users: A perfect match? Changes 7, 15.

[45] Burkhard M, Koch M (2012) Evaluating touchscreen interfaces of tablet computers for elderly people. Workshopband Mensch and Computer, pp. 53–59.

[46] Harada S, Sato D, Takagi H, Asakawa C (2013) Characteristics of elderly user behavior on mobile multi-touch devices. In IFIP Conference on Human-Computer Interaction. Springer Berlin Heidelberg, pp. 323–341.

[47] Mir P, Trender-Gerhard I, Edwards MJ, Schneider SA, Bhatia KP, Jahanshahi M (2011) Motivation and movement: The effect of monetary incentive on performance speed. Exp Brain Res 209, 551–559.

Figures and Tables

Fig.1

A screen shot of the iPad MILO task used in the current study.

Fig.2

Views of test experience.

Fig.3

Testing situation and materials.

Fig.4

Test performance.

Table 1

Focus group schedule (iPad test experience)

| Focus group section | Questions and prompts |
| iPad test feedback questions | Has anyone used an iPad/similar device before? |
| | How would you describe your experiences of using the test? |
| | Prompt: was it enjoyable or not? |
| | How well did you think you have done? |
| | What parts of the tests did you find challenging? |
| | Prompt: was it too fast? Hard to pay attention to, etc.? |
| | Was the iPad easy to use? |